Towards CI Foundations, Włodzisław Duch, Department of Informatics


Włodzisław Duch, Department of Informatics, Nicolaus Copernicus University, Toruń, Poland (Google: W. Duch). WCCI'08 Panel Discussion.

Questions:
- Nature of CI
- Current state of CI
- Promoting CI
- CI and Smart Adaptive Systems
- CI and Nature-inspiration
- Future of CI

CI definition. Computational Intelligence. An International Journal (1984), plus 10 other journals with "Computational Intelligence" in the title. D. Poole, A. Mackworth & R. Goebel, Computational Intelligence: A Logical Approach (OUP 1998) is a GOFAI book about logic and reasoning. CI should:
- be problem-oriented, not method-oriented;
- cover all that the CI community is doing now and is likely to do in the future;
- include AI, since AI researchers also think they are doing CI...

CI: the science of solving (effectively) non-algorithmizable problems. This is a problem-oriented definition, firmly anchored in computer science and engineering. AI focuses on problems requiring higher-level cognition; the rest of CI is more focused on problems related to perception, action and control.

Are we really so good? Surprise! Almost nothing can be learned using current CI tools! Examples: complex logic, natural language, natural perception.

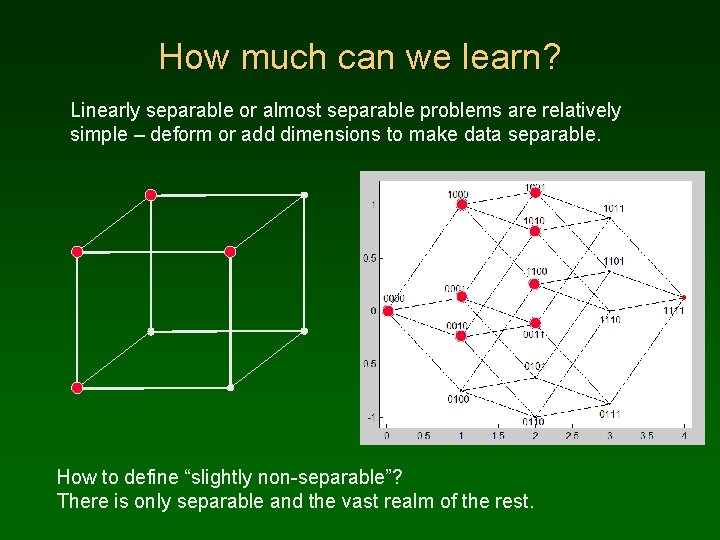

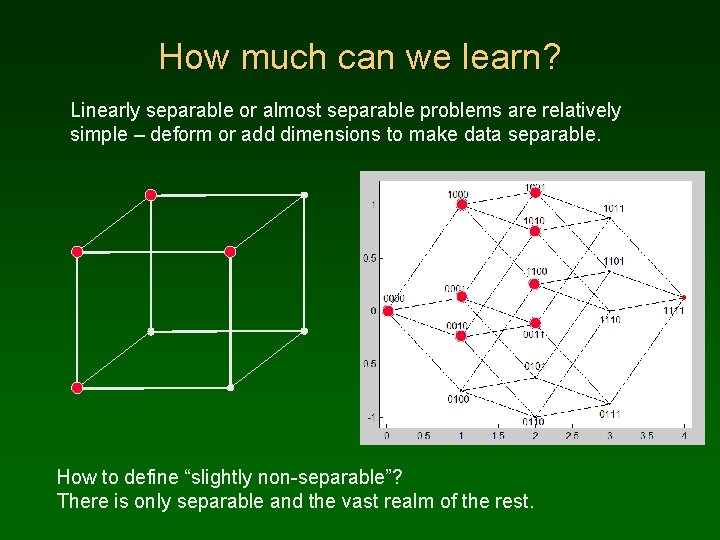

How much can we learn? Linearly separable or almost separable problems are relatively simple: deform the data or add dimensions to make it separable. But how should "slightly non-separable" be defined? There are only separable problems and the vast realm of the rest.
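As a small illustration of "adding dimensions", the sketch below lifts XOR from 2D into 3D with an extra product feature x1·x2. The choice of feature, the weights `W` and the bias are assumptions made for this example; the slide does not say which deformation is used. In the lifted space a single hyperplane separates the two classes.

```python
from itertools import product

def lift(x1, x2):
    # Add a third dimension: the product x1*x2 (assumed feature choice)
    return (x1, x2, x1 * x2)

# Hyperplane in the lifted space: x1 + x2 - 2*x1*x2 - 0.5 = 0
W = (1.0, 1.0, -2.0)
BIAS = -0.5

def classify(x1, x2):
    # Linear decision in the 3D feature space
    z = sum(w * f for w, f in zip(W, lift(x1, x2))) + BIAS
    return int(z > 0)

table = {(x1, x2): classify(x1, x2) for x1, x2 in product([0, 1], repeat=2)}
print(table)  # {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}
```

The same trick does not scale: for most Boolean functions the right extra dimensions are not known in advance.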

Boolean functions:
- n=2: 16 functions, 14 separable, 2 not separable (XOR and its negation).
- n=3: 256 functions, 104 separable (41%), 152 not separable.
- n=4: 64K = 65,536 functions, only 1882 separable (3%).
- n=5: 4G functions, but << 1% separable... bad news!

Existing methods may learn some non-separable functions, but most functions cannot be learned! Example: the n-bit parity problem, the subject of many papers in top journals. No off-the-shelf system is able to solve such problems; for parity problems an SVM may even go below the base rate! Such problems are solved only by special neural architectures or special classifiers, and only if the type of function is known. But parity is still trivial... solved by
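The separable counts for small n can be checked by brute force. The sketch below enumerates every Boolean function of n inputs and tests it for linear separability with the classic perceptron algorithm, counting the two constant functions as separable. The 500-epoch cap is an assumption: it is comfortably above the perceptron mistake bound for n ≤ 3, so a function that still makes mistakes after the cap is reported as non-separable. The approach is only practical for n ≤ 3.

```python
from itertools import product

def is_linearly_separable(points, labels, max_epochs=500):
    """Perceptron test: True if a separating hyperplane is found within max_epochs.

    points: tuples of 0/1 inputs; labels: +1/-1 targets.
    """
    aug = [tuple(x) + (1,) for x in points]  # constant input 1 acts as the bias
    w = [0] * len(aug[0])
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(aug, labels):
            if y * sum(wi * xi for wi, xi in zip(w, x)) <= 0:
                # Perceptron update: move w towards the misclassified point
                w = [wi + y * xi for wi, xi in zip(w, x)]
                mistakes += 1
        if mistakes == 0:
            return True
    return False

def count_separable(n):
    """Count the linearly separable Boolean functions of n inputs (constants included)."""
    points = list(product([0, 1], repeat=n))
    return sum(
        is_linearly_separable(points, labels)
        for labels in product([-1, 1], repeat=len(points))
    )

results = {n: count_separable(n) for n in (2, 3)}
for n, sep in results.items():
    total = 2 ** (2 ** n)
    print(f"n={n}: {total} functions, {sep} separable, {total - sep} not separable")
```

For n=2 and n=3 this should report 14 and 104 separable functions.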

![k-D case: 3-bit functions X = (b1, b2, b3), from (0, 0, 0) to (1, 1, 1)](https://slidetodoc.com/presentation_image_h2/0a06bee2a191e6a7494e0bbc1125e1d1/image-7.jpg)

k-D case. 3-bit functions: X=[b1, b2, b3], from [0, 0, 0] to [1, 1, 1]. f(b1, b2, b3) and its negation ¬f(b1, b2, b3) are symmetric (color change). The 8 cube vertices give 2^8 = 256 Boolean functions; with 0 to 8 red vertices there are 1, 8, 28, 56, 70, 56, 28, 8, 1 functions, respectively.

For an arbitrary direction W giving 8 distinct projections W·X (an index projection), the number k of pure clusters is:
- k=1 in 2 cases: all 8 vectors in 1 cluster (all vertices red or none red);
- k=2 in 14 cases: 8 vectors in 2 clusters (linearly separable);
- k=3 in 42 cases: 3 alternating clusters (red, non-red, red, or the reverse);
- k=4 in 70 cases: 4 alternating clusters;
- symmetrically, k=5 to 8 in 70, 42, 14 and 2 cases.

Most logical functions have 4- or 5-separable projections. Learning = finding the best projection for each function. The numbers of k=1 to 4-separable functions are 2, 102, 126 and 26: 126 functions are exactly 3-separable, so 230 of all 256 functions (about 90%) may be learned using 3-separability.
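The cluster counts for a single direction can be reproduced directly. In this sketch the direction (4, 2, 1) is an assumed "index projection" (W·X equals the binary index 0..7 of each vertex, so all projections are distinct), and `num_clusters` is a hypothetical helper that counts pure intervals along a projection. It also shows that 3-bit parity projected on W = (1, 1, 1) splits into 4 alternating clusters.

```python
from collections import Counter
from itertools import product

def num_clusters(w, labels, points):
    """Number of pure intervals along the projection w·x, or None if two
    vectors with equal projection belong to different classes."""
    groups = {}
    for x, y in zip(points, labels):
        p = sum(wi * xi for wi, xi in zip(w, x))
        groups.setdefault(p, set()).add(y)
    if any(len(g) > 1 for g in groups.values()):
        return None
    seq = [next(iter(groups[p])) for p in sorted(groups)]
    # Every class change between neighbouring projection values starts a new cluster
    return 1 + sum(a != b for a, b in zip(seq, seq[1:]))

points = list(product([0, 1], repeat=3))  # the 8 cube vertices
index_w = (4, 2, 1)  # assumed index direction: W·X = binary index 0..7

hist = Counter(num_clusters(index_w, f, points) for f in product([0, 1], repeat=8))
print(sorted(hist.items()))
# [(1, 2), (2, 14), (3, 42), (4, 70), (5, 70), (6, 42), (7, 14), (8, 2)]

parity = [sum(x) % 2 for x in points]
print(num_clusters((1, 1, 1), parity, points))  # 4
```

This counts clusters for one fixed direction only; finding the minimal k for every function requires a search over directions, which the sketch does not attempt.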

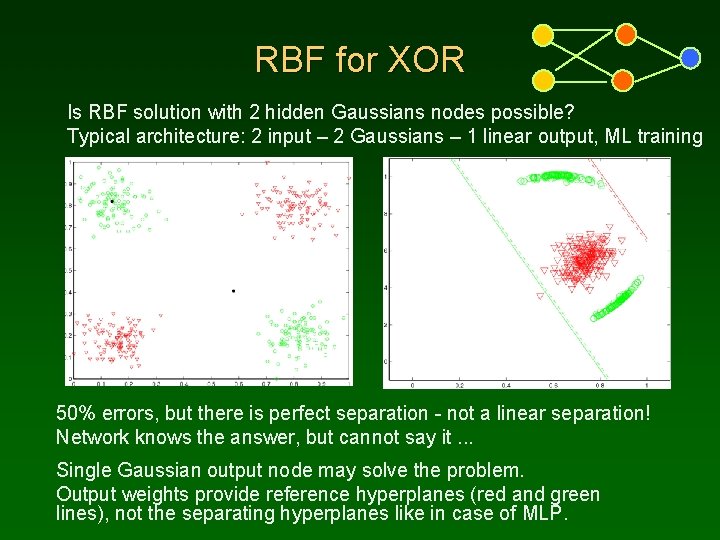

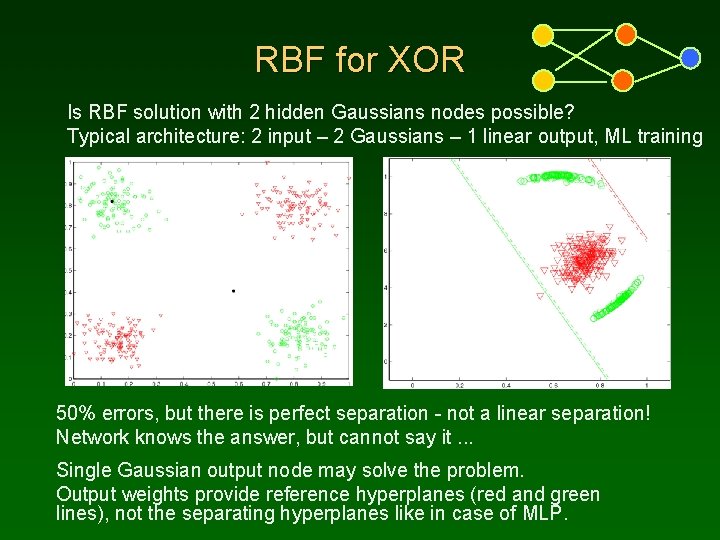

RBF for XOR. Is an RBF solution with 2 hidden Gaussian nodes possible? Typical architecture: 2 inputs, 2 Gaussians, 1 linear output. ML training gives 50% errors, yet there is perfect separation, just not a linear separation! The network knows the answer but cannot say it... A single Gaussian output node may solve the problem. The output weights provide reference hyperplanes (red and green lines), not separating hyperplanes as in the case of an MLP.
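A minimal sketch of reading out the hidden-space cluster structure with a single Gaussian output node. The hidden centres (0, 0) and (1, 1), the width `SIGMA = 1`, the output width 0.3 and the 0.5 decision threshold are all assumptions; this is not the trained network from the slide. Both class-1 inputs land on the same hidden point, between the two class-0 points, and a Gaussian output centred there classifies XOR correctly.

```python
import math

def gaussian(x, c, sigma):
    # Radial response exp(-||x - c||^2 / sigma^2)
    d2 = sum((xi - ci) ** 2 for xi, ci in zip(x, c))
    return math.exp(-d2 / sigma ** 2)

XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}
CENTERS = [(0, 0), (1, 1)]  # assumed hidden-node centres
SIGMA = 1.0                 # assumed hidden-node width

def hidden(x):
    return tuple(gaussian(x, c, SIGMA) for c in CENTERS)

# Class-1 inputs map to (a, a), a = exp(-1/sigma^2); class-0 inputs map to
# (1, a^2) and (a^2, 1), on either side of the class-1 point.
a = math.exp(-1 / SIGMA ** 2)

def output(x, width=0.3):
    # Single Gaussian output node centred on the class-1 hidden cluster
    return gaussian(hidden(x), (a, a), width)

predictions = {x: int(output(x) > 0.5) for x in XOR}
print(predictions == XOR)  # True
```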

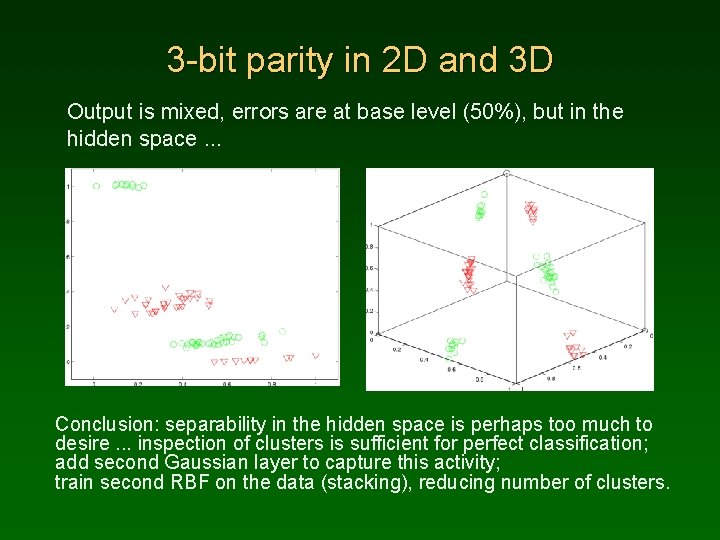

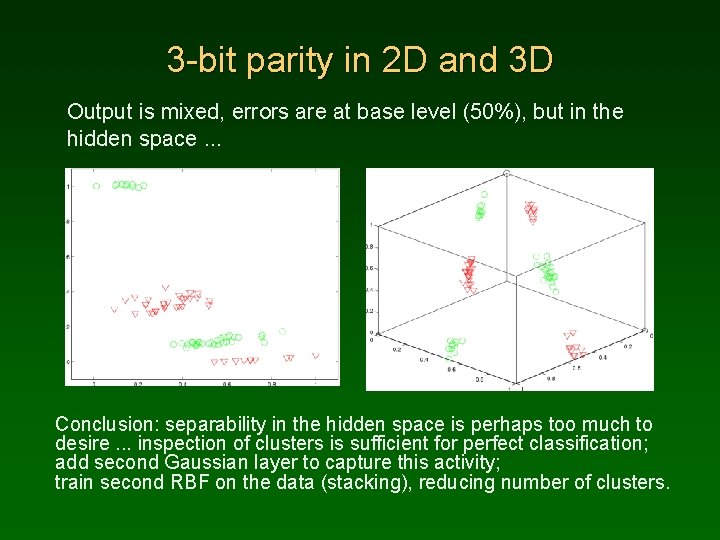

3-bit parity in 2D and 3D. The output is mixed and errors are at the base level (50%), but in the hidden space... Conclusion: separability in the hidden space is perhaps too much to desire... Inspection of clusters is sufficient for perfect classification; add a second Gaussian layer to capture this activity; train a second RBF on the data (stacking), reducing the number of clusters.

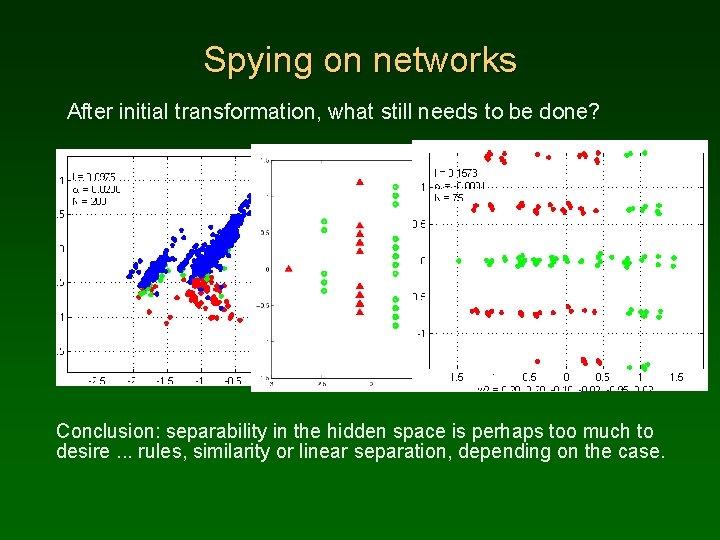

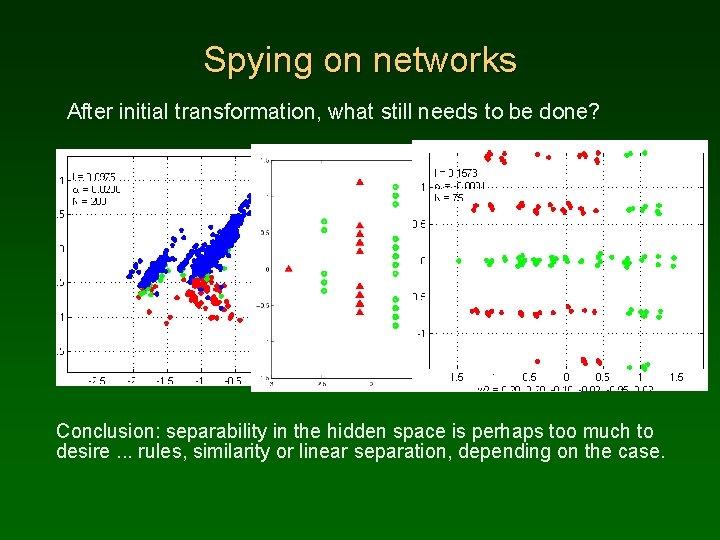

Spying on networks. After the initial transformation, what still needs to be done? Conclusion: separability in the hidden space is perhaps too much to desire... Rules, similarity, or linear separation may be used, depending on the case.

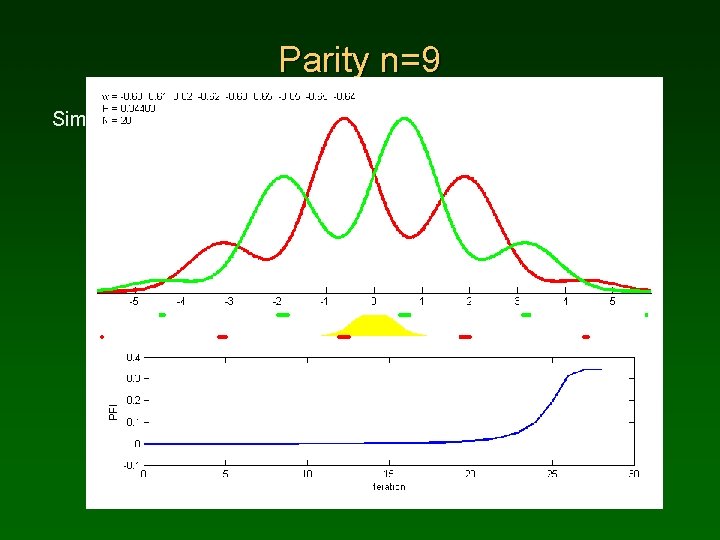

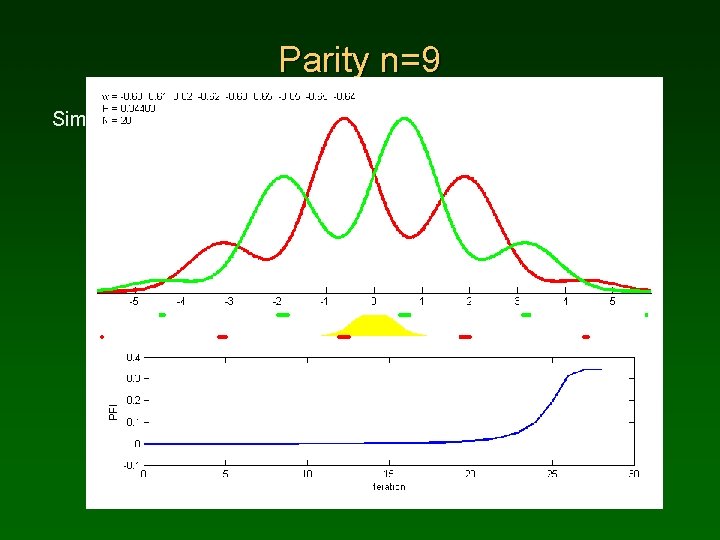

Parity n=9: simple gradient learning; the quality index is shown below.

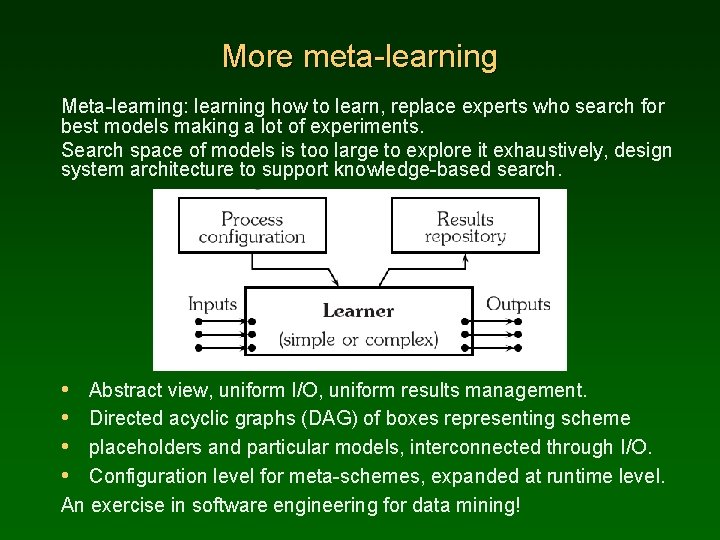

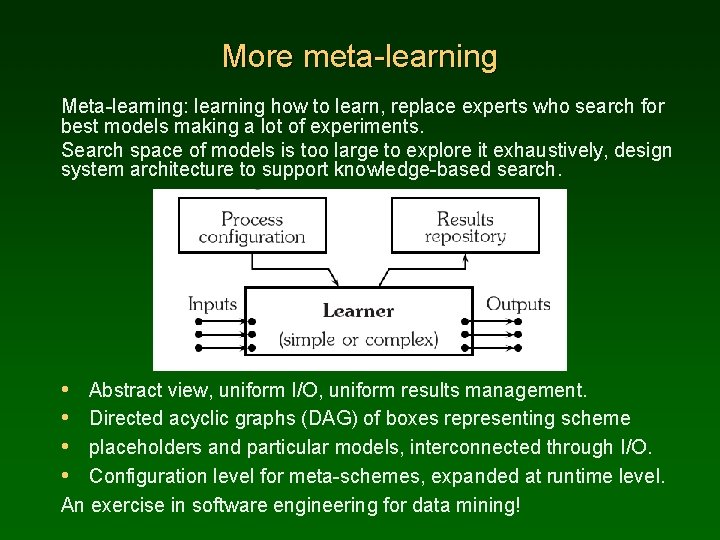

More meta-learning. Meta-learning: learning how to learn, replacing experts who search for the best models by running many experiments. The search space of models is too large to explore exhaustively, so the system architecture should be designed to support knowledge-based search:
- an abstract view, uniform I/O, uniform results management;
- directed acyclic graphs (DAGs) of boxes representing scheme placeholders and particular models, interconnected through I/O;
- a configuration level for meta-schemes, expanded at the runtime level.

An exercise in software engineering for data mining!
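A toy sketch of the DAG-of-boxes idea: boxes exchange data through named I/O connections, a template contains a placeholder box, and expanding the template yields one concrete scheme per candidate. Everything here (`Box`, `run_dag`, `expand`, the toy data and scoring) is hypothetical and only illustrates the architecture described above; it is not the Intemi API.

```python
from graphlib import TopologicalSorter

class Box:
    """Hypothetical DAG node: a named computation fed by the outputs of other boxes."""
    def __init__(self, name, fn=None, inputs=()):
        self.name, self.fn, self.inputs = name, fn, tuple(inputs)

def run_dag(boxes):
    """Run boxes in dependency order, passing outputs along the I/O edges."""
    by_name = {b.name: b for b in boxes}
    graph = {b.name: set(b.inputs) for b in boxes}
    results = {}
    for name in TopologicalSorter(graph).static_order():
        box = by_name[name]
        results[name] = box.fn(*(results[i] for i in box.inputs))
    return results

def expand(template, placeholder, candidate_fns):
    """Meta-scheme expansion: yield one concrete scheme per candidate filling the placeholder."""
    for fn in candidate_fns:
        yield [Box(b.name, fn, b.inputs) if b.name == placeholder else b for b in template]

# Template with a "transform" placeholder (fn=None) between data and scoring
template = [
    Box("data", lambda: [1.0, 2.0, 3.0, 4.0]),
    Box("transform", inputs=["data"]),
    Box("score", lambda xs: sum(xs) / len(xs), inputs=["transform"]),
]
candidates = {"identity": lambda xs: xs, "square": lambda xs: [x * x for x in xs]}

scores = {
    label: run_dag(scheme)["score"]
    for label, scheme in zip(candidates, expand(template, "transform", candidates.values()))
}
print(scores)  # {'identity': 2.5, 'square': 7.5}
```

Keeping the placeholder's name and inputs during expansion means downstream boxes need no rewiring, which is one simple way to get the "uniform I/O" property.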

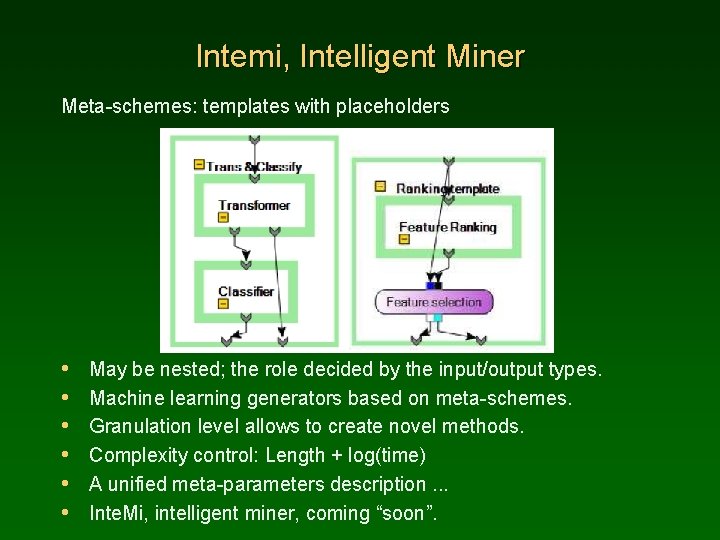

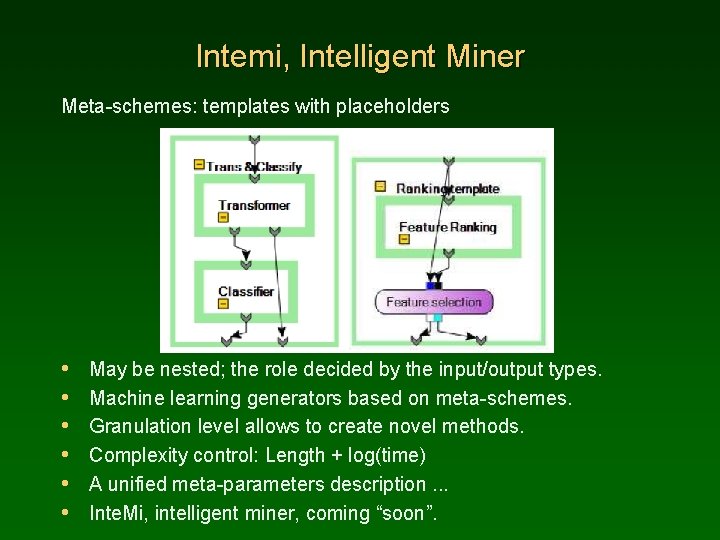

Intemi, Intelligent Miner. Meta-schemes: templates with placeholders.
- May be nested; the role is decided by the input/output types.
- Machine learning generators based on meta-schemes.
- The granulation level allows novel methods to be created.
- Complexity control: Length + log(time).
- A unified description of meta-parameters...

Intemi, the Intelligent Miner, coming "soon".
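One plausible use of the "Length + log(time)" complexity measure is ranking candidate models so that simpler, faster ones are tried first. In this sketch the base-2 logarithm, the time unit and the candidate tuples are assumptions; the names "A", "B", "C" and their values are hypothetical placeholders, not measurements.

```python
import math

def complexity(length, time):
    """Description length plus log of (expected) run time.
    The base-2 logarithm is an assumption."""
    return length + math.log2(time)

# Hypothetical candidate configurations: (name, description length, run time)
candidates = [("A", 3, 8.0), ("B", 4, 2.0), ("C", 5, 64.0)]

ranked = sorted(candidates, key=lambda c: complexity(c[1], c[2]))
print([(name, complexity(length, t)) for name, length, t in ranked])
# [('B', 5.0), ('A', 6.0), ('C', 11.0)]
```

The logarithm keeps run time from dominating: doubling the time costs as much as one extra unit of description length.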

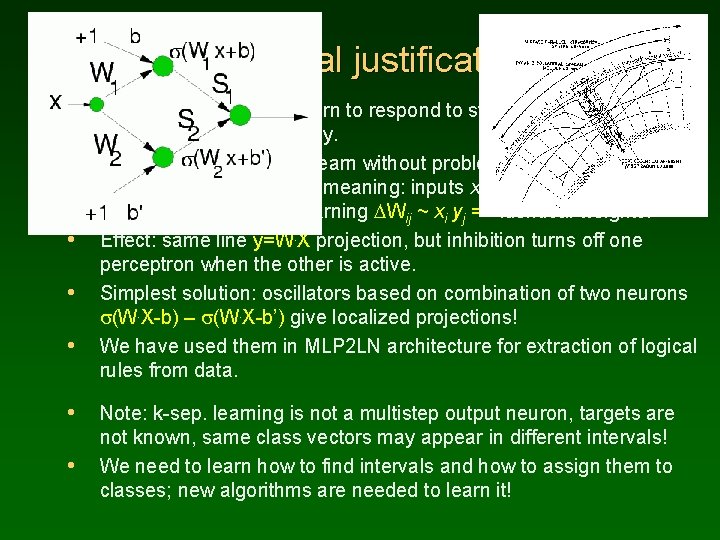

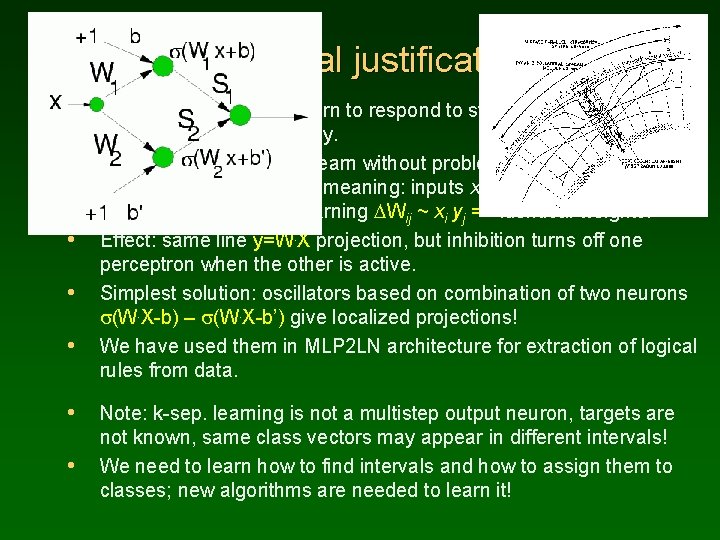

Biological justification.
- Cortical columns may learn to respond to stimuli with complex logic, resonating in different ways.
- A second column will learn without problems that such different reactions have the same meaning: inputs xi and training targets yj are the same => Hebbian learning ΔWij ~ xi yj => identical weights.

Effect: the same projection line y = W·X, but inhibition turns off one perceptron when the other is active. Simplest solution: oscillators based on a combination of two neurons, σ(W·X - b) - σ(W·X - b'), give localized projections! We have used them in the MLP2LN architecture for the extraction of logical rules from data. Note: k-separability learning is not a multistep output neuron: targets are not known, and vectors of the same class may appear in different intervals! We need to learn how to find the intervals and how to assign them to classes; new algorithms are needed to learn it!
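A minimal sketch of the localized response σ(W·X - b) - σ(W·X - b'): each pair of sigmoidal neurons acts as a window on the projection, and summing windows classifies 3-bit parity along W = (1, 1, 1). The interval bounds and the slope of 10 are set by hand here as assumptions; as noted above, in k-separability learning these intervals would have to be learned.

```python
import math
from itertools import product

def sigmoid(z, slope=10.0):
    return 1.0 / (1.0 + math.exp(-slope * z))

def window(z, b, b_prime, slope=10.0):
    """sigma(z - b) - sigma(z - b'): near 1 for b < z < b', near 0 outside (b < b')."""
    return sigmoid(z - b, slope) - sigmoid(z - b_prime, slope)

W = (1, 1, 1)                          # projection direction for 3-bit parity
intervals = [(0.5, 1.5), (2.5, 3.5)]   # assumed intervals of W·X holding odd parity

predictions = {}
for x in product([0, 1], repeat=3):
    z = sum(w * xi for w, xi in zip(W, x))
    y = sum(window(z, b, bp) for b, bp in intervals)  # one neuron pair per interval
    predictions[x] = int(y > 0.5)

print(all(predictions[x] == sum(x) % 2 for x in predictions))  # True
```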
