
SVO: Fast Semi-Direct Monocular Visual Odometry
Christian Forster, Matia Pizzoli, Davide Scaramuzza

Shervin Ghasemlou – November 2015

INTRODUCTION

SVO:
- Is a method that combines feature-based and direct methods for visual odometry
- According to the authors, all visual odometry systems for MAVs to date are feature-based
- SVO is more robust and allows faster flight maneuvers
- Higher accuracy and speed

Shervin Ghasemlou – December 2015

INTRODUCTION

Feature-based methods:
- Extract a sparse set of features
- Match them in successive frames
- Recover camera pose and structure using epipolar geometry
- Finally, refine pose and structure
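The epipolar-geometry step can be illustrated with a small sketch. It is not taken from the slides: it assumes normalized (calibrated) image coordinates and a known relative motion `R`, `t`, and only checks that matched points satisfy the epipolar constraint $x_2^\top E\, x_1 = 0$ with $E = [t]_\times R$. The function names are hypothetical.

```python
import numpy as np

def skew(t):
    """Return the 3x3 cross-product matrix [t]_x."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])

def essential_matrix(R, t):
    """Essential matrix E = [t]_x R for the motion X2 = R @ X1 + t."""
    return skew(t) @ R

def epipolar_residuals(E, x1, x2):
    """Evaluate x2_i^T E x1_i for N matches given as (N, 3) homogeneous arrays."""
    return np.einsum("ij,jk,ik->i", x2, E, x1)

# Synthetic matches: 3D points seen from two camera poses (assumed values).
rng = np.random.default_rng(0)
X1 = rng.uniform([-1, -1, 4], [1, 1, 8], size=(20, 3))
a = 0.1  # rotation about the y axis, in radians
R = np.array([[np.cos(a), 0.0, np.sin(a)],
              [0.0, 1.0, 0.0],
              [-np.sin(a), 0.0, np.cos(a)]])
t = np.array([0.2, 0.0, 0.05])
X2 = X1 @ R.T + t
x1 = X1 / X1[:, 2:3]  # normalized image coordinates in frame 1
x2 = X2 / X2[:, 2:3]  # normalized image coordinates in frame 2

# Largest constraint violation; close to zero for the true motion.
print(np.abs(epipolar_residuals(essential_matrix(R, t), x1, x2)).max())
```

A real feature-based pipeline works in the opposite direction: it estimates `E` from the matches (typically inside a RANSAC loop) and then decomposes it into `R` and `t`.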

INTRODUCTION

Direct methods:
- Use the image intensities directly
- Exploit information from all parts of the image
- Outperform feature-based methods in terms of robustness in the presence of:
  - Scenes with little texture
  - Camera defocus
  - Motion blur
- Save the time spent on feature detection

CONTRIBUTIONS

1. A novel semi-direct VO method for MAVs which, compared with state-of-the-art methods, is:
   - Faster
   - More accurate
2. Integration of a probabilistic mapping method:
   - Robust to outliers

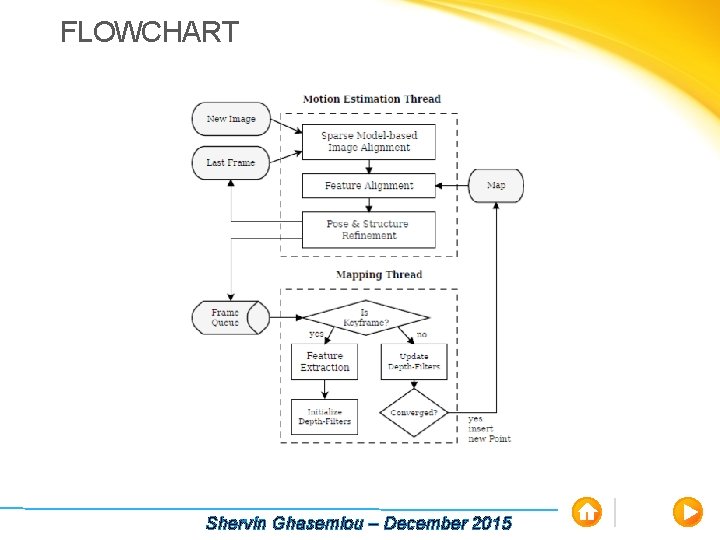

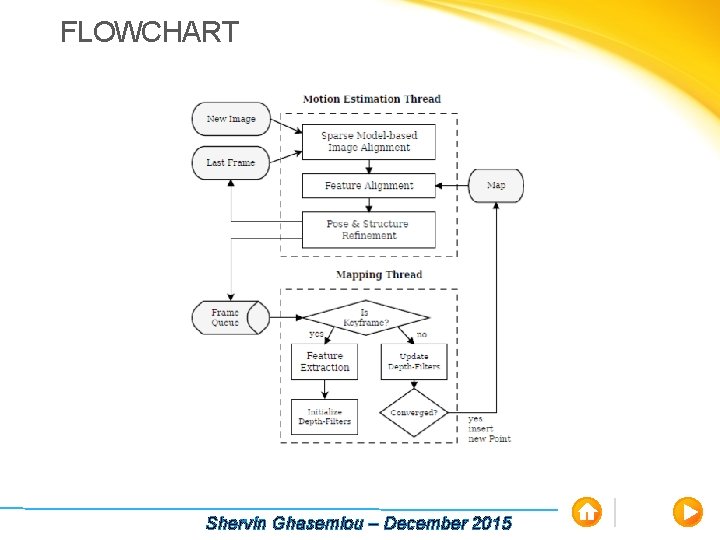

ALGORITHM

Two threads:
- Motion estimation
  - Sparse model-based image alignment
  - Feature alignment
  - Pose and structure refinement
- Mapping

FLOWCHART

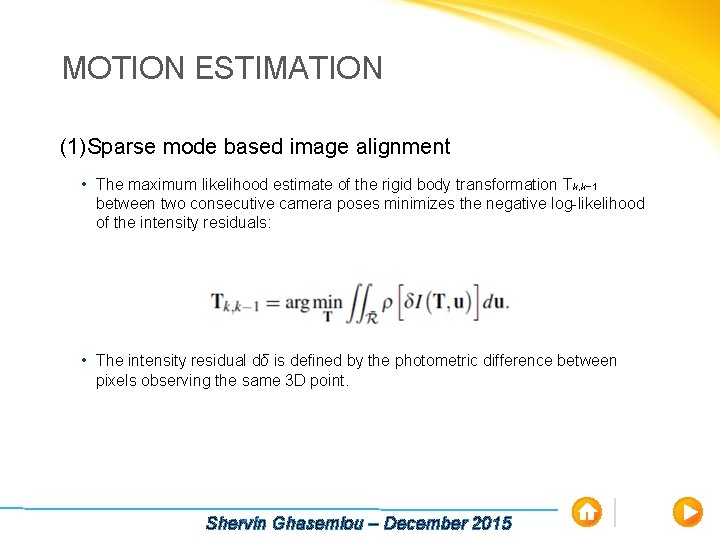

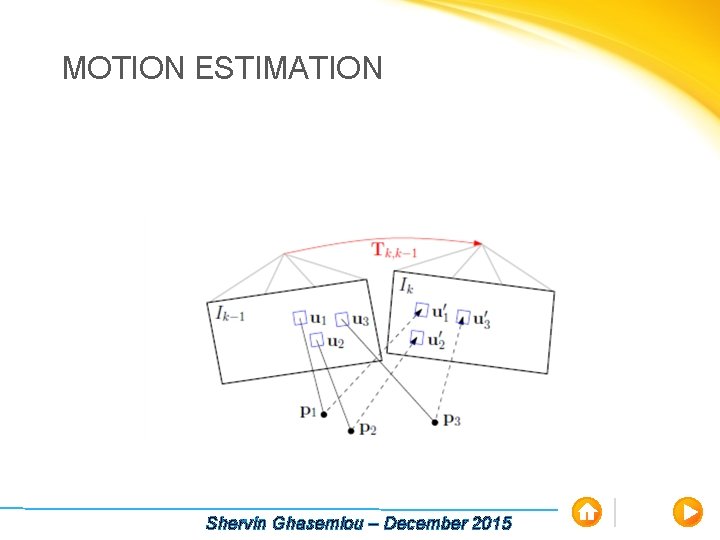

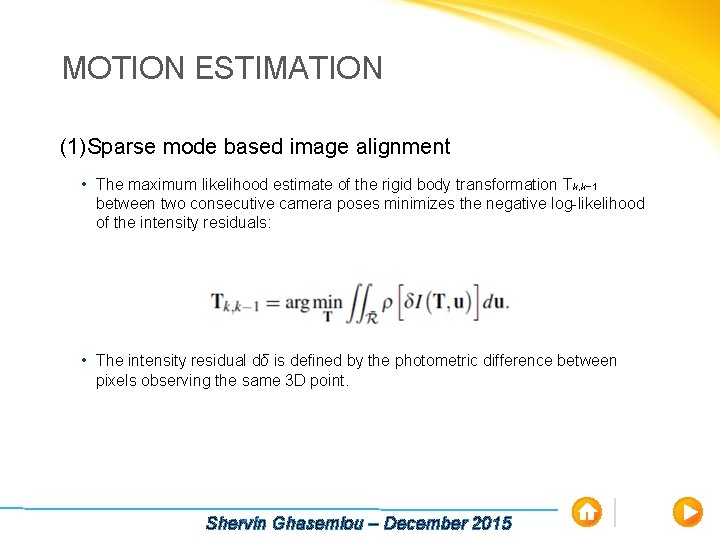

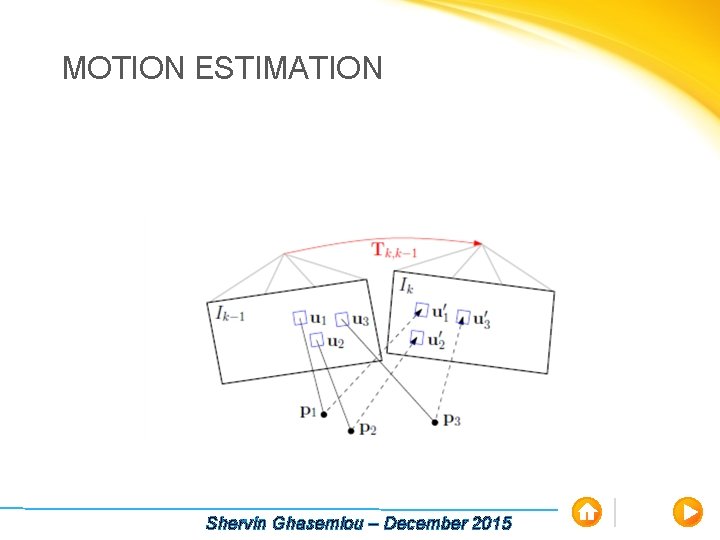

MOTION ESTIMATION

(1) Sparse model-based image alignment
- The maximum likelihood estimate of the rigid body transformation $T_{k,k-1}$ between two consecutive camera poses minimizes the negative log-likelihood of the intensity residuals:
- The intensity residual $\delta I$ is defined by the photometric difference between pixels observing the same 3D point.

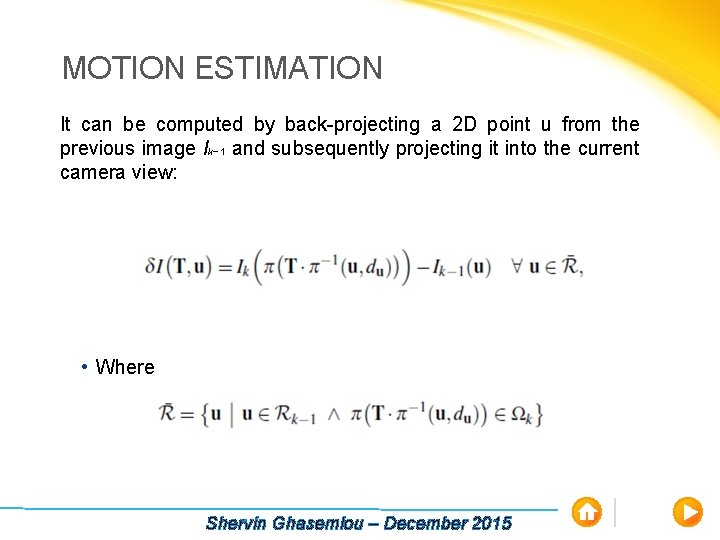
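The equation on this slide survives only as an image. As a hedged reconstruction from the SVO paper (to be checked against the original), the minimization has the following form, where $\bar{\mathcal{R}}$ denotes the image region with known depth and $\rho$ is the negative log-likelihood of a residual:

$$
T_{k,k-1} = \arg\min_{T} \iint_{\bar{\mathcal{R}}} \rho\big[\delta I(T, u)\big]\, du
$$

Assuming normally distributed residuals with unit variance, $\rho[\cdot] = \tfrac{1}{2}\lVert \cdot \rVert^2$, and evaluating only at sparse feature locations $u_i$, this becomes a least-squares problem:

$$
T_{k,k-1} = \arg\min_{T} \frac{1}{2} \sum_{i \in \bar{\mathcal{R}}} \big\lVert \delta I(T, u_i) \big\rVert^2
$$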

MOTION ESTIMATION

It can be computed by back-projecting a 2D point $u$ from the previous image $I_{k-1}$ and subsequently projecting it into the current camera view:
- Where

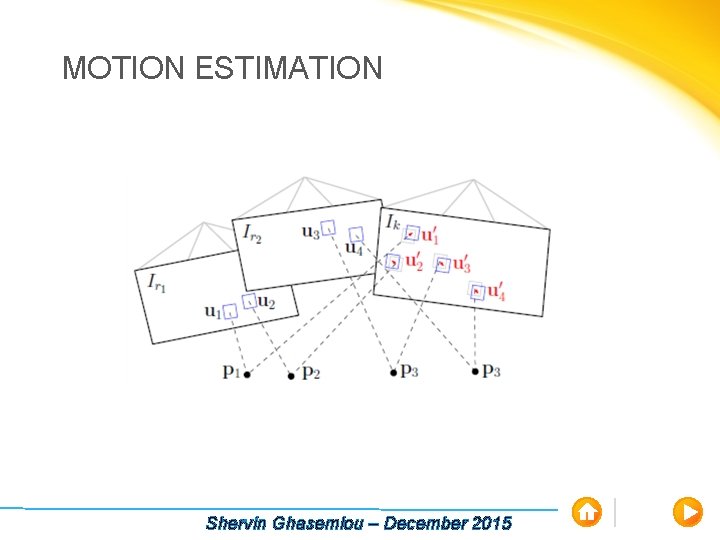
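The back-project, transform, and re-project chain can be sketched in a few lines. This is a minimal illustration rather than the authors' implementation. It assumes a pinhole camera with intrinsic matrix `K`, a known depth for the pixel, a 4x4 transform `T` that maps points from the previous camera frame to the current one, and an integer pixel location `u = (x, y)` in the previous image. All function names are hypothetical.

```python
import numpy as np

def back_project(u, depth, K):
    """pi^{-1}: pixel u = (x, y) with known depth -> 3D point in the camera frame."""
    x, y = u
    ray = np.linalg.inv(K) @ np.array([x, y, 1.0])
    return depth * ray

def project(p, K):
    """pi: 3D point in the camera frame -> pixel coordinates (x, y)."""
    q = K @ p
    return q[:2] / q[2]

def bilinear(img, u):
    """Sample img at sub-pixel location u = (x, y); img is indexed [row, col]."""
    x, y = u
    x0, y0 = int(np.floor(x)), int(np.floor(y))
    ax, ay = x - x0, y - y0
    return ((1 - ax) * (1 - ay) * img[y0, x0]
            + ax * (1 - ay) * img[y0, x0 + 1]
            + (1 - ax) * ay * img[y0 + 1, x0]
            + ax * ay * img[y0 + 1, x0 + 1])

def photometric_residual(I_prev, I_cur, u, depth, T, K):
    """Intensity at the re-projected location in I_cur minus intensity at u in I_prev."""
    p_prev = back_project(u, depth, K)           # 3D point seen at u in the previous frame
    p_cur = T[:3, :3] @ p_prev + T[:3, 3]        # same point expressed in the current frame
    u_cur = project(p_cur, K)                    # where it should appear in the current image
    return bilinear(I_cur, u_cur) - I_prev[int(u[1]), int(u[0])]
```

For the correct motion and a static, Lambertian scene the residual is close to zero. Image alignment searches for the `T` that makes the sum of squared residuals over all features as small as possible. The sketch omits bounds checks and evaluates a single pixel, not a patch around it.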

MOTION ESTIMATION

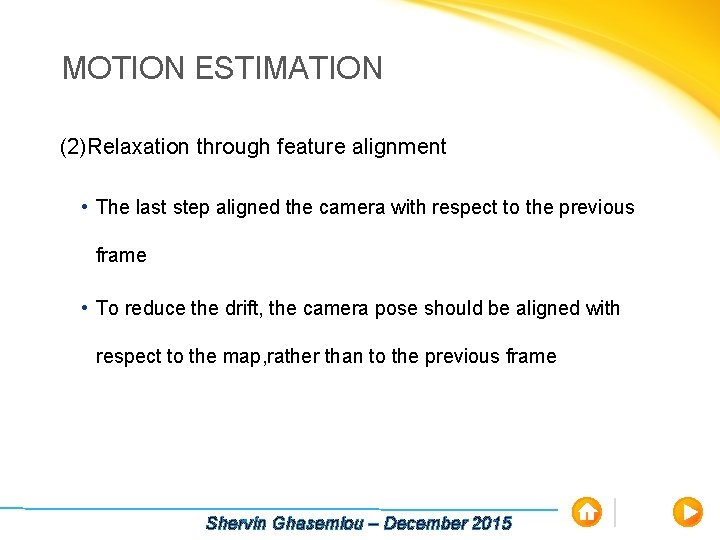

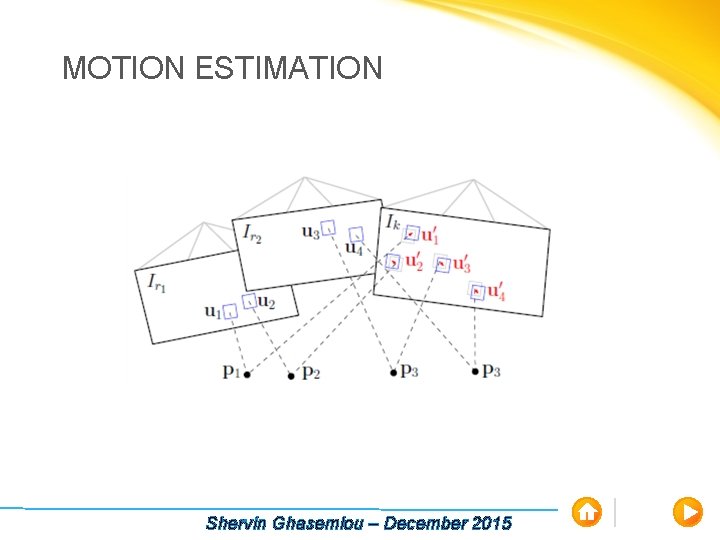

MOTION ESTIMATION

(2) Relaxation through feature alignment
- The previous step aligned the camera with respect to the previous frame
- To reduce drift, the camera pose should be aligned with respect to the map rather than to the previous frame

MOTION ESTIMATION

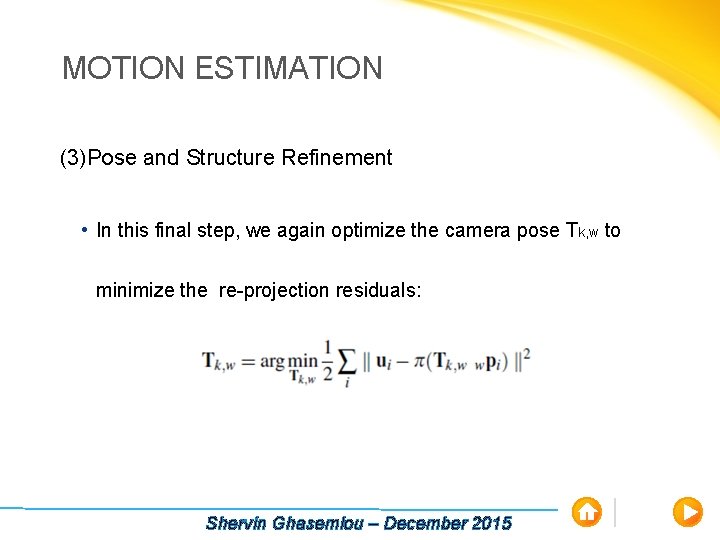

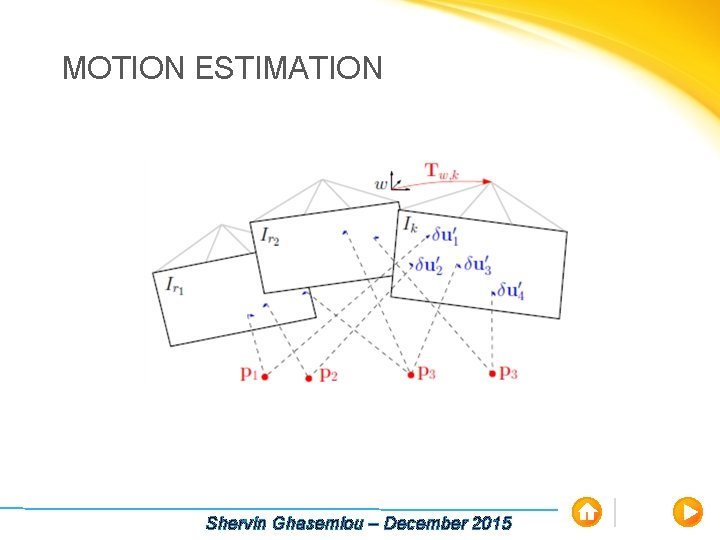

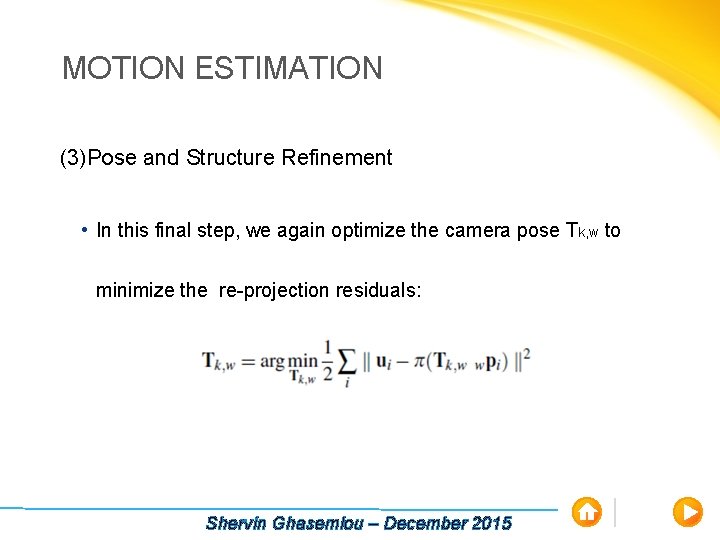
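Feature alignment refines the 2D position of each feature in the current image so that its patch matches the reference patch stored in the map. The sketch below is a deliberately simplified stand-in. It performs an exhaustive integer-pixel search that minimizes the sum of squared differences and ignores any warping of the reference patch. A real implementation would use an iterative sub-pixel method such as Lucas-Kanade. The function name, patch size, and search radius are assumptions.

```python
import numpy as np

def align_feature(I_cur, ref_patch, u_init, search_radius=3):
    """Refine the (x, y) position of a square, odd-sized patch in I_cur.

    Returns the integer position with the lowest photometric cost and that cost.
    """
    h = ref_patch.shape[0] // 2
    x0, y0 = u_init
    best_u, best_cost = u_init, np.inf
    for dy in range(-search_radius, search_radius + 1):
        for dx in range(-search_radius, search_radius + 1):
            x, y = x0 + dx, y0 + dy
            if x - h < 0 or y - h < 0:
                continue  # window starts outside the image
            patch = I_cur[y - h:y + h + 1, x - h:x + h + 1]
            if patch.shape != ref_patch.shape:
                continue  # window runs past the image border
            cost = 0.5 * np.sum((patch - ref_patch) ** 2)
            if cost < best_cost:
                best_u, best_cost = (x, y), cost
    return best_u, best_cost
```

Each feature is moved independently, so the refined positions no longer agree exactly with a single camera pose. That disagreement is what the subsequent pose and structure refinement resolves.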

MOTION ESTIMATION

(3) Pose and structure refinement
- In this final step, we again optimize the camera pose $T_{k,w}$ to minimize the reprojection residuals:

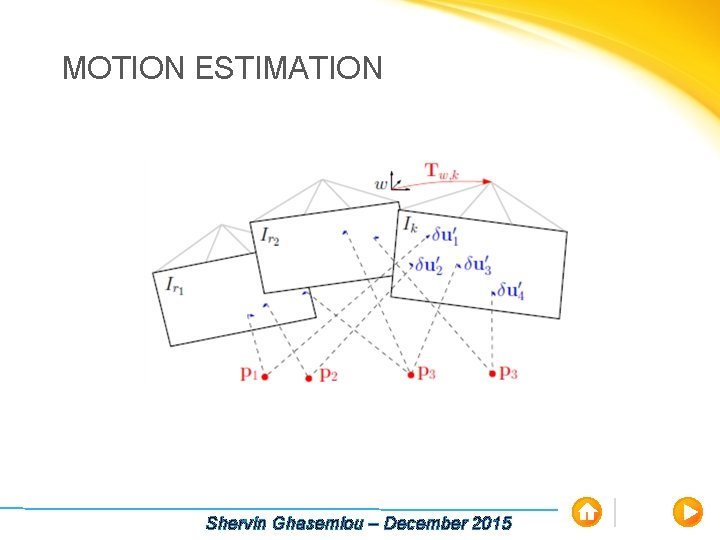
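The quantity being minimized can be written down compactly. The sketch below only evaluates the reprojection residuals and the associated least-squares cost for a given pose. It assumes a pinhole camera with intrinsics `K`, a 4x4 world-to-camera transform `T_kw`, landmarks as an (N, 3) array in world coordinates, and the refined feature positions as an (N, 2) array of pixels. The optimizer itself (for example Gauss-Newton) is not shown, and the function names are hypothetical.

```python
import numpy as np

def reprojection_residuals(T_kw, points_w, observations, K):
    """Return observed pixel minus projected landmark, one row per landmark."""
    p_cam = points_w @ T_kw[:3, :3].T + T_kw[:3, 3]  # landmarks in the camera frame
    proj = p_cam @ K.T                               # homogeneous pixel coordinates
    uv = proj[:, :2] / proj[:, 2:3]                  # perspective division
    return observations - uv

def reprojection_cost(T_kw, points_w, observations, K):
    """Half the sum of squared reprojection residuals."""
    r = reprojection_residuals(T_kw, points_w, observations, K)
    return 0.5 * np.sum(r ** 2)
```

Minimizing this cost over the pose alone is motion-only bundle adjustment. Minimizing it over the landmark positions with the pose held fixed is the corresponding structure-only step.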

MOTION ESTIMATION

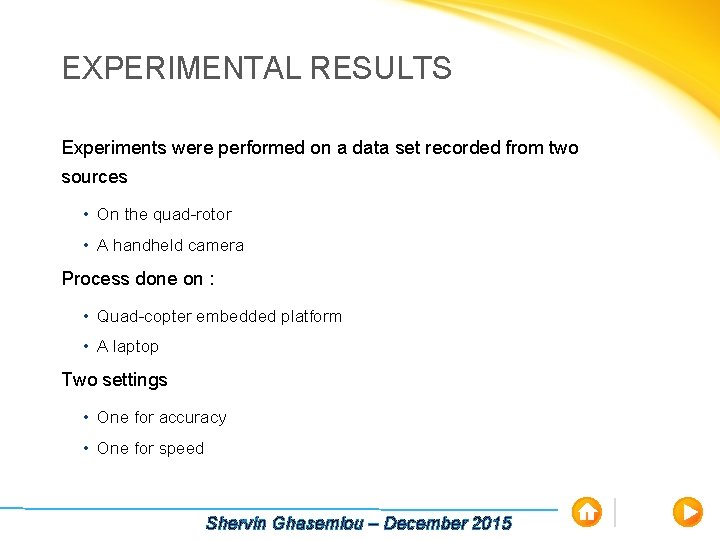

EXPERIMENTAL RESULTS

Experiments were performed on datasets recorded from two sources:
- A camera on board the quadrotor
- A handheld camera

Processing was done on:
- The quadrotor's embedded platform
- A laptop

Two settings:
- One for accuracy
- One for speed

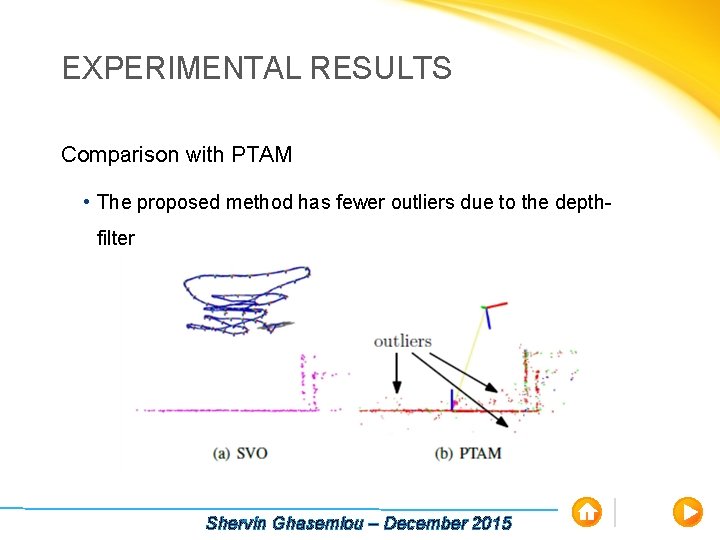

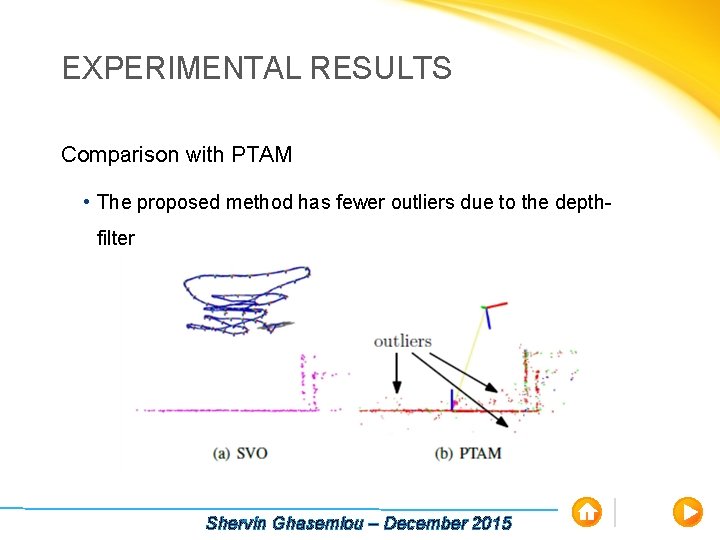

EXPERIMENTAL RESULTS

Comparison with PTAM:
- The proposed method has fewer outliers due to the depth filter

Questions?