Summary of Category 3 HENP Computing Systems and

- Slides: 19

Summary of Category 3 HENP Computing Systems and Infrastructure

Ian Fisk and Michael Ernst

CHEP 2003, March 28, 2003

Introduction

- We tried to break the week into themes
  - We discussed fabrics and architectures on Monday
    - Heard general talks about building and securing large multi-purpose facilities
    - As well as updates from a number of HENP computing efforts
  - We discussed emerging hardware and software technology on Tuesday
    - Review of the most recent PASTA report and update of commodity disk storage work
    - Software for flexible clusters: MOSIX. Advanced storage and data serving: CASTOR, Enstore, dCache, Data Farm and ROOT-IO
  - We discussed grid and other services on Thursday
    - Grid interfaces and storage management over the grid
    - Monitoring services
- It was a full week with a lot to discuss. Special thanks to all those who presented.
  - There is no way to cover very much of what was presented in a thirty-minute talk.

CAS 2002 -10 -24 Ian M. Fisk, UCSD 2

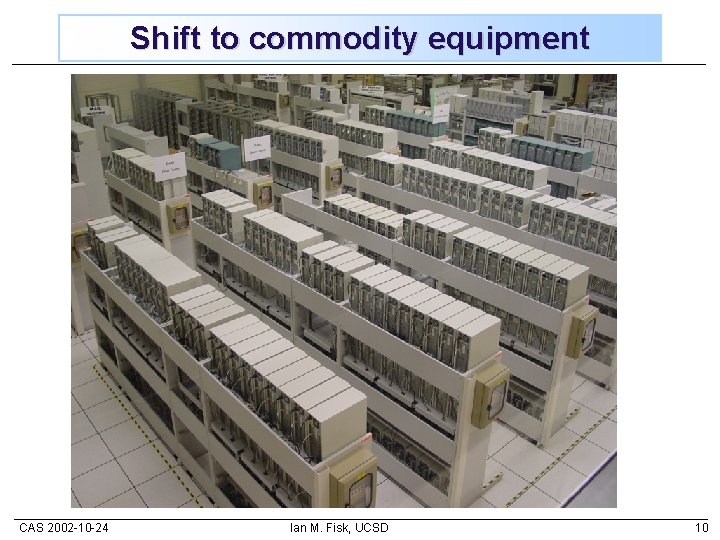

General Observations

- Grid functionality is coming quickly
  - Basic underlying concepts of distributed, parasitic, and multi-purpose computing are already being deployed in running experiments
  - Early implementation of interfaces for grid services to fabrics
  - I would expect that by the time the LHC experiments have real data, the tools and techniques will have been well broken in by experiments running today
- The shift to commodity equipment has accelerated since the last CHEP
  - I would argue that the shift is nearly complete
    - At least two large computing centers admitted to having nothing in their work rooms but Linux systems and a few Suns to debug software
  - This has resulted in the development of tools to help handle this complicated component environment
- With notable exceptions, high energy computing does not work well together
  - The individual experiments often have subtly different requirements, which results in completely independent development efforts

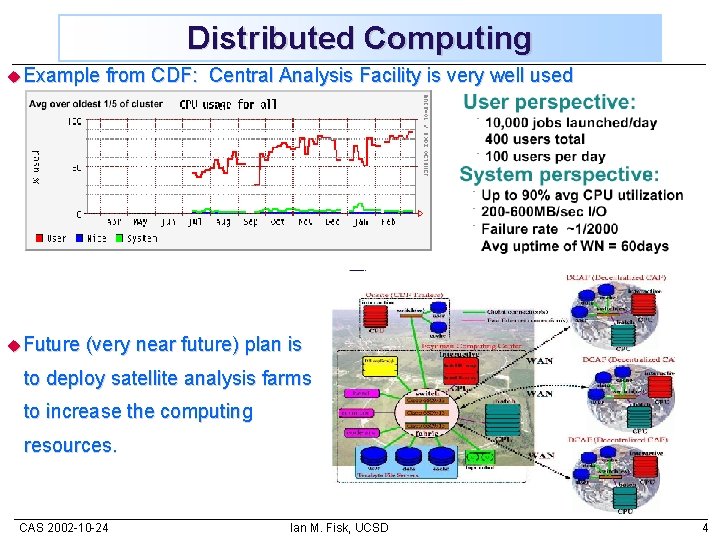

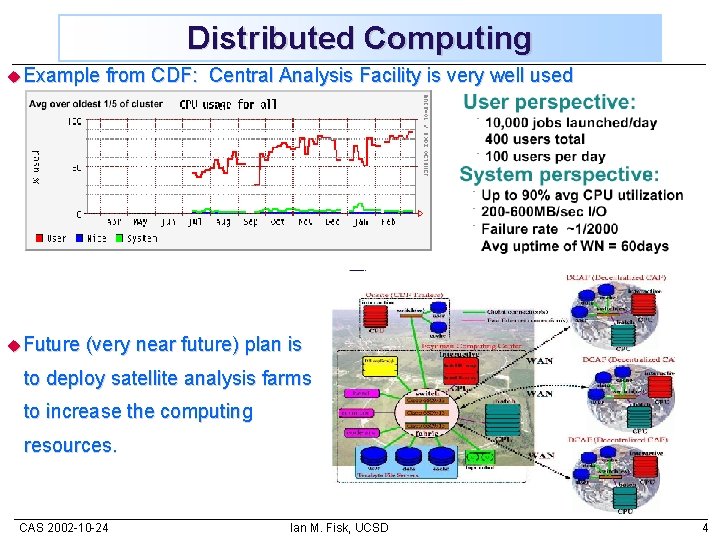

Distributed Computing

- Example from CDF: the Central Analysis Facility is very well used
- Future: the (very near future) plan is to deploy satellite analysis farms to increase the computing resources

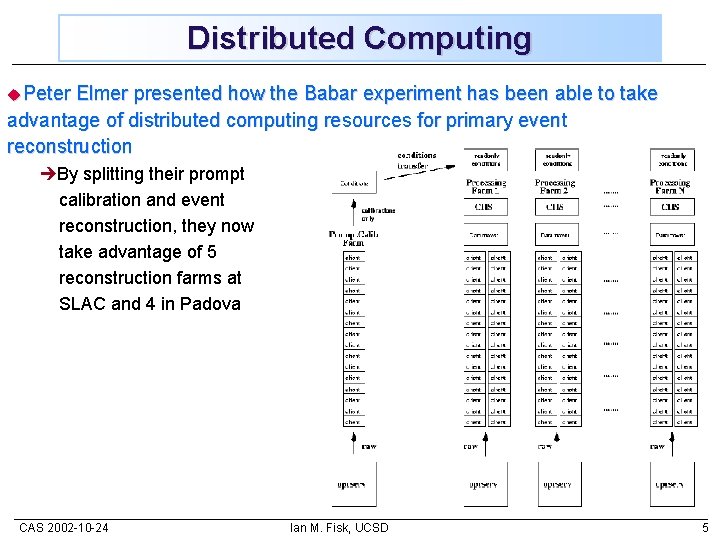

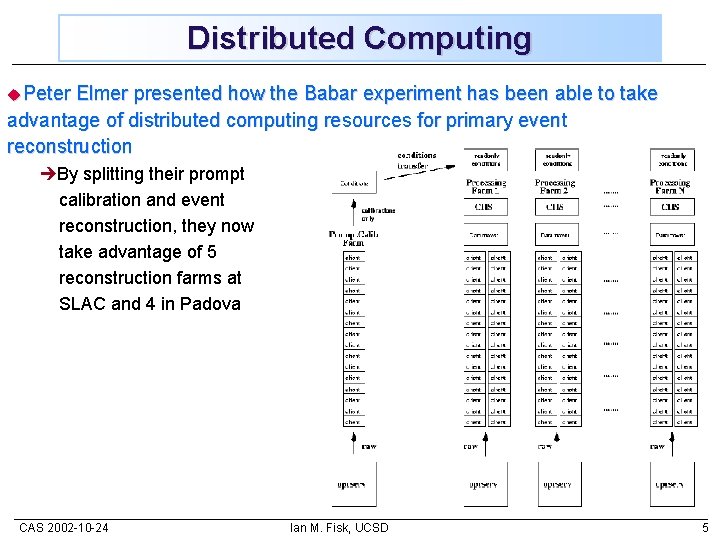

Distributed Computing

- Peter Elmer presented how the BaBar experiment has been able to take advantage of distributed computing resources for primary event reconstruction
  - By splitting their prompt calibration and event reconstruction, they now take advantage of 5 reconstruction farms at SLAC and 4 in Padova

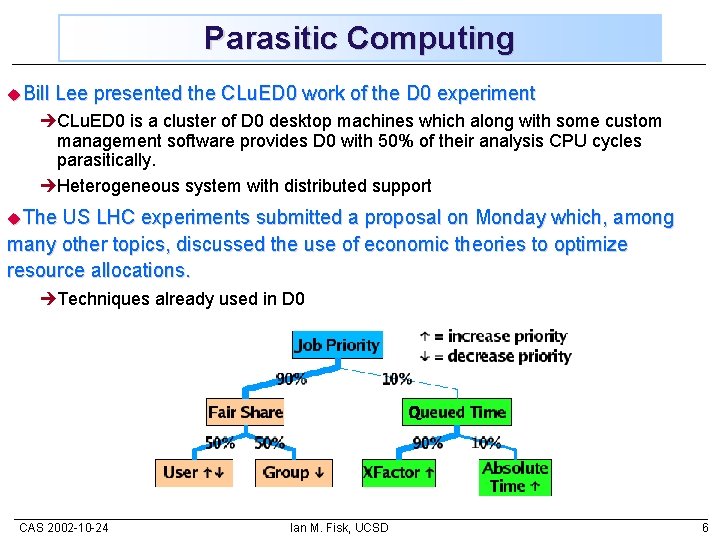

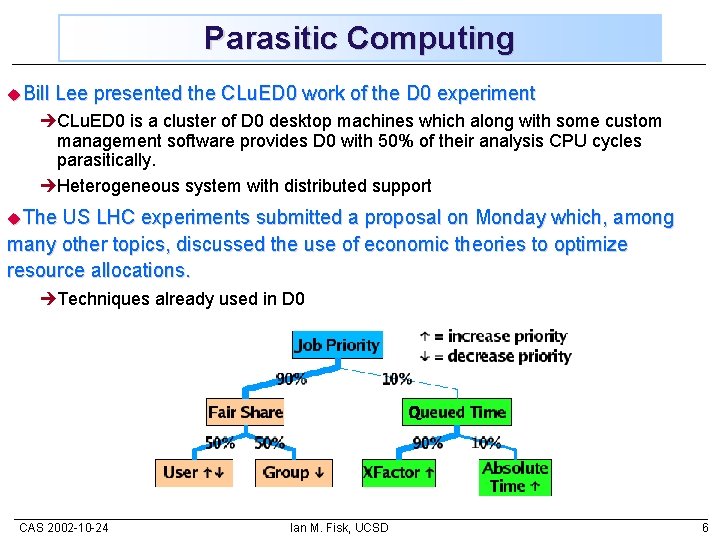

Parasitic Computing

- Bill Lee presented the CLuED0 work of the D0 experiment
  - CLuED0 is a cluster of D0 desktop machines which, along with some custom management software, provides D0 with 50% of their analysis CPU cycles parasitically
  - Heterogeneous system with distributed support
- The US LHC experiments submitted a proposal on Monday which, among many other topics, discussed the use of economic theories to optimize resource allocations
  - Techniques already used in D0

Multipurpose Computing

- Fundamental to a grid-connected facility is the ability to support multiple experiments at a minimum, and ideally multiple disciplines
  - The people responsible for computing systems have been thinking about how to make this possible, because so many regional computing centers have to support multiple experiments and user communities
  - John Gordon gave an interesting talk on whether it was possible to build a multipurpose center
    - John identified 6 categories of problems and discussed possible solutions
      - Software levels
      - ‘experts’
      - Local rules
      - Security
      - Firewalls
      - The accelerator centres

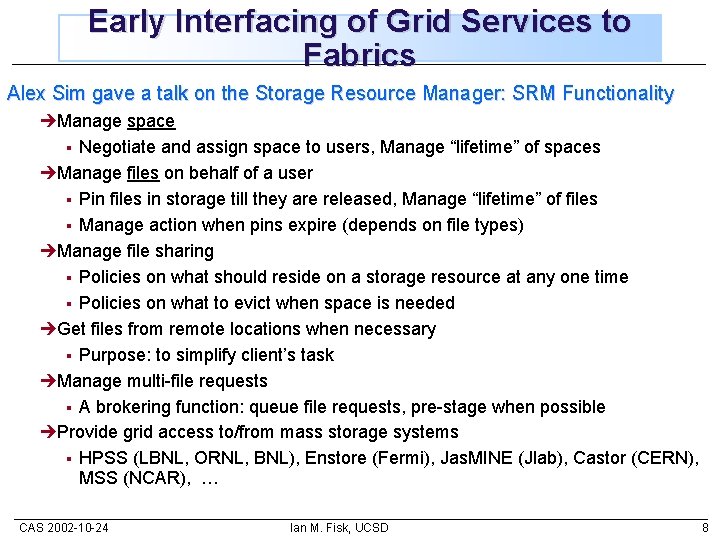

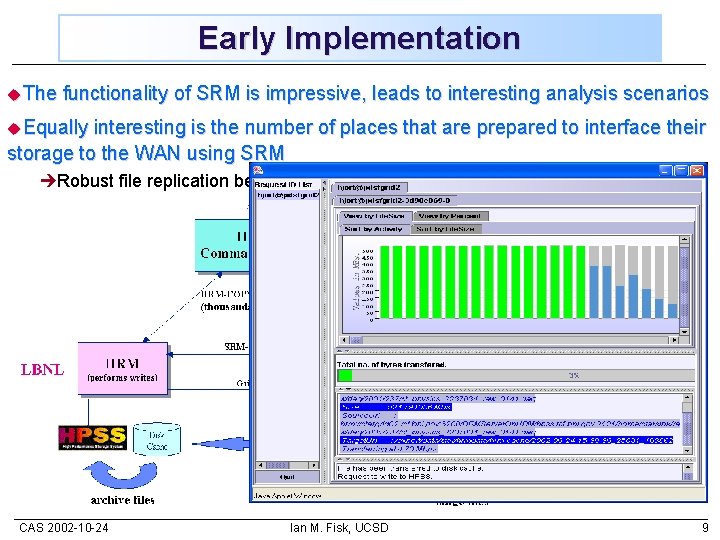

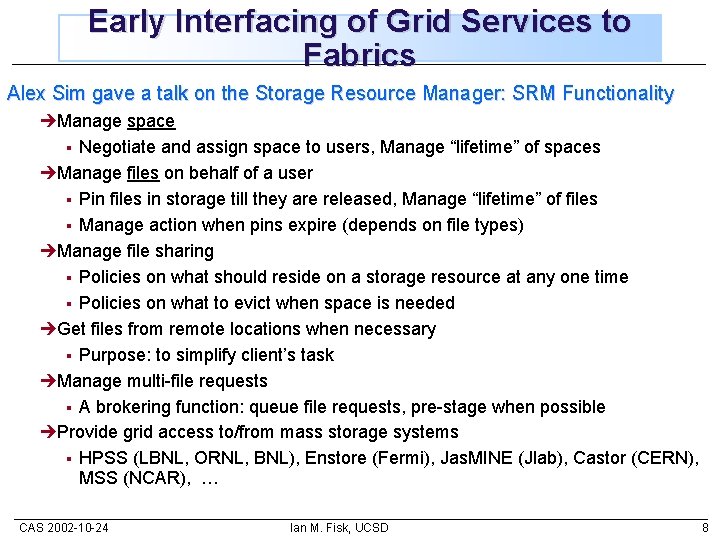

Early Interfacing of Grid Services to Fabrics

Alex Sim gave a talk on the Storage Resource Manager (SRM).

SRM Functionality

- Manage space
  - Negotiate and assign space to users, manage “lifetime” of spaces
- Manage files on behalf of a user
  - Pin files in storage till they are released, manage “lifetime” of files
  - Manage action when pins expire (depends on file types)
- Manage file sharing
  - Policies on what should reside on a storage resource at any one time
  - Policies on what to evict when space is needed
- Get files from remote locations when necessary
  - Purpose: to simplify client’s task
- Manage multi-file requests
  - A brokering function: queue file requests, pre-stage when possible
- Provide grid access to/from mass storage systems
  - HPSS (LBNL, ORNL, BNL), Enstore (Fermi), JasMINE (Jlab), Castor (CERN), MSS (NCAR), …
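
The pinning, lifetime, and eviction ideas in the SRM functionality list can be illustrated with a small toy model. This is only a sketch under stated assumptions: `ToyStorageManager`, `StoredFile`, and all method names are hypothetical and are not the SRM interface, and least-recently-used eviction is just one possible policy, not one the talk prescribes.

```python
from dataclasses import dataclass, field


@dataclass
class StoredFile:
    size: int                 # size in arbitrary units
    pin_expires: float = 0.0  # the file is pinned until this time
    last_access: float = 0.0  # used for the (assumed) LRU eviction policy


@dataclass
class ToyStorageManager:
    """Hypothetical in-memory model of a managed storage space."""
    capacity: int
    files: dict = field(default_factory=dict)

    def used(self) -> int:
        return sum(f.size for f in self.files.values())

    def pin(self, name: str, lifetime: float, now: float) -> None:
        """Pin a file for `lifetime` time units starting at `now`."""
        f = self.files[name]
        f.pin_expires = max(f.pin_expires, now + lifetime)
        f.last_access = now

    def release(self, name: str) -> None:
        """Release the pin early; the file becomes evictable."""
        self.files[name].pin_expires = 0.0

    def _make_room(self, needed: int, now: float) -> bool:
        """Evict unpinned files, least recently used first, if that frees enough."""
        free = self.capacity - self.used()
        unpinned = sorted(
            (n for n, f in self.files.items() if f.pin_expires <= now),
            key=lambda n: self.files[n].last_access,
        )
        victims = []
        for name in unpinned:
            if free >= needed:
                break
            victims.append(name)
            free += self.files[name].size
        if free < needed:
            return False  # nothing is evicted when the request cannot be met
        for name in victims:
            del self.files[name]
        return True

    def stage(self, name: str, size: int, lifetime: float, now: float) -> bool:
        """Bring a file into the space and pin it; False if there is no room."""
        if name not in self.files:
            if not self._make_room(size, now):
                return False
            self.files[name] = StoredFile(size=size)
        self.pin(name, lifetime, now)
        return True
```

In this sketch a request for a new file fails while all resident files are pinned, and succeeds once a pin is released or its lifetime has passed, which mirrors the "manage action when pins expire" item in spirit only.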

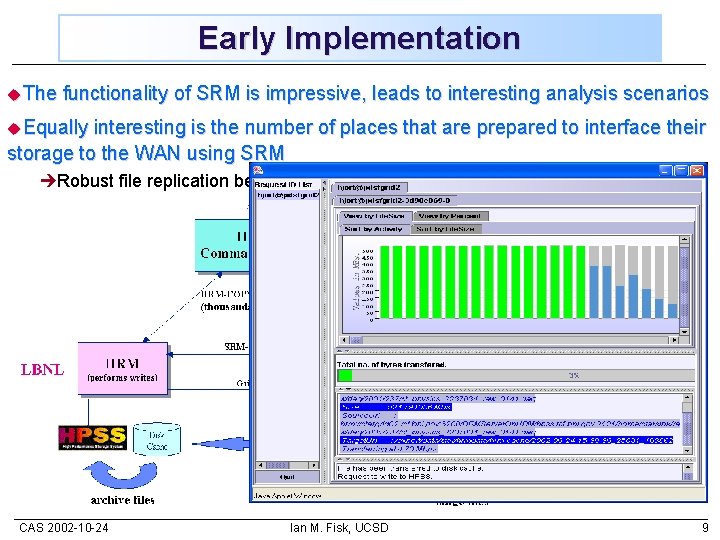

Early Implementation

- The functionality of SRM is impressive and leads to interesting analysis scenarios
- Equally interesting is the number of places that are prepared to interface their storage to the WAN using SRM
  - Robust file replication between BNL and LBNL

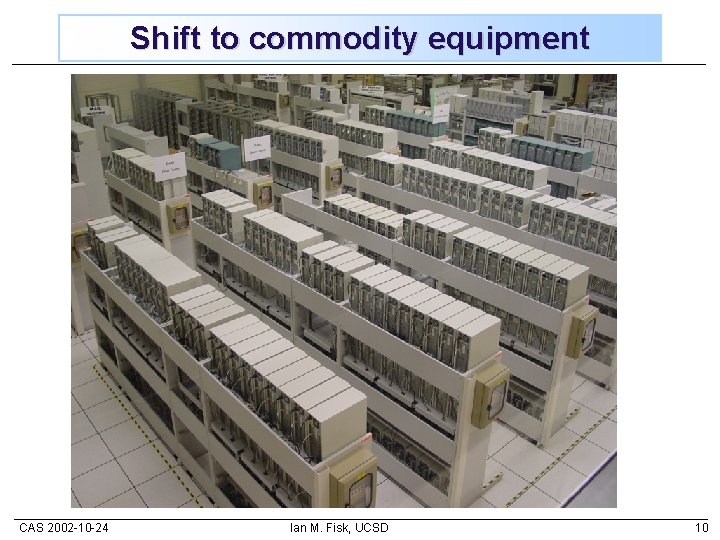

Shift to commodity equipment

Benefits and Complications

- The benefit is very substantial computing resources at a reasonable hardware cost
- The complication is the scale and complexity of the commodity computing cluster
  - A reasonably big computing cluster today might be 1000 systems
    - With all the possible hardware problems associated with 1000 systems bought from the lowest bidder
  - Considerable amount of deployment, integration, and development effort to create tools that allow a shelf or rack of Linux boxes to behave like a computing resource
    - Configuration Tools
    - Monitoring Tools
    - Tools for systems control
    - Scheduling Tools
    - Security Techniques

Configuration Tools

- We heard an interesting talk from Thorsten Kleinwork on installing and running systems at CERN
  - Systems are installed with kickstart and RPMs
- CERN and several other centers are deploying the configuration tools from EDG WP4
  - Pan & CDB (Configuration Data Base) for describing hosts
  - Pan is a very flexible language for describing host configuration information
    - Expressed in templates (ASCII)
    - Allows includes (inheritance)
  - Pan is compiled into XML, inside CDB
  - XML is downloaded and the information is provided by CCConfig, which is the high-level API
- It is complicated even to track what it is you have
  - We had an interesting presentation from Jens Kreutzkamp from DESY about how they track their IT assets
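
The flow described above (templates with includes, compiled to XML, then read back through a high-level API) can be sketched roughly as follows. This is not Pan syntax and not the CDB or CCConfig interface: the template dictionary, `resolve`, `to_xml`, and `get_value` are all hypothetical names chosen for illustration, and the XML layout is an assumption.

```python
import xml.etree.ElementTree as ET

# Hypothetical templates: each may include others and override their values.
TEMPLATES = {
    "base": {"include": [], "values": {"os": "linux", "ntp": "on"}},
    "batch_node": {"include": ["base"],
                   "values": {"role": "batch", "scheduler": "on"}},
    "node001": {"include": ["batch_node"], "values": {"ntp": "off"}},
}


def resolve(name, templates):
    """Merge included templates first so the including template wins.

    No cycle detection is done; this sketch assumes includes form a tree.
    """
    merged = {}
    template = templates[name]
    for parent in template["include"]:
        merged.update(resolve(parent, templates))
    merged.update(template["values"])
    return merged


def to_xml(host, profile):
    """Serialize a resolved profile to an XML string (layout is an assumption)."""
    root = ET.Element("profile", {"host": host})
    for key in sorted(profile):
        ET.SubElement(root, "item", {"name": key}).text = profile[key]
    return ET.tostring(root, encoding="unicode")


def get_value(xml_text, key):
    """Stand-in for a high-level configuration API: look up one value."""
    root = ET.fromstring(xml_text)
    for item in root.findall("item"):
        if item.get("name") == key:
            return item.text
    return None
```

For example, `get_value(to_xml("node001", resolve("node001", TEMPLATES)), "ntp")` returns the host-level override rather than the value inherited from the base template.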

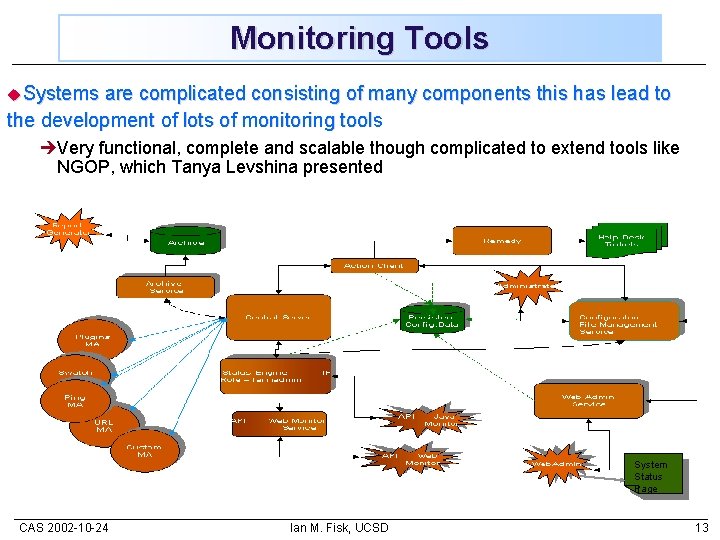

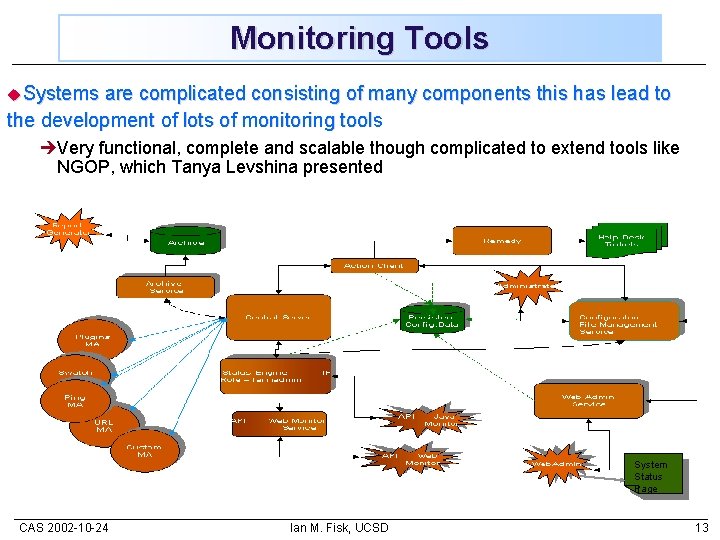

Monitoring Tools

- Systems are complicated, consisting of many components; this has led to the development of lots of monitoring tools
  - Tools like NGOP, which Tanya Levshina presented, are very functional, complete, and scalable, though complicated to extend

System Status Page

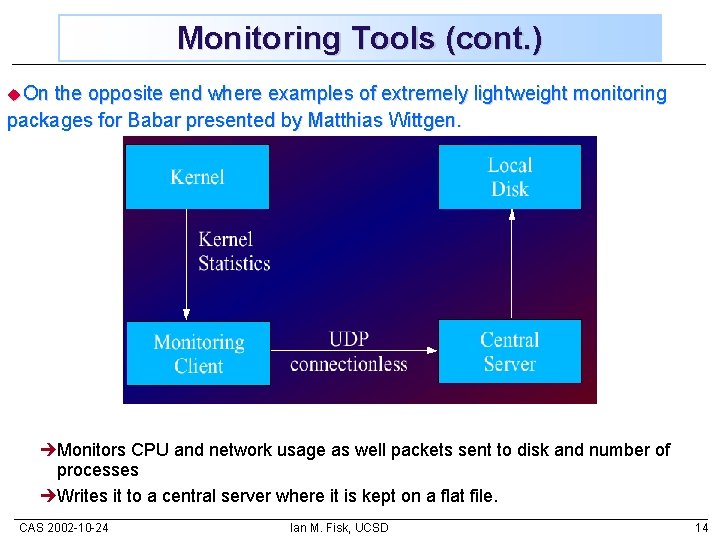

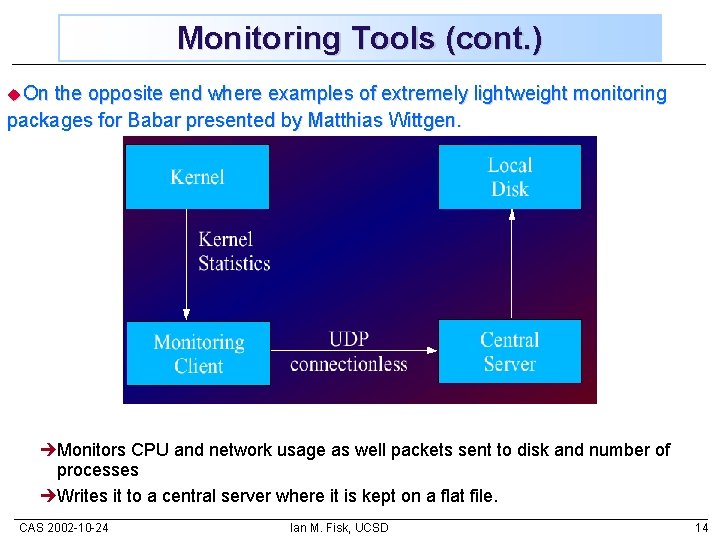

Monitoring Tools (cont.)

- On the opposite end were examples of extremely lightweight monitoring packages for BaBar, presented by Matthias Wittgen
  - Monitors CPU and network usage as well as packets sent to disk and number of processes
  - Writes it to a central server, where it is kept in a flat file
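
As an illustration of how little such a lightweight monitor needs, the sketch below samples a couple of cheap metrics and appends them to a flat file as one `key=value` line per sample. It is not the BaBar package: the function names and the record format are assumptions, load average stands in for CPU usage, network and disk counters are omitted, and shipping the record to a central server is left out.

```python
import os
import time

FIELDS = ("time", "host", "load1", "nproc")


def sample(host):
    """Collect a few cheap metrics; unavailable ones are reported as None."""
    try:
        load1 = os.getloadavg()[0]          # 1-minute load average (Unix only)
    except (AttributeError, OSError):
        load1 = None
    try:
        # On Linux, numeric entries in /proc correspond to running processes.
        nproc = sum(1 for entry in os.listdir("/proc") if entry.isdigit())
    except OSError:
        nproc = None
    return {"time": int(time.time()), "host": host,
            "load1": load1, "nproc": nproc}


def format_record(record):
    """One line per sample; assumes values contain no whitespace."""
    return " ".join(f"{key}={record[key]}" for key in FIELDS)


def append_record(path, record):
    """Append a sample to the flat file."""
    with open(path, "a") as handle:
        handle.write(format_record(record) + "\n")


def read_records(path):
    """Parse the flat file back into dictionaries of strings."""
    with open(path) as handle:
        return [dict(item.split("=", 1) for item in line.split())
                for line in handle if line.strip()]
```

A flat file of this kind can be inspected with ordinary text tools, which is part of the appeal of the lightweight approach compared with a full monitoring framework.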

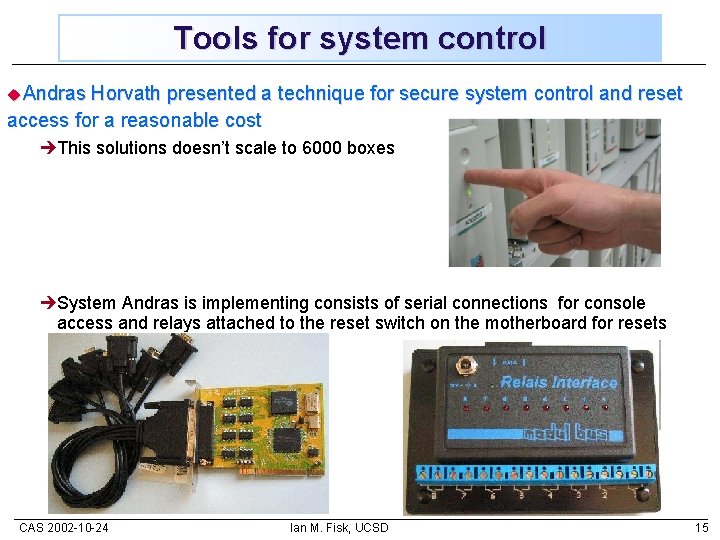

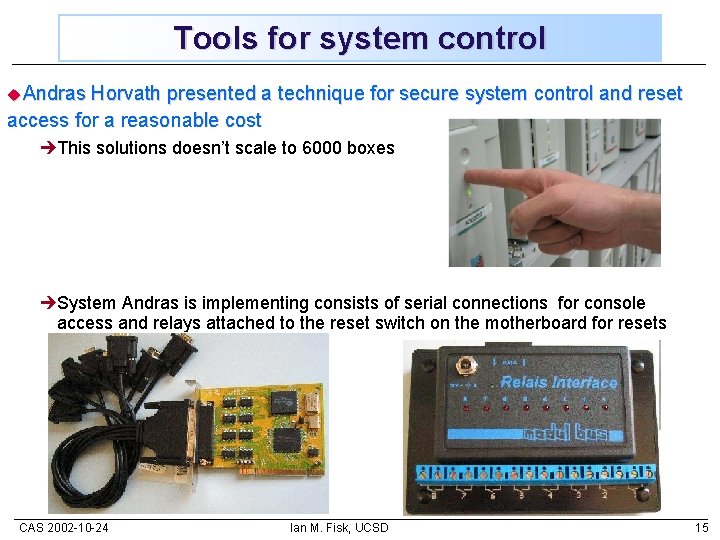

Tools for system control

- Andras Horvath presented a technique for secure system control and reset access for a reasonable cost
  - This solution doesn’t scale to 6000 boxes
  - The system Andras is implementing consists of serial connections for console access and relays attached to the reset switch on the motherboard for resets

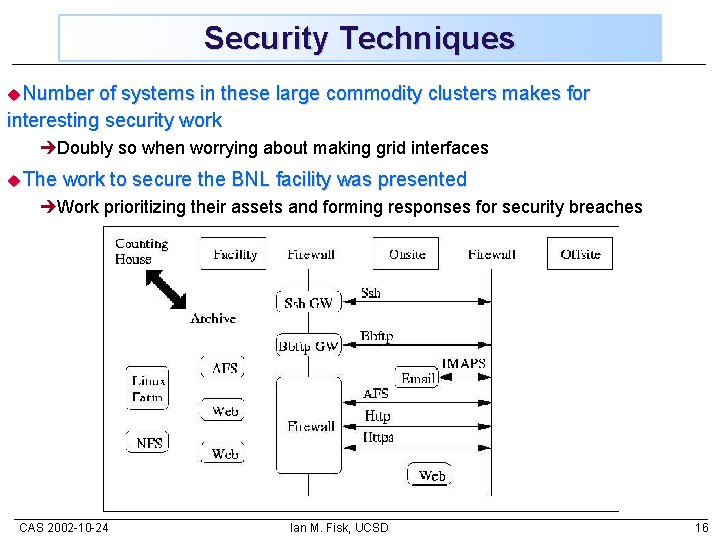

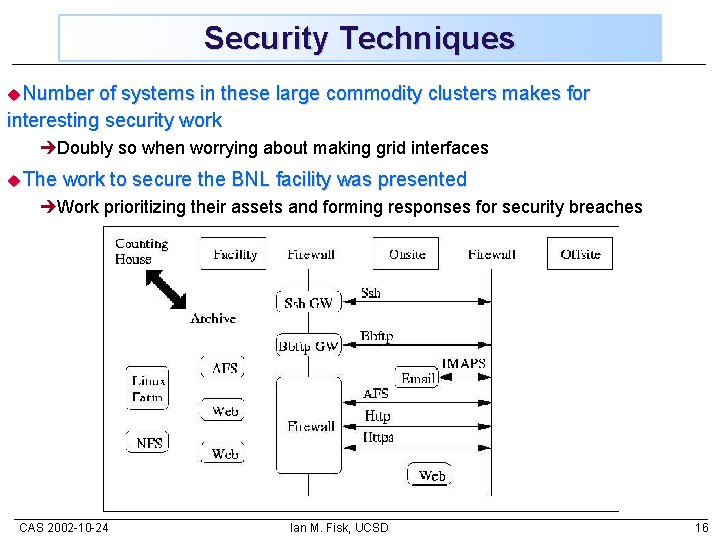

Security Techniques

- The number of systems in these large commodity clusters makes for interesting security work
  - Doubly so when worrying about making grid interfaces
- The work to secure the BNL facility was presented
  - Work prioritizing their assets and forming responses for security breaches

Field doesn’t cooperate well

- This is not necessarily a problem, nor is it a criticism, simply an observation
  - One doesn’t see a lot of common detector building projects, so maybe it isn’t surprising that there aren’t a lot of common computing development efforts
  - I noticed during the week that there is a lot of duplication of effort, even between experiments that are geographically close
  - We have forums for exchange like HEPiX and the Large Cluster Workshop meetings
    - Even with these, we don’t seem to do much development in common
- There are notable exceptions
  - Alan Silverman presented the work to write a guide to building and operating a large cluster
    - Their noble if somewhat ambitious goal is to “Produce the definitive guide to building and running a cluster - how to choose, acquire, test and operate the hardware; software installation and upgrade tools; performance mgmt, logging, accounting, alarms, security, etc”

Grid Projects

- The grid projects are another area in which the field is working effectively together
  - A number of sites indicated the desire to use common tools developed by EDG Work Package 4
  - Good buy-in from fabric managers about the use of SRM
  - Software deployment through the VDT

Conclusions

- It was a long and interesting week
- Apologies for not being able to summarize everything
  - We had very interesting discussions and presentations yesterday about how to interface the fabrics and the grid services
  - I also didn’t get a chance to cover some of the hardware and software R&D results
- I encourage people to look at the web page. Almost all the talks were posted.