Privacy-preserving and Secure AI FCAI Minisymposium: Machine Learning in the presence of adversaries

- Slides: 15


Machine Learning in the presence of adversaries

N. Asokan, http://asokan.org/asokan/, @nasokan

(joint work with Mika Juuti, Jian Liu, Andrew Paverd and Samuel Marchal)

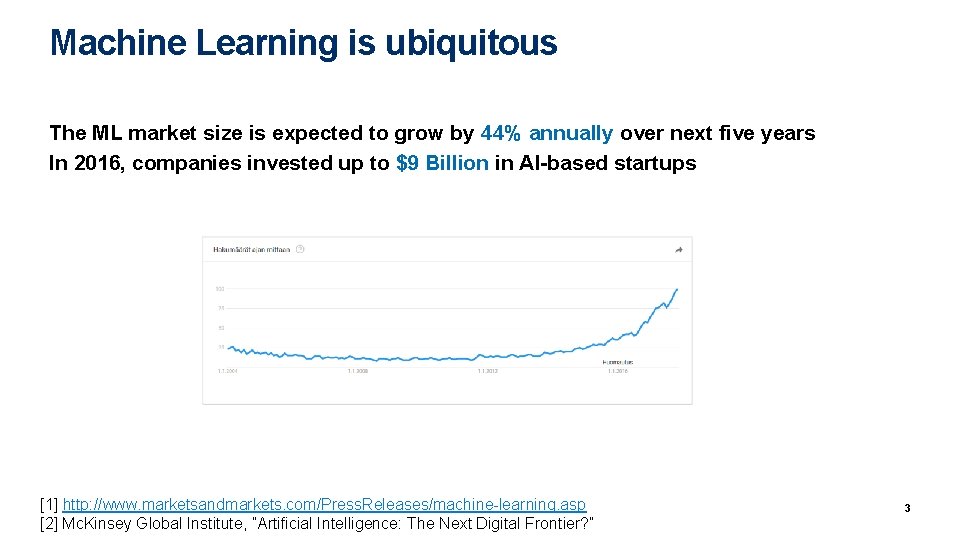

Machine Learning is ubiquitous

- The ML market size is expected to grow by 44% annually over the next five years
- In 2016, companies invested up to $9 billion in AI-based startups
- Machine Learning and Deep Learning are getting more and more attention...

[1] http://www.marketsandmarkets.com/PressReleases/machine-learning.asp
[2] McKinsey Global Institute, "Artificial Intelligence: The Next Digital Frontier?"

How do we evaluate ML-based systems?

- Effectiveness of inference
  - measures of accuracy
- Performance
  - inference speed and memory consumption
- ...

Meet these criteria even in the presence of adversaries

Security and Privacy of Machine Learning

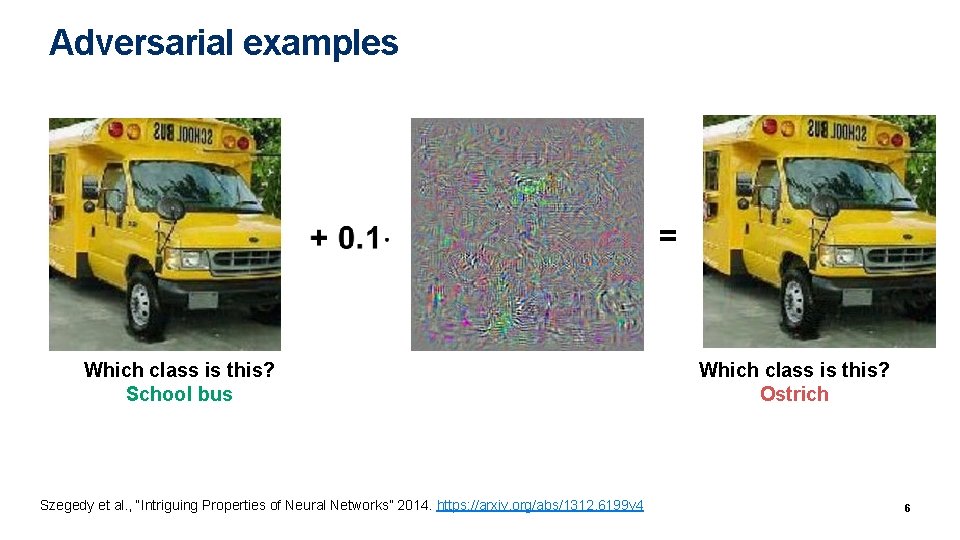

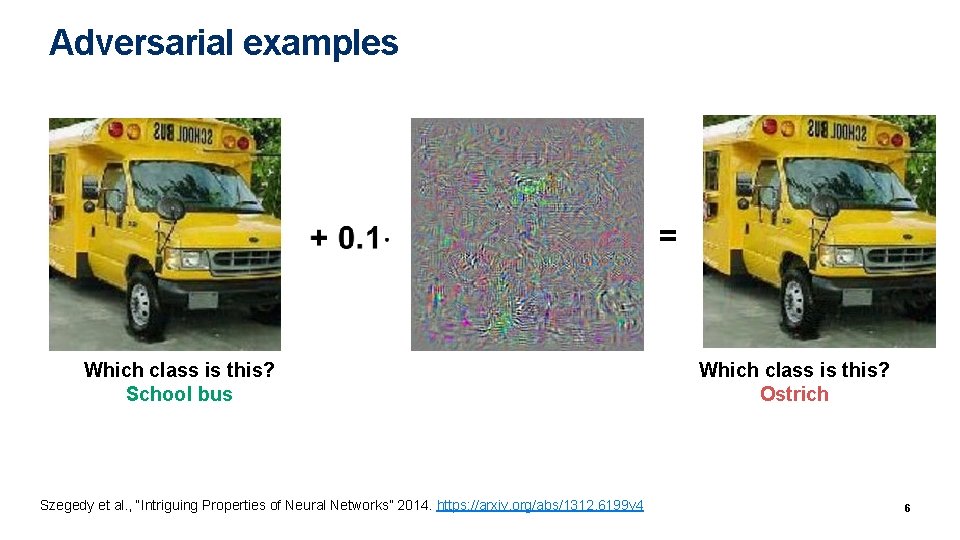

Adversarial examples

- Original image. Which class is this? School bus
- Original image plus a small perturbation. Which class is this? Ostrich

Szegedy et al., "Intriguing Properties of Neural Networks", 2014. https://arxiv.org/abs/1312.6199v4
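The idea behind the slide can be illustrated on a much smaller model. The sketch below is an assumption-laden toy, not the method of Szegedy et al. (who search for perturbations with box-constrained L-BFGS on deep networks): it applies a gradient-sign perturbation to a hypothetical four-feature logistic classifier. The weights `w`, the input `x` and the budget `epsilon` are made up for illustration.

```python
import math

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    """Return -1, 0 or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def gradient_sign_perturb(w, x, epsilon, target_class):
    """Shift every feature by at most epsilon towards target_class.

    For a logistic model the gradient of the logit with respect to x
    is w itself, so the gradient sign is simply sign(w).
    """
    direction = 1 if target_class == 1 else -1
    return [xi + direction * epsilon * sign(wi) for wi, xi in zip(w, x)]

# Hypothetical toy model and input (not taken from the slides).
w = [0.5, -0.25, 0.75, -0.5]
b = 0.0
x = [-0.2, 0.2, -0.2, 0.2]

x_adv = gradient_sign_perturb(w, x, epsilon=0.3, target_class=1)

print("clean input  -> class", int(predict_proba(w, b, x) >= 0.5))
print("adversarial  -> class", int(predict_proba(w, b, x_adv) >= 0.5))
```

Each feature moves by no more than `epsilon`, yet the predicted class changes. In high-dimensional inputs such as images, many small per-pixel changes can add up in the same way.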

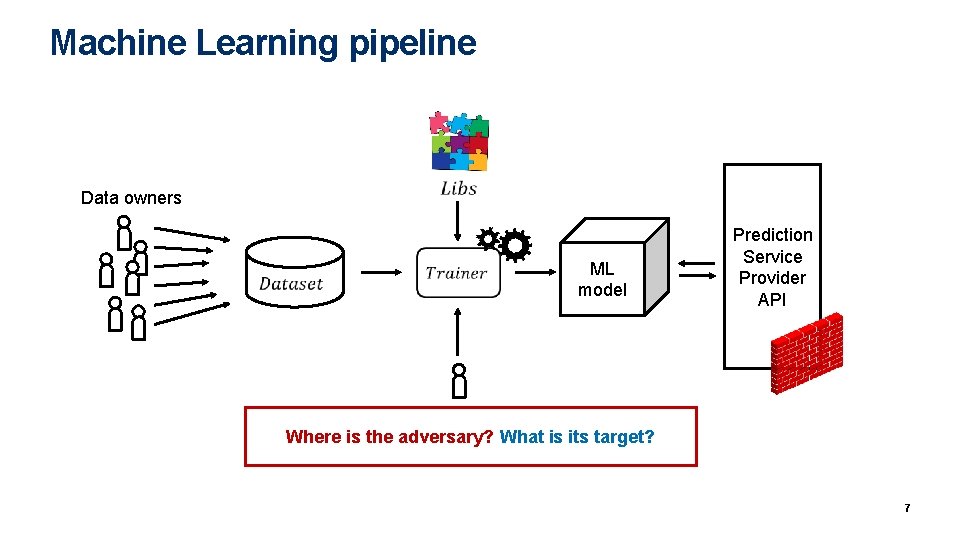

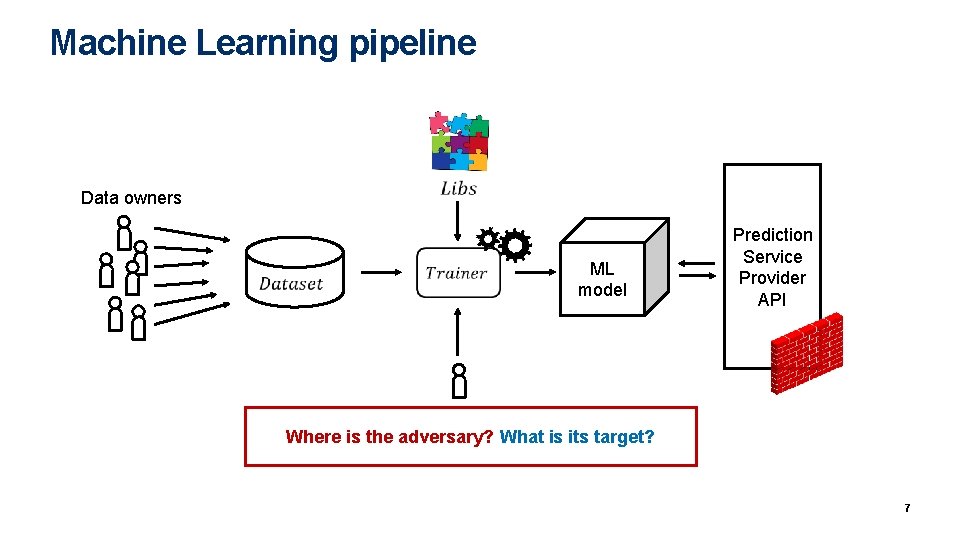

Machine Learning pipeline

Diagram labels: Data owners, Analyst, ML model, Prediction Service Provider, API, Client

Where is the adversary? What is its target?

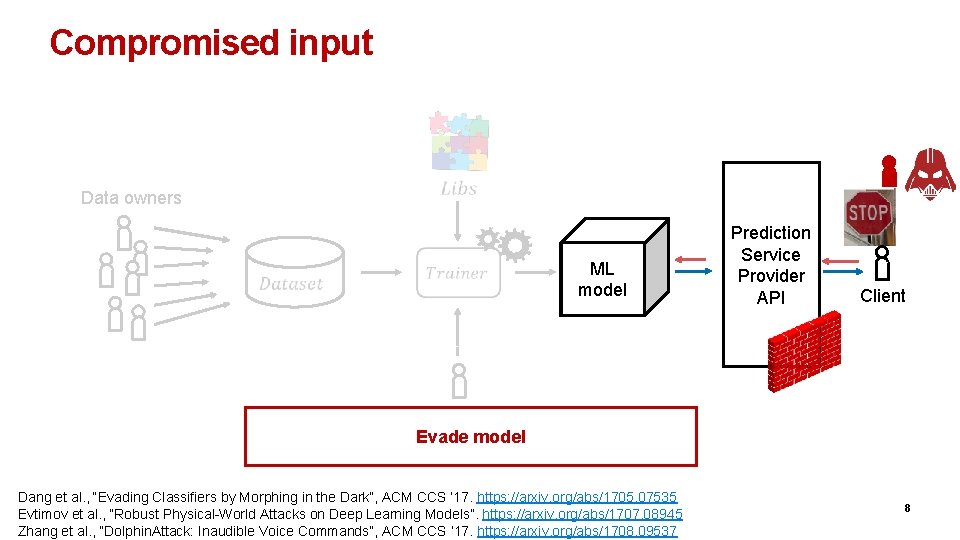

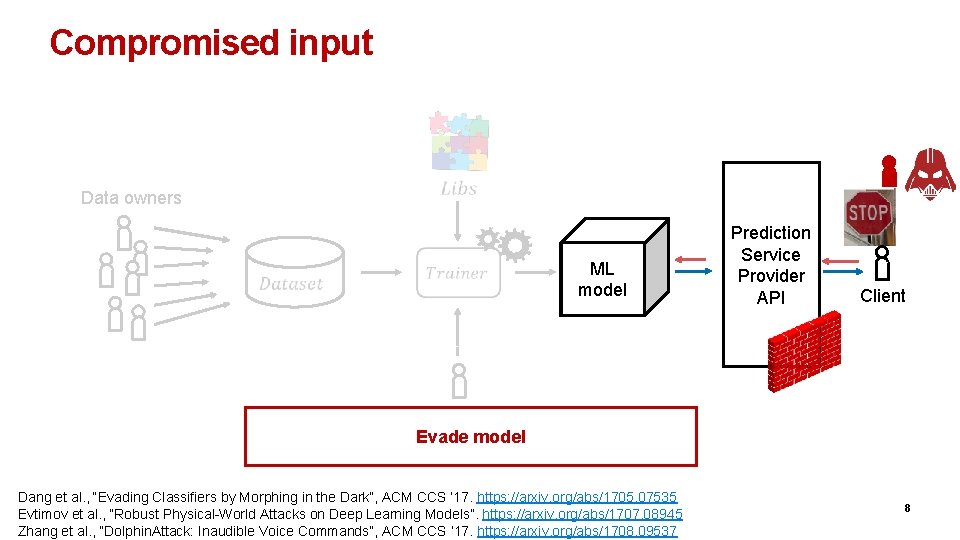

Compromised input

Diagram labels: Data owners, Analyst, ML model, Prediction Service Provider, API, Client; input shown: "Speed limit 80 km/h"

Target: Evade model

- Dang et al., "Evading Classifiers by Morphing in the Dark", ACM CCS '17. https://arxiv.org/abs/1705.07535
- Evtimov et al., "Robust Physical-World Attacks on Deep Learning Models". https://arxiv.org/abs/1707.08945
- Zhang et al., "DolphinAttack: Inaudible Voice Commands", ACM CCS '17. https://arxiv.org/abs/1708.09537

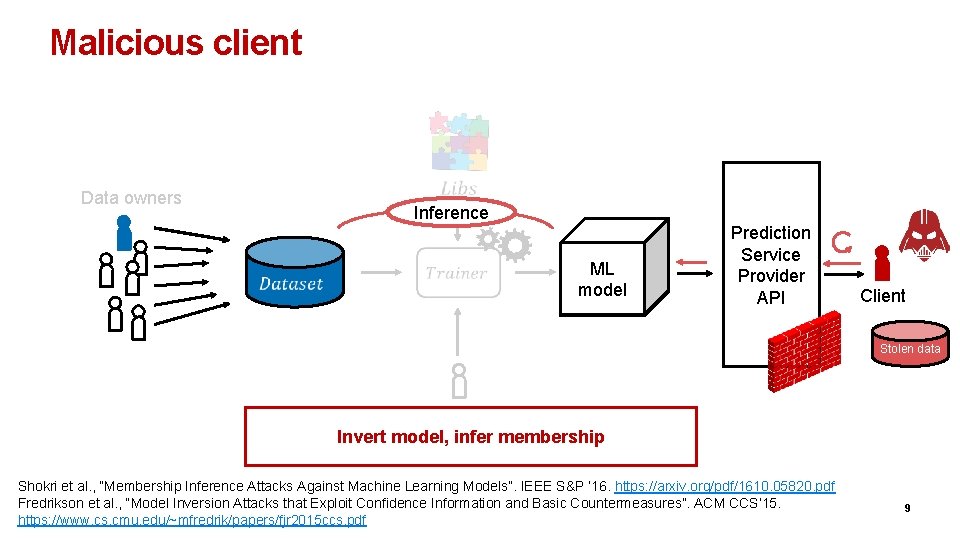

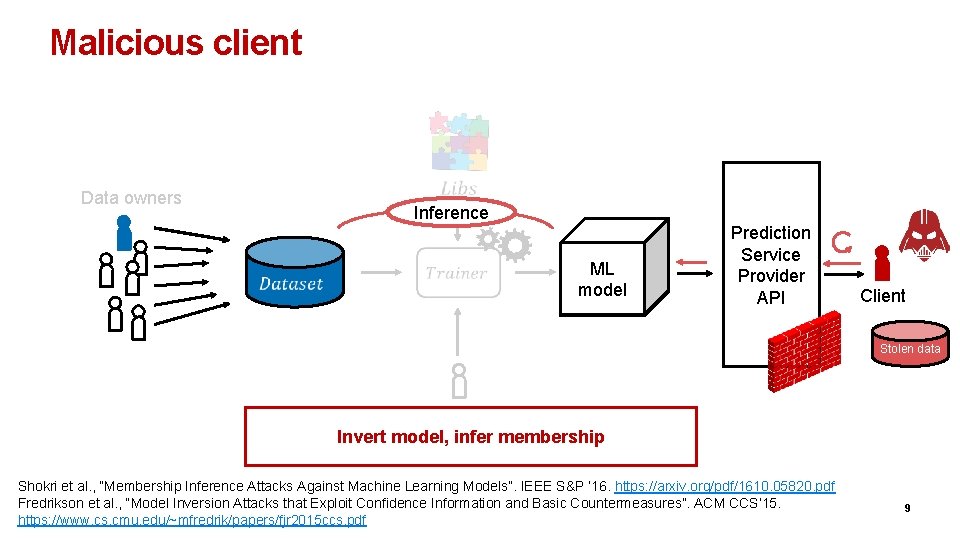

Malicious client

Diagram labels: Data owners, Analyst, ML model, Prediction Service Provider, API, Client, Inference, Stolen data

Target: Invert model, infer membership

- Shokri et al., "Membership Inference Attacks Against Machine Learning Models", IEEE S&P '17. https://arxiv.org/pdf/1610.05820.pdf
- Fredrikson et al., "Model Inversion Attacks that Exploit Confidence Information and Basic Countermeasures", ACM CCS '15. https://www.cs.cmu.edu/~mfredrik/papers/fjr2015ccs.pdf
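A rough intuition for membership inference is that an overfitted model tends to be more confident on records it was trained on. The sketch below is a deliberately simplified confidence-threshold baseline, not the shadow-model attack of Shokri et al. The "model" (`make_overfit_model`), the threshold and all data points are hypothetical stand-ins chosen so the effect is easy to see.

```python
import math

def make_overfit_model(training_points):
    """Hypothetical model whose confidence decays with the distance
    to the nearest training point (an extreme form of overfitting)."""
    def confidence(x):
        nearest = min(abs(x - t) for t in training_points)
        return math.exp(-nearest)
    return confidence

def infer_membership(query_model, x, threshold=0.9):
    """Guess that x was a training record if the model is very confident."""
    return query_model(x) >= threshold

# Hypothetical training set held by the service provider.
train = [1.0, 4.0, 7.5]
model = make_overfit_model(train)

# The malicious client only sees the confidence returned by the API.
members = [infer_membership(model, x) for x in train]
non_members = [infer_membership(model, x) for x in [2.5, 5.5, 9.0]]

print("guesses for training records:", members)
print("guesses for unseen records:  ", non_members)
```

Real models leak far less cleanly than this toy, which is why practical attacks calibrate the decision rule, for example with models trained on similar data.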

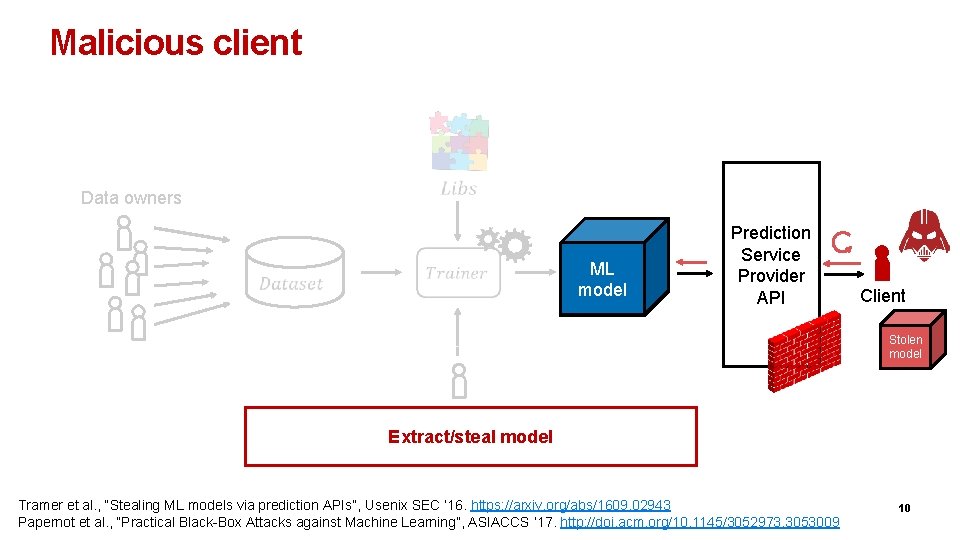

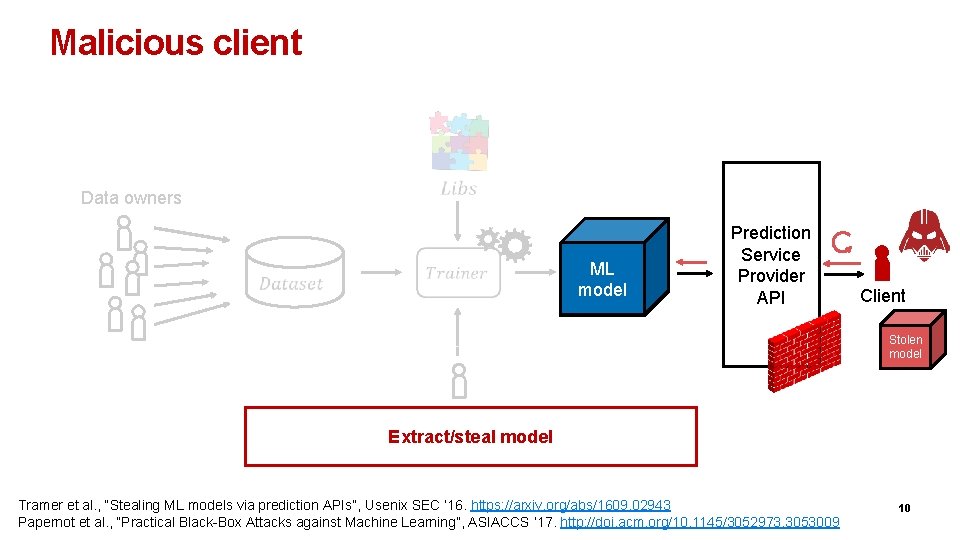

Malicious client

Diagram labels: Data owners, Analyst, ML model, Prediction Service Provider, API, Client, Stolen model

Target: Extract/steal model

- Tramèr et al., "Stealing ML models via prediction APIs", Usenix SEC '16. https://arxiv.org/abs/1609.02943
- Papernot et al., "Practical Black-Box Attacks against Machine Learning", ASIACCS '17. http://doi.acm.org/10.1145/3052973.3053009
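For simple models, extraction through a prediction API can be as direct as solving equations. The sketch below is in the spirit of the equation-solving attacks discussed by Tramèr et al., but it is only a minimal illustration under strong assumptions: the victim is a logistic regression model, the API returns exact confidence values, and the client may query arbitrary inputs. The names `make_prediction_api` and `extract_logistic_model` and the secret parameters are hypothetical.

```python
import math

def make_prediction_api(w, b):
    """Service provider side: expose only confidence scores, not w and b."""
    def api(x):
        z = sum(wi * xi for wi, xi in zip(w, x)) + b
        return 1.0 / (1.0 + math.exp(-z))
    return api

def logit(p):
    """Inverse of the logistic function."""
    return math.log(p / (1.0 - p))

def extract_logistic_model(api, dim):
    """Client side: recover (w, b) with dim + 1 queries.

    logit(api(x)) = w . x + b, so querying the zero vector yields b and
    querying each unit vector e_i yields w_i + b.
    """
    zero = [0.0] * dim
    b_hat = logit(api(zero))
    w_hat = []
    for i in range(dim):
        unit = zero.copy()
        unit[i] = 1.0
        w_hat.append(logit(api(unit)) - b_hat)
    return w_hat, b_hat

# Hypothetical secret model held by the service provider.
secret_w, secret_b = [1.5, -2.0, 0.25], -0.5
api = make_prediction_api(secret_w, secret_b)

w_hat, b_hat = extract_logistic_model(api, dim=3)
print("recovered weights:", [round(v, 6) for v in w_hat])
print("recovered bias:   ", round(b_hat, 6))
```

Rounding or withholding confidence values makes this particular recovery harder, which is one reason API output design matters for model confidentiality.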

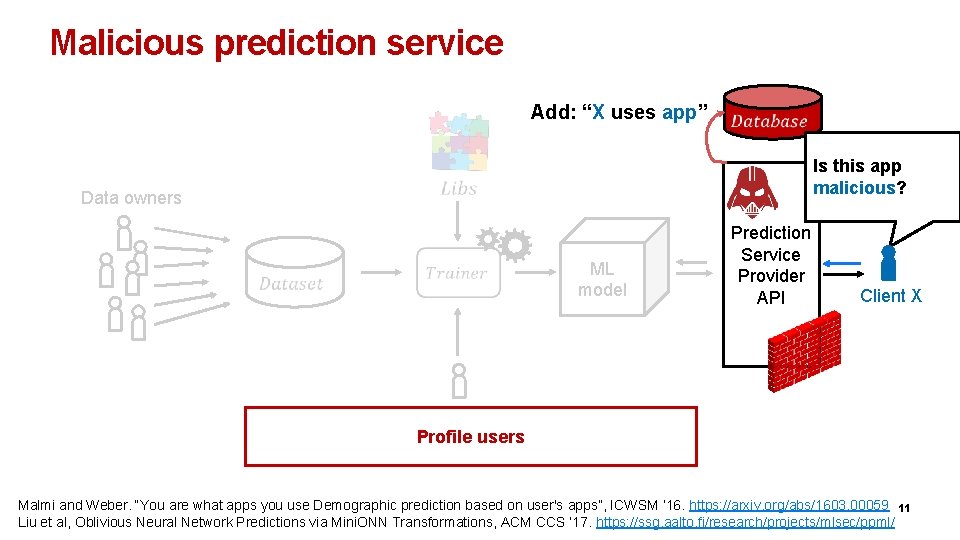

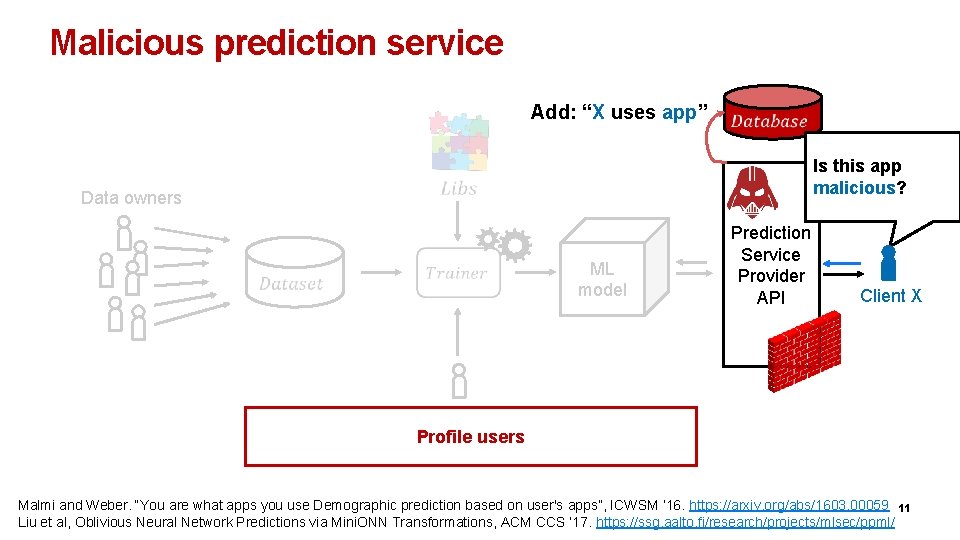

Malicious prediction service

Diagram labels: Data owners, Analyst, ML model, Prediction Service Provider, API, Client X; query: "Is this app malicious?"; provider records: Add: "X uses app"

Target: Profile users

- Malmi and Weber, "You Are What Apps You Use: Demographic Prediction Based on User's Apps", ICWSM '16. https://arxiv.org/abs/1603.00059
- Liu et al., "Oblivious Neural Network Predictions via MiniONN Transformations", ACM CCS '17. https://ssg.aalto.fi/research/projects/mlsec/ppml/

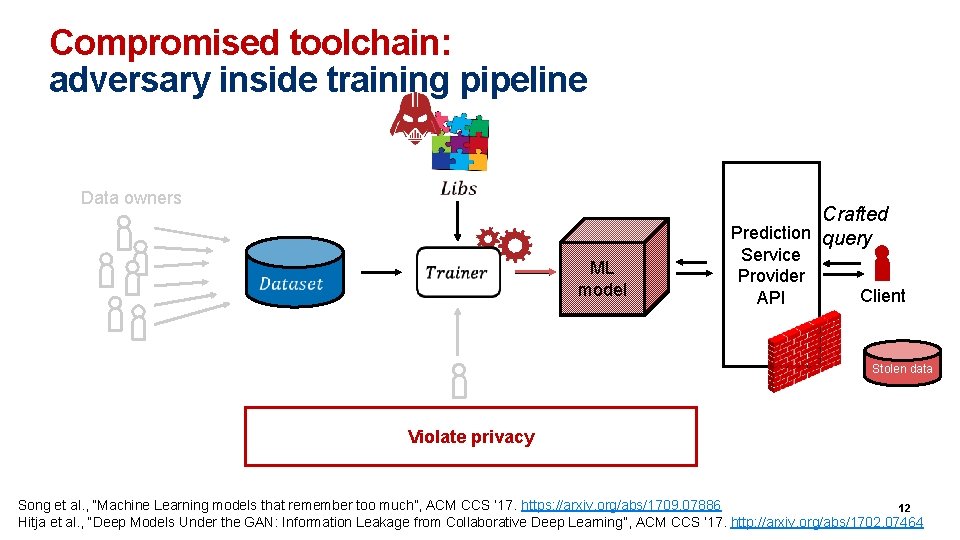

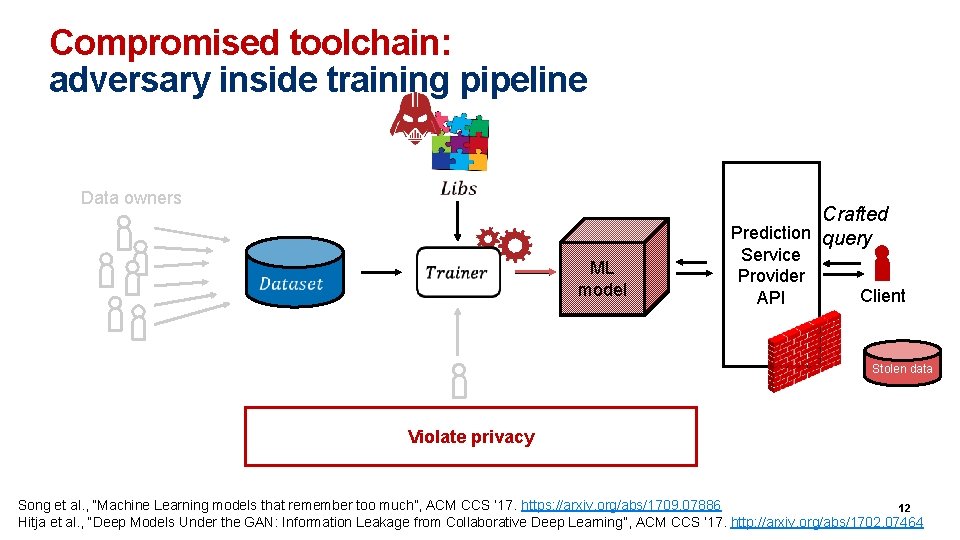

Compromised toolchain: adversary inside training pipeline

Diagram labels: Data owners, Analyst, ML model, Prediction Service Provider, API, Client, Crafted query, Stolen data

Target: Violate privacy

- Song et al., "Machine Learning models that remember too much", ACM CCS '17. https://arxiv.org/abs/1709.07886
- Hitaj et al., "Deep Models Under the GAN: Information Leakage from Collaborative Deep Learning", ACM CCS '17. http://arxiv.org/abs/1702.07464

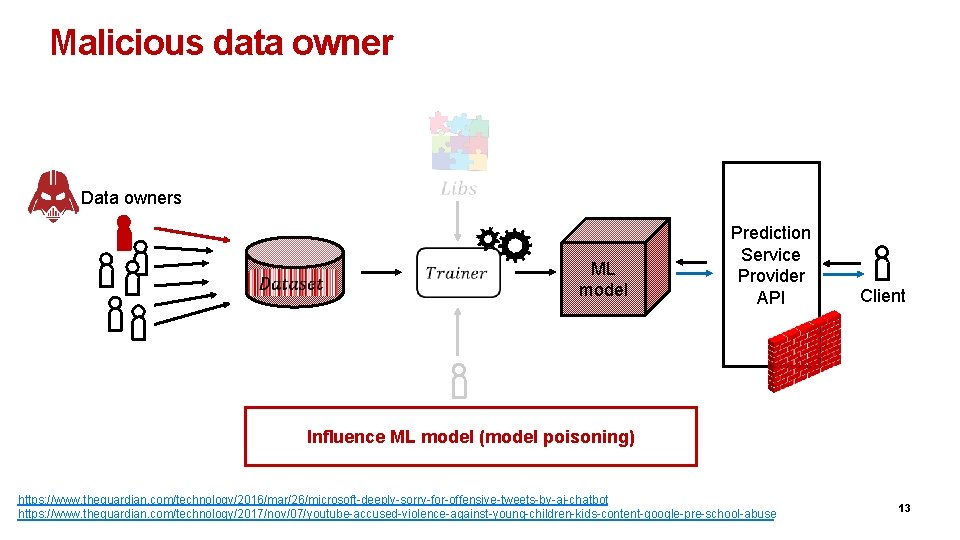

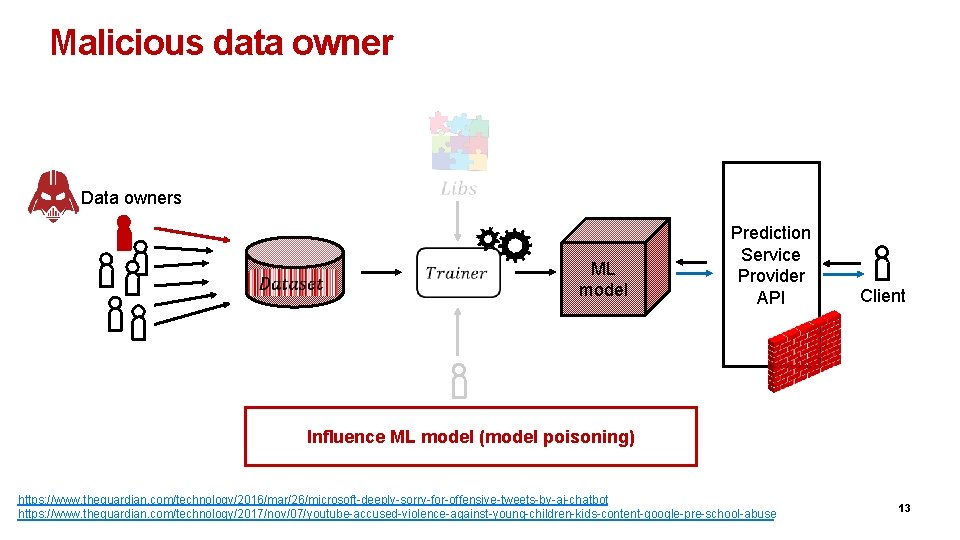

Malicious data owner

Diagram labels: Data owners, Analyst, ML model, Prediction Service Provider, API, Client

Target: Influence ML model (model poisoning)

- https://www.theguardian.com/technology/2016/mar/26/microsoft-deeply-sorry-for-offensive-tweets-by-ai-chatbot
- https://www.theguardian.com/technology/2017/nov/07/youtube-accused-violence-against-young-children-kids-content-google-pre-school-abuse
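How a data owner can influence a model is easy to see on a toy learner. The following sketch assumes a hypothetical one-dimensional nearest-centroid classifier and made-up "benign"/"malicious" training samples; it is not drawn from the incidents linked above. A few mislabeled contributions shift a class centroid enough to change the prediction for a borderline input.

```python
def train_centroids(samples):
    """Compute the mean feature value per label."""
    sums, counts = {}, {}
    for x, label in samples:
        sums[label] = sums.get(label, 0.0) + x
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def classify(centroids, x):
    """Assign x to the label with the closest centroid."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))

# Hypothetical honest contributions: (feature value, label).
clean = [(1.0, "benign"), (2.0, "benign"), (3.0, "benign"),
         (8.0, "malicious"), (9.0, "malicious"), (10.0, "malicious")]

# A malicious data owner submits malicious-looking samples labeled benign.
poison = [(9.0, "benign")] * 3

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

borderline = 7.0
print("clean model:   ", classify(clean_model, borderline))
print("poisoned model:", classify(poisoned_model, borderline))
```

Here three poisoned samples out of nine are enough to pull the "benign" centroid from 2.0 to 5.5, so the borderline input is no longer flagged.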

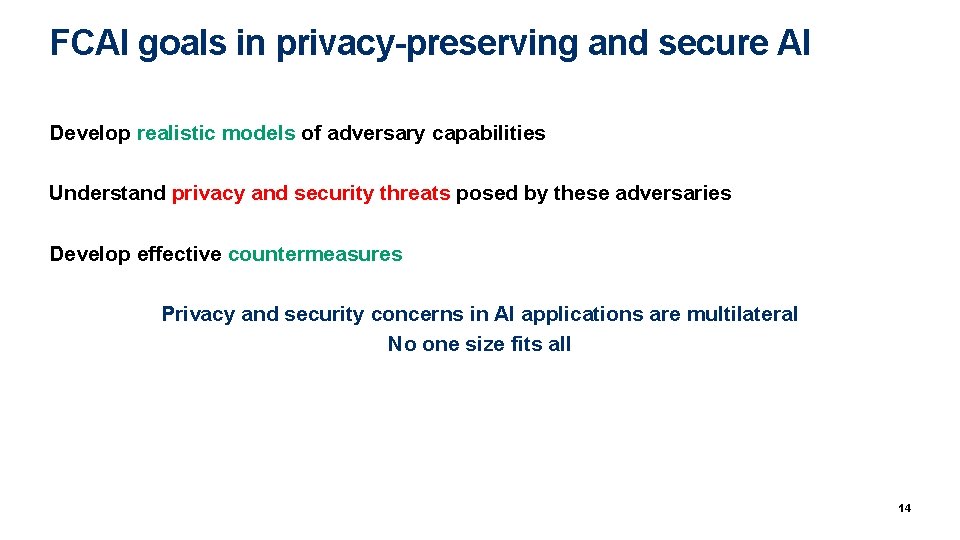

FCAI goals in privacy-preserving and secure AI

- Develop realistic models of adversary capabilities
- Understand privacy and security threats posed by these adversaries
- Develop effective countermeasures

Privacy and security concerns in AI applications are multilateral

- No one size fits all

Agenda for today

Security and machine learning

1. Application: Luiza Sayfullina: Android Malware Classification: How to Deal With Extremely Sparse Features
2. Attack/defense: Mika Juuti: Stealing DNN Models: Attacks and Defenses
3. Application: Nikolaj Tatti (F-Secure): Reducing False Positives in Intrusion Detection

Privacy of machine learning

4. Antti Honkela: Differential Privacy and Machine Learning
5. Mikko Heikkilä: Differentially private Bayesian learning on distributed data
6. Adrian Flanagan (Huawei): Privacy Preservation with Federated Learning in Personalized Recommendation Systems

Inductive and analytical learning

Inductive and analytical learning Inductive vs analytical learning

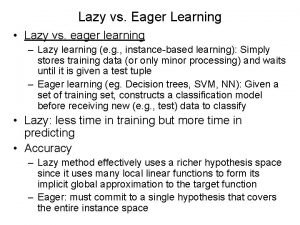

Inductive vs analytical learning Eager learning vs lazy learning

Eager learning vs lazy learning Concept learning task in machine learning

Concept learning task in machine learning Analytical learning in machine learning

Analytical learning in machine learning Pac learning model in machine learning

Pac learning model in machine learning Pac learning model in machine learning

Pac learning model in machine learning Instance based learning in machine learning

Instance based learning in machine learning Inductive learning machine learning

Inductive learning machine learning First order rule learning in machine learning

First order rule learning in machine learning Cmu machine learning

Cmu machine learning Cuadro comparativo e-learning b-learning m-learning

Cuadro comparativo e-learning b-learning m-learning Moore machine and mealy machine

Moore machine and mealy machine Energy work and simple machines chapter 10 answers

Energy work and simple machines chapter 10 answers Machine learning and data mining

Machine learning and data mining Andrew ng hbr

Andrew ng hbr