PERFORMANCE METRICS Software Performance Engineering

- Slides: 16


How will you measure?
■ Metrics are a “standard of measurement” used to enforce your expectations
  – “Metric” is a method, e.g. your height
  – “Measurement” is a number or label, e.g. 5 ft 10 in
■ Defining your performance metrics is crucial
  – Clear requirements lead to good tests
  – Clear requirements lead to efficient development
  – Customer contracts
■ The earlier you can measure, the earlier you can inform decisions (esp. architectural decisions!)
■ Two types:
  – Resource metrics
  – User experience (UX) metrics

Common Metrics: UX
■ Response time
  – The total period of time between initiating a job and the job being completed
  – Can be defined at the system level or the subsystem level
  – Tends to be all-inclusive
    ■ e.g. response time of a website includes network latency, server, application, database, etc.
    ■ e.g. response time of a web server includes IO latency, communicating with other services, etc.
■ Service time
  – The time taken for a (sub)system to complete its task
  – Defined over a given “piece” of the system
■ Response time includes service time (and various other times)
■ Throughput
  – The rate at which the system completes jobs
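The three UX metrics above can be sketched in a few lines of Python. This is a minimal illustration, not a measurement tool: the `Job` record, its three timestamps, and the sample values are all hypothetical, and it assumes each job has a single start of service.

```python
from dataclasses import dataclass


@dataclass
class Job:
    """One observed job; timestamps in seconds (hypothetical record layout)."""
    submitted: float  # when the job was initiated
    started: float    # when the (sub)system began working on it
    finished: float   # when the job completed

    @property
    def response_time(self) -> float:
        # All-inclusive: time spent waiting plus time spent being served.
        return self.finished - self.submitted

    @property
    def service_time(self) -> float:
        # Only the time this piece of the system spent on the task.
        return self.finished - self.started


def throughput(jobs: list[Job], window_seconds: float) -> float:
    """Jobs completed per second over an observation window."""
    if window_seconds <= 0:
        raise ValueError("window must have positive length")
    return len(jobs) / window_seconds


# Made-up observations over a 4-second window.
jobs = [Job(0.0, 0.5, 1.5), Job(1.0, 1.5, 2.0), Job(2.0, 2.0, 4.0)]
for job in jobs:
    print(job.response_time, job.service_time)
print(throughput(jobs, window_seconds=4.0))
```

Note that response time is never smaller than service time for the same job, which matches the point that response time includes service time.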

Common Metrics: Resource
■ Processor utilization
  – The percentage of time that the processor is used
  – This can be measured by application time, system time, user mode, privileged mode, kernel mode
    ■ Gets tricky when your system involves OS commands!
  – Typically measured by subtracting the cycles spent in the “idle process” from the total cycles
  – Always defined over a period of time
■ Bandwidth utilization
  – Number of bits per second being transmitted divided by maximum bandwidth
  – Always defined over a period of time
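As a rough sketch of the two definitions above, the functions below compute utilization from counters sampled at the start and end of an interval. The function names, the counter arguments, and the example numbers are assumptions made for illustration; a real system would read these counters from the OS or the network interface.

```python
def cpu_utilization(idle_start: float, idle_end: float,
                    total_start: float, total_end: float) -> float:
    """Fraction of an interval during which the processor was not idle.

    All arguments are cumulative counters (cycles or seconds) sampled at
    the start and end of the interval.
    """
    total = total_end - total_start
    if total <= 0:
        raise ValueError("interval must have positive length")
    idle = idle_end - idle_start
    # Busy time is whatever was not spent in the idle process.
    return (total - idle) / total


def bandwidth_utilization(bits_sent: float, seconds: float,
                          max_bits_per_second: float) -> float:
    """Achieved bit rate over the interval divided by the maximum bandwidth."""
    if seconds <= 0 or max_bits_per_second <= 0:
        raise ValueError("interval and maximum bandwidth must be positive")
    return (bits_sent / seconds) / max_bits_per_second


# Made-up counters: 30 idle units out of 100 total units.
print(cpu_utilization(100.0, 130.0, 1000.0, 1100.0))
# Made-up link: 6e8 bits sent in 10 s on a 1e8 bit/s link.
print(bandwidth_utilization(6e8, 10.0, 1e8))
```

Both functions take an explicit interval, reflecting that utilization is always defined over a period of time.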

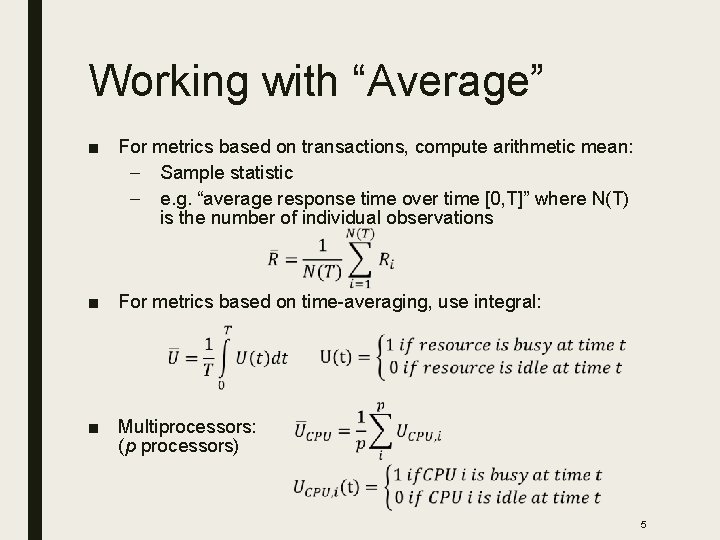

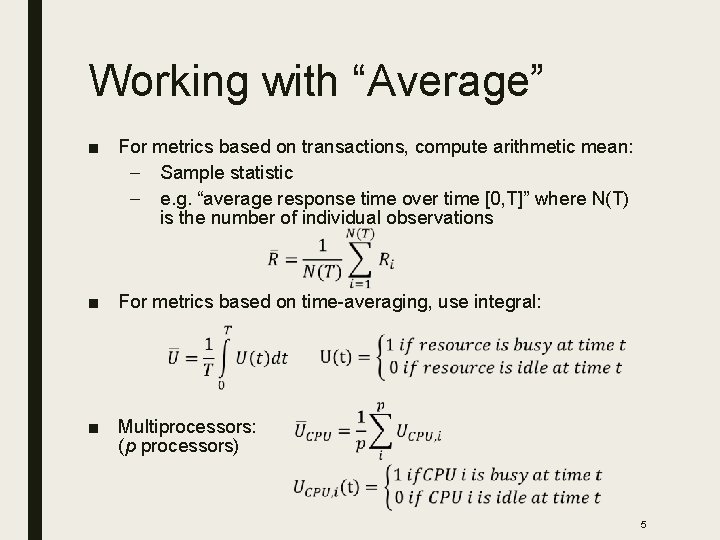

Working with “Average”
■ For metrics based on transactions, compute the arithmetic mean
  – Sample statistic
  – e.g. “average response time over time [0, T]”, where N(T) is the number of individual observations
■ For metrics based on time-averaging, use an integral
■ Multiprocessors (p processors)
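The formulas for these averages do not appear in the text above. The block below gives the standard forms that match the bullets; the symbols $R_i$ (the $i$-th observed response time), $U(t)$ (1 when the resource is busy at time $t$, 0 otherwise), and $U_k(t)$ (the same for processor $k$) are assumptions, and the original notation may differ.

```latex
% Transaction-based average: arithmetic mean over N(T) observations in [0, T]
\bar{R} = \frac{1}{N(T)} \sum_{i=1}^{N(T)} R_i

% Time-averaged metric over [0, T]
\bar{U} = \frac{1}{T} \int_{0}^{T} U(t)\, dt

% Multiprocessor case: average over p processors
\bar{U}_p = \frac{1}{pT} \sum_{k=1}^{p} \int_{0}^{T} U_k(t)\, dt
```

With $p = 1$ the multiprocessor form reduces to the single-resource time average.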

Common Metrics: Resource (2)
■ Device utilization
  – The percentage of time that any devices are being used
    ■ e.g. disk utilization
    ■ e.g. sensor devices like a gyroscope
  – Typically measured by sampling
■ Memory occupancy
  – The percentage of memory being used over time
  – Memory “footprint” is the maximum memory used
  – Measured in bytes
  – Can also be measured in malloc() calls and object instantiations
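The sketch below illustrates both ideas in Python: estimating device utilization from busy/idle samples, and reading a memory footprint (peak bytes) with the standard-library `tracemalloc` module. The helper names and the `build_list` workload are hypothetical, and `tracemalloc` only sees memory allocated through Python's allocator, so the figure is an approximation of the process footprint.

```python
import tracemalloc


def sampled_utilization(busy_samples: list[bool]) -> float:
    """Fraction of samples in which the device was observed busy."""
    if not busy_samples:
        raise ValueError("need at least one sample")
    return sum(busy_samples) / len(busy_samples)


def measure_footprint(func, *args):
    """Run func(*args); return (result, current_bytes, peak_bytes)."""
    tracemalloc.start()
    try:
        result = func(*args)
        # current: bytes still allocated; peak: maximum since start().
        current, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, current, peak


def build_list(n: int) -> list[int]:
    # Hypothetical workload that allocates n integers.
    return [i * i for i in range(n)]


print(sampled_utilization([True, False, True, True]))
result, current, peak = measure_footprint(build_list, 100_000)
print(f"current={current} bytes, peak (footprint)={peak} bytes")
```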

What Makes a Good Metric?
■ There are many, MANY properties of good metrics.
■ Here are some:
  – Linearity
  – Reliability
  – Repeatability
  – Ease of measurement
  – Consistency
  – Representation condition
  Source: Andrew Meneely, Ben H. Smith, Laurie Williams: Validating software metrics: A spectrum of philosophies. ACM Trans. Softw. Eng. Methodol. 21(4): 24:1-24:28 (2012)
■ Running example metrics
  – MIPS: millions of instructions per second, to measure processor speed
  – SLOC: number of lines of source code

Linearity & Reliability
■ Linearity
  – Our notion of performance tends to be linear, and so should our metrics
  – Many of our future analyses of queuing theory assume linearity of metrics
  – e.g. “the average response time decreased by 50%” should mean that the response time is actually half
  – Metrics that are NOT linear
    ■ Decibels (logarithmic)
    ■ Richter scale for earthquakes (logarithmic)
■ Reliability
  – A metric is reliable if System A actually outperforms System B whenever the metric indicates that it does
  – Execution time is fairly reliable, but MIPS is not (memory effects are not included)
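A small worked example can show why MIPS is not a reliable metric. The instruction counts and run times below are invented for illustration: both hypothetical systems run the same task, but System A needs more (simpler) instructions than System B.

```python
def mips(instructions: float, seconds: float) -> float:
    """Millions of instructions executed per second."""
    return instructions / seconds / 1e6


# Hypothetical measurements for the SAME task on two systems.
a_instructions, a_seconds = 8e9, 4.0  # System A: many simple instructions
b_instructions, b_seconds = 3e9, 3.0  # System B: fewer, more complex ones

a_mips = mips(a_instructions, a_seconds)
b_mips = mips(b_instructions, b_seconds)

print(f"A: {a_mips:.0f} MIPS, finishes in {a_seconds} s")
print(f"B: {b_mips:.0f} MIPS, finishes in {b_seconds} s")

# MIPS says A is faster, but B finishes the task first.
mips_prefers_a = a_mips > b_mips
b_finishes_first = b_seconds < a_seconds
print(mips_prefers_a and b_finishes_first)
```

In this made-up case the metric ranks A above B while execution time ranks B above A, which is exactly the situation a reliable metric must rule out.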

Repeatability & Ease of Measurement
■ Repeatability
  – If we run the experiment multiple times under the same conditions, do we get the same results?
  – SLOC is repeatable
  – Execution time is repeatable, but the conditions can be tough to replicate every time
■ Ease of measurement
  – Is the procedure for collecting this metric feasible?
  – Do we require too much sampling? Does the sampling interfere with the rest of the system?
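One way to get a feel for repeatability is to time the same workload several times and look at the spread. The sketch below uses only the standard library; the `workload` function and the choice of five runs are arbitrary assumptions, and the numbers will differ between machines and between runs.

```python
import statistics
import time


def time_runs(func, runs: int = 5) -> list[float]:
    """Execute func() `runs` times and return each wall-clock duration in seconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        func()
        timings.append(time.perf_counter() - start)
    return timings


def workload() -> int:
    # Hypothetical CPU-bound task standing in for the system under test.
    return sum(i * i for i in range(50_000))


timings = time_runs(workload, runs=5)
mean = statistics.mean(timings)
spread = statistics.stdev(timings)  # sample standard deviation
print(f"mean={mean:.6f} s, stdev={spread:.6f} s")
```

A small standard deviation relative to the mean suggests the conditions were replicated well; a large one suggests interference from the rest of the system.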

Consistency
■ Consistency
  – Does the metric mean the same thing across all systems?
  – MIPS is not consistent across architectures: CISC and RISC
  – SLOC is not consistent across languages, e.g. Ruby SLOC versus C SLOC

Representation Condition
■ Your empirical measurements should represent your theoretical model
  – “Under the Representation Condition, any property of the number system must appropriately map to a property of the attribute being measured (and vice versa)”
  – Linearity is an example of applying the representation condition to scale
  – i.e. mathematical transformations on the empirical data should align with
  – e.g. SLOC fails RC for functional size because poorly written code can be large in SLOC but functionally small

Key Metric Lessons
■ One metric does not tell the whole story
  – You will always need multiple metrics
  – Performance is a multi-dimensional concept
■ A performance requirement must define context
  – What metrics?
  – Under what load conditions?
  – Over what period of time?
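The three context questions above can be captured as fields of a small record, so that a requirement cannot be written down without them. This is only a sketch: the class name, field names, and example values are hypothetical, and it assumes a "lower is better" metric.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PerformanceRequirement:
    """A requirement that states its metric, load, and time period explicitly."""
    metric: str       # what metric?
    threshold: float  # acceptable upper bound for the metric
    unit: str
    load: str         # under what load conditions?
    period: str       # over what period of time?

    def is_met(self, measured: float) -> bool:
        # Assumes lower values are better (e.g. response time).
        return measured <= self.threshold


# Hypothetical requirement for illustration only.
req = PerformanceRequirement(
    metric="mean response time",
    threshold=2.0,
    unit="seconds",
    load="500 concurrent users",
    period="any 1-hour window",
)
print(req.is_met(1.5), req.is_met(2.5))
```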

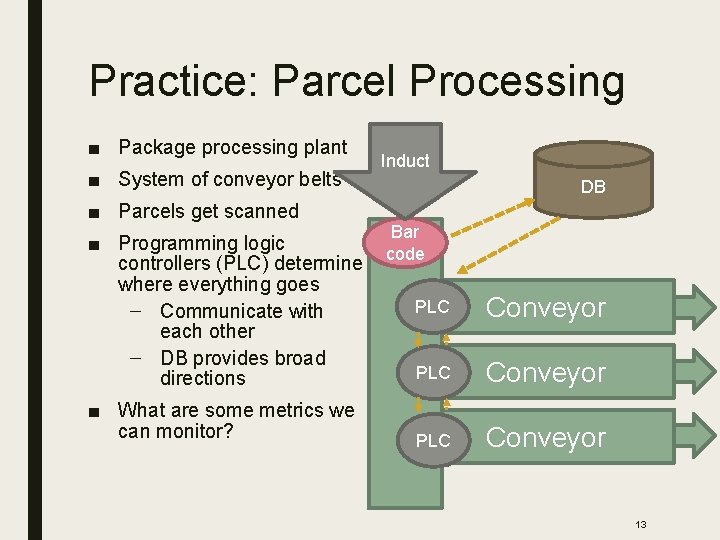

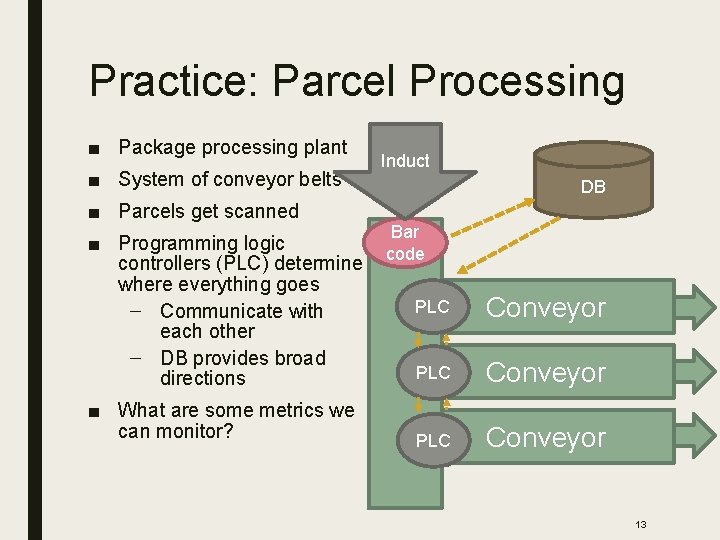

Practice: Parcel Processing
■ Package processing plant
■ System of conveyor belts
■ Parcels get scanned
■ Programmable logic controllers (PLCs) determine where everything goes
  – Communicate with each other
  – DB provides broad PLC directions
■ What are some metrics we can monitor?
[Diagram labels: Induct, DB, Bar code, PLC, Conveyor]

Metrics for Parcel Processing
■ # parcels per second at each point on the conveyor
■ Speed of the conveyor
■ Distance between parcels
■ Average length of a parcel
■ Query rate of parcel routing database
■ Response time to parcel routing database
■ Latencies between PLCs
■ PLC service time
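Several of the metrics above are related by simple arithmetic. As a sketch, assume parcels travel single file at the belt speed, so each parcel occupies its own length plus the gap behind it; the function names, the one-query-per-parcel default, and the numbers are all assumptions for illustration.

```python
def parcels_per_second(belt_speed_m_s: float,
                       avg_parcel_length_m: float,
                       avg_gap_m: float) -> float:
    """Throughput at a point on the conveyor, assuming single-file parcels."""
    pitch = avg_parcel_length_m + avg_gap_m  # belt length used per parcel
    if pitch <= 0:
        raise ValueError("parcel length plus gap must be positive")
    return belt_speed_m_s / pitch


def db_query_rate(parcel_rate: float, queries_per_parcel: float = 1.0) -> float:
    """Routing-database queries per second implied by the parcel rate."""
    return parcel_rate * queries_per_parcel


# Made-up plant: 2 m/s belt, 0.5 m parcels, 0.5 m gaps.
rate = parcels_per_second(2.0, 0.5, 0.5)
print(rate)
print(db_query_rate(rate, queries_per_parcel=1.5))
```

This kind of relation helps decide which metrics must be measured directly and which can be derived from the others.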

Practice: Global Village
■ As a class, let’s model the Global Village cafeteria

More practice ideas
■ Bar at a theater during intermission
■ Fire alarm control panel