An Engineering Approach to Performance Define Performance Metrics

- Slides: 50

An Engineering Approach to Performance

- Define Performance Metrics
- Measure Performance
- Analyze Results
- Develop Cost × Performance Alternatives:
  - prototype
  - modeling
- Assess Alternatives
- Implement Best Alternative

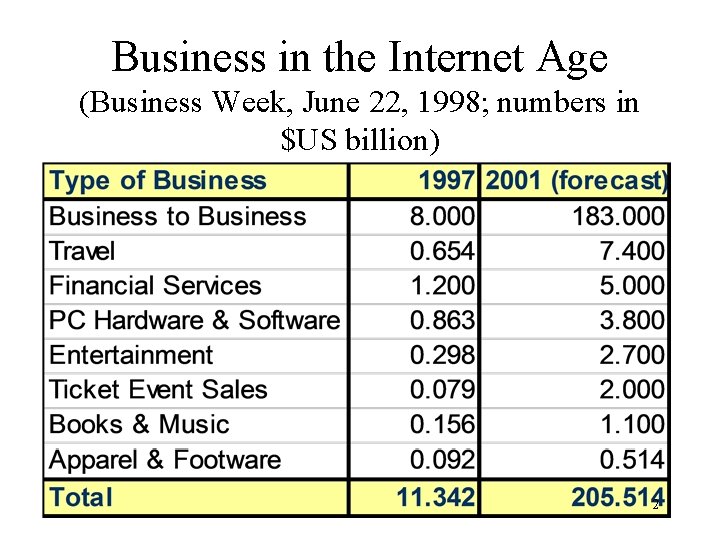

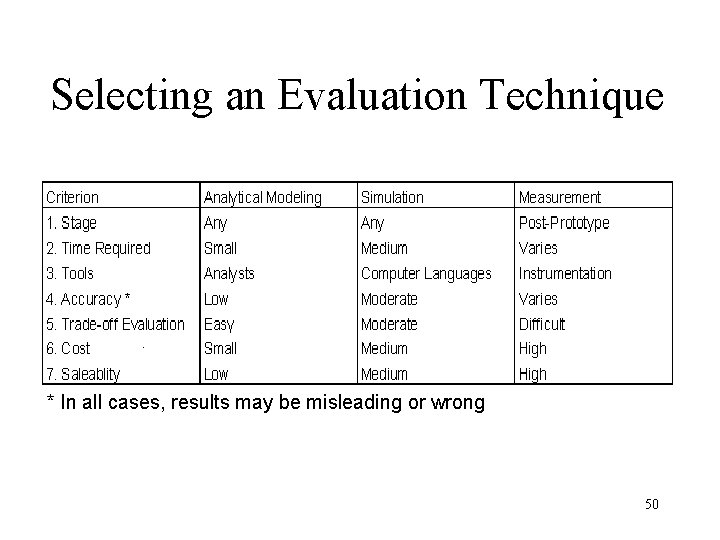

Business in the Internet Age (Business Week, June 22, 1998; numbers in $US billion)

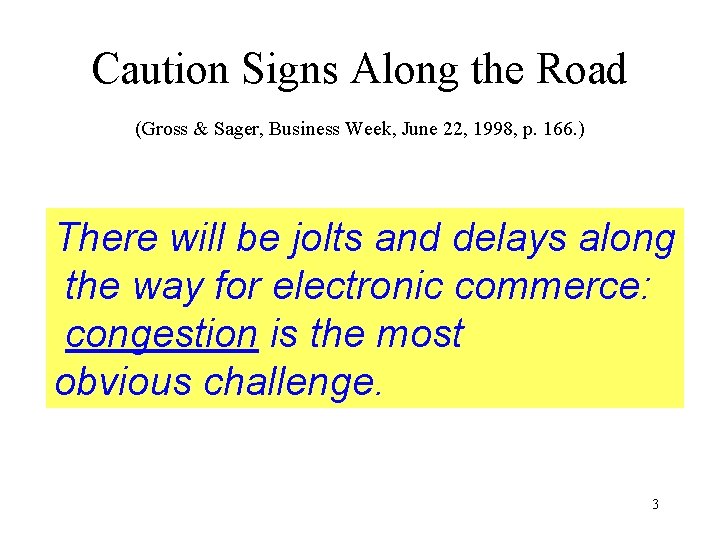

Caution Signs Along the Road (Gross & Sager, Business Week, June 22, 1998, p. 166): There will be jolts and delays along the way for electronic commerce; congestion is the most obvious challenge.

Electronic Commerce: online sales are soaring. “… IT and electronic commerce can be expected to drive economic growth for many years to come.” (The Emerging Digital Economy, US Dept. of Commerce, 1998)

What are people saying about Web performance…

- “Tripod’s Web site is our business. If it’s not fast and reliable, there goes our business.” Don Zereski, Tripod’s vice-president of Technology (Internet World)
- “Computer shuts down Amazon.com book sales. The site went down at about 10 a.m. and stayed out of service until 10 p.m.” The Seattle Times, 01/08/98

What are people saying about Web performance…

- “Sites have been concentrating on the right content. Now, more of them -- specially e-commerce sites -- realize that performance is crucial in attracting and retaining online customers.” Gene Shklar, Keynote, The New York Times, 8/8/98

What are people saying about Web performance…

- “Capacity is King.” Mike Krupit, Vice President of Technology, CDnow, 06/01/98
- “Being able to manage hit storms on commerce sites requires more than just buying more plumbing.” Harry Fenik, vice president of technology, Zona Research, LANTimes, 6/22/98

Introduction

Objectives

- Performance Analysis = Analysis + Computer Systems
- Performance Analyst = Mathematician + Computer Systems Person

You will learn:

- Specifying performance requirements
- Evaluating design alternatives
- Comparing two or more systems
- Determining the optimal value of a parameter (system tuning)
- Finding the performance bottleneck (bottleneck identification)

You will learn (cont’d):

- Characterizing the load on the system (workload characterization)
- Determining the number and size of components (capacity planning)
- Predicting the performance at future loads (forecasting)

Performance analysis objectives involve:

- Procurement
- Improvement
- Capacity Planning
- Design

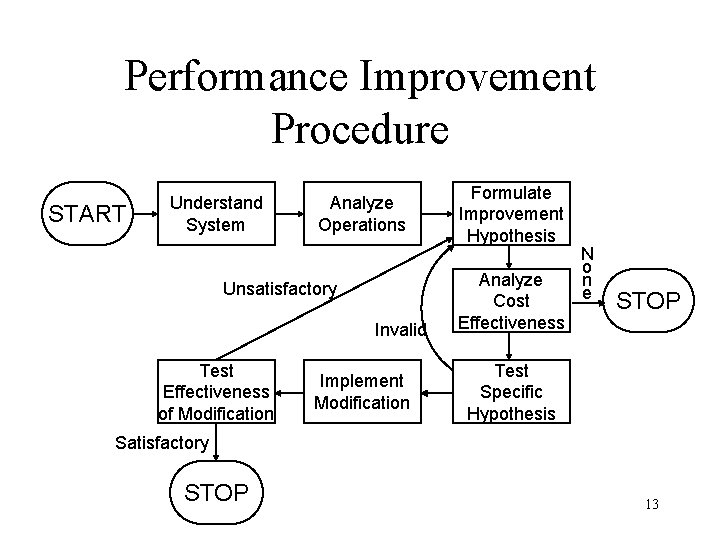

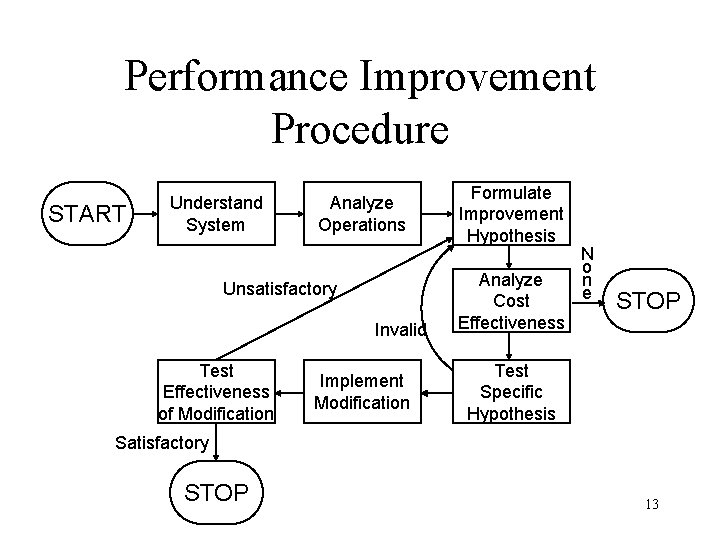

Figure: Performance Improvement Procedure (flowchart). Recoverable steps: START; Understand System; Analyze Operations; Formulate Improvement Hypothesis; Analyze Cost Effectiveness (None: STOP); Test Specific Hypothesis (Invalid: back to the hypothesis); Implement Modification; Test Effectiveness of Modification (Unsatisfactory: repeat; Satisfactory: STOP).

Basic Terms

- System: Any collection of hardware, software, and firmware
- Metrics: The criteria used to evaluate the performance of the system components
- Workloads: The requests made by the users of the system

Examples of Performance Indexes

- External indexes: Turnaround Time, Response Time, Throughput, Capacity, Availability, Reliability

Examples of Performance Indexes (cont’d)

- Internal indexes: CPU Utilization, Overlap of Activities, Multiprogramming Stretch Factor, Multiprogramming Level, Paging Rate, Reaction Time
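To make three of these indexes concrete, the sketch below computes throughput and mean response time (external) and utilization (internal) from a request log. The `Job` record, the single-server assumption, and the three-job log are all hypothetical illustrations, not data from the slides.

```python
from dataclasses import dataclass

@dataclass
class Job:
    arrival: float  # time the request entered the system (s)
    start: float    # time the server began working on it (s)
    finish: float   # time the request completed (s)

def performance_indexes(jobs, window):
    """Throughput, mean response time and utilization over [0, window].

    Assumes a single server that works on one job at a time; only jobs
    that finished inside the window are counted.
    """
    done = [j for j in jobs if j.finish <= window]
    if not done:
        return 0.0, float("nan"), 0.0
    throughput = len(done) / window                                  # external index
    response = sum(j.finish - j.arrival for j in done) / len(done)   # external index
    utilization = sum(j.finish - j.start for j in done) / window     # internal index
    return throughput, response, utilization

# Hypothetical three-job log observed over a 10-second window.
log = [Job(0.0, 0.0, 2.0), Job(1.0, 2.0, 3.0), Job(5.0, 5.0, 6.0)]
print(performance_indexes(log, 10.0))  # (0.3, 1.6666666666666667, 0.4)
```

Note that the second job waits one second before service starts, so its response time (2 s) exceeds its service time (1 s); that gap is what separates response time from utilization-based views of the same log.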

Example I

What performance metrics should be used to compare the performance of the following systems?

1. Two disk drives
2. Two transaction-processing systems
3. Two packet-retransmission algorithms

Example II

Which type of monitor (software or hardware) would be more suitable for measuring each of the following quantities?

1. Number of instructions executed by a processor
2. Degree of multiprogramming on a timesharing system
3. Response time of packets on a network

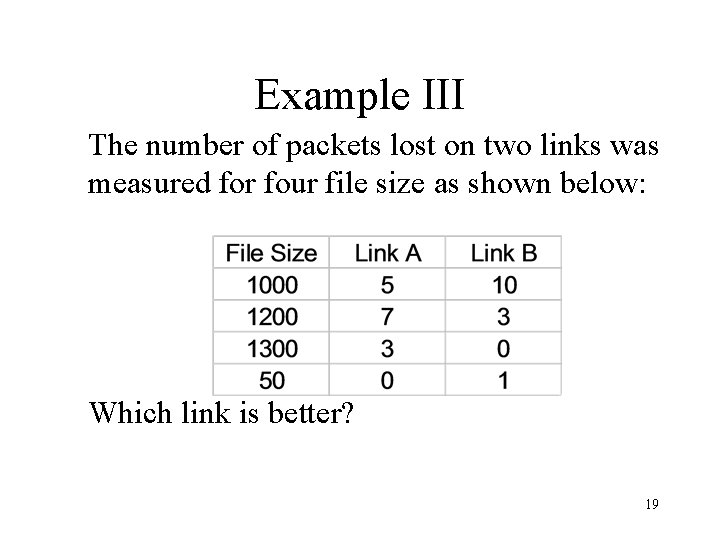

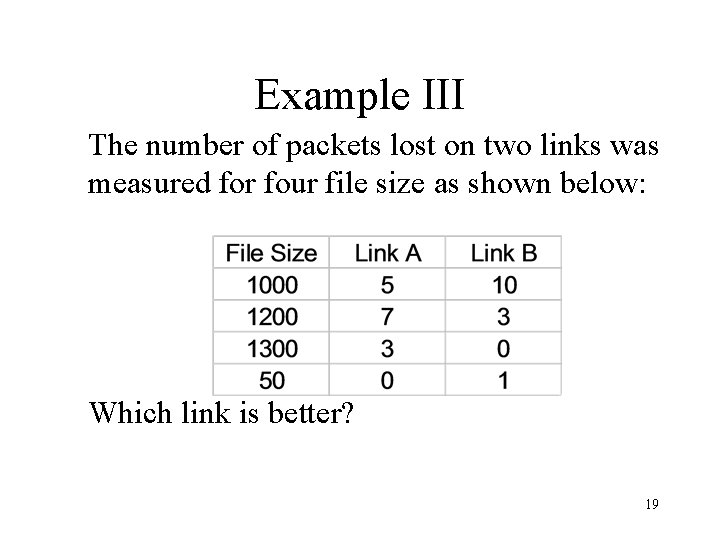

Example III

The number of packets lost on two links was measured for four file sizes, as shown in the accompanying table. Which link is better?

Example IV

In order to compare the performance of two cache replacement algorithms:

1. What type of simulation model should be used?
2. How long should the simulation be run?
3. What can be done to get the same accuracy with a shorter run?
4. How can one decide if the random-number generator in the simulation is a good generator?
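For question 4, one standard starting point is a chi-square frequency test for uniformity. The sketch below bins U(0,1) samples into 10 equal cells and computes the chi-square statistic; the bin count and the 0.05 significance level (critical value about 16.92 for 9 degrees of freedom) are illustrative choices. Passing this one test is necessary but not sufficient; serial and runs tests check independence, which this test does not.

```python
import random

def chi_square_uniformity(samples, bins=10):
    """Chi-square frequency test statistic for samples assumed to be U(0,1).

    Compare the result against the chi-square critical value for
    (bins - 1) degrees of freedom; larger values suggest non-uniformity.
    """
    counts = [0] * bins
    for u in samples:
        counts[min(int(u * bins), bins - 1)] += 1  # clamp u == 1.0 into last bin
    expected = len(samples) / bins
    return sum((c - expected) ** 2 / expected for c in counts)

# Example: test Python's own generator with a fixed seed.
rng = random.Random(42)
stat = chi_square_uniformity([rng.random() for _ in range(10_000)])
print(f"chi-square = {stat:.2f}; reject uniformity at the 5% level if > 16.92")
```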

Example V

The average response time of a database system is three seconds. During a one-minute observation interval, the idle time on the system was ten seconds. Using a queueing model for the system, determine the following:

Example V (cont’d)

1. System utilization
2. Average service time per query
3. Number of queries completed during the observation interval
4. Average number of jobs in the system
5. Probability of the number of jobs in the system being greater than 1
6. 90th-percentile response time
7. 90th-percentile waiting time
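The example does not name the queueing model. Assuming an M/M/1 model (Poisson arrivals, exponential service times, one server), which is our assumption rather than something the slide states, the seven answers follow from standard M/M/1 results: $R = S/(1-U)$, $N = U/(1-U)$, $P(n > 1) = U^2$, the $q$-percentile response time $R\ln\frac{100}{100-q}$, and the $q$-percentile waiting time $\max\left(0, R\ln\frac{100U}{100-q}\right)$.

```python
import math

def mm1_example(mean_response=3.0, window=60.0, idle=10.0):
    """Answer Example V under an assumed M/M/1 queueing model."""
    u = (window - idle) / window                        # 1. utilization = busy / total time
    s = mean_response * (1 - u)                         # 2. service time, from R = S / (1 - U)
    completed = u * window / s                          # 3. busy time / service time per query
    n = u / (1 - u)                                     # 4. mean number of jobs in the system
    p_more_than_1 = u ** 2                              # 5. P(n > 1) = P(n >= 2) = U^2
    r90 = mean_response * math.log(10)                  # 6. response time ~ exponential(mean R)
    w90 = max(0.0, mean_response * math.log(10 * u))    # 7. M/M/1 waiting-time percentile
    return u, s, completed, n, p_more_than_1, r90, w90

labels = ["utilization", "service time (s)", "queries completed",
          "mean jobs in system", "P(n > 1)", "90% response (s)", "90% waiting (s)"]
for label, value in zip(labels, mm1_example()):
    print(f"{label:20s} {value:8.3f}")
```

Under these assumptions the results are roughly U ≈ 0.83, S ≈ 0.5 s, about 100 queries, 5 jobs in the system, P(n > 1) ≈ 0.69, and 90th percentiles of about 6.9 s (response) and 6.4 s (waiting).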

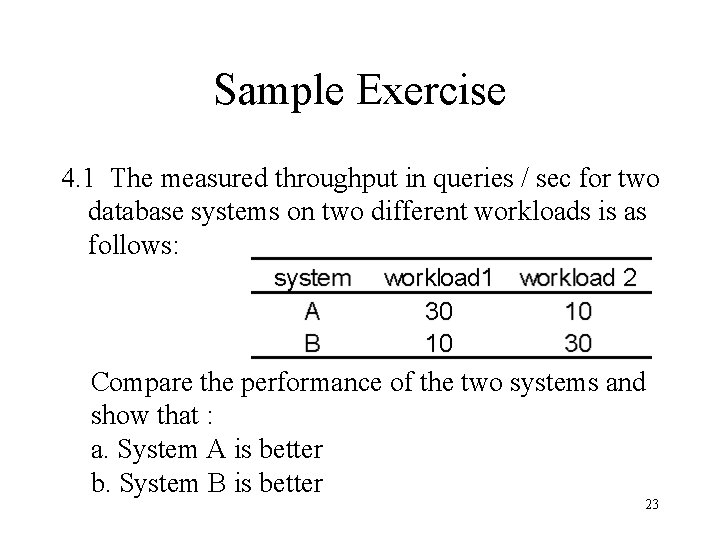

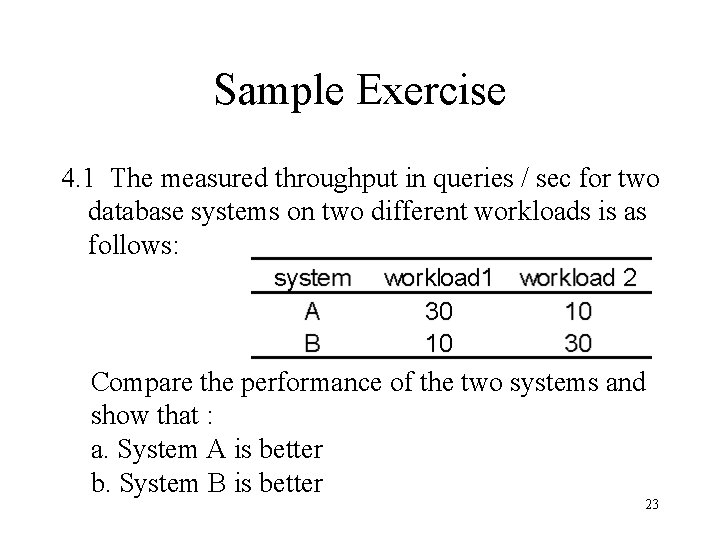

Sample Exercise 4.1

The measured throughput in queries/sec for two database systems on two different workloads is given in the accompanying table. Compare the performance of the two systems and show that (a) System A is better and (b) System B is better.
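The exercise's actual data appears only in the slide image, so the sketch below uses hypothetical throughputs to show one common way both conclusions can be "shown": the ratio game. Normalizing to a different base system and then taking the arithmetic mean of the ratios flips the winner.

```python
def mean_normalized(throughputs, base):
    """Arithmetic mean of each system's throughput ratios relative to `base`.

    Higher is better, since the metric is throughput.
    """
    return {
        name: sum(t / b for t, b in zip(values, throughputs[base])) / len(values)
        for name, values in throughputs.items()
    }

# Hypothetical throughputs (queries/sec) on workloads W1 and W2;
# these are NOT the exercise's numbers.
data = {"A": [20.0, 10.0], "B": [10.0, 20.0]}

print(mean_normalized(data, "A"))  # {'A': 1.0, 'B': 1.25}  -> B looks better
print(mean_normalized(data, "B"))  # {'A': 1.25, 'B': 1.0}  -> A looks better
```

The geometric mean of the ratios does not depend on the base system (here it is 1.0 for both systems under either normalization), which is why it is usually preferred when averaging normalized numbers.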

The Bank Problem

The board of directors requires answers to several questions that are posed to the IS facility manager.

Environment:

- 3 fully automated branch offices, all located in the same city
- ATMs available at 10 locations throughout the city
- 2 mainframes (one used for on-line processing, the other for batch)

The Bank Problem (cont’d)

- 24 tellers in the 3 branch offices
- Each teller serves an average of 20 customers/hr during peak times; otherwise, 12 customers/hr
- Each customer generates 2 on-line transactions on average
- Thus, during peak times, the IS facility receives an average of 960 (24 × 20 × 2) teller-originated transactions/hr

The Bank Problem (cont’d)

- ATMs are active 24 hrs/day. During peak volume, each ATM serves an average of 15 customers/hr, and each customer generates an average of 1.2 transactions
- Thus, during the peak period for ATMs, the IS facility receives an average of 180 (10 × 15 × 1.2) ATM transactions/hr; otherwise, the ATM transaction rate is about 50% of this
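The arrival-rate arithmetic above can be reproduced and extended to off-peak hours with a few lines. The combined total assumes the teller peak and the ATM peak coincide, which the slides do not state; it is shown only as an upper-end workload figure.

```python
TELLERS, ATMS = 24, 10  # from the bank environment description

def teller_rate(peak=True):
    """Teller-originated transactions per hour."""
    customers_per_teller = 20 if peak else 12   # customers/hr per teller
    return TELLERS * customers_per_teller * 2   # 2 on-line transactions per customer

def atm_rate(peak=True):
    """ATM transactions per hour; off-peak is taken as 50% of peak."""
    peak_rate = ATMS * 15 * 1.2                 # 15 customers/hr, 1.2 transactions each
    return peak_rate if peak else 0.5 * peak_rate

# "total" assumes (hypothetically) that teller and ATM peaks coincide.
for label, peak in (("peak", True), ("off-peak", False)):
    t, a = teller_rate(peak), atm_rate(peak)
    print(f"{label:8s} tellers={t:6.1f}/hr  ATMs={a:6.1f}/hr  "
          f"total={t + a:7.1f}/hr ({(t + a) / 3600:.3f}/s)")
```

This gives 960 and 180 transactions/hr at peak (1140/hr combined) and 576 and 90 transactions/hr off-peak.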

The Bank Problem (cont’d)

- Measured average response time:
  - Tellers: 1.23 seconds during peak hours; 3 seconds is acceptable
  - ATMs: 1.02 seconds during peak hours; 4 seconds is acceptable

The Bank Problem (cont’d)

The board of directors requires answers to several questions:

- Will the current central IS facility allow for the expected growth of the bank while keeping the average response times at the tellers and at the ATMs within the stated acceptable limits?
- If not, when will it be necessary to upgrade the current IS environment?
- Of several possible upgrades (e.g., adding more disks, adding memory, replacing the central processor boards), which represents the best cost-performance trade-off?
- Should the data processing facility of the bank remain centralized, or should the bank consider a distributed processing alternative?

Capacity Planning Concept

- Capacity planning is the determination of the predicted time in the future when system saturation is going to occur, and of the most cost-effective way of delaying system saturation as long as possible
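A first-cut estimate of "the predicted time when saturation occurs" can come from a simple growth projection. This sketch assumes the configuration stays unchanged (so utilization scales with demand), demand grows by a constant yearly fraction, and saturation is declared at 80% utilization; all three, and the example inputs, are hypothetical choices rather than values from the slides.

```python
import math

def years_until_saturation(current_util, annual_growth, threshold=0.8):
    """Years until utilization reaches `threshold`.

    Assumes the configuration is unchanged, so utilization grows in proportion
    to demand, and demand grows by a constant fraction `annual_growth` per year.
    """
    if current_util >= threshold:
        return 0.0
    return math.log(threshold / current_util) / math.log(1.0 + annual_growth)

# Hypothetical inputs: 50% utilization today, 20% yearly growth, 80% threshold.
print(f"{years_until_saturation(0.5, 0.2):.2f} years")  # 2.58 years
```

Response time usually degrades sharply well before 100% utilization, which is why a threshold below 1.0 is used; the right threshold depends on the service-level targets, such as the 3 s and 4 s limits in the bank problem.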

Questions

- Why is saturation occurring?
- In which parts of the system (CPU, disks, memory, queues) will a transaction or job spend most of its execution time at the saturation points?
- Which are the most cost-effective alternatives for avoiding (or at least delaying) saturation?

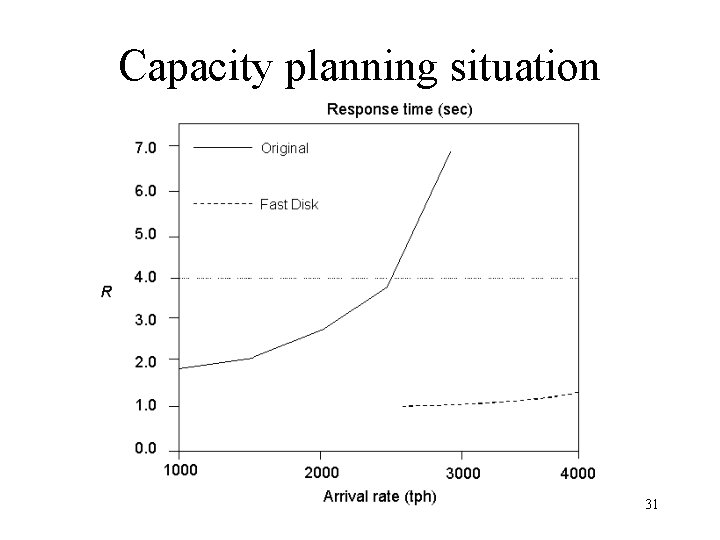

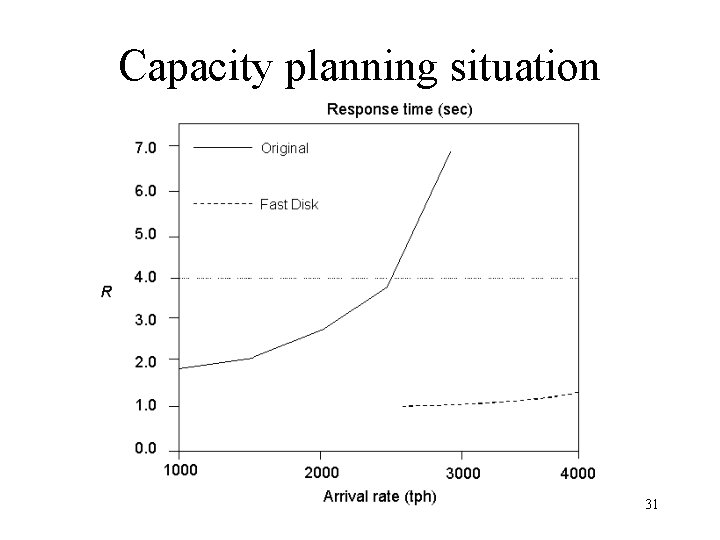

Capacity planning situation

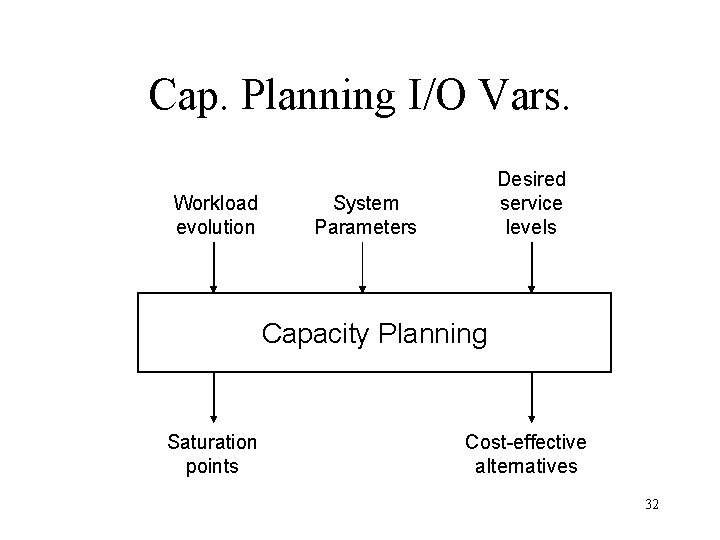

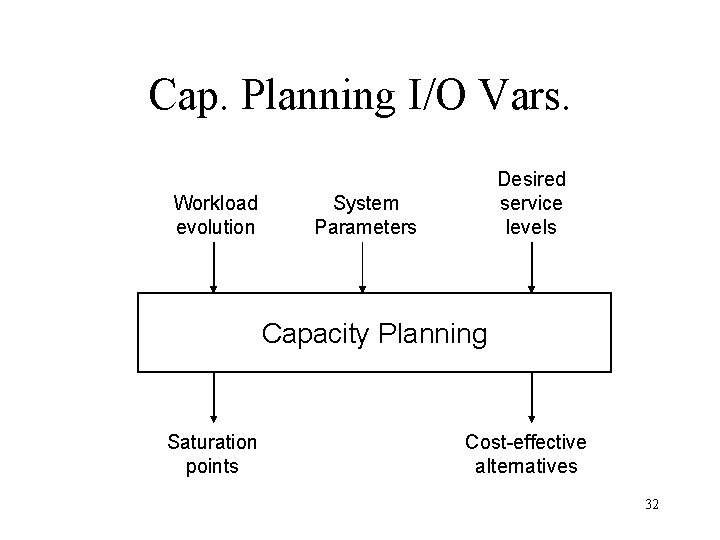

Figure: Capacity Planning Input/Output Variables. Inputs (workload evolution, desired service levels, system parameters) feed capacity planning, which produces outputs (saturation points, cost-effective alternatives).

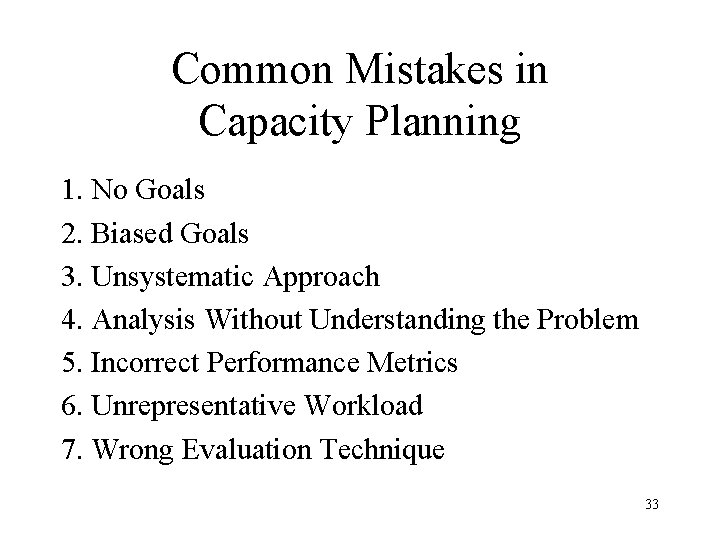

Common Mistakes in Capacity Planning

1. No Goals
2. Biased Goals
3. Unsystematic Approach
4. Analysis Without Understanding the Problem
5. Incorrect Performance Metrics
6. Unrepresentative Workload
7. Wrong Evaluation Technique

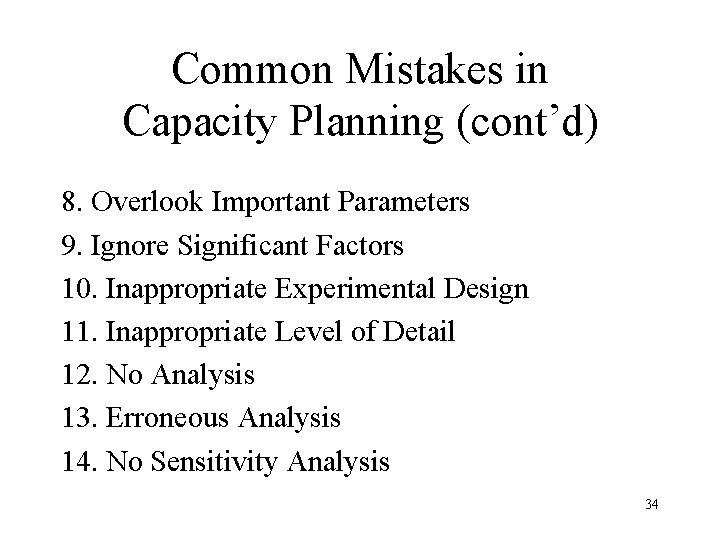

Common Mistakes in Capacity Planning (cont’d)

8. Overlook Important Parameters
9. Ignore Significant Factors
10. Inappropriate Experimental Design
11. Inappropriate Level of Detail
12. No Analysis
13. Erroneous Analysis
14. No Sensitivity Analysis

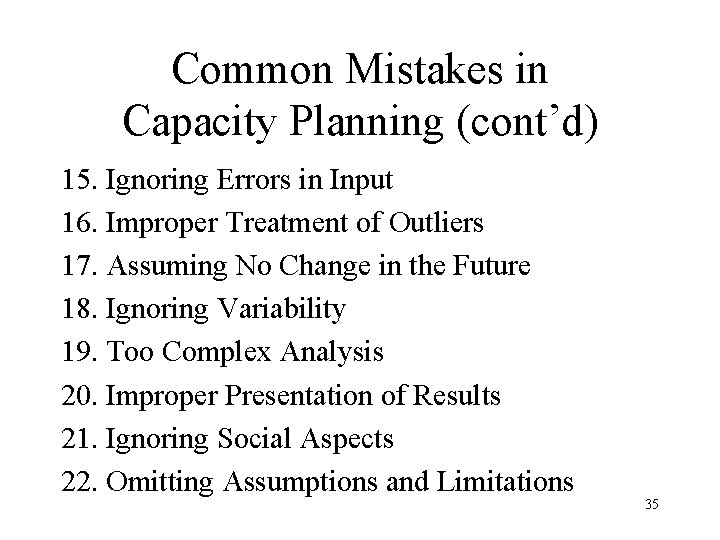

Common Mistakes in Capacity Planning (cont’d)

15. Ignoring Errors in Input
16. Improper Treatment of Outliers
17. Assuming No Change in the Future
18. Ignoring Variability
19. Too Complex Analysis
20. Improper Presentation of Results
21. Ignoring Social Aspects
22. Omitting Assumptions and Limitations

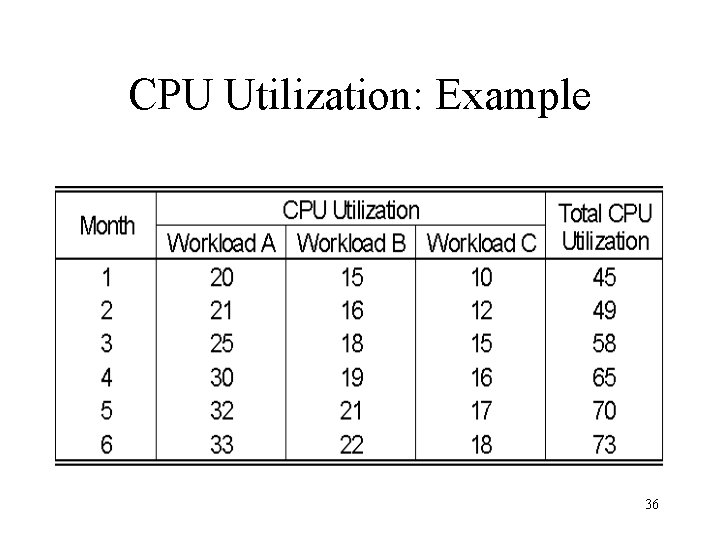

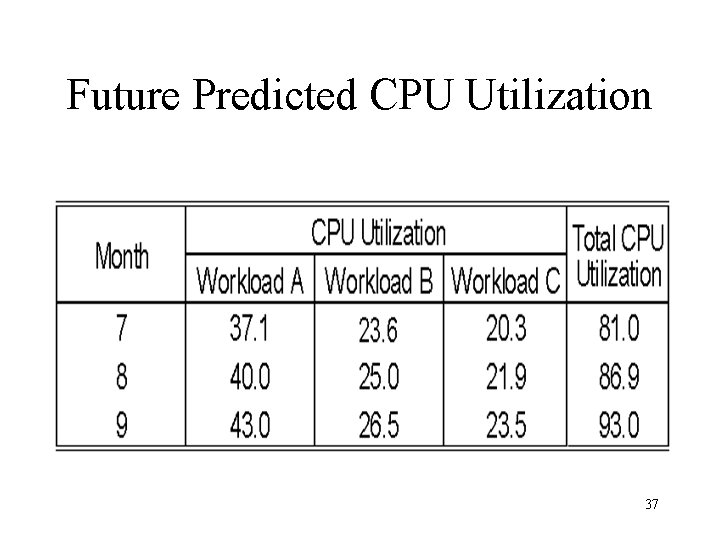

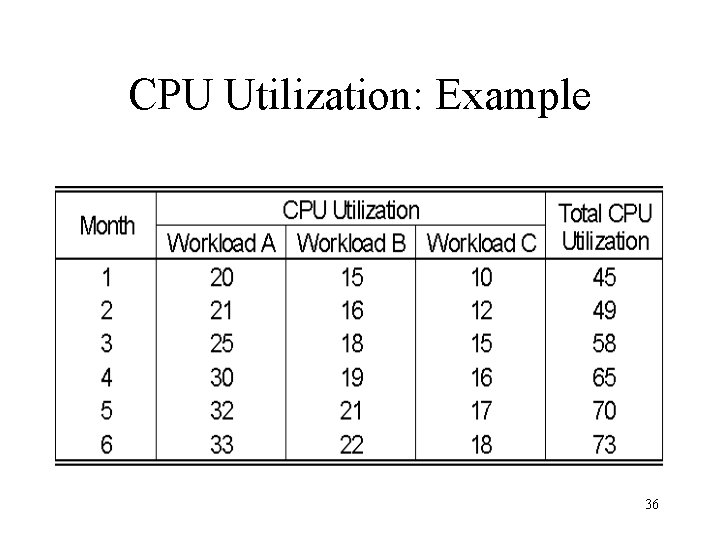

CPU Utilization: Example

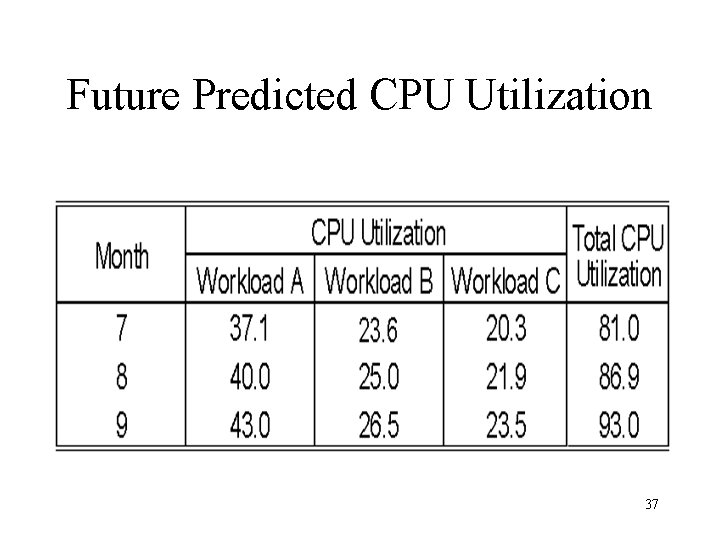

Future Predicted CPU Utilization
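One simple way to produce a predicted-utilization curve is to fit a linear trend to past measurements and extrapolate it. The sketch below does an ordinary least-squares fit and reports when the trend crosses a threshold; the linear model and the six-month history are hypothetical, and a real forecast should check whether growth is linear, compound, or seasonal.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit y = a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx
    return mean_y - b * mean_x, b

def when_reaches(xs, ys, threshold):
    """x value at which the fitted trend reaches `threshold` (None if not rising)."""
    a, b = fit_line(xs, ys)
    return None if b <= 0 else (threshold - a) / b

# Hypothetical monthly CPU utilization history (%), months 1..6.
months = [1, 2, 3, 4, 5, 6]
util = [42, 45, 50, 52, 57, 60]
print(f"trend reaches 80% around month {when_reaches(months, util, 80):.1f}")  # 11.4
```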

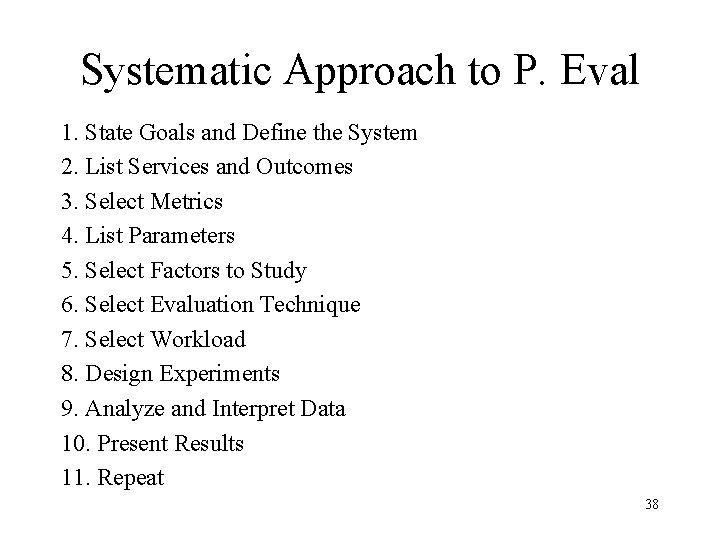

Systematic Approach to Performance Evaluation

1. State Goals and Define the System
2. List Services and Outcomes
3. Select Metrics
4. List Parameters
5. Select Factors to Study
6. Select Evaluation Technique
7. Select Workload
8. Design Experiments
9. Analyze and Interpret Data
10. Present Results
11. Repeat

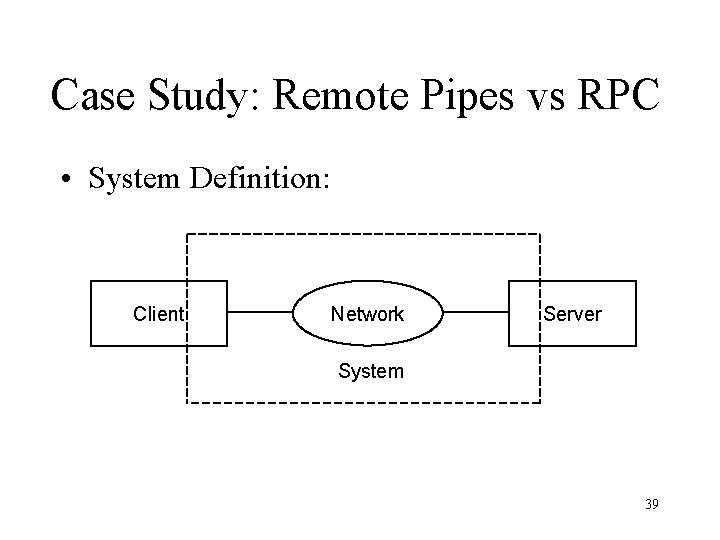

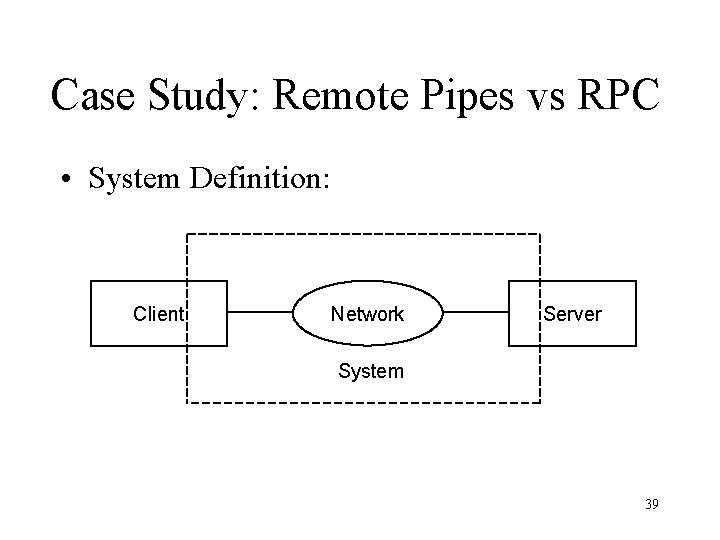

Case Study: Remote Pipes vs. RPC

- System definition (figure): client, network, and server

Case Study (cont’d)

- Services: small data transfer or large data transfer
- Metrics:
  - No errors and failures: correct operation only
  - Rate, time, and resource per service
  - Resource = client, server, network

Case Study (cont’d)

This leads to:

1. Elapsed time per call
2. Maximum call rate per unit of time, or equivalently, the time required to complete a block of n successive calls
3. Local CPU time per call
4. Remote CPU time per call
5. Number of bytes sent on the link per call

Case Study (cont’d)

- Parameters:
  - System parameters:
    1. Speed of the local CPU
    2. Speed of the remote CPU
    3. Speed of the network
    4. Operating system overhead for interfacing with the channels
    5. Operating system overhead for interfacing with the networks
    6. Reliability of the network, which affects the number of retransmissions required

Case Study (cont’d)

- Parameters (cont’d):
  - Workload parameters:
    1. Time between successive calls
    2. Number and size of the call parameters
    3. Number and size of the results
    4. Type of channel
    5. Other loads on the local and remote CPUs
    6. Other loads on the network

Case Study (cont’d)

- Factors:
  1. Type of channel: remote pipes and remote procedure calls
  2. Size of the network: short distance and long distance
  3. Size of the call parameters: small and large
  4. Number n of consecutive calls: 1, 2, 4, 8, 16, 32, ..., 512, and 1024

Case Study (cont’d)

Note:

- Fixed: type of CPUs and operating systems
- Ignore retransmissions due to network errors
- Measure under no other load on the hosts and the network

Case Study (cont’d)

- Evaluation technique: prototypes are implemented and measured; analytical modeling is used for validation
- Workload: a synthetic program generating the specified types of channel requests; null channel requests are used to determine the resources used in monitoring and logging
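The measurement side of this plan can be sketched as a small timing harness: issue blocks of n successive calls for each level of n and record the elapsed time, which yields both the block time and the per-call time. Here `null_call` is a hypothetical local stand-in for a null channel request; in the actual study it would be a remote pipe write or an RPC. Keeping the best of several repeats is one design choice for reducing noise; reporting the mean with a confidence interval is another.

```python
import time

def null_call():
    """Hypothetical stand-in for a null channel request (no parameters, no results)."""
    return None

def time_blocks(call, block_sizes, repeats=5):
    """Return {n: best elapsed seconds to complete n successive calls}."""
    results = {}
    for n in block_sizes:
        best = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            for _ in range(n):
                call()
            best = min(best, time.perf_counter() - start)
        results[n] = best
    return results

sizes = [2 ** k for k in range(11)]  # 1, 2, 4, ..., 1024: the 11 levels of n
for n, t in time_blocks(null_call, sizes).items():
    print(f"n={n:5d}  block={t * 1e6:9.1f} us  per call={t / n * 1e6:7.3f} us")
```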

Case Study (cont’d)

- Experimental design: a full factorial experimental design with $2^3 \times 11 = 88$ experiments will be used
- Data analysis:
  - Analysis of Variance (ANOVA) for the first three factors
  - Regression for the number n of successive calls
- Data presentation: the final results will be plotted as a function of the block size n
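The 88 experiments are every combination of the three two-level factors and the 11 levels of n. The sketch below enumerates them with `itertools.product`; the dictionary keys are illustrative names, not identifiers from the study.

```python
from itertools import product

# Factor names are illustrative; levels are taken from the Factors slide.
factors = {
    "channel": ["remote pipe", "RPC"],
    "network": ["short distance", "long distance"],
    "param_size": ["small", "large"],
    "n_calls": [2 ** k for k in range(11)],  # 1, 2, 4, ..., 1024
}

# Full factorial design: one experiment per combination of factor levels.
experiments = [dict(zip(factors, levels)) for levels in product(*factors.values())]

print(len(experiments))  # 88
print(experiments[0])    # first combination: remote pipe, short distance, small, n = 1
```

Each row can then be run with the timing harness, after which ANOVA is applied to the three two-level factors and a regression on n, as the data-analysis plan describes.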

Exercises

1. From published literature, select an article or a report that presents the results of a performance evaluation study. Make a list of the good and bad points of the study. What would you do differently if you were asked to repeat the study?

Exercises (cont’d)

2. Choose a system for performance study. Briefly describe the system and list: (a) services, (b) performance metrics, (c) system parameters, (d) workload parameters, (e) factors and their ranges, (f) evaluation technique, and (g) workload. Justify your choices.

Suggestion: Each student should select a different system, such as a network, database, or processor, and then present the solution to the class.

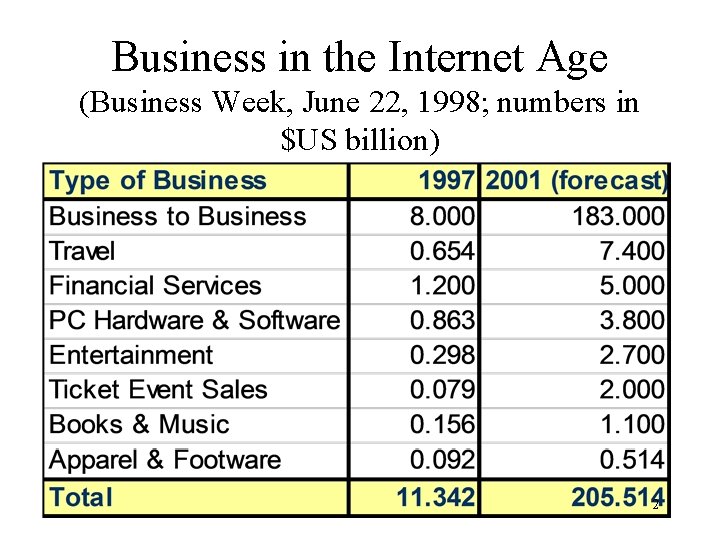

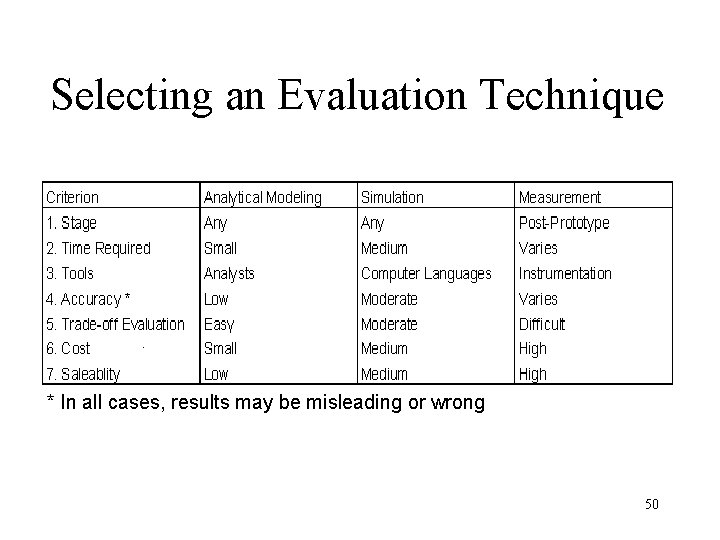

Selecting an Evaluation Technique

\* In all cases, results may be misleading or wrong.