Personal Seminar (Part 1), Lee Jang Won, 14.05.16


![Personal Seminar (Part 1), Lee Jang Won, 14.05.16, Human Media Communication & Processing Lab.](https://slidetodoc.com/presentation_image_h2/b26599ec999e38fcad15cbc9fe85d85b/image-1.jpg)

[Personal Seminar (Part 1)] Lee Jang Won, 14.05.16, Human Media Communication & Processing Lab.

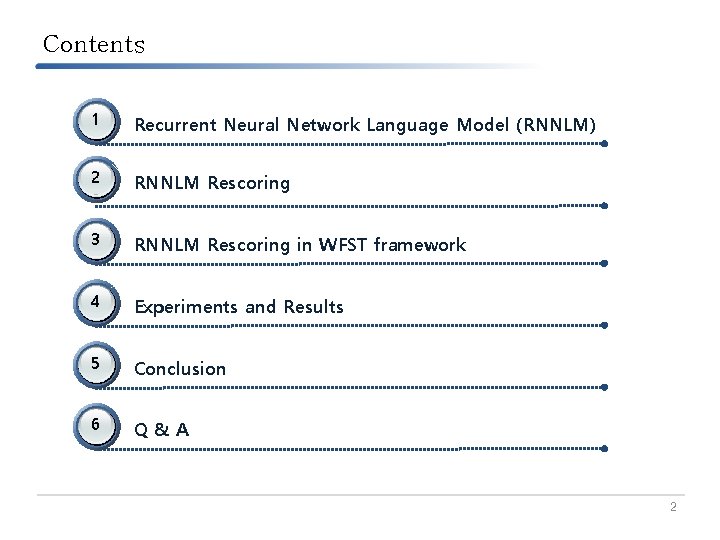

Contents

1. Recurrent Neural Network Language Model (RNNLM)
2. RNNLM Rescoring
3. RNNLM Rescoring in WFST framework
4. Experiments and Results
5. Conclusion
6. Q&A

![1 RNNLM: RNNLM can compress long context information in the hidden (context) layer [1]](https://slidetodoc.com/presentation_image_h2/b26599ec999e38fcad15cbc9fe85d85b/image-3.jpg)

1 RNNLM

§ An RNNLM can compress long-context information into its hidden (context) layer [1].

• This makes it effective for estimating long-context, sentence-level probabilities.

Equations (1)–(5); <Fig 1.> Simple recurrent neural network

[1] T. Mikolov, M. Karafiát, L. Burget, J. Černocký, and S. Khudanpur, "Recurrent neural network based language model," in Proc. INTERSPEECH, 2010.

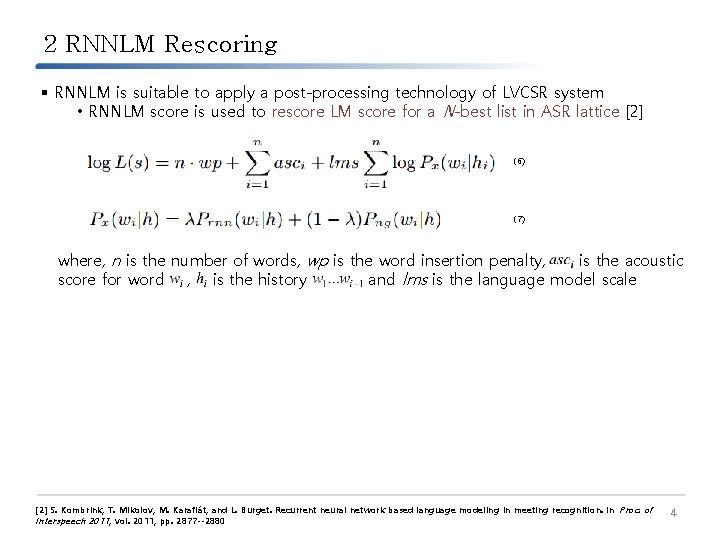

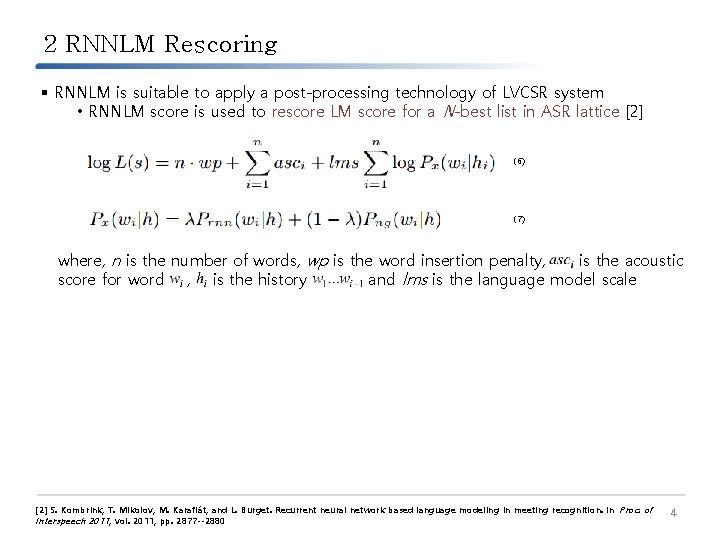
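For reference, [1] defines the simple recurrent network as $x(t) = w(t) + s(t-1)$, where $+$ denotes concatenation of the one-hot word vector $w(t)$ and the previous hidden state; $s_j(t) = f\left(\sum_i x_i(t)\,u_{ji}\right)$; $y_k(t) = g\left(\sum_j s_j(t)\,v_{kj}\right)$; with $f$ the sigmoid and $g$ the softmax. The pure-Python sketch below implements that forward pass under stated assumptions: the vocabulary size, hidden size, and random weights are hypothetical stand-ins for a trained model, and the class and method names are illustrative only. It shows how the hidden state carries the entire history into every next-word distribution, which is what lets the model score long contexts.

```python
import math
import random
from typing import List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def softmax(zs: List[float]) -> List[float]:
    m = max(zs)  # shift by the max for numerical stability
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]


class SimpleRNNLM:
    """Toy Elman-style RNNLM with random (untrained, hypothetical) weights."""

    def __init__(self, vocab_size: int, hidden_size: int, seed: int = 0):
        rng = random.Random(seed)
        self.vocab_size = vocab_size
        self.hidden_size = hidden_size
        # U: input layer x(t) = [one-hot w(t); s(t-1)] -> hidden layer s(t)
        self.U = [[rng.uniform(-0.1, 0.1) for _ in range(vocab_size + hidden_size)]
                  for _ in range(hidden_size)]
        # V: hidden layer s(t) -> output layer y(t) over the vocabulary
        self.V = [[rng.uniform(-0.1, 0.1) for _ in range(hidden_size)]
                  for _ in range(vocab_size)]

    def step(self, word_id: int, s_prev: List[float]) -> Tuple[List[float], List[float]]:
        """One time step: returns the new hidden state s(t) and y(t) = P(next word)."""
        x = [0.0] * self.vocab_size + list(s_prev)  # concatenate w(t) and s(t-1)
        x[word_id] = 1.0                             # one-hot encoding of w(t)
        s = [sigmoid(sum(u * xi for u, xi in zip(row, x))) for row in self.U]
        y = softmax([sum(v * sj for v, sj in zip(row, s)) for row in self.V])
        return s, y

    def sentence_logprob(self, word_ids: List[int]) -> float:
        """Sum of log P(w_{t+1} | w_1..w_t); s(t) carries the whole history."""
        s = [0.1] * self.hidden_size  # small constant initial state
        total = 0.0
        for cur, nxt in zip(word_ids, word_ids[1:]):
            s, y = self.step(cur, s)
            total += math.log(y[nxt])
        return total
```

For example, `SimpleRNNLM(vocab_size=5, hidden_size=4).sentence_logprob([0, 3, 1, 4])` returns a negative log-probability; with trained weights, this is the sentence-level quantity an RNNLM contributes during rescoring.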

2 RNNLM Rescoring

§ RNNLM is well suited to post-processing (rescoring) in an LVCSR system.

• RNNLM scores are used to re-estimate the LM scores of an N-best list extracted from the ASR lattice [2].

Equations (6) and (7): n is the number of words, wp is the word insertion penalty, lms is the language model scale, and the remaining terms are the acoustic score of each word and that word's history.

[2] S. Kombrink, T. Mikolov, M. Karafiát, and L. Burget, "Recurrent neural network based language modeling in meeting recognition," in Proc. INTERSPEECH, 2011, pp. 2877–2880.
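As a sketch of N-best rescoring, assume the usual log-linear combination of the terms defined above: $\mathrm{score}(W) = \sum_{i=1}^{n}\left[a_i + lms \cdot \log P(w_i \mid h_i)\right] + n \cdot wp$, where $a_i$ is the acoustic log-score of word $w_i$ and $h_i$ its history. This form is an assumption, not a transcription of Eqs. (6)–(7). The LM is passed in as a hypothetical callable `lm_logprob(word, history)`, which in practice would be the RNNLM; the toy LM and scores in the demo are invented for illustration.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical LM interface: log P(word | history), e.g. backed by an RNNLM.
LMLogProb = Callable[[str, Tuple[str, ...]], float]


def hypothesis_score(words: Sequence[str], ac_scores: Sequence[float],
                     lm_logprob: LMLogProb, lms: float, wp: float) -> float:
    """Assumed log-linear score: sum_i [ac_i + lms * log P(w_i | h_i)] + n * wp."""
    total = 0.0
    for i, (word, ac) in enumerate(zip(words, ac_scores)):
        history = tuple(words[:i])  # h_i: every preceding word in the hypothesis
        total += ac + lms * lm_logprob(word, history)
    return total + len(words) * wp


def rescore_nbest(nbest: List[Tuple[List[str], List[float]]],
                  lm_logprob: LMLogProb, lms: float, wp: float
                  ) -> List[Tuple[float, List[str]]]:
    """Re-rank an N-best list of (words, per-word acoustic log-scores), best first."""
    scored = [(hypothesis_score(ws, acs, lm_logprob, lms, wp), ws) for ws, acs in nbest]
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored


if __name__ == "__main__":
    def toy_lm(word, history):
        # Hypothetical scores: favour "recognize" right after "to".
        if history and history[-1] == "to" and word == "recognize":
            return math.log(0.5)
        return math.log(0.1)

    nbest = [(["to", "wreck", "speech"], [-5.0, -5.5, -4.0]),
             (["to", "recognize", "speech"], [-5.0, -6.0, -4.0])]
    for score, words in rescore_nbest(nbest, toy_lm, lms=1.0, wp=0.0):
        print(f"{score:.3f}", " ".join(words))
```

With `lms=1.0` the acoustically weaker "to recognize speech" overtakes "to wreck speech"; with `lms=0.0` the acoustic ranking is kept, which shows how the LM scale controls the LM's influence on the final choice.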

![3 RNNLM Rescoring in WFST framework: the Kaldi speech recognition toolkit [3] supports WFST-based ASR systems](https://slidetodoc.com/presentation_image_h2/b26599ec999e38fcad15cbc9fe85d85b/image-5.jpg)

3 RNNLM Rescoring in WFST framework

§ The Kaldi speech recognition toolkit [3] supports WFST-based ASR systems.

§ A WFST-based ASR lattice arc in Kaldi format is as follows:

<Fig 2.> Lattice arc in Kaldi format

where GS is the graph score, which contains the language model, transition, and pronunciation probabilities arising from HCLG (the WFST decoding graph [4]), and AS is the acoustic score. The arc weight follows a lexicographic semiring [5].

[3] D. Povey et al., "The Kaldi speech recognition toolkit," in Proc. IEEE ASRU, Honolulu, HI, 2011, pp. 1–4.

[4] D. Povey, M. Hannemann, et al., "Generating exact lattices in the WFST framework," in Proc. ICASSP, Kyoto, Japan, 2012, pp. 4213–4216.

[5] B. Roark et al., "Lexicographic semirings for exact automata encoding of sequence models," in Proc. ACL-HLT, Portland, OR, 2011, pp. 1–5.

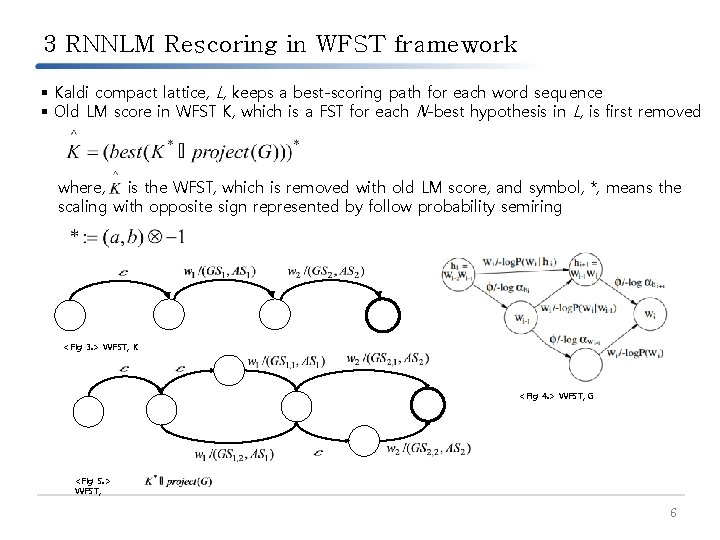

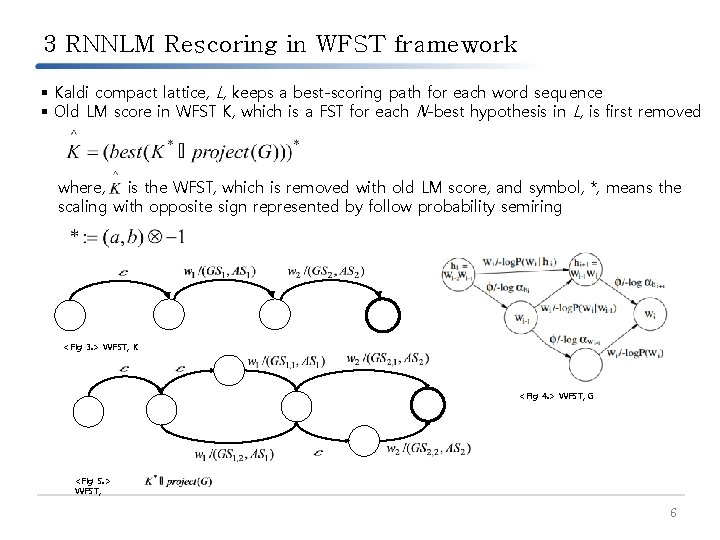
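A minimal Python sketch of such an arc weight, assuming the behaviour of Kaldi's C++ `LatticeWeight`: the weight is a pair of costs (GS, AS), each a negated log-probability; ⊗ adds the pairs componentwise along a path, and ⊕ keeps the weight with the lower total cost, breaking ties on the lower graph cost. The class and method names are illustrative, not Kaldi's API.

```python
import math
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class LatticeWeight:
    graph: float     # GS: graph cost (negated log-prob from HCLG)
    acoustic: float  # AS: acoustic cost (negated log-likelihood)

    @staticmethod
    def zero() -> "LatticeWeight":
        return LatticeWeight(math.inf, math.inf)  # identity for plus: "no path"

    @staticmethod
    def one() -> "LatticeWeight":
        return LatticeWeight(0.0, 0.0)            # identity for times

    def times(self, other: "LatticeWeight") -> "LatticeWeight":
        """Costs along a path add componentwise."""
        return LatticeWeight(self.graph + other.graph, self.acoustic + other.acoustic)

    def plus(self, other: "LatticeWeight") -> "LatticeWeight":
        """Keep the better weight: lower total cost wins, ties go to lower graph cost."""
        t1 = self.graph + self.acoustic
        t2 = other.graph + other.acoustic
        if t1 != t2:
            return self if t1 < t2 else other
        return self if self.graph <= other.graph else other


def path_weight(arcs: Iterable[LatticeWeight]) -> LatticeWeight:
    """Multiply the arc weights along one path."""
    w = LatticeWeight.one()
    for a in arcs:
        w = w.times(a)
    return w
```

Because ⊕ selects rather than sums, merging alternative paths for the same word sequence keeps only the lower-cost one, while ⊗ keeps GS and AS separate so the graph part can later be adjusted without touching the acoustic part.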

3 RNNLM Rescoring in WFST framework

§ The Kaldi compact lattice, L, keeps the best-scoring path for each word sequence.

§ The old LM score is first removed from the WFST K, the FST for each N-best hypothesis in L, by composing K with G*, where G is the old grammar WFST and the symbol * denotes scaling its weights with the opposite sign, as represented in the probability semiring. The result is the WFST with the old LM score removed.

<Fig 3.> WFST K

<Fig 4.> WFST G

<Fig 5.> WFST with the old LM score removed

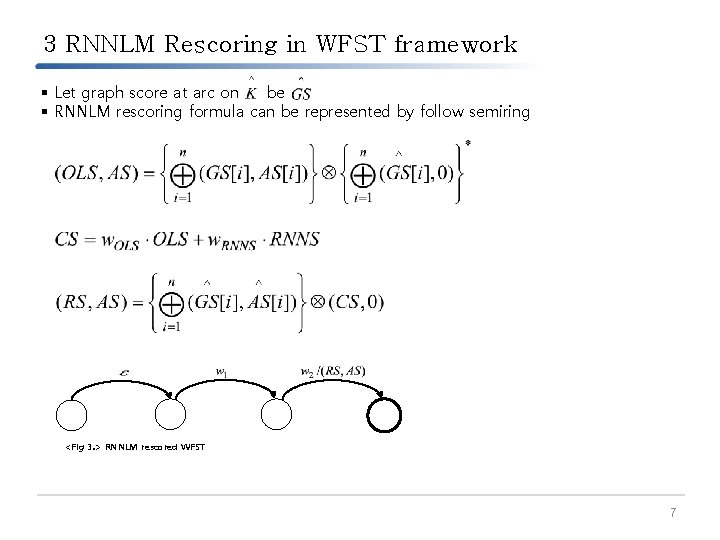

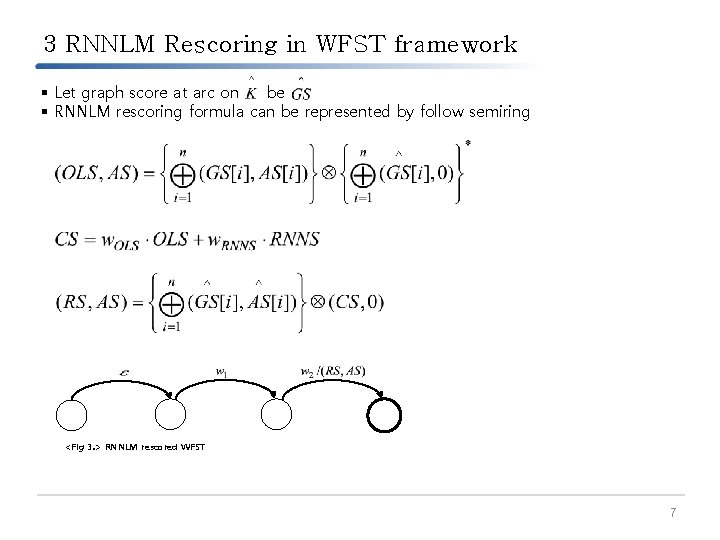
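For a single linear hypothesis, composing K with G* reduces to subtracting each word's old LM cost from the graph cost on its arc. The sketch below assumes costs are negated log-probabilities and represents the old LM as a hypothetical bigram cost table; it omits the sentence-end cost a real G would also carry, and the function name is illustrative only.

```python
from typing import Dict, List, Tuple

Arc = Tuple[str, float, float]  # (word, graph cost, acoustic cost)


def remove_old_lm(path: List[Arc],
                  old_bigram_cost: Dict[Tuple[str, str], float],
                  scale: float = -1.0) -> List[Arc]:
    """Mimic composing a linear hypothesis K with G* (G's costs times `scale`).

    With scale = -1 the old LM cost of each word is subtracted from its graph
    cost, leaving the transition and pronunciation costs; acoustic costs are
    untouched. The sentence-end (</s>) cost of a real G is not handled here.
    """
    out: List[Arc] = []
    prev = "<s>"
    for word, graph, acoustic in path:
        lm_cost = old_bigram_cost[(prev, word)]  # -log P_old(word | prev)
        out.append((word, graph + scale * lm_cost, acoustic))
        prev = word
    return out
```

A bigram is used only to keep the example small; the same subtraction applies to any n-gram G, since on a linear path each arc sees a single, fixed history.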

3 RNNLM Rescoring in WFST framework

§ Let the graph score at each arc of the WFST with the old LM score removed be defined as follows.

§ The RNNLM rescoring formula can then be represented in the following semiring:

<Fig 6.> RNNLM-rescored WFST

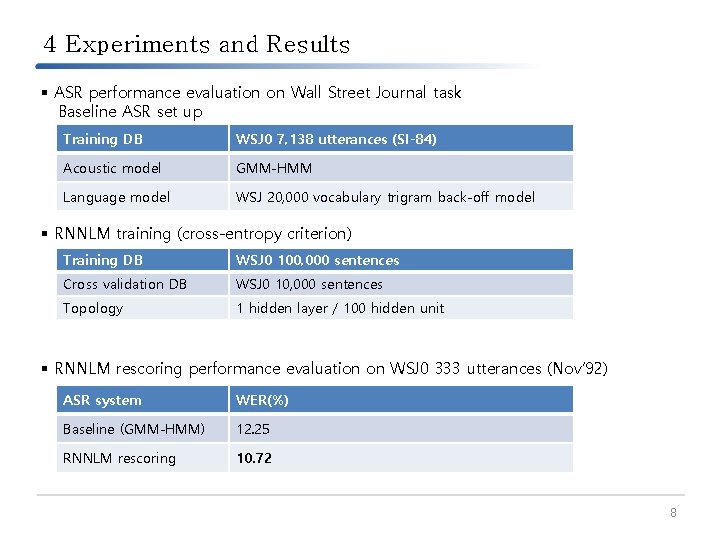

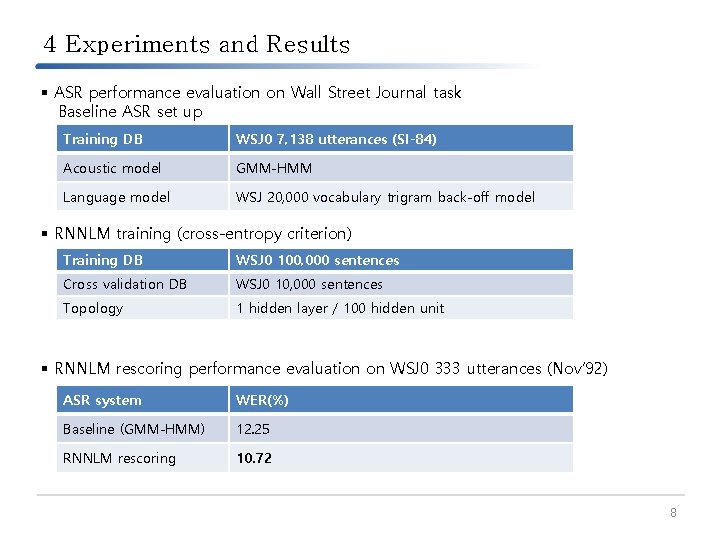
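Once the old LM cost is gone, one plausible form of the rescoring step (an assumption, not the slide's exact formula) adds the scaled RNNLM cost to each arc: new graph cost = graph cost with old LM removed + lms · (−log P_RNN(w_i | h_i)). The sketch assumes a hypothetical `rnn_step(state, word)` interface returning the updated hidden state and the log-probability of `word` given the history held in the incoming state; the names are illustrative.

```python
from typing import Callable, List, Tuple, TypeVar

S = TypeVar("S")                 # opaque RNN hidden state
Arc = Tuple[str, float, float]   # (word, graph cost, acoustic cost)


def add_rnnlm_scores(path: List[Arc],
                     rnn_step: Callable[[S, str], Tuple[S, float]],
                     init_state: S,
                     lm_scale: float = 1.0) -> List[Arc]:
    """Add lm_scale * (-log P_rnn(w_i | h_i)) to each arc's graph cost.

    rnn_step(state, word) scores `word` given the history encoded in `state`
    and returns (next_state, log_prob). The state is carried along the whole
    path, so every word is scored with its full left context.
    """
    out: List[Arc] = []
    state = init_state
    for word, graph, acoustic in path:
        state, logp = rnn_step(state, word)
        out.append((word, graph + lm_scale * (-logp), acoustic))
    return out


def total_cost(path: List[Arc], acoustic_scale: float = 1.0) -> float:
    """Total path cost (lower is better), with an optional acoustic scale."""
    return sum(g + acoustic_scale * a for _, g, a in path)
```

Because the RNN state depends on the entire history, two paths that share a final word but differ earlier cannot share a state; this is why RNNLM rescoring is applied per hypothesis rather than on a compact n-gram-style graph.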

4 Experiments and Results

§ ASR performance evaluation on the Wall Street Journal (WSJ) task

Baseline ASR setup:

| Item | Setting |
|---|---|
| Training DB | WSJ0, 7,138 utterances (SI-84) |
| Acoustic model | GMM-HMM |
| Language model | WSJ 20,000-word vocabulary trigram back-off model |

§ RNNLM training (cross-entropy criterion)

| Item | Setting |
|---|---|
| Training DB | WSJ0, 100,000 sentences |
| Cross-validation DB | WSJ0, 10,000 sentences |
| Topology | 1 hidden layer, 100 hidden units |

§ RNNLM rescoring performance on WSJ0, 333 utterances (Nov'92)

| ASR system | WER (%) |
|---|---|
| Baseline (GMM-HMM) | 12.25 |
| RNNLM rescoring | 10.72 |
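For reference, the absolute and relative WER reductions implied by the baseline and RNNLM-rescoring results work out as:

$$
\Delta_{\text{abs}} = 12.25 - 10.72 = 1.53 \text{ points}, \qquad
\Delta_{\text{rel}} = \frac{1.53}{12.25} \approx 0.125 = 12.5\%
$$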

5 Conclusion

§ RNNLM is effective for rescoring N-best lists from the ASR lattice.

§ RNNLM can compress long-context information.

§ In the WFST framework, composing the old grammar FST with the ASR lattice, using an algorithm that removes the old LM score exactly, still requires further study.

§ Open problem: composing the WFST decoding graph with an RNNLM-based grammar FST [6].

§ Exact n-gram probability estimation from an RNNLM is required [7].

[6] G. Lecorvé and P. Motlicek, "Conversion of recurrent neural network language models to weighted finite state transducers for automatic speech recognition," in Proc. INTERSPEECH, 2012.

[7] E. Arisoy, S. F. Chen, B. Ramabhadran, and A. Sethy, "Converting neural network language models into back-off language models for efficient decoding in automatic speech recognition," in Proc. ICASSP, Vancouver, Canada, 2013, pp. 8242–8246.

Q&A