Language Model for Machine Translation

Jang Ha Young

- Slides: 12

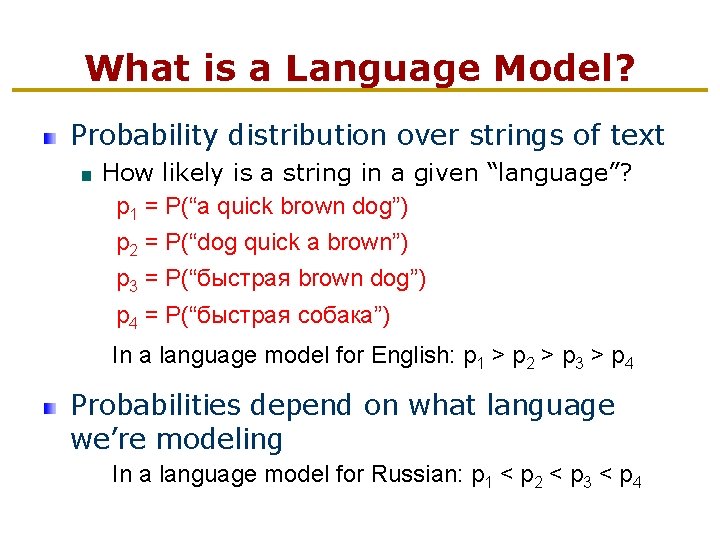

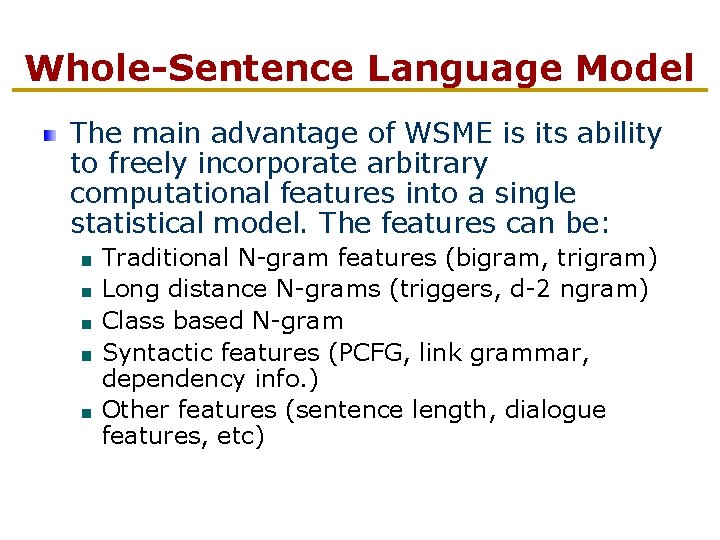

What is a Language Model?

- A probability distribution over strings of text
- How likely is a string in a given “language”?
  - p1 = P(“a quick brown dog”)
  - p2 = P(“dog quick a brown”)
  - p3 = P(“быстрая brown dog”)
  - p4 = P(“быстрая собака”)
- In a language model for English: p1 > p2 > p3 > p4
- Probabilities depend on which language we are modeling
- In a language model for Russian: p1 < p2 < p3 < p4

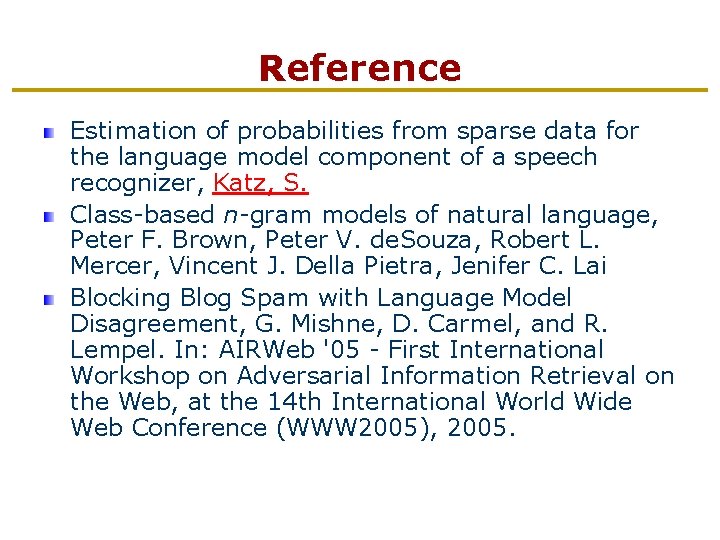

Language Model from Wikipedia

A statistical language model assigns a probability P(w_1, ..., w_n) to a sequence of n words by means of a probability distribution.

Language modeling is used in many natural language processing applications, such as speech recognition, machine translation, part-of-speech tagging, parsing, and information retrieval.

Estimating the probability of sequences can become difficult in corpora, in which phrases or sentences can be arbitrarily long and hence some sequences are not observed during training of the language model (the data sparseness problem of overfitting). For that reason, these models are often approximated using smoothed n-gram models.

In speech recognition and in data compression, such a model tries to capture the properties of a language and to predict the next word in a speech sequence.

When used in information retrieval, a language model is associated with a document in a collection. With query Q as input, retrieved documents are ranked based on the probability that the document's language model would generate the terms of the query, P(Q|Md).
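The ranking by P(Q|Md) described above can be sketched as follows. This is a minimal illustration, not a reference implementation: the two documents, the query, and the use of add-one smoothing with log-probabilities are all assumptions made for the example.

```python
import math
from collections import Counter

def query_log_likelihood(query, doc_tokens, vocab_size, k=1.0):
    """log P(Q | Md) under a unigram document model with add-k smoothing."""
    counts = Counter(doc_tokens)
    total = len(doc_tokens) + k * vocab_size
    return sum(math.log((counts[term] + k) / total) for term in query)

# Hypothetical two-document collection.
docs = {
    "d1": "the quick brown dog".split(),
    "d2": "statistical machine translation uses a language model".split(),
}
vocab = {token for tokens in docs.values() for token in tokens}
query = "language model".split()

# Rank documents by how likely each document model is to generate the query.
ranking = sorted(
    docs,
    key=lambda name: query_log_likelihood(query, docs[name], len(vocab)),
    reverse=True,
)
print(ranking)
```

Smoothing is used here only so that a document missing one query term does not receive probability zero; other smoothing schemes would work equally well for the sketch.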

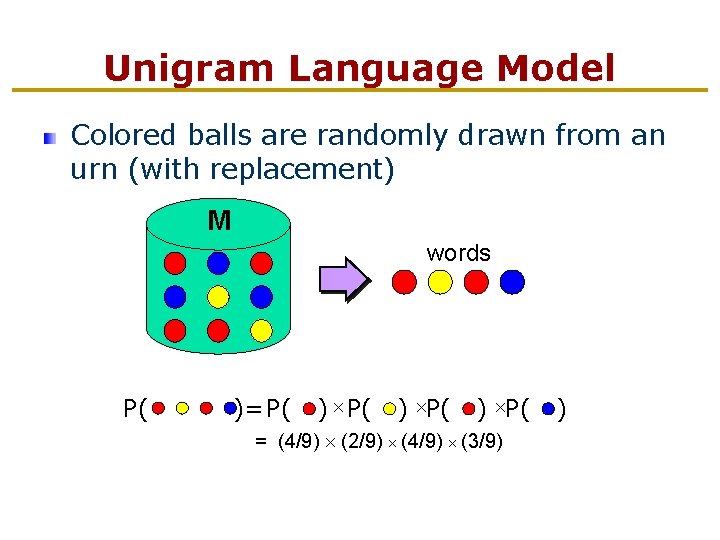

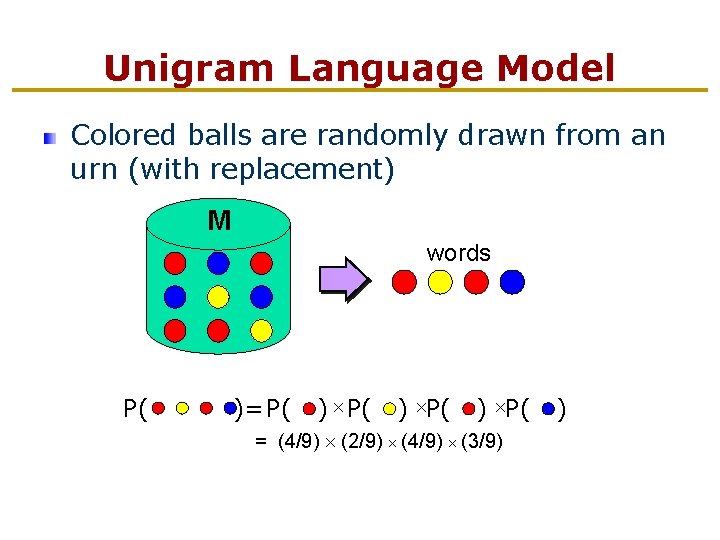

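Unigram Language Model

- Colored balls are randomly drawn from an urn (with replacement)
- The urn is the model M; the balls are the words
- The probability of a sequence of four draws is the product of the individual draw probabilities:

P(sequence) = P(ball 1) × P(ball 2) × P(ball 3) × P(ball 4) = (4/9) × (2/9) × (4/9) × (3/9)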
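The urn calculation can be reproduced with a short sketch. The colour names below are placeholders, since the slide's ball images are not preserved; only the counts 4, 2, and 3 out of 9 come from the slide.

```python
from collections import Counter
from fractions import Fraction

# Hypothetical urn: colour names are assumed, counts match the slide (4, 2, 3 of 9).
urn = ["red"] * 4 + ["blue"] * 2 + ["green"] * 3

def unigram_model(observations):
    """Relative frequency of each symbol in the observations."""
    counts = Counter(observations)
    total = sum(counts.values())
    return {symbol: Fraction(count, total) for symbol, count in counts.items()}

def sequence_probability(model, sequence):
    """Product of per-symbol probabilities (independent draws with replacement)."""
    prob = Fraction(1)
    for symbol in sequence:
        prob *= model.get(symbol, Fraction(0))
    return prob

model = unigram_model(urn)
draws = ["red", "blue", "red", "green"]  # probabilities 4/9, 2/9, 4/9, 3/9
p = sequence_probability(model, draws)
print(p, float(p))
```

Exact fractions are used so the product can be compared directly with (4/9)(2/9)(4/9)(3/9).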

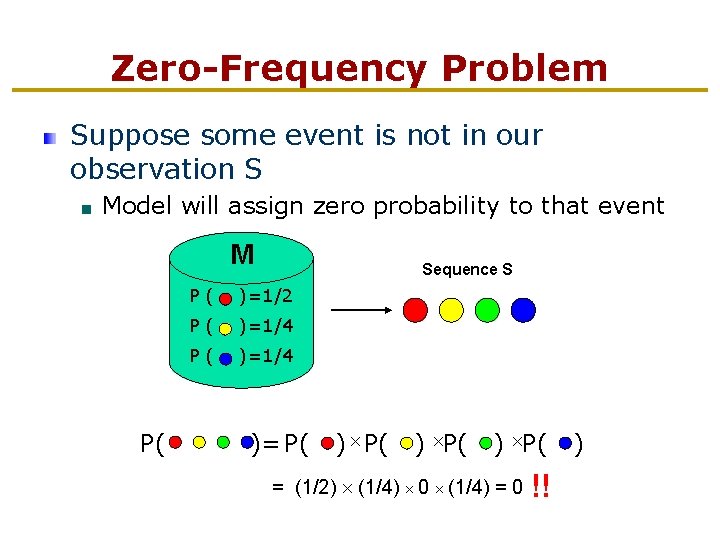

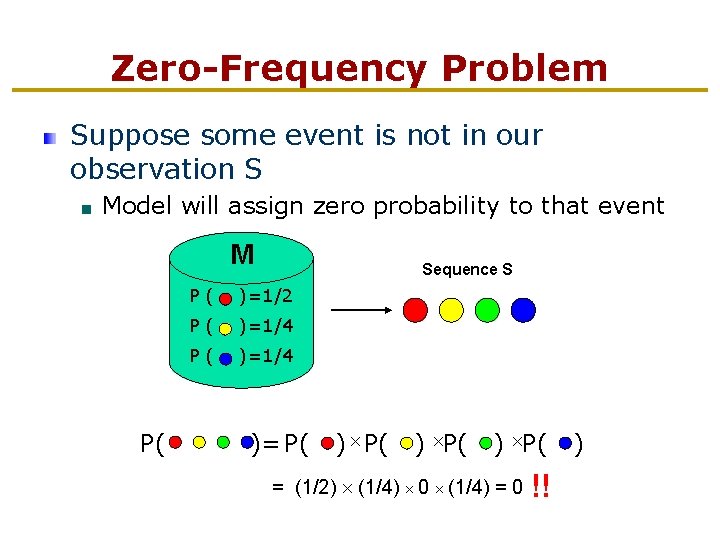

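Zero-Frequency Problem

- Suppose some event is not in our observation S
- The model M will assign zero probability to that event
- From the observed sequence S, two of the estimated ball probabilities are 1/2 and 1/4
- A sequence containing an unseen ball then has probability zero:

P(sequence) = P(ball 1) × P(ball 2) × P(ball 3) × P(ball 4) = (1/2) × (1/4) × 0 × (1/4) = 0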

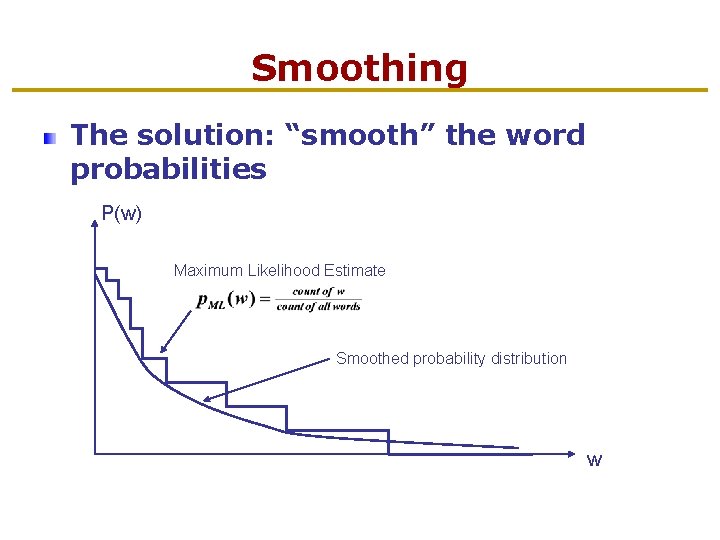

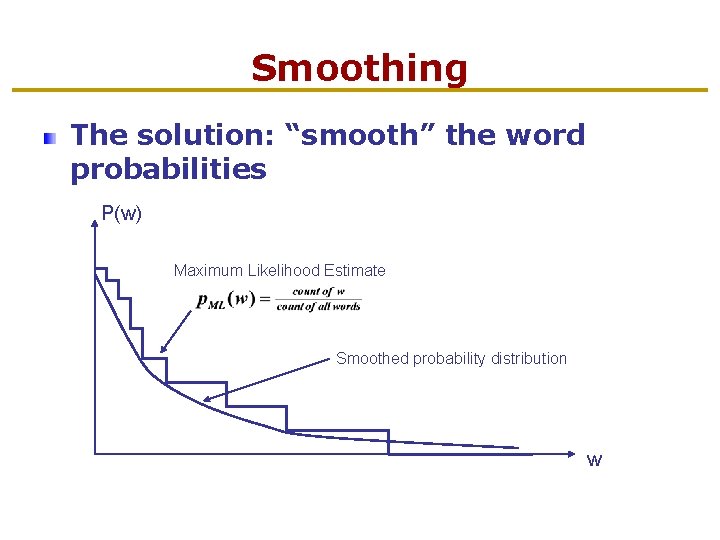

Smoothing

- The solution: “smooth” the word probabilities

[Figure: P(w) plotted against w, comparing the maximum likelihood estimate with the smoothed probability distribution]
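As one simple illustration of smoothing, the sketch below compares the maximum likelihood estimate with add-one (Laplace) smoothing. The slide does not name a specific smoothing method, so add-one is an assumption chosen for brevity, and the colours and counts are made up.

```python
from collections import Counter
from fractions import Fraction

def mle(counts, vocab):
    """Maximum likelihood estimate: relative frequency, zero for unseen words."""
    total = sum(counts.values())
    return {w: Fraction(counts[w], total) for w in vocab}

def add_k(counts, vocab, k=1):
    """Add-k smoothing: every word in the vocabulary gets k extra pseudo-counts."""
    total = sum(counts.values()) + k * len(vocab)
    return {w: Fraction(counts[w] + k, total) for w in vocab}

# Hypothetical observation; "green" is in the vocabulary but never observed.
observed = ["black", "black", "red", "blue"]
vocab = ["black", "red", "blue", "green"]
counts = Counter(observed)

p_mle = mle(counts, vocab)
p_smooth = add_k(counts, vocab)
for w in vocab:
    print(w, p_mle[w], p_smooth[w])
```

The smoothed distribution takes some probability mass from the frequent words and gives it to the unseen one, so no sequence over the vocabulary is assigned probability zero.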

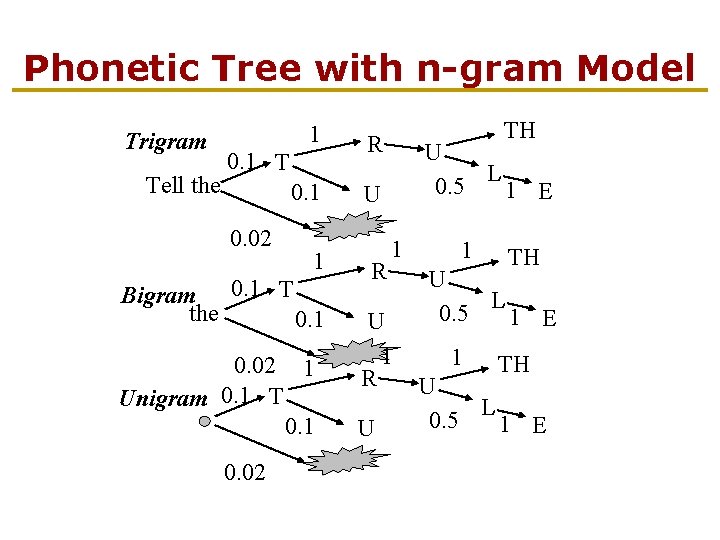

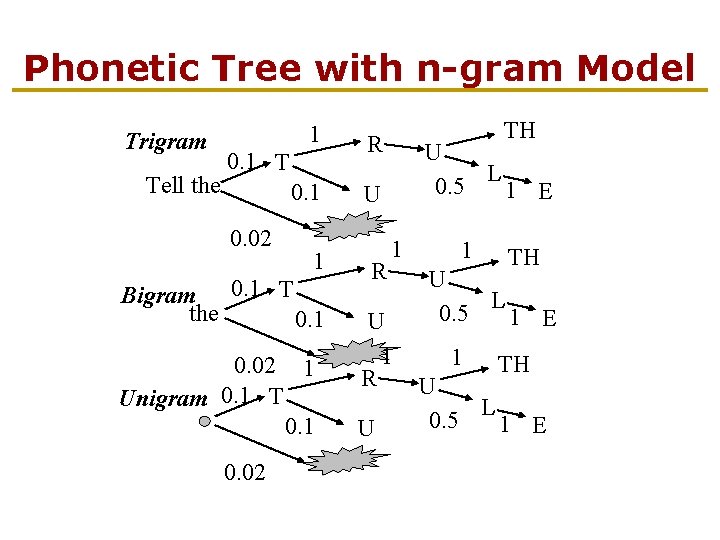

Phonetic Tree with n-gram Model

[Figure: phonetic tree under unigram, bigram (context “the”), and trigram (context “Tell the”) models; branches are labeled with phones (TH, U, L, E, R, T) and probabilities (1, 0.5, 0.1, 0.02)]

n-grams

- n-gram: a sequence of n symbols
- n-gram language model: a model to predict a symbol in a sequence, given its n-1 predecessors
- Why use them? To estimate the probability of a symbol in unknown text, given the frequency of its occurrence in known text
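A minimal sketch of estimating an n-gram model from known text by relative frequency is shown below. The training sentence is made up, and the function name `train_ngram` is hypothetical; no smoothing is applied.

```python
from collections import Counter

def train_ngram(tokens, n):
    """Estimate P(symbol | n-1 predecessors) by relative frequency.

    Returns a dict mapping each observed n-gram (a tuple) to the conditional
    probability of its last symbol given the first n-1 symbols.
    """
    ngram_counts = Counter()
    context_counts = Counter()
    for i in range(len(tokens) - n + 1):
        gram = tuple(tokens[i:i + n])
        ngram_counts[gram] += 1
        context_counts[gram[:-1]] += 1
    return {gram: count / context_counts[gram[:-1]]
            for gram, count in ngram_counts.items()}

# Hypothetical training text.
tokens = "tell the truth and tell the tale".split()

bigram = train_ngram(tokens, 2)
trigram = train_ngram(tokens, 3)
print(bigram[("tell", "the")])           # P(the | tell)
print(bigram[("the", "truth")])          # P(truth | the)
print(trigram[("tell", "the", "tale")])  # P(tale | tell the)
```

Any n-gram that never occurs in the training text is simply absent from the dictionary, which is the zero-frequency situation that smoothing is meant to address.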

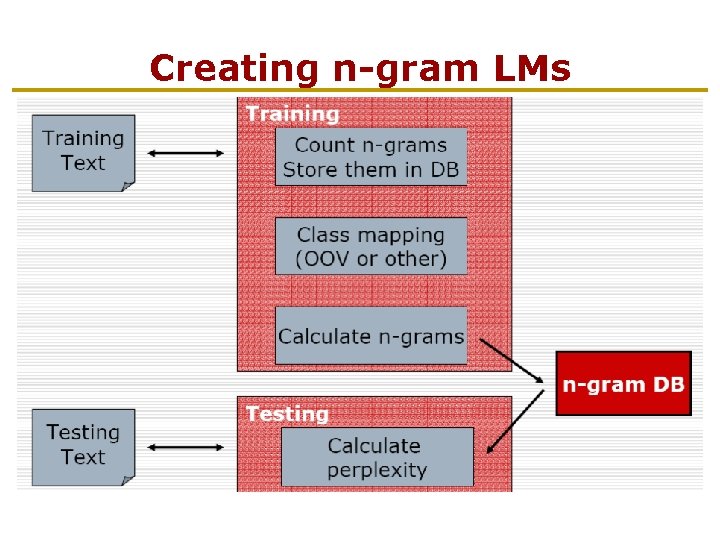

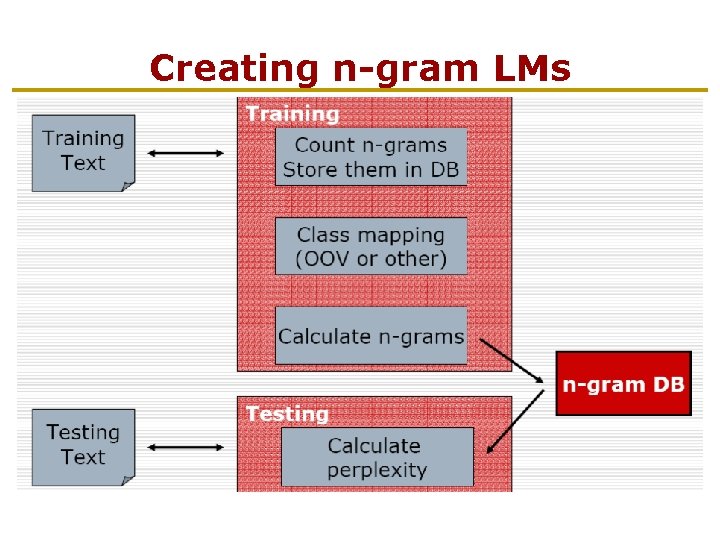

Creating n-gram LMs

Problems with n-grams

- More n-grams exist than can be observed
- Sensitivity to the genre of the training text
  - Newspaper articles
  - Personal letters
- Fixed n-gram vocabulary
  - Any additions lead to re-compilation of the n-gram model
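The first problem can be made concrete with a little arithmetic. The vocabulary size and corpus size below are hypothetical round numbers, chosen only to show the gap between the n-grams that could exist and those a corpus can contain.

```python
def possible_ngrams(vocab_size, n):
    """Number of distinct n-grams that can be formed from the vocabulary."""
    return vocab_size ** n

def max_observable_ngrams(corpus_tokens, n):
    """Upper bound on distinct n-grams in a corpus: one per token position."""
    return max(corpus_tokens - n + 1, 0)

vocab_size = 20_000        # assumed vocabulary size
corpus_tokens = 1_000_000  # assumed training corpus size

for n in (1, 2, 3):
    print(n, possible_ngrams(vocab_size, n), max_observable_ngrams(corpus_tokens, n))
```

Under these assumptions, the number of possible trigrams exceeds the number of trigram positions in the corpus by several orders of magnitude, so most trigrams are never observed.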

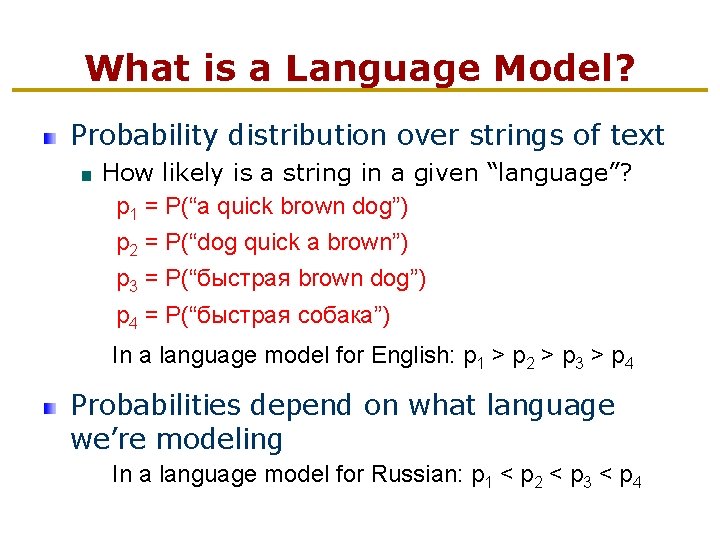

Whole-Sentence Language Model

The main advantage of the whole-sentence maximum entropy (WSME) model is its ability to freely incorporate arbitrary computational features into a single statistical model. The features can be:

- Traditional n-gram features (bigram, trigram)
- Long-distance n-grams (triggers, distance-2 n-grams)
- Class-based n-grams
- Syntactic features (PCFG, link grammar, dependency information)
- Other features (sentence length, dialogue features, etc.)
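The slide gives no formula, so the following is only a sketch under an assumed form: a baseline sentence probability multiplied by the exponential of a weighted sum of sentence-level feature values, with the normalization constant left out. The baseline, the two features, and the weights are all hypothetical.

```python
import math

def wsme_score(sentence, baseline_prob, features, weights):
    """Unnormalized score: baseline_prob(s) * exp(sum_i weight_i * feature_i(s))."""
    exponent = sum(w * f(sentence) for f, w in zip(features, weights))
    return baseline_prob(sentence) * math.exp(exponent)

# Hypothetical baseline: a uniform model over a 10-word vocabulary.
def uniform_baseline(sentence):
    return 0.1 ** len(sentence)

# Hypothetical sentence-level features; any computable function would do.
def sentence_length(sentence):
    return len(sentence)

def has_language_model_bigram(sentence):
    return 1 if ("language", "model") in zip(sentence, sentence[1:]) else 0

features = [sentence_length, has_language_model_bigram]
weights = [0.0, 1.0]  # made-up weights, not trained values

sentence = "a language model scores sentences".split()
score = wsme_score(sentence, uniform_baseline, features, weights)
print(score)
```

Because each feature is just a function of the whole sentence, n-gram counts, syntactic indicators, and sentence length can all be mixed in the same list without changing the scoring function.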

References

- Estimation of probabilities from sparse data for the language model component of a speech recognizer. Katz, S.
- Class-based n-gram models of natural language. Peter F. Brown, Peter V. deSouza, Robert L. Mercer, Vincent J. Della Pietra, Jenifer C. Lai.
- Blocking Blog Spam with Language Model Disagreement. G. Mishne, D. Carmel, and R. Lempel. In: AIRWeb '05, First International Workshop on Adversarial Information Retrieval on the Web, at the 14th International World Wide Web Conference (WWW 2005), 2005.