Near-Term NCCS Discover Cluster Changes and Integration Plans

- Slides: 22

Near-Term NCCS & Discover Cluster Changes and Integration Plans: A Briefing for NCCS Users October 30, 2014

Agenda
• Storage Augmentations
• Discover Cluster Hardware Changes
• SLURM Changes
• Q&A

NCCS Discover Changes, October 31, 2014 NASA Center for Climate Simulation

Storage Augmentations
• Dirac (Mass Storage) Disk Augmentation
  – 4 Petabytes usable (5 Petabytes “raw”)
  – Arriving November 2014
  – Gradual integration (many files, “inodes” to move)
• Discover Storage Expansion
  – 8 Petabytes usable (10 Petabytes “raw”)
  – Arriving December 2014
  – For both targeted “Climate Downscaling” project and general use
  – Begin operational use in mid/late December

Motivation for Near-Term Discover Changes
• Due to demand for more resources, we’re undertaking major Discover cluster augmentations.
  – Result will be: 3x computing capacity increase, net increase of 20,000 cores for Discover!
• But we have floor space and power limitations, so we need to do a phased removal of the oldest Discover processors (12-core Westmeres).
• Interim reduction in Discover cores will be partly relieved by the addition of previously dedicated compute nodes.
• Prudent use of SLURM features can help optimize your job’s turnaround during the “crunch time” (more on this later).

Discover Hardware Changes
• What we have now (October 2014):
  – 12-core ‘Westmere’ and 16-core ‘Sandy Bridge’
• What’s being delivered near term:
  – 28-core ‘Haswell’
• What’s being removed to make room:
  – 12-core ‘Westmere’
• Impacts for Discover users: there will be an interim “crunch time” with fewer nodes/cores available.
• (Transition schedule is subject to change.)

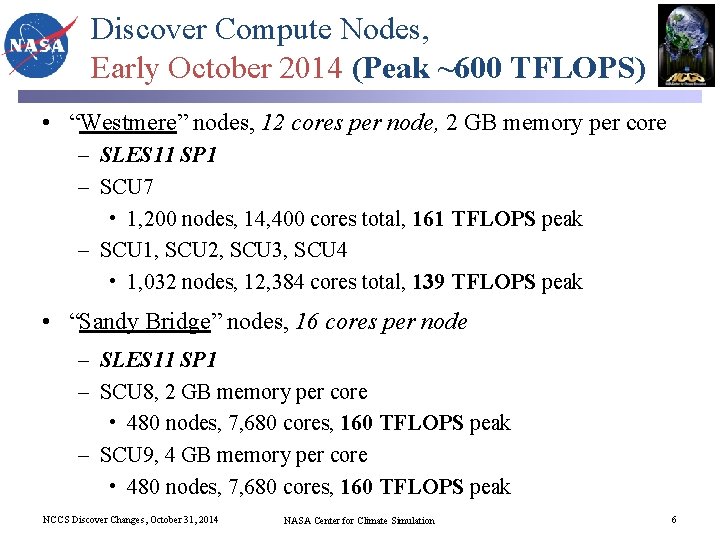

Discover Compute Nodes, Early October 2014 (Peak ~600 TFLOPS)
• “Westmere” nodes, 12 cores per node, 2 GB memory per core
  – SLES 11 SP1
  – SCU 7
    • 1,200 nodes, 14,400 cores total, 161 TFLOPS peak
  – SCU 1, SCU 2, SCU 3, SCU 4
    • 1,032 nodes, 12,384 cores total, 139 TFLOPS peak
• “Sandy Bridge” nodes, 16 cores per node
  – SLES 11 SP1
  – SCU 8, 2 GB memory per core
    • 480 nodes, 7,680 cores, 160 TFLOPS peak
  – SCU 9, 4 GB memory per core
    • 480 nodes, 7,680 cores, 160 TFLOPS peak

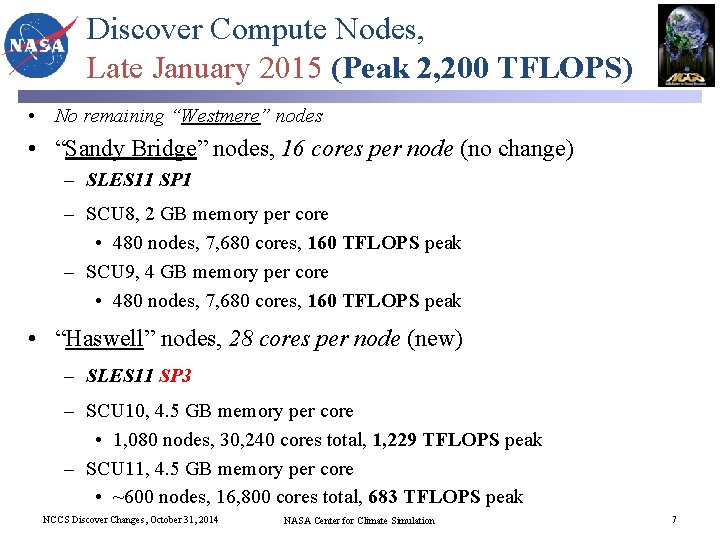

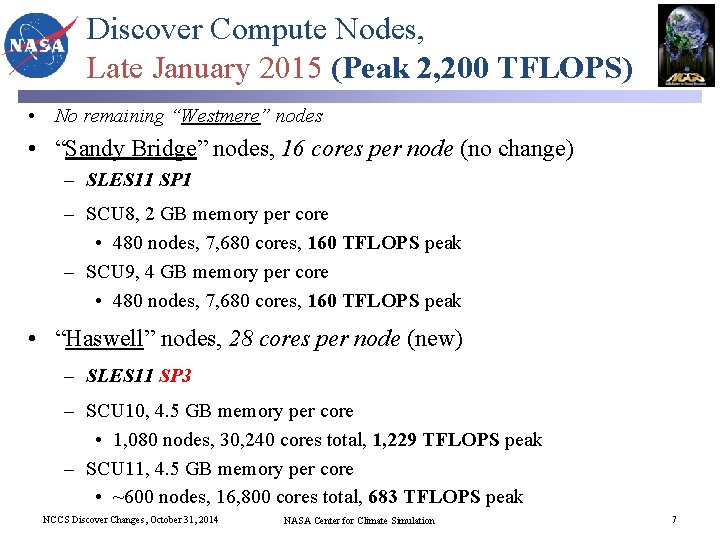

Discover Compute Nodes, Late January 2015 (Peak 2,200 TFLOPS)
• No remaining “Westmere” nodes
• “Sandy Bridge” nodes, 16 cores per node (no change)
  – SLES 11 SP1
  – SCU 8, 2 GB memory per core
    • 480 nodes, 7,680 cores, 160 TFLOPS peak
  – SCU 9, 4 GB memory per core
    • 480 nodes, 7,680 cores, 160 TFLOPS peak
• “Haswell” nodes, 28 cores per node (new)
  – SLES 11 SP3
  – SCU 10, 4.5 GB memory per core
    • 1,080 nodes, 30,240 cores total, 1,229 TFLOPS peak
  – SCU 11, 4.5 GB memory per core
    • ~600 nodes, 16,800 cores total, 683 TFLOPS peak
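The headline claims (a net increase of about 20,000 cores and roughly 3x peak capacity) can be cross-checked against the per-unit figures on the two inventory slides. The following is a minimal sketch of that arithmetic, not an NCCS tool; the tuple layout and the names `EARLY_OCT_2014`, `LATE_JAN_2015`, and `totals` are illustrative, and SCU 11 is assumed to be exactly 600 nodes where the slide says “~600”.

```python
# Per-unit figures copied from the early-October 2014 and late-January 2015 slides.
# Each entry: (label, nodes, cores_per_node, peak_tflops)
EARLY_OCT_2014 = [
    ("SCU 7 Westmere", 1200, 12, 161),
    ("SCU 1-4 Westmere", 1032, 12, 139),
    ("SCU 8 Sandy Bridge", 480, 16, 160),
    ("SCU 9 Sandy Bridge", 480, 16, 160),
]
LATE_JAN_2015 = [
    ("SCU 8 Sandy Bridge", 480, 16, 160),
    ("SCU 9 Sandy Bridge", 480, 16, 160),
    ("SCU 10 Haswell", 1080, 28, 1229),
    ("SCU 11 Haswell", 600, 28, 683),  # assumption: "~600 nodes" taken as 600
]


def totals(inventory):
    """Return (total_cores, total_peak_tflops) for a list of unit entries."""
    cores = sum(nodes * per_node for _, nodes, per_node, _ in inventory)
    tflops = sum(peak for _, _, _, peak in inventory)
    return cores, tflops


cores_before, peak_before = totals(EARLY_OCT_2014)
cores_after, peak_after = totals(LATE_JAN_2015)

print(f"Early Oct 2014: {cores_before:,} cores, {peak_before:,} TFLOPS peak")
print(f"Late Jan 2015:  {cores_after:,} cores, {peak_after:,} TFLOPS peak")
print(f"Net core change: {cores_after - cores_before:+,}")
print(f"Peak ratio: {peak_after / peak_before:.1f}x")
```

Under these assumptions the sums come to 42,144 cores and 620 TFLOPS before, and 62,400 cores and 2,232 TFLOPS after, which is consistent with the rounded slide titles and with the stated core and capacity increases.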

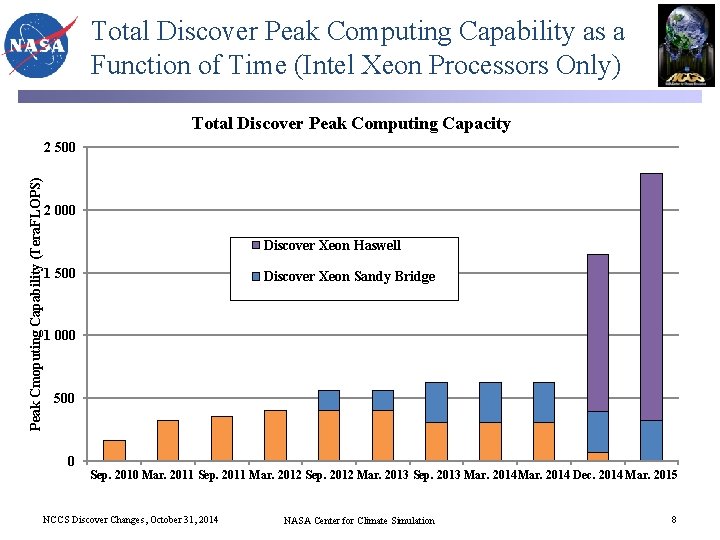

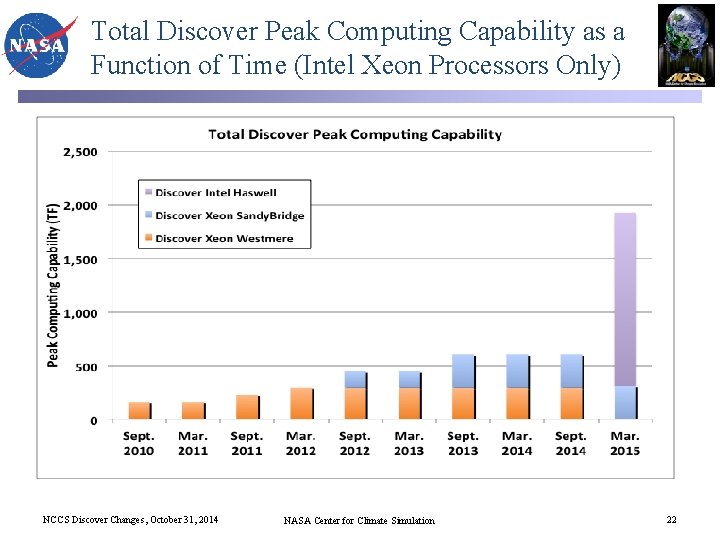

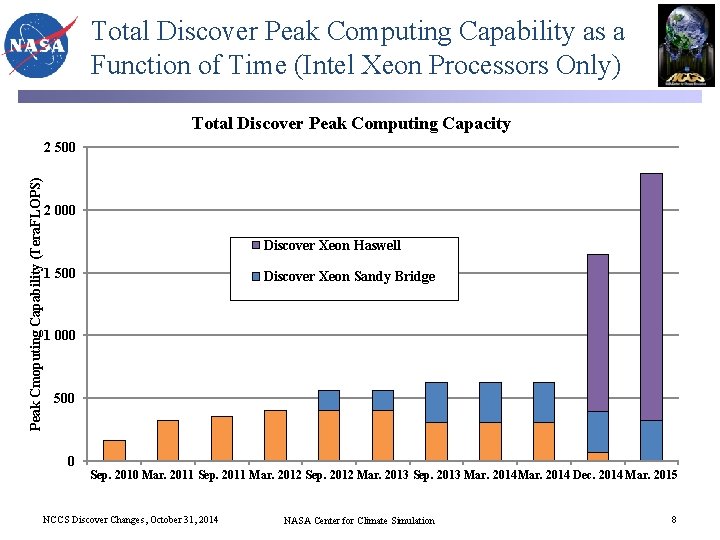

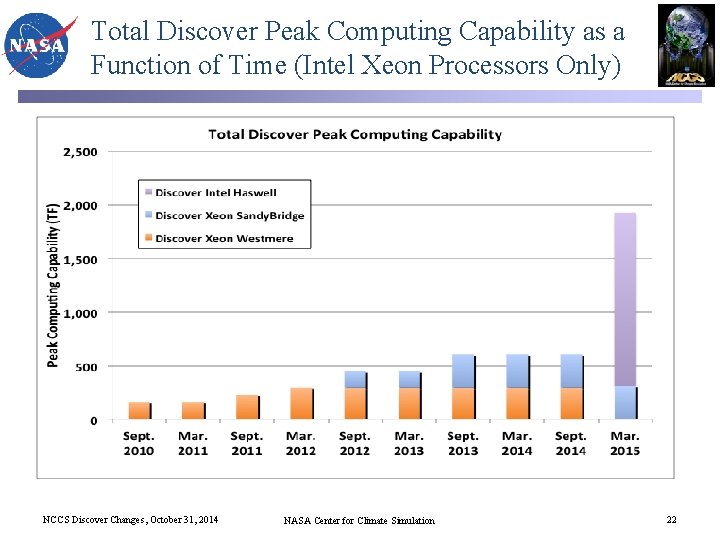

Total Discover Peak Computing Capability as a Function of Time (Intel Xeon Processors Only)
[Chart: peak computing capability (TeraFLOPS), 0 to 2,500, plotted from Sep. 2010 to Mar. 2015. Series: Discover Xeon Haswell, Discover Xeon Sandy Bridge.]

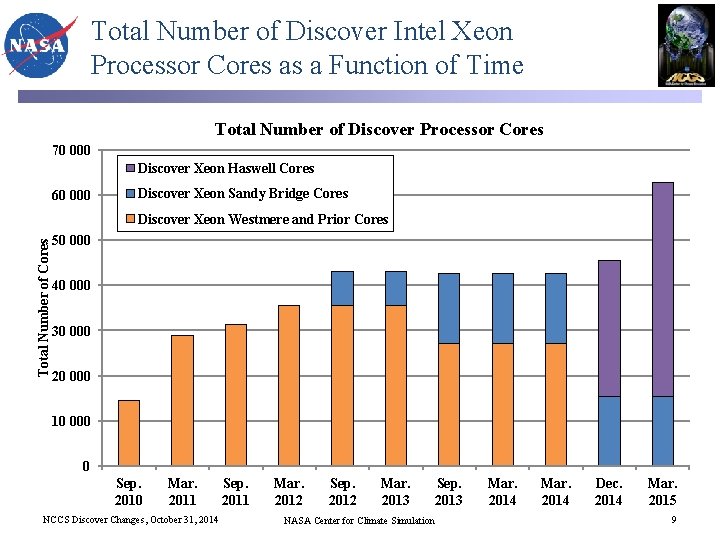

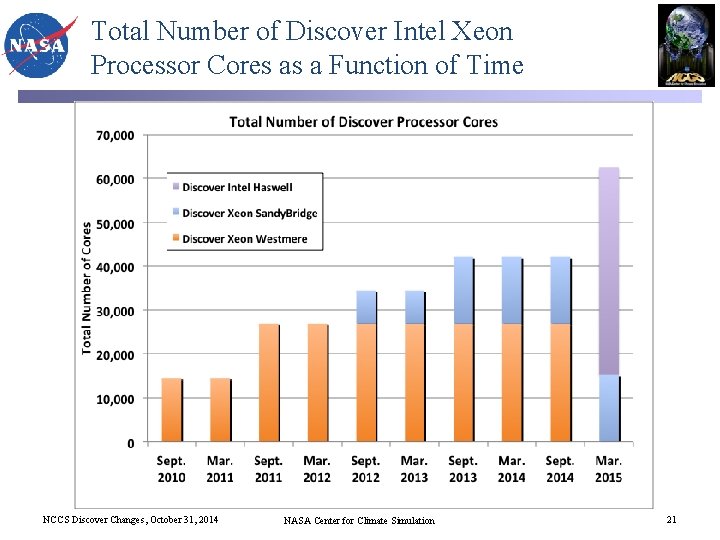

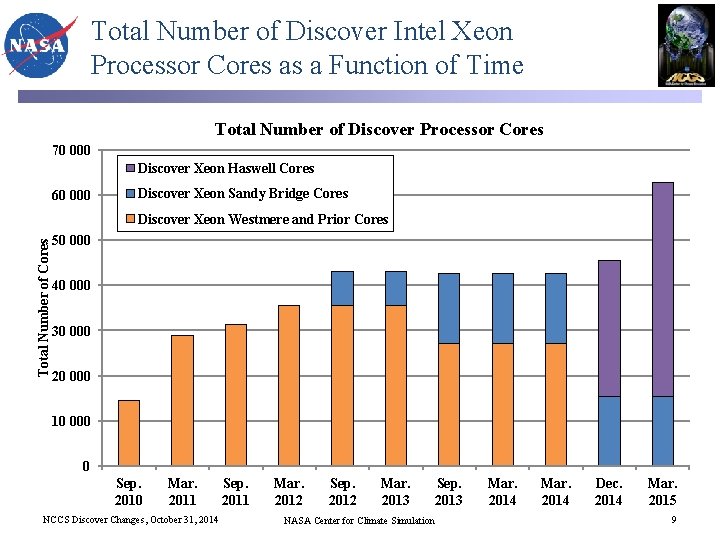

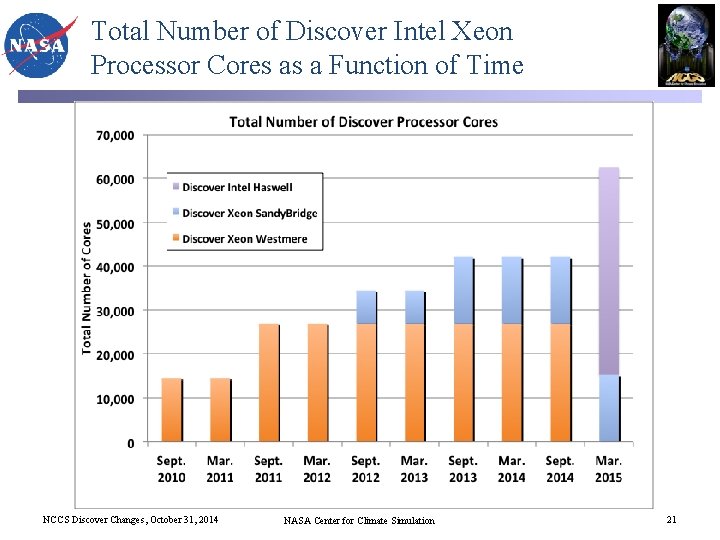

Total Number of Discover Intel Xeon Processor Cores as a Function of Time
[Chart: total number of cores, 0 to 70,000, plotted from Sep. 2010 to Mar. 2015. Series: Discover Xeon Haswell Cores, Discover Xeon Sandy Bridge Cores, Discover Xeon Westmere and Prior Cores.]

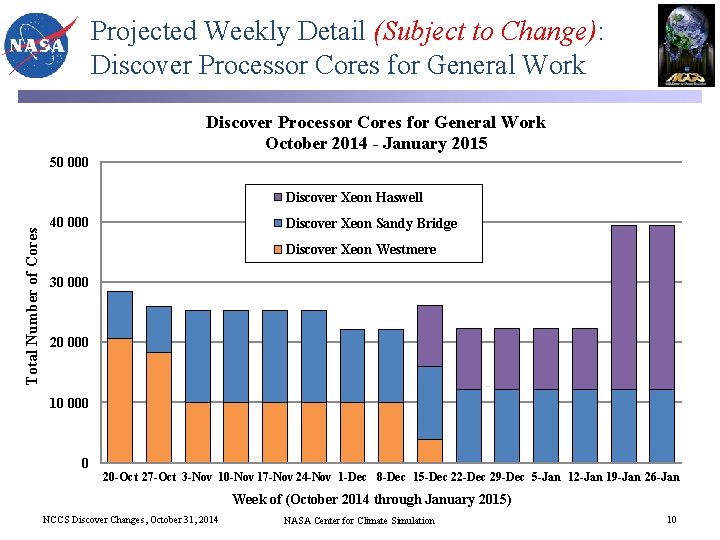

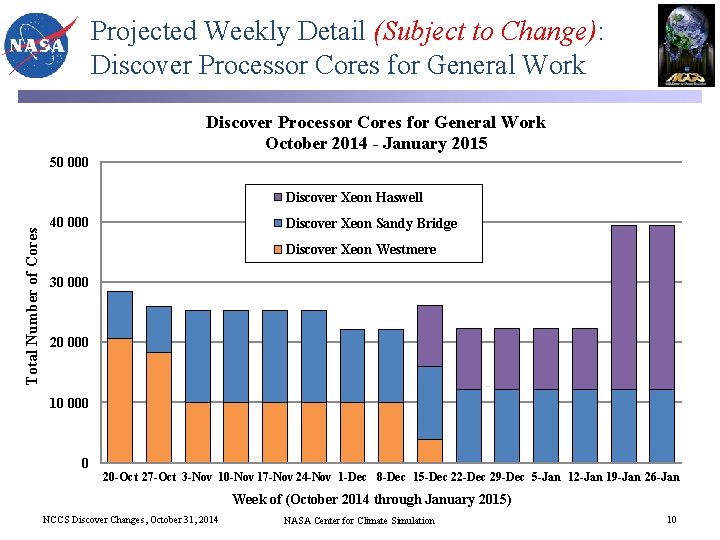

Projected Weekly Detail (Subject to Change): Discover Processor Cores for General Work, October 2014 - January 2015
[Chart: total number of cores, 0 to 50,000, by week from 20-Oct through 26-Jan. Series: Discover Xeon Haswell, Discover Xeon Sandy Bridge, Discover Xeon Westmere.]

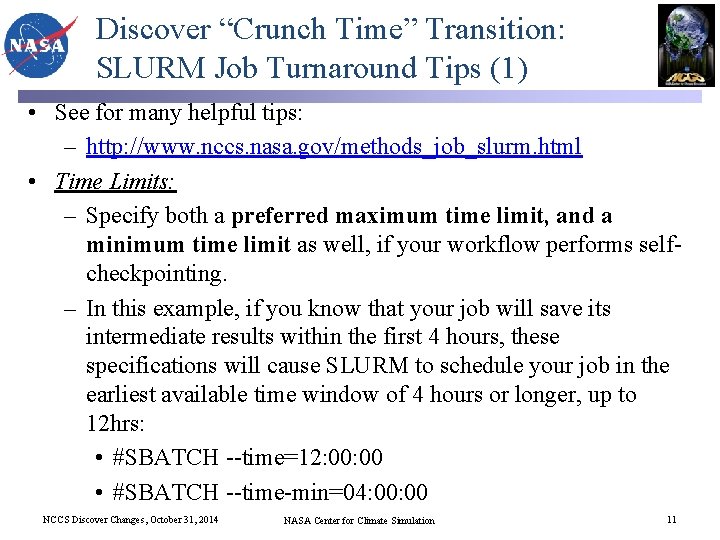

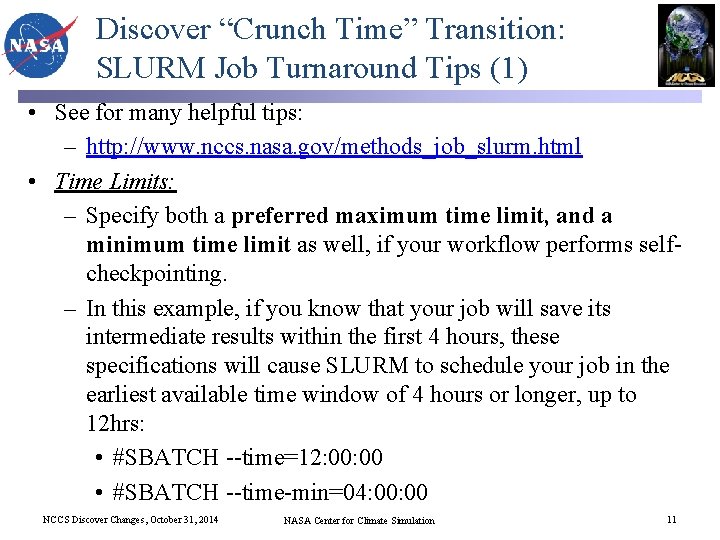

Discover “Crunch Time” Transition: SLURM Job Turnaround Tips (1)
• For many helpful tips, see:
  – http://www.nccs.nasa.gov/methods_job_slurm.html
• Time Limits:
  – If your workflow performs self-checkpointing, specify both a preferred maximum time limit and a minimum time limit.
  – In this example, if you know that your job will save its intermediate results within the first 4 hours, these specifications will cause SLURM to schedule your job in the earliest available time window of 4 hours or longer, up to 12 hours:
    • #SBATCH --time=12:00:00
    • #SBATCH --time-min=04:00:00
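Slurm reads a two-field time value as minutes:seconds, so limits given in hours are safest written in the three-field hours:minutes:seconds form. As a rough illustration, the sketch below renders the two time-limit directives from whole-hour values; `hours_to_hms` and `time_limit_directives` are hypothetical helper names, not part of Slurm or any NCCS tooling.

```python
from typing import List


def hours_to_hms(hours: int) -> str:
    """Format whole hours as HH:MM:SS, which Slurm reads as hours:minutes:seconds."""
    if hours < 0:
        raise ValueError("hours must be non-negative")
    return f"{hours:02d}:00:00"


def time_limit_directives(max_hours: int, min_hours: int) -> List[str]:
    """Return the --time and --time-min directives for a self-checkpointing job."""
    # The minimum must be positive and must not exceed the preferred maximum.
    if not 0 < min_hours <= max_hours:
        raise ValueError("need 0 < min_hours <= max_hours")
    return [
        f"#SBATCH --time={hours_to_hms(max_hours)}",
        f"#SBATCH --time-min={hours_to_hms(min_hours)}",
    ]


# The slide's example: checkpoint within 4 hours, run for up to 12 hours.
directives = time_limit_directives(max_hours=12, min_hours=4)
print("\n".join(directives))
```

The printed lines can be pasted near the top of a batch script, after the interpreter line.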

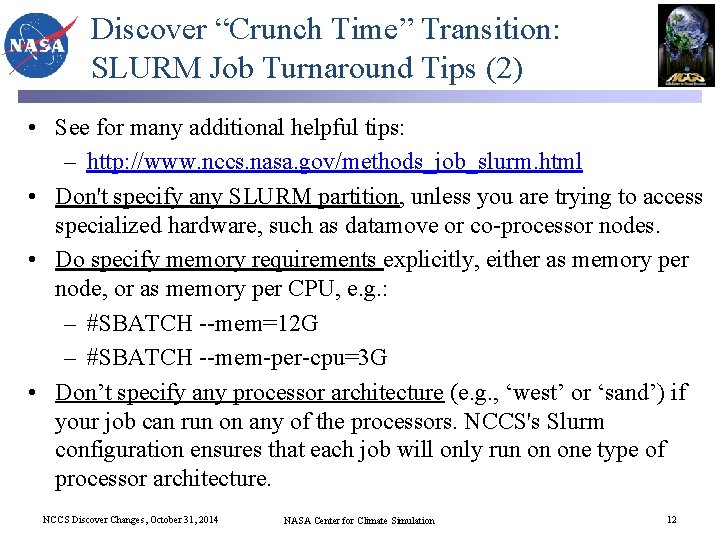

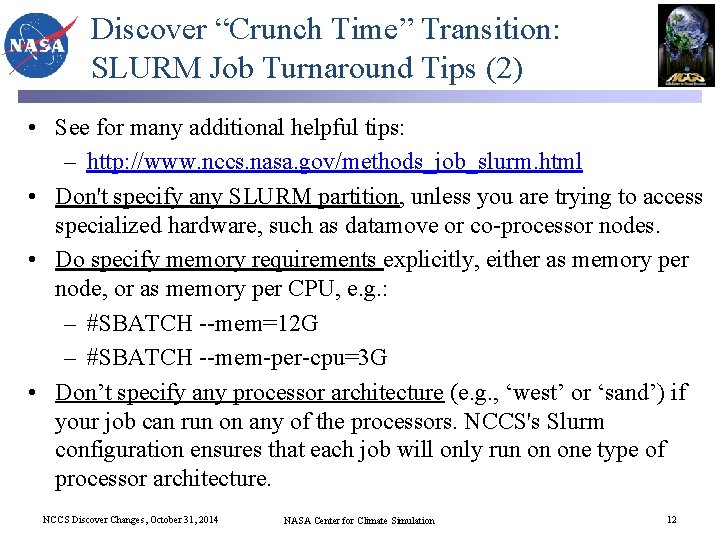

Discover “Crunch Time” Transition: SLURM Job Turnaround Tips (2)
• For many additional helpful tips, see:
  – http://www.nccs.nasa.gov/methods_job_slurm.html
• Don’t specify any SLURM partition, unless you are trying to access specialized hardware, such as datamove or co-processor nodes.
• Do specify memory requirements explicitly, either as memory per node or as memory per CPU, e.g.:
  – #SBATCH --mem=12G
  – #SBATCH --mem-per-cpu=3G
• Don’t specify any processor architecture (e.g., ‘west’ or ‘sand’) if your job can run on any of the processors. NCCS’s Slurm configuration ensures that each job will only run on one type of processor architecture.
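One way to picture these tips together is a header builder that always emits a memory request and adds partition or architecture lines only when asked. This is a sketch under assumptions: `sbatch_header` is a hypothetical helper, the task count of 64 is an arbitrary example value, and using `--constraint` to select ‘west’ or ‘sand’ is assumed here rather than taken from the slides. Slurm treats `--mem` and `--mem-per-cpu` as mutually exclusive, so the helper requires exactly one of them.

```python
from typing import List, Optional


def sbatch_header(ntasks: int,
                  mem_per_node_gb: Optional[int] = None,
                  mem_per_cpu_gb: Optional[int] = None,
                  partition: Optional[str] = None,
                  constraint: Optional[str] = None) -> List[str]:
    """Return #SBATCH lines, emitting optional directives only when requested."""
    # Exactly one memory form must be given: per node or per CPU, never both.
    if (mem_per_node_gb is None) == (mem_per_cpu_gb is None):
        raise ValueError("give exactly one of mem_per_node_gb or mem_per_cpu_gb")
    lines = [f"#SBATCH --ntasks={ntasks}"]
    if mem_per_node_gb is not None:
        lines.append(f"#SBATCH --mem={mem_per_node_gb}G")
    else:
        lines.append(f"#SBATCH --mem-per-cpu={mem_per_cpu_gb}G")
    # Partition and architecture are left out unless the caller needs them.
    if partition is not None:
        lines.append(f"#SBATCH --partition={partition}")
    if constraint is not None:
        lines.append(f"#SBATCH --constraint={constraint}")
    return lines


# A job that can run anywhere: memory is explicit, nothing else is pinned.
header = sbatch_header(ntasks=64, mem_per_cpu_gb=3)
print("\n".join(header))
```

Leaving the partition and architecture unset gives the scheduler the widest choice of nodes, which is the point of the advice during the transition period.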

Questions & Answers
NCCS User Services: support@nccs.nasa.gov, 301-286-9120
https://www.nccs.nasa.gov
Thank you

SUPPLEMENTAL SLIDES

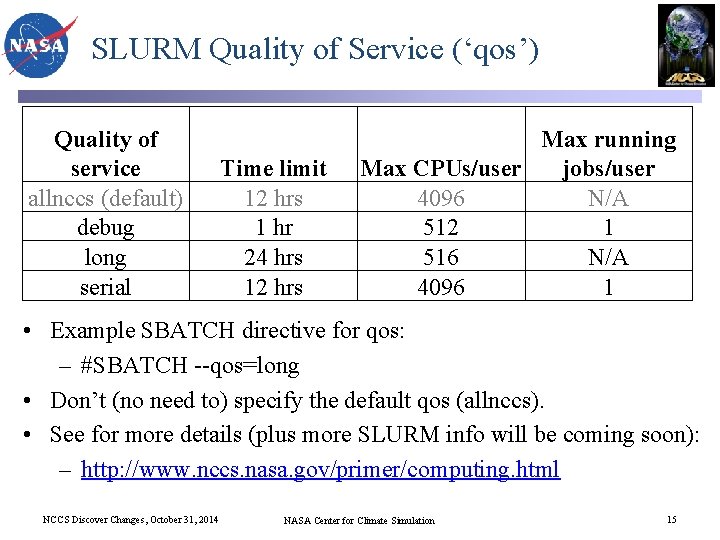

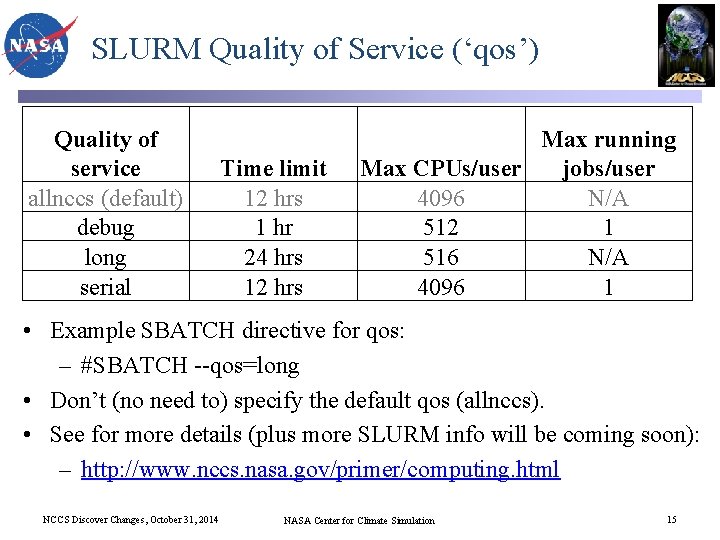

SLURM Quality of Service (‘qos’)

| Quality of service | Time limit | Max CPUs/user | Max running jobs/user |
| --- | --- | --- | --- |
| allnccs (default) | 12 hrs | 4096 | N/A |
| debug | 1 hr | 512 | 1 |
| long | 24 hrs | 516 | N/A |
| serial | 12 hrs | 4096 | 1 |

• Example SBATCH directive for qos:
  – #SBATCH --qos=long
• Don’t (no need to) specify the default qos (allnccs).
• For more details, see (plus more SLURM info will be coming soon):
  – http://www.nccs.nasa.gov/primer/computing.html
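The QoS limits listed above lend themselves to a small lookup. The sketch below is illustrative only: the pairing of values to rows is a reading of the flattened slide table and should be confirmed against current NCCS documentation, and `fits` and `qos_directive` are hypothetical helper names.

```python
from typing import Optional

# QoS limits as read from the slide: name -> (time_limit_hours, max_cpus_per_user).
# Assumption: values are paired row by row as they appear in the slide text.
QOS_LIMITS = {
    "allnccs": (12, 4096),  # default QoS
    "debug": (1, 512),
    "long": (24, 516),
    "serial": (12, 4096),
}
DEFAULT_QOS = "allnccs"


def fits(qos: str, hours: float, cpus: int) -> bool:
    """Check a request against the time and CPU limits recorded for a QoS."""
    max_hours, max_cpus = QOS_LIMITS[qos]
    return hours <= max_hours and cpus <= max_cpus


def qos_directive(qos: str) -> Optional[str]:
    """Return the #SBATCH line for a QoS, or None for the default (no line needed)."""
    if qos not in QOS_LIMITS:
        raise KeyError(f"unknown qos: {qos}")
    return None if qos == DEFAULT_QOS else f"#SBATCH --qos={qos}"


# An 18-hour, 256-CPU job exceeds the default time limit but fits within 'long'.
print(fits("allnccs", hours=18, cpus=256))
print(fits("long", hours=18, cpus=256))
print(qos_directive("long"))
```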

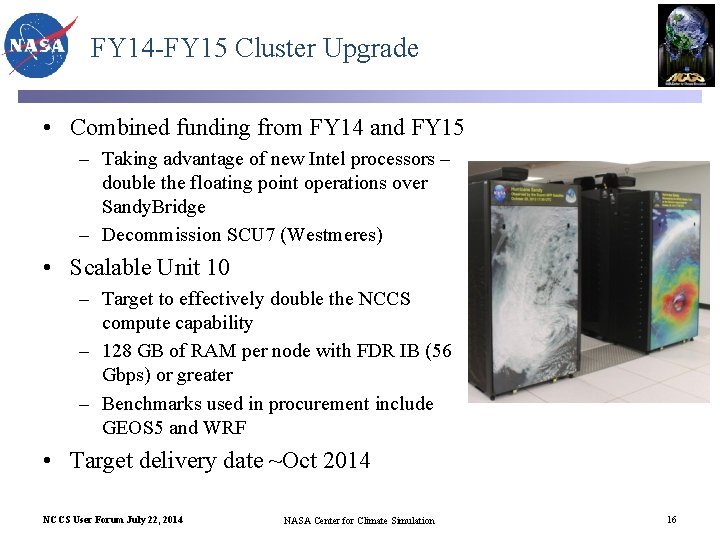

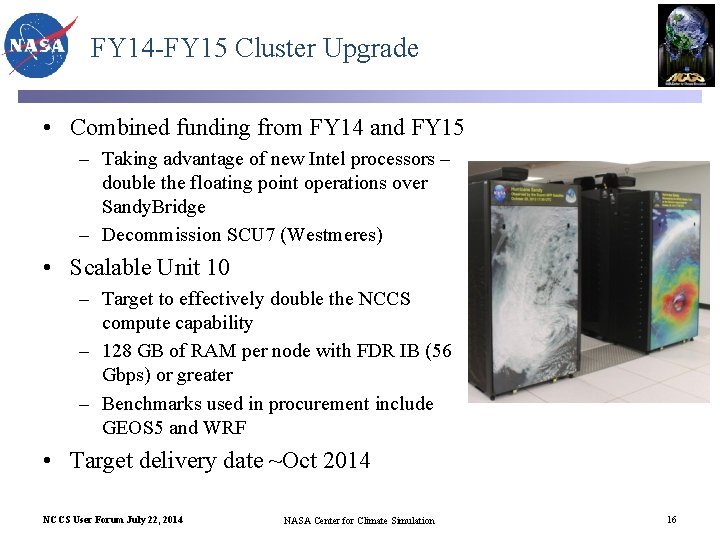

FY14-FY15 Cluster Upgrade
• Combined funding from FY14 and FY15
  – Taking advantage of new Intel processors: double the floating point operations over Sandy Bridge
  – Decommission SCU 7 (Westmeres)
• Scalable Unit 10
  – Target to effectively double the NCCS compute capability
  – 128 GB of RAM per node with FDR IB (56 Gbps) or greater
  – Benchmarks used in procurement include GEOS-5 and WRF
• Target delivery date ~Oct 2014

NCCS User Forum July 22, 2014 NASA Center for Climate Simulation
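The “double the floating point operations over Sandy Bridge” statement can be compared with the per-unit peak figures quoted elsewhere in this briefing. The sketch below divides each unit's peak TFLOPS by its core count; the dictionary `UNITS` and the function `gflops_per_core` are illustrative names, and the input numbers are the node, core, and peak values listed on the Discover inventory slides.

```python
# (nodes, cores_per_node, peak_tflops) from the Discover inventory slides.
UNITS = {
    "Westmere (SCU 7)": (1200, 12, 161),
    "Sandy Bridge (SCU 8)": (480, 16, 160),
    "Haswell (SCU 10)": (1080, 28, 1229),
}


def gflops_per_core(nodes: int, cores_per_node: int, peak_tflops: float) -> float:
    """Peak GFLOPS per core: unit peak (converted from TFLOPS) over total cores."""
    return peak_tflops * 1000 / (nodes * cores_per_node)


per_core = {name: gflops_per_core(*spec) for name, spec in UNITS.items()}
for name, value in per_core.items():
    print(f"{name}: {value:.1f} GFLOPS per core")

ratio = per_core["Haswell (SCU 10)"] / per_core["Sandy Bridge (SCU 8)"]
print(f"Haswell / Sandy Bridge per-core ratio: {ratio:.2f}")
```

With these inputs the per-core ratio comes out just under 2, in line with the slide's claim; per-node throughput rises further because Haswell nodes carry 28 cores instead of 16.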

Letter to NCCS Users

The NCCS is committed to providing the best possible high performance solutions to meet the NASA science requirements. To this end, the NCCS is undergoing major integration efforts over the next several months to dramatically increase both the overall compute and storage capacity within the Discover cluster. The end result will increase the total number of processors by over 20,000 cores while increasing the peak computing capacity by almost a factor of 3x!

Given the budgetary and facility constraints, the NCCS will be removing parts of Discover to make room for the upgrades. The charts shown on this web page (PUT URL FOR INTEGRATION SCHEDULE HERE) show the current time schedules and the impacts for changes to the cluster environment. The decommissioning of Scalable Compute Unit 7 (SCU 7) has already begun and will be complete by early November. After the availability of the new Scalable Compute Unit 10 (SCU 10), the removal of Scalable Compute Units 1 through 4 will occur later this year. The end result will be the removal of all Intel Westmere processors from the Discover environment by the end of the 2014 calendar year.

While we are taking resources out of the environment, users may run into longer wait times as the new systems are integrated into operations. In order to alleviate this potential issue, the NCCS has coordinated with projects that are currently using dedicated systems in order to free up resources for general processing. Given the current workload, we are confident that curtailing the dedication of resources for specialized projects will keep the wait times at their current levels.

The NCCS will be communicating frequently with our user community throughout the integration efforts. Email will be sent out with information about the systems that are being taken off line and added. This web page, while subject to change, will be updated frequently, and as always, users are welcome to contact the support desk (support@nccs.nasa.gov) with any questions.

There is never a good time to remove computational capabilities, but the end result will be a major boost to the overall science community. Throughout this process, we are committed to doing everything possible to work with you to get your science done. We are asking for your patience as we work through these changes to the environment, and we are excited about the future science that will be accomplished using the NCCS resources!

Sincerely,
The NCCS Leadership Team

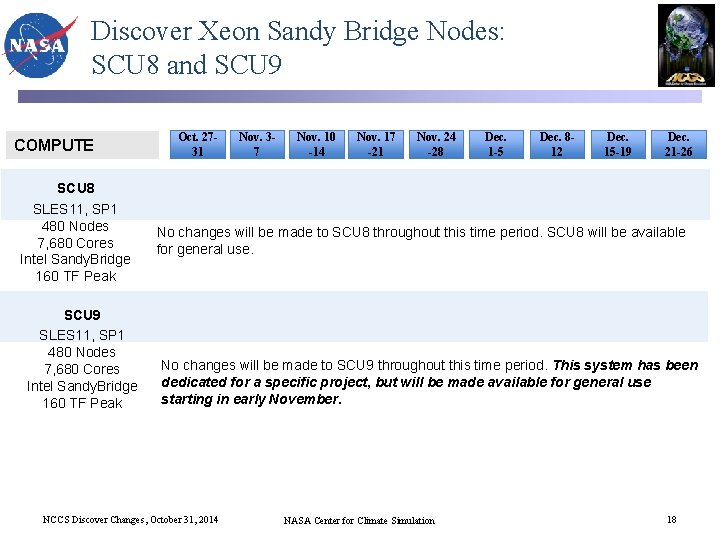

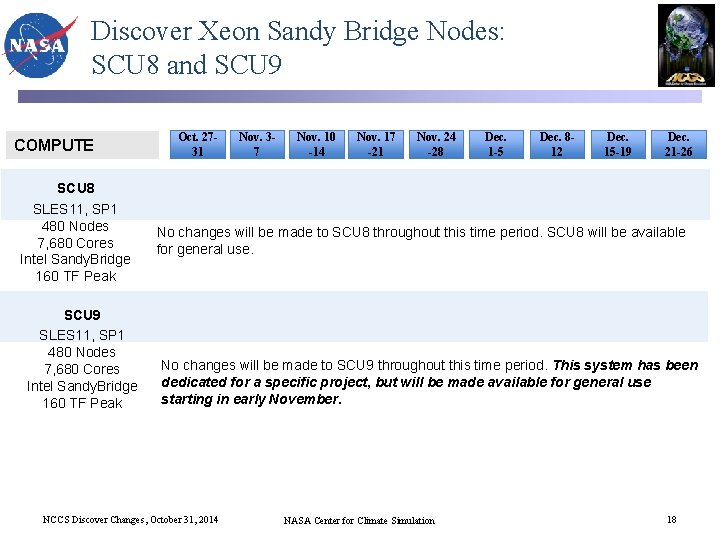

Discover Xeon Sandy Bridge Nodes: SCU 8 and SCU 9 (COMPUTE)
Schedule weeks: Oct. 27-31, Nov. 3-7, Nov. 10-14, Nov. 17-21, Nov. 24-28, Dec. 1-5, Dec. 8-12, Dec. 15-19, Dec. 21-26
• SCU 8: SLES 11 SP1, 480 nodes, 7,680 cores, Intel Sandy Bridge, 160 TF peak
  – No changes will be made to SCU 8 throughout this time period. SCU 8 will be available for general use.
• SCU 9: SLES 11 SP1, 480 nodes, 7,680 cores, Intel Sandy Bridge, 160 TF peak
  – No changes will be made to SCU 9 throughout this time period. This system has been dedicated for a specific project, but will be made available for general use starting in early November.

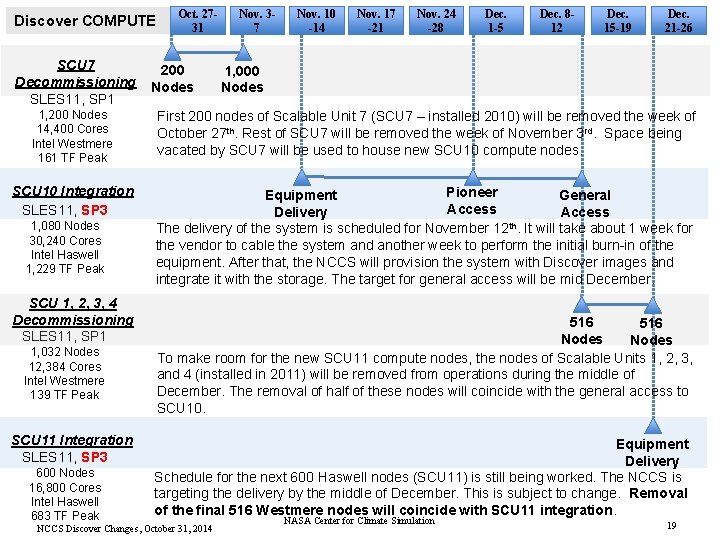

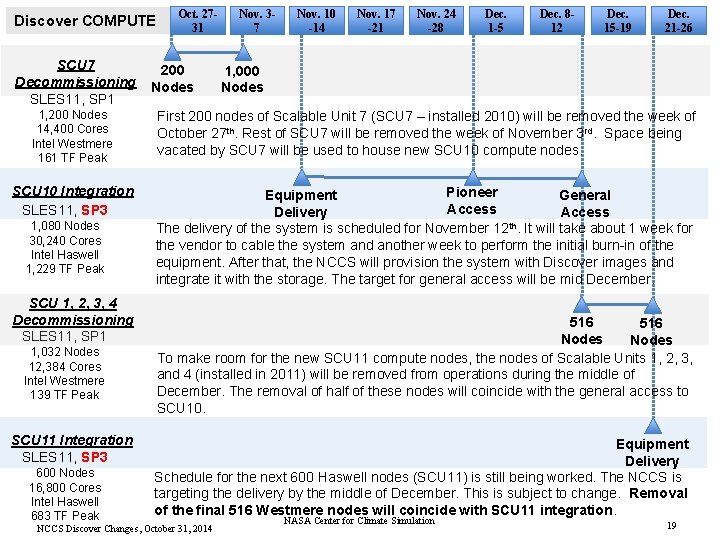

Discover COMPUTE
Schedule weeks: Oct. 27-31, Nov. 3-7, Nov. 10-14, Nov. 17-21, Nov. 24-28, Dec. 1-5, Dec. 8-12, Dec. 15-19, Dec. 21-26
• SCU 7 Decommissioning: SLES 11 SP1, 1,200 nodes, 14,400 cores, Intel Westmere, 161 TF peak
  – Chart phases: 200 nodes, then 1,000 nodes
  – First 200 nodes of Scalable Unit 7 (SCU 7 – installed 2010) will be removed the week of October 27th. Rest of SCU 7 will be removed the week of November 3rd. Space being vacated by SCU 7 will be used to house new SCU 10 compute nodes.
• SCU 10 Integration: SLES 11 SP3, 1,080 nodes, 30,240 cores, Intel Haswell, 1,229 TF peak
  – Chart phases: Equipment Delivery, Pioneer Access, General Access
  – The delivery of the system is scheduled for November 12th. It will take about 1 week for the vendor to cable the system and another week to perform the initial burn-in of the equipment. After that, the NCCS will provision the system with Discover images and integrate it with the storage. The target for general access will be mid December.
• SCU 1, 2, 3, 4 Decommissioning: SLES 11 SP1, 1,032 nodes, 12,384 cores, Intel Westmere, 139 TF peak
  – Chart phase: 516 nodes
  – To make room for the new SCU 11 compute nodes, the nodes of Scalable Units 1, 2, 3, and 4 (installed in 2011) will be removed from operations during the middle of December. The removal of half of these nodes will coincide with the general access to SCU 10.
• SCU 11 Integration: SLES 11 SP3, 600 nodes, 16,800 cores, Intel Haswell, 683 TF peak
  – Chart phase: Equipment Delivery
  – Schedule for the next 600 Haswell nodes (SCU 11) is still being worked. The NCCS is targeting the delivery by the middle of December. This is subject to change. Removal of the final 516 Westmere nodes will coincide with SCU 11 integration.

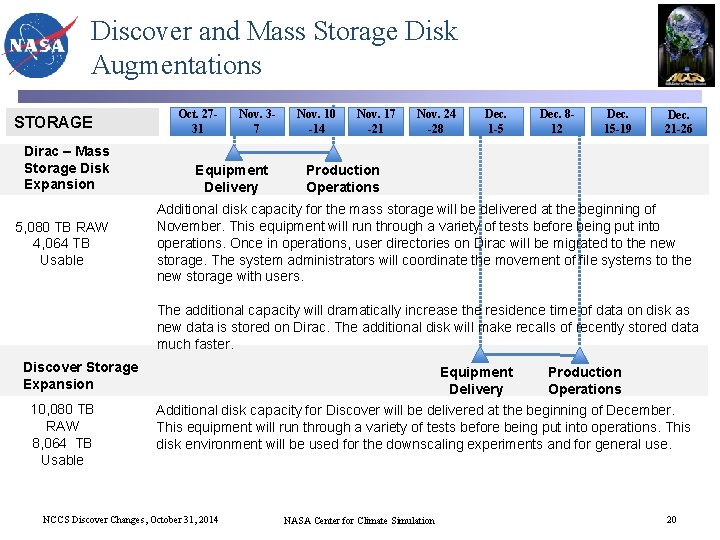

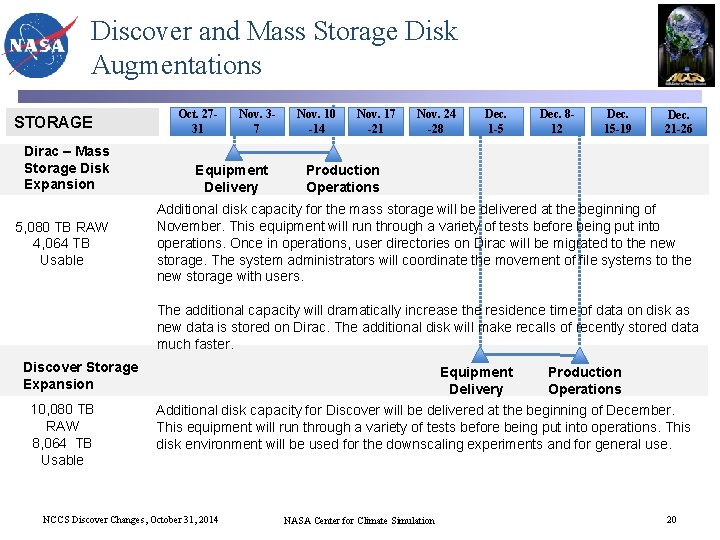

Discover and Mass Storage Disk Augmentations (STORAGE)
Schedule weeks: Oct. 27-31, Nov. 3-7, Nov. 10-14, Nov. 17-21, Nov. 24-28, Dec. 1-5, Dec. 8-12, Dec. 15-19, Dec. 21-26
• Dirac – Mass Storage Disk Expansion: 5,080 TB raw, 4,064 TB usable
  – Chart phases: Equipment Delivery, Production Operations
  – Additional disk capacity for the mass storage will be delivered at the beginning of November. This equipment will run through a variety of tests before being put into operations. Once in operations, user directories on Dirac will be migrated to the new storage. The system administrators will coordinate the movement of file systems to the new storage with users. The additional capacity will dramatically increase the residence time of data on disk as new data is stored on Dirac. The additional disk will make recalls of recently stored data much faster.
• Discover Storage Expansion: 10,080 TB raw, 8,064 TB usable
  – Chart phases: Equipment Delivery, Production Operations
  – Additional disk capacity for Discover will be delivered at the beginning of December. This equipment will run through a variety of tests before being put into operations. This disk environment will be used for the downscaling experiments and for general use.

Total Number of Discover Intel Xeon Processor Cores as a Function of Time

Total Discover Peak Computing Capability as a Function of Time (Intel Xeon Processors Only)