MIMD Computers: Based on Textbook, Chapter 4


PMS Notation (pg. 59) (Bell & Newell, 1987)
• Similar to a block notation, except using single letters
• Can augment a letter with ( ) containing attributes

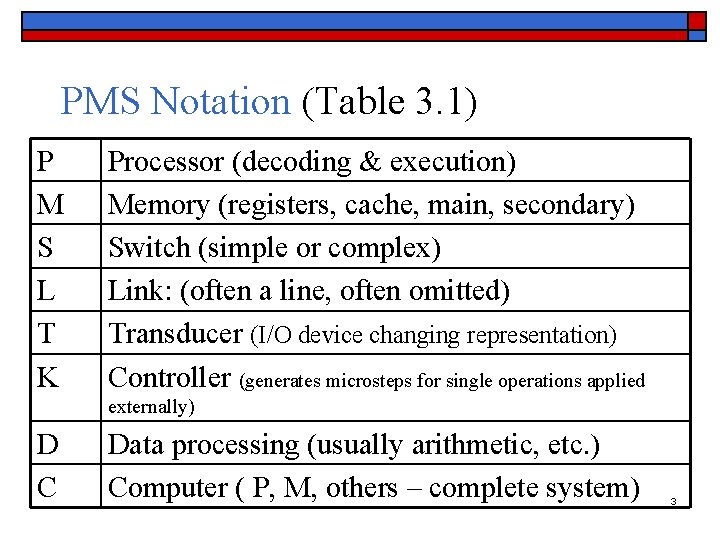

PMS Notation (Table 3.1)
• P: Processor (decoding & execution)
• M: Memory (registers, cache, main, secondary)
• S: Switch (simple or complex)
• L: Link (often a line, often omitted)
• T: Transducer (I/O device changing representation)
• K: Controller (generates microsteps for single operations applied externally)
• D: Data processing (usually arithmetic, etc.)
• C: Computer (P, M, others: a complete system)

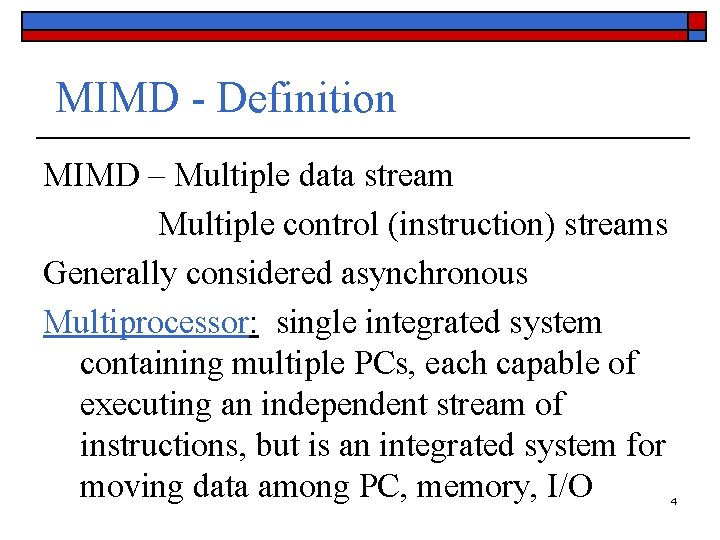

MIMD - Definition
• MIMD: multiple data streams, multiple control (instruction) streams
• Generally considered asynchronous
• Multiprocessor: a single integrated system containing multiple PCs, each capable of executing an independent stream of instructions, with an integrated system for moving data among PCs, memory, and I/O

MIMD – General Usage
• Can use each PC for a different job (multiprogramming)
• Our interest: use all PCs for one job

Granularity/Coupling
• Coarse Grain/Loosely Coupled: infrequent data communication separated by long periods of independent computation
• Fine Grain/Tightly Coupled: frequent data communication, usually in small amounts
• Grain: determined by program subroutines, basic blocks, stream or machine level

Types of MIMD
Characterized by how data/information from one PC is made available to other PCs
• Shared memory
• Message passing
  • Fixed connection
  • Distributed memory

Switches
• In distributed memory: the switch is the interconnection network
• Features:
  • Bandwidth: bytes per second
  • Bisection bandwidth
  • Latency: total time from transmission to reception
  • Concurrency: number of independent connections that can be made

Hybrid Computers – Mixed Type
• Saw many in the class presentations
• Many shared memory PCs have some local memory
• NUMA (non-uniform memory access): some memory locations have larger access times
• Clusters: a group of shared memory PCs plus memory, “separated” from other clusters; message passing is used between clusters

Shared Memory Features
• Interprocessor communication via R/W instructions
• Memory: may be physically distributed (banks), may have different access times, requests may collide in the switch
• Memory latency may be “long” and variable
• “Messages” through the switch are generally one “word”
• Randomization of requests may be used to reduce memory collisions
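A minimal sketch of the shared-memory style described above. It assumes Python threads stand in for the PCs and a one-element list stands in for a shared memory "word"; the names `run_shared_memory`, `shared`, and `worker` are hypothetical and chosen only for illustration. All communication happens through ordinary reads and writes of the shared location, and a lock serializes updates that would otherwise collide.

```python
import threading


def run_shared_memory(num_workers=4, items_per_worker=1000):
    """Each worker increments one shared word; returns the final value."""
    shared = [0]             # one shared "word" visible to every worker
    lock = threading.Lock()  # serializes colliding read-modify-write accesses

    def worker():
        for _ in range(items_per_worker):
            with lock:
                # Communication is just a read and a write of shared memory.
                shared[0] += 1

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared[0]
```

Every update touches the shared word, so the traffic is frequent and small, which matches the fine-grain pattern typical of this style.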

Message Passing Features
Aka Distributed Memory
• Interprocessor communication via send/receive instructions
• R/W instructions refer to local memory
• Data may be collected into long messages before sending
• Long transmissions may mask latency
• Global scheduling may be used to avoid message collisions
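A minimal sketch of the message-passing style described above. It assumes Python threads stand in for the PCs and a `queue.Queue` stands in for the interconnection network; the names `run_message_passing`, `inbox`, and `worker` are hypothetical. Each worker reads and writes only its own local variable, then sends one aggregated message instead of many small ones.

```python
import queue
import threading


def run_message_passing(num_workers=4, items_per_worker=1000):
    """Each worker counts locally, then sends a single message with its total."""
    inbox = queue.Queue()  # stands in for the network link to the collector

    def worker(worker_id):
        local_count = 0  # reads/writes touch local memory only
        for _ in range(items_per_worker):
            local_count += 1
        # "send": the data is collected into one message before sending
        inbox.put((worker_id, local_count))

    threads = [threading.Thread(target=worker, args=(i,))
               for i in range(num_workers)]
    for t in threads:
        t.start()

    total = 0
    for _ in range(num_workers):
        _sender, count = inbox.get()  # "receive": blocks until a message arrives
        total += count

    for t in threads:
        t.join()
    return total
```

Only `num_workers` messages cross the queue regardless of `items_per_worker`, which illustrates why collecting data into long messages suits this style.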

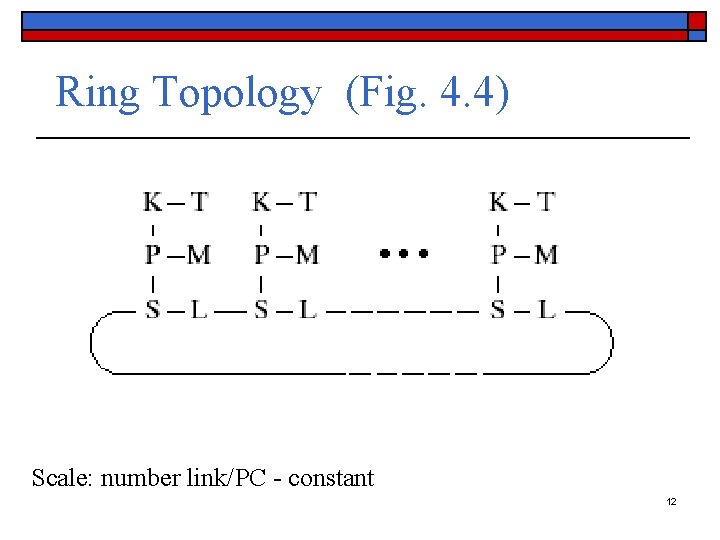

Ring Topology (Fig. 4.4)
Scale: number of links per PC is constant

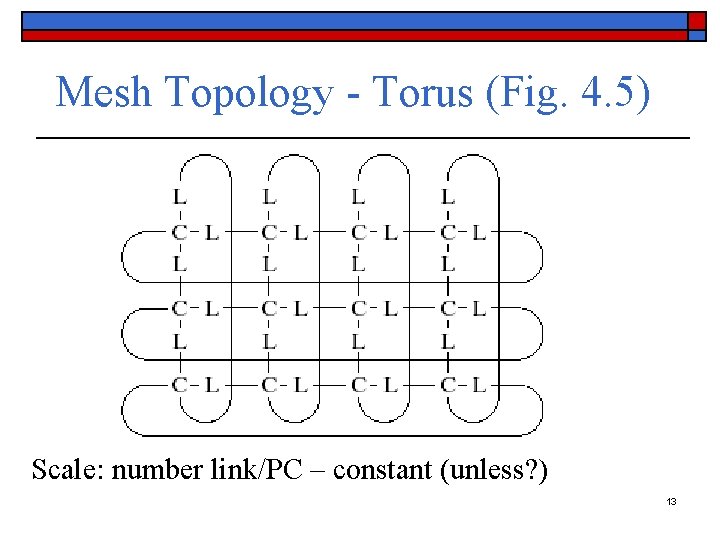

Mesh Topology - Torus (Fig. 4.5)
Scale: number of links per PC is constant (unless?)

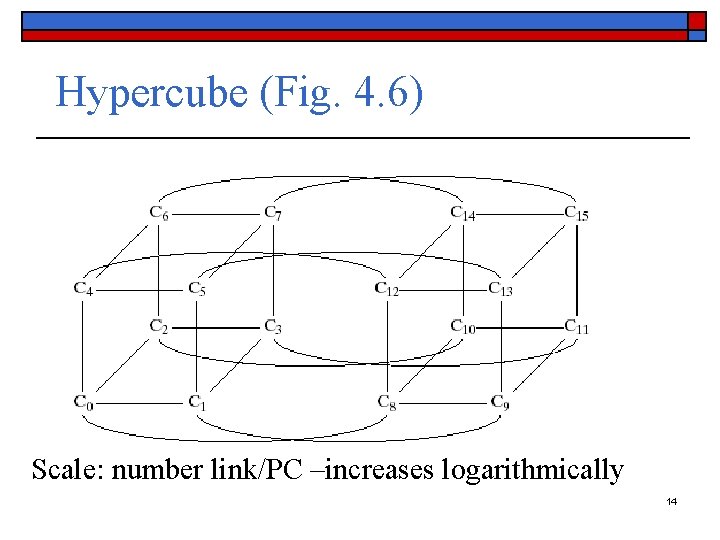

Hypercube (Fig. 4.6)
Scale: number of links per PC increases logarithmically with the number of PCs
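The scaling claims for the three topologies can be checked with a short sketch that lists each node's neighbors. The function names and the node-numbering schemes below are assumptions made for illustration (nodes numbered 0..n-1 on the ring, (row, col) pairs on a 2-D torus, and binary addresses on the hypercube); they are not taken from the textbook figures.

```python
def ring_neighbors(i, n):
    """Neighbors of node i on a ring of n nodes (two links per node for n >= 3)."""
    return sorted({(i - 1) % n, (i + 1) % n})


def torus_neighbors(row, col, rows, cols):
    """Neighbors of (row, col) on a 2-D torus, i.e. a mesh with wraparound links."""
    return sorted({
        ((row - 1) % rows, col),
        ((row + 1) % rows, col),
        (row, (col - 1) % cols),
        (row, (col + 1) % cols),
    })


def hypercube_neighbors(i, dim):
    """Neighbors of node i in a hypercube of 2**dim nodes: flip one address bit."""
    return [i ^ (1 << bit) for bit in range(dim)]
```

For example, `len(ring_neighbors(0, 8))` and `len(ring_neighbors(0, 1024))` are both 2, and a 2-D torus node always has 4 neighbors once each dimension has at least 3 nodes, while `len(hypercube_neighbors(0, dim))` equals `dim`, which is log2 of the number of nodes.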

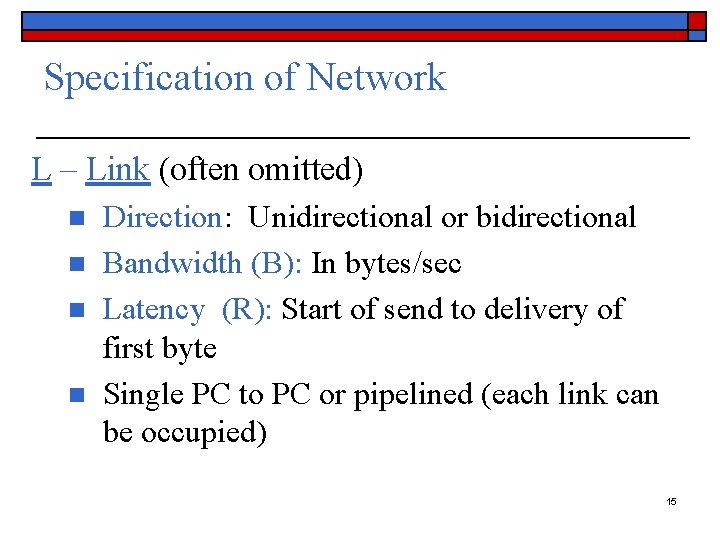

Specification of Network
• L: Link (often omitted)
  • Direction: unidirectional or bidirectional
  • Bandwidth (B): in bytes/sec
  • Latency (R): start of send to delivery of first byte
• Single PC to PC or pipelined (each link can be occupied)
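A rough way to see how B and R interact is the common first-order model in which sending n bytes over one link takes about R + n/B seconds. This model is an assumption added here for illustration, not a formula from the slides, and the function names are hypothetical.

```python
def transfer_time(num_bytes, bandwidth, latency):
    """Approximate seconds to deliver num_bytes over one link.

    bandwidth: B, in bytes/sec
    latency:   R, in seconds from start of send to delivery of the first byte
    Assumed model: time = R + num_bytes / B.
    """
    return latency + num_bytes / bandwidth


def effective_bandwidth(num_bytes, bandwidth, latency):
    """Bytes per second actually achieved once latency is included."""
    return num_bytes / transfer_time(num_bytes, bandwidth, latency)
```

With made-up values B = 1e6 bytes/sec and R = 1e-3 sec, a 1000-byte message achieves only about half of B because latency and transmission time are equal, while a 1,000,000-byte message achieves nearly all of it. Under this model, that is the sense in which long transmissions mask latency.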
