
Media Streaming Protocols

CSE 228: Multimedia Systems

May 29th, 2003

Presented by: Janice Ng and Yekaterina Tsipenyuk

Why the Traditional Protocols Like HTTP, TCP, and UDP Are Not Sufficient

- HTTP
  - Designers of HTTP had fixed media in mind: HTML, images, etc.
  - HTTP does not target stored continuous media (i.e., audio, video, etc.)
- TCP
  - Reliable, in-order delivery, but no enforcement of the timing constraints that continuous media needs; retransmissions can violate those constraints
  - Overhead of acknowledging packets, when all the application cares about is getting each packet on time
- UDP
  - Eliminates acknowledgement overhead
  - Very primitive: no flow control, no synchronization between streams, no higher-level functions for multimedia
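To make the TCP point concrete, here is a minimal sketch under a deliberately simplified model (the function name and all numbers are hypothetical): a lost packet is noticed only when the retransmission timer (RTO) fires, and the resent copy then needs one more one-way delay to reach the receiver. If that arrival falls after the packet's playout deadline, the retransmission is useless to the player.

```python
def retransmission_in_time(send_time_ms: float, one_way_delay_ms: float,
                           rto_ms: float, playout_deadline_ms: float) -> bool:
    """Return True if one retransmission of a lost packet can still meet its playout deadline.

    Simplified model (an assumption, not TCP's full behavior): loss is detected only
    when the retransmission timer expires, and the resent copy then needs one more
    one-way delay to reach the receiver.
    """
    arrival_ms = send_time_ms + rto_ms + one_way_delay_ms
    return arrival_ms <= playout_deadline_ms


# Hypothetical numbers: 50 ms one-way delay, 200 ms RTO.
# With a 150 ms playout buffer the resent copy (arriving at 250 ms) is too late;
# with a 400 ms buffer it would still be usable.
late = retransmission_in_time(0, 50, 200, 150)     # False
usable = retransmission_in_time(0, 50, 200, 400)   # True
```

The sketch ignores fast retransmit and congestion-window effects, which also change when data arrives; the point is only that each retransmission costs at least one timeout plus one more trip, which a tight playout buffer may not be able to absorb.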

Currently Used Media Streaming Protocols

Protocol stack, with the functions of each layer:

- RTSP (Real Time Streaming Protocol): application-level functions such as play, fast forward, and rewind
- RTP and RTCP (Real-time Transport Protocol and RTP Control Protocol): stream synchronization, timing, jitter control, and rate manipulation
- UDP or TCP: underlying transport protocol

Real Time Streaming Protocol (RTSP): RFC 2326

- Client-server, application-layer protocol
- Lets the user control playback: rewind, fast forward, pause, resume, repositioning, etc.
- Example: RealNetworks
  - Server and player use RTSP to send control information to each other
- What it doesn't do:
  - Does not define how audio/video is encapsulated for streaming over the network
  - Does not restrict how streamed media is transported; it can be carried over UDP or TCP
  - Does not specify how the media player buffers audio/video
  - Does not offer QoS guarantees; QoS is a topic in and of itself

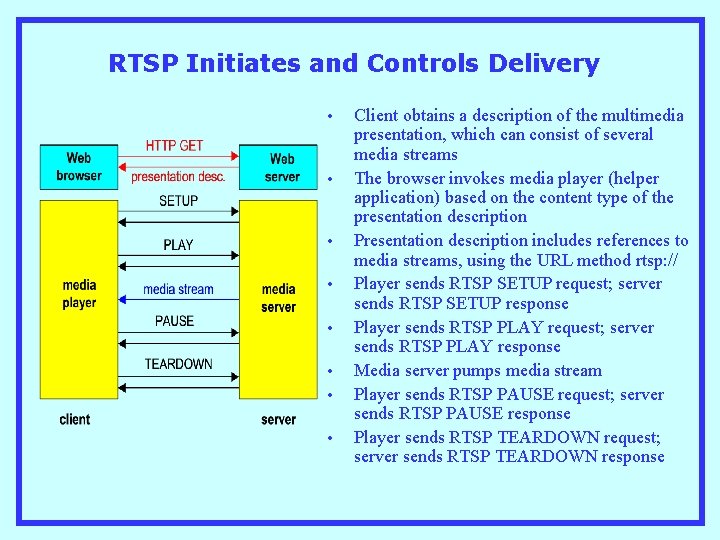

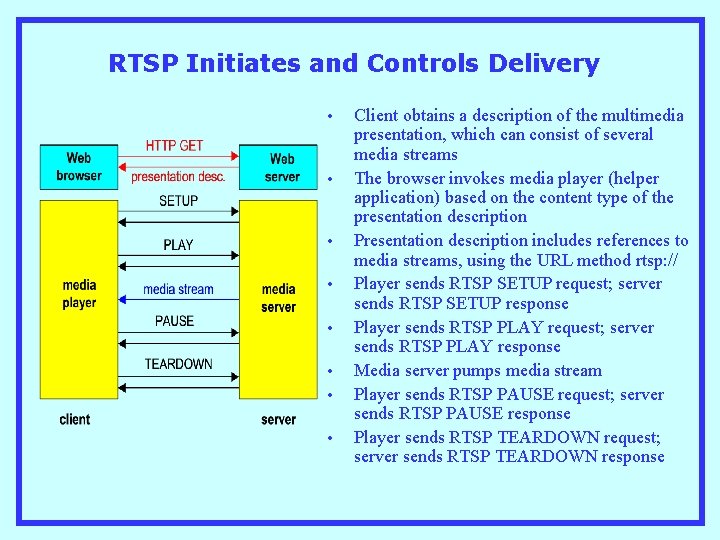

RTSP Initiates and Controls Delivery

- The client obtains a description of the multimedia presentation, which can consist of several media streams
- The browser invokes a media player (helper application) based on the content type of the presentation description
- The presentation description includes references to the media streams, using the `rtsp://` URL scheme
- Player sends an RTSP SETUP request; server sends an RTSP SETUP response
- Player sends an RTSP PLAY request; server sends an RTSP PLAY response
- Media server pumps the media stream
- Player sends an RTSP PAUSE request; server sends an RTSP PAUSE response
- Player sends an RTSP TEARDOWN request; server sends an RTSP TEARDOWN response
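The control exchange above can be sketched as the text messages the player sends. RTSP requests reuse HTTP-style syntax: a request line, then headers such as `CSeq`, `Transport`, and `Session` (all defined in RFC 2326). This is a minimal illustration, not a complete client: the URL, client ports, and session identifier are hypothetical, and in practice the session identifier comes from the server's SETUP response rather than being chosen by the player.

```python
from typing import Dict, List, Optional


def rtsp_request(method: str, url: str, cseq: int,
                 headers: Optional[Dict[str, str]] = None) -> str:
    """Build one RTSP/1.0 request message (request line, headers, blank line)."""
    lines = [f"{method} {url} RTSP/1.0", f"CSeq: {cseq}"]
    for name, value in (headers or {}).items():
        lines.append(f"{name}: {value}")
    # As in HTTP, the header block is terminated by an empty line (CRLF CRLF).
    return "\r\n".join(lines) + "\r\n\r\n"


def session_requests(url: str, session_id: str) -> List[str]:
    """Requests a player sends over the RTSP control connection for one session.

    session_id stands in for the value returned in the server's SETUP response.
    """
    return [
        # SETUP: ask for RTP over UDP, RTP on client port 8000 and RTCP on 8001.
        rtsp_request("SETUP", url, 1,
                     {"Transport": "RTP/AVP;unicast;client_port=8000-8001"}),
        # PLAY from the beginning (normal play time 0 onward).
        rtsp_request("PLAY", url, 2, {"Session": session_id, "Range": "npt=0-"}),
        rtsp_request("PAUSE", url, 3, {"Session": session_id}),
        rtsp_request("TEARDOWN", url, 4, {"Session": session_id}),
    ]


for request in session_requests("rtsp://media.example.com/lecture", "12345678"):
    print(request.splitlines()[0])
```

`CSeq` increases with every request so the player can match each response to its request. The messages travel over the control connection (TCP port 554 by default), while the media itself flows separately over RTP.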

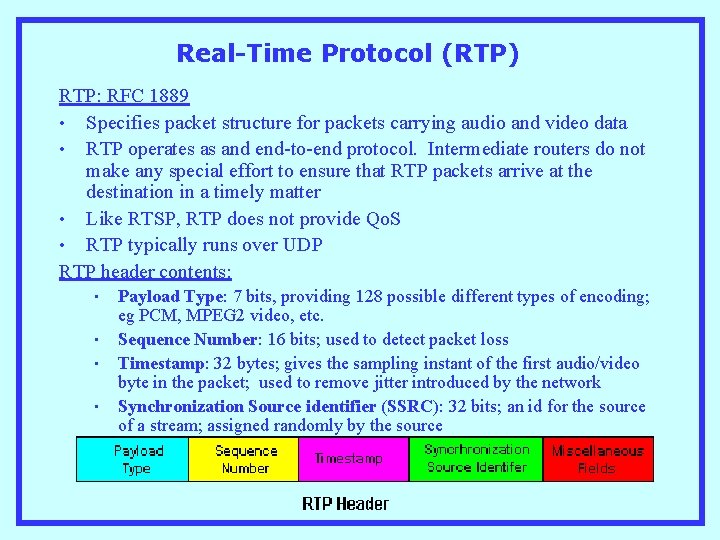

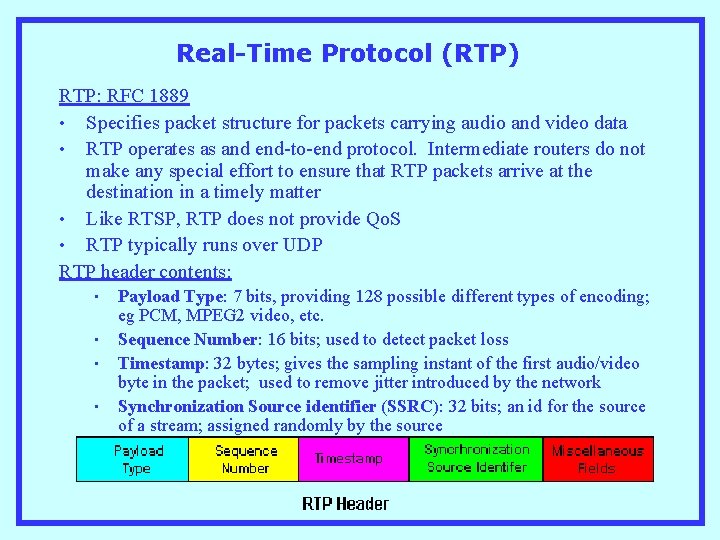

Real-time Transport Protocol (RTP): RFC 1889

- Specifies the packet structure for packets carrying audio and video data
- RTP operates as an end-to-end protocol: intermediate routers make no special effort to ensure that RTP packets arrive at the destination in a timely manner
- Like RTSP, RTP does not provide QoS
- RTP typically runs over UDP

RTP header contents:

- Payload Type: 7 bits, allowing 128 different encoding types, e.g., PCM, MPEG-2 video, etc.
- Sequence Number: 16 bits; used to detect packet loss
- Timestamp: 32 bits; gives the sampling instant of the first audio/video byte in the packet; used to remove jitter introduced by the network
- Synchronization Source identifier (SSRC): 32 bits; identifies the source of a stream; assigned randomly by the source
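The header fields listed above can be packed and parsed with Python's standard `struct` module. This sketch covers only the 12-byte fixed header (version 2, no padding, no extension, no contributing-source list), which also contains the version, marker, and CSRC-count bits not listed on the slide; the helper names are hypothetical. It also shows how the 16-bit sequence number exposes loss, including across wraparound, assuming packets arrive in order.

```python
import struct

RTP_VERSION = 2
HEADER_FMT = "!BBHII"  # network byte order: 1 + 1 + 2 + 4 + 4 = 12 bytes


def pack_rtp_header(payload_type: int, seq: int, timestamp: int, ssrc: int,
                    marker: bool = False) -> bytes:
    """Pack the fixed RTP header (V=2, P=0, X=0, CC=0)."""
    byte0 = RTP_VERSION << 6                               # version in the top 2 bits
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)     # marker bit + 7-bit payload type
    return struct.pack(HEADER_FMT, byte0, byte1, seq & 0xFFFF,
                       timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)


def parse_rtp_header(data: bytes) -> dict:
    """Unpack the first 12 bytes of an RTP packet into its header fields."""
    byte0, byte1, seq, timestamp, ssrc = struct.unpack(HEADER_FMT, data[:12])
    return {
        "version": byte0 >> 6,
        "csrc_count": byte0 & 0x0F,
        "marker": bool(byte1 >> 7),
        "payload_type": byte1 & 0x7F,
        "seq": seq,              # 16 bits
        "timestamp": timestamp,  # 32 bits
        "ssrc": ssrc,            # 32 bits
    }


def lost_between(prev_seq: int, seq: int) -> int:
    """Packets missing between two consecutively received sequence numbers.

    Uses 16-bit modular arithmetic so 65535 -> 0 counts as no loss;
    assumes in-order arrival (reordered packets would look like huge gaps).
    """
    return (seq - prev_seq - 1) & 0xFFFF


header = pack_rtp_header(payload_type=0, seq=65535, timestamp=160,
                         ssrc=0x1234ABCD, marker=True)
fields = parse_rtp_header(header)
```

Payload type 0 is PCMU (G.711 µ-law audio) in the standard audio/video profile. A receiver that sees sequence number 65535 followed by 0 has lost nothing, while 10 followed by 13 means two packets are missing.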

RTP Streams

- RTP allows each source (for example, a camera or a microphone) to be assigned its own independent RTP stream of packets
  - For example, for a videoconference between two participants, four RTP streams could be opened: two streams for transmitting the audio (one in each direction) and two streams for the video (again, one in each direction)
- However, some popular encoding techniques (including MPEG-1 and MPEG-2) bundle the audio and video into a single stream during the encoding process; in this case, only one RTP stream is generated in each direction
- For a many-to-many multicast session, all of the senders and sources typically send their RTP streams into the same multicast tree with the same multicast address
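Because every RTP packet carries its source's SSRC, a receiver in a multicast session can separate the interleaved packets arriving on one address back into per-source streams. A minimal sketch, assuming packets have already been parsed into a simple hypothetical `RtpPacket` record:

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple


class RtpPacket(NamedTuple):
    """Hypothetical parsed packet: only the fields needed for demultiplexing."""
    ssrc: int
    seq: int
    payload: bytes


def demux_by_ssrc(packets: Iterable[RtpPacket]) -> Dict[int, List[RtpPacket]]:
    """Group packets by SSRC; each group is one independent RTP stream."""
    streams: Dict[int, List[RtpPacket]] = defaultdict(list)
    for pkt in packets:
        streams[pkt.ssrc].append(pkt)
    # Restore per-stream order by sequence number (ignores 16-bit wraparound for brevity).
    return {ssrc: sorted(pkts, key=lambda p: p.seq) for ssrc, pkts in streams.items()}


# One participant's audio (SSRC 0xA1) and video (SSRC 0xB2) interleaved on the same address.
arrivals = [
    RtpPacket(0xA1, 7, b"a7"), RtpPacket(0xB2, 3, b"v3"),
    RtpPacket(0xA1, 6, b"a6"), RtpPacket(0xB2, 4, b"v4"),
]
streams = demux_by_ssrc(arrivals)
```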

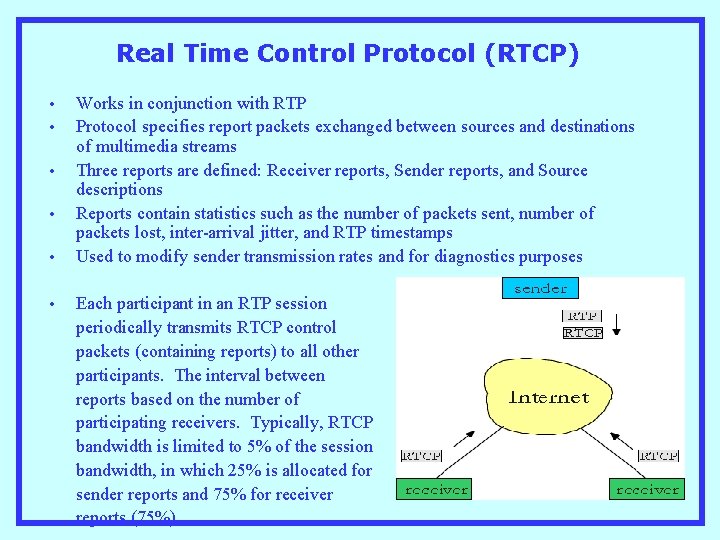

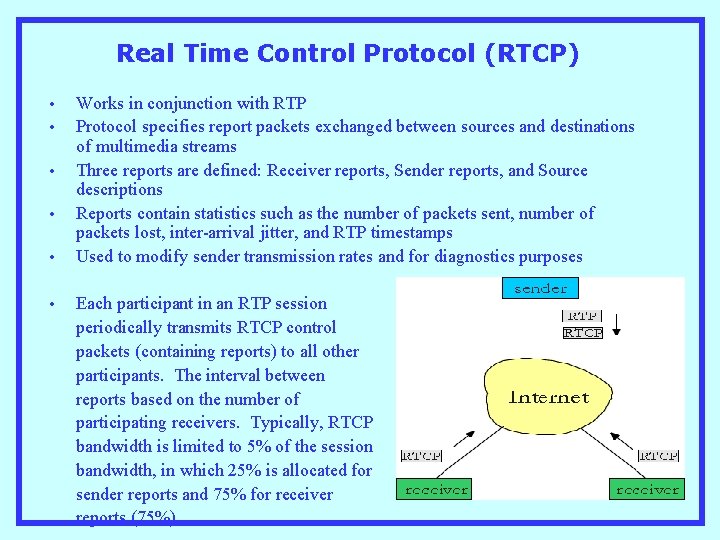

RTP Control Protocol (RTCP)

- Works in conjunction with RTP
- Specifies report packets exchanged between sources and destinations of multimedia streams
  - Three report types are defined: receiver reports, sender reports, and source descriptions
  - Reports contain statistics such as the number of packets sent, the number of packets lost, inter-arrival jitter, and RTP timestamps
  - Used to modify sender transmission rates and for diagnostic purposes
- Each participant in an RTP session periodically transmits RTCP control packets (containing reports) to all other participants
  - The interval between reports is based on the number of participating receivers
  - Typically, RTCP bandwidth is limited to 5% of the session bandwidth, of which 25% is allocated to sender reports and 75% to receiver reports
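Two of the quantities above can be sketched directly. The inter-arrival jitter estimator follows the running-average formula from the RTP specification, J += (|D| - J)/16, where D compares the spacing of arrival times with the spacing of RTP timestamps (both in timestamp units). The report-interval function is a simplified, deterministic version of the interval computation in RFC 3550 (the successor to RFC 1889): it applies the 5% RTCP share, the 25%/75% sender/receiver split, and the 5-second minimum, but omits the randomization and the halved initial interval that the RFC adds. Function and parameter names are hypothetical.

```python
RTCP_FRACTION = 0.05   # RTCP traffic limited to 5% of the session bandwidth
SENDER_SHARE = 0.25    # of which 25% goes to senders, 75% to receivers
T_MIN_SECONDS = 5.0    # minimum interval between reports


def update_jitter(jitter: float, prev_arrival: int, prev_ts: int,
                  arrival: int, ts: int) -> float:
    """One step of the RTP inter-arrival jitter estimate (all values in timestamp units)."""
    d = (arrival - prev_arrival) - (ts - prev_ts)   # transit-time difference D
    return jitter + (abs(d) - jitter) / 16


def rtcp_interval(session_bw_bps: float, members: int, senders: int,
                  we_sent: bool, avg_rtcp_size_bytes: float) -> float:
    """Deterministic RTCP report interval in seconds (no randomization)."""
    rtcp_bw = RTCP_FRACTION * session_bw_bps / 8   # RTCP bandwidth in bytes/s
    n = members
    if senders <= SENDER_SHARE * members:
        # Few senders: split the RTCP bandwidth 25% senders / 75% receivers.
        if we_sent:
            rtcp_bw *= SENDER_SHARE
            n = senders
        else:
            rtcp_bw *= 1 - SENDER_SHARE
            n = members - senders
    # The n participants sharing this bandwidth each send one report per interval.
    interval = n * avg_rtcp_size_bytes / rtcp_bw
    return max(interval, T_MIN_SECONDS)


# 64 kbit/s session, 50 participants, 1 sender, 100-byte average RTCP packet.
receiver_interval = rtcp_interval(64_000, 50, 1, False, 100)  # about 16.3 s
sender_interval = rtcp_interval(64_000, 50, 1, True, 100)     # 1 s, raised to the 5 s minimum
```

The example shows why the interval grows with the number of receivers: 49 receivers share 75% of a 400 byte/s RTCP budget, so each can report only about every 16 seconds, and the total RTCP load stays at 5% of the session bandwidth regardless of group size.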

Synchronization of Streams

- RTCP is used to synchronize different media streams within an RTP session
- Consider a videoconferencing application in which each sender generates one RTP stream for video and one for audio
- The timestamps in these RTP packets are tied to the video and audio sampling clocks, not to the wall-clock time (i.e., to real time)
- Each RTCP sender report contains, for the most recently generated packet in the associated RTP stream, the timestamp of the RTP packet and the wall-clock time at which the packet was created, thus associating the sampling clock with the real-time clock
- Receivers can use this association to synchronize the playback of audio and video
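The mapping carried in a sender report can be sketched as follows: a packet's wall-clock time is the report's wall-clock time plus the RTP-timestamp difference divided by the media clock rate, with the 32-bit difference interpreted as signed so that timestamp wraparound is handled. This is a simplified sketch: wall-clock times are plain seconds rather than the 64-bit NTP format used on the wire, and the function name and sample values are hypothetical (8 kHz is a common audio clock rate and 90 kHz the usual video clock rate).

```python
def rtp_to_wallclock(rtp_ts: int, sr_rtp_ts: int, sr_wallclock: float,
                     clock_rate: int) -> float:
    """Map an RTP timestamp to wall-clock seconds using the latest sender report.

    sr_rtp_ts / sr_wallclock: the (RTP timestamp, wall-clock time) pair from the
    sender report; clock_rate: ticks per second of this stream's sampling clock.
    """
    diff = (rtp_ts - sr_rtp_ts) & 0xFFFFFFFF   # 32-bit modular difference
    if diff >= 1 << 31:                          # treat as negative: packet precedes the report
        diff -= 1 << 32
    return sr_wallclock + diff / clock_rate


# Audio (8 kHz clock) and video (90 kHz clock) from the same sender, each with its own report.
audio_time = rtp_to_wallclock(24_000, 16_000, 100.0, 8_000)     # 101.0 s
video_time = rtp_to_wallclock(945_000, 900_000, 100.5, 90_000)  # 101.0 s
```

Both packets map to the same wall-clock instant, so the receiver should play them together even though their raw timestamps are unrelated.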

Recent Research Topics

- Protocols for distributed video streaming
  - Packet-switched, best-effort networks
  - Multiple senders, single receiver
  - Forward Error Correction (FEC) to recover from packet loss
- Protocols for adaptive streaming of multimedia data
  - Quality adaptation for Internet video streaming
  - Sender-, receiver-, or proxy-initiated adaptation
  - Objectives: network heterogeneity, heterogeneity in clients' capabilities, fluctuations in bandwidth
- Protocols for scalable on-demand media streaming with packet loss recovery
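The FEC idea can be illustrated with the simplest possible code: a single XOR parity packet per group of media packets, which lets the receiver rebuild any one lost packet in the group without a retransmission. This is a generic illustration, not the scheme used in the research protocols listed above; it assumes equal-length packets and uses hypothetical helper names.

```python
from functools import reduce
from typing import List, Optional, Tuple


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length packets."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets: List[bytes]) -> bytes:
    """XOR of all packets in the group (all packets assumed equal length)."""
    if len({len(p) for p in packets}) != 1:
        raise ValueError("this sketch requires equal-length packets")
    return reduce(xor_bytes, packets)


def recover(received: List[Optional[bytes]], parity: bytes) -> Tuple[int, bytes]:
    """Rebuild the single missing packet (marked None) from the others and the parity."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) != 1:
        raise ValueError("XOR parity can repair exactly one loss per group")
    present = [p for p in received if p is not None]
    # parity XOR (all surviving packets) leaves exactly the lost packet.
    return missing[0], reduce(xor_bytes, present, parity)


group = [b"abcd", b"efgh", b"ijkl"]
parity = make_parity(group)
index, rebuilt = recover([b"abcd", None, b"ijkl"], parity)   # (1, b"efgh")
```

The cost is one extra packet per group (33% overhead for groups of three), and two losses in the same group cannot be repaired; stronger codes trade more redundancy for tolerance of more losses.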

Prefetching Protocol for Streaming of Prerecorded Continuous Media in Wireless Environments

- Problems: continuity is difficult to achieve; wireless links are unreliable
- Properties of prerecorded continuous media:
  - The client's consumption rate over the duration of playback is known when streaming starts
  - Parts of the stream can be prefetched into the client's memory
- Solutions:
  - Use a Join-the-Shortest-Queue (JSQ) policy to balance the prefetched reserves of wireless clients within a wireless cell
  - Use channel probing to utilize the transmission capacities of wireless links

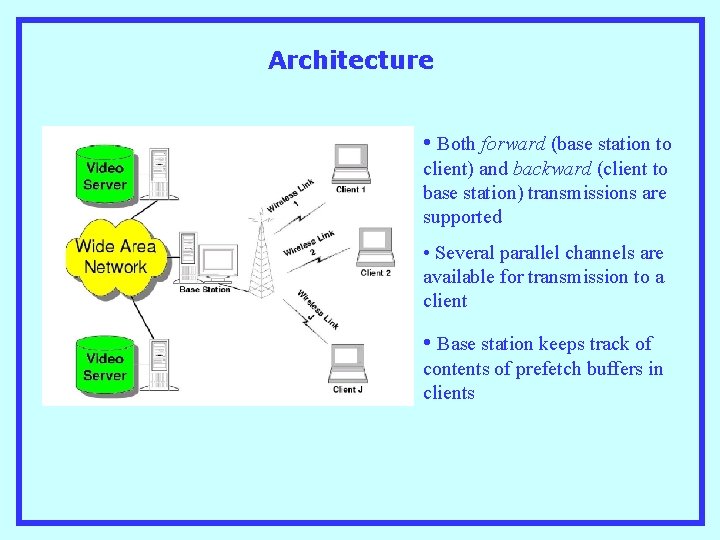

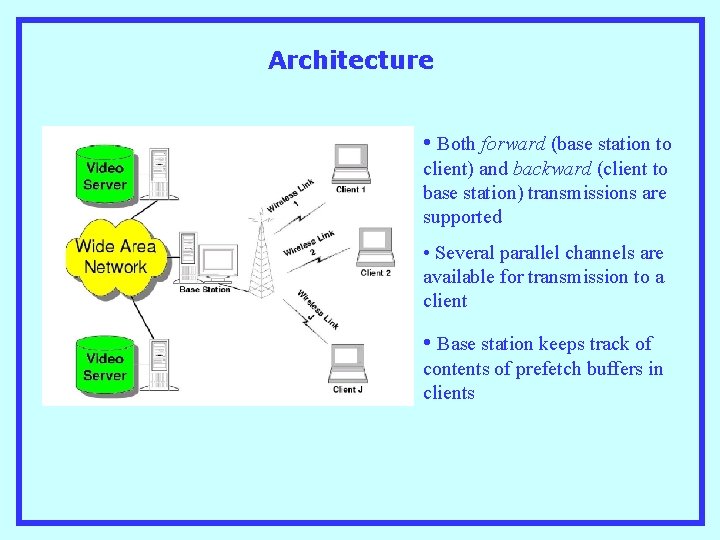

Architecture

- Both forward (base station to client) and backward (client to base station) transmissions are supported
- Several parallel channels are available for transmission to a client
- The base station keeps track of the contents of the prefetch buffers in clients

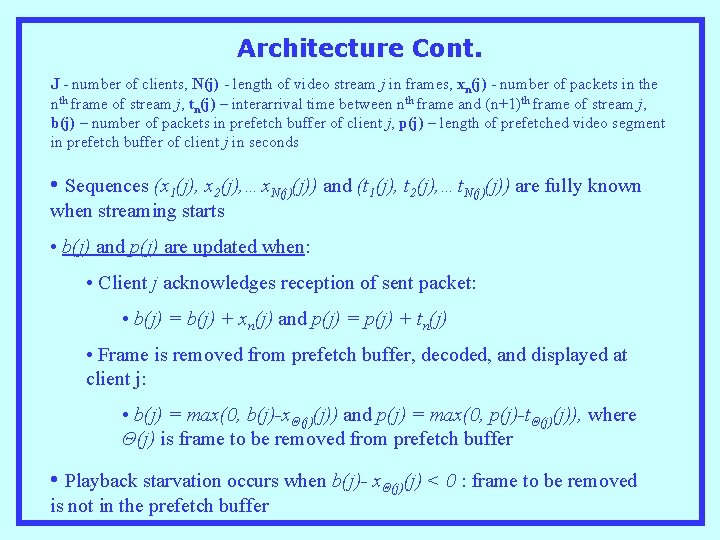

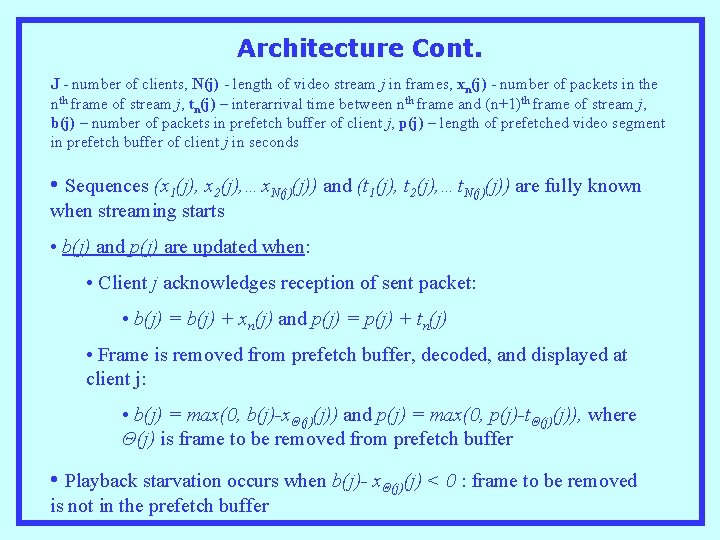

Architecture Cont.

Notation:

- $J$: number of clients
- $N(j)$: length of video stream $j$ in frames
- $x_n(j)$: number of packets in the $n$th frame of stream $j$
- $t_n(j)$: interarrival time between the $n$th and $(n+1)$th frames of stream $j$
- $b(j)$: number of packets in the prefetch buffer of client $j$
- $p(j)$: length, in seconds, of the prefetched video segment in the prefetch buffer of client $j$

Bookkeeping:

- The sequences $(x_1(j), x_2(j), \ldots, x_{N(j)}(j))$ and $(t_1(j), t_2(j), \ldots, t_{N(j)}(j))$ are fully known when streaming starts
- $b(j)$ and $p(j)$ are updated when:
  - Client $j$ acknowledges reception of a sent packet: $b(j) = b(j) + x_n(j)$ and $p(j) = p(j) + t_n(j)$
  - A frame is removed from the prefetch buffer, decoded, and displayed at client $j$: $b(j) = \max(0, b(j) - x_{\Theta(j)}(j))$ and $p(j) = \max(0, p(j) - t_{\Theta(j)}(j))$, where $\Theta(j)$ is the frame to be removed from the prefetch buffer
- Playback starvation occurs when $b(j) - x_{\Theta(j)}(j) < 0$: the frame to be removed is not in the prefetch buffer
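The bookkeeping rules above can be sketched as a small class holding the base station's view of one client's prefetch buffer. It applies the acknowledgement and playout updates exactly as stated on the slide; taking the acknowledgement update $b(j) + x_n(j)$ literally, the sketch assumes that an acknowledgement covers a complete frame $n$. Frames are indexed from 0 here, and the class and method names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PrefetchState:
    """Base station's record of client j's prefetch buffer (hypothetical helper)."""
    x: List[int]      # x[n]: number of packets in frame n of the stream
    t: List[float]    # t[n]: time between frame n and frame n+1, in seconds
    b: int = 0        # b(j): packets currently in the prefetch buffer
    p: float = 0.0    # p(j): seconds of video currently in the prefetch buffer
    theta: int = 0    # Theta(j): next frame to remove for decoding and display
    starvations: int = 0

    def on_ack(self, n: int) -> None:
        """Client acknowledged frame n (assumed: all x[n] of its packets)."""
        self.b += self.x[n]
        self.p += self.t[n]

    def on_playout(self) -> bool:
        """Remove frame theta for display; return False if playback starves."""
        need = self.x[self.theta]
        starved = self.b - need < 0          # frame is not in the prefetch buffer
        if starved:
            self.starvations += 1
        # Clamp at zero, as in the slide's max(0, ...) updates.
        self.b = max(0, self.b - need)
        self.p = max(0.0, self.p - self.t[self.theta])
        self.theta += 1
        return not starved


state = PrefetchState(x=[3, 2, 4], t=[0.04, 0.04, 0.04])  # 25 frames/s
state.on_ack(0)
first_ok = state.on_playout()    # True: frame 0 was prefetched
second_ok = state.on_playout()   # False: frame 1 never arrived
```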

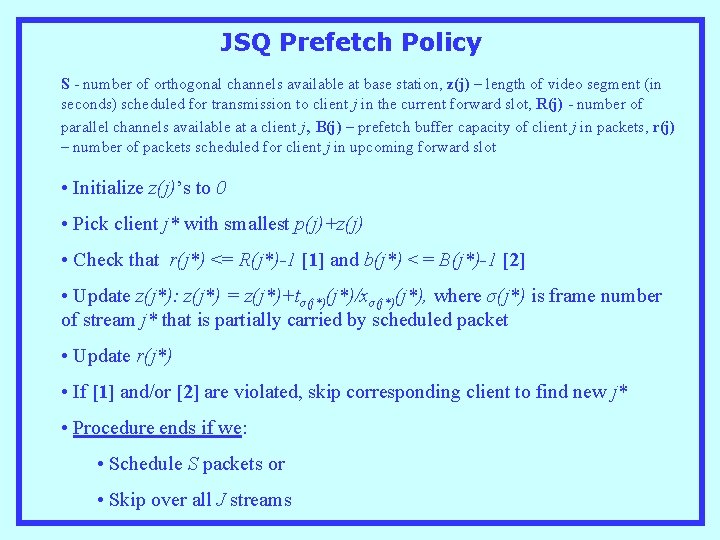

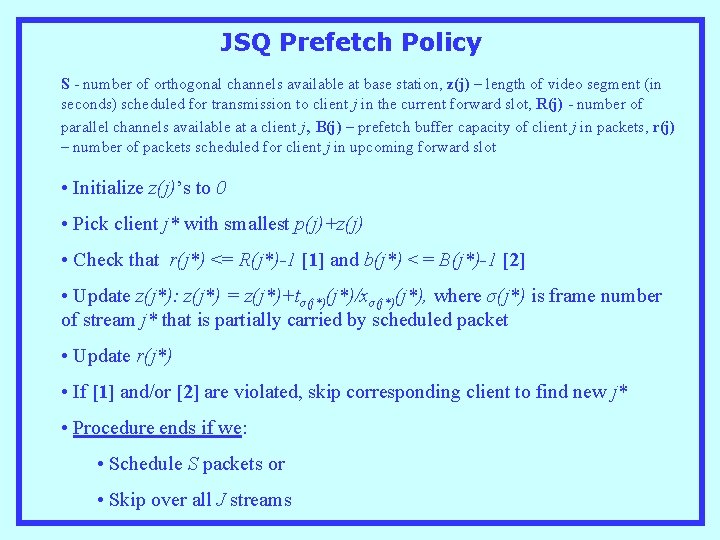

JSQ Prefetch Policy

Notation:

- $S$: number of orthogonal channels available at the base station
- $z(j)$: length, in seconds, of the video segment scheduled for transmission to client $j$ in the current forward slot
- $R(j)$: number of parallel channels available at client $j$
- $B(j)$: prefetch buffer capacity of client $j$, in packets
- $r(j)$: number of packets scheduled for client $j$ in the upcoming forward slot

Scheduling procedure:

- Initialize all $z(j)$ to 0
- Pick the client $j^*$ with the smallest $p(j) + z(j)$
- Check that $r(j^*) \le R(j^*) - 1$ [1] and $b(j^*) \le B(j^*) - 1$ [2]
- If both hold, schedule one packet for $j^*$:
  - Update $z(j^*)$: $z(j^*) = z(j^*) + t_{\sigma(j^*)}(j^*) / x_{\sigma(j^*)}(j^*)$, where $\sigma(j^*)$ is the frame number of stream $j^*$ that is partially carried by the scheduled packet
  - Update $r(j^*)$
- If [1] and/or [2] is violated, skip the corresponding client and find a new $j^*$
- The procedure ends when we have:
  - scheduled $S$ packets, or
  - skipped over all $J$ streams
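One forward slot of the JSQ procedure can be sketched as follows. Following the slide, it repeatedly picks the client with the smallest $p(j) + z(j)$, skips clients that violate [1] or [2], credits $t_{\sigma}/x_{\sigma}$ seconds per scheduled packet, and stops after $S$ packets or once every client has been skipped. Assumptions beyond the slide: per-client pointers (`sigma`, `left`) track which frame the next packet belongs to, a client whose stream has ended is also skipped, and condition [2] is checked against $b(j)$ exactly as written. All names are hypothetical.

```python
from typing import List


def jsq_schedule(S: int, p: List[float], b: List[int], B: List[int], R: List[int],
                 x: List[List[int]], t: List[List[float]],
                 sigma: List[int], left: List[int]) -> List[int]:
    """Return r, the packets scheduled per client in the upcoming forward slot.

    sigma[j]: frame of stream j carried (at least partly) by its next packet.
    left[j]: packets of frame sigma[j] still unscheduled. Both are updated in place.
    """
    J = len(p)
    z = [0.0] * J     # seconds of video scheduled per client in this slot
    r = [0] * J       # packets scheduled per client in this slot
    skipped = set()
    scheduled = 0
    while scheduled < S and len(skipped) < J:
        # Join the shortest queue: least prefetched-plus-scheduled video.
        j = min((k for k in range(J) if k not in skipped), key=lambda k: p[k] + z[k])
        ended = sigma[j] >= len(x[j])
        if ended or r[j] > R[j] - 1 or b[j] > B[j] - 1:   # [1] or [2] violated
            skipped.add(j)
            continue
        f = sigma[j]
        z[j] += t[j][f] / x[j][f]   # one packet carries 1/x of frame f's duration
        r[j] += 1
        scheduled += 1
        left[j] -= 1
        if left[j] == 0:            # frame f fully scheduled; move to the next frame
            sigma[j] += 1
            left[j] = x[j][sigma[j]] if sigma[j] < len(x[j]) else 0
    return r


sigma, left = [0, 0], [2, 1]
r = jsq_schedule(S=3, p=[0.5, 0.1], b=[10, 2], B=[100, 100], R=[2, 2],
                 x=[[2, 2], [1, 1, 1]], t=[[0.04, 0.04], [0.04, 0.04, 0.04]],
                 sigma=sigma, left=left)   # [1, 2]
```

Client 1, with the smaller prefetched reserve, receives packets first until it uses both of its $R(j) = 2$ parallel channels; the remaining slot capacity then goes to client 0.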

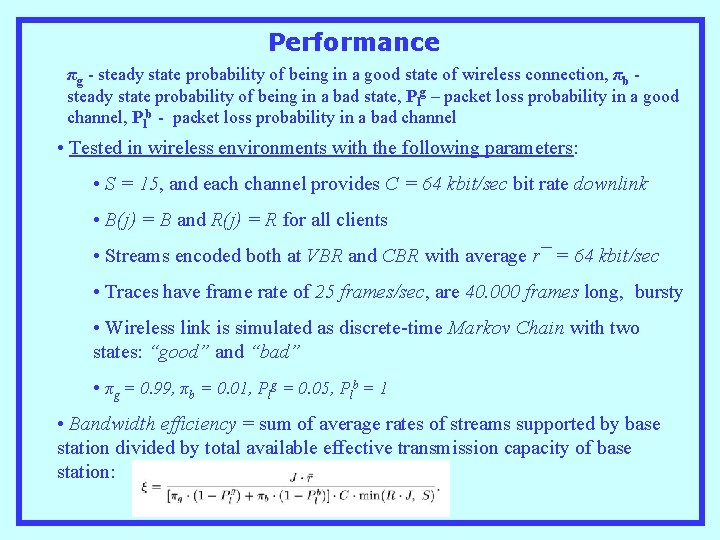

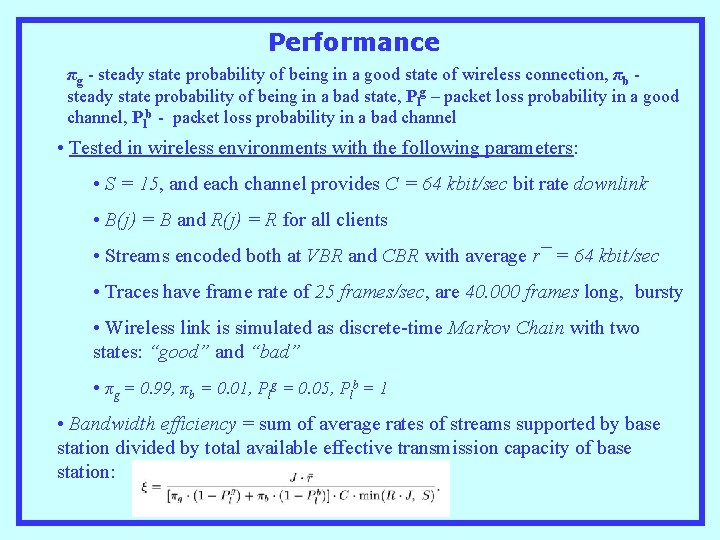

Performance

Notation:

- $\pi_g$: steady-state probability of the wireless connection being in the good state
- $\pi_b$: steady-state probability of being in the bad state
- $P_{lg}$: packet loss probability in a good channel
- $P_{lb}$: packet loss probability in a bad channel

Setup and metric:

- Tested in wireless environments with the following parameters:
  - $S = 15$, and each channel provides a downlink bit rate of $C = 64$ kbit/s
  - $B(j) = B$ and $R(j) = R$ for all clients
  - Streams encoded at both VBR and CBR, with average rate $\bar{r} = 64$ kbit/s
  - Traces have a frame rate of 25 frames/s, are 40,000 frames long, and are bursty
  - The wireless link is simulated as a discrete-time Markov chain with two states, “good” and “bad”
  - $\pi_g = 0.99$, $\pi_b = 0.01$, $P_{lg} = 0.05$, $P_{lb} = 1$
- Bandwidth efficiency = sum of the average rates of the streams supported by the base station, divided by the total available effective transmission capacity of the base station:

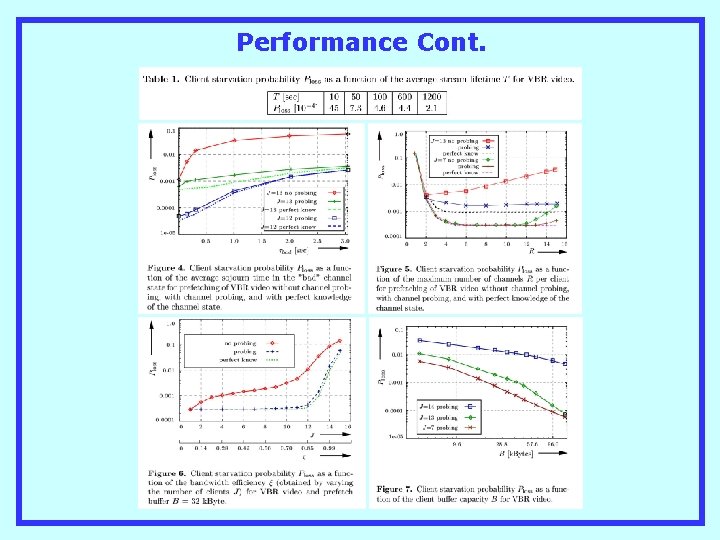

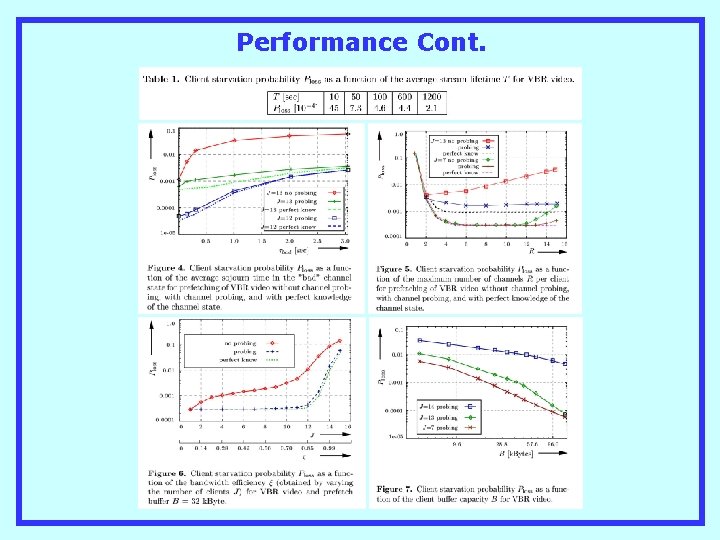
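As a quick check on the simulation parameters (arithmetic on the values listed above, not a result reported by the study): weighting each channel state's loss probability by its steady-state probability gives the long-run packet loss probability of the two-state model, the base station's raw downlink capacity is $S \cdot C$, and the trace length at 25 frames/s gives the playback duration.

$$
\bar{P}_l = \pi_g P_{lg} + \pi_b P_{lb} = 0.99 \cdot 0.05 + 0.01 \cdot 1 = 0.0595
$$

$$
S \cdot C = 15 \cdot 64~\text{kbit/s} = 960~\text{kbit/s}, \qquad \frac{40{,}000~\text{frames}}{25~\text{frames/s}} = 1600~\text{s} \approx 26.7~\text{min}
$$

So roughly 6% of packets are lost on average, and the 960 kbit/s of raw capacity corresponds to 15 streams at the average rate $\bar{r} = 64$ kbit/s before losses are taken into account.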

Performance Cont.