LCG Service Challenge at INFN Tier 1 CERN

LCG Service Challenge at INFN Tier 1. CERN, 24 February 2005. T. Ferrari, L. dell'Agnello, G. Lore, S. Zani (INFN-CNAF)

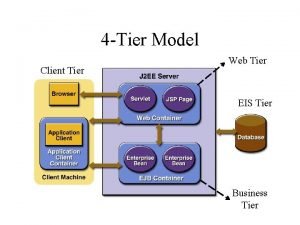

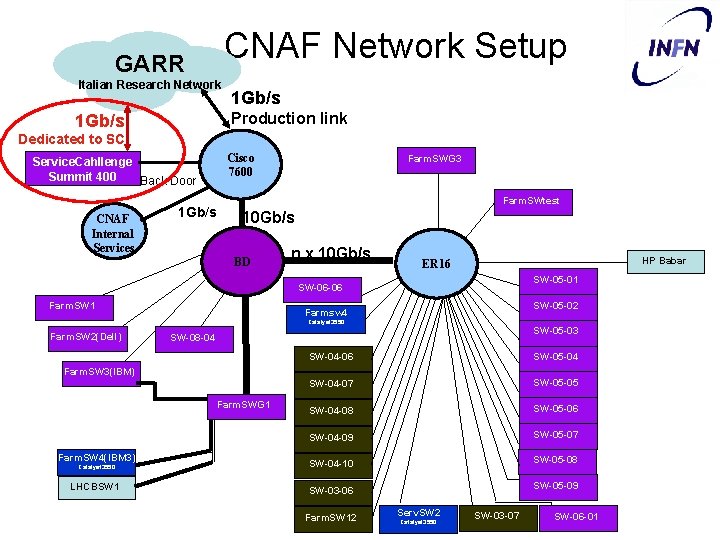

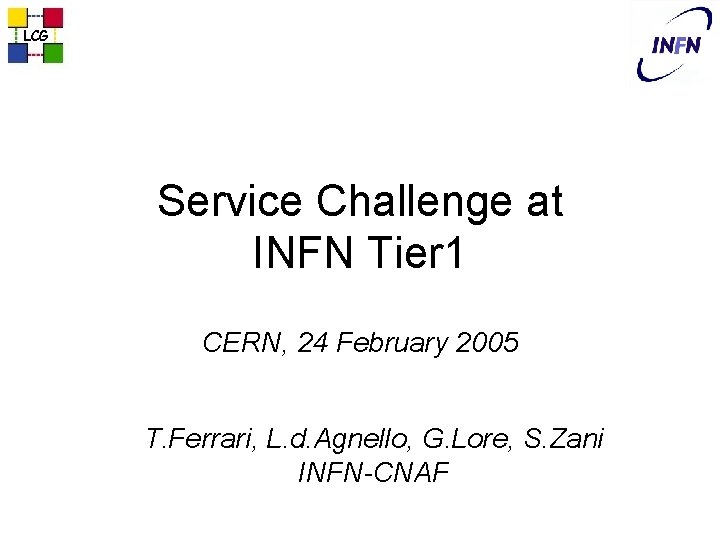

CNAF Network Setup

[Network diagram. Recoverable labels: GARR Italian Research Network; 1 Gb/s production link; 1 Gb/s link dedicated to the Service Challenge; Cisco 7600; Summit 400 (Service Challenge); Back Door; CNAF Internal Services; BD; ER16; 1 Gb/s, 10 Gb/s and n x 10 Gb/s links; farm switches (FarmSW1 to FarmSW4, FarmSW12, FarmSWG1, FarmSWG3, FarmSWtest); LHCBSW1; ServSW2; HP Babar; Catalyst 3550 units; rack switches numbered SW-03-xx to SW-08-xx.]

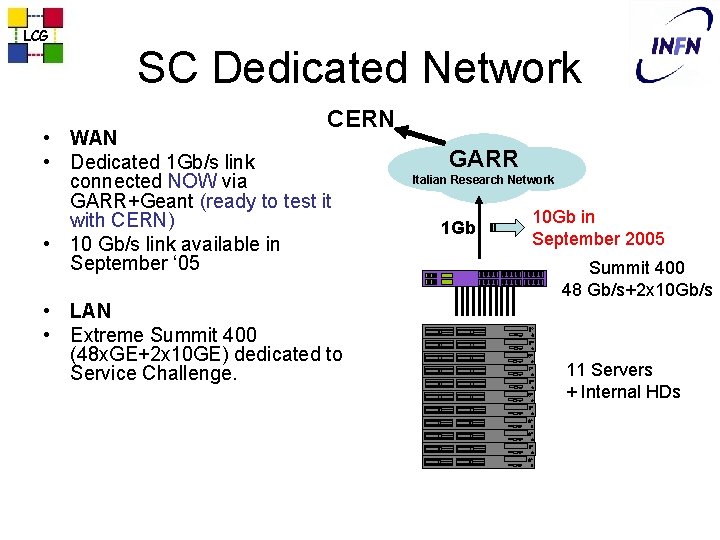

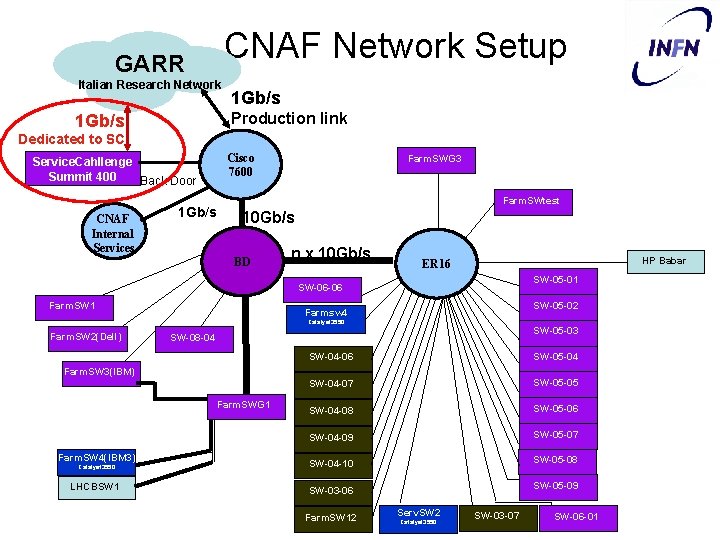

LCG SC Dedicated Network
• WAN
– Dedicated 1 Gb/s link, now connected via GARR + Geant (ready to be tested with CERN)
– 10 Gb/s link available in September 2005
• LAN
– Extreme Summit 400 (48 x GE + 2 x 10 GE) dedicated to the Service Challenge

[Diagram labels: CERN; GARR Italian Research Network; 1 Gb/s link (10 Gb/s in September 2005); Summit 400 (48 x 1 Gb/s + 2 x 10 Gb/s); 11 servers with internal HDs.]

LCG Transfer cluster (1)
• Delivered (one month late): 11 SUN Fire V20 dual Opteron (2.2 GHz)
– 2 x 73 GB U320 SCSI HD
– 2 x Gbit Ethernet interfaces
– 2 x PCI-X slots
• OS: SLC3 (arch x86_64), kernel 2.4.21-20. Installation is in progress this week. Tests (bonnie++/IOzone, CPU benchmarks, iperf on LAN) are planned within February.
• Globus/GridFTP v2.4.3, CASTOR SRM v1.2.12, CASTOR Stager v1.7.1.5. Installation and tests within the first half of March.

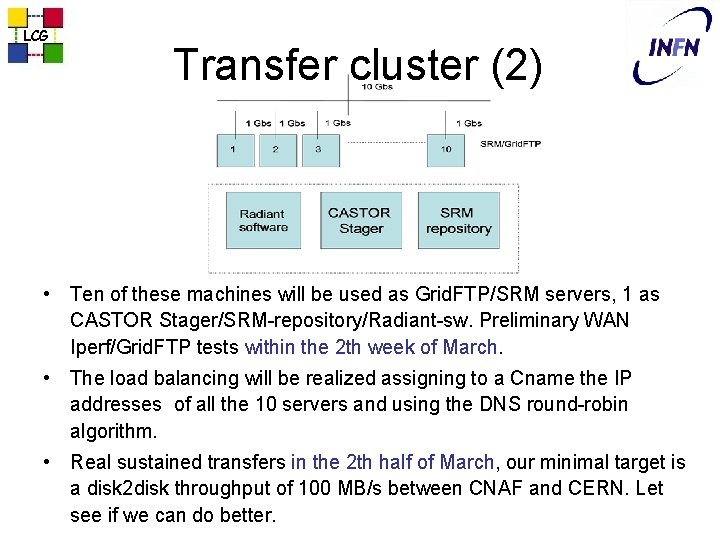

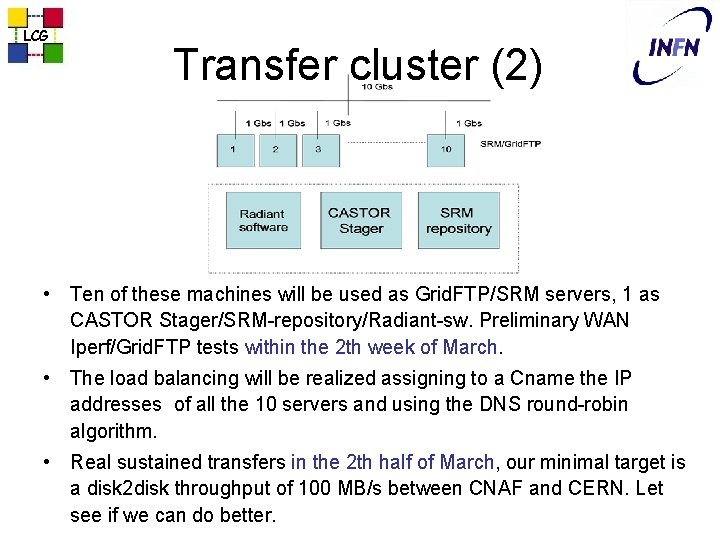

LCG Transfer cluster (2)
• Ten of these machines will be used as GridFTP/SRM servers, and one as CASTOR Stager/SRM-repository/Radiant-sw. Preliminary WAN iperf/GridFTP tests within the second week of March.
• Load balancing will be implemented by assigning the IP addresses of all 10 servers to a single CNAME and relying on the DNS round-robin algorithm.
• Real sustained transfers in the second half of March. Our minimum target is a disk-to-disk throughput of 100 MB/s between CNAF and CERN. Let's see if we can do better.
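The DNS round-robin idea above can be illustrated with a small simulation. This is only a sketch under stated assumptions, not the CNAF configuration: the addresses are hypothetical (taken from the 192.0.2.0/24 documentation range), the name server is assumed to rotate the address list by one position per query, and each client is assumed to use the first address it receives. Real resolvers may cache or reorder answers, so the actual spread can be less even.

```python
from collections import Counter, deque

# Hypothetical addresses from the documentation range 192.0.2.0/24,
# standing in for the 10 GridFTP/SRM servers.
SERVER_ADDRESSES = [f"192.0.2.{i}" for i in range(1, 11)]


def rotating_answers(addresses, n_queries):
    """Yield one answer per query, rotating the address list by one each time.

    This models an idealised round-robin name server (an assumption).
    """
    ring = deque(addresses)
    for _ in range(n_queries):
        yield list(ring)
        ring.rotate(-1)  # the next query sees the next address first


def simulate(n_transfers, addresses=SERVER_ADDRESSES):
    """Count how many transfers land on each server.

    Each transfer issues one query and connects to the first address returned.
    """
    counts = Counter()
    for answer in rotating_answers(addresses, n_transfers):
        counts[answer[0]] += 1
    return counts


if __name__ == "__main__":
    for address, n in sorted(simulate(1000).items()):
        print(address, n)
```

Under these assumptions the per-server counts never differ by more than one, which is the even spread the scheme relies on.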

LCG Storage
• For SC2 we plan to use the internal disks (are 70 x 10 = 700 GB enough?).
• We can also provide different kinds of high-performance Fibre Channel SAN-attached devices (Infortrend EonStor, IBM FAStT900, ...).
• For SC3 we can add CASTOR tape servers with IBM LTO-2 or STK 9940B drives.
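The question of whether 700 GB is enough can be framed with a rough calculation. The sketch below combines the 700 GB figure with the 100 MB/s minimum target and the 1 Gb/s dedicated link mentioned in the slides. Decimal units (1 GB = 1000 MB, 1 Gb/s = 1000 Mb/s) and a constant transfer rate with no protocol overhead are assumptions, and the function names are illustrative.

```python
DISK_PER_SERVER_GB = 70   # usable internal disk per server, from the slide
N_SERVERS = 10            # servers contributing disk space
TARGET_MB_PER_S = 100     # minimum disk-to-disk throughput target
LINK_GBIT_PER_S = 1       # dedicated WAN link


def fill_time_seconds(capacity_gb, rate_mb_per_s):
    """Seconds needed to fill capacity_gb at a constant rate (decimal units)."""
    return capacity_gb * 1000 / rate_mb_per_s


def link_utilisation(rate_mb_per_s, link_gbit_per_s):
    """Fraction of the link used by the payload rate, ignoring overhead."""
    return rate_mb_per_s * 8 / (link_gbit_per_s * 1000)


if __name__ == "__main__":
    capacity_gb = DISK_PER_SERVER_GB * N_SERVERS
    seconds = fill_time_seconds(capacity_gb, TARGET_MB_PER_S)
    print(f"capacity: {capacity_gb} GB")
    print(f"fill time at target rate: {seconds:.0f} s ({seconds / 3600:.2f} h)")
    print(f"link utilisation: {link_utilisation(TARGET_MB_PER_S, LINK_GBIT_PER_S):.0%}")
```

Under these assumptions the internal disks fill in about 7000 s (just under two hours) at the target rate, which already uses 80% of the 1 Gb/s link, so longer sustained runs would require data to be deleted or recycled during the test.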