
Kees van Deemter

Generation of Referring Expressions: a crash course

Background information and Project

HIT 2010

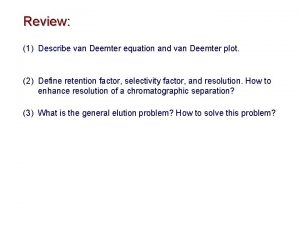

1. Background Information

A survey paper (draft!) on Generation of Referring Expressions, which I’m writing with Emiel Krahmer (University of Tilburg).

Go to the course web page: http://www.csd.abdn.ac.uk/~kvdeemte/Harbin-2010/ then click on Survey (draft, in pdf format).

Particularly relevant for you: Sections 1, 2, 5.

2. Project: Describing objects in a Knowledge Base

After the lecture and some reading, you will know about reference:

- To refer to an object is to identify it
- GRE algorithms produce descriptions that refer in this strict sense
- In daily life, it is often important not just to identify an object, but to say what’s special about it
- Example: Given a database of digital cameras, where one camera is highlighted, describe this camera.

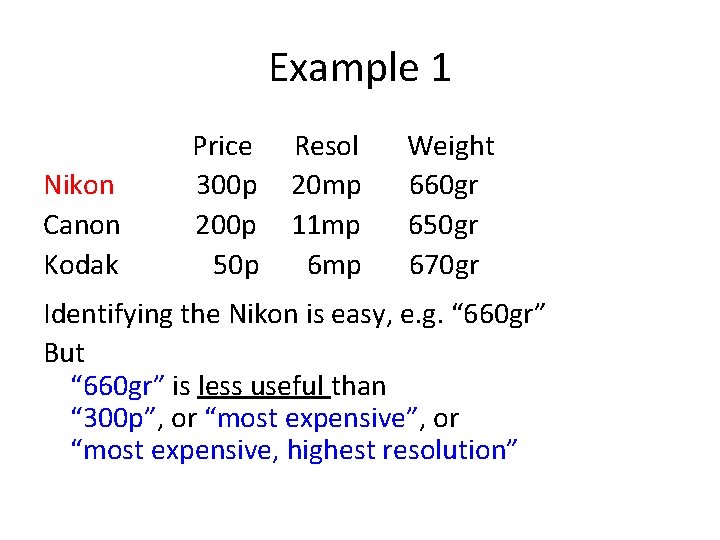

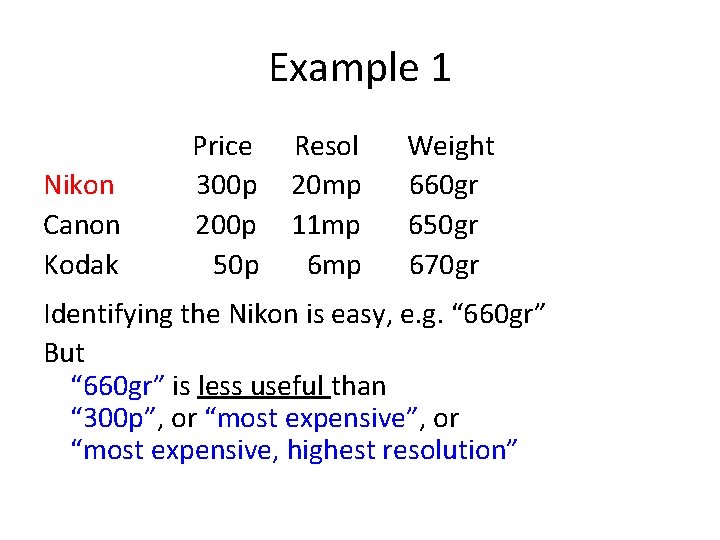

Example 1

|        | Nikon  | Canon  | Kodak  |
|--------|--------|--------|--------|
| Price  | 300 p  | 200 p  | 50 p   |
| Resol  | 20 mp  | 11 mp  | 6 mp   |
| Weight | 660 gr | 650 gr | 670 gr |

Identifying the Nikon is easy, e.g. “660 gr”. But “660 gr” is less useful than “300 p”, or “most expensive, highest resolution”.
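The difference between merely identifying the Nikon and saying what is special about it can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the dictionary layout of the KB, the function names `identifying_properties` and `extremes`, and the choice to treat all values as plain numbers with the units dropped. It is not a GRE algorithm from the survey, only one simple way to see why “660 gr” identifies but does not inform.

```python
# Hypothetical KB holding the three cameras from Example 1 (units dropped).
KB = {
    "Nikon": {"Price": 300, "Resol": 20, "Weight": 660},
    "Canon": {"Price": 200, "Resol": 11, "Weight": 650},
    "Kodak": {"Price": 50, "Resol": 6, "Weight": 670},
}


def identifying_properties(kb, target):
    """Attribute-value pairs that no other item shares.

    Each pair on its own is enough to identify the target.
    """
    return {
        attr: value
        for attr, value in kb[target].items()
        if all(other[attr] != value
               for name, other in kb.items() if name != target)
    }


def extremes(kb, target):
    """Attributes on which the target is the strict maximum or minimum."""
    result = {}
    for attr, value in kb[target].items():
        others = [item[attr] for name, item in kb.items() if name != target]
        if not others:
            continue  # a one-item KB has nothing to compare against
        if value > max(others):
            result[attr] = "highest"
        elif value < min(others):
            result[attr] = "lowest"
    return result


print(identifying_properties(KB, "Nikon"))
print(extremes(KB, "Nikon"))
```

In this KB every property of the Nikon identifies it, including its weight, but only price and resolution are extreme values. A property that is extreme is a plausible candidate for being useful to a customer; whether it really is useful is what the evaluation would have to test.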

PROJECT 1: Describing individual items

- Choose a domain of products and a KB that contains products of this kind (e.g., digital cameras, chosen from an existing web page)
- Construct an NLG program that can describe any given item in the KB, in such a way that it is likely to be useful to a customer
- Invent an evaluation procedure for your algorithm. Purpose: test the usefulness of the descriptions generated

PROJECT 1

- Your program should work on any KB that has the required format
  - But some objects may be difficult to describe in a useful way (e.g., if some other objects are very similar to the highlighted object)
- I want you to focus on content determination (i.e., choice of properties is more important than choice of words)
- I don’t expect you to have time to actually perform the evaluation

PROJECT 2: Compare & Contrast

- Similar to PROJECT 1, but
- Given a KB with m entities in it, and 0≤n≤m of these m entities are highlighted: compare and contrast these n
- Special cases to watch out for: n=1, n=m

Example 2

|        | Nikon  | Canon  | Kodak  |
|--------|--------|--------|--------|
| Price  | 300 p  | 200 p  | 50 p   |
| Resol  | 20 mp  | 11 mp  | 6 mp   |
| Weight | 660 gr | 650 gr | 670 gr |

Highlighted: Nikon and Canon.

In English: “These are the two most expensive cameras in this KB. Of these two, the Nikon has the highest resolution.”

Focussing on content:

- Common: most expensive
- Contrast: Resol(Nikon) > Resol(Canon)
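The Common/Contrast split in Example 2 can be sketched as follows. This is only a rough illustration under stated assumptions: the dictionary KB layout, the function name `compare_and_contrast`, and the rule that a property is "common" when the highlighted items jointly occupy the top or bottom of the KB on that attribute are all invented here, not taken from the lecture.

```python
# Hypothetical KB holding the three cameras from Example 2 (units dropped).
KB = {
    "Nikon": {"Price": 300, "Resol": 20, "Weight": 660},
    "Canon": {"Price": 200, "Resol": 11, "Weight": 650},
    "Kodak": {"Price": 50, "Resol": 6, "Weight": 670},
}


def compare_and_contrast(kb, highlighted):
    """Return (common, contrast) for the highlighted items.

    common:   attributes on which every highlighted item beats (or is
              beaten by) every non-highlighted item.
    contrast: for each attribute on which the highlighted items differ,
              their names ordered from highest to lowest value.
    """
    highlighted = list(highlighted)
    rest = [name for name in kb if name not in highlighted]
    common, contrast = {}, {}
    if not highlighted:          # n = 0: nothing to describe
        return common, contrast
    for attr in kb[highlighted[0]]:
        inside = [kb[name][attr] for name in highlighted]
        outside = [kb[name][attr] for name in rest]
        if outside:              # n = m leaves nothing to set the group apart from
            if min(inside) > max(outside):
                common[attr] = "highest"
            elif max(inside) < min(outside):
                common[attr] = "lowest"
        if len(set(inside)) > 1:  # n = 1 can never produce a contrast
            contrast[attr] = sorted(
                highlighted, key=lambda name: kb[name][attr], reverse=True
            )
    return common, contrast


common, contrast = compare_and_contrast(KB, ["Nikon", "Canon"])
print(common)
print(contrast)
```

On this KB the sketch finds more than the slide mentions: the two highlighted cameras are also the two with the highest resolution and the two lightest, and they differ on all three attributes. Deciding which of these candidates to actually say is the content determination problem the project asks you to focus on.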

Your report should contain

- What types of inputs your program accepts
- A description of your algorithm
- Examples of output texts (+ the inputs from which they were generated)
- Discussion of pros and cons of your approach
  - What would you do differently next time?
  - What would you do next if you had time?
- Installation & user manuals for your program

Summing up

- This project comes in two different flavours
- In each case, you’ll be looking at a possible generalisation of GRE:
  - Project 1: Descriptions that do more (and probably less!) than identification
  - Project 2: Compare & Contrast
- Questions, or alternative ideas? Let me know!