Inspector Joins 1 Shimin Chen 1 Anastassia Ailamaki

![Cache Partitioning [Shatdal et al. 94] [Boncz et al. ’ 99] [Manegold et al. Cache Partitioning [Shatdal et al. 94] [Boncz et al. ’ 99] [Manegold et al.](https://slidetodoc.com/presentation_image_h2/f1eb1d39379b871875447f319f0bed06/image-7.jpg)

![Cache Prefetching [Chen et al. 04] · Reduce impact of cache misses Exploit available Cache Prefetching [Chen et al. 04] · Reduce impact of cache misses Exploit available](https://slidetodoc.com/presentation_image_h2/f1eb1d39379b871875447f319f0bed06/image-8.jpg)

- Slides: 35

Inspector Joins 1 Shimin Chen 1 Anastassia Ailamaki 1 Phillip B. Gibbons 2 Todd C. Mowry 1, 2 Carnegie Mellon University 2 Intel Research Pittsburgh Databases @Carnegie Mellon

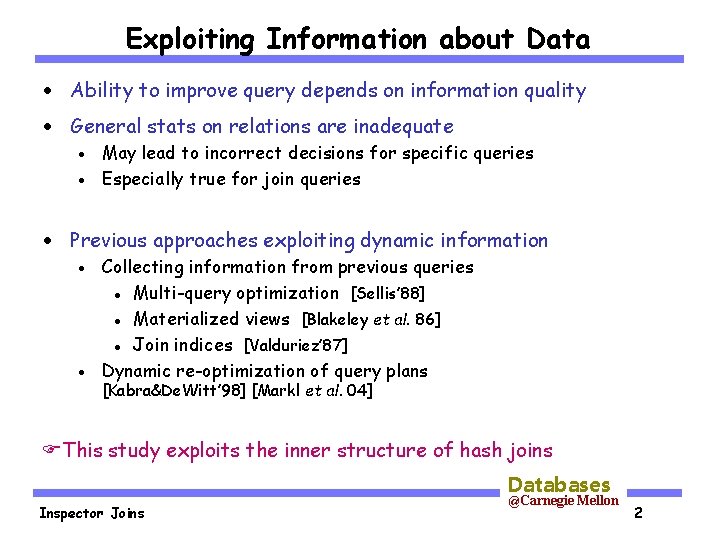

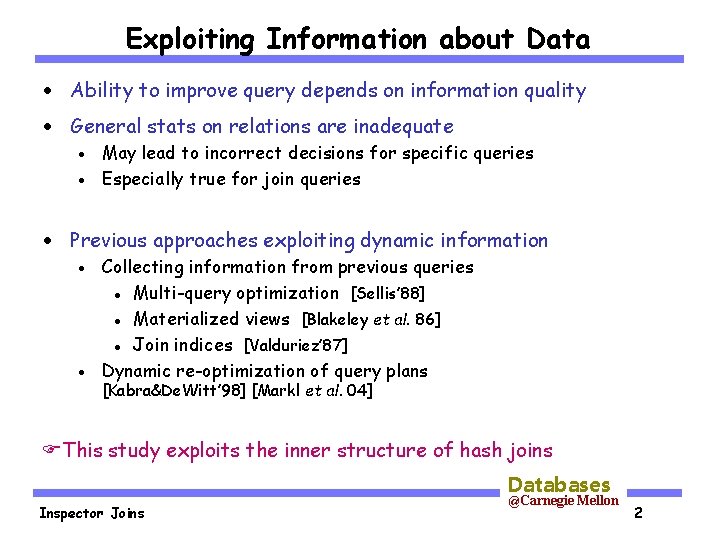

Exploiting Information about Data · Ability to improve query depends on information quality · General stats on relations are inadequate May lead to incorrect decisions for specific queries · Especially true for join queries · · Previous approaches exploiting dynamic information Collecting information from previous queries · Multi-query optimization [Sellis’ 88] · Materialized views [Blakeley et al. 86] · Join indices [Valduriez’ 87] · Dynamic re-optimization of query plans · [Kabra&De. Witt’ 98] [Markl et al. 04] This study exploits the inner structure of hash joins Databases Inspector Joins @Carnegie Mellon 2

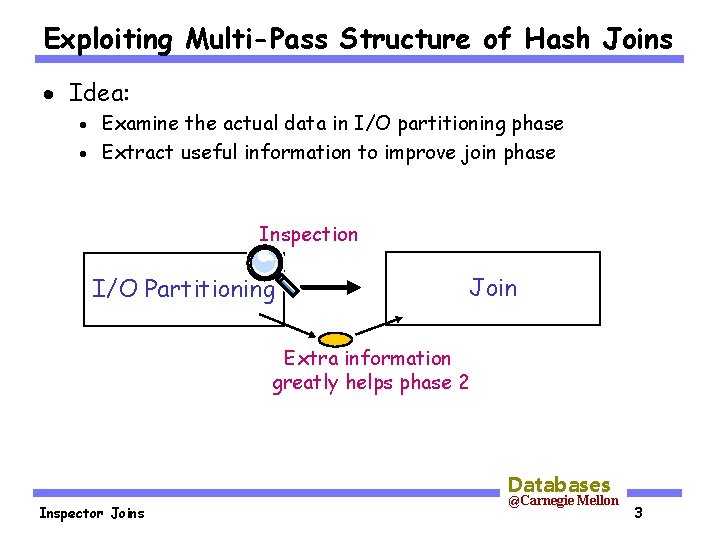

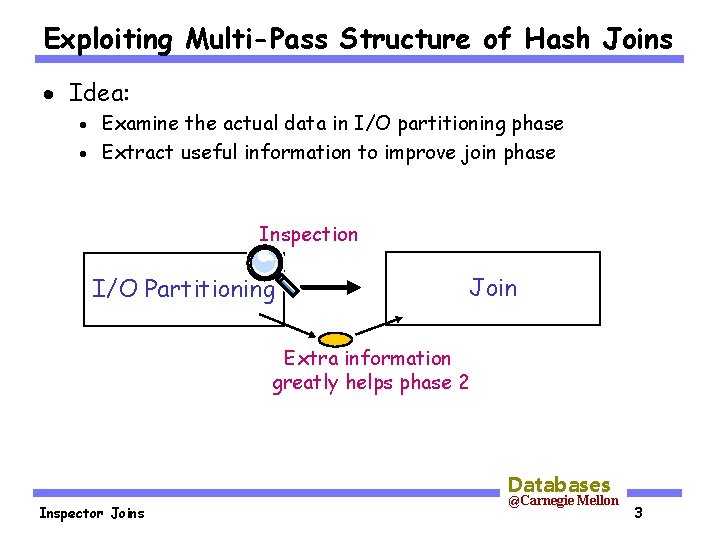

Exploiting Multi-Pass Structure of Hash Joins · Idea: Examine the actual data in I/O partitioning phase · Extract useful information to improve join phase · Inspection I/O Partitioning Join Extra information greatly helps phase 2 Databases Inspector Joins @Carnegie Mellon 3

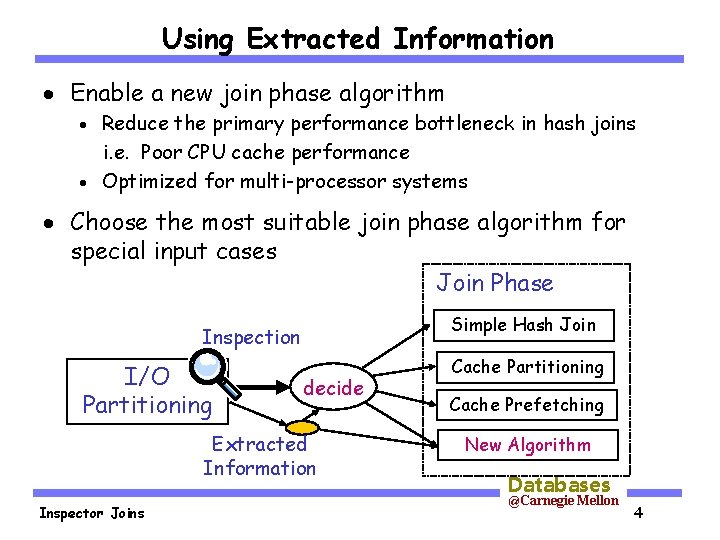

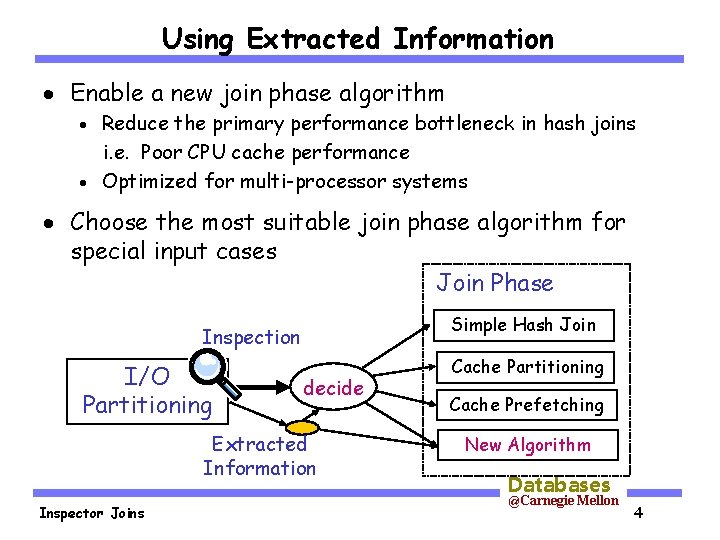

Using Extracted Information · Enable a new join phase algorithm Reduce the primary performance bottleneck in hash joins i. e. Poor CPU cache performance · Optimized for multi-processor systems · · Choose the most suitable join phase algorithm for special input cases Join Phase Simple Hash Join Inspection I/O Partitioning decide Extracted Information Inspector Joins Cache Partitioning Cache Prefetching New Algorithm Databases @Carnegie Mellon 4

Outline · Motivation · Previous hash join algorithms · Hash join performance on SMP systems · Inspector join · Experimental results · Conclusions Databases Inspector Joins @Carnegie Mellon 5

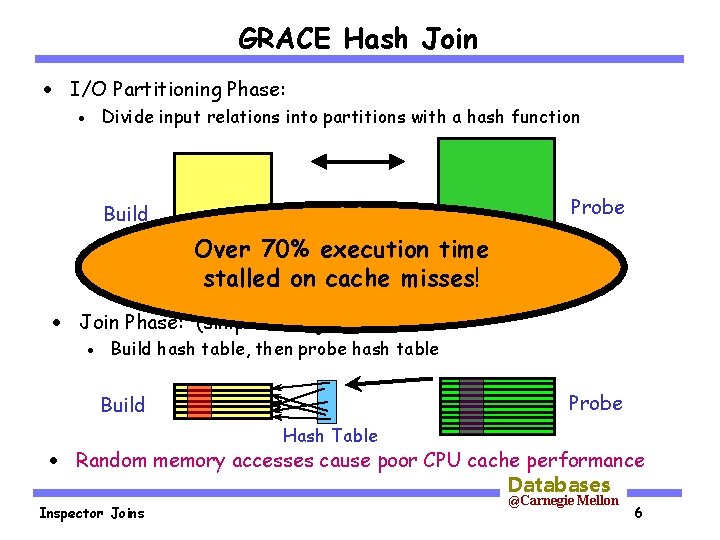

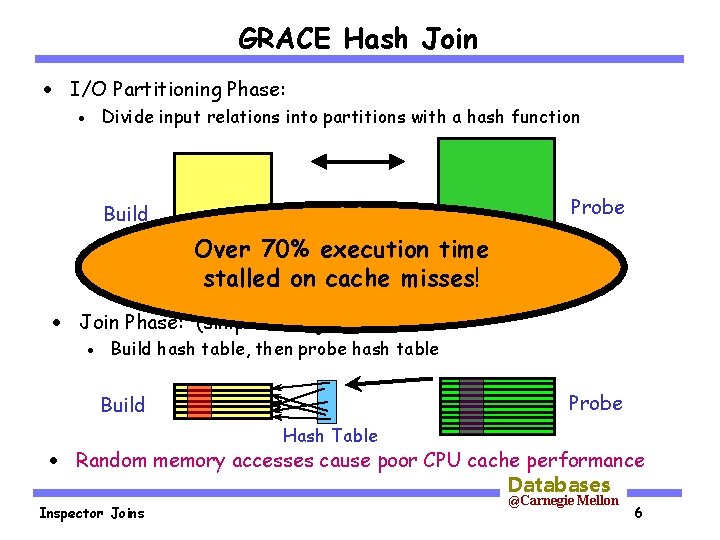

GRACE Hash Join · I/O Partitioning Phase: Divide input relations into partitions with a hash function · Probe Build Over 70% execution time stalled on cache misses! · Join Phase: (simple hash join) · Build hash table, then probe hash table Probe Build Hash Table · Random memory accesses cause poor CPU cache performance Databases Inspector Joins @Carnegie Mellon 6

![Cache Partitioning Shatdal et al 94 Boncz et al 99 Manegold et al Cache Partitioning [Shatdal et al. 94] [Boncz et al. ’ 99] [Manegold et al.](https://slidetodoc.com/presentation_image_h2/f1eb1d39379b871875447f319f0bed06/image-7.jpg)

Cache Partitioning [Shatdal et al. 94] [Boncz et al. ’ 99] [Manegold et al. ’ 00] · Recursively produce cache-sized partitions after I/O partitioning Build Memory-sized Cache-sized Partitions Probe · Avoid cache misses when joining cache-sized partitions · Overhead of re-partitioning Inspector Joins Databases @Carnegie Mellon 7

![Cache Prefetching Chen et al 04 Reduce impact of cache misses Exploit available Cache Prefetching [Chen et al. 04] · Reduce impact of cache misses Exploit available](https://slidetodoc.com/presentation_image_h2/f1eb1d39379b871875447f319f0bed06/image-8.jpg)

Cache Prefetching [Chen et al. 04] · Reduce impact of cache misses Exploit available memory bandwidth · Overlap cache misses and computations · Insert cache prefetch instructions into code · Build Probe Hash Table · Still incurs the same number of cache misses Databases Inspector Joins @Carnegie Mellon 8

Outline · Motivation · Previous hash join algorithms · Hash join performance on SMP systems · Inspector join · Experimental results · Conclusions Databases Inspector Joins @Carnegie Mellon 9

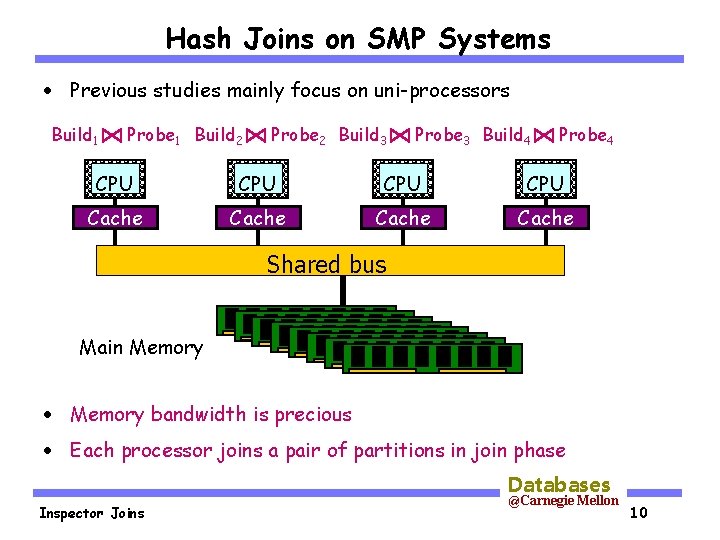

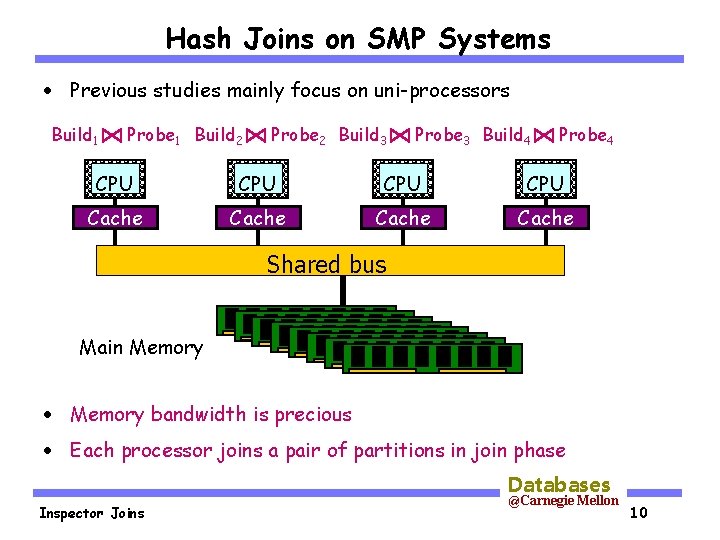

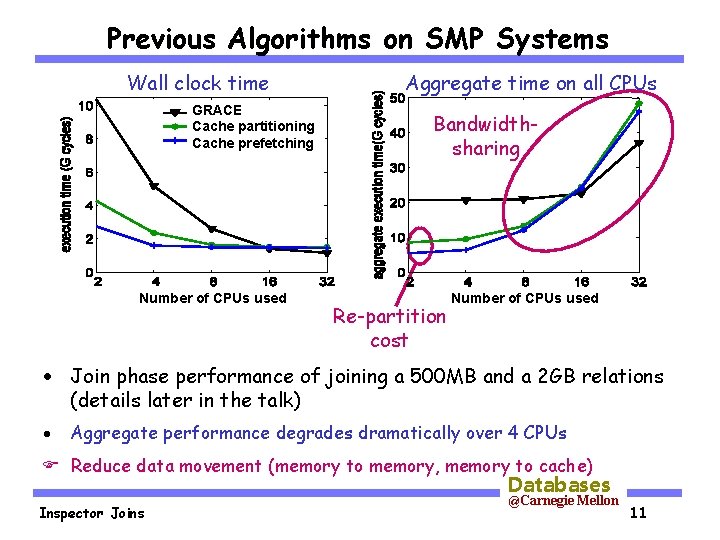

Hash Joins on SMP Systems · Previous studies mainly focus on uni-processors Build 1 Probe 1 Build 2 Probe 2 Build 3 Probe 3 Build 4 Probe 4 CPU CPU Cache Shared bus Main Memory · Memory bandwidth is precious · Each processor joins a pair of partitions in join phase Databases Inspector Joins @Carnegie Mellon 10

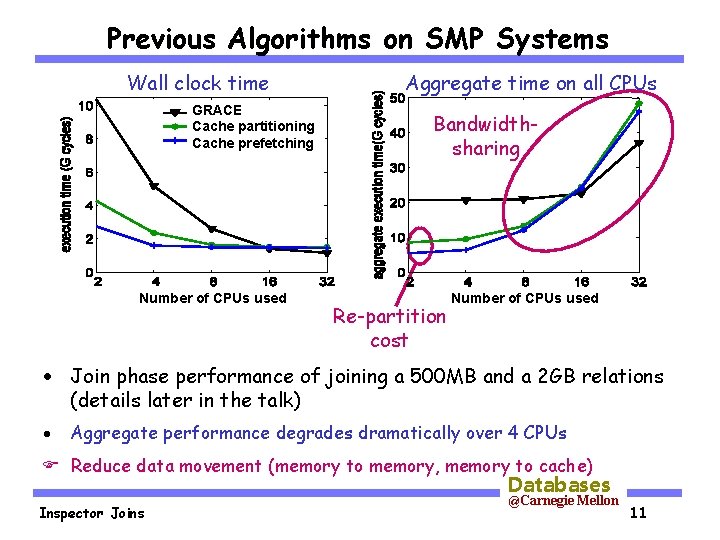

Previous Algorithms on SMP Systems Wall clock time GRACE Cache partitioning Cache prefetching Number of CPUs used Aggregate time on all CPUs Bandwidthsharing Re-partition cost Number of CPUs used · Join phase performance of joining a 500 MB and a 2 GB relations (details later in the talk) · Aggregate performance degrades dramatically over 4 CPUs Reduce data movement (memory to memory, memory to cache) Databases Inspector Joins @Carnegie Mellon 11

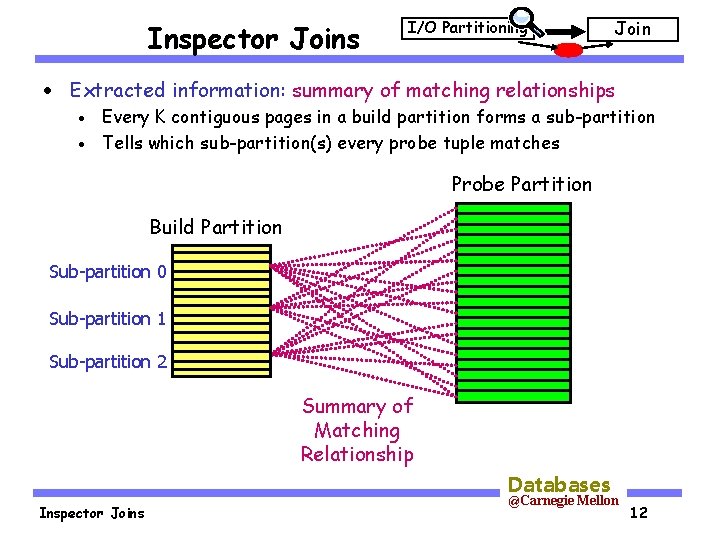

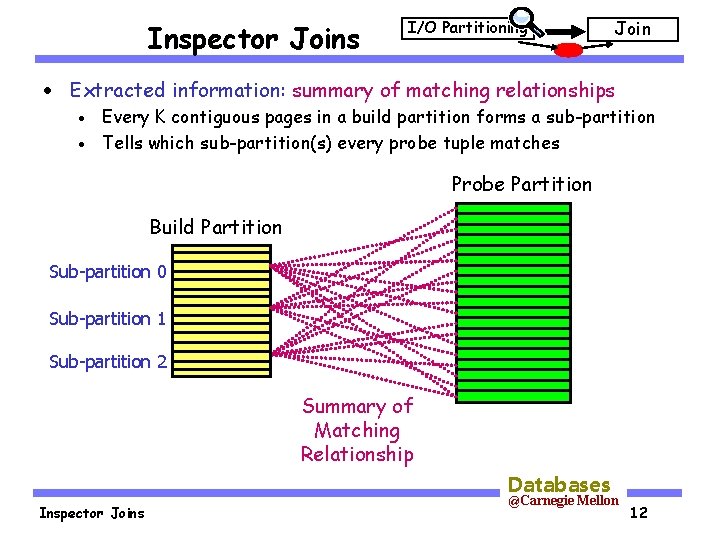

Inspector Joins I/O Partitioning Join · Extracted information: summary of matching relationships Every K contiguous pages in a build partition forms a sub-partition · Tells which sub-partition(s) every probe tuple matches · Probe Partition Build Partition Sub-partition 0 Sub-partition 1 Sub-partition 2 Summary of Matching Relationship Databases Inspector Joins @Carnegie Mellon 12

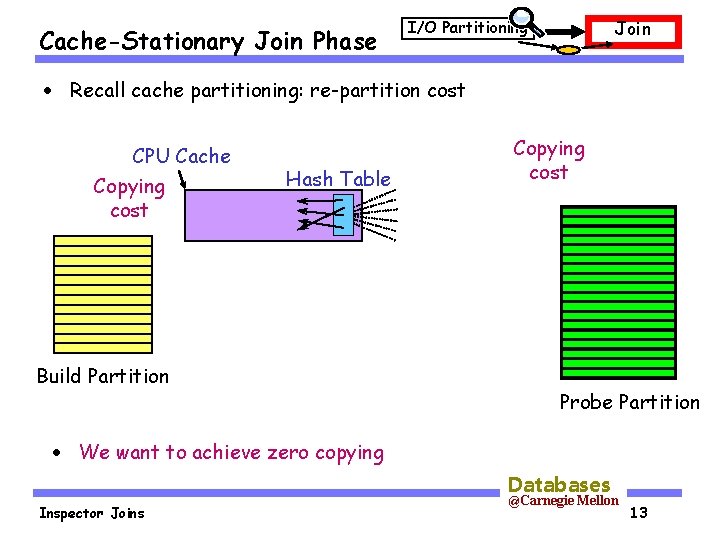

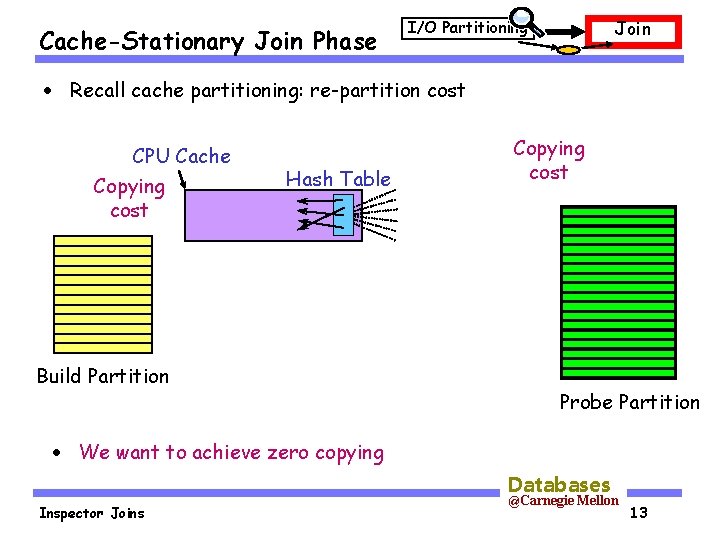

Cache-Stationary Join Phase Join I/O Partitioning · Recall cache partitioning: re-partition cost CPU Cache Copying cost Hash Table Build Partition Copying cost Probe Partition · We want to achieve zero copying Databases Inspector Joins @Carnegie Mellon 13

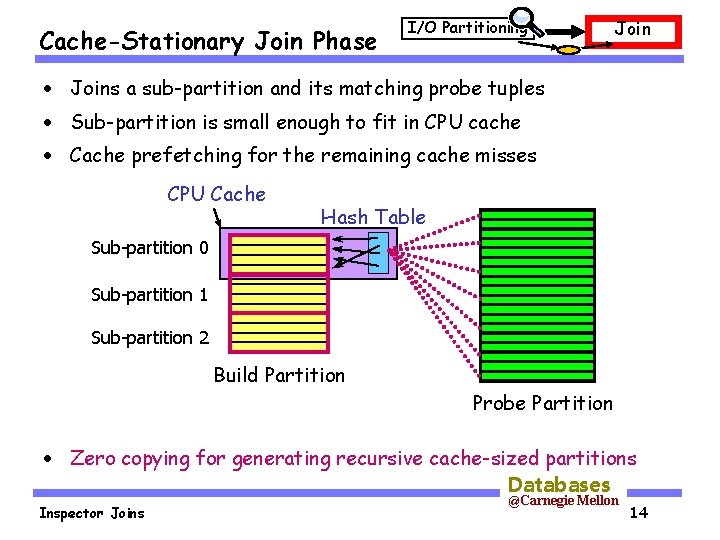

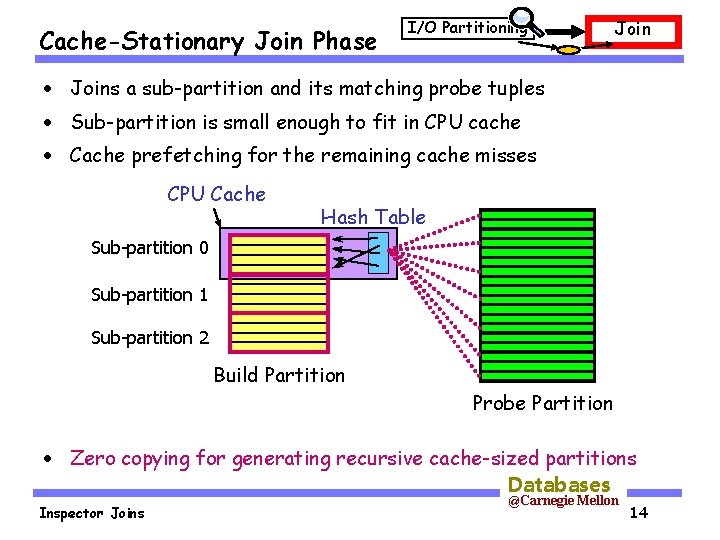

Cache-Stationary Join Phase I/O Partitioning Join · Joins a sub-partition and its matching probe tuples · Sub-partition is small enough to fit in CPU cache · Cache prefetching for the remaining cache misses CPU Cache Hash Table Sub-partition 0 Sub-partition 1 Sub-partition 2 Build Partition Probe Partition · Zero copying for generating recursive cache-sized partitions Databases Inspector Joins @Carnegie Mellon 14

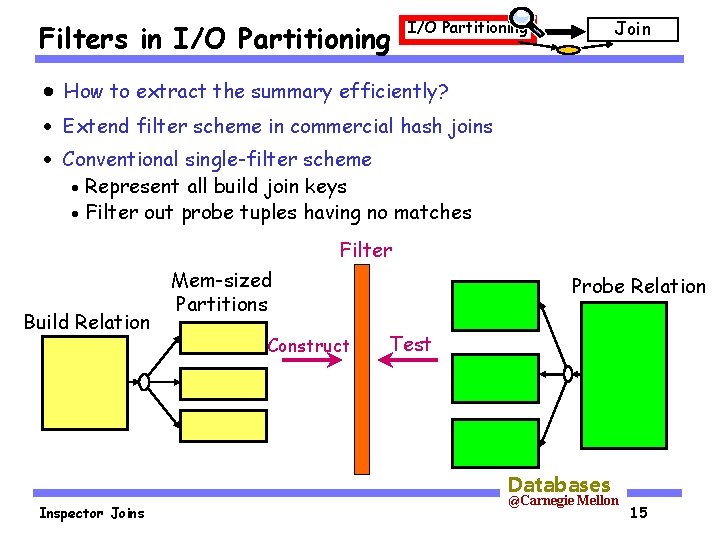

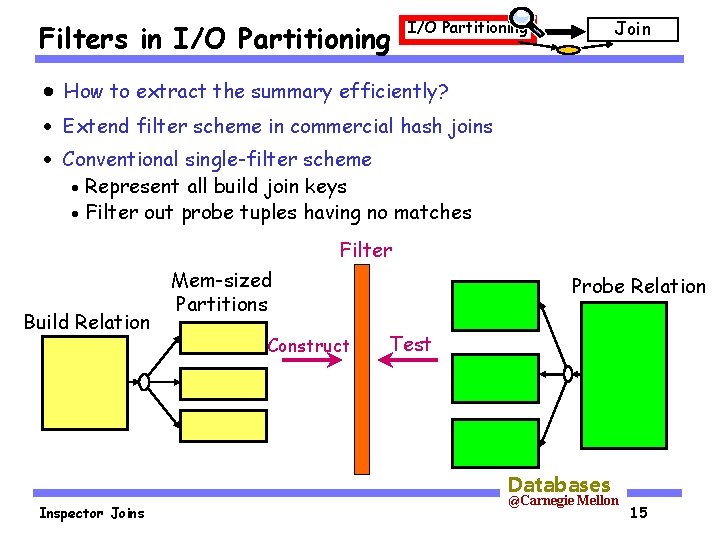

Filters in I/O Partitioning Join I/O Partitioning · How to extract the summary efficiently? · Extend filter scheme in commercial hash joins · Conventional single-filter scheme · Represent all build join keys · Filter out probe tuples having no matches Filter Build Relation Mem-sized Partitions Construct Probe Relation Test Databases Inspector Joins @Carnegie Mellon 15

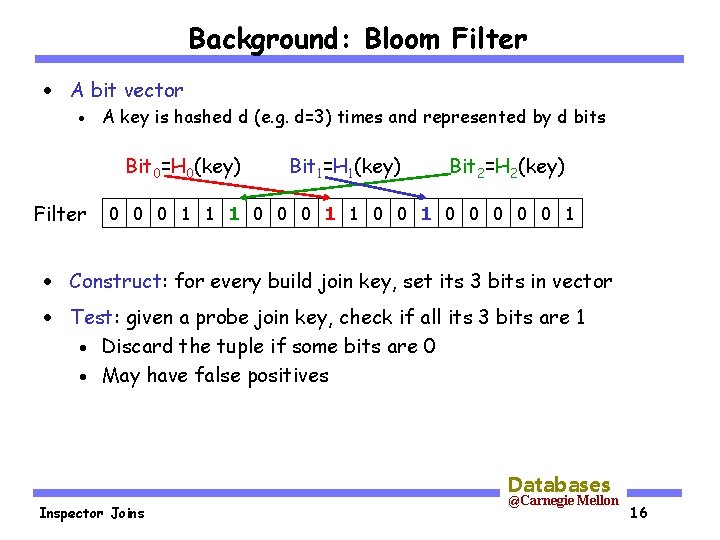

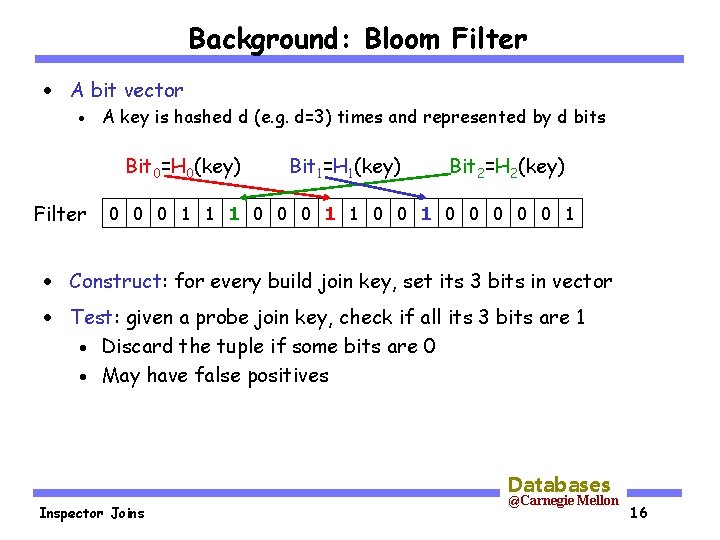

Background: Bloom Filter · A bit vector · A key is hashed d (e. g. d=3) times and represented by d bits Bit 0=H 0(key) Filter Bit 1=H 1(key) Bit 2=H 2(key) 0 0 0 1 1 1 0 0 0 0 0 1 · Construct: for every build join key, set its 3 bits in vector · Test: given a probe join key, check if all its 3 bits are 1 · Discard the tuple if some bits are 0 · May have false positives Databases Inspector Joins @Carnegie Mellon 16

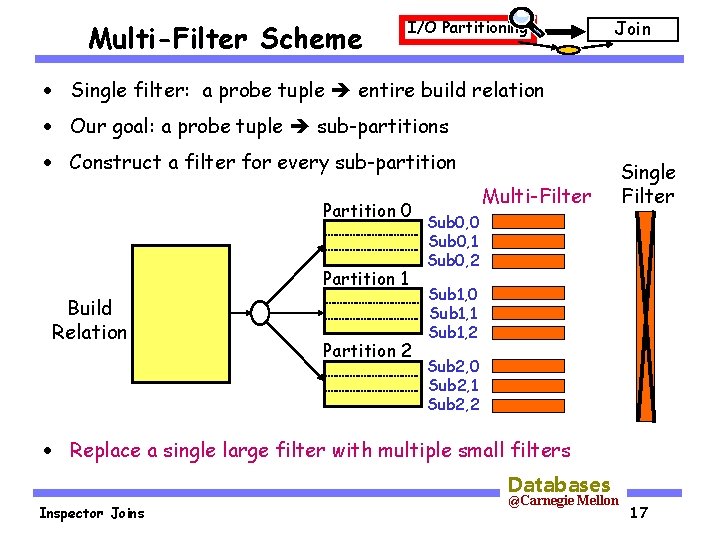

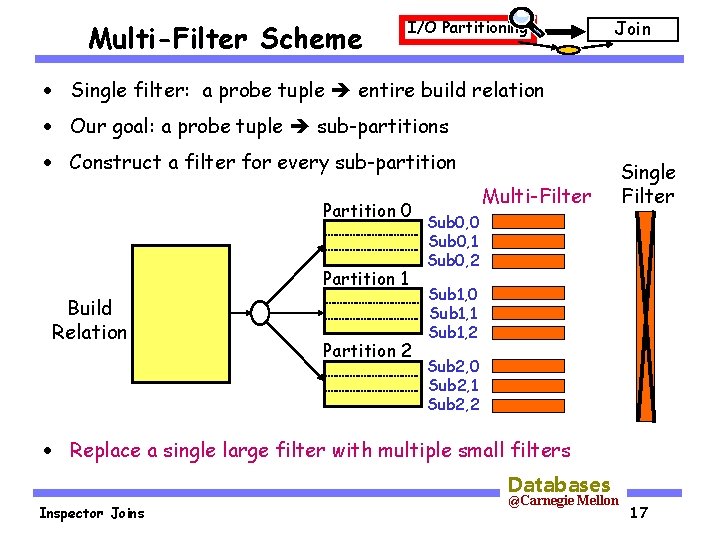

Multi-Filter Scheme I/O Partitioning Join · Single filter: a probe tuple entire build relation · Our goal: a probe tuple sub-partitions · Construct a filter for every sub-partition Partition 0 Partition 1 Build Relation Partition 2 Multi-Filter Single Filter Sub 0, 0 Sub 0, 1 Sub 0, 2 Sub 1, 0 Sub 1, 1 Sub 1, 2 Sub 2, 0 Sub 2, 1 Sub 2, 2 · Replace a single large filter with multiple small filters Databases Inspector Joins @Carnegie Mellon 17

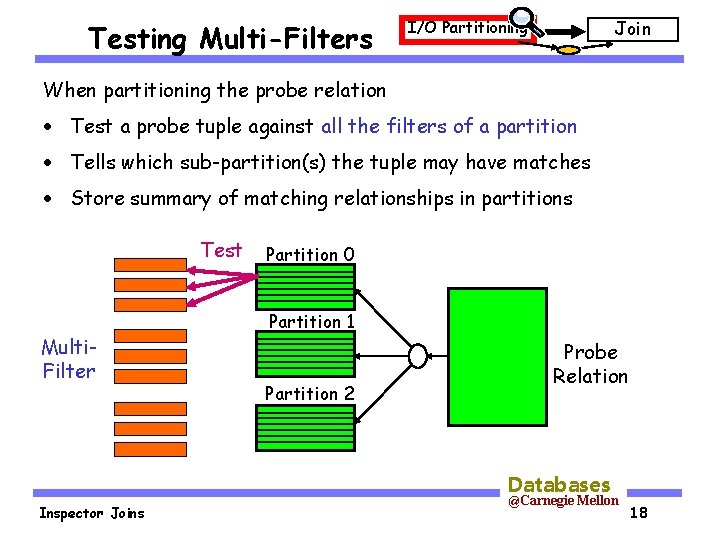

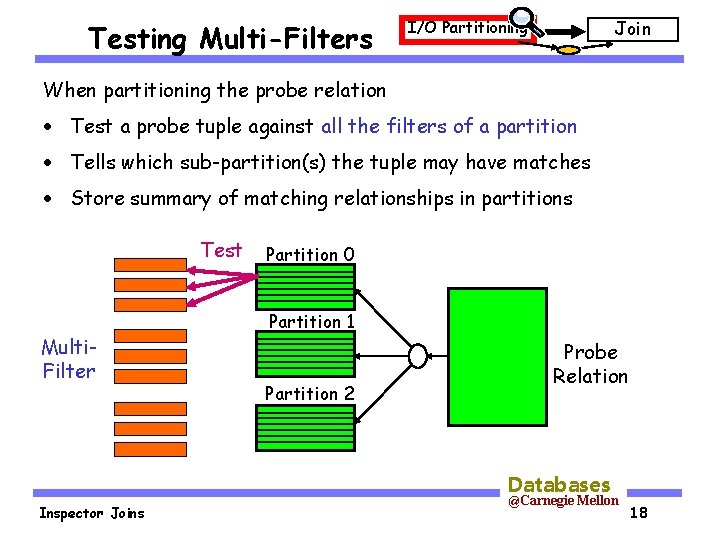

Testing Multi-Filters Join I/O Partitioning When partitioning the probe relation · Test a probe tuple against all the filters of a partition · Tells which sub-partition(s) the tuple may have matches · Store summary of matching relationships in partitions Test Multi. Filter Partition 0 Partition 1 Partition 2 Probe Relation Databases Inspector Joins @Carnegie Mellon 18

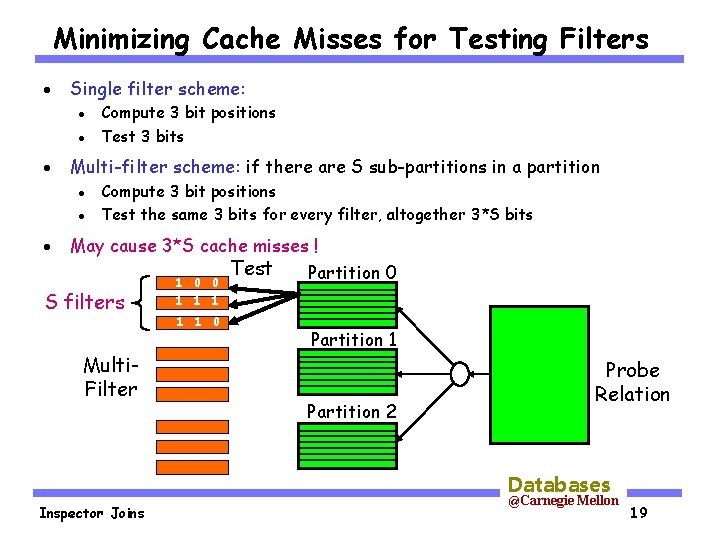

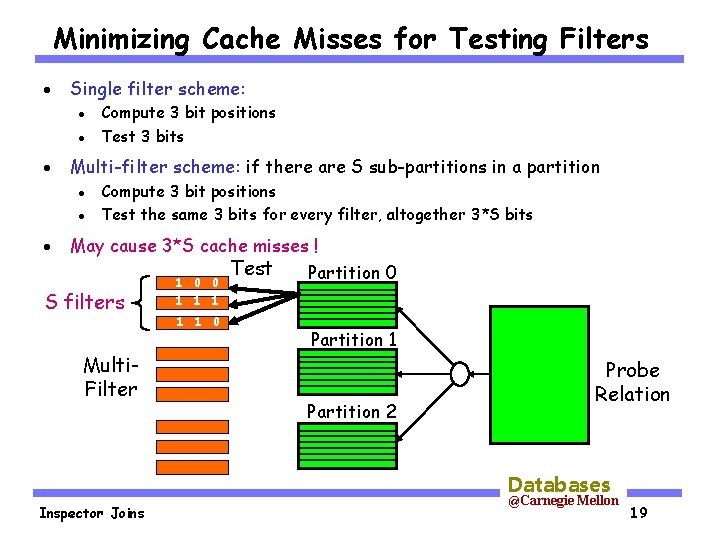

Minimizing Cache Misses for Testing Filters · Single filter scheme: · · · Multi-filter scheme: if there are S sub-partitions in a partition · · · Compute 3 bit positions Test 3 bits Compute 3 bit positions Test the same 3 bits for every filter, altogether 3*S bits May cause 3*S cache misses ! Test Partition 0 1 0 0 S filters Multi. Filter 1 1 1 0 Partition 1 Partition 2 Probe Relation Databases Inspector Joins @Carnegie Mellon 19

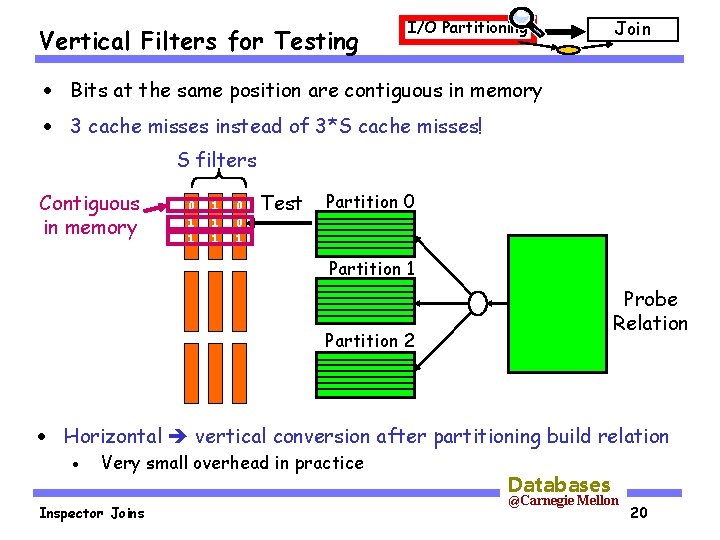

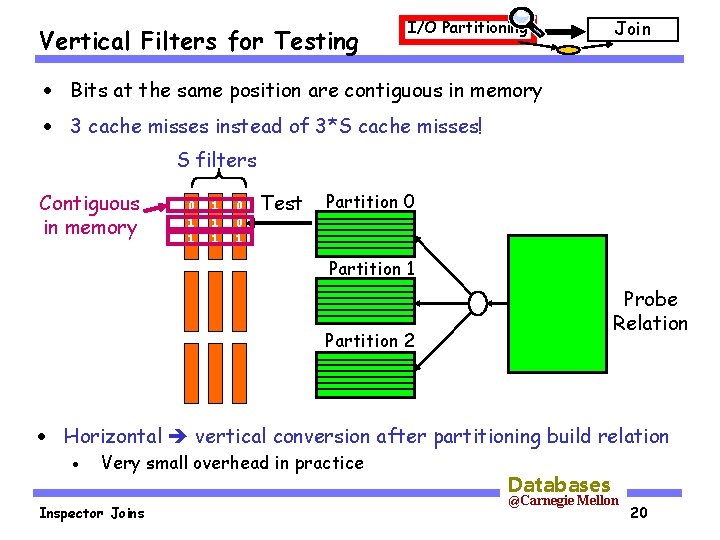

Vertical Filters for Testing I/O Partitioning Join · Bits at the same position are contiguous in memory · 3 cache misses instead of 3*S cache misses! S filters Contiguous in memory 0 1 1 1 0 0 1 Test Partition 0 Partition 1 Probe Relation Partition 2 · Horizontal vertical conversion after partitioning build relation · Very small overhead in practice Inspector Joins Databases @Carnegie Mellon 20

More Details in Paper · Moderate memory space requirement for filters · Summary information representation in intermediate partitions · Preprocessing for cache-stationary join phase · Prefetching for improving efficiency and robustness Databases Inspector Joins @Carnegie Mellon 21

Outline · Motivation · Previous hash join algorithms · Hash join performance on SMP systems · Inspector join · Experimental results · Conclusions Databases Inspector Joins @Carnegie Mellon 22

Experimental Setup · Relation schema: 4 -byte join attribute + fixed length payload · No selection, no projection · 50 MB memory per CPU available for the join phase · Same join algorithm run on every CPU joining different partitions · Detailed cycle-by-cycle simulations A shared-bus SMP system with 1. 5 GHz processors · Memory hierarchy is based on Itanium 2 processor · Databases Inspector Joins @Carnegie Mellon 23

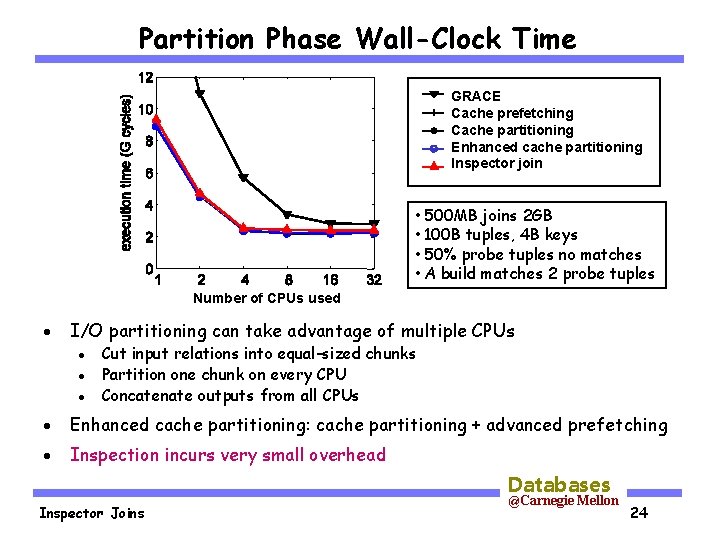

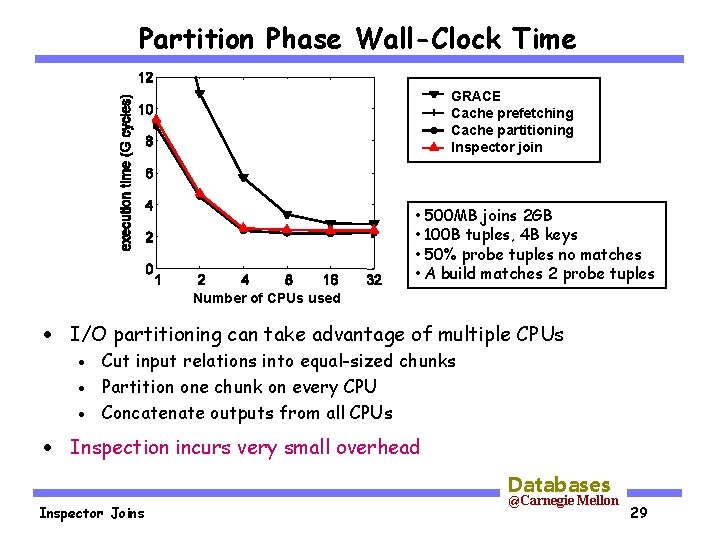

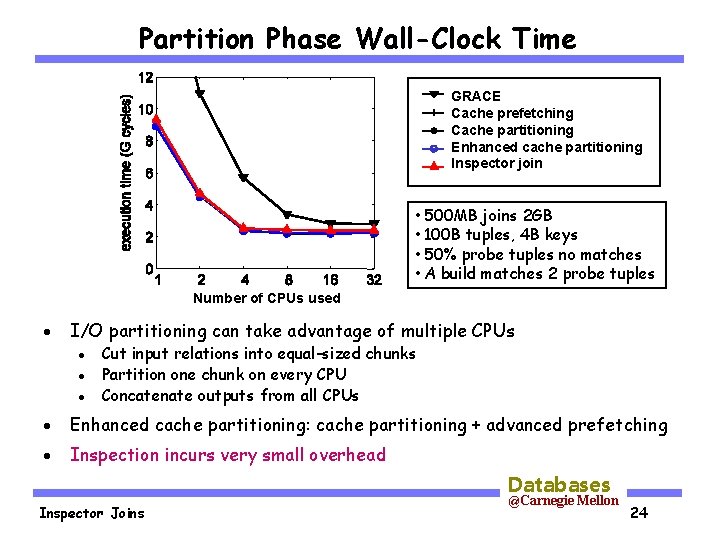

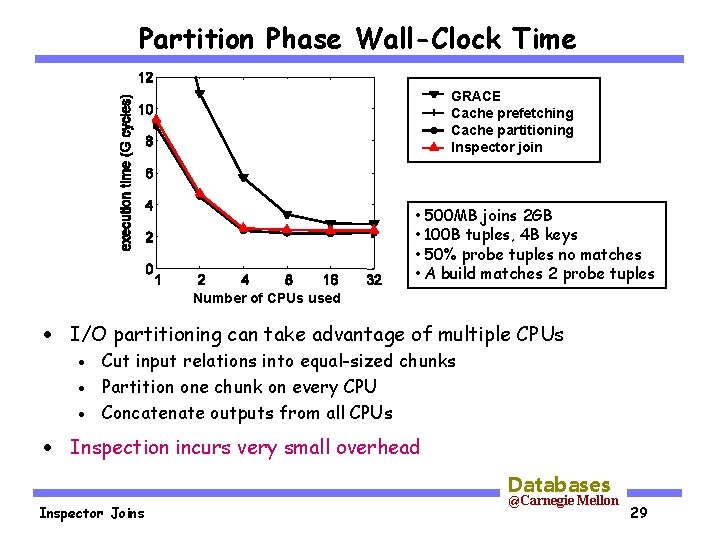

Partition Phase Wall-Clock Time GRACE Cache prefetching Cache partitioning Enhanced cache partitioning Inspector join • 500 MB joins 2 GB • 100 B tuples, 4 B keys • 50% probe tuples no matches • A build matches 2 probe tuples Number of CPUs used · I/O partitioning can take advantage of multiple CPUs · · · Cut input relations into equal-sized chunks Partition one chunk on every CPU Concatenate outputs from all CPUs · Enhanced cache partitioning: cache partitioning + advanced prefetching · Inspection incurs very small overhead Databases Inspector Joins @Carnegie Mellon 24

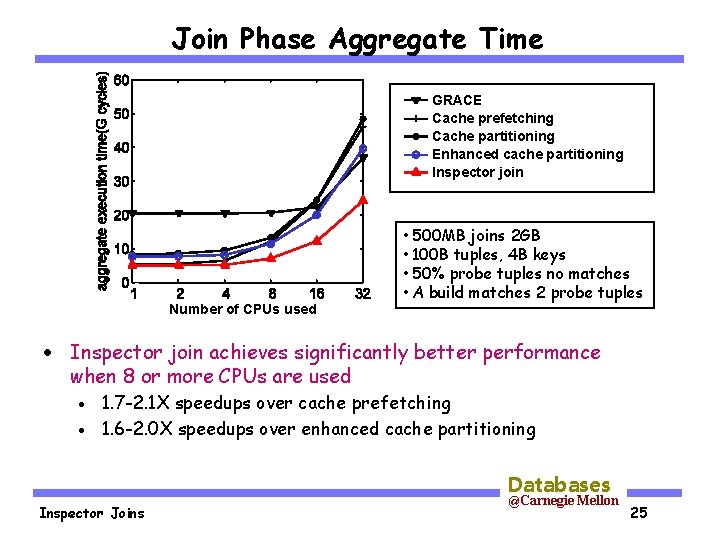

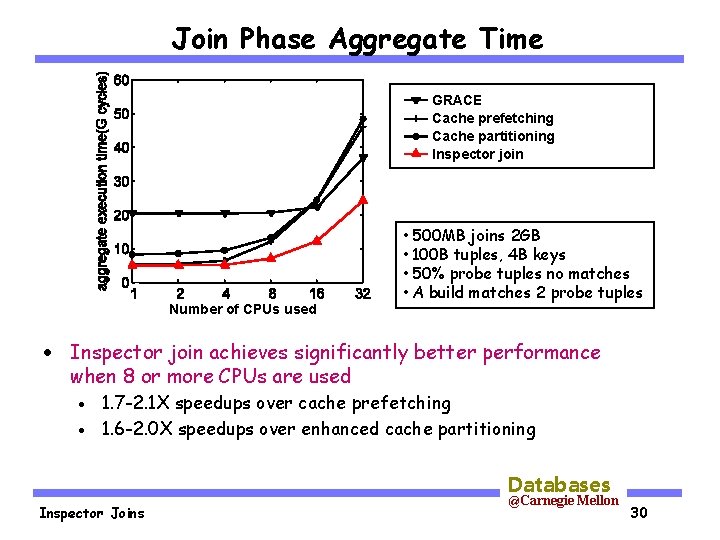

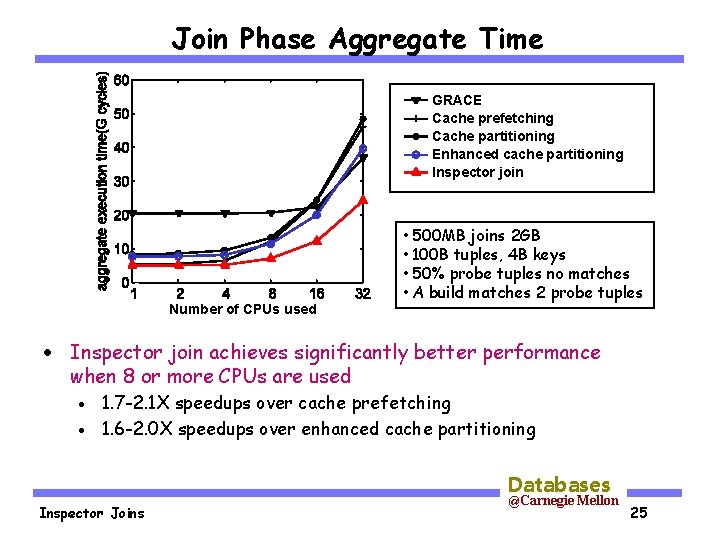

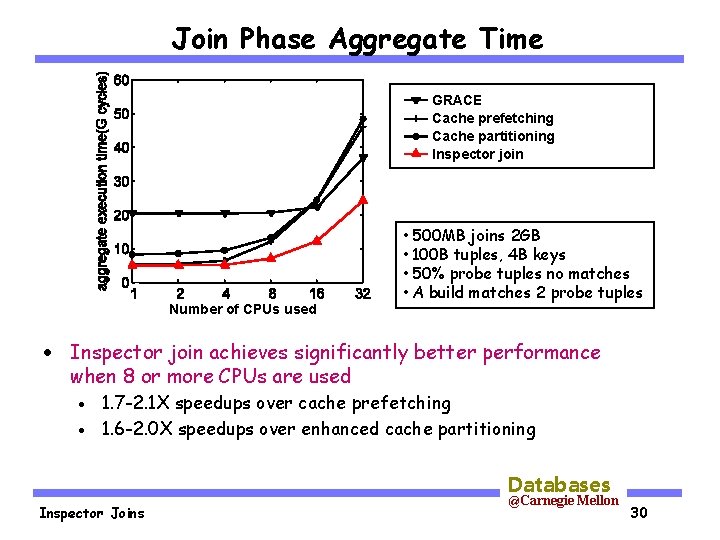

Join Phase Aggregate Time GRACE Cache prefetching Cache partitioning Enhanced cache partitioning Inspector join Number of CPUs used • 500 MB joins 2 GB • 100 B tuples, 4 B keys • 50% probe tuples no matches • A build matches 2 probe tuples · Inspector join achieves significantly better performance when 8 or more CPUs are used 1. 7 -2. 1 X speedups over cache prefetching · 1. 6 -2. 0 X speedups over enhanced cache partitioning · Databases Inspector Joins @Carnegie Mellon 25

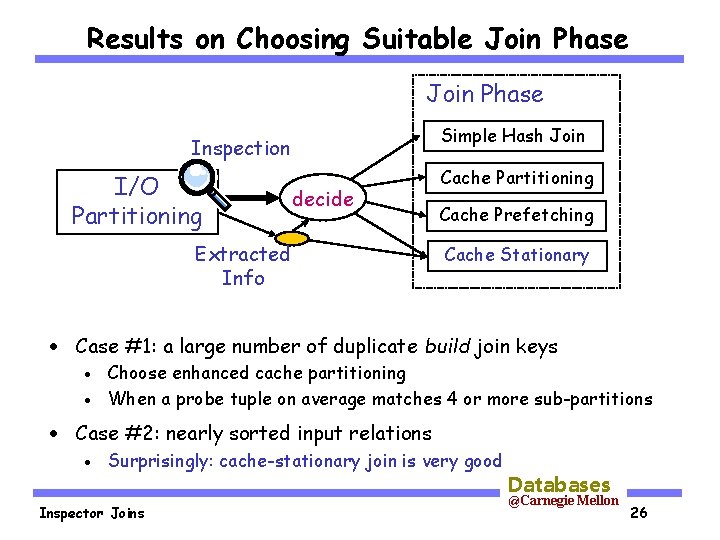

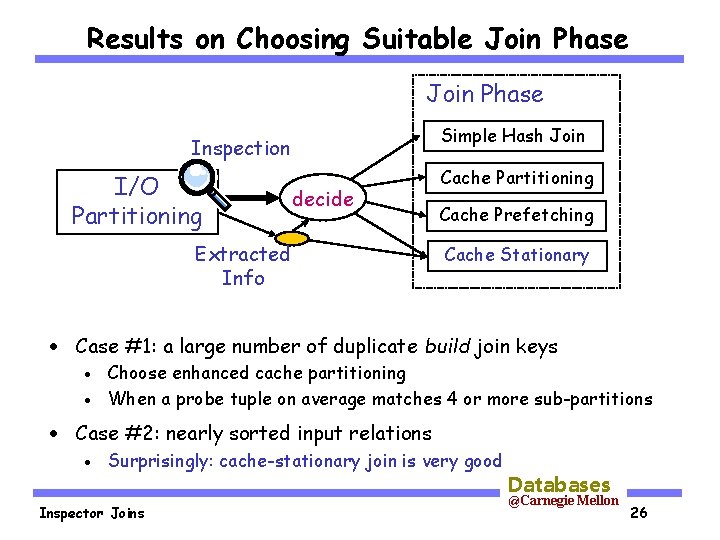

Results on Choosing Suitable Join Phase Simple Hash Join Inspection I/O Partitioning decide Extracted Info Cache Partitioning Cache Prefetching Cache Stationary · Case #1: a large number of duplicate build join keys Choose enhanced cache partitioning · When a probe tuple on average matches 4 or more sub-partitions · · Case #2: nearly sorted input relations · Surprisingly: cache-stationary join is very good Inspector Joins Databases @Carnegie Mellon 26

Conclusions · Exploit multi-pass structure for higher quality info about data · Achieve significantly better cache performance 1. 6 X speedups over previous cache-friendly algorithms · When 8 or more CPUs are used · · Choose most suitable algorithms for special input cases · Idea may be applicable to other multi-pass algorithms Databases Inspector Joins @Carnegie Mellon 27

Thank You ! Databases Inspector Joins @Carnegie Mellon 28

Partition Phase Wall-Clock Time GRACE Cache prefetching Cache partitioning Inspector join • 500 MB joins 2 GB • 100 B tuples, 4 B keys • 50% probe tuples no matches • A build matches 2 probe tuples Number of CPUs used · I/O partitioning can take advantage of multiple CPUs Cut input relations into equal-sized chunks · Partition one chunk on every CPU · Concatenate outputs from all CPUs · · Inspection incurs very small overhead Databases Inspector Joins @Carnegie Mellon 29

Join Phase Aggregate Time GRACE Cache prefetching Cache partitioning Inspector join Number of CPUs used • 500 MB joins 2 GB • 100 B tuples, 4 B keys • 50% probe tuples no matches • A build matches 2 probe tuples · Inspector join achieves significantly better performance when 8 or more CPUs are used 1. 7 -2. 1 X speedups over cache prefetching · 1. 6 -2. 0 X speedups over enhanced cache partitioning · Databases Inspector Joins @Carnegie Mellon 30

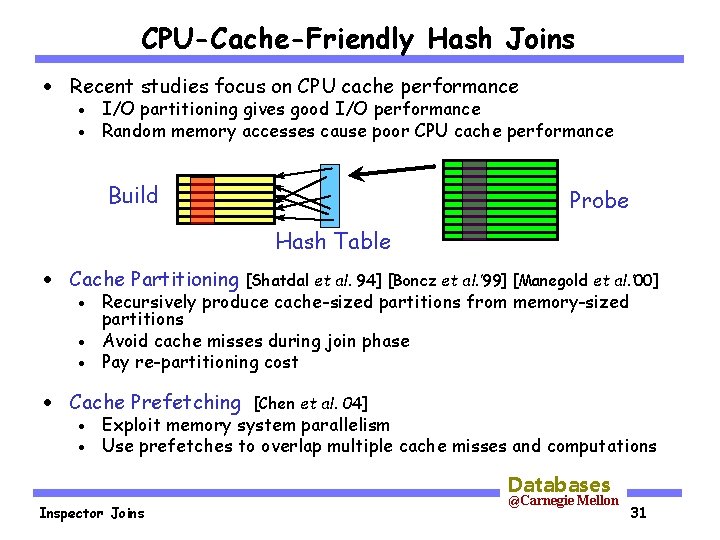

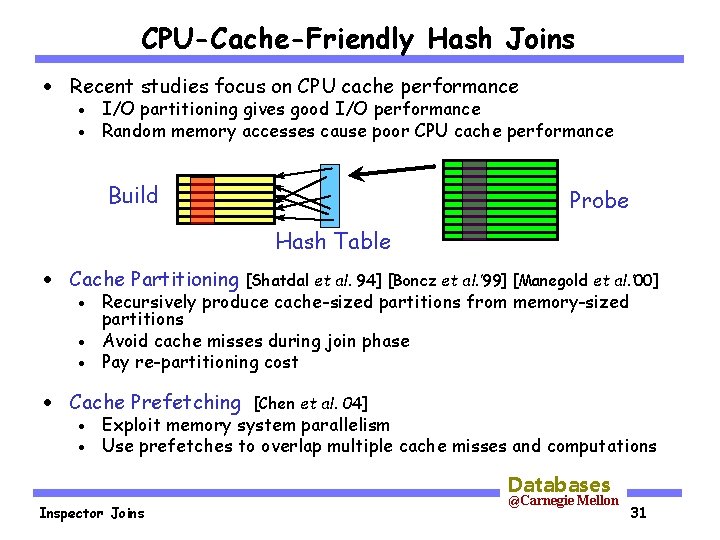

CPU-Cache-Friendly Hash Joins · Recent studies focus on CPU cache performance · · I/O partitioning gives good I/O performance Random memory accesses cause poor CPU cache performance Build Probe Hash Table · Cache Partitioning [Shatdal et al. 94] [Boncz et al. ’ 99] [Manegold et al. ’ 00] Recursively produce cache-sized partitions from memory-sized partitions · Avoid cache misses during join phase · Pay re-partitioning cost · · Cache Prefetching · · [Chen et al. 04] Exploit memory system parallelism Use prefetches to overlap multiple cache misses and computations Databases Inspector Joins @Carnegie Mellon 31

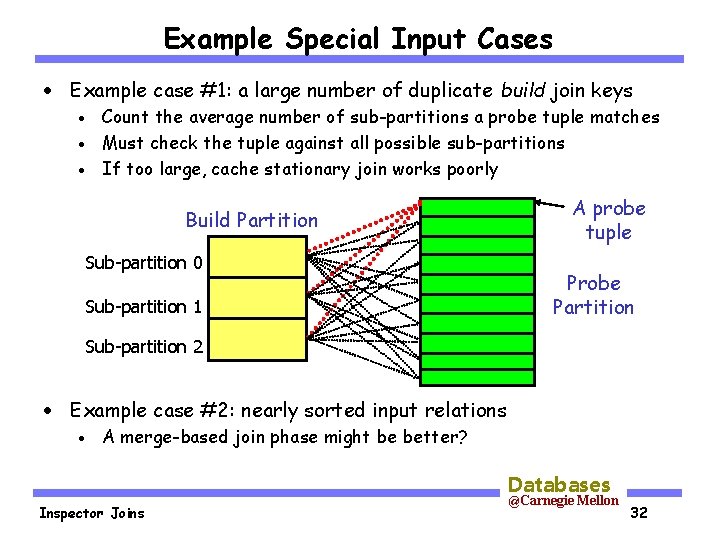

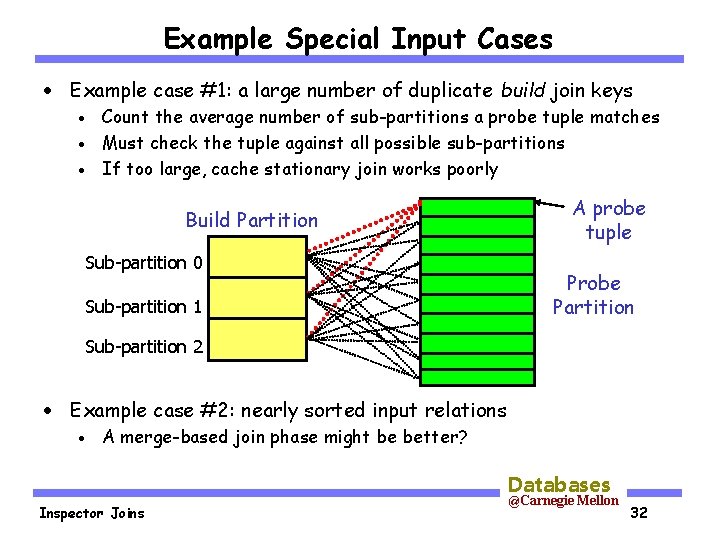

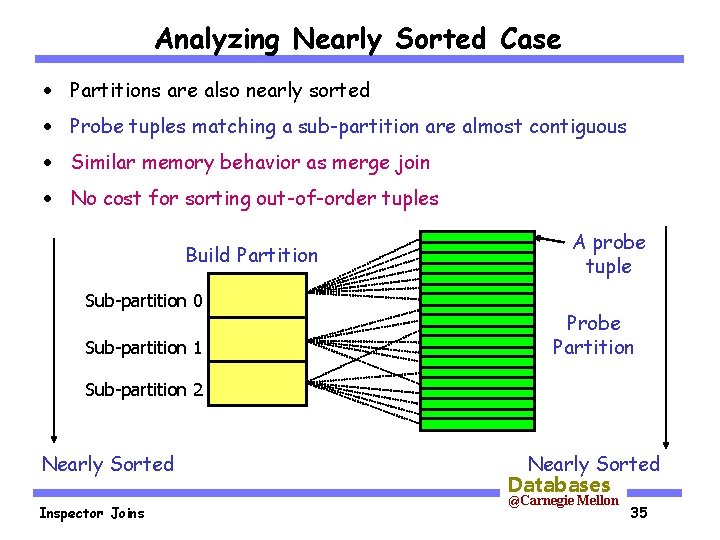

Example Special Input Cases · Example case #1: a large number of duplicate build join keys Count the average number of sub-partitions a probe tuple matches · Must check the tuple against all possible sub-partitions · If too large, cache stationary join works poorly · Build Partition Sub-partition 0 Sub-partition 1 A probe tuple Probe Partition Sub-partition 2 · Example case #2: nearly sorted input relations · A merge-based join phase might be better? Databases Inspector Joins @Carnegie Mellon 32

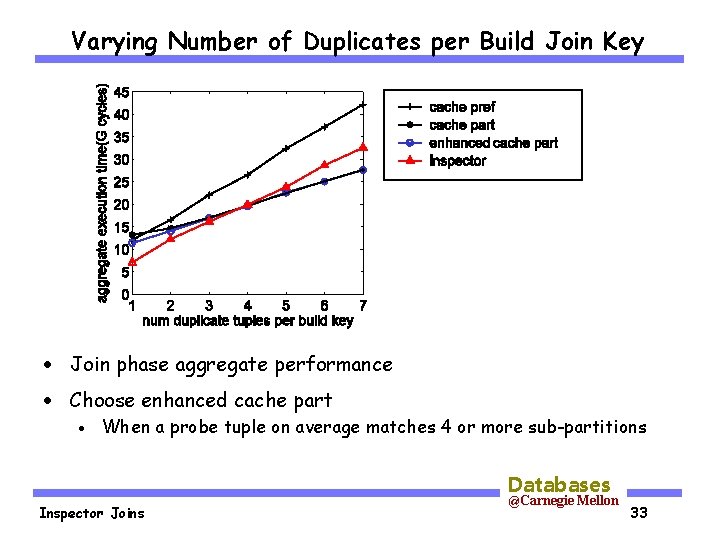

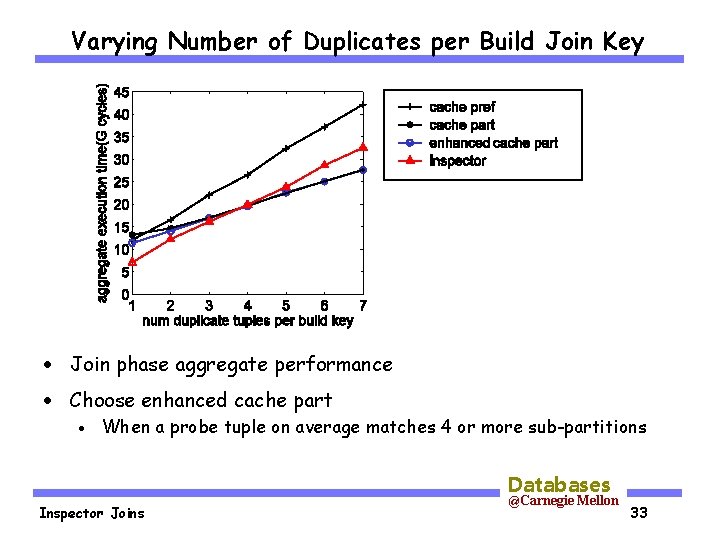

Varying Number of Duplicates per Build Join Key · Join phase aggregate performance · Choose enhanced cache part · When a probe tuple on average matches 4 or more sub-partitions Databases Inspector Joins @Carnegie Mellon 33

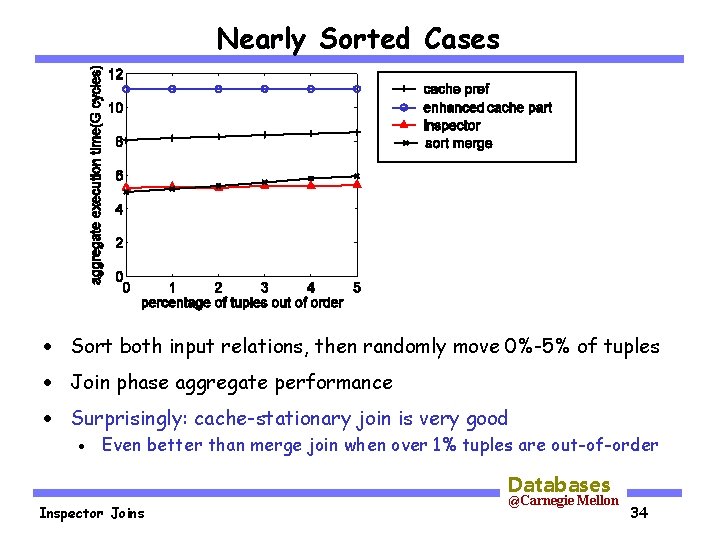

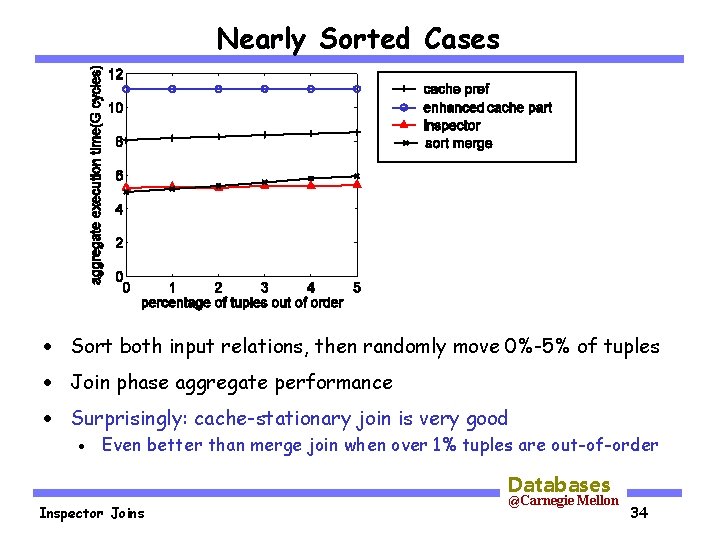

Nearly Sorted Cases · Sort both input relations, then randomly move 0%-5% of tuples · Join phase aggregate performance · Surprisingly: cache-stationary join is very good · Even better than merge join when over 1% tuples are out-of-order Databases Inspector Joins @Carnegie Mellon 34

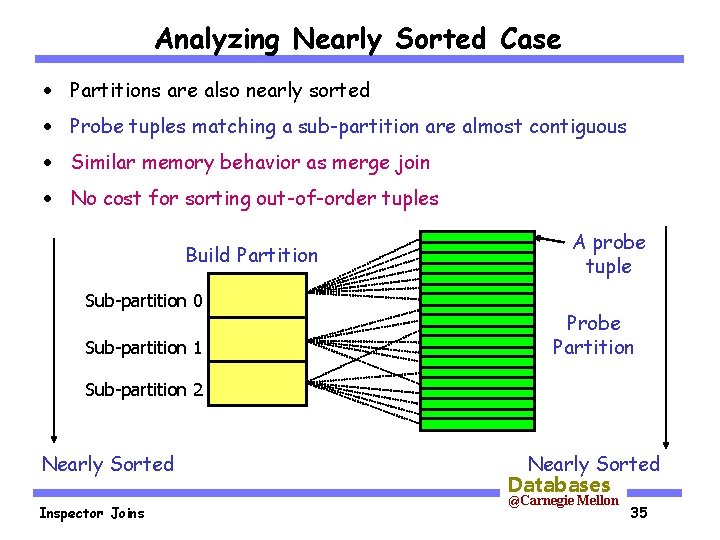

Analyzing Nearly Sorted Case · Partitions are also nearly sorted · Probe tuples matching a sub-partition are almost contiguous · Similar memory behavior as merge join · No cost for sorting out-of-order tuples Build Partition Sub-partition 0 Sub-partition 1 A probe tuple Probe Partition Sub-partition 2 Nearly Sorted Inspector Joins Nearly Sorted Databases @Carnegie Mellon 35

Anastassia gukova

Anastassia gukova Anastassia beliakova

Anastassia beliakova Chen chen berlin

Chen chen berlin Chapter 12 dna and rna

Chapter 12 dna and rna Zhuoyue zhao

Zhuoyue zhao Break definition

Break definition A _________bond joins amino acids together.

A _________bond joins amino acids together. These are words that are called joiners or connectors.

These are words that are called joiners or connectors. Method of joins

Method of joins Joins two words together

Joins two words together Two word conjunctions

Two word conjunctions Inner join

Inner join Interjection word

Interjection word Joins

Joins The inspector need not be a big man

The inspector need not be a big man Inspector of turns

Inspector of turns An inspector calls opening stage directions

An inspector calls opening stage directions Sheila birling character profile

Sheila birling character profile Alberta boilers safety association

Alberta boilers safety association Kahoot an inspector calls

Kahoot an inspector calls The inspector need not be a big man

The inspector need not be a big man Context of an inspector calls

Context of an inspector calls No he's giving us the rope

No he's giving us the rope Cohmed

Cohmed Denuncias ministerio de trabajo bolivia

Denuncias ministerio de trabajo bolivia Ofsted inspector professional indemnity insurance

Ofsted inspector professional indemnity insurance Edexcel gcse english literature past papers

Edexcel gcse english literature past papers An inspector calls act 1 questions

An inspector calls act 1 questions Paraview animation tutorial

Paraview animation tutorial Pharmacy regulation

Pharmacy regulation An inspector calls character

An inspector calls character An inspector calls summary act 2

An inspector calls summary act 2 Miners strike 1912

Miners strike 1912 Nys office of medicaid inspector general

Nys office of medicaid inspector general Inspector goole adjectives

Inspector goole adjectives Warm up to irony

Warm up to irony