FroNTier Integration & Testing @ CMS, Lee Lueking

- Slides: 22

FroNTier Integration & Testing @ CMS

Lee Lueking

3D DB Preparedness Workshop, Feb 6, 2006

Contents

- The Concept
- November Test Goals and Setup
- Test Results
- Next Steps
- Conclusions

Using Frontier w/ POOL

- The Object-to-Relational mapping provided in the original Frontier product (à la CDF) is not used.
- A query pass-through feature is added to the Frontier servlet.
- A POOL (CORAL) Oracle-Frontier plugin is provided that uses a special Frontier client library.
- Frontier features employed are:
  1. HTTP is used as the transport protocol from the Frontier server to the client.
  2. Caching is provided by Squid proxy/caching servers.
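The key to combining query pass-through with Squid caching is that each query becomes a deterministic HTTP GET URL, so identical queries share one cache entry. The sketch below illustrates that idea only; it is not Frontier's real wire protocol. The server URL, the `q` parameter, the table name and the helper functions are all hypothetical.

```python
import base64
import urllib.parse
import zlib

# Hypothetical endpoint: not a real CMS Frontier server.
FRONTIER_SERVER = "http://frontier.example.org:8000/Frontier/Frontier"


def encode_query(sql: str) -> str:
    """Compress the SQL text and make it URL-safe (illustrative encoding)."""
    compressed = zlib.compress(sql.encode("utf-8"))
    return base64.urlsafe_b64encode(compressed).decode("ascii")


def decode_query(token: str) -> str:
    """Inverse of encode_query, i.e. what a pass-through servlet would do."""
    return zlib.decompress(base64.urlsafe_b64decode(token)).decode("utf-8")


def build_request_url(sql: str, server: str = FRONTIER_SERVER) -> str:
    """Build a GET URL; the same query always yields the same URL (cache key)."""
    # 'q' is a hypothetical parameter name chosen for this sketch.
    params = urllib.parse.urlencode({"q": encode_query(sql)})
    return f"{server}?{params}"


if __name__ == "__main__":
    sql = "SELECT payload FROM ecal_pedestals WHERE iov_id = 42"  # hypothetical table
    url = build_request_url(sql)
    print(url)
    token = urllib.parse.parse_qs(urllib.parse.urlparse(url).query)["q"][0]
    print(decode_query(token) == sql)
```

Using GET with the query in the URL, rather than POST, is what lets an unmodified HTTP cache such as Squid store and reuse the responses.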

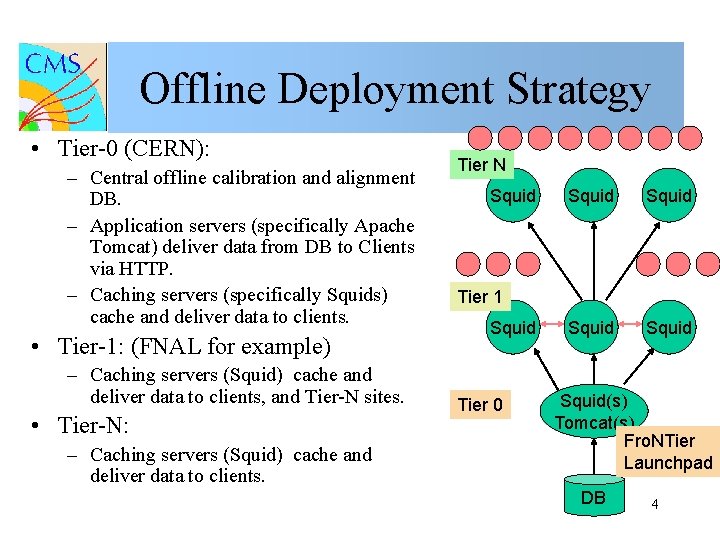

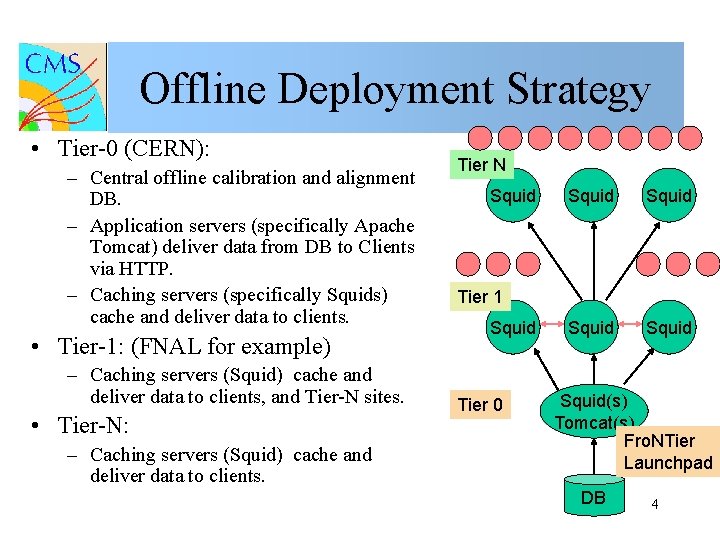

Offline Deployment Strategy

- Tier-0 (CERN):
  - Central offline calibration and alignment DB.
  - Application servers (specifically Apache Tomcat) deliver data from the DB to clients via HTTP.
  - Caching servers (specifically Squids) cache and deliver data to clients.
- Tier-1 (FNAL, for example):
  - Caching servers (Squid) cache and deliver data to clients and Tier-N sites.
- Tier-N:
  - Caching servers (Squid) cache and deliver data to clients.

[Figure: Tier-N Squids → Tier-1 Squid → Tier-0 FroNTier Launchpad (Squid(s) and Tomcat(s)) → DB.]
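The tiered caching described above can be modelled as a chain of caches in which each miss is forwarded to the parent tier, and only the top tier contacts the Tomcat/DB origin. The sketch below is a toy model under simplifying assumptions (unbounded caches, no expiry or HTTP cache-control). The class name `CacheTier` and the tier names are hypothetical.

```python
from typing import Callable, Dict, Optional


class CacheTier:
    """One caching layer (e.g. a Squid) that forwards misses to its parent."""

    def __init__(self, name: str, parent: Optional["CacheTier"] = None,
                 origin: Optional[Callable[[str], bytes]] = None) -> None:
        self.name = name
        self.parent = parent      # next tier up (Tier-N -> Tier-1 -> Tier-0)
        self.origin = origin      # only the top tier talks to Tomcat/DB
        self.store: Dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    def get(self, url: str) -> bytes:
        if url in self.store:
            self.hits += 1
            return self.store[url]
        self.misses += 1
        if self.parent is not None:
            data = self.parent.get(url)
        elif self.origin is not None:
            data = self.origin(url)
        else:
            raise LookupError(f"{self.name}: no parent or origin for {url}")
        self.store[url] = data    # keep a copy for later requests
        return data


if __name__ == "__main__":
    def origin(url: str) -> bytes:
        print("origin (Tomcat/DB) queried for", url)
        return b"payload for " + url.encode()

    tier0 = CacheTier("tier0-squid", origin=origin)
    tier1 = CacheTier("tier1-squid", parent=tier0)
    site_a = CacheTier("tierN-a", parent=tier1)
    site_b = CacheTier("tierN-b", parent=tier1)
    site_a.get("/q1")
    site_b.get("/q1")   # served by Tier-1, origin not contacted again
    site_a.get("/q1")   # served by the local Tier-N cache
```

In this model a second site requesting the same object is served from Tier-1, so the central DB sees each distinct query only once.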

November Test Goals

- Demonstrate the feasibility of deploying the Frontier infrastructure.
- Explore the maintenance and operation issues of the model.
- Test for the following:
  - Functionality
  - Performance
  - Reliability
  - Scalability
- Compare the Frontier approach to direct Oracle access.

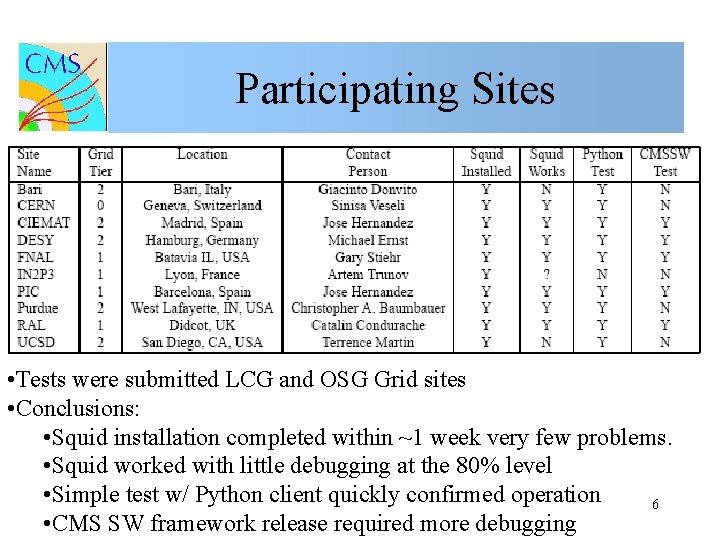

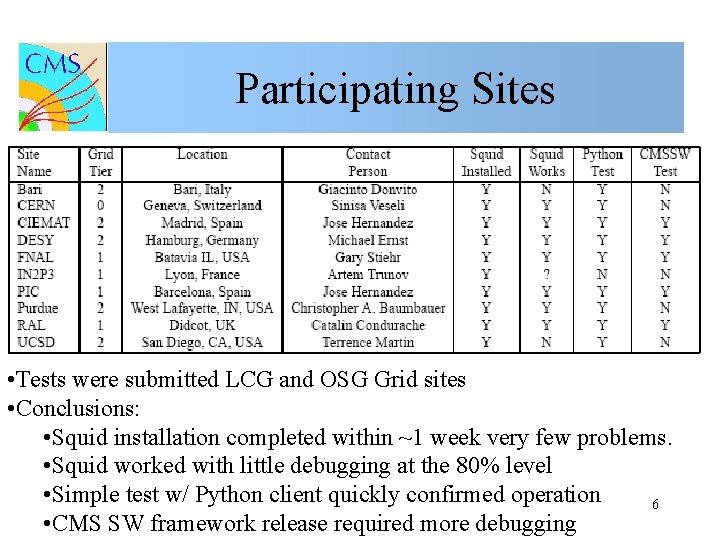

Participating Sites

- Tests were submitted to LCG and OSG Grid sites.
- Conclusions:
  - Squid installation was completed within ~1 week, with very few problems.
  - Squid worked with little debugging at the 80% level.
  - A simple test with the Python client quickly confirmed operation.
  - The CMSSW framework release required more debugging.
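A simple client-side check can confirm that responses are really coming through a site Squid. Squid normally reports cache status in an `X-Cache` response header (`HIT from <host>` or `MISS from <host>`). The sketch assumes that header is present; the helper names are hypothetical, and the URL and proxy passed in are placeholders, not the actual test configuration.

```python
import urllib.request
from typing import Mapping, Optional, Tuple


def cache_status(headers: Mapping[str, str]) -> Optional[str]:
    """Return 'HIT' or 'MISS' from a Squid X-Cache header, else None."""
    for key, value in headers.items():
        if key.lower() == "x-cache":
            parts = value.split()
            if parts and parts[0].upper() in ("HIT", "MISS"):
                return parts[0].upper()
    return None


def fetch_via_proxy(url: str, proxy: str,
                    timeout: float = 30.0) -> Tuple[int, Optional[str]]:
    """Fetch url through an HTTP proxy; return (HTTP status, cache status)."""
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy}))
    with opener.open(url, timeout=timeout) as resp:
        return resp.status, cache_status(dict(resp.headers.items()))
```

Fetching the same URL twice through the local Squid and seeing `MISS` and then `HIT` would indicate a working cache, provided the server's responses are cacheable.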

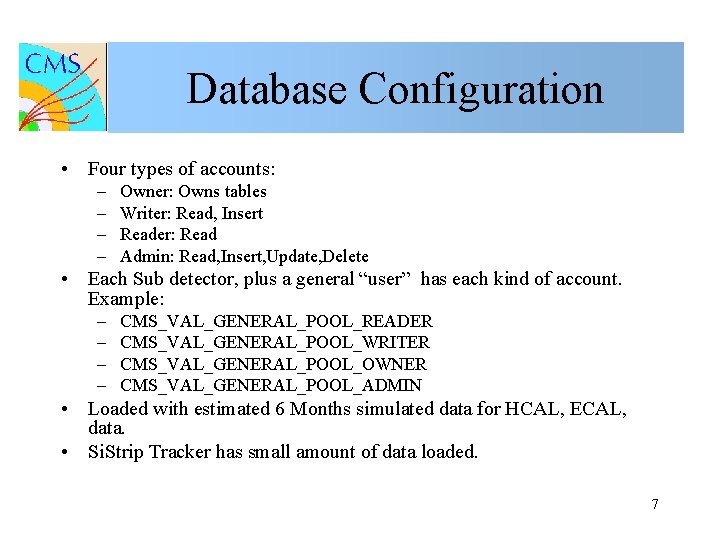

Database Configuration

- Four types of accounts:
  - Owner: owns tables
  - Writer: read, insert
  - Reader: read
  - Admin: read, insert, update, delete
- Each subdetector, plus a general “user”, has each kind of account. Example:
  - CMS_VAL_GENERAL_POOL_READER
  - CMS_VAL_GENERAL_POOL_WRITER
  - CMS_VAL_GENERAL_POOL_OWNER
  - CMS_VAL_GENERAL_POOL_ADMIN
- Loaded with an estimated 6 months of simulated data for HCAL and ECAL.
- The SiStrip Tracker has a small amount of data loaded.
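The account scheme can be written down as a role-to-privilege table plus the naming pattern from the example. The sketch below is hypothetical: it maps the slide's read/insert/update/delete rights onto Oracle `SELECT`/`INSERT`/`UPDATE`/`DELETE` and generates `GRANT` statements for an illustrative table name. It is not the actual CMS setup script.

```python
from typing import Dict, FrozenSet, List

# Rights per non-owner account type, as listed on the slide.
# OWNER is omitted: it owns the tables and so has full rights on them.
ROLE_PRIVILEGES: Dict[str, FrozenSet[str]] = {
    "WRITER": frozenset({"SELECT", "INSERT"}),
    "READER": frozenset({"SELECT"}),
    "ADMIN": frozenset({"SELECT", "INSERT", "UPDATE", "DELETE"}),
}
ROLES = ("OWNER",) + tuple(ROLE_PRIVILEGES)


def account_name(subdetector: str, role: str) -> str:
    """Follow the CMS_VAL_<SUBDETECTOR>_POOL_<ROLE> naming pattern."""
    if role not in ROLES:
        raise ValueError(f"unknown role {role!r}")
    return f"CMS_VAL_{subdetector.upper()}_POOL_{role}"


def grant_statements(subdetector: str, table: str) -> List[str]:
    """Oracle GRANTs giving each non-owner account its rights on one
    table in the owner's schema (the table name is hypothetical)."""
    owner = account_name(subdetector, "OWNER")
    stmts = []
    for role, privs in ROLE_PRIVILEGES.items():
        priv_list = ", ".join(sorted(privs))
        grantee = account_name(subdetector, role)
        stmts.append(f"GRANT {priv_list} ON {owner}.{table} TO {grantee}")
    return stmts


if __name__ == "__main__":
    for stmt in grant_statements("ecal", "PEDESTALS"):
        print(stmt)
```

Keeping writes in a separate account from reads means the widely distributed reader credentials cannot modify calibration data.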

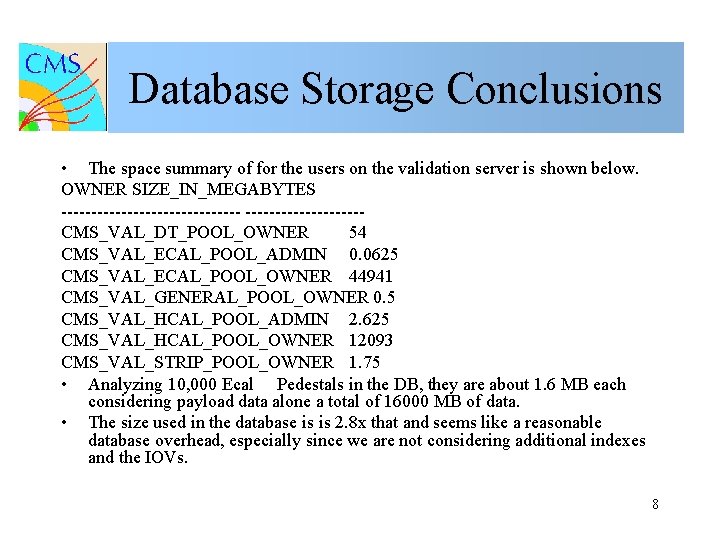

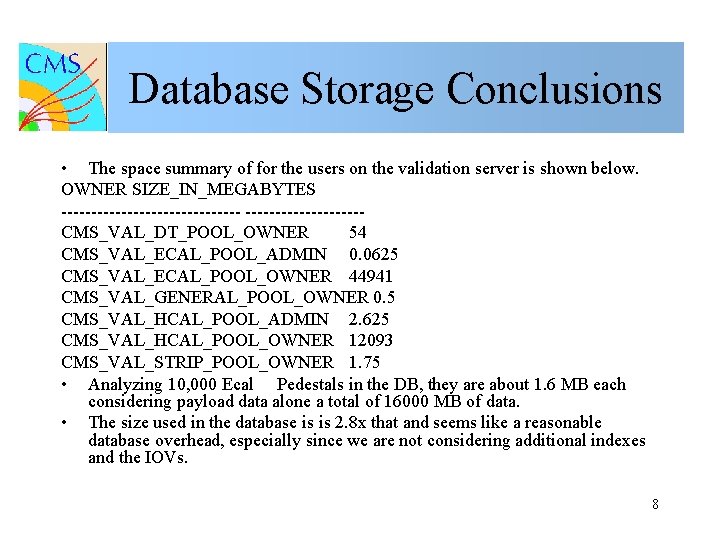

Database Storage Conclusions

- The space summary for the users on the validation server is shown below.

| OWNER | SIZE_IN_MEGABYTES |
|---|---|
| CMS_VAL_DT_POOL_OWNER | 54 |
| CMS_VAL_ECAL_POOL_ADMIN | 0.0625 |
| CMS_VAL_ECAL_POOL_OWNER | 44941 |
| CMS_VAL_GENERAL_POOL_OWNER | 0.5 |
| CMS_VAL_HCAL_POOL_ADMIN | 2.625 |
| CMS_VAL_HCAL_POOL_OWNER | 12093 |
| CMS_VAL_STRIP_POOL_OWNER | 1.75 |

- Analyzing the 10,000 ECAL pedestals in the DB: they are about 1.6 MB each, considering payload data alone, for a total of 16,000 MB of data.
- The size used in the database is 2.8× that, which seems a reasonable database overhead, especially since we are not considering additional indexes and the IOVs.
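The 2.8× figure follows from the table: the ECAL owner schema uses 44,941 MB, against 10,000 × 1.6 MB = 16,000 MB of payload. As a small worked check (all numbers copied from the slide):

```python
# Figures from the slide: 10,000 ECAL pedestal objects of ~1.6 MB payload
# each, and 44,941 MB used by CMS_VAL_ECAL_POOL_OWNER.
N_OBJECTS = 10_000
PAYLOAD_MB_PER_OBJECT = 1.6
ECAL_OWNER_MB = 44_941

payload_mb = N_OBJECTS * PAYLOAD_MB_PER_OBJECT   # about 16,000 MB of payload
overhead = ECAL_OWNER_MB / payload_mb            # database size / payload size

print(f"payload = {payload_mb:.0f} MB, overhead factor = {overhead:.2f}")
```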

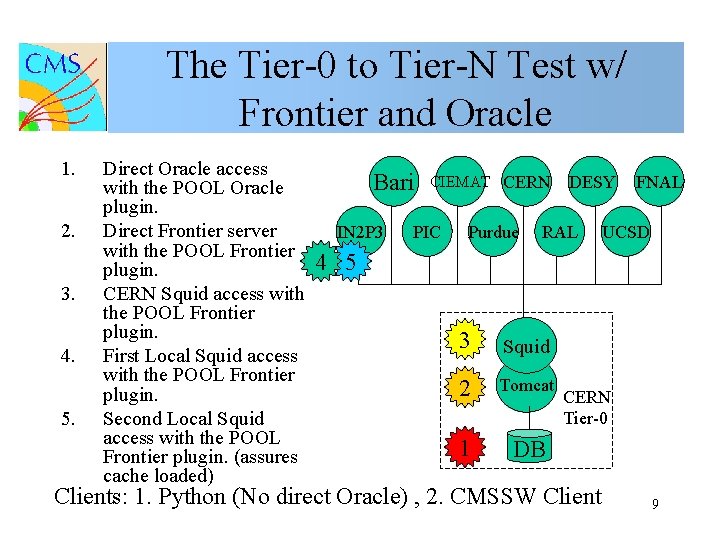

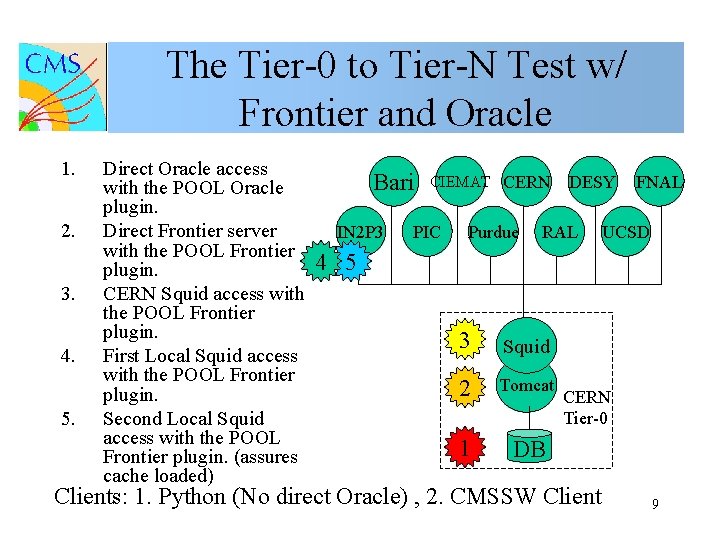

The Tier-0 to Tier-N Test w/ Frontier and Oracle

1. Direct Oracle access with the POOL Oracle plugin.
2. Direct Frontier server access with the POOL Frontier plugin.
3. CERN Squid access with the POOL Frontier plugin.
4. First local Squid access with the POOL Frontier plugin.
5. Second local Squid access with the POOL Frontier plugin (assures the cache is loaded).

[Figure: client sites Bari, IN2P3, CIEMAT, CERN, PIC, Purdue, DESY, RAL, FNAL and UCSD reading from the CERN Tier-0 (1 DB, 2 Tomcat, 3 Squid), with access paths 4 and 5 through each site's local Squid.]

Clients: (1) Python (no direct Oracle access); (2) CMSSW client.
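The five access modes can be compared with a simple timing harness that calls each mode repeatedly; repeating the call is what separates a first (cache-filling) access from a second (cache-loaded) one. This is a sketch only: the callables passed in are placeholders for whatever wraps the POOL Oracle or Frontier plugin reads, and `time_accesses` and `compare_modes` are hypothetical names.

```python
import time
from typing import Callable, Dict, List


def time_accesses(fetch: Callable[[], object], repeats: int = 2) -> List[float]:
    """Call fetch() `repeats` times and return the wall-clock time of each call.

    With a caching proxy in the path, the first call may fill the cache and
    later calls measure cache-loaded performance (cf. modes 4 and 5).
    """
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fetch()
        timings.append(time.perf_counter() - start)
    return timings


def compare_modes(modes: Dict[str, Callable[[], object]],
                  repeats: int = 2) -> Dict[str, List[float]]:
    """Time each named access mode, e.g. {'1 direct Oracle': ..., '5 local Squid': ...}."""
    return {name: time_accesses(fetch, repeats) for name, fetch in modes.items()}
```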

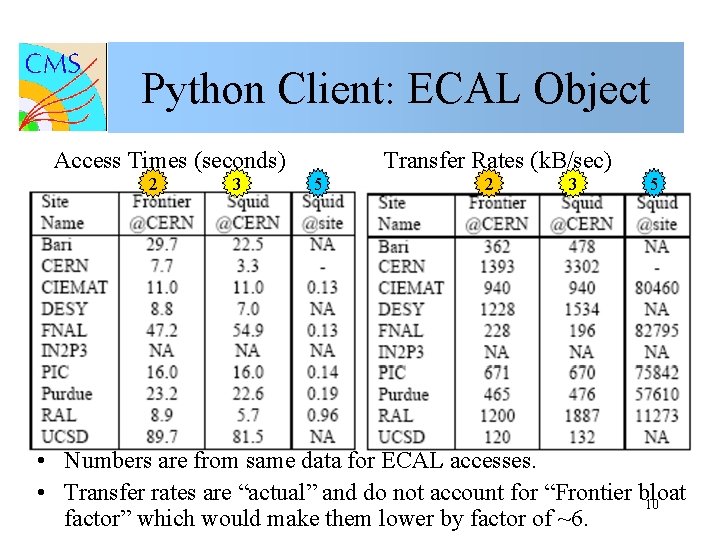

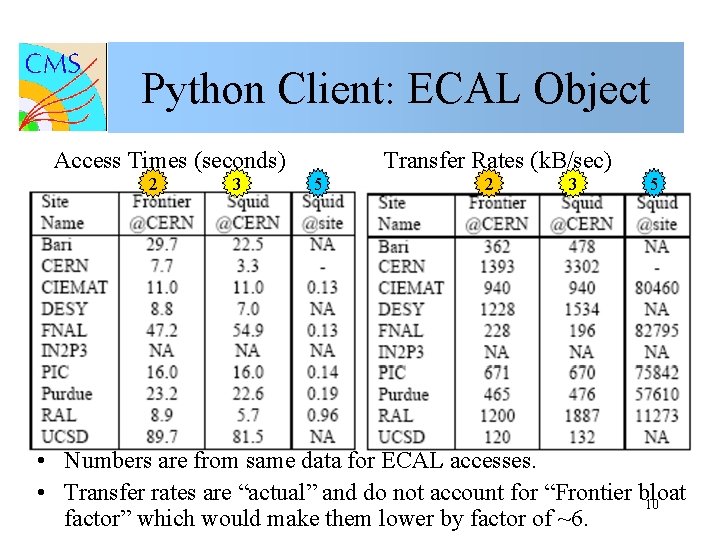

Python Client: ECAL Object Access Times and Transfer Rates

[Figure: ECAL object access times (seconds) and transfer rates (kB/sec) per site, for access modes 2, 3 and 5.]

- Numbers are from the same data for ECAL accesses.
- Transfer rates are “actual” and do not account for the “Frontier bloat factor”, which would make them lower by a factor of ~6.
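The bloat correction is a single division: if the encoded response is *b* times larger than the raw object, only 1/*b* of the bytes on the wire are payload. A minimal sketch (the function name and the sample rate are illustrative, not measurements):

```python
def payload_rate(wire_rate_kb_s: float, bloat_factor: float) -> float:
    """Convert a measured wire transfer rate into a useful-payload rate."""
    if bloat_factor < 1:
        raise ValueError("bloat factor must be >= 1")
    return wire_rate_kb_s / bloat_factor


if __name__ == "__main__":
    # Illustrative only: a hypothetical 6,000 kB/s wire rate with bloat ~6.
    print(payload_rate(6000.0, 6.0), "kB/s of payload")
```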

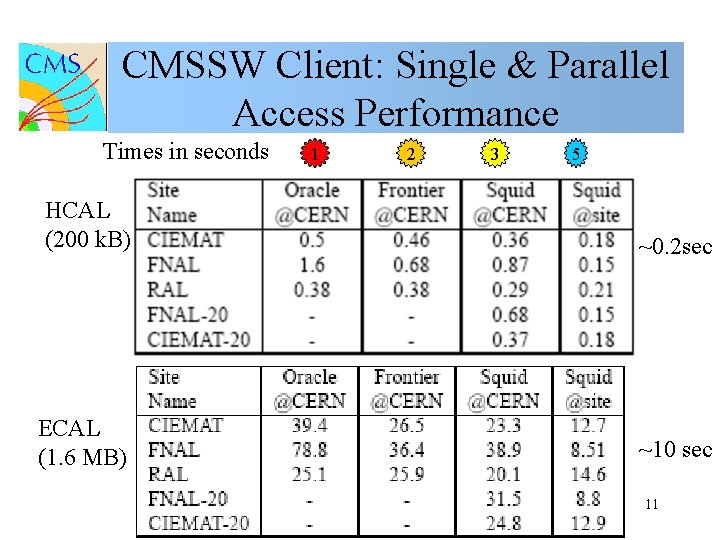

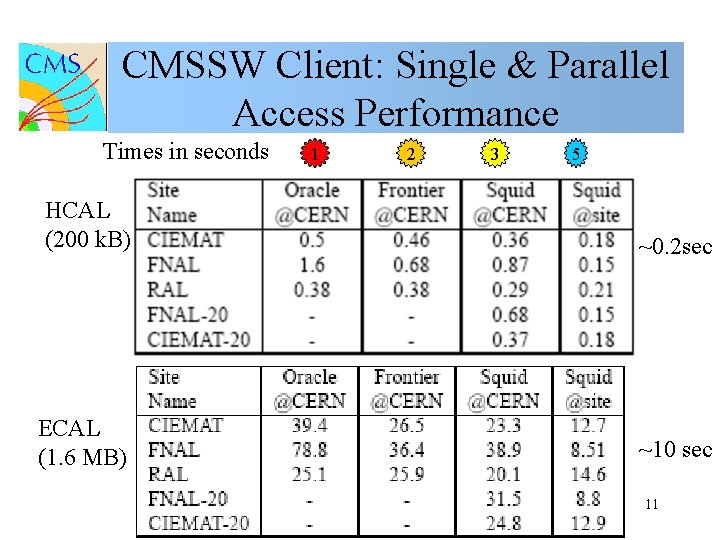

CMSSW Client: Single & Parallel Access Performance

[Figure: access times in seconds for modes 1, 2, 3 and 5.]

- HCAL (200 kB): ~0.2 sec
- ECAL (1.6 MB): ~10 sec
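A parallel-access test can be approximated in a single process by starting N client reads concurrently and comparing the wall time with the per-client times. In the sketch below, threads stand in for the separate CMSSW jobs used in the real test, and `read` is a placeholder for one object access; both are assumptions for illustration, not the test code.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple


def run_clients(read: Callable[[], object],
                n_clients: int) -> Tuple[float, List[float]]:
    """Run n_clients concurrent reads; return (wall time, per-client times)."""

    def one_client() -> float:
        start = time.perf_counter()
        read()
        return time.perf_counter() - start

    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=n_clients) as pool:
        futures = [pool.submit(one_client) for _ in range(n_clients)]
        per_client = [f.result() for f in futures]
    return time.perf_counter() - start, per_client
```

If per-client times grow noticeably as N increases, the shared server or cache, rather than the client, is becoming the bottleneck.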

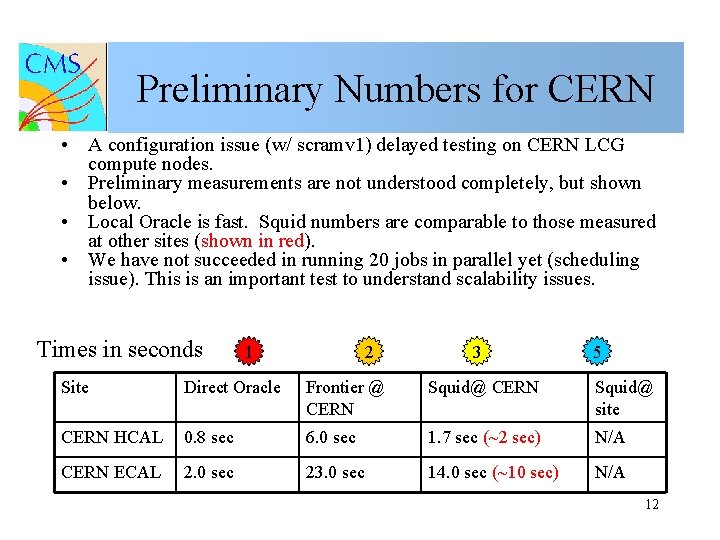

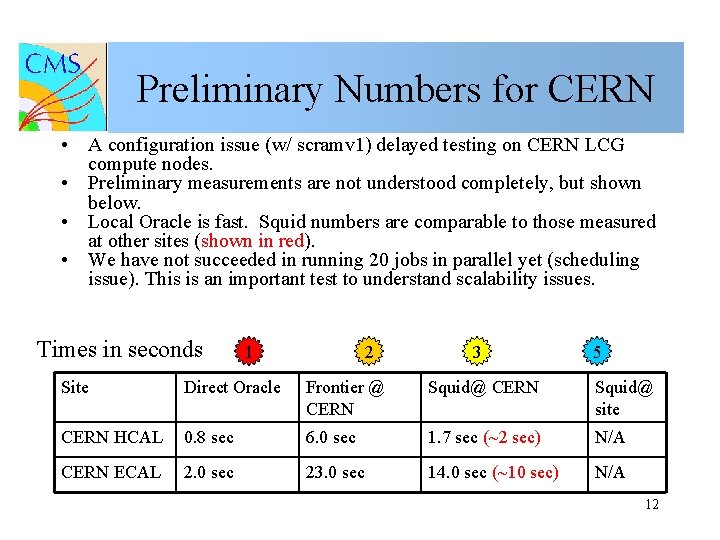

Preliminary Numbers for CERN

- A configuration issue (w/ scramv1) delayed testing on CERN LCG compute nodes.
- Preliminary measurements are not completely understood, but are shown below.
- Local Oracle is fast. Squid numbers are comparable to those measured at other sites (shown in parentheses in the table).
- We have not yet succeeded in running 20 jobs in parallel (scheduling issue). This is an important test for understanding scalability issues.

Times in seconds:

| Site | Direct Oracle (1) | Frontier (2) | Squid @ CERN (3) | Squid @ site (5) |
|---|---|---|---|---|
| CERN HCAL | 0.8 | 6.0 | 1.7 (~2) | N/A |
| CERN ECAL | 2.0 | 23.0 | 14.0 (~10) | N/A |

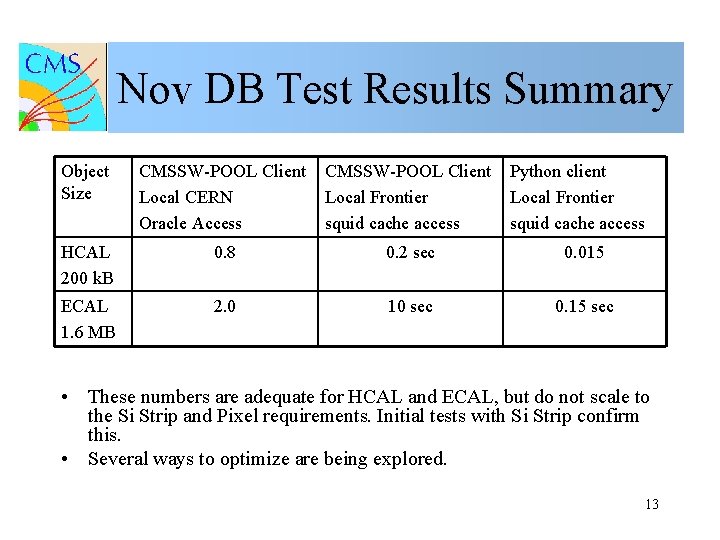

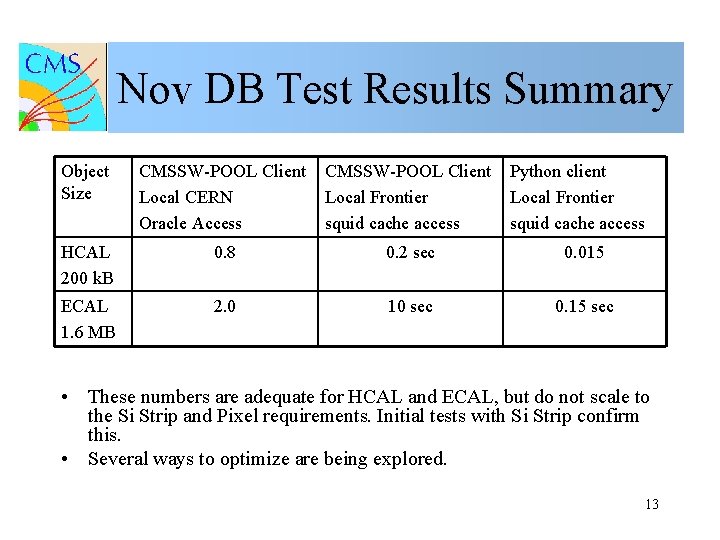

Nov DB Test Results Summary

| Object | Size | CMSSW-POOL client, local CERN Oracle access (sec) | CMSSW-POOL client, local Frontier Squid cache access (sec) | Python client, local Frontier Squid cache access (sec) |
|---|---|---|---|---|
| HCAL | 200 kB | 0.8 | 0.2 | 0.015 |
| ECAL | 1.6 MB | 2.0 | 10 | 0.15 |

- These numbers are adequate for HCAL and ECAL, but do not scale to the SiStrip and Pixel requirements. Initial tests with SiStrip confirm this.
- Several ways to optimize are being explored.
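Dividing object size by access time gives the effective rates behind this table, and a linear extrapolation shows why the larger SiStrip (~10 MB) and Pixel (~100 MB) objects are a concern. The extrapolation assumes that access time grows in proportion to object size; this is an assumption made purely for illustration, not a measured result.

```python
# Measured values from the summary table (size in kB, times in seconds).
MEASURED = {
    "HCAL": {"size_kb": 200, "cmssw_squid_s": 0.2, "python_squid_s": 0.015},
    "ECAL": {"size_kb": 1600, "cmssw_squid_s": 10.0, "python_squid_s": 0.15},
}


def rate_kb_s(size_kb: float, seconds: float) -> float:
    """Effective transfer rate for one object access."""
    return size_kb / seconds


def naive_extrapolation(size_kb: float, ref_size_kb: float,
                        ref_seconds: float) -> float:
    """Assume time scales linearly with size (an assumption, not a measurement)."""
    return ref_seconds * size_kb / ref_size_kb


if __name__ == "__main__":
    for name, m in MEASURED.items():
        print(name,
              "CMSSW:", round(rate_kb_s(m["size_kb"], m["cmssw_squid_s"])), "kB/s,",
              "Python:", round(rate_kb_s(m["size_kb"], m["python_squid_s"])), "kB/s")
    # Object sizes for SiStrip and Pixel are taken from the conclusions slide.
    ecal = MEASURED["ECAL"]
    for name, size_kb in (("SiStrip", 10_000), ("Pixel", 100_000)):
        t = naive_extrapolation(size_kb, ecal["size_kb"], ecal["cmssw_squid_s"])
        print(f"{name}: ~{t:.0f} s per object if CMSSW time scaled like ECAL")
```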

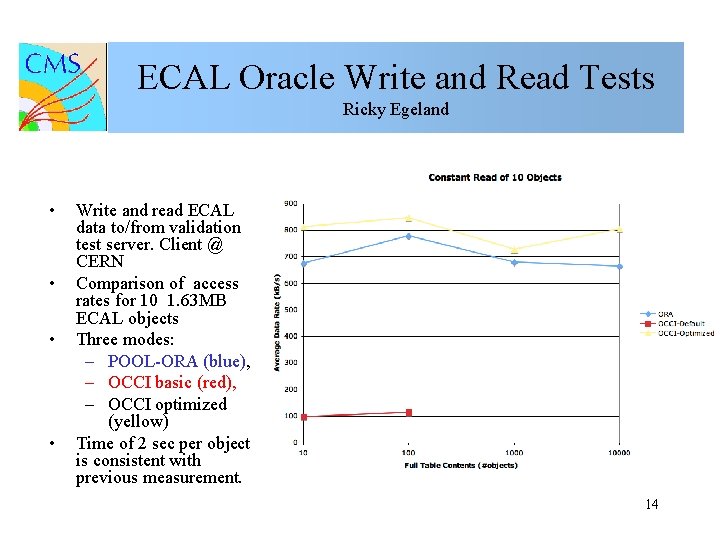

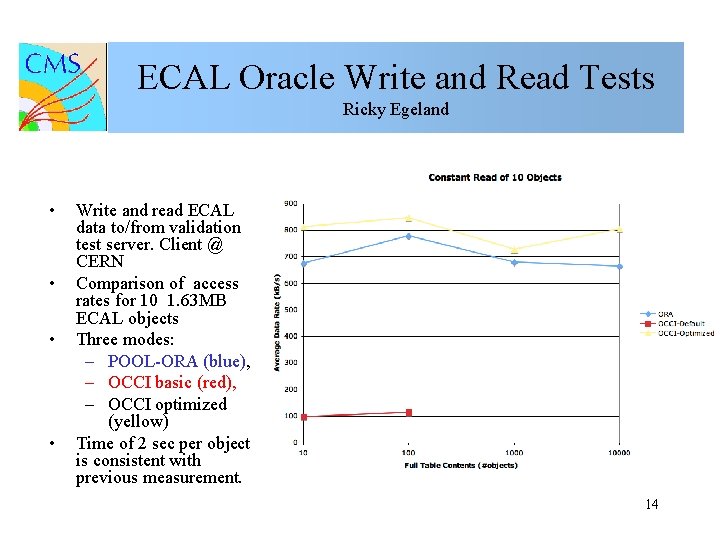

ECAL Oracle Write and Read Tests (Ricky Egeland)

- Write and read ECAL data to/from the validation test server.
- Client @ CERN.
- Comparison of access rates for ten 1.63 MB ECAL objects in three modes:
  - POOL-ORA (blue)
  - OCCI basic (red)
  - OCCI optimized (yellow)
- A time of 2 sec per object is consistent with the previous measurement.

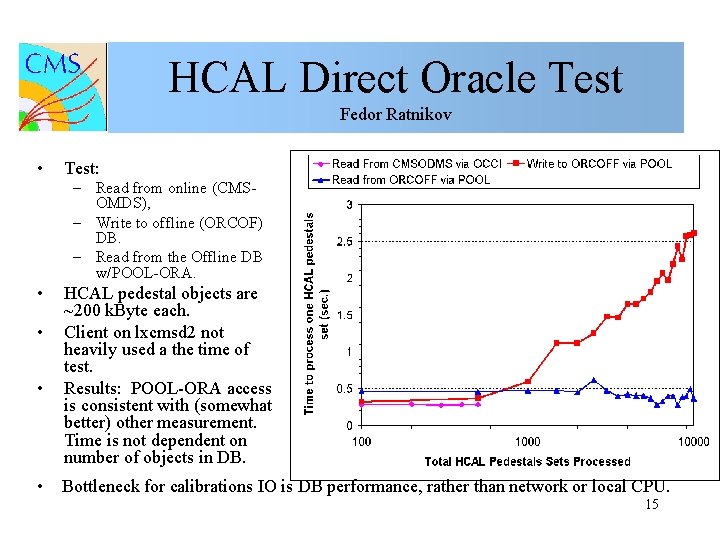

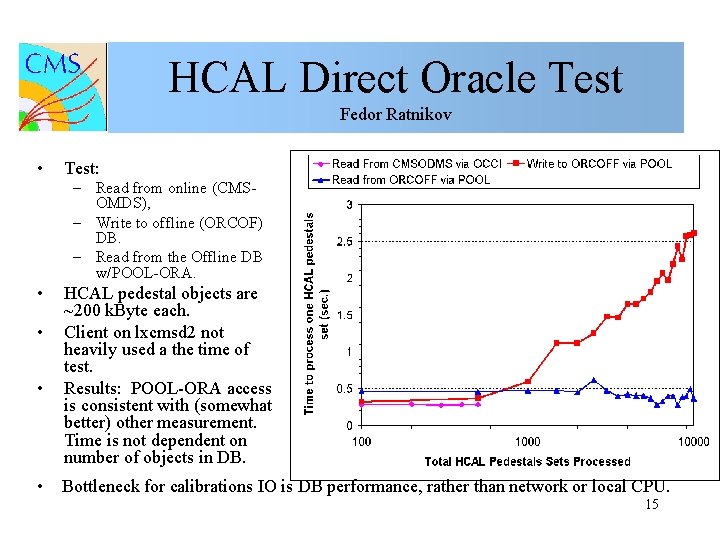

HCAL Direct Oracle Test (Fedor Ratnikov)

- Test:
  - Read from the online DB (CMSOMDS).
  - Write to the offline DB (ORCOF).
  - Read from the offline DB w/ POOL-ORA.
- HCAL pedestal objects are ~200 kB each.
- The client on lxcmsd2 was not heavily used at the time of the test.
- Results: POOL-ORA access is consistent with (somewhat better than) other measurements. Time does not depend on the number of objects in the DB.
- The bottleneck for calibration I/O is DB performance, rather than network or local CPU.

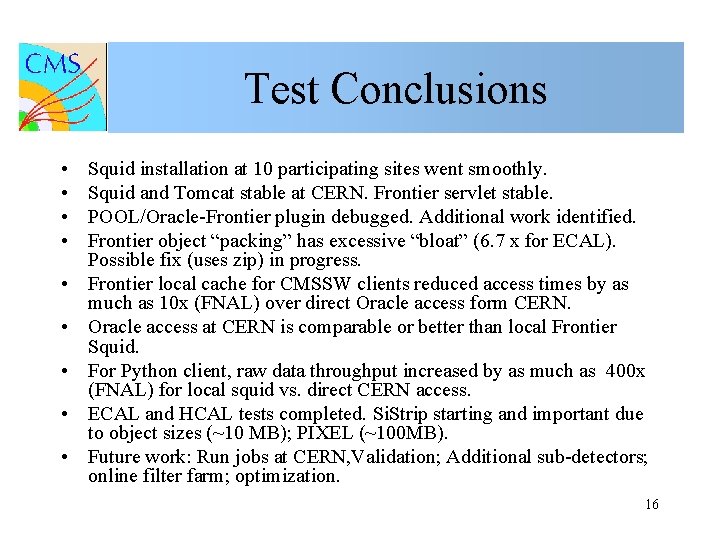

Test Conclusions

- Squid installation at 10 participating sites went smoothly.
- Squid and Tomcat are stable at CERN.
- The Frontier servlet is stable.
- The POOL/Oracle-Frontier plugin has been debugged; additional work was identified.
- Frontier object “packing” has excessive “bloat” (6.7× for ECAL). A possible fix (using zip) is in progress.
- The local Frontier cache for CMSSW clients reduced access times by as much as 10× (FNAL) compared with direct Oracle access from CERN.
- Oracle access at CERN is comparable to or better than the local Frontier Squid.
- For the Python client, raw data throughput increased by as much as 400× (FNAL) for the local Squid vs. direct CERN access.
- ECAL and HCAL tests are completed. SiStrip testing is starting and is important due to its object sizes (~10 MB), as is Pixel (~100 MB).
- Future work: run jobs at CERN; validation; additional subdetectors; online filter farm; optimization.
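Why a compression-based fix can help with packing bloat is easy to illustrate on synthetic data. The sketch below uses a hypothetical fixed-width text encoding (not Frontier's actual packing format) that is 6.25× larger than the raw 4-byte floats, then compresses it with `zlib`. The data values are invented and highly repetitive, so the gain shown is optimistic compared with real calibration payloads.

```python
import struct
import zlib
from typing import Sequence


def text_encode(values: Sequence[float]) -> bytes:
    """Hypothetical verbose encoding: one fixed-width text line per value."""
    return "".join(f"{v:24.17e}\n" for v in values).encode("ascii")


def binary_encode(values: Sequence[float]) -> bytes:
    """Raw payload: little-endian 4-byte IEEE floats (the useful bytes)."""
    return struct.pack(f"<{len(values)}f", *values)


def bloat(encoded: bytes, raw: bytes) -> float:
    """Size of an encoding relative to the raw payload."""
    return len(encoded) / len(raw)


if __name__ == "__main__":
    # Synthetic pedestal-like values (invented for illustration).
    values = [200.0 + (i % 7) * 0.5 for i in range(10_000)]
    raw = binary_encode(values)
    encoded = text_encode(values)
    zipped = zlib.compress(encoded, 9)
    print(f"bloat before zip: {bloat(encoded, raw):.2f}x, "
          f"after zip: {bloat(zipped, raw):.2f}x")
```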

Next Steps

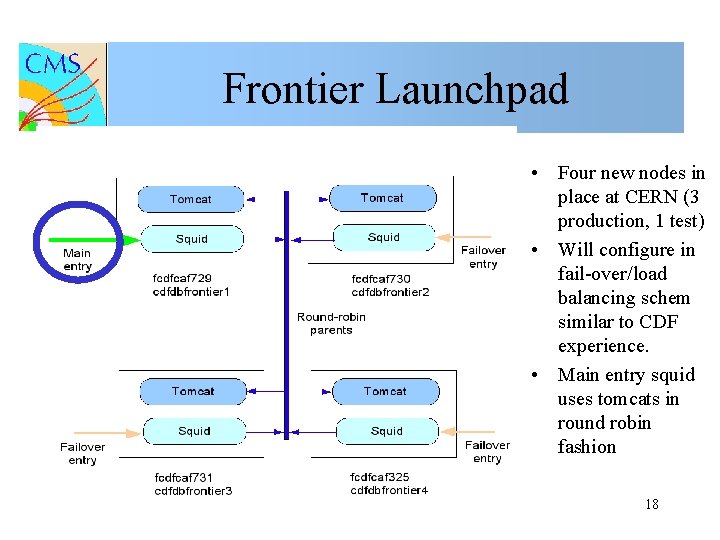

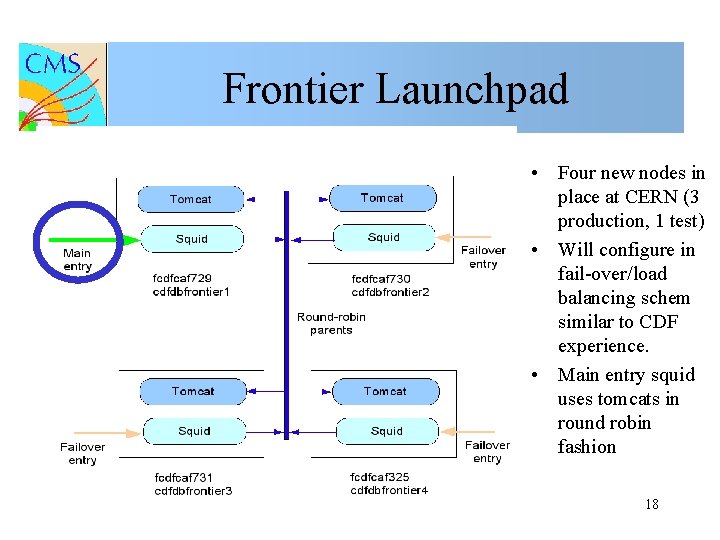

Frontier Launchpad

- Four new nodes are in place at CERN (3 production, 1 test).
- They will be configured in a fail-over/load-balancing scheme similar to the CDF experience.
- The main entry Squid uses the Tomcats in round-robin fashion.
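Round-robin distribution with fail-over, as planned for the entry Squid in front of the Tomcats, can be sketched as follows. In the real deployment this is Squid configuration rather than client code; the class and server names here are hypothetical and only illustrate the selection logic.

```python
import itertools
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")


class RoundRobinFailover:
    """Send each request to the next back-end in turn, skipping failed ones."""

    def __init__(self, servers: Iterable[str]) -> None:
        self.servers: List[str] = list(servers)
        if not self.servers:
            raise ValueError("need at least one server")
        self._cycle = itertools.cycle(range(len(self.servers)))

    def request(self, send: Callable[[str], T]) -> T:
        """Try each server at most once, starting from the next in rotation."""
        start = next(self._cycle)
        errors = []
        for offset in range(len(self.servers)):
            server = self.servers[(start + offset) % len(self.servers)]
            try:
                return send(server)
            except OSError as exc:  # e.g. connection refused or timeout
                errors.append(f"{server}: {exc}")
        raise ConnectionError("all servers failed: " + "; ".join(errors))


if __name__ == "__main__":
    pool = RoundRobinFailover(["tomcat1", "tomcat2", "tomcat3"])  # hypothetical
    print([pool.request(lambda s: s) for _ in range(4)])
```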

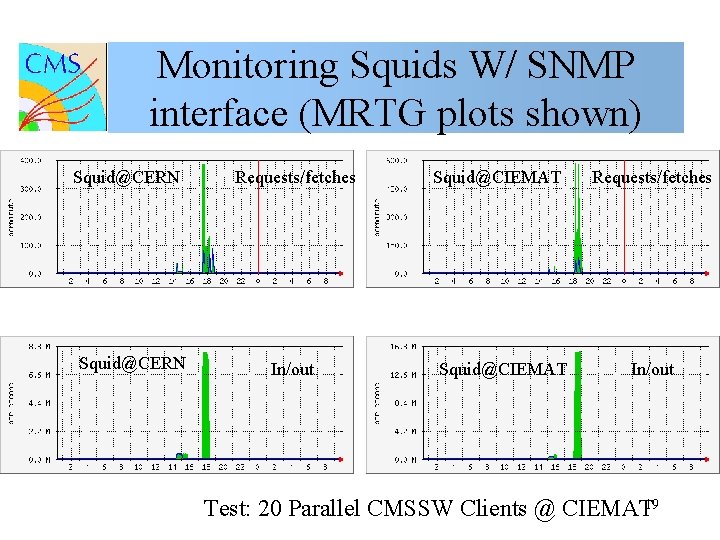

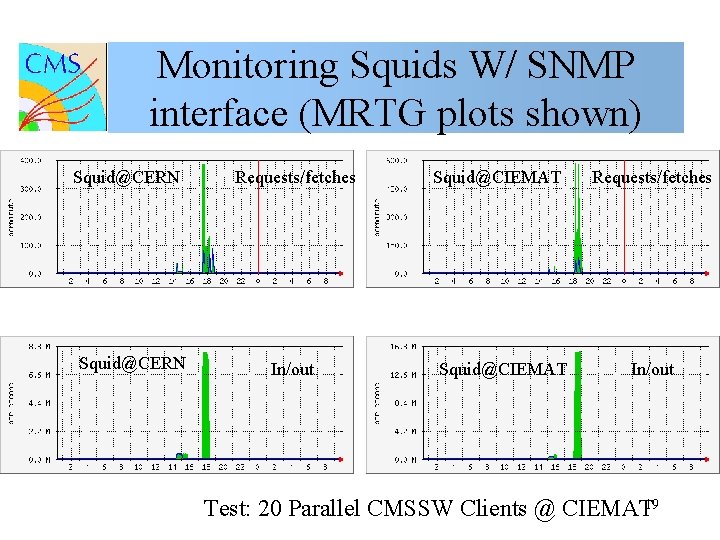

Monitoring Squids w/ SNMP interface (MRTG plots shown)

[Figure: MRTG plots of requests/fetches and in/out traffic for Squid@CERN and Squid@CIEMAT, during a test with 20 parallel CMSSW clients @ CIEMAT.]
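MRTG-style plots are built from SNMP counters that only ever increase, so a rate is the difference between two samples divided by the polling interval, with a correction when a 32-bit counter wraps. A minimal sketch of that calculation (the function name is hypothetical; which Squid SNMP counters were plotted is not stated on the slide):

```python
COUNTER32_MODULUS = 2**32


def counter_rate(prev: int, curr: int, interval_s: float,
                 modulus: int = COUNTER32_MODULUS) -> float:
    """Per-second rate from two samples of a monotonically increasing counter.

    A negative difference is treated as a single wrap of the counter. A
    counter reset (e.g. after a Squid restart) also gives a negative
    difference and cannot be told apart from a wrap here.
    """
    if interval_s <= 0:
        raise ValueError("interval must be positive")
    delta = curr - prev
    if delta < 0:
        delta += modulus
    return delta / interval_s


if __name__ == "__main__":
    # Two hypothetical request-counter samples taken 300 s apart.
    print(counter_rate(1_000, 4_000, 300), "requests/s")
```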

Frontier Servers @ CERN

- Dirk Duellmann has already requested 4 boxes (3 for production and 1 for the testbed).
- He is in contact with FIO about the automated installation (quattor) of the additional s/w (Tomcat/Squid), which could potentially be quattorised as well.
- Proposal (caveat: using the Frontier caching is still under CMS review):
  - CMS will set up and run Frontier on the production machines initially, and only require basic OS-level services from IT for these boxes.
  - This will be reviewed after some operational experience (e.g. at the summer database workshop, when deciding on the full production setup).
  - A contact in CMS is needed who is responsible for the setup and initial support.
  - Squid deployment and support at Tier-N centers: CMS will provide a written description of our (CMS) needs/plan so it can be incorporated into the WLCG planning.

Conclusions

- An approach using POOL with a Frontier caching mechanism has been implemented.
- Testing has indicated that the deployment model works and the software is functional.
- Performance for local Frontier (Squid) access and local Oracle access is comparable for single-client tests. Multi-client testing is still in progress.
- A phase 1 deployment plan is in progress and will include failover and load-balancing features to extend the reliability and performance of the system.
- There is still work to be done…

Finish