ATLAS FroNTier stress tests at CERN, April 2010


- Slides: 8

ATLAS FroNTier stress tests at CERN, April 2010. David Front, Weizmann Institute

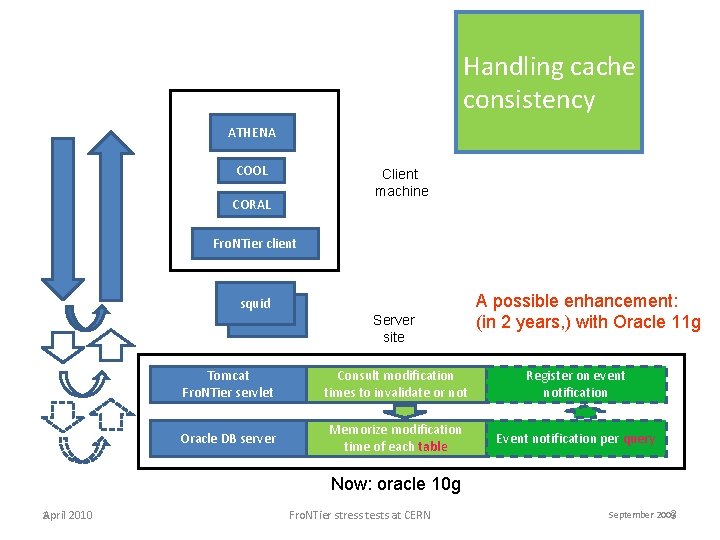

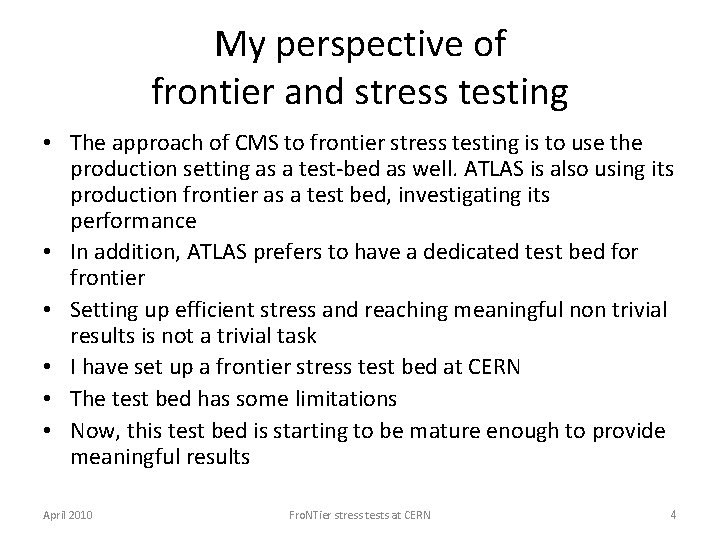

My perspective of frontier and stress testing

- About a year ago, I started to work 20-25% of my time for LCG, via Andrea Valassi from IT, ES. It has been agreed with Sasha Vanyashin and Elizabeth Gallas that I'll work on ATLAS frontier. Andrea is also interested in comparing the performance of frontier against CORAL server. For the rest of my time, I work for TGC DB SW.
- I first worked on cache consistency:
  - ATLAS re-uses the CMS solution: a proprietary mechanism based on per-table modification times. This should be accurate but not efficient.
  - As of Oracle 11g (to be deployed in about 2 years), which offers enhanced per-query event notification, I suggest looking into enhancing frontier cache consistency accordingly.

Handling cache consistency (diagram dated September 2009)

- Client machine: ATHENA, COOL, CORAL, FroNTier client
- squid
- Server site: Tomcat with the FroNTier servlet, Oracle DB server
- Now (Oracle 10g): the Oracle DB server memorizes the modification time of each table; the FroNTier servlet consults the modification times to decide whether to invalidate or not
- A possible enhancement (in 2 years, with Oracle 11g): the servlet registers on event notification; the DB server sends an event notification per query
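The "consult modification times to invalidate or not" step can be pictured with a minimal Python sketch. This is not the FroNTier or squid implementation: the class `ModTimeCache`, its methods, and the table and query names are hypothetical and exist only to illustrate per-table invalidation.

```python
from dataclasses import dataclass, field


@dataclass
class ModTimeCache:
    """Hypothetical query cache invalidated by per-table modification times."""
    table_mtime: dict = field(default_factory=dict)  # table name -> last modification time
    entries: dict = field(default_factory=dict)      # query -> (tables, payload, cached_at)

    def record_write(self, table, when):
        # The DB side memorizes the modification time of each table.
        self.table_mtime[table] = when

    def store(self, query, tables, payload, when):
        # Remember which tables the query touched and when the result was cached.
        self.entries[query] = (tuple(tables), payload, when)

    def lookup(self, query):
        hit = self.entries.get(query)
        if hit is None:
            return None
        tables, payload, cached_at = hit
        # Invalidate if any table used by the query changed at or after caching time.
        if any(self.table_mtime.get(t, float("-inf")) >= cached_at for t in tables):
            del self.entries[query]
            return None
        return payload


cache = ModTimeCache()
cache.store("select_a", ["TABLE_A"], "rows from A", when=10.0)
cache.store("select_b", ["TABLE_B"], "rows from B", when=10.0)
cache.record_write("TABLE_A", when=20.0)
print(cache.lookup("select_a"))  # invalidated by the write to TABLE_A
print(cache.lookup("select_b"))  # still served from the cache
```

In this sketch a write anywhere in a table invalidates every cached query that touches that table, whether or not the rows it read actually changed. A per-query notification, as suggested for Oracle 11g, would allow finer-grained invalidation.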

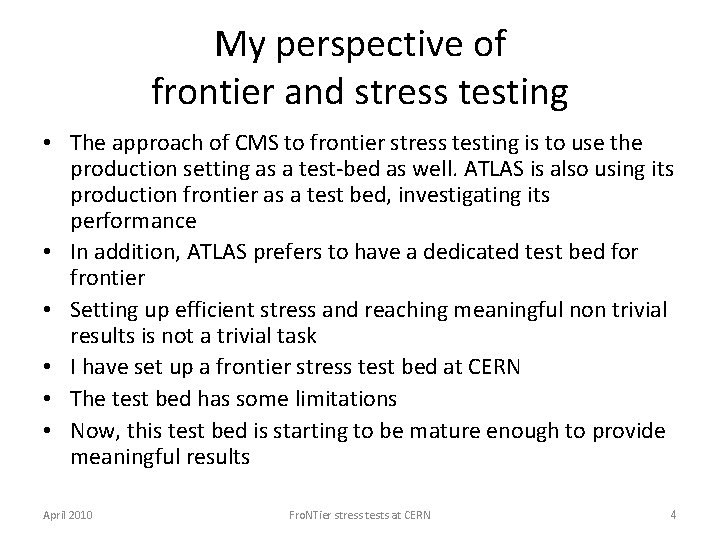

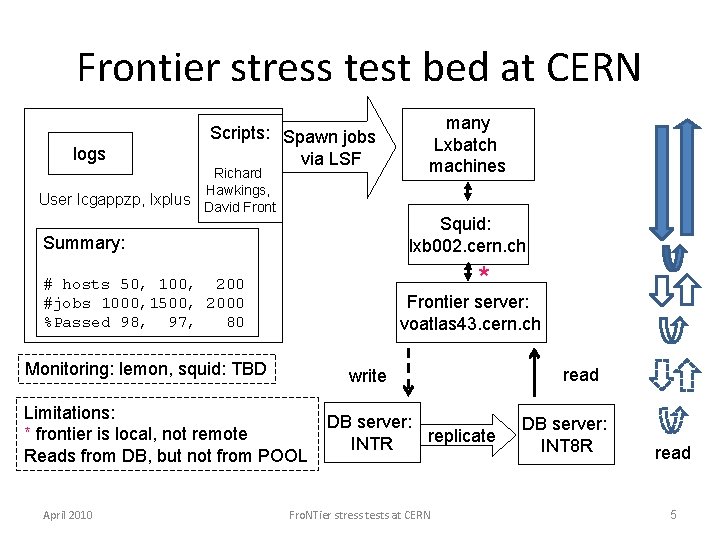

My perspective of frontier and stress testing

- The approach of CMS to frontier stress testing is to use the production setting as a test bed as well. ATLAS is also using its production frontier as a test bed, investigating its performance.
- In addition, ATLAS prefers to have a dedicated test bed for frontier.
- Setting up efficient stress tests and reaching meaningful, non-trivial results is not a trivial task.
- I have set up a frontier stress test bed at CERN.
- The test bed has some limitations.
- This test bed is now becoming mature enough to provide meaningful results.

Frontier stress test bed at CERN

- Scripts: spawn jobs via LSF (Richard Hawkings, David Front); user lcgappzp, lxplus
- Clients: many lxbatch machines
- Squid: lxb002.cern.ch
- Frontier server: voatlas43.cern.ch
- DB servers: INTR and INT8R (arrow labels in the diagram: write, replicate, read, logs)
- Monitoring: lemon; squid: TBD

Summary:

| # hosts | # jobs | % passed |
|---|---|---|
| 50 | 1000 | 98 |
| 100 | 1500 | 97 |
| 200 | 2000 | 80 |

Limitations:

- frontier is local, not remote
- Reads from DB, but not from POOL
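As an illustration of what "spawn jobs via LSF" might look like, the following Python sketch only builds the `bsub` command lines for a batch of client jobs. It is not the actual test-bed script: the queue name, the job script `./run_client.sh`, the job names, and the log directory are all assumptions. The `-q`, `-J`, and `-o` options are standard `bsub` options for the queue, the job name, and the output file.

```python
import shlex


def build_bsub_commands(n_jobs, job_script, queue="hypothetical_queue", log_dir="logs"):
    """Return one bsub argument list per client job (nothing is submitted here)."""
    commands = []
    for i in range(n_jobs):
        commands.append([
            "bsub",
            "-q", queue,                     # LSF queue (assumed name)
            "-J", f"frontier_stress_{i}",    # job name (assumed naming scheme)
            "-o", f"{log_dir}/job_{i}.log",  # per-job log file
            job_script, str(i),              # hypothetical client script and job index
        ])
    return commands


# Show the command lines for a small batch instead of submitting them.
for cmd in build_bsub_commands(3, "./run_client.sh"):
    print(shlex.join(cmd))
```

Building the command lists separately from submitting them makes it easy to inspect a batch of 1000 to 2000 jobs before handing each list to something like `subprocess.run`.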

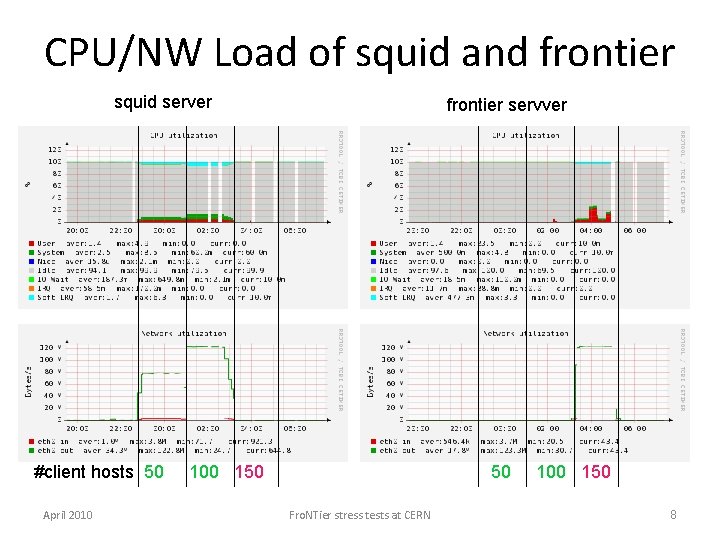

ATLAS frontier stress testing goals

- Previous: simulate multiple clients and multiple squid servers bombarding one central frontier server
- Current: simulate multiple clients bombarding one squid server (more restricted, due to Douglas Smith)
- Future (TBD):
  - Cache consistency: write to the DB while reading, and see how performance is affected by cached queries being invalidated
  - New SW versions: test the performance of each new SW version against that of the previous version
  - Possibility: measure the effect on performance of changing parameters, such as squid/frontier server delays

![Recent testing results (read only, no writing to DB)](https://slidetodoc.com/presentation_image_h2/79f111a02a3cbd70a5305b0b994666d6/image-7.jpg)

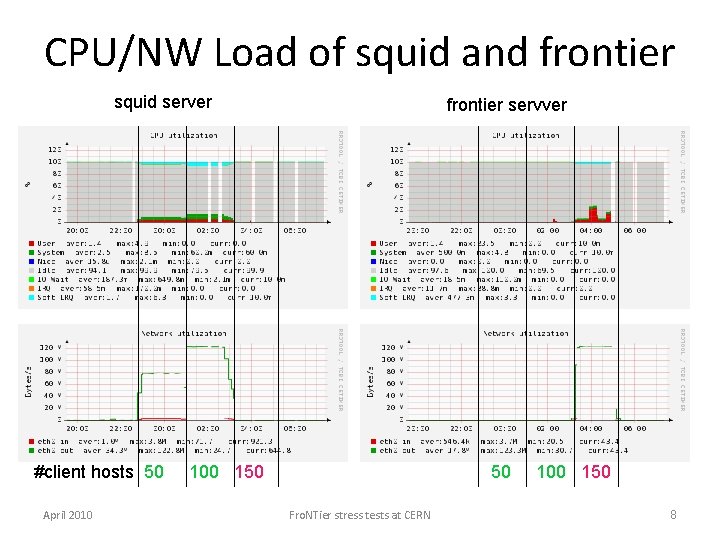

Recent testing results (read only, no writing to DB)

| #client hosts | Throughput [Hz] | Jobs passed [%] | #jobs read [k] | Load of squid server [%] | NW utilization squid server [MB/s] | Load on frontier server [%] | NW utilization frontier server [MB/s] |
|---|---|---|---|---|---|---|---|
| 50 | 2.54 | 99 | 18.2 | ~10 | 80 | ~0 | 0 |
| 100 | 3.99 | 99 | 28.7 | ~15 | 120 | ~0 | 0 |
| 150 | 4 | 99 | 28.8 | ~0 | 0 | ~8-25 | 120 |

Conclusions:

- A squid server handles up to ~100 concurrent clients reasonably well.
- 100 reading clients saturate the 1 Gb/s NW connection.
- Each client uses ~1.6 MB/s (12.8 Mb/s).
- (1000/12.8) ~80 clients are expected to saturate a 1 Gb/s NW connection.
- To be further investigated: 150 clients is too much. The local squid cannot handle this load and transfers the load to the frontier server.
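The bandwidth arithmetic behind these conclusions can be re-derived from the squid NW utilization figures for 50 and 100 client hosts. The short Python sketch below does so; the variable and function names are illustrative, and the link capacity of 1000 Mb/s is the value used in the slide's own (1000/12.8) estimate.

```python
# Squid server NW utilization in MB/s, keyed by number of client hosts (from the results).
squid_nw_mbyte_per_s = {50: 80.0, 100: 120.0}

LINK_CAPACITY_MBIT_PER_S = 1000.0  # the 1000 in the slide's (1000/12.8) estimate
BITS_PER_BYTE = 8


def per_client_mbyte_per_s(n_clients):
    """Average traffic per client in MB/s at a given client count."""
    return squid_nw_mbyte_per_s[n_clients] / n_clients


def clients_to_saturate(per_client_mbyte):
    """Number of such clients whose combined traffic equals the link capacity."""
    per_client_mbit = per_client_mbyte * BITS_PER_BYTE
    return LINK_CAPACITY_MBIT_PER_S / per_client_mbit


rate_50 = per_client_mbyte_per_s(50)
print(f"per client at 50 hosts: {rate_50:.1f} MB/s = {rate_50 * BITS_PER_BYTE:.1f} Mb/s")
print(f"clients needed to saturate the link: {clients_to_saturate(rate_50):.0f}")
print(f"squid traffic at 100 hosts: {squid_nw_mbyte_per_s[100] * BITS_PER_BYTE:.0f} Mb/s")
```

With 80 MB/s shared by 50 hosts, each client averages 1.6 MB/s, and 1000/12.8 is about 78, which the slide rounds to ~80. The 120 MB/s measured at 100 hosts corresponds to 960 Mb/s, close to the link capacity.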

CPU/NW load of squid and frontier

[Plots: one panel for the squid server and one for the frontier server; x-axis: #client hosts (50, 100, 150)]