
Fall 2020 CSC 594 Topics in AI: Advanced Deep Learning
8. Capsule (Overview)
Noriko Tomuro

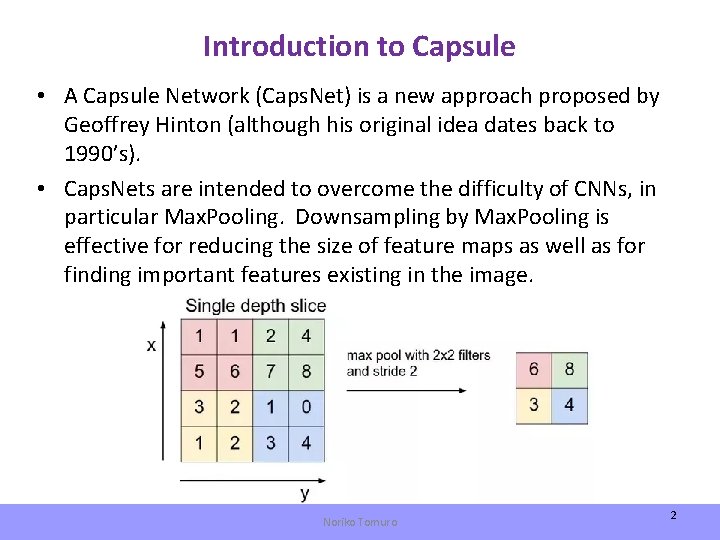

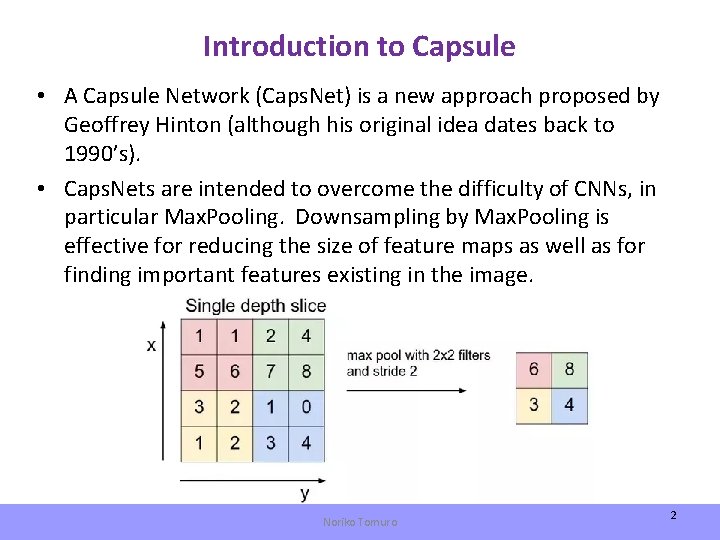

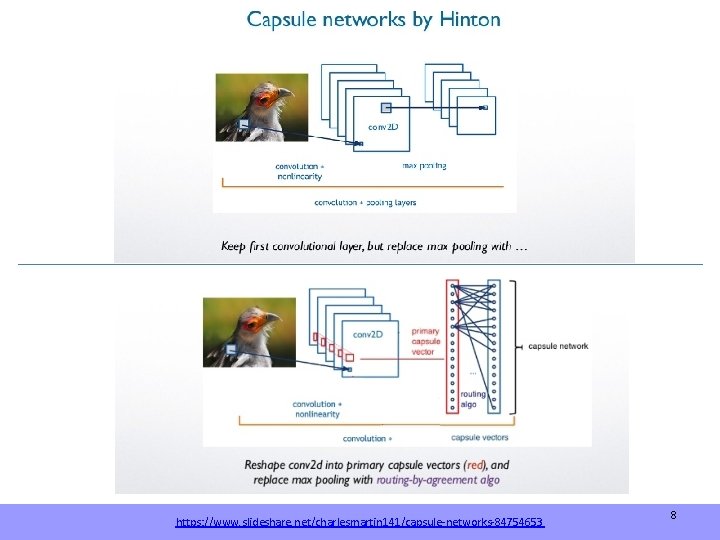

Introduction to Capsule Networks
• A Capsule Network (CapsNet) is a new approach proposed by Geoffrey Hinton (although his original idea dates back to the 1990s).
• CapsNets are intended to overcome a weakness of CNNs, in particular of max pooling. Downsampling by max pooling is effective both for reducing the size of feature maps and for finding the important features present in the image.

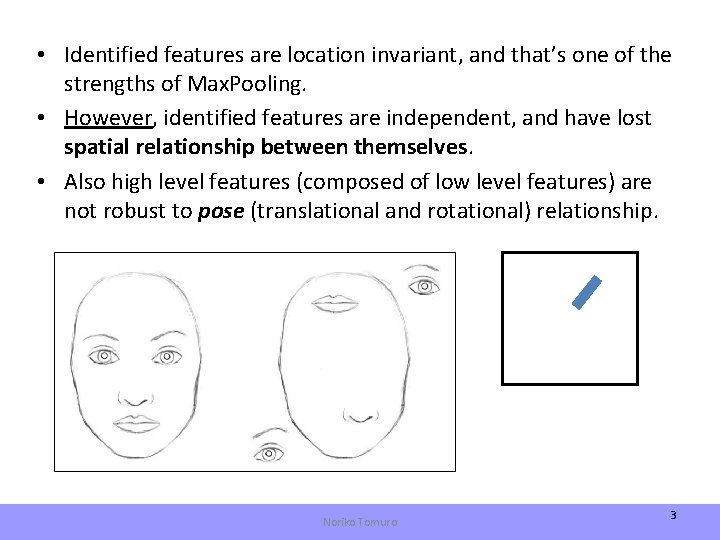

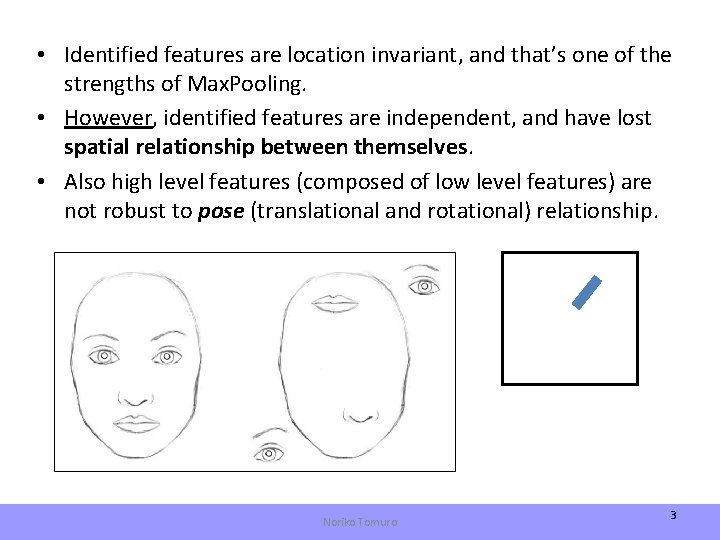

• Features identified by max pooling are location invariant, and that is one of the strengths of max pooling.
• However, the identified features are treated independently, and the spatial relationships among them are lost.
• Also, high-level features (composed of low-level features) are not robust to changes in pose (translation and rotation), because the pose relationships between their parts are not represented.
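A minimal sketch of both points above, assuming toy one-hot feature maps and 2 x 2 max pooling with stride 2 (all function and variable names are hypothetical): moving a feature inside one pooling window does not change the pooled output, but for the same reason two different arrangements of an "eye" feature and a "mouth" feature become indistinguishable after pooling.

```python
def max_pool_2x2(fmap):
    """2x2 max pooling with stride 2 over a square feature map of even size."""
    n = len(fmap)
    return [[max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
             for c in range(0, n, 2)]
            for r in range(0, n, 2)]


def one_hot_map(n, row, col):
    """An n x n feature map with a single activation of 1.0 at (row, col)."""
    return [[1.0 if (r, c) == (row, col) else 0.0 for c in range(n)] for r in range(n)]


# Location invariance: moving a feature within one pooling window changes nothing.
print(max_pool_2x2(one_hot_map(4, 0, 0)) == max_pool_2x2(one_hot_map(4, 1, 1)))  # True

# Lost spatial relationship: in arrangement A the "mouth" is below-right of the "eye",
# in arrangement B it is above-right, yet the pooled eye and mouth maps are identical.
eye_a, mouth_a = one_hot_map(4, 0, 0), one_hot_map(4, 1, 1)
eye_b, mouth_b = one_hot_map(4, 1, 0), one_hot_map(4, 0, 1)
pooled_a = (max_pool_2x2(eye_a), max_pool_2x2(mouth_a))
pooled_b = (max_pool_2x2(eye_b), max_pool_2x2(mouth_b))
print(pooled_a == pooled_b)  # True
```

Real CNNs pool over many channels and layers, but the same loss of relative position happens inside every pooling window.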

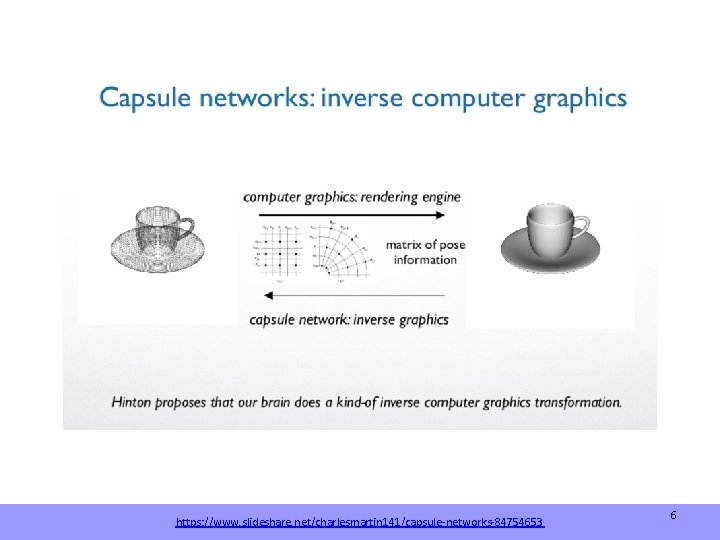

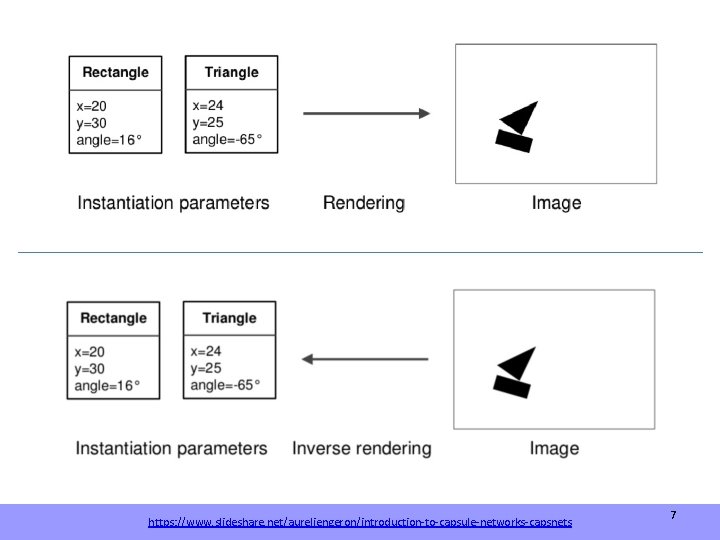

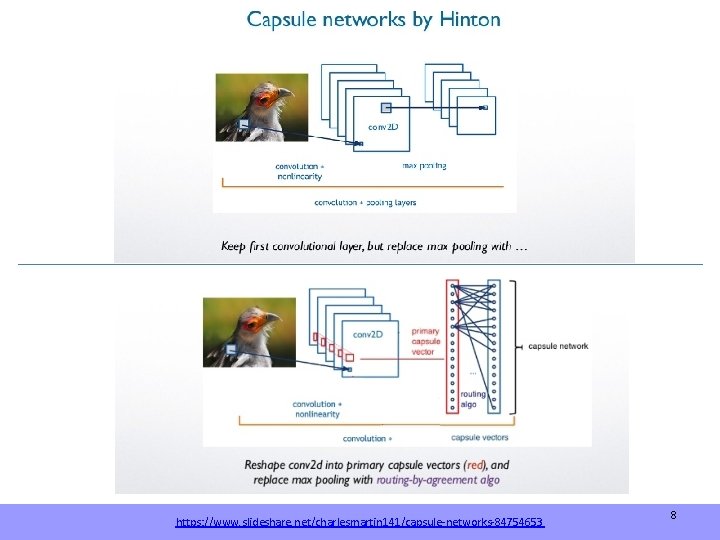

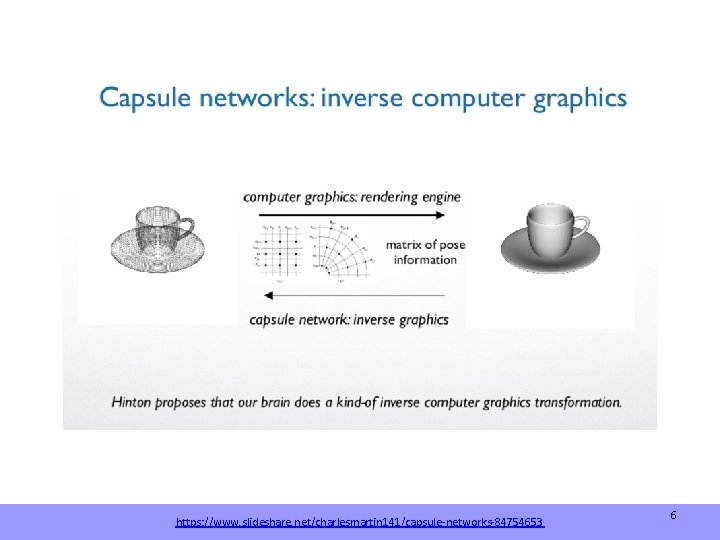

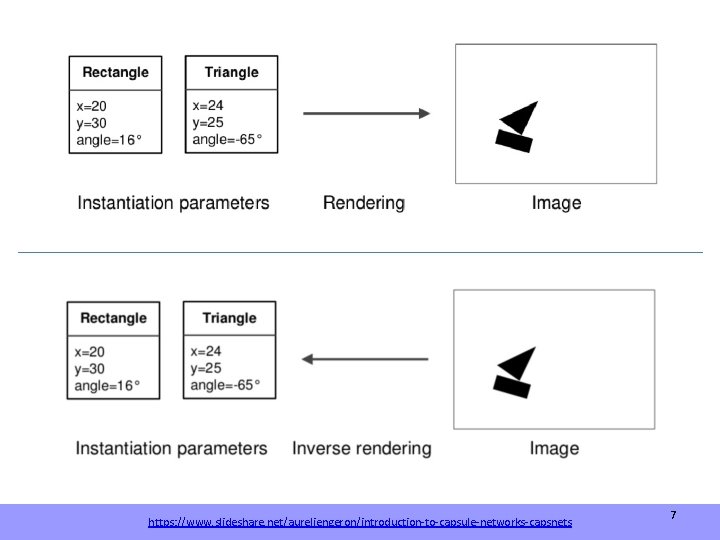

• Hinton himself stated that max pooling is a big mistake and that the fact that it works so well is a disaster: “The pooling operation used in convolutional neural networks is a big mistake and the fact that it works so well is a disaster.”
Solution: Capsule Networks
• Hinton took inspiration from a field that had already solved this problem: 3D computer graphics.
• In 3D graphics, a pose matrix is used to represent the spatial relationships between objects. Poses are essentially matrices representing translation plus rotation.
• Capsule networks are also meant to more closely mimic the human visual system, which creates a tree-like hierarchical structure for each focal point to recognize objects.
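To make the pose idea concrete, here is a small 2D analogue (an assumption for illustration; the slide talks about 3D graphics): a pose is a 3 x 3 homogeneous matrix combining a rotation and a translation, and the pose of a part in the image is the pose of the whole composed with the pose of the part relative to the whole. The face and nose values are hypothetical.

```python
import math


def pose(theta, tx, ty):
    """3x3 homogeneous 2D pose: rotation by theta (radians), then translation (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, tx],
            [s, c, ty],
            [0.0, 0.0, 1.0]]


def matmul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


# Hypothetical whole and part: a face placed in the image, and a nose whose
# pose is expressed relative to the face's own coordinate frame.
face_in_image = pose(math.pi / 2, 10.0, 5.0)   # face rotated 90 degrees, centred at (10, 5)
nose_in_face = pose(0.0, 0.0, 2.0)             # nose 2 units along the face's own y axis

nose_in_image = matmul(face_in_image, nose_in_face)
print([round(nose_in_image[0][2], 6), round(nose_in_image[1][2], 6)])  # [8.0, 5.0]
```

If the viewpoint changes, only `face_in_image` changes; `nose_in_face` stays the same. That viewpoint-independent part-whole relationship is what capsules aim to capture.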

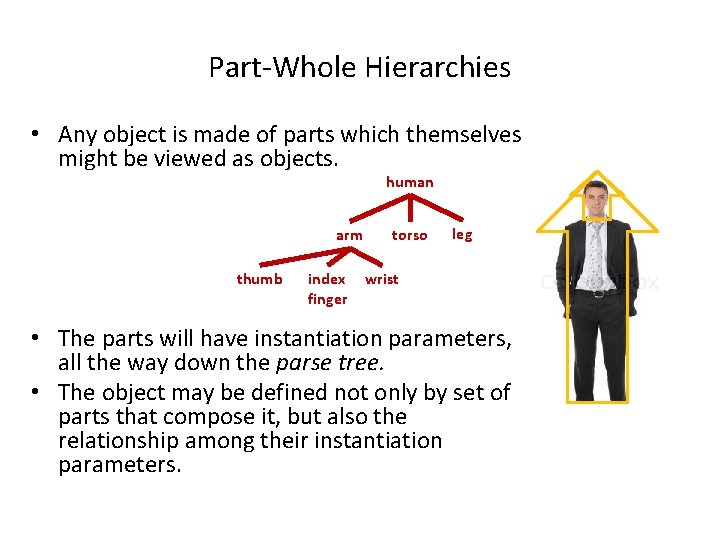

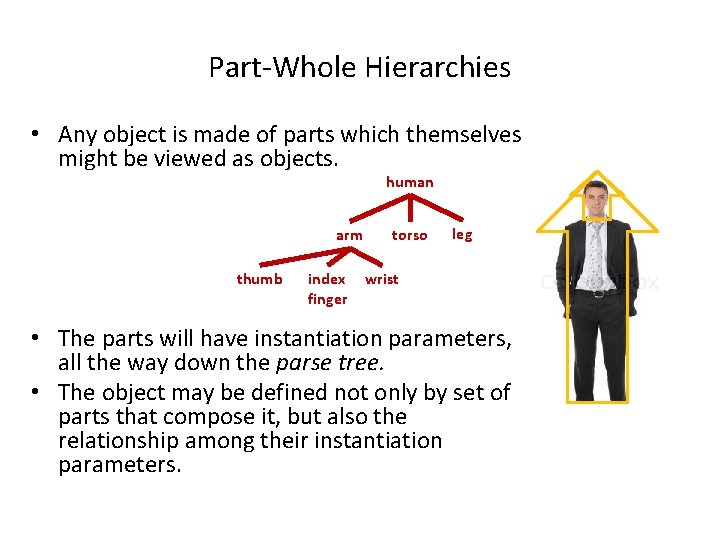

Part-Whole Hierarchies
• Any object is made of parts, which themselves might be viewed as objects.
[Figure: example part-whole tree with nodes human, torso, arm, leg, wrist, thumb, index finger]
• The parts have instantiation parameters, all the way down the parse tree.
• An object may be defined not only by the set of parts that compose it, but also by the relationships among their instantiation parameters.
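A hypothetical illustration of the last bullet, simplified to 2D positions only (no rotation): each part votes for where the whole face should be, using a fixed part-to-whole offset. With the parts in the right arrangement the votes agree; with the same set of parts scrambled they do not. The offsets and coordinates are made up, and image coordinates are assumed (y grows downward).

```python
import math

# Hypothetical part-to-whole offsets: where the face centre lies relative to each part.
PART_TO_WHOLE = {"left_eye": (1.0, 1.0), "right_eye": (-1.0, 1.0), "mouth": (0.0, -1.5)}


def votes_for_whole(parts):
    """Each detected part predicts the position of the whole face."""
    return [(x + PART_TO_WHOLE[name][0], y + PART_TO_WHOLE[name][1])
            for name, (x, y) in parts.items()]


def disagreement(votes):
    """Largest distance from any vote to the mean vote (0.0 means perfect agreement)."""
    mx = sum(x for x, _ in votes) / len(votes)
    my = sum(y for _, y in votes) / len(votes)
    return max(math.hypot(x - mx, y - my) for x, y in votes)


face = {"left_eye": (4.0, 6.0), "right_eye": (6.0, 6.0), "mouth": (5.0, 8.5)}
scrambled = {"left_eye": (5.0, 8.5), "right_eye": (6.0, 6.0), "mouth": (4.0, 6.0)}

print(disagreement(votes_for_whole(face)))       # 0.0: all parts agree on (5.0, 7.0)
print(disagreement(votes_for_whole(scrambled)))  # > 0: same parts, wrong relationships
```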

https://www.slideshare.net/charlesmartin141/capsule-networks-84754653

https://www.slideshare.net/aureliengeron/introduction-to-capsule-networks-capsnets

https://www.slideshare.net/charlesmartin141/capsule-networks-84754653

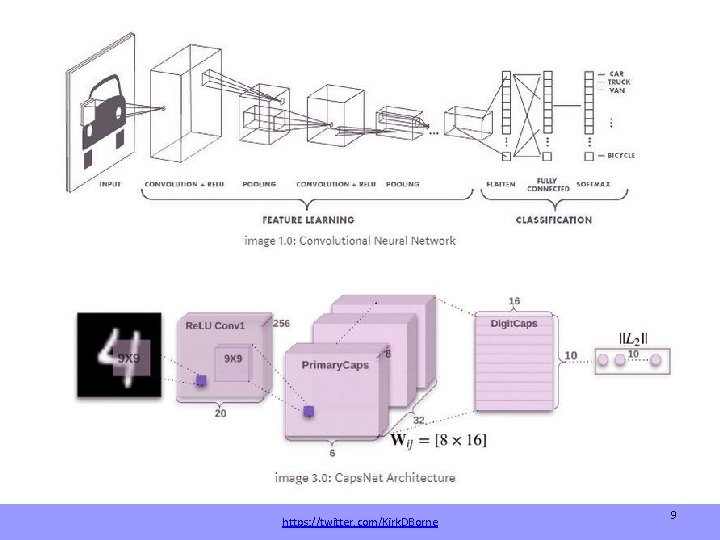

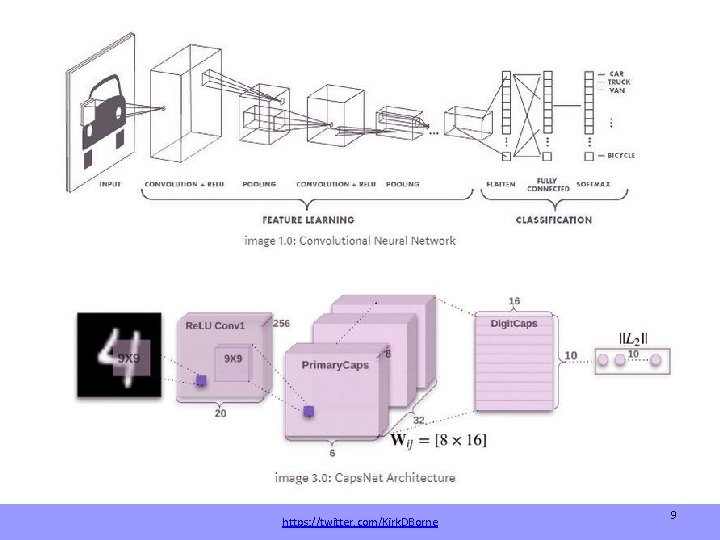

https://twitter.com/KirkDBorne

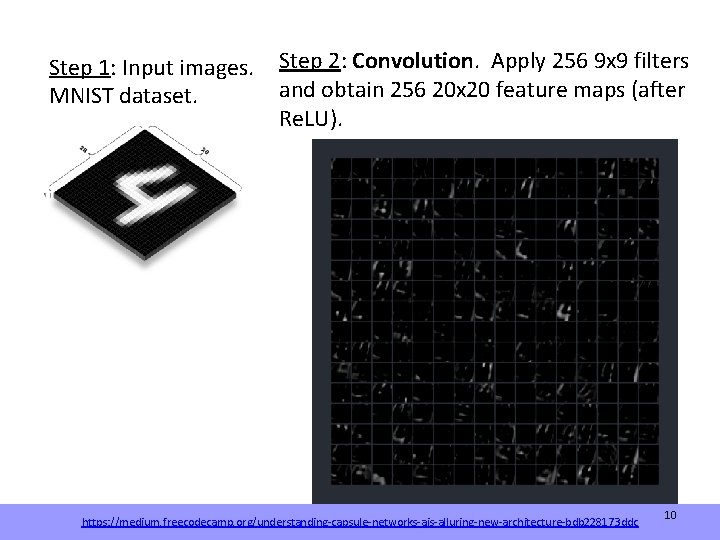

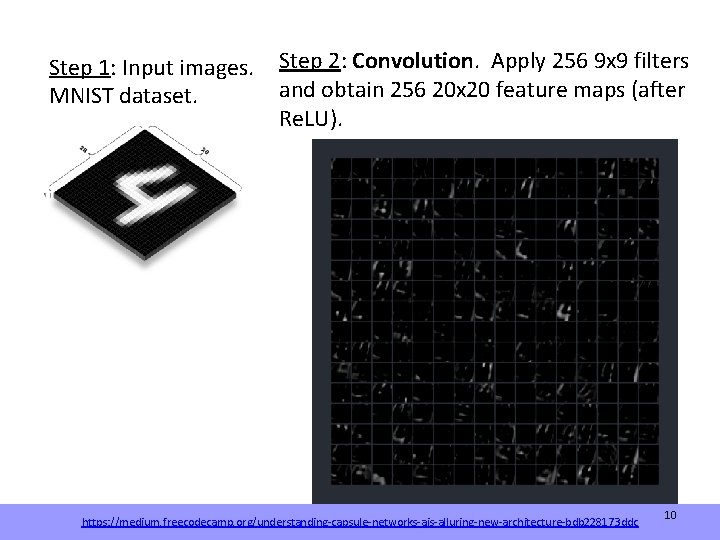

Step 1: Input images. MNIST dataset (28 x 28 grayscale images).
Step 2: Convolution. Apply 256 9 x 9 filters (stride 1) and obtain 256 20 x 20 feature maps (after ReLU), since 28 - 9 + 1 = 20.
https://medium.freecodecamp.org/understanding-capsule-networks-ais-alluring-new-architecture-bdb228173ddc
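The 20 x 20 size follows from the usual output-size formula for a convolution, floor((W + 2P - K) / S) + 1. A small sketch of that arithmetic for Conv1, assuming 28 x 28 single-channel input, no padding, and one bias per filter (the helper name is hypothetical):

```python
def conv_output_size(in_size, kernel, stride=1, padding=0):
    """Spatial size of a convolution output (square input and kernel, no dilation)."""
    return (in_size + 2 * padding - kernel) // stride + 1


# Conv1 as described above: 28 x 28 x 1 input, 256 filters of size 9 x 9, stride 1.
conv1_size = conv_output_size(28, kernel=9)   # 28 - 9 + 1 = 20
conv1_params = 9 * 9 * 1 * 256 + 256          # 9x9x1 weights per filter, plus 256 biases
print(conv1_size, conv1_params)               # 20 20992
```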

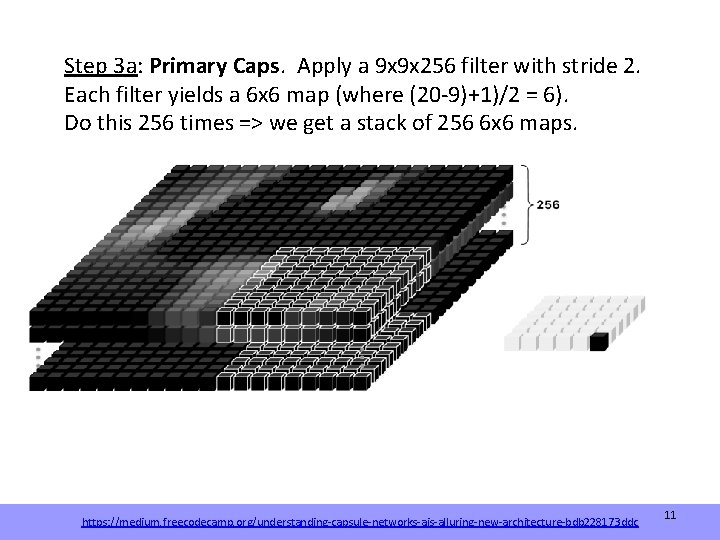

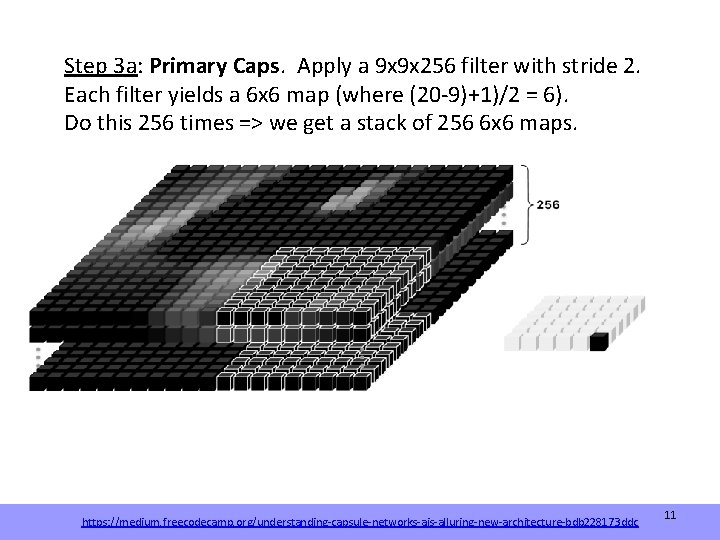

Step 3a: Primary Caps. Apply a 9 x 9 x 256 filter with stride 2. Each filter yields a 6 x 6 map, since floor((20 - 9) / 2) + 1 = 6. Do this 256 times, and we get a stack of 256 6 x 6 maps.
https://medium.freecodecamp.org/understanding-capsule-networks-ais-alluring-new-architecture-bdb228173ddc
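The same output-size formula gives the 6 x 6 Primary Caps maps. A quick check, assuming no padding (the helper is hypothetical and repeated here so the snippet stands alone); the last line shows why the floor form matters: with a 21 x 21 input, ((21 - 9) + 1) / 2 would give 6.5, while the actual output is 7 x 7.

```python
def conv_output_size(in_size, kernel, stride=1, padding=0):
    """Spatial size of a convolution output (square input and kernel, no dilation)."""
    return (in_size + 2 * padding - kernel) // stride + 1


primary_size = conv_output_size(20, kernel=9, stride=2)   # (20 - 9) // 2 + 1 = 6
primary_values = primary_size * primary_size * 256        # 6 * 6 * 256 output values
print(primary_size, primary_values)                       # 6 9216

print(conv_output_size(21, kernel=9, stride=2))           # 7
```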

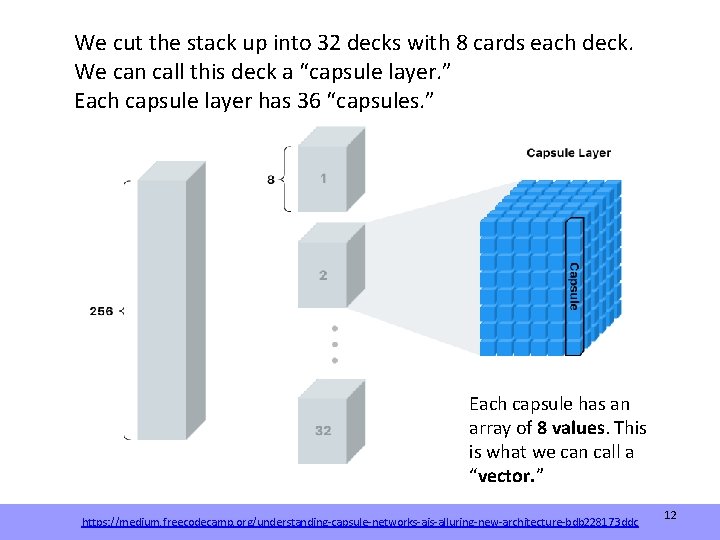

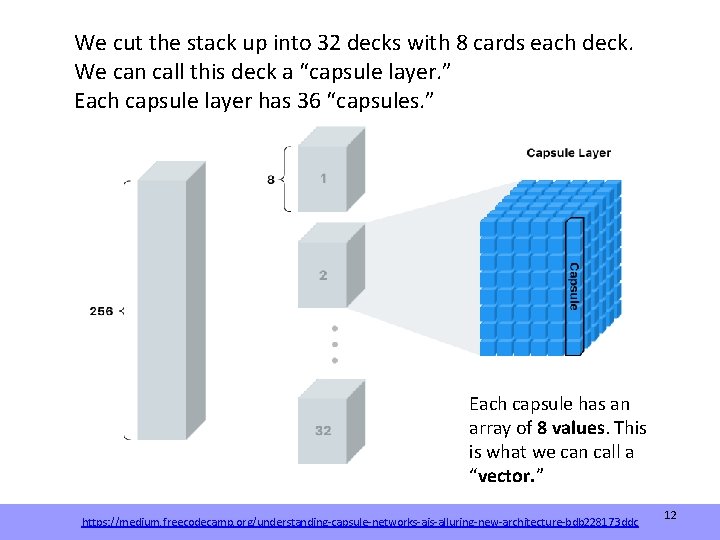

We cut the stack up into 32 decks with 8 cards in each deck. We can call each deck a “capsule layer.” Each capsule layer has 6 x 6 = 36 “capsules,” so there are 32 x 36 = 1,152 capsules in total. Each capsule is an array of 8 values, which is what we can call a “vector.”
https://medium.freecodecamp.org/understanding-capsule-networks-ais-alluring-new-architecture-bdb228173ddc
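A minimal sketch of the "decks" regrouping: the 256 maps of size 6 x 6 become 32 capsule types, each holding a 6 x 6 grid of 8-value vectors. Which 8 channels end up in the same capsule depends on how an actual implementation reshapes its tensors; here we assume consecutive channels 8t to 8t + 7 form capsule type t, matching the deck analogy. The names are hypothetical.

```python
NUM_TYPES, CAPS_DIM, GRID = 32, 8, 6   # 32 capsule types x 8 dimensions = 256 channels


def to_capsules(maps):
    """Regroup maps[channel][row][col] (256 x 6 x 6) into
    capsules[type][row][col], each an 8-value vector (32 x 6 x 6 x 8)."""
    return [[[[maps[t * CAPS_DIM + k][r][c] for k in range(CAPS_DIM)]
              for c in range(GRID)]
             for r in range(GRID)]
            for t in range(NUM_TYPES)]


# Dummy input: every value in channel ch is just ch, so we can see which channels land where.
maps = [[[float(ch)] * GRID for _ in range(GRID)] for ch in range(NUM_TYPES * CAPS_DIM)]
caps = to_capsules(maps)
print(caps[1][0][0])                           # [8.0, 9.0, 10.0, 11.0, 12.0, 13.0, 14.0, 15.0]
print(sum(len(t) * len(t[0]) for t in caps))   # 1152 capsules
```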

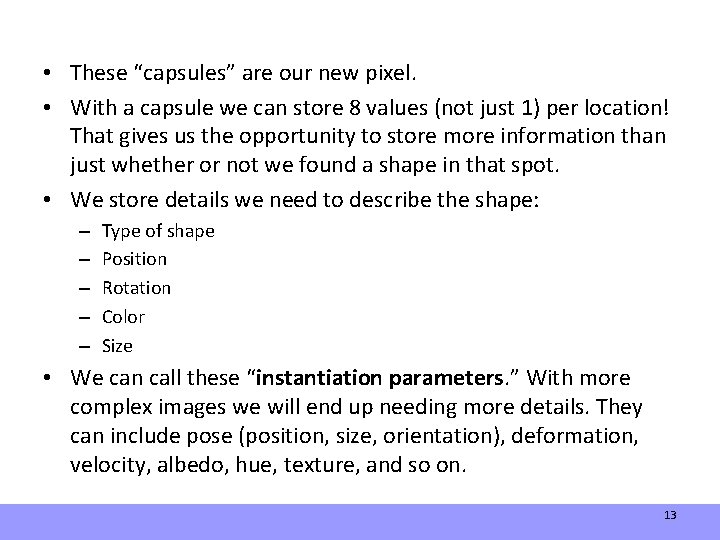

• These “capsules” are our new pixels.
• With a capsule we can store 8 values (not just 1) per location! That gives us the opportunity to store more information than just whether or not we found a shape in that spot.
• We store the details we need to describe the shape:
– Type of shape
– Position
– Rotation
– Color
– Size
• We can call these “instantiation parameters.” With more complex images we will end up needing more details. They can include pose (position, size, orientation), deformation, velocity, albedo, hue, texture, and so on.


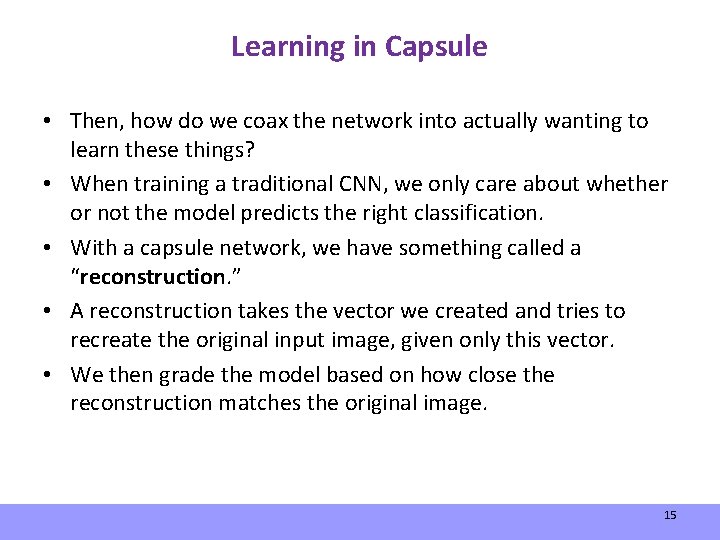

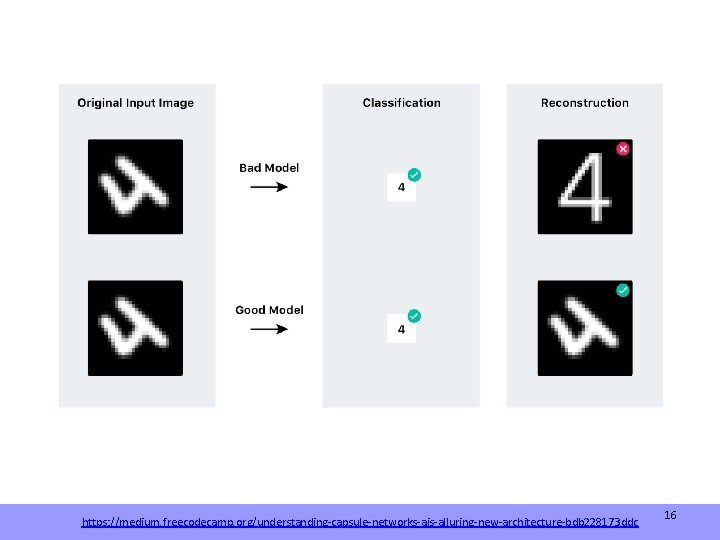

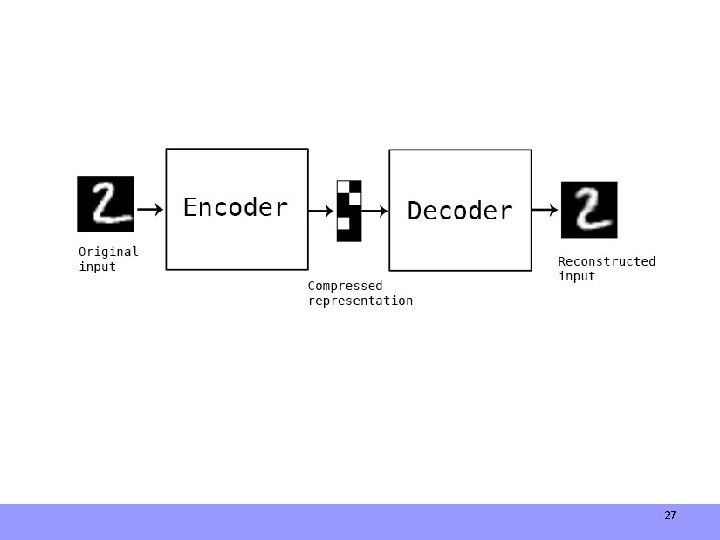

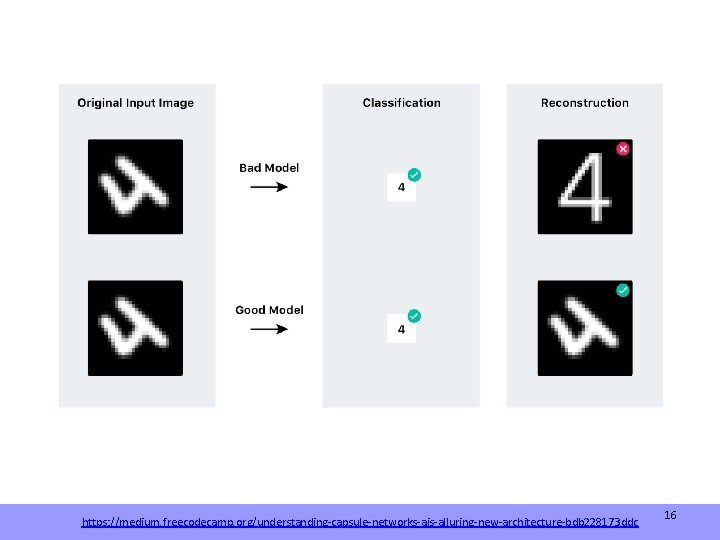

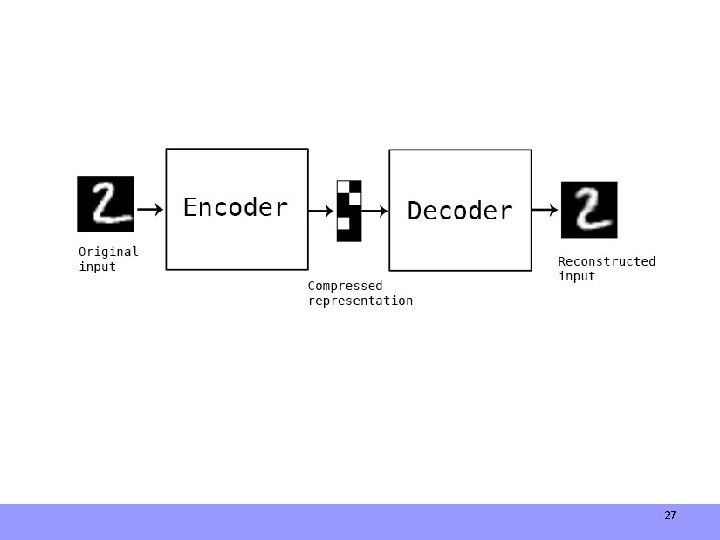

Learning in Capsule Networks
• How, then, do we coax the network into actually learning these things?
• When training a traditional CNN, we only care about whether or not the model predicts the right class.
• With a capsule network, we also have something called a “reconstruction.”
• A reconstruction takes the vector we created and tries to recreate the original input image, given only this vector.
• We then grade the model on how closely the reconstruction matches the original image.

https://medium.freecodecamp.org/understanding-capsule-networks-ais-alluring-new-architecture-bdb228173ddc

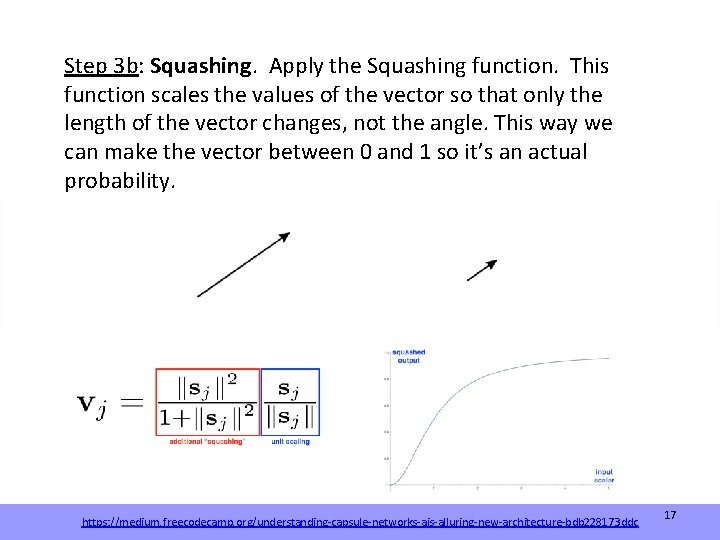

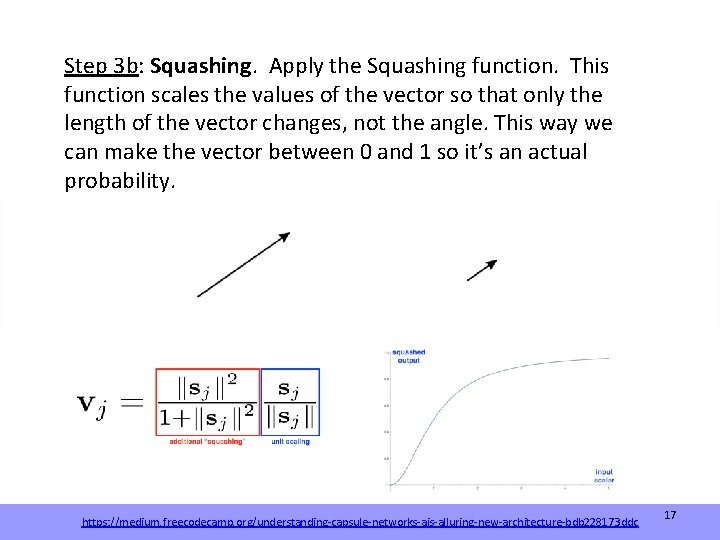

Step 3b: Squashing. Apply the squashing function. This function rescales the vector so that only its length changes, not its direction (angle). This way the length of the vector always lies between 0 and 1, so it can be interpreted as a probability.
https://medium.freecodecamp.org/understanding-capsule-networks-ais-alluring-new-architecture-bdb228173ddc
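A sketch of the squashing non-linearity in the form given by Sabour, Frosst and Hinton (2017), v = (|s|^2 / (1 + |s|^2)) * (s / |s|): long vectors get a length close to 1, short vectors a length close to 0, and the direction is unchanged. Plain Python lists stand in for tensors here.

```python
import math


def norm(v):
    """Euclidean length of a vector."""
    return math.sqrt(sum(x * x for x in v))


def squash(s):
    """Squash s to v = (|s|^2 / (1 + |s|^2)) * s / |s|.

    The direction of s is kept; its length is mapped into [0, 1)."""
    sq_norm = sum(x * x for x in s)
    if sq_norm == 0.0:
        return [0.0] * len(s)          # zero vector stays zero (avoids dividing by 0)
    scale = sq_norm / (1.0 + sq_norm) / math.sqrt(sq_norm)
    return [scale * x for x in s]


long_v = squash([3.0, 4.0])    # |s| = 5   -> |v| = 25/26, about 0.96
short_v = squash([0.1, 0.0])   # |s| = 0.1 -> |v| = 0.01/1.01, about 0.0099
print(round(norm(long_v), 4), round(norm(short_v), 4))   # 0.9615 0.0099
print(long_v[0] / long_v[1])   # about 0.75: same direction as [3, 4]
```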

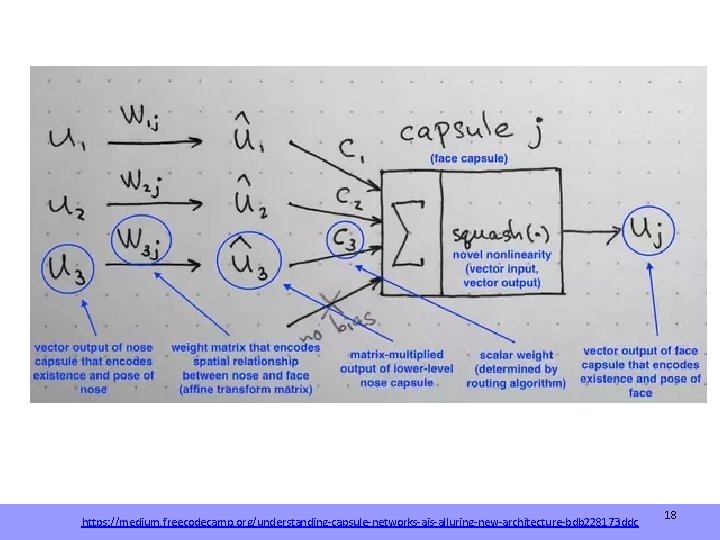

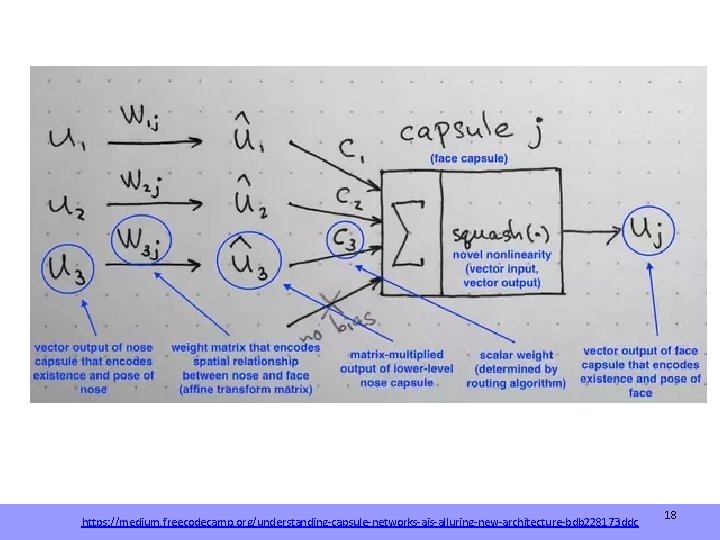

https://medium.freecodecamp.org/understanding-capsule-networks-ais-alluring-new-architecture-bdb228173ddc

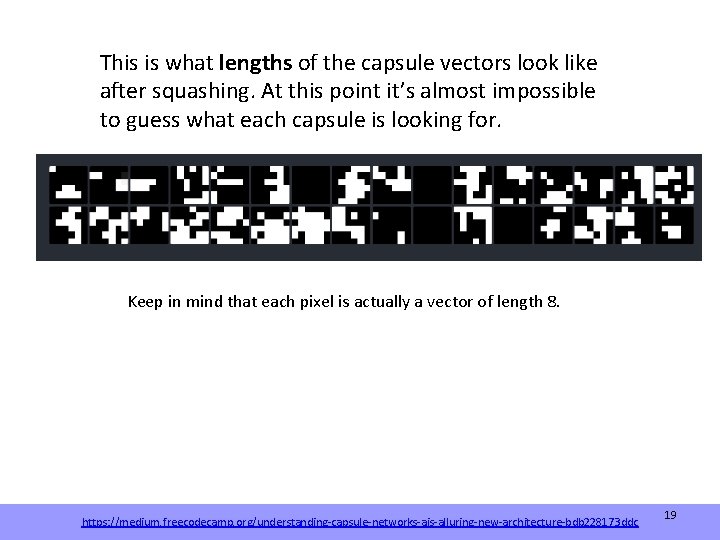

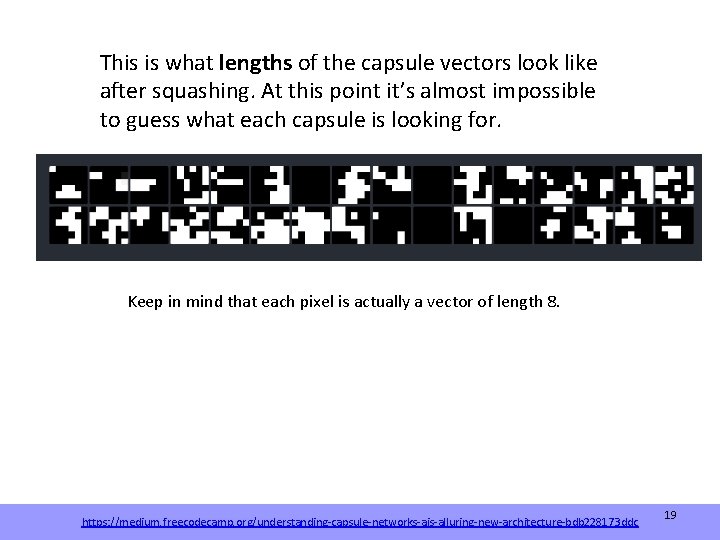

This is what the lengths of the capsule vectors look like after squashing. At this point it is almost impossible to guess what each capsule is looking for. Keep in mind that each pixel is actually an 8-dimensional vector.
https://medium.freecodecamp.org/understanding-capsule-networks-ais-alluring-new-architecture-bdb228173ddc

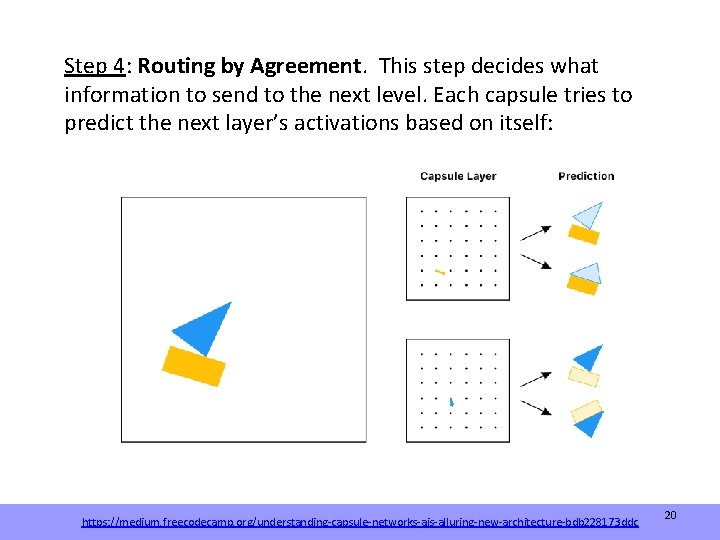

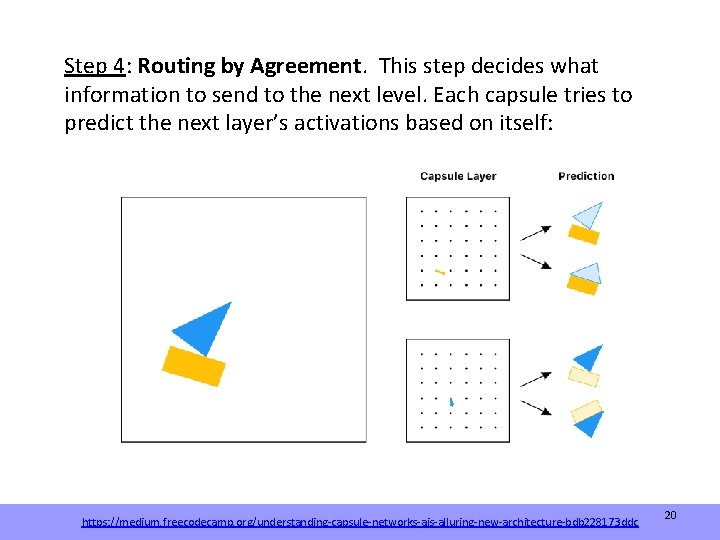

Step 4: Routing by Agreement. This step decides what information to send to the next level. Each capsule tries to predict the next layer’s activations based on its own output.
https://medium.freecodecamp.org/understanding-capsule-networks-ais-alluring-new-architecture-bdb228173ddc
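In the routing-by-agreement scheme of Sabour et al. (2017), each lower-level capsule i makes a prediction for each higher-level capsule j by multiplying its output u_i by a transformation matrix W_ij: û_j|i = W_ij u_i. A minimal sketch for one (i, j) pair, with random numbers standing in for the learned weights (an assumption; in a trained network W_ij is learned by backpropagation, with a separate matrix for every pair of capsules).

```python
import random

IN_DIM, OUT_DIM = 8, 16   # 8-D primary capsules, 16-D digit capsules


def matvec(W, u):
    """Multiply matrix W (a list of rows) by vector u."""
    return [sum(w * x for w, x in zip(row, u)) for row in W]


random.seed(0)
# Hypothetical stand-in for the learned 16 x 8 transformation matrix W_ij
# between primary capsule i and digit capsule j.
W_ij = [[random.gauss(0.0, 0.1) for _ in range(IN_DIM)] for _ in range(OUT_DIM)]
u_i = [0.1 * k for k in range(IN_DIM)]   # hypothetical 8-D output of primary capsule i

u_hat_j_given_i = matvec(W_ij, u_i)      # capsule i's prediction ("vote") for capsule j
print(len(u_hat_j_given_i))              # 16
```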

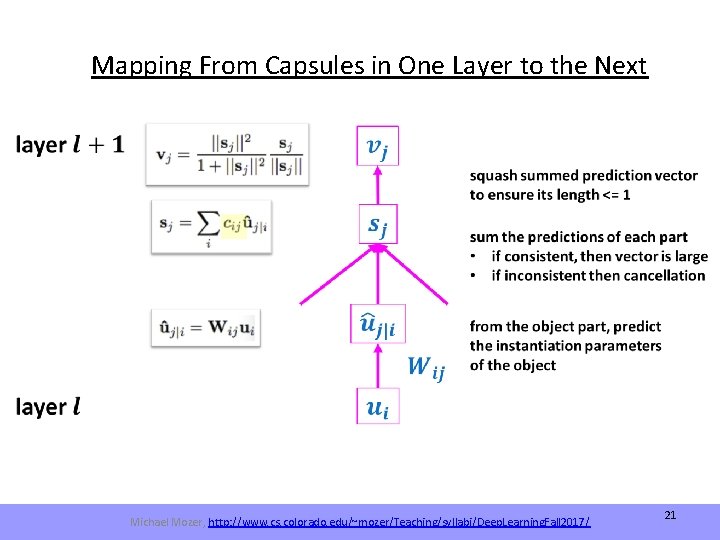

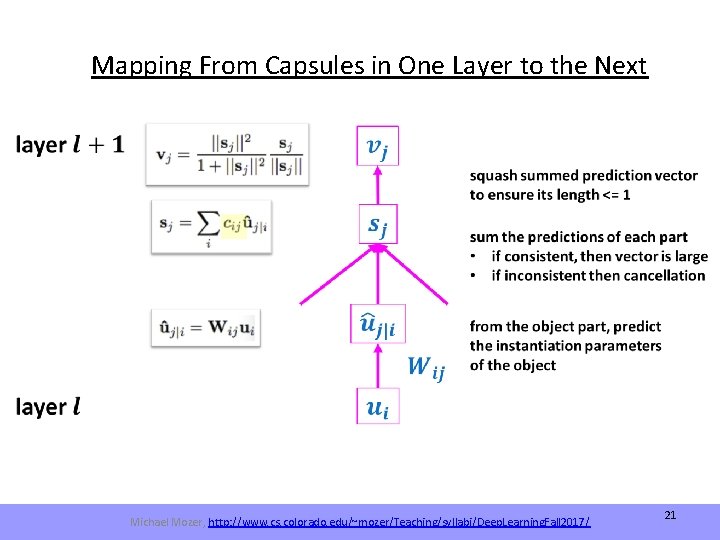

Mapping From Capsules in One Layer to the Next
Michael Mozer, http://www.cs.colorado.edu/~mozer/Teaching/syllabi/DeepLearningFall2017/

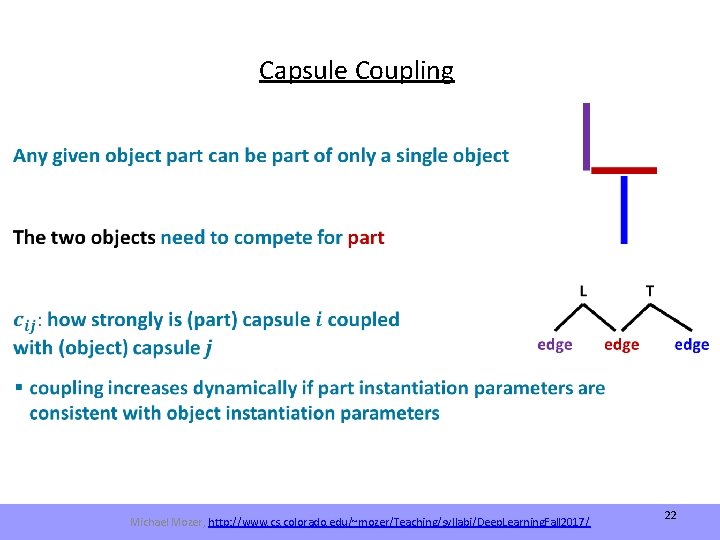

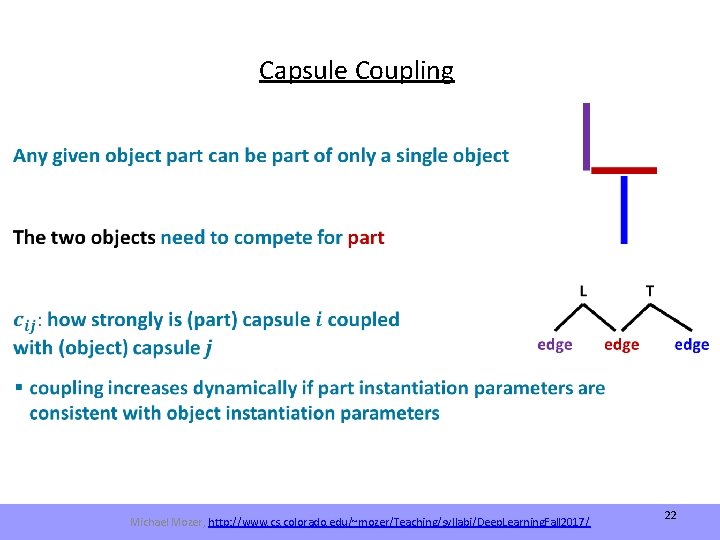

Capsule Coupling
Michael Mozer, http://www.cs.colorado.edu/~mozer/Teaching/syllabi/DeepLearningFall2017/

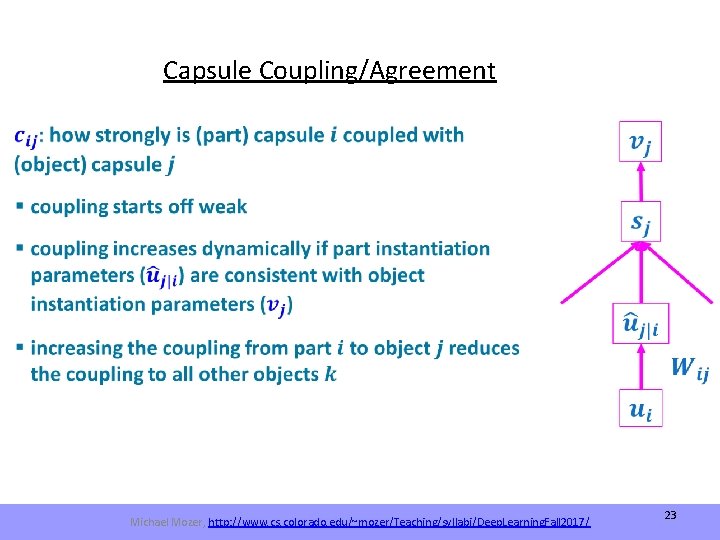

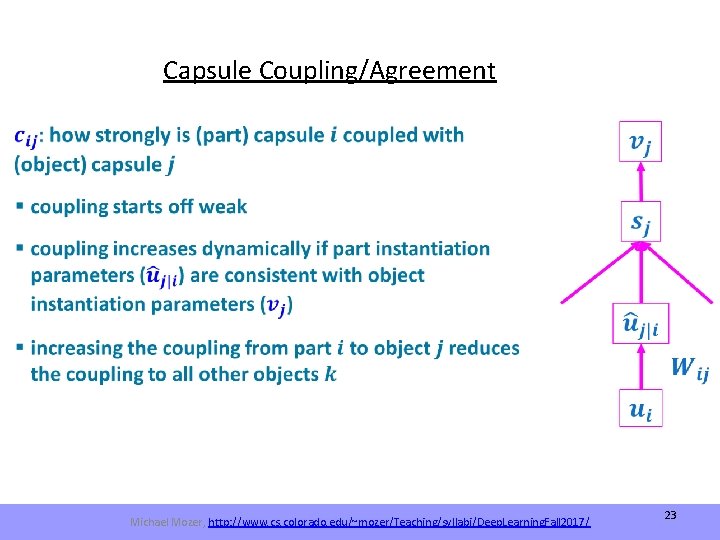

Capsule Coupling/Agreement
Michael Mozer, http://www.cs.colorado.edu/~mozer/Teaching/syllabi/DeepLearningFall2017/

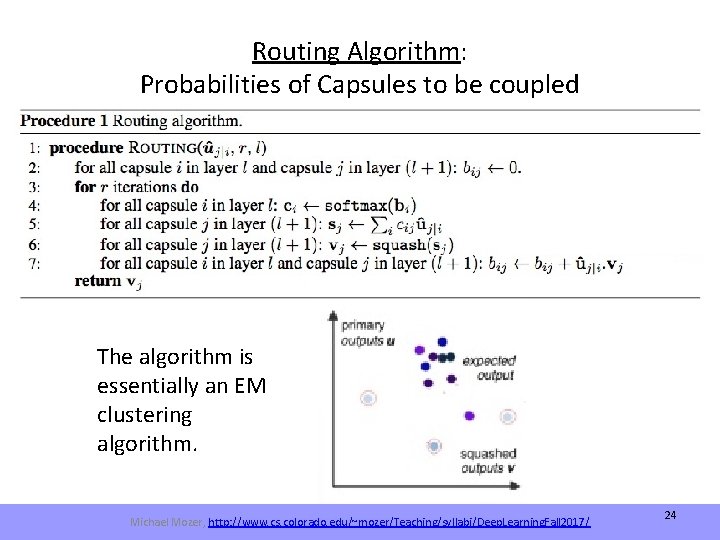

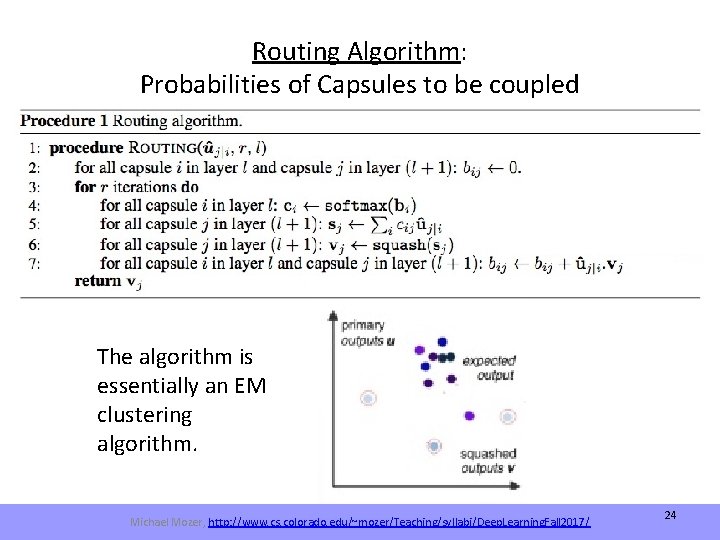

Routing Algorithm: Probabilities of Capsules Being Coupled
The algorithm is essentially an EM-like clustering procedure.
Michael Mozer, http://www.cs.colorado.edu/~mozer/Teaching/syllabi/DeepLearningFall2017/
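The routing slides can be summarized by the dynamic routing procedure of Sabour et al. (2017): the coupling coefficients c_ij are a softmax over routing logits b_ij, each output capsule squashes the coupling-weighted sum of the predictions it receives, and b_ij grows by the agreement (dot product) between a prediction and the resulting output. The sketch below follows that procedure in plain Python; the toy prediction vectors are hypothetical.

```python
import math


def squash(s):
    """v = (|s|^2 / (1 + |s|^2)) * s / |s|; keeps direction, length in [0, 1)."""
    sq = sum(x * x for x in s)
    if sq == 0.0:
        return [0.0] * len(s)
    scale = sq / (1.0 + sq) / math.sqrt(sq)
    return [scale * x for x in s]


def softmax(xs):
    m = max(xs)                                    # subtract max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route(u_hat, iterations=3):
    """Dynamic routing by agreement.

    u_hat[i][j] is input capsule i's prediction vector for output capsule j.
    Returns the output vectors v[j] and the final coupling coefficients c[i][j]."""
    n_in, n_out, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    b = [[0.0] * n_out for _ in range(n_in)]       # routing logits start at zero
    for _ in range(iterations):
        c = [softmax(row) for row in b]            # each input's couplings sum to 1
        s = [[sum(c[i][j] * u_hat[i][j][d] for i in range(n_in)) for d in range(dim)]
             for j in range(n_out)]                # coupling-weighted sum of predictions
        v = [squash(s_j) for s_j in s]
        for i in range(n_in):
            for j in range(n_out):
                # Agreement: dot product of prediction and current output.
                b[i][j] += sum(p * q for p, q in zip(u_hat[i][j], v[j]))
    return v, c


# Toy example: 3 input capsules, 2 output capsules, 2-D vectors (hypothetical values).
# All inputs agree about output 0; their predictions for output 1 cancel out.
u_hat = [
    [[2.0, 0.0], [0.0, 2.0]],
    [[2.0, 0.0], [0.0, -2.0]],
    [[2.0, 0.0], [0.1, 0.0]],
]
v, c = route(u_hat)
print([round(math.hypot(*v_j), 3) for v_j in v])  # output 0 long, output 1 near zero
print([round(row[0], 3) for row in c])            # couplings to output 0 close to 1
```

The "clustering" view is visible here: each input capsule's coupling is gradually assigned to the output whose vector agrees with its prediction.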

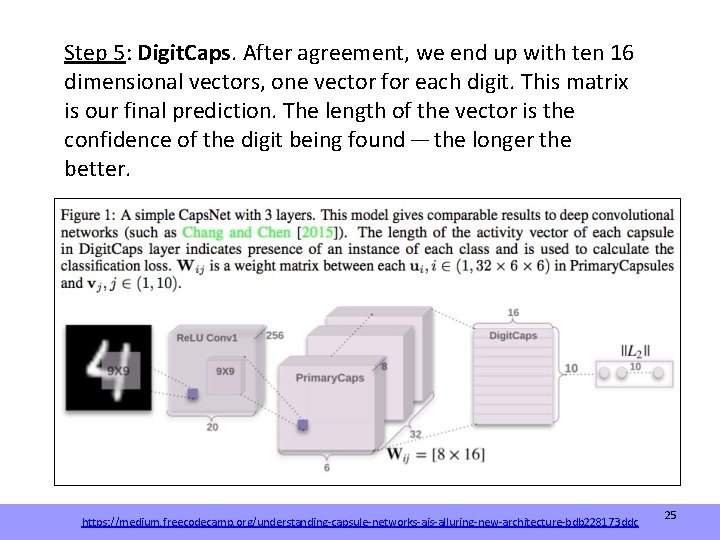

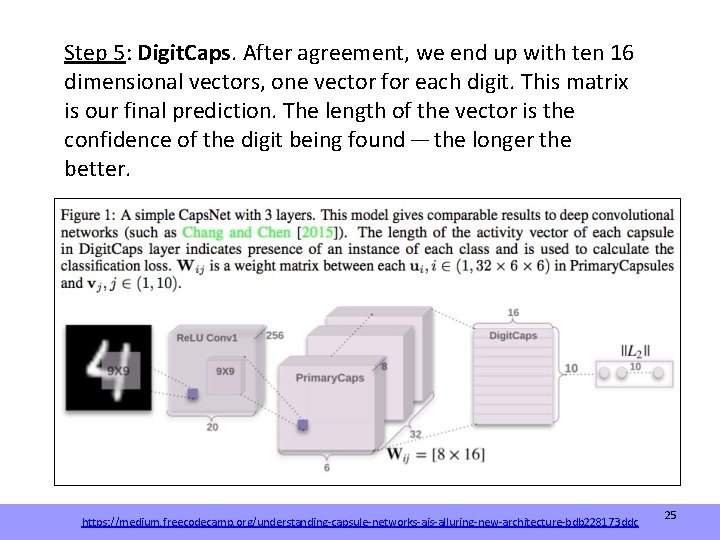

Step 5: Digit Caps. After agreement, we end up with ten 16-dimensional vectors, one vector for each digit. This 10 x 16 matrix is our final prediction. The length of each vector is the confidence that the corresponding digit is present: the longer the vector, the higher the confidence.
https://medium.freecodecamp.org/understanding-capsule-networks-ais-alluring-new-architecture-bdb228173ddc
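Reading off a prediction is then just a matter of taking vector lengths. A minimal sketch with hypothetical DigitCaps values: the predicted digit is the index of the longest vector. Because each length is computed independently (there is no softmax across digits), several digits can have long vectors at once, which is how the original paper handles overlapping digits.

```python
import math


def capsule_lengths(digit_caps):
    """Length of each 16-D DigitCaps vector."""
    return [math.sqrt(sum(x * x for x in v)) for v in digit_caps]


def predict_digit(digit_caps):
    """Predicted digit = index of the longest DigitCaps vector."""
    lengths = capsule_lengths(digit_caps)
    return max(range(len(lengths)), key=lambda k: lengths[k])


# Hypothetical DigitCaps output: ten 16-D vectors, the one for digit 7 being the longest.
digit_caps = [[0.05] * 16 for _ in range(10)]   # length sqrt(16 * 0.0025) = 0.2
digit_caps[7] = [0.2] * 16                      # length sqrt(16 * 0.04) = 0.8
print(predict_digit(digit_caps))                # 7
```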

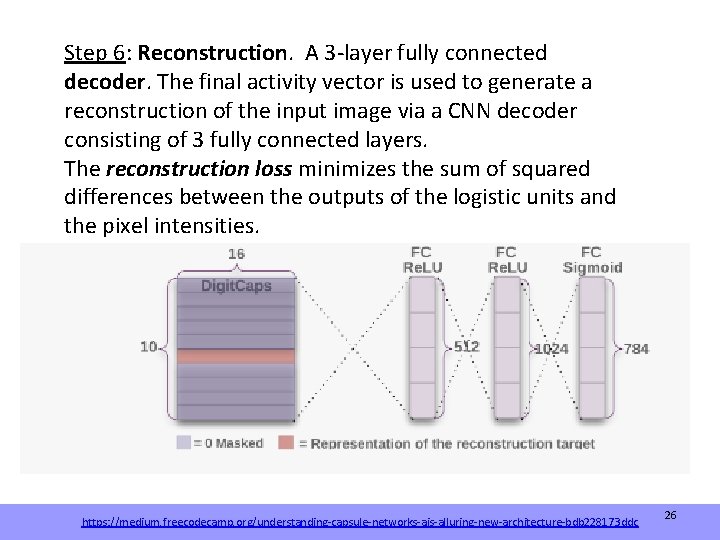

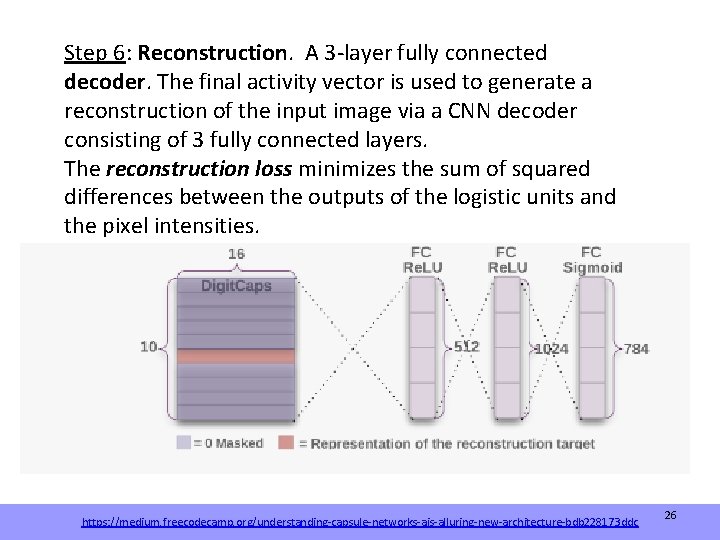

Step 6: Reconstruction. A 3-layer fully connected decoder. The final activity vector is used to generate a reconstruction of the input image via a decoder consisting of 3 fully connected layers. The reconstruction loss minimizes the sum of squared differences between the outputs of the logistic units and the pixel intensities.
https://medium.freecodecamp.org/understanding-capsule-networks-ais-alluring-new-architecture-bdb228173ddc
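During training, Sabour et al. (2017) feed the decoder only the activity vector of the correct digit capsule, masking out the other nine. The sketch below shows that masking and the sum-of-squared-differences loss; the decoder itself (three fully connected layers; 512 and 1024 ReLU units followed by 784 sigmoid units in the original paper) is omitted, and all values are hypothetical.

```python
def mask_digit_caps(digit_caps, label):
    """Keep only the activity vector of capsule `label`; zero the rest, then flatten.

    With ten 16-D capsules this gives the 160 decoder inputs."""
    return [x if j == label else 0.0 for j, v in enumerate(digit_caps) for x in v]


def reconstruction_loss(reconstruction, image):
    """Sum of squared differences between decoder outputs and pixel intensities."""
    return sum((r - p) ** 2 for r, p in zip(reconstruction, image))


digit_caps = [[0.1 * (j + 1)] * 16 for j in range(10)]   # hypothetical DigitCaps output
decoder_input = mask_digit_caps(digit_caps, label=3)
print(len(decoder_input), sum(1 for x in decoder_input if x != 0.0))   # 160 16

# Hypothetical 2-pixel "image" and reconstruction, just to show the loss arithmetic.
print(reconstruction_loss([0.5, 1.0], [0.0, 1.0]))   # 0.25
```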


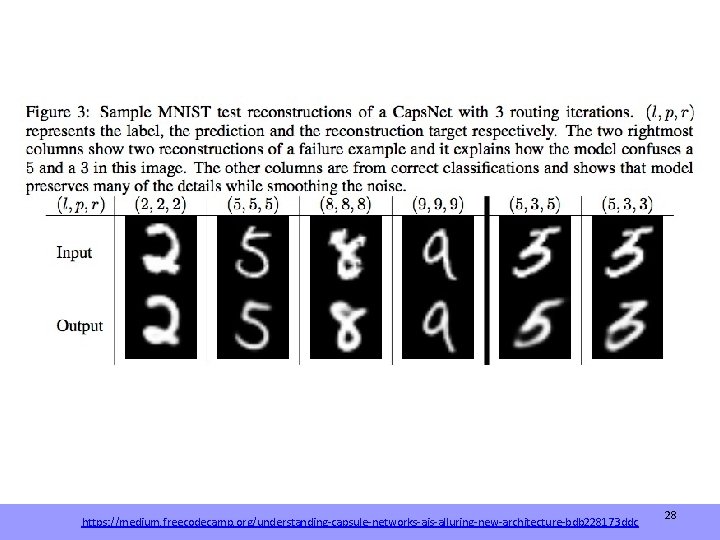

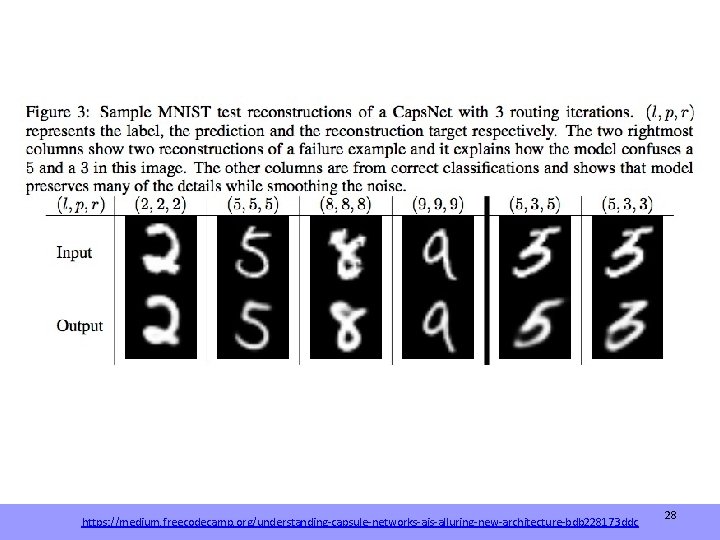

https://medium.freecodecamp.org/understanding-capsule-networks-ais-alluring-new-architecture-bdb228173ddc

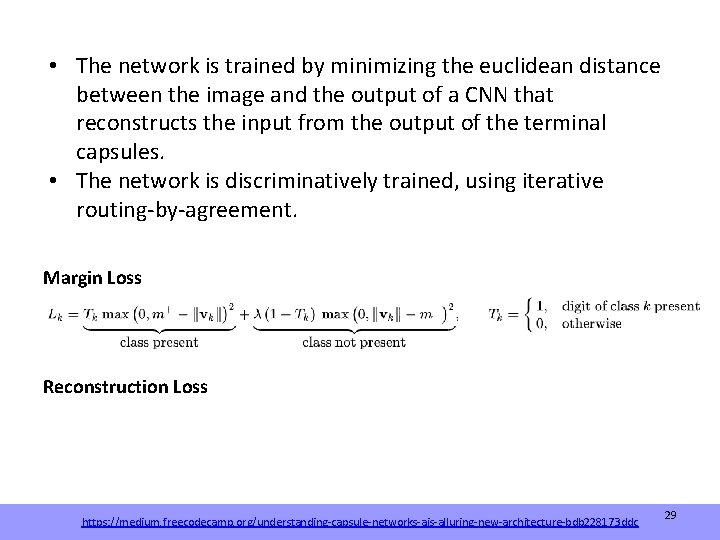

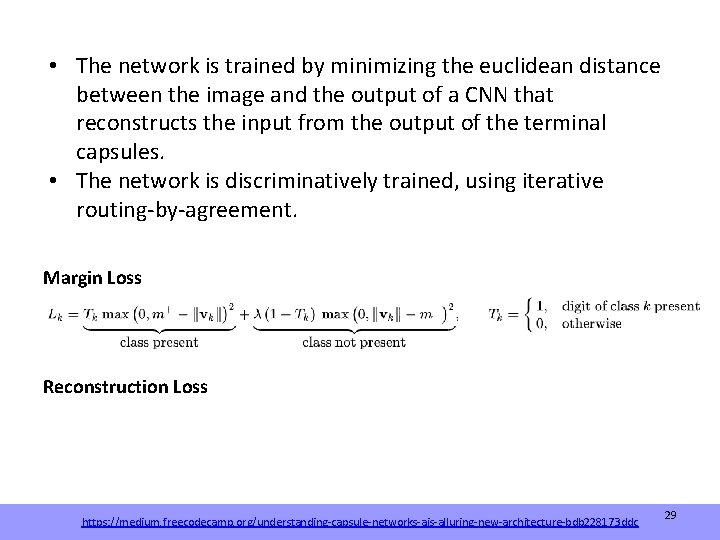

• In addition to the classification (margin) loss, the network is trained by minimizing the Euclidean distance between the image and the output of a decoder that reconstructs the input from the output of the terminal capsules.
• The network is trained discriminatively, using iterative routing-by-agreement.
[Figure panels: Margin Loss, Reconstruction Loss]
https://medium.freecodecamp.org/understanding-capsule-networks-ais-alluring-new-architecture-bdb228173ddc
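A sketch of the two losses named in the figure, using the margin-loss form and the values reported by Sabour et al. (2017): m+ = 0.9, m- = 0.1, a down-weighting of 0.5 for absent digits, and a reconstruction weight of 0.0005 so that reconstruction does not dominate. The capsule lengths below are hypothetical.

```python
def margin_loss(lengths, label, m_plus=0.9, m_minus=0.1, lam=0.5):
    """Margin loss summed over digit capsules.

    The correct capsule is pushed to length >= m_plus; every other capsule is
    pushed to length <= m_minus, down-weighted by lam."""
    loss = 0.0
    for k, length in enumerate(lengths):
        if k == label:
            loss += max(0.0, m_plus - length) ** 2
        else:
            loss += lam * max(0.0, length - m_minus) ** 2
    return loss


def total_loss(lengths, label, recon_loss, recon_weight=0.0005):
    """Margin loss plus the down-weighted reconstruction loss."""
    return margin_loss(lengths, label) + recon_weight * recon_loss


confident = [0.1] * 10
confident[3] = 0.95
print(margin_loss(confident, label=3))          # 0.0: every capsule is on the right side

unsure = [0.1] * 10
unsure[3] = 0.5
unsure[5] = 0.6
print(round(margin_loss(unsure, label=3), 4))   # 0.4**2 + 0.5 * 0.5**2 = 0.285
```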

Capsule – Future of ANN?
• The idea has been widely welcomed.
• But it has not yet been tested on other large datasets. The first results seem promising, but so far it has been tested on only a few datasets.
• Also, the implemented systems are very slow to train.
[Quora] “At this moment in time it is not possible to say whether capsule networks are the future for neural AI. Other experiments besides image classification will need to be conducted to proof that the techniques is robust for all other kinds of learning that involve other aspects of perception besides the visual one. … Lots more work has to be done in the structure of these learning architectures.”