
- Slides: 20

Echelon NVIDIA’s Extreme-Scale Computing Project

Echelon Team

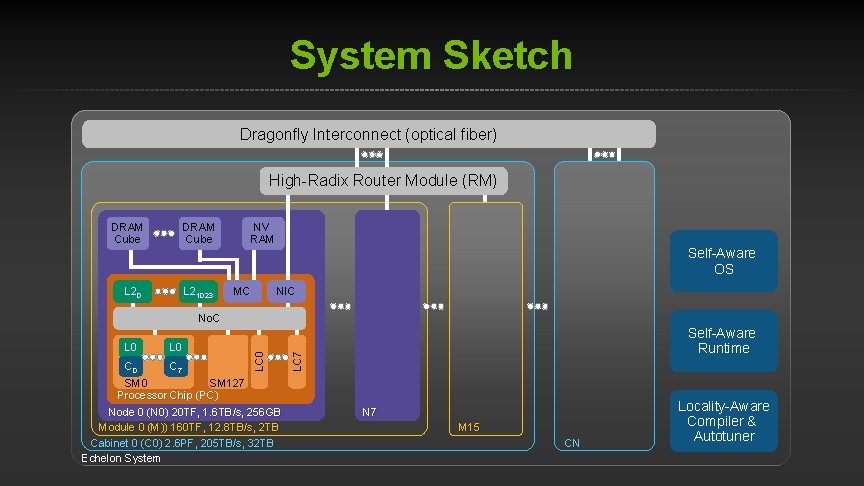

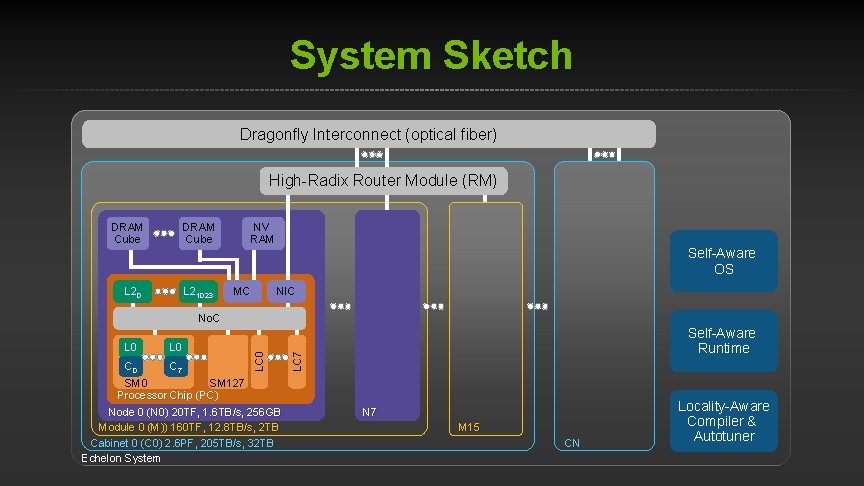

System Sketch

- Echelon system: Dragonfly interconnect (optical fiber) and high-radix router modules (RM) connecting cabinets C0 to CN
- Cabinet 0 (C0): modules M0 to M15; 2.6 PF, 205 TB/s, 32 TB
- Module 0 (M0): nodes N0 to N7; 160 TF, 12.8 TB/s, 2 TB
- Node 0 (N0): processor chip with DRAM cube and NV RAM; 20 TF, 1.6 TB/s, 256 GB
- Processor chip (PC): SM0 to SM127 (cores C0 to C7 with L0 storage), latency cores LC0 to LC7, L2 banks 0 to 1023 on a NoC, memory controller (MC), NIC
- Software: self-aware OS, self-aware runtime, locality-aware compiler & autotuner
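The sketch's per-level figures compose by simple replication. A minimal Python check, assuming (from the labels N0 to N7 and M0 to M15) 8 nodes per module and 16 modules per cabinet; the `scale` helper and the dictionary keys are illustrative, not part of any Echelon software:

```python
# Roll up the Echelon system sketch from the node level.
# Fan-outs are inferred from the sketch labels (N0..N7, M0..M15);
# the helper and key names are illustrative only.

NODE = {"tflops": 20.0, "tb_per_s": 1.6, "gb": 256}

NODES_PER_MODULE = 8       # N0..N7
MODULES_PER_CABINET = 16   # M0..M15


def scale(level: dict, fanout: int) -> dict:
    """Aggregate a level by replicating it `fanout` times."""
    return {key: value * fanout for key, value in level.items()}


module = scale(NODE, NODES_PER_MODULE)
cabinet = scale(module, MODULES_PER_CABINET)

if __name__ == "__main__":
    print("module :", module)
    print("cabinet:", cabinet)
```

The results (160 TF, 12.8 TB/s, 2048 GB per module; 2560 TF, 204.8 TB/s, 32768 GB per cabinet) match the sketch's rounded 2.6 PF, 205 TB/s, and 32 TB. The later cabinet slide packages the same 128 nodes as 32 modules of 4 nodes.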

Power is THE Problem

1. Data Movement Dominates Power
2. Optimize the Storage Hierarchy
3. Tailor Memory to the Application

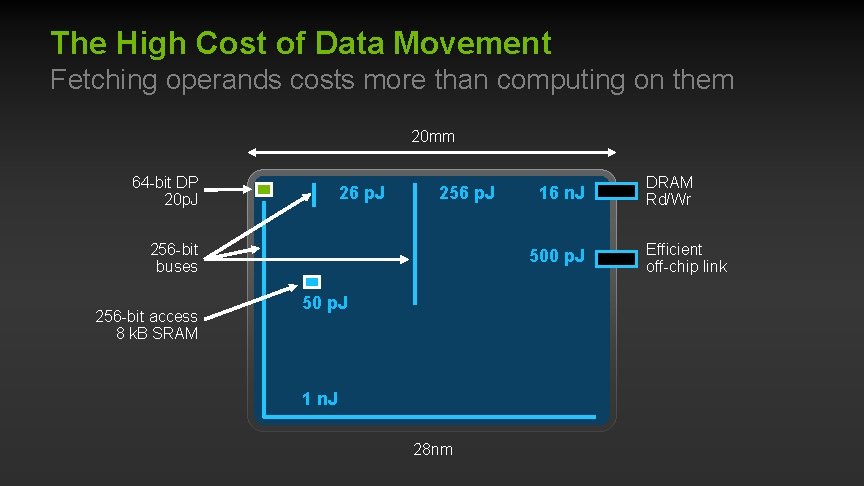

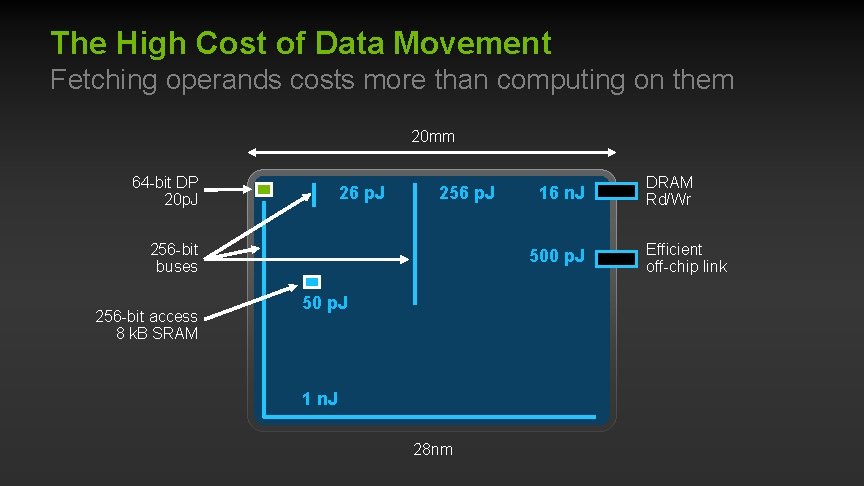

The High Cost of Data Movement

Fetching operands costs more than computing on them (28 nm, 20 mm die):

- 64-bit DP operation: 20 pJ
- 256-bit access to an 8 kB SRAM: 50 pJ
- 256-bit on-chip buses: 26 pJ to 1 nJ, growing with distance across the die
- Efficient off-chip link: 500 pJ
- DRAM read/write: 16 nJ
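Normalizing each cost to a single 64-bit DP operation makes the slide's point concrete. A small sketch using only the values whose labels are unambiguous in the extracted figure (the bus energies are left out); the dictionary and function names are illustrative:

```python
# Energy per operation at 28 nm, from the "High Cost of Data Movement" slide (pJ).
ENERGY_PJ = {
    "64-bit DP operation": 20,
    "256-bit access, 8 kB SRAM": 50,
    "efficient off-chip link": 500,
    "DRAM read/write": 16_000,   # 16 nJ
}


def relative_to_flop(energies: dict, flop_key: str = "64-bit DP operation") -> dict:
    """Express each energy as a multiple of one double-precision operation."""
    base = energies[flop_key]
    return {name: pj / base for name, pj in energies.items()}


if __name__ == "__main__":
    for name, ratio in relative_to_flop(ENERGY_PJ).items():
        print(f"{name:28s} {ratio:6.1f}x")
```

A DRAM access costs as much energy as 800 DP operations and an off-chip transfer as much as 25, while a local 8 kB SRAM access costs only 2.5.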

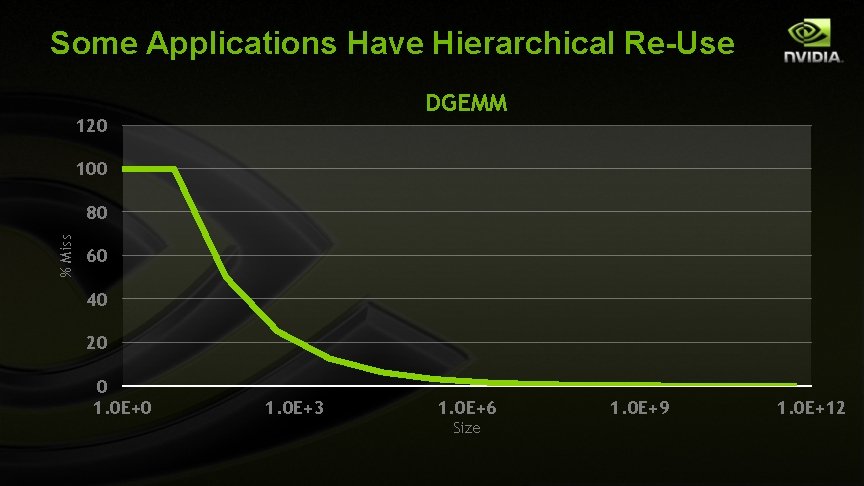

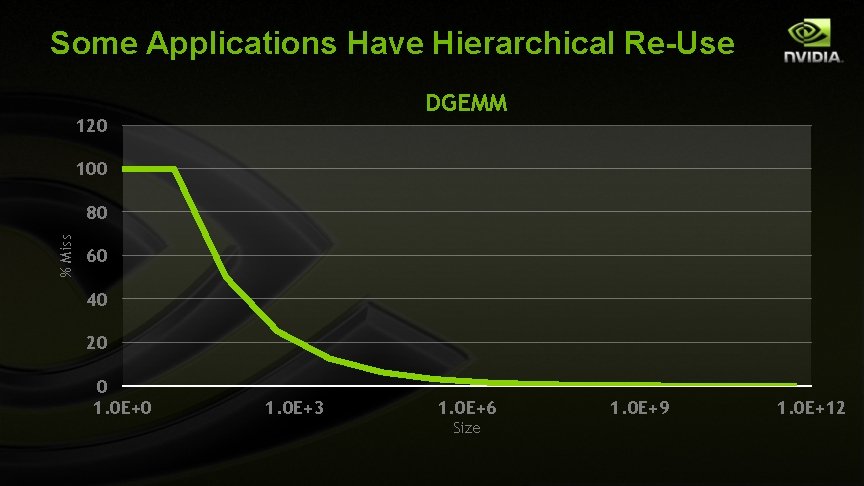

Some Applications Have Hierarchical Re-Use

[Figure: DGEMM, % miss vs. size]
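DGEMM reuses data hierarchically because it can be blocked: once a b × b tile of A and of B is held in a level of storage, each loaded word is used b times, so traffic to the next level falls as 2n³/b. Applying the same tiling recursively (a small tile for L1 inside a larger one for L2, and so on) gives reuse at every level. The sketch below is textbook loop tiling in plain Python, not the experiment behind the plot; `blocked_matmul` and its word counter are hypothetical names, and it assumes b divides n:

```python
def blocked_matmul(A, B, n, b):
    """Compute C = A @ B for n x n matrices (lists of lists) using b x b tiles.

    Returns (C, words_loaded). words_loaded counts the A and B elements
    brought into a tile buffer; each of them is then reused b times, so the
    count is 2 * n**3 / b and shrinks as the tile (the storage level) grows.
    """
    if n % b:
        raise ValueError("this sketch assumes b divides n")
    C = [[0.0] * n for _ in range(n)]
    words_loaded = 0
    for i0 in range(0, n, b):
        for j0 in range(0, n, b):
            for k0 in range(0, n, b):
                words_loaded += 2 * b * b          # one tile of A, one tile of B
                for i in range(i0, i0 + b):
                    for k in range(k0, k0 + b):
                        a_ik = A[i][k]             # reused across the j loop
                        for j in range(j0, j0 + b):
                            C[i][j] += a_ik * B[k][j]
    return C, words_loaded


if __name__ == "__main__":
    n = 8
    A = [[float(i + k) for k in range(n)] for i in range(n)]
    B = [[float((i * k) % 5) for k in range(n)] for i in range(n)]
    for b in (1, 2, 4, 8):
        _, words = blocked_matmul(A, B, n, b)
        print(f"tile {b}: {words} words loaded")   # 1024, 512, 256, 128
```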

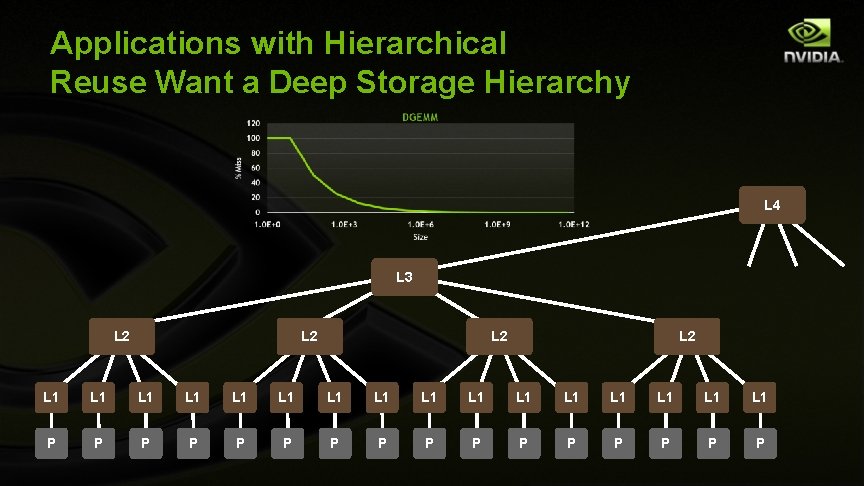

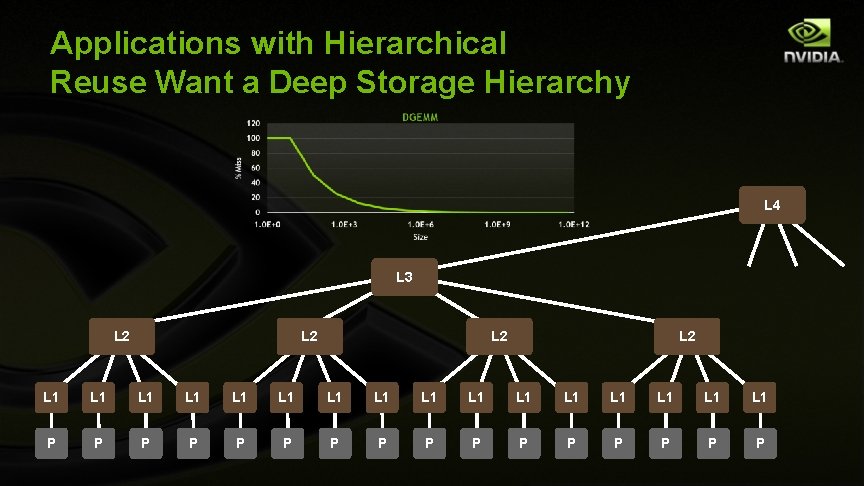

Applications with Hierarchical Reuse Want a Deep Storage Hierarchy

[Figure: four processors (P), each with a private L1; pairs of L1s share an L2; both L2s share an L3, backed by an L4]

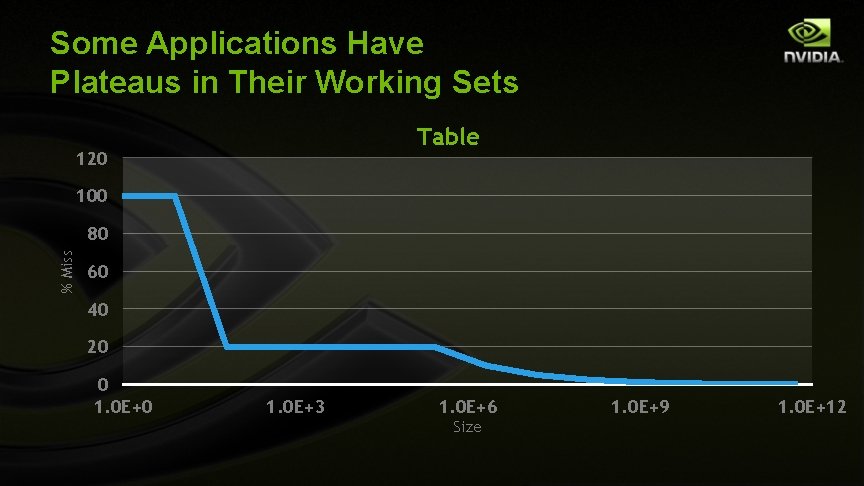

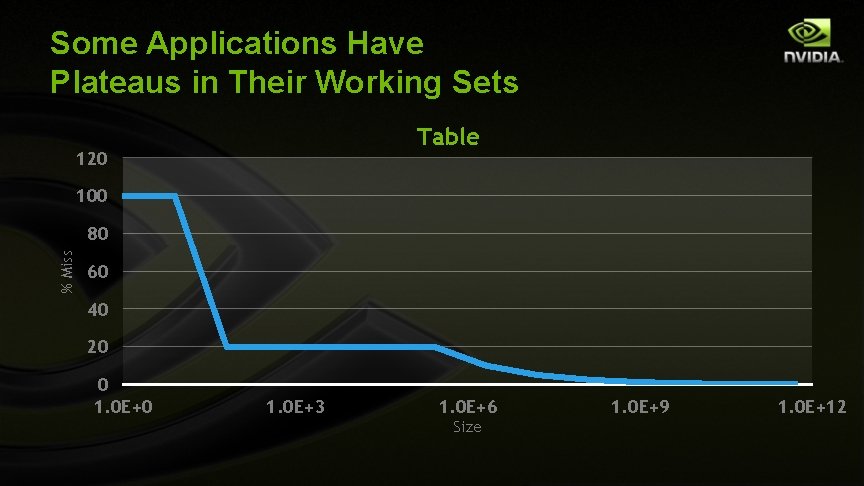

Some Applications Have Plateaus in Their Working Sets

[Figure: % miss vs. size]

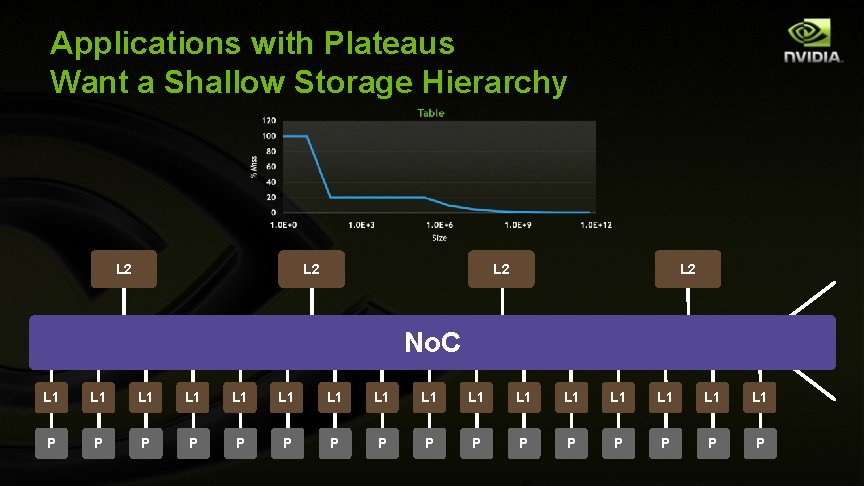

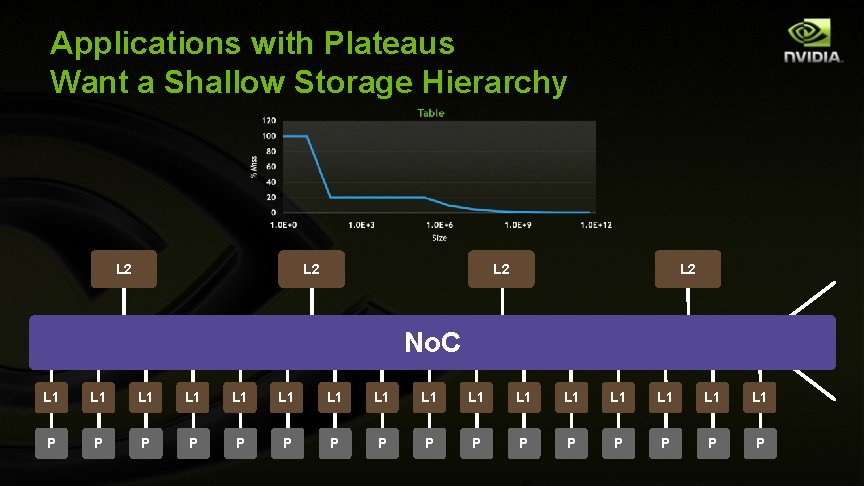

Applications with Plateaus Want a Shallow Storage Hierarchy

[Figure: four processors (P) with private L1s, connected through a NoC to shared L2 banks]
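Why the shape of the miss-rate curve decides hierarchy depth can be seen with a toy energy model: every access pays for each level it probes, so an intermediate level that sits on a plateau adds lookup energy without removing misses. This sketch assumes inclusive levels (the fraction reaching a level equals the miss rate of the level above it), and every capacity, energy, and curve in it is hypothetical, chosen only for illustration:

```python
import math


def access_energy(levels, miss_rate, dram_pj):
    """Average energy per access (pJ) for a hierarchy probed from L1 outward.

    levels    : list of (capacity_bytes, energy_pj), innermost first.
    miss_rate : the application's miss-rate curve, i.e. the fraction of
                accesses that miss in a store of the given capacity.
    Simplification: levels are inclusive, so the fraction of accesses that
    reaches a level equals the miss rate of the level above it.
    """
    energy, reaching = 0.0, 1.0
    for capacity, pj in levels:
        energy += reaching * pj          # every access that gets here pays a lookup
        reaching = miss_rate(capacity)   # the ones that miss move outward
    return energy + reaching * dram_pj


def plateau(size):
    """Hypothetical curve that is flat between 1e3 and 1e6 bytes."""
    if size >= 1e6:
        return 0.01
    if size >= 1e3:
        return 0.3
    return 0.9


def reuse(size):
    """Hypothetical curve that falls steadily with size, like DGEMM."""
    return min(1.0, math.sqrt(1e3 / size))


DEEP = [(1e4, 10), (1e5, 20), (1e7, 50)]   # L1, L2, L3 (made-up values)
SHALLOW = [(1e4, 10), (1e7, 50)]           # L1 and one large shared level
DRAM_PJ = 1000

if __name__ == "__main__":
    for name, curve in (("plateau", plateau), ("reuse", reuse)):
        deep = access_energy(DEEP, curve, DRAM_PJ)
        shallow = access_energy(SHALLOW, curve, DRAM_PJ)
        print(f"{name:8s} deep {deep:5.1f} pJ   shallow {shallow:5.1f} pJ")
```

With these made-up numbers the plateau curve costs 41 pJ per access in the three-level hierarchy versus 35 pJ in the two-level one, while the steadily falling reuse curve reverses the ranking (about 31.3 pJ versus 35.8 pJ).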

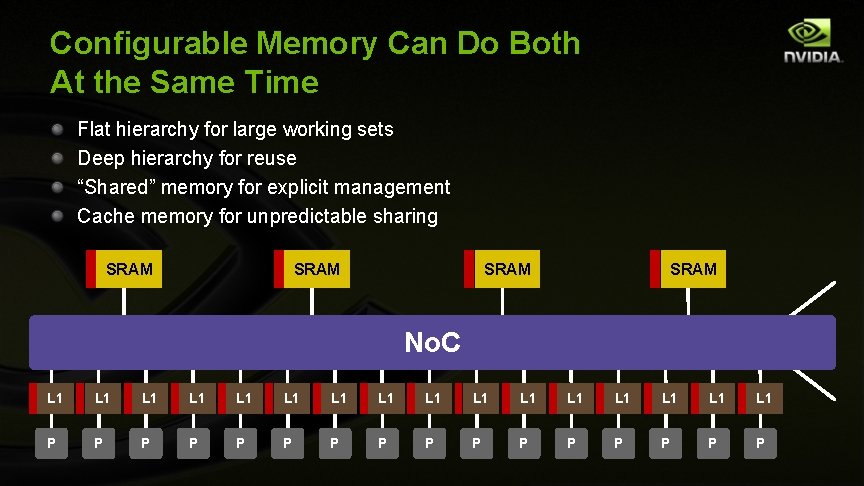

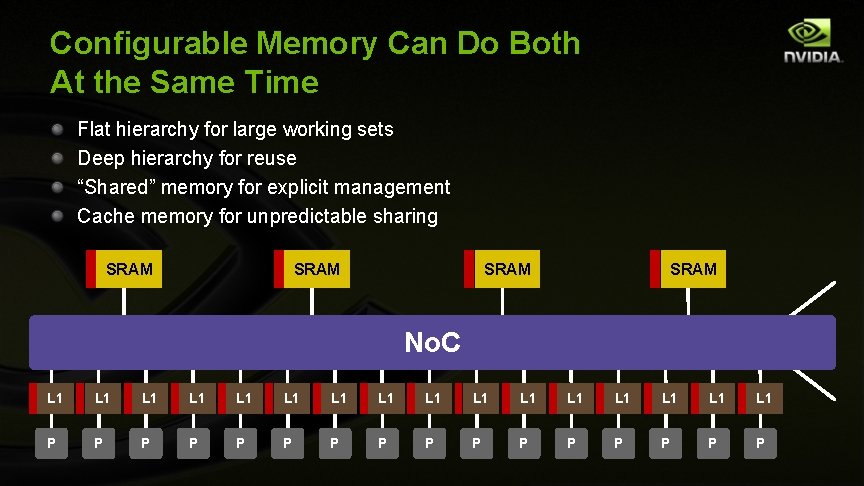

Configurable Memory Can Do Both At the Same Time

- Flat hierarchy for large working sets
- Deep hierarchy for reuse
- “Shared” memory for explicit management
- Cache memory for unpredictable sharing

[Figure: four processors (P) with L1s, connected through a NoC to configurable SRAM]
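One way to picture a configurable memory is as a pool of SRAM banks whose roles are chosen per application. A hypothetical sketch using the chip slide's numbers (1024 banks of 256 KB, 128 SMs) and assuming the banks are spread evenly, 8 per SM; `BankConfig` and `capacities_kb` are invented for illustration and do not describe Echelon's actual configuration mechanism:

```python
from dataclasses import dataclass

# Chip-level numbers from the chip slide.
SRAM_BANKS = 1024
BANK_KB = 256
SMS = 128
BANKS_PER_SM = SRAM_BANKS // SMS   # 8, assuming an even spread (hypothetical)


@dataclass
class BankConfig:
    """Hypothetical per-SM split of the SRAM banks nearest that SM."""
    scratch: int   # explicitly managed "shared" memory
    private: int   # SM-private cache level (adds depth)
    # The remaining banks join one chip-wide cache reached over the NoC (flat).


def capacities_kb(cfg: BankConfig) -> dict:
    """Capacity of each role, in KB, for a given configuration."""
    pooled = BANKS_PER_SM - cfg.scratch - cfg.private
    if min(cfg.scratch, cfg.private, pooled) < 0:
        raise ValueError("configuration uses more banks than an SM has")
    return {
        "scratch_per_sm": cfg.scratch * BANK_KB,
        "private_cache_per_sm": cfg.private * BANK_KB,
        "shared_cache_chip": pooled * SMS * BANK_KB,
    }


if __name__ == "__main__":
    flat = BankConfig(scratch=0, private=0)   # large working sets: one big pool
    deep = BankConfig(scratch=2, private=4)   # reuse: a private level per SM
    print(capacities_kb(flat))
    print(capacities_kb(deep))
```

The flat configuration pools all 256 MB into one shared cache; the deep one trades most of that pool for 512 KB of scratchpad and 1 MB of private cache per SM, leaving 64 MB shared.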

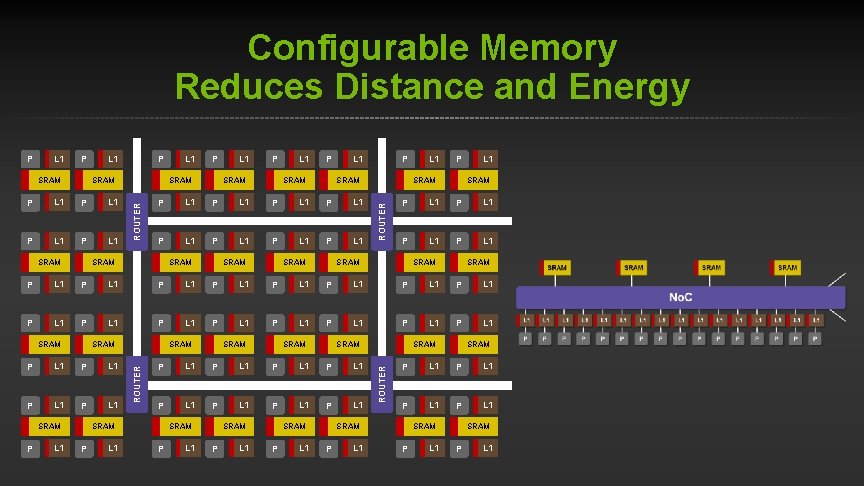

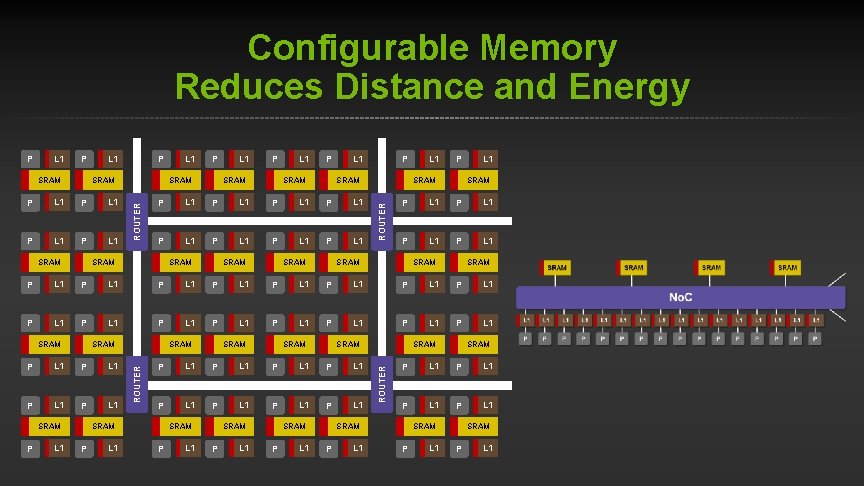

Configurable Memory Reduces Distance and Energy

[Figure: tiled floorplan in which each processor (P) and its L1 sit beside local SRAM banks, with tiles linked through routers]
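Distance drives energy, so placing data in a bank next to the processor that uses it pays off. A toy model on a k × k mesh of tiles (one processor and one SRAM bank per tile, hops counted as Manhattan distance) compares local placement with addresses interleaved uniformly over all banks; the mesh model and function names are assumptions, not taken from the slides:

```python
from itertools import product


def hops(a, b):
    """Manhattan distance between two tiles, in router hops."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def mean_hops_local(k):
    """Each processor keeps its data in the SRAM bank of its own tile."""
    tiles = list(product(range(k), repeat=2))
    return sum(hops(t, t) for t in tiles) / len(tiles)


def mean_hops_interleaved(k):
    """Addresses interleaved over all banks: the target tile is uniform."""
    tiles = list(product(range(k), repeat=2))
    return sum(hops(p, b) for p in tiles for b in tiles) / len(tiles) ** 2


if __name__ == "__main__":
    for k in (4, 8):
        print(k, mean_hops_local(k), mean_hops_interleaved(k))
```

On a 4 × 4 mesh interleaving averages 2.5 hops per access and on an 8 × 8 mesh 5.25, matching the closed form 2(k² - 1)/(3k), while local placement needs none.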

An NVIDIA ExaScale Machine

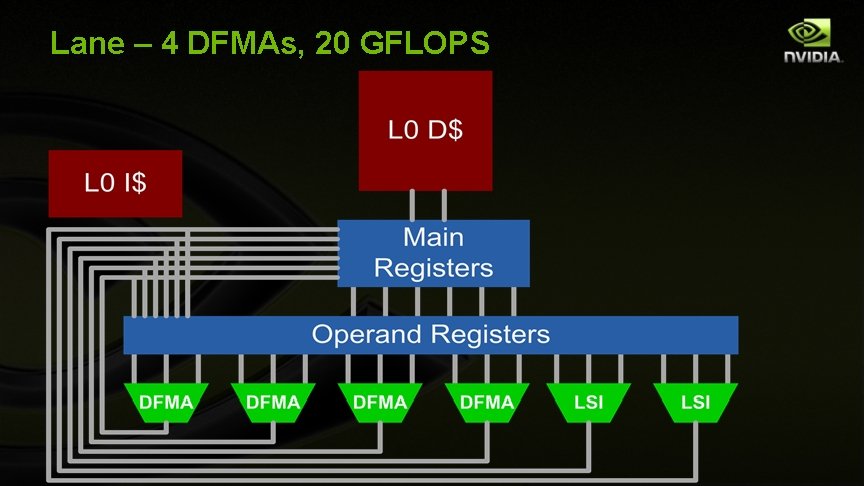

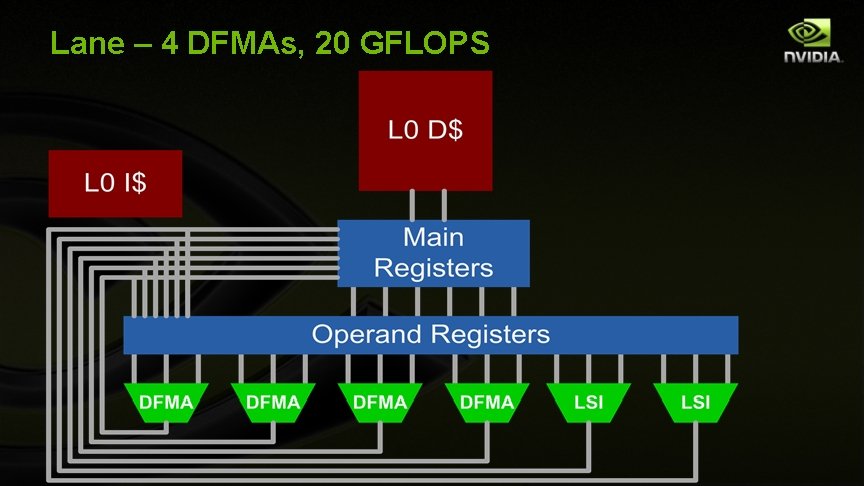

Lane – 4 DFMAs, 20 GFLOPS

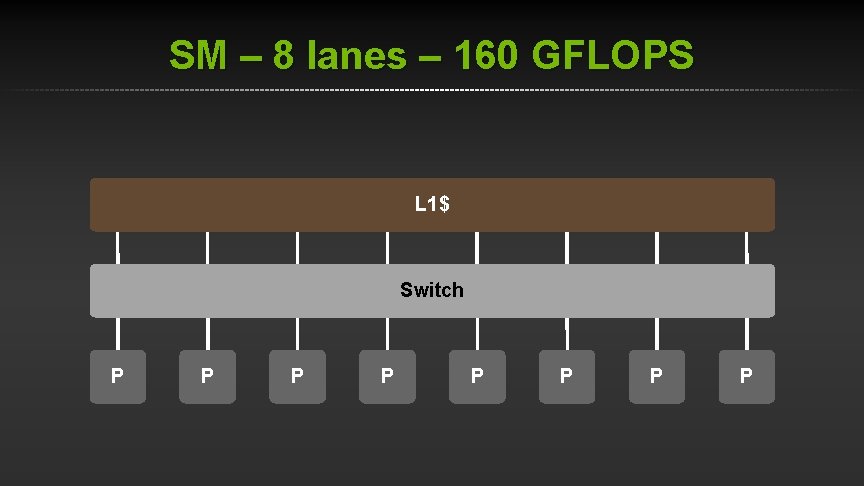

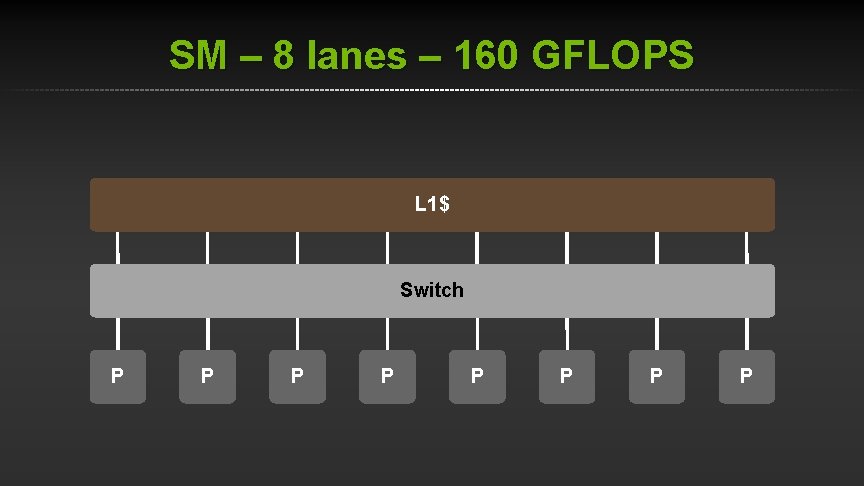

SM – 8 lanes – 160 GFLOPS

[Figure: lanes (P), a switch, and an L1 cache]

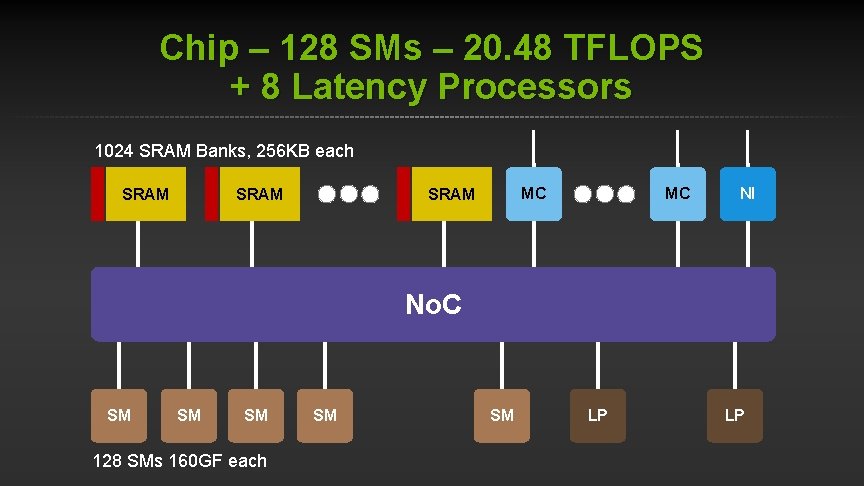

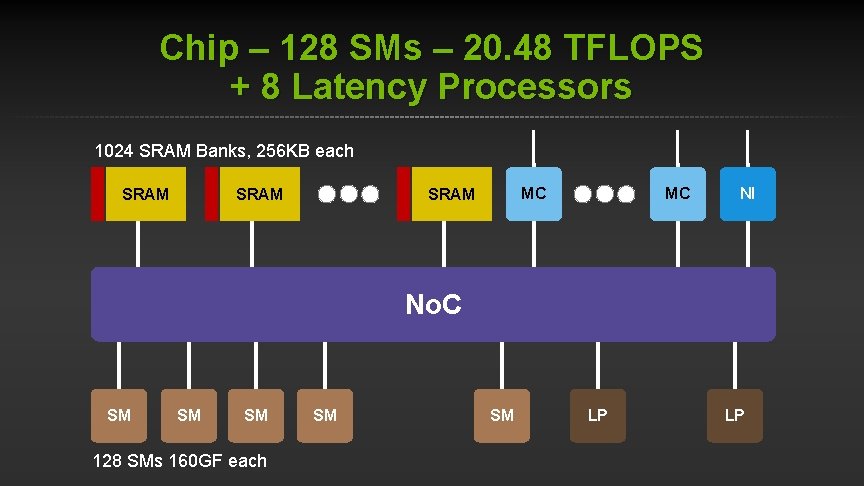

Chip – 128 SMs – 20.48 TFLOPS + 8 Latency Processors

1024 SRAM banks, 256 KB each

[Figure: 128 SMs (160 GF each) and latency processors (LP) connected by a NoC to the SRAM banks, memory controllers (MC), and network interface (NI)]
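The lane, SM, and chip figures compose multiplicatively. The slides do not state a clock, but counting each DFMA as two flops, 4 DFMAs at 20 GFLOPS implies 2.5 GHz; that clock is an inference, and the constant names below are illustrative:

```python
# Compute roll-up from the lane, SM, and chip slides.
FLOPS_PER_FMA = 2      # a fused multiply-add counts as two flops
DFMAS_PER_LANE = 4     # "4 DFMAs"
LANE_GFLOPS = 20.0
LANES_PER_SM = 8
SMS_PER_CHIP = 128
SRAM_BANKS, BANK_KB = 1024, 256

# Not stated on the slides: the clock implied by 4 DFMAs delivering 20 GFLOPS.
implied_clock_ghz = LANE_GFLOPS / (DFMAS_PER_LANE * FLOPS_PER_FMA)   # 2.5
sm_gflops = LANE_GFLOPS * LANES_PER_SM                               # 160.0
chip_tflops = sm_gflops * SMS_PER_CHIP / 1000                        # 20.48 (SMs only)
chip_sram_mb = SRAM_BANKS * BANK_KB / 1024                           # 256.0

if __name__ == "__main__":
    print(implied_clock_ghz, sm_gflops, chip_tflops, chip_sram_mb)
```

The latency processors are left out of the FLOPS total, as on the slide, and the 1024 banks of 256 KB account for the 256 MB of on-chip SRAM listed on the node slide.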

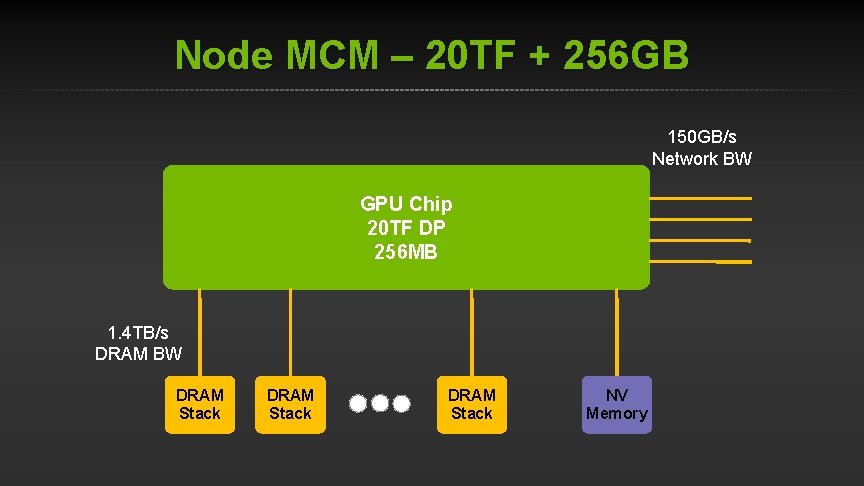

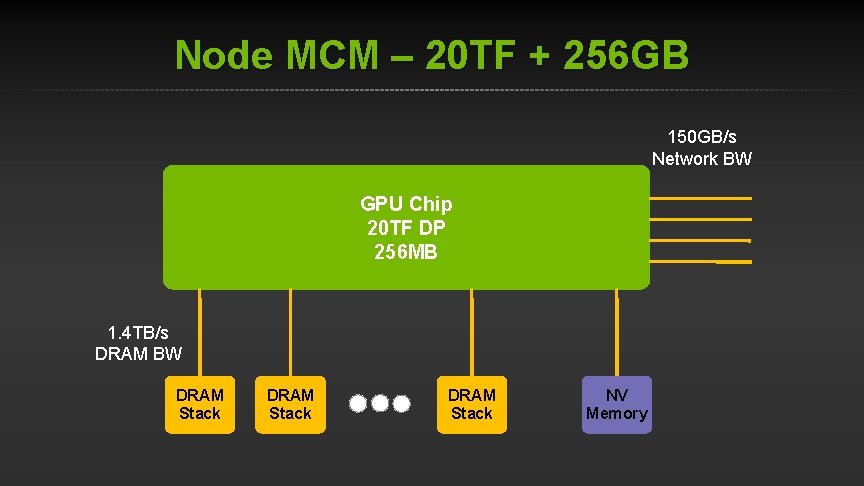

Node MCM – 20 TF + 256 GB

[Figure: GPU chip (20 TF DP, 256 MB on-chip SRAM) with DRAM stacks at 1.4 TB/s DRAM BW, NV memory, and 150 GB/s network BW]
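Dividing bandwidth by compute gives the node's balance, which shows why data movement has to be minimized. A quick calculation using the node figures (decimal units assumed; constant names are illustrative):

```python
# Balance of one node, from the node slide (decimal units assumed).
NODE_FLOPS = 20e12        # 20 TF DP
DRAM_BYTES_S = 1.4e12     # 1.4 TB/s DRAM bandwidth
NET_BYTES_S = 150e9       # 150 GB/s network bandwidth
WORD_BYTES = 8            # one double-precision operand

dram_bytes_per_flop = DRAM_BYTES_S / NODE_FLOPS          # about 0.07
net_bytes_per_flop = NET_BYTES_S / NODE_FLOPS            # about 0.0075
flops_per_dram_word = WORD_BYTES / dram_bytes_per_flop   # about 114

if __name__ == "__main__":
    print(f"DRAM:    {dram_bytes_per_flop:.4f} B/flop")
    print(f"network: {net_bytes_per_flop:.4f} B/flop")
    print(f"flops needed per DRAM word: {flops_per_dram_word:.0f}")
```

At about 0.07 bytes/flop from DRAM, a kernel needs roughly 114 flops per 8-byte operand fetched from DRAM to keep the node busy; the network offers roughly 9x less (0.0075 bytes/flop).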

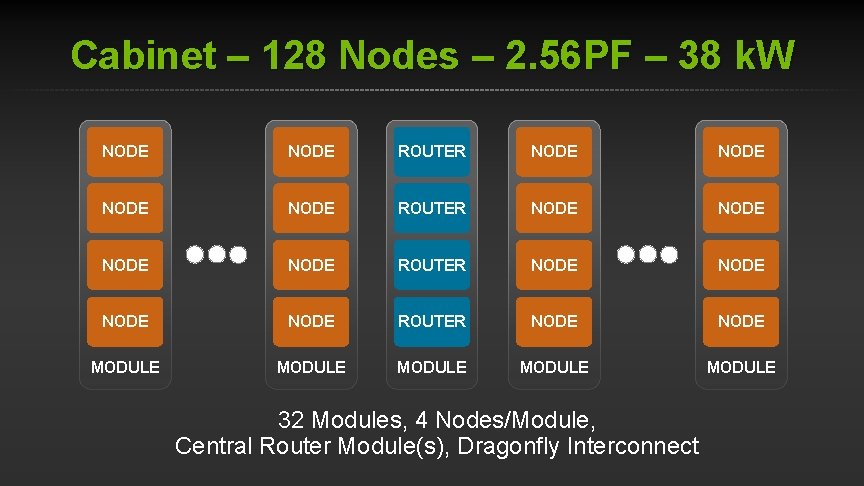

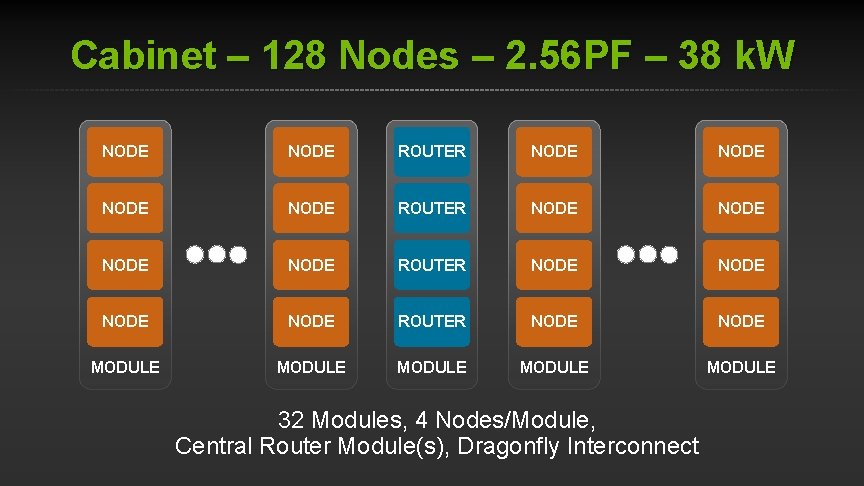

Cabinet – 128 Nodes – 2.56 PF – 38 kW

32 modules, 4 nodes/module, central router module(s), Dragonfly interconnect

[Figure: modules of four nodes connected through a router]

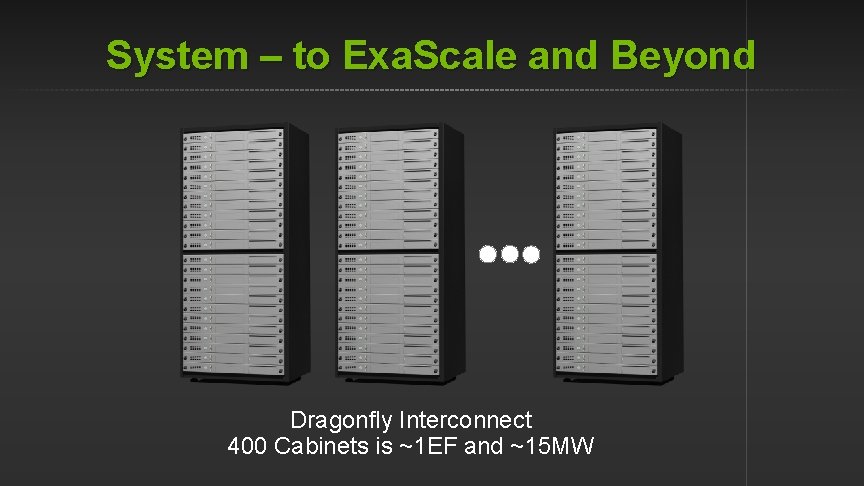

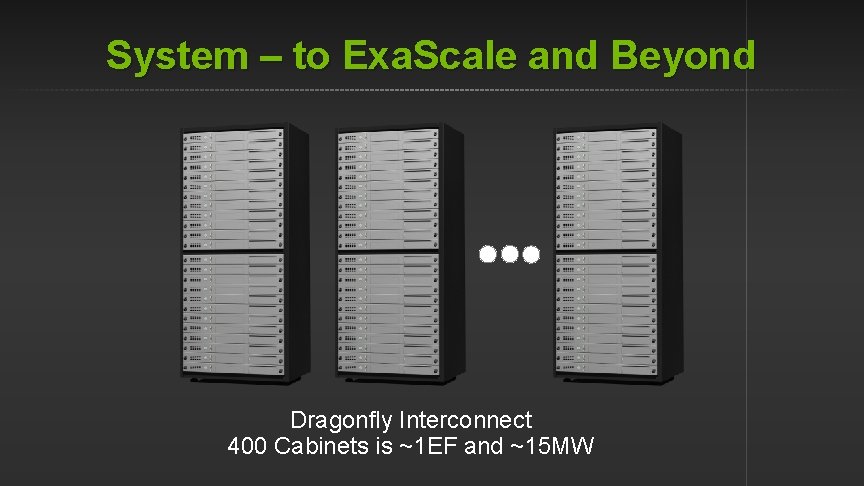

System – to ExaScale and Beyond

Dragonfly interconnect: 400 cabinets is ~1 EF and ~15 MW
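The system totals follow from the node and cabinet slides. A roll-up, with illustrative constant names:

```python
# System roll-up from the node, cabinet, and system slides.
NODE_TFLOPS = 20.0
NODES_PER_CABINET = 128
CABINET_KW = 38.0
CABINETS = 400

cabinet_pflops = NODE_TFLOPS * NODES_PER_CABINET / 1000      # 2.56
system_eflops = cabinet_pflops * CABINETS / 1000             # about 1.02
system_mw = CABINET_KW * CABINETS / 1000                     # 15.2
gflops_per_watt = (system_eflops * 1e9) / (system_mw * 1e6)  # about 67

if __name__ == "__main__":
    print(f"{system_eflops:.3f} EF, {system_mw:.1f} MW, {gflops_per_watt:.1f} GFLOPS/W")
```

400 cabinets give 1.024 EF at 15.2 MW, about 67 GFLOPS/W, consistent with the slide's ~1 EF and ~15 MW.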

CONCLUSION

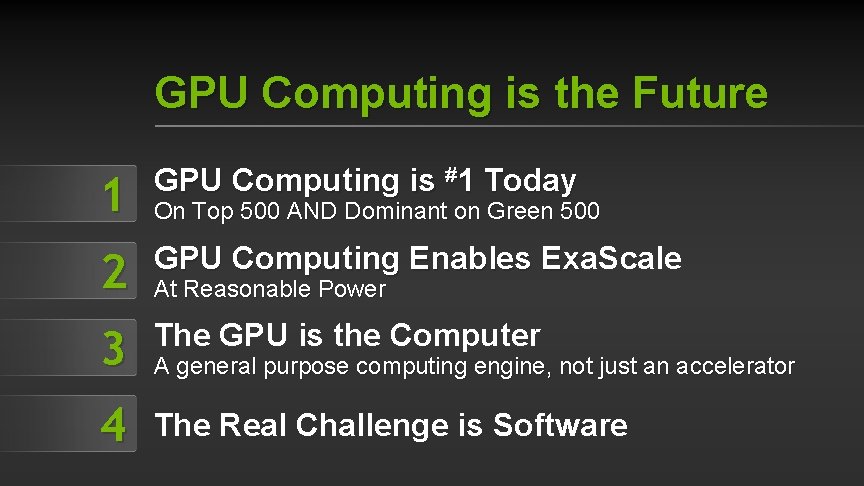

GPU Computing is the Future

1. GPU Computing is #1 Today: on the Top 500 AND dominant on the Green 500
2. GPU Computing Enables ExaScale at reasonable power
3. The GPU is the Computer: a general-purpose computing engine, not just an accelerator
4. The Real Challenge is Software