Discourse Annotation for Improving Spoken Dialogue Systems


Discourse Annotation for Improving Spoken Dialogue Systems
Joel Tetreault, Mary Swift, Preethum Prithviraj, Myroslava Dzikovska, James Allen
University of Rochester, Department of Computer Science
ACL Workshop on Discourse Annotation, July 25, 2004

Reference in Spoken Dialogue
- Resolving anaphoric expressions correctly is critical in task-oriented domains
  - Makes conversation easier for humans
- The reference resolution module (RRM) provides feedback to other components in the system
  - e.g., incremental parsing, the interpretation module
- Investigate how to improve the RRM:
  - Does deep semantic information provide an improvement over syntactic approaches?
  - Discourse structure could be effective in reducing the search space of antecedents and improving accuracy (Grosz and Sidner, 1986)

Goal
- Construct a linguistically rich parsed corpus to test algorithms and theories on reference in spoken dialogue, and to improve the overall system
  - Implicit roles
- Paucity of empirical work on reference in spoken dialogue (Byron and Stent, 1998; Eckert and Strube, 2000; etc.)

Outline
- Corpus Construction
  - Parsing Monroe Domain
  - Reference Annotation
  - Dialogue Structure Annotation
- Results
  - Personal pronoun evaluation
  - Dialogue Structure
- Summary

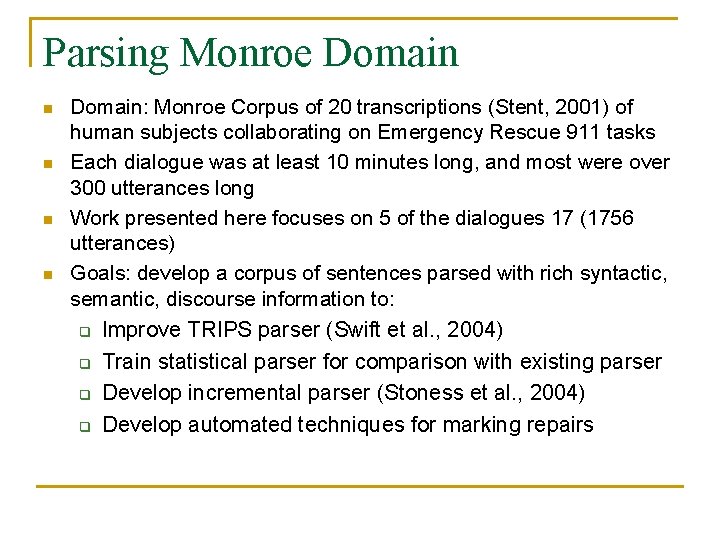

Parsing Monroe Domain
- Domain: Monroe Corpus of 20 transcriptions (Stent, 2001) of human subjects collaborating on Emergency Rescue 911 tasks
  - Each dialogue was at least 10 minutes long, and most were over 300 utterances long
  - Work presented here focuses on 5 of the dialogues (1756 utterances)
- Goals: develop a corpus of sentences parsed with rich syntactic, semantic, and discourse information to:
  - Improve the TRIPS parser (Swift et al., 2004)
  - Train a statistical parser for comparison with the existing parser
  - Develop an incremental parser (Stoness et al., 2004)
  - Develop automated techniques for marking repairs

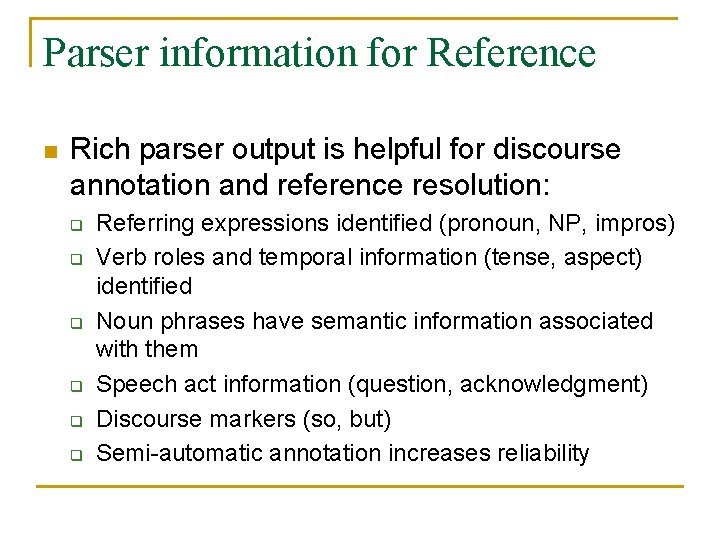

Parser information for Reference
- Rich parser output is helpful for discourse annotation and reference resolution:
  - Referring expressions are identified (pronouns, NPs, impros)
  - Verb roles and temporal information (tense, aspect) are identified
  - Noun phrases have semantic information associated with them
  - Speech act information (question, acknowledgment)
  - Discourse markers (so, but)
- Semi-automatic annotation increases reliability

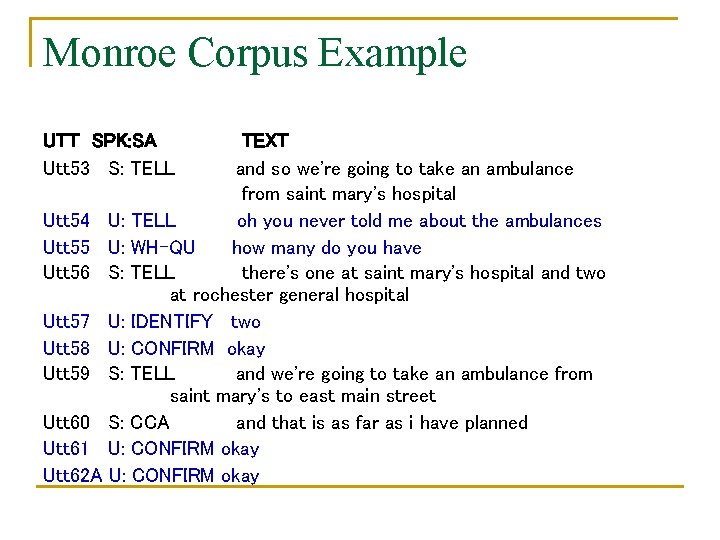

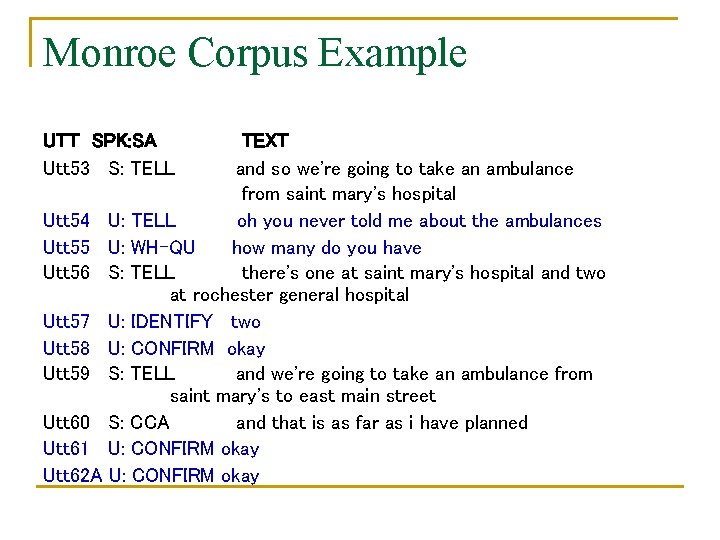

Monroe Corpus Example

| UTT | SPK | SA | TEXT |
|---|---|---|---|
| 53 | S | TELL | and so we're going to take an ambulance from saint mary's hospital |
| 54 | U | TELL | oh you never told me about the ambulances |
| 55 | U | WH-QU | how many do you have |
| 56 | S | TELL | there's one at saint mary's hospital and two at rochester general hospital |
| 57 | U | IDENTIFY | two |
| 58 | U | CONFIRM | okay |
| 59 | S | TELL | and we're going to take an ambulance from saint mary's to east main street |
| 60 | S | CCA | and that is as far as i have planned |
| 61 | U | CONFIRM | okay |
| 62 A | U | CONFIRM | okay |

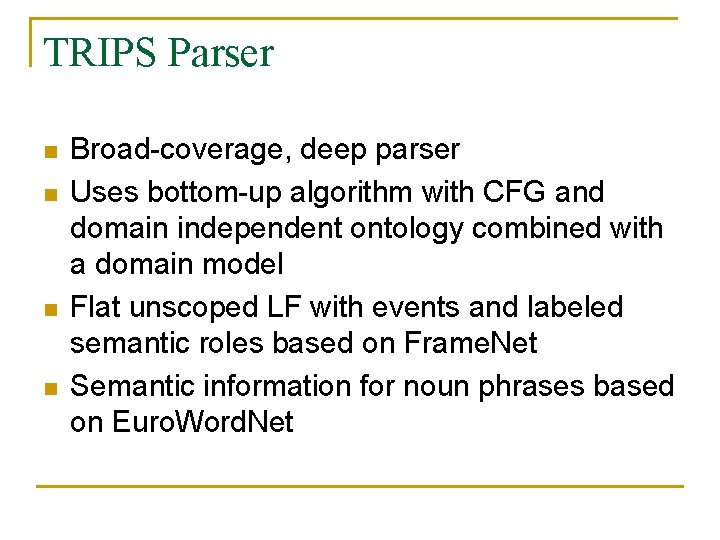

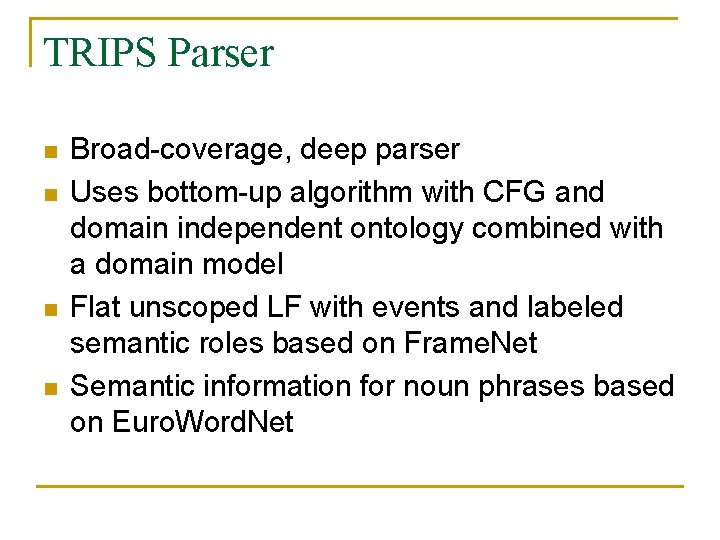

TRIPS Parser
- Broad-coverage, deep parser
- Uses a bottom-up algorithm with a CFG and a domain-independent ontology combined with a domain model
- Produces a flat, unscoped LF with events and labeled semantic roles based on FrameNet
- Semantic information for noun phrases is based on EuroWordNet

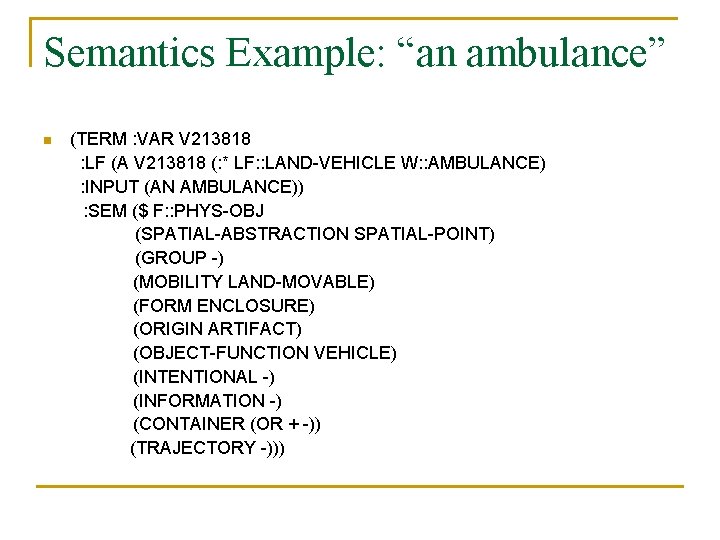

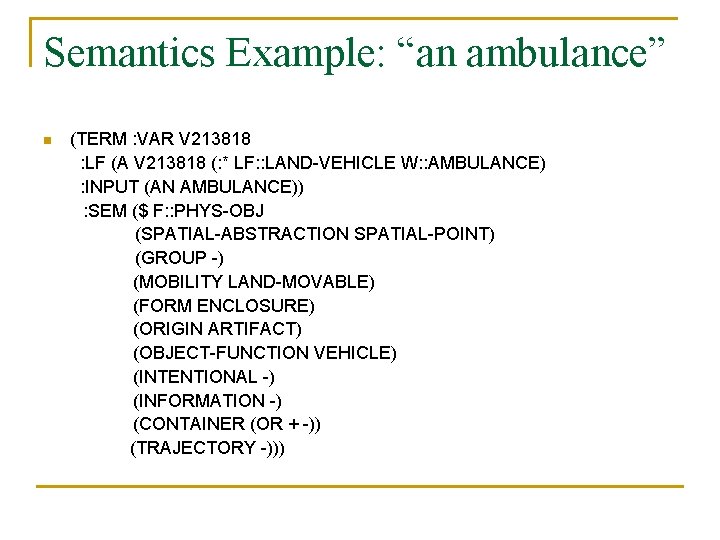

Semantics Example: “an ambulance”
- `(TERM :VAR V213818 :LF (A V213818 (:* LF::LAND-VEHICLE W::AMBULANCE) :INPUT (AN AMBULANCE)) :SEM ($ F::PHYS-OBJ (SPATIAL-ABSTRACTION SPATIAL-POINT) (GROUP -) (MOBILITY LAND-MOVABLE) (FORM ENCLOSURE) (ORIGIN ARTIFACT) (OBJECT-FUNCTION VEHICLE) (INTENTIONAL -) (INFORMATION -) (CONTAINER (OR + -)) (TRAJECTORY -)))`
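
Because the LF term is an ordinary s-expression, a few lines of Python suffice to read it into nested lists and pull out the :SEM features that a reference resolver compares. This is a minimal sketch, not TRIPS code: the helper names `tokenize`, `parse`, `keyword_args`, and `sem_features` are hypothetical, and the input string is the slide's term with the extraction spacing removed.

```python
import re
from typing import Union

SExpr = Union[str, list]

# The "an ambulance" term from the slide, with extraction spacing removed.
AMBULANCE = (
    "(TERM :VAR V213818 :LF (A V213818 (:* LF::LAND-VEHICLE W::AMBULANCE) "
    ":INPUT (AN AMBULANCE)) :SEM ($ F::PHYS-OBJ (SPATIAL-ABSTRACTION SPATIAL-POINT) "
    "(GROUP -) (MOBILITY LAND-MOVABLE) (FORM ENCLOSURE) (ORIGIN ARTIFACT) "
    "(OBJECT-FUNCTION VEHICLE) (INTENTIONAL -) (INFORMATION -) "
    "(CONTAINER (OR + -)) (TRAJECTORY -)))"
)


def tokenize(text: str) -> list[str]:
    """Split an s-expression into parentheses and whitespace-delimited atoms."""
    return re.findall(r"\(|\)|[^\s()]+", text)


def parse(tokens: list[str]) -> SExpr:
    """Recursive-descent reader: consumes tokens and returns nested lists of atoms."""
    token = tokens.pop(0)
    if token == "(":
        items = []
        while tokens[0] != ")":
            items.append(parse(tokens))
        tokens.pop(0)  # discard the matching ")"
        return items
    return token


def keyword_args(term: list) -> dict:
    """Map each :KEYWORD in a term to the element that follows it."""
    return {
        term[i]: term[i + 1]
        for i in range(1, len(term) - 1)
        if isinstance(term[i], str) and term[i].startswith(":")
    }


def sem_features(sem: list) -> dict:
    """Turn a ($ TYPE (FEATURE VALUE) ...) list into {FEATURE: VALUE}."""
    return {f[0]: f[1] for f in sem[2:] if isinstance(f, list)}


term = parse(tokenize(AMBULANCE))
args = keyword_args(term)
print(args[":VAR"], args[":SEM"][1], sem_features(args[":SEM"])["MOBILITY"])
# prints: V213818 F::PHYS-OBJ LAND-MOVABLE
```

A disjunctive value such as `(CONTAINER (OR + -))` is kept as a nested list, so a consumer can decide how to treat underspecified features.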

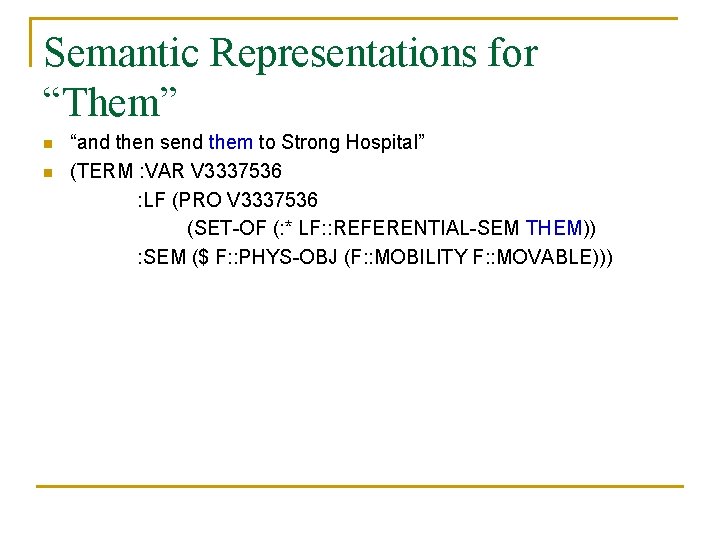

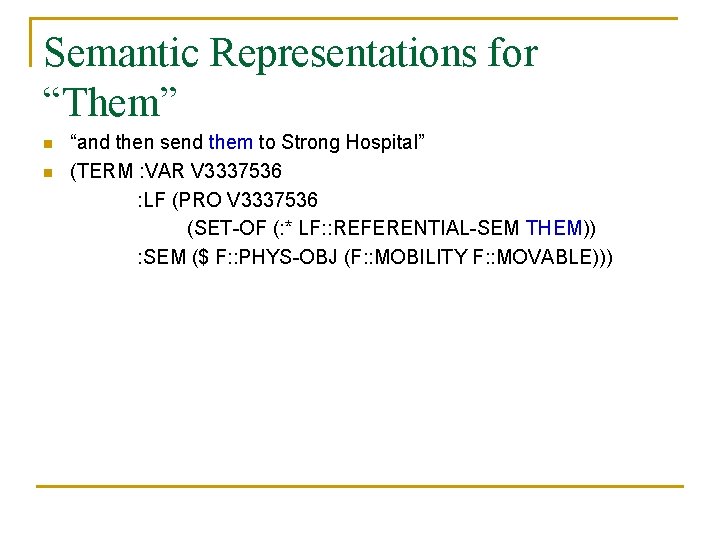

Semantic Representations for “Them”
- “and then send them to Strong Hospital”
- `(TERM :VAR V3337536 :LF (PRO V3337536 (SET-OF (:* LF::REFERENTIAL-SEM THEM))) :SEM ($ F::PHYS-OBJ (F::MOBILITY F::MOVABLE)))`
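
The :SEM restriction on "them" (a physical object that is movable) can filter antecedent candidates before any salience ranking. The sketch assumes a tiny, hypothetical feature hierarchy in which LAND-MOVABLE is a kind of MOVABLE, and writes feature names without the F:: prefix; the `PARENT` table, the `compatible` helper, and the candidate feature values are illustrative assumptions rather than the TRIPS ontology or corpus annotations.

```python
# Hypothetical fragment of a feature-value hierarchy (value -> parent value).
# The real TRIPS feature system is richer; these entries are assumptions.
PARENT = {
    "LAND-MOVABLE": "MOVABLE",
    "AIR-MOVABLE": "MOVABLE",
    "MOVABLE": None,
    "FIXED": None,
}


def subsumes(general: str, specific: str) -> bool:
    """True if `specific` equals `general` or lies below it in PARENT."""
    value = specific
    while value is not None:
        if value == general:
            return True
        value = PARENT.get(value)
    return False


def compatible(pronoun: dict, candidate: dict) -> bool:
    """Check a candidate antecedent against the pronoun's :SEM restrictions."""
    if pronoun["type"] != candidate["type"]:
        return False
    for feature, required in pronoun["features"].items():
        actual = candidate["features"].get(feature)
        # An unspecified feature cannot conflict; a specified one must be subsumed.
        if actual is not None and not subsumes(required, actual):
            return False
    return True


# "them" in "and then send them to Strong Hospital": F::PHYS-OBJ, MOBILITY MOVABLE.
THEM = {"type": "F::PHYS-OBJ", "features": {"MOBILITY": "MOVABLE"}}

# Illustrative candidates; their types and feature values are assumptions.
CANDIDATES = [
    {"name": "ambulances", "type": "F::PHYS-OBJ", "features": {"MOBILITY": "LAND-MOVABLE"}},
    {"name": "hospital", "type": "F::PHYS-OBJ", "features": {"MOBILITY": "FIXED"}},
    {"name": "plan", "type": "F::ABSTR-OBJ", "features": {}},
]

print([c["name"] for c in CANDIDATES if compatible(THEM, c)])  # prints: ['ambulances']
```

Number agreement (the SET-OF in the pronoun's LF) is not checked here; a fuller filter would add it alongside the type and feature tests.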

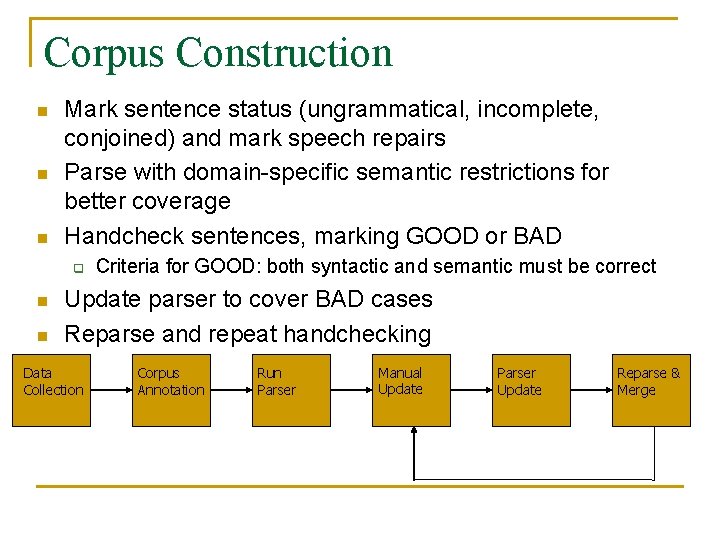

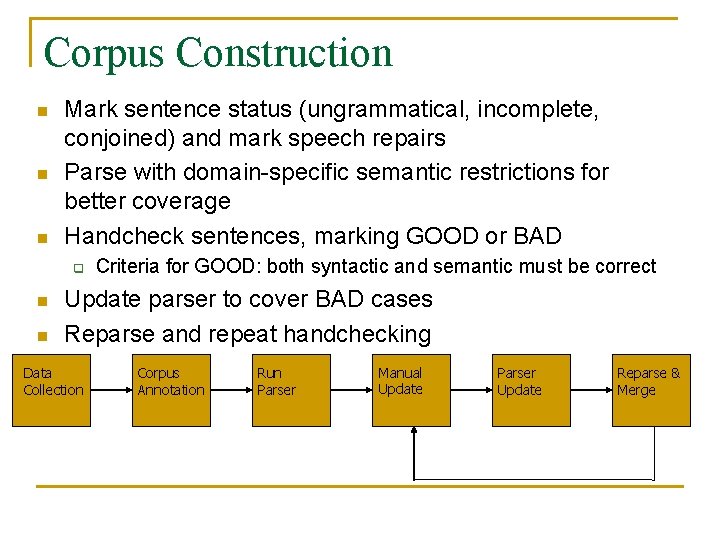

Corpus Construction
- Mark sentence status (ungrammatical, incomplete, conjoined) and mark speech repairs
- Parse with domain-specific semantic restrictions for better coverage
- Hand-check sentences, marking each GOOD or BAD
  - Criterion for GOOD: both the syntax and the semantics of the parse must be correct
- Update the parser to cover BAD cases
- Reparse and repeat hand-checking

Workflow diagram (stages): Data Collection, Corpus Annotation, Run Parser, Manual Update, Parser Update, Reparse & Merge
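
The parse, hand-check, update, and reparse cycle is essentially a fixed-point loop over the corpus. A minimal sketch, assuming the parser, the hand-checking step, and the parser update are supplied as functions (all three are hypothetical stand-ins for TRIPS and the human annotators) and that only BAD sentences are reparsed in later rounds; the real process may reparse everything and merge the results.

```python
from typing import Callable, Optional

Parser = Callable[[str], Optional[object]]


def build_corpus(
    sentences: list[str],
    parser: Parser,
    handcheck: Callable[[str, object], str],
    update_parser: Callable[[Parser, list[str]], Parser],
    max_rounds: int = 5,
) -> dict[str, str]:
    """Parse, hand-check, update the parser on BAD cases, and reparse.

    Returns the final GOOD/BAD label for every sentence.
    """
    labels: dict[str, str] = {}
    pending = list(sentences)
    for _ in range(max_rounds):
        for sentence in pending:
            # GOOD only if both the syntax and the semantics of the parse are correct.
            labels[sentence] = handcheck(sentence, parser(sentence))
        bad = [s for s in pending if labels[s] == "BAD"]
        if not bad:
            break
        parser = update_parser(parser, bad)  # extend coverage to the BAD cases
        pending = bad  # reparse only the failures; earlier GOOD labels are kept
    return labels
```

Capping the number of rounds reflects that some BAD cases may never be covered; those simply stay labeled BAD.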

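Current Coverage

| Corpus | % Good | Good | Bad | NA | Total |
|---|---|---|---|---|---|
| S2 | 90.8% | 325 | 34 | 37 | 405 |
| S4 | 76.1% | 246 | 78 | 61 | 388 |
| S12 | 89.9% | 151 | 17 | 21 | 189 |
| S16 | 84.2% | 298 | 56 | 29 | 383 |
| S17 | 85.2% | 311 | 54 | 26 | 392 |
| Overall | 84.1% | 1331 | 239 | 174 | 1757 |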
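
The coverage table does not state how % Good is computed. This sketch assumes it is Good / (Good + Bad), i.e. the share of hand-checked parses judged GOOD, and recomputes it from the table's counts; `COVERAGE` and `pct_good` are illustrative names.

```python
# (Good, Bad, NA, Total) per dialogue, copied from the coverage table.
COVERAGE = {
    "S2": (325, 34, 37, 405),
    "S4": (246, 78, 61, 388),
    "S12": (151, 17, 21, 189),
    "S16": (298, 56, 29, 383),
    "S17": (311, 54, 26, 392),
}


def pct_good(good: int, bad: int) -> float:
    """Assumed definition: percentage of hand-checked (GOOD or BAD) parses judged GOOD."""
    return round(100 * good / (good + bad), 1)


# Column sums: Good, Bad, NA, Total.
totals = [sum(row[i] for row in COVERAGE.values()) for i in range(4)]

for name, (good, bad, na, total) in COVERAGE.items():
    print(f"{name}: {pct_good(good, bad)}% good of {good + bad} checked")
print("Overall counts (Good, Bad, NA, Total):", totals)
```

The column sums reproduce the Overall row's counts. Under this assumed denominator the S12, S16, and S17 percentages match the table, while S2, S4, and Overall come out at 90.5%, 75.9%, and 84.8% rather than 90.8%, 76.1%, and 84.1%, so the slide may use a different denominator.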

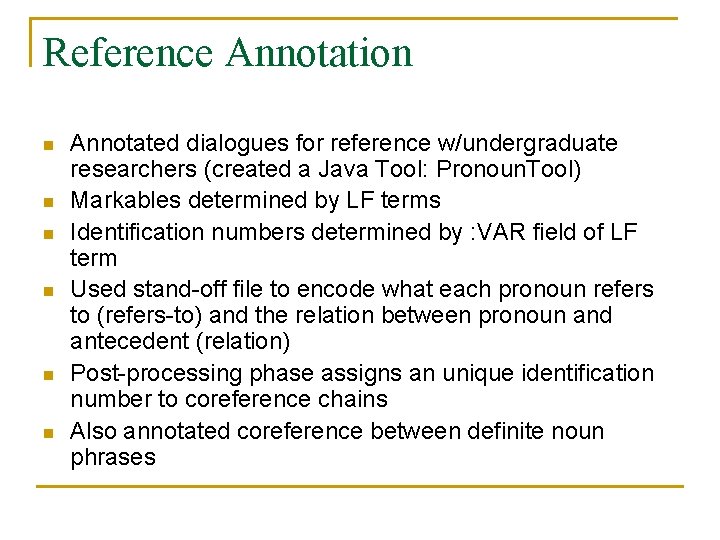

Reference Annotation
- Annotated dialogues for reference with undergraduate researchers (created a Java tool: PronounTool)
- Markables determined by LF terms
  - Identification numbers determined by the :VAR field of the LF term
- Used a stand-off file to encode what each pronoun refers to (refers-to) and the relation between pronoun and antecedent (relation)
- A post-processing phase assigns a unique identification number to each coreference chain
- Also annotated coreference between definite noun phrases
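
The post-processing step that numbers coreference chains can be done with a union-find over the stand-off refers-to links. A minimal sketch, assuming each link records the :VAR of the pronoun's LF term, the :VAR of its antecedent, and the relation, and assuming only IDENTITY links merge chains; the `Link` record and `assign_chains` function are hypothetical, not PronounTool's actual file format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    markable: str   # :VAR of the pronoun's LF term, e.g. "V3337536"
    refers_to: str  # :VAR of the antecedent's LF term
    relation: str   # e.g. "IDENTITY" or "FUNCTIONAL"


def assign_chains(links: list[Link]) -> dict[str, int]:
    """Give every markable joined by IDENTITY links the same chain number (1, 2, ...)."""
    parent: dict[str, str] = {}

    def find(x: str) -> str:
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    for link in links:
        if link.relation == "IDENTITY":  # assumption: other relations do not merge chains
            parent[find(link.markable)] = find(link.refers_to)

    chain_ids: dict[str, int] = {}
    chains: dict[str, int] = {}
    for var in sorted(parent):  # sorted for deterministic numbering
        root = find(var)
        chains[var] = chain_ids.setdefault(root, len(chain_ids) + 1)
    return chains


links = [
    Link("V2", "V1", "IDENTITY"),
    Link("V3", "V2", "IDENTITY"),
    Link("V5", "V4", "IDENTITY"),
    Link("V6", "V1", "FUNCTIONAL"),
]
print(assign_chains(links))  # prints: {'V1': 1, 'V2': 1, 'V3': 1, 'V4': 2, 'V5': 2}
```

Union-find handles pronouns that point to other pronouns (V3 to V2 to V1) without any ordering requirement on the stand-off file.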

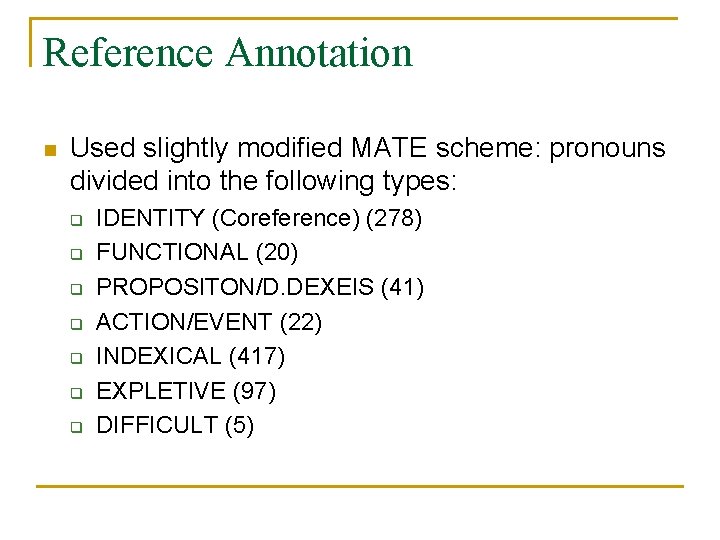

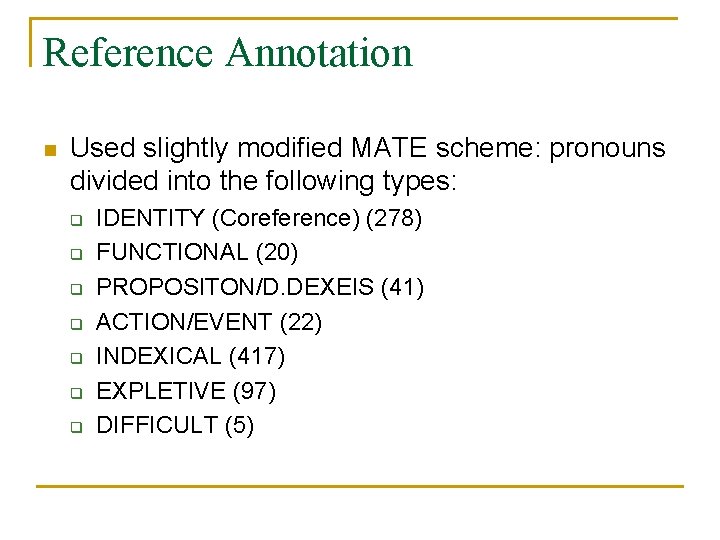

Reference Annotation
- Used a slightly modified MATE scheme, dividing pronouns into the following types:
  - IDENTITY (coreference) (278)
  - FUNCTIONAL (20)
  - PROPOSITION/D. DEIXIS (41)
  - ACTION/EVENT (22)
  - INDEXICAL (417)
  - EXPLETIVE (97)
  - DIFFICULT (5)

Dialogue Structure
- How should discourse structure be integrated into a reference module, and is it worth it?
- Shallow techniques may work better: a fine-grained embedding may not be necessary to improve reference resolution
- Implemented a QUD-based technique and the Dialogue Act model of Eckert and Strube (2000)
- Annotated in a stand-off file

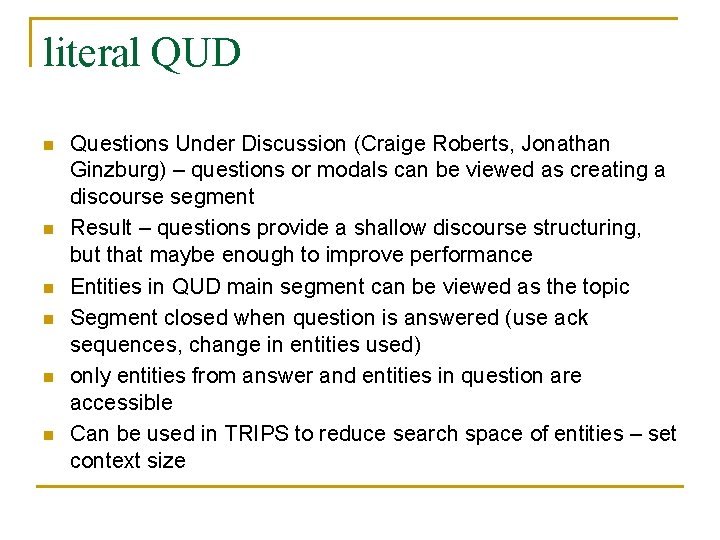

QUD
- Questions Under Discussion (Craige Roberts, Jonathan Ginzburg): questions or modals can be viewed as creating a discourse segment
  - Result: questions provide only a shallow discourse structure, but that may be enough to improve performance
- Entities in the main QUD segment can be viewed as the topic
- A segment is closed when its question is answered (cues: acknowledgment sequences, a change in the entities used)
  - After closing, only entities from the answer and entities in the question remain accessible
- Can be used in TRIPS to reduce the search space of entities by setting the context size
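
Using QUD segments to shrink the search space amounts to hiding entities from the interior of closed segments. A minimal sketch, assuming utterances are numbered, each segment records its question, answer, and end utterances (the `answer` field is a hypothetical addition, not part of the annotation scheme), and candidates are returned most recent first; the entity lists in the example are toy data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QUD:
    start: int   # utterance that poses the question
    answer: int  # hypothetical field: utterance that answers it
    end: int     # last utterance of the segment


def accessible_entities(
    entities_by_utt: dict[int, list[str]], quds: list[QUD], pronoun_utt: int
) -> list[str]:
    """Candidate antecedents for a pronoun in `pronoun_utt`, most recent first.

    Inside a closed QUD segment only the question and answer utterances stay accessible.
    """
    hidden: set[int] = set()
    for q in quds:
        if q.end < pronoun_utt:  # segment closed before the pronoun
            hidden.update(u for u in range(q.start, q.end + 1) if u not in (q.start, q.answer))
    candidates: list[str] = []
    for u in sorted(entities_by_utt, reverse=True):
        if u < pronoun_utt and u not in hidden:
            candidates.extend(entities_by_utt[u])
    return candidates


ents = {1: ["A"], 2: ["B"], 3: ["C"], 4: ["D"], 5: ["E"]}
quds = [QUD(start=2, answer=3, end=4)]
print(accessible_entities(ents, quds, 6))  # prints: ['E', 'C', 'B', 'A']
```

Truncating the returned list to a fixed length is one way to impose a context size on top of the segment filter.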

QUD Annotation Scheme
- Annotate:
  - Start utterance
  - End utterance
  - Type (aside, repeated question, unanswered, nil)
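
The three annotated fields map naturally onto a small typed record. A minimal sketch, assuming a hypothetical tab-separated stand-off line of the form `start<TAB>end<TAB>type`; the record, enum, and parser names are illustrative, and only the four type values come from the slide.

```python
from dataclasses import dataclass
from enum import Enum


class QUDType(Enum):
    """The QUD types listed in the annotation scheme."""
    ASIDE = "aside"
    REPEATED_QUESTION = "repeated question"
    UNANSWERED = "unanswered"
    NIL = "nil"


@dataclass(frozen=True)
class QUDAnnotation:
    start: int         # start utterance
    end: int           # end utterance
    qud_type: QUDType

    def __post_init__(self) -> None:
        # Reject segments whose end precedes their start.
        if self.end < self.start:
            raise ValueError(f"QUD ends at {self.end} before it starts at {self.start}")


def parse_line(line: str) -> QUDAnnotation:
    """Parse one hypothetical stand-off line: '<start>\\t<end>\\t<type>'."""
    start, end, type_name = line.rstrip("\n").split("\t")
    # QUDType(...) looks the member up by value and raises ValueError on unknown types.
    return QUDAnnotation(int(start), int(end), QUDType(type_name))
```

Validating at load time catches the segment-boundary slips that make QUD ends hard to synchronize between annotators.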

QUD
- Issue 1: questions are easy to detect (using speech-act information), but how do you know a question has been answered?
  - Cue words, multiple acknowledgments, and changes in the entities discussed provide strong clues that a question is finishing
- Issue 2: for a pronoun inside a QUD, which is more salient: the QUD topic or a more recent entity?
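
The closing cues from Issue 1 can be combined into a simple rule-based detector. A minimal sketch under explicit assumptions: the cue-word list, treating CONFIRM as the acknowledgment act, taking the first reply as the answer, and comparing new entities only against those in the question are all illustrative choices, not the authors' method.

```python
from typing import Optional

CUE_WORDS = {"so", "now", "anyway"}  # hypothetical cue-word list
ACK_ACTS = {"CONFIRM"}               # assumed acknowledgment act(s), as in the Monroe example

Utterance = tuple[str, str, list[str]]  # (speech_act, text, entities)


def find_qud_end(utts: list[Utterance], q_index: int) -> Optional[int]:
    """Index of the utterance judged to close the question at q_index, or None."""
    q_entities = set(utts[q_index][2])
    prev_was_ack = False
    for i in range(q_index + 1, len(utts)):
        act, text, entities = utts[i]
        is_ack = act in ACK_ACTS
        if is_ack and prev_was_ack:
            return i  # two acknowledgments in a row close the question
        prev_was_ack = is_ack
        if i == q_index + 1:
            continue  # the first reply is taken as the answer
        words = text.lower().split()
        if words and words[0] in CUE_WORDS:
            return i - 1  # a cue word starts a new segment
        if entities and q_entities.isdisjoint(entities):
            return i - 1  # the entities under discussion have changed
    return None


utts = [
    ("WH-QU", "how many do you have", ["ambulance"]),
    ("TELL", "there's one at saint mary's hospital", ["ambulance", "saint mary's hospital"]),
    ("CONFIRM", "okay", []),
    ("CONFIRM", "okay", []),
]
print(find_qud_end(utts, 0))  # prints: 3
```

The example texts echo the Monroe excerpt, but the entity lists are made up for illustration.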

Dialogue Act Segmentation
- Utterances that are not acknowledged by the listener may not be in the common ground and are thus not accessible to pronominal reference
  - Evaluation showed improvement for pronouns referring to abstract entities, and strong annotator reliability
- Each utterance is marked as I (initiation: contains content), A (acknowledgment), or C (a combination of the two)
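
The acknowledgment condition can be stated directly over the I/A/C tags. This is a simplified reading of the Eckert and Strube idea, not their full algorithm: it assumes a content-bearing utterance (I or C) enters common ground only when the other speaker's next utterance is an A or a C. The function name `grounded` and the tag sequence are illustrative.

```python
def grounded(tags: list[tuple[str, str]]) -> set[int]:
    """Indices of content-bearing utterances (I or C) that the other speaker acknowledged.

    `tags` is a list of (speaker, tag) pairs with tag in {"I", "A", "C"}.
    """
    result: set[int] = set()
    for i, (speaker, tag) in enumerate(tags):
        if tag not in ("I", "C"):
            continue  # pure acknowledgments add no new content
        for later_speaker, later_tag in tags[i + 1:]:
            if later_speaker != speaker:
                # Only the other speaker's next utterance decides grounding.
                if later_tag in ("A", "C"):
                    result.add(i)
                break
    return result


tags = [("S", "I"), ("U", "A"), ("U", "I"), ("S", "I"), ("U", "C"), ("S", "I")]
print(sorted(grounded(tags)))  # prints: [0, 3]
```

Entities introduced in the ungrounded utterances (indices 2, 4, and 5 here) would then be excluded from a pronoun's candidate list.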

Results
- Incorporating semantics into the reference resolution algorithm (LRC) improves performance from 61.5% to 66.9% (CATALOG ’04)
- Preliminary QUD results show an additional boost to 67.3% (DAARC ’04)
  - E&S automated: 63.4%
  - E&S manual: 60.0%

Issues
- Inter-annotator agreement for QUD annotation
  - Segment ends are the hardest to synchronize
- Ungrammatical and fragmented utterances:
  - Parse automatically or manually?
- Small corpus size: more data is needed for statistical evaluations
- Parser freeze? Annotators need to stay abreast of the latest parser changes

Summary
- A semi-automated parsing process produces reliable discourse annotation
- Discourse annotation is done manually, but automatically produced data helps guide the manual annotation
- Result: a spoken dialogue corpus with rich linguistic data