
Data Collection and Dissemination

Outline

- Data Dissemination
  - Trickle
  - Address single packet
- Data Collection
  - DSF

![Data Dissemination Trickle Dissemination1 3 Data Dissemination - Trickle [Dissemination_1] 3](https://slidetodoc.com/presentation_image/2fc10780602019c992077142d72c34bc/image-3.jpg)

Data Dissemination - Trickle [Dissemination_1]

Simple Broadcast Retransmission

- Broadcast Storm Problem
  - Redundant rebroadcasts
  - Severe contention
  - Collisions

Trickle

- Motivation
  - WSNs require network code propagation
- Challenges
  - WSNs exhibit highly transient loss patterns and are susceptible to environmental changes
  - WSN membership is not static
  - Motes must periodically communicate to learn when there is new code
    - Periodic metadata exchange is costly

Trickle Requirements

- Low Maintenance
- Rapid Propagation
- Scalability

Trickle

- An algorithm for code propagation and maintenance in WSNs
- Based on “Polite Gossip”
  - Each node only gossips about new things that it has heard from its neighbors, but it won’t repeat gossip it has already heard, as that would be rude
- Code updates “trickle” through the network

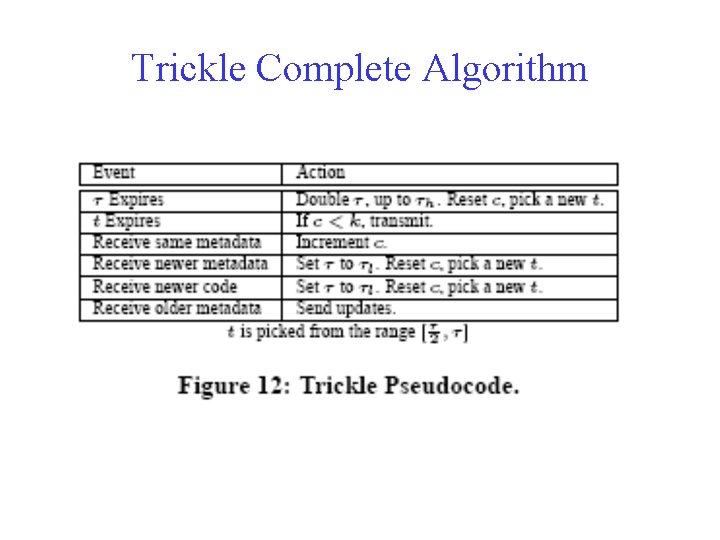

Trickle

- Within each time period at a node
  - If the node hears older metadata, it broadcasts the new data
  - If the node hears newer metadata, it broadcasts its own metadata (which will cause other nodes to send the new code)
  - If the node hears the same metadata, it increases a counter
    - If the counter has reached a threshold, the node does not transmit its metadata
    - Otherwise, it transmits its metadata

Trickle – Main Parameters

- Counter c: counts how many times identical metadata has been heard
- k: threshold that determines how many times identical metadata must be heard before a node suppresses transmission of its own metadata
- t: the time at which a node will transmit its metadata; t is in the range [0, τ]
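The per-interval rule and the parameters c, k and t can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the reference implementation: the function name `trickle_interval`, the `heard_times` list and the optional `t` argument are hypothetical names introduced only for this example.

```python
import random


def trickle_interval(tau, k, heard_times, t=None):
    """Decide whether a node transmits its metadata in one interval.

    tau         -- interval length
    k           -- suppression threshold
    heard_times -- times in [0, tau] at which identical metadata was heard
    t           -- transmission time; drawn uniformly from [0, tau] if omitted
    Returns (t, transmit).
    """
    if t is None:
        t = random.uniform(0, tau)
    # Counter c: identical metadata heard before the node's own timer fires.
    c = sum(1 for h in heard_times if h < t)
    # Suppress once the threshold k has been reached; otherwise transmit.
    return t, c < k


print(trickle_interval(tau=10, k=2, heard_times=[1, 2], t=5))  # (5, False)
print(trickle_interval(tau=10, k=2, heard_times=[1, 7], t=5))  # (5, True)
```

In the first call the node has already heard the same metadata twice before t = 5, so it stays quiet; in the second call it has heard it only once, so it transmits.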

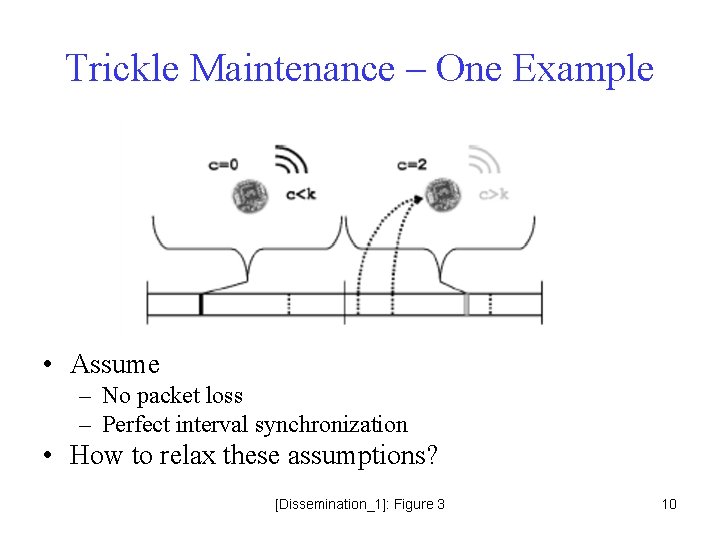

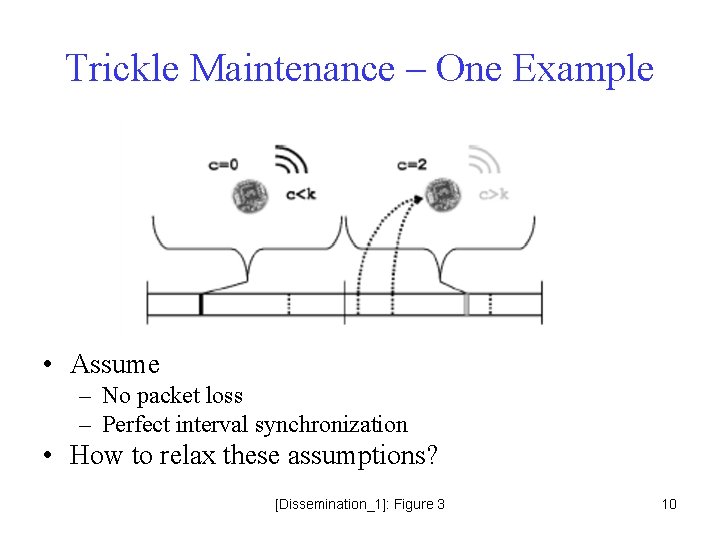

Trickle Maintenance – One Example

- Assume
  - No packet loss
  - Perfect interval synchronization
- How to relax these assumptions?

([Dissemination_1], Figure 3)

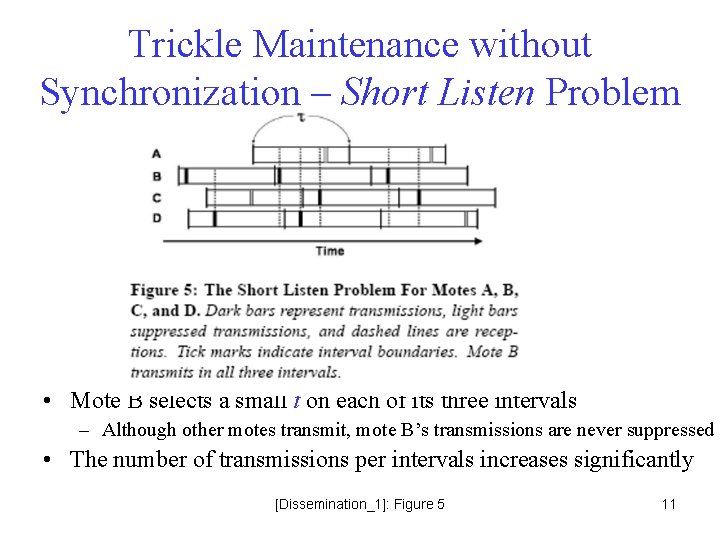

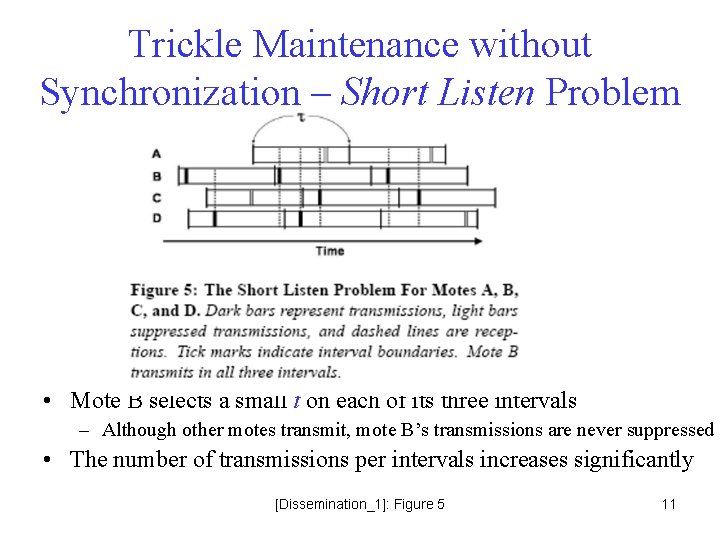

Trickle Maintenance without Synchronization – Short Listen Problem

- Mote B selects a small t in each of its three intervals
  - Although other motes transmit, mote B’s transmissions are never suppressed
- The number of transmissions per interval increases significantly

([Dissemination_1], Figure 5)

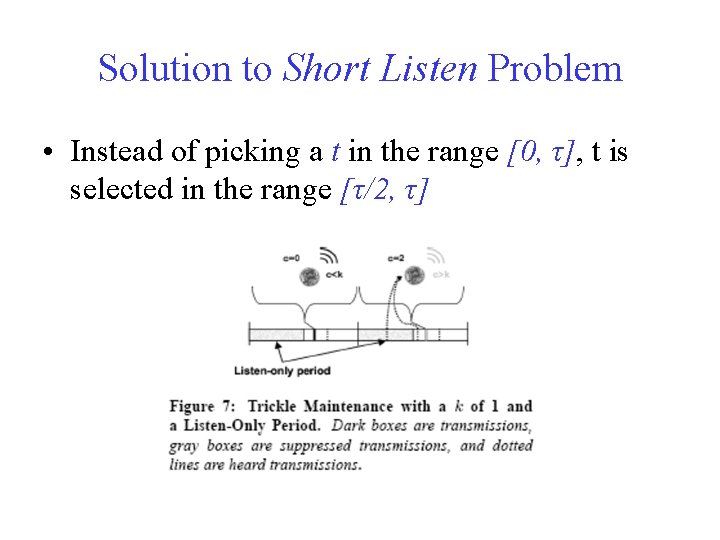

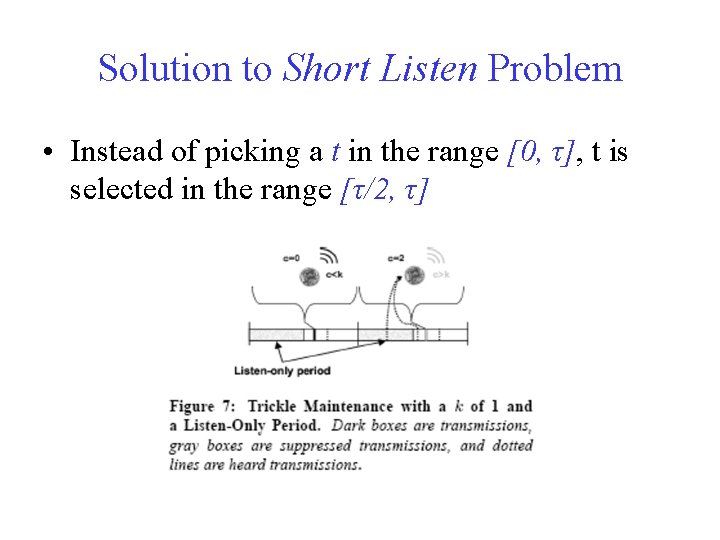

Solution to Short Listen Problem

- Instead of picking t in the range [0, τ], t is selected in the range [τ/2, τ]
  - Every node therefore listens for at least the first half of its interval before it can transmit
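A minimal sketch of the modified timer selection, assuming a uniform draw over the second half of the interval. The helper name `pick_transmit_time` is hypothetical and used only for illustration.

```python
import random


def pick_transmit_time(tau):
    """Draw the transmission time t uniformly from [tau/2, tau]."""
    return random.uniform(tau / 2, tau)


# Every sampled t leaves at least tau/2 of listening time before it.
samples = [pick_transmit_time(8.0) for _ in range(1000)]
print(min(samples) >= 4.0 and max(samples) <= 8.0)
```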

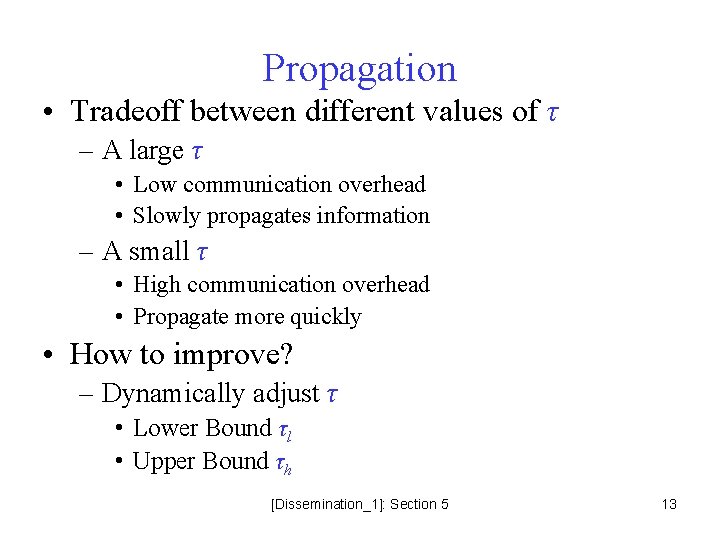

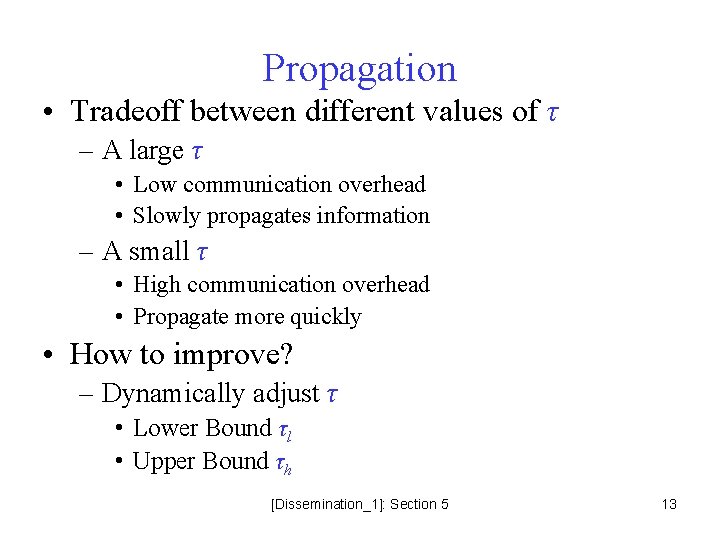

Propagation

- Tradeoff between different values of τ
  - A large τ
    - Low communication overhead
    - Propagates information slowly
  - A small τ
    - High communication overhead
    - Propagates information more quickly
- How to improve?
  - Dynamically adjust τ
    - Lower bound τl
    - Upper bound τh

([Dissemination_1], Section 5)
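One way to adjust τ between the bounds τl and τh is sketched below. The slide text names only the bounds; the specific rule used here (double τ when an interval ends quietly, reset to τl when inconsistent metadata is heard) follows the description in the Trickle paper and should be read as an assumption of this example, as should the function name `next_tau`.

```python
def next_tau(tau, tau_l, tau_h, heard_inconsistency):
    """Return the interval length to use after the current interval.

    Assumed rule: reset to the lower bound when newer or older metadata
    was heard, otherwise double the interval up to the upper bound.
    """
    if heard_inconsistency:
        return tau_l
    return min(2 * tau, tau_h)


tau = 1
for _ in range(5):          # quiet network: 1 -> 2 -> 4 -> 8 -> 8 -> 8
    tau = next_tau(tau, tau_l=1, tau_h=8, heard_inconsistency=False)
print(tau)                  # 8
print(next_tau(tau, tau_l=1, tau_h=8, heard_inconsistency=True))  # 1
```

With this rule a stable network settles at the cheap upper bound, while any sign of new code drops nodes back to the fast lower bound.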

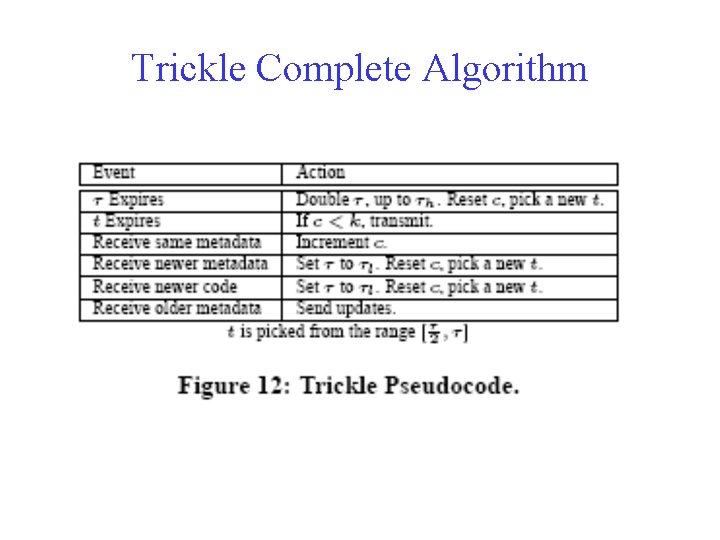

Trickle Complete Algorithm

Data Collection

Data Collection

- Link-Quality Based Data Forwarding
  - Wireless communication links are extremely unreliable
  - ETX: to find high-throughput paths on multiple
- Sleep-Latency Based Forwarding
  - Duty cycling: sensor nodes turn off their radios when not needed
    - Idle listening wastes much energy

([Collection_2])

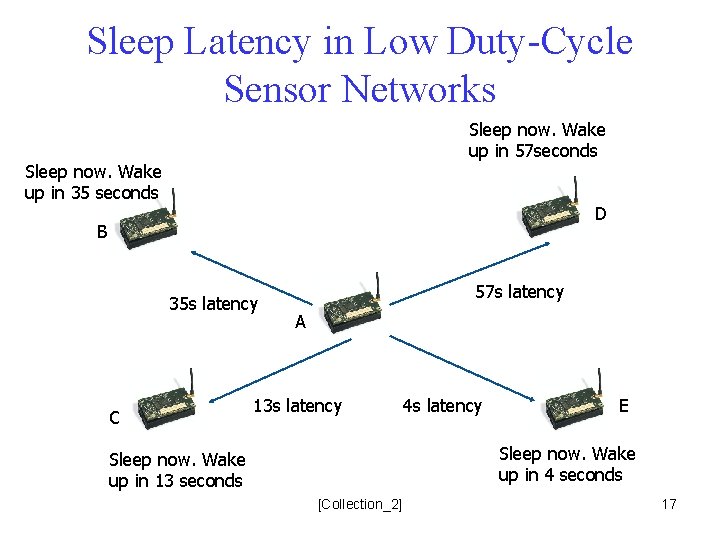

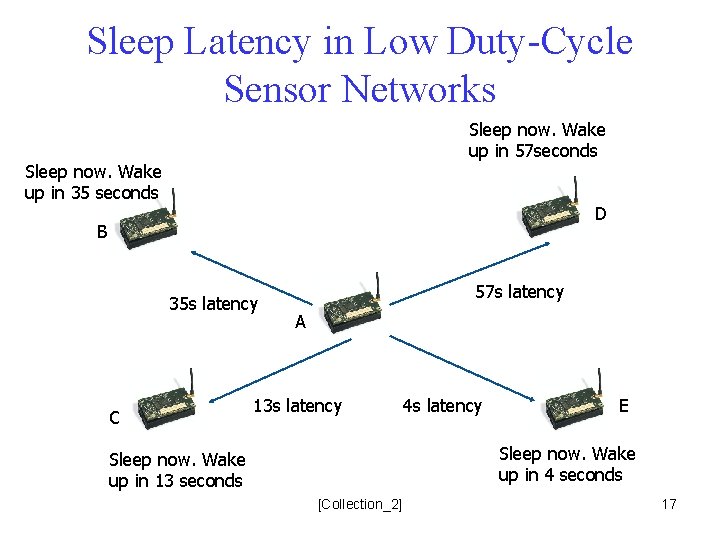

Sleep Latency in Low Duty-Cycle Sensor Networks

Figure: a five-node network (A to E) in which sleeping neighbors announce "Sleep now. Wake up in 57 seconds", "35 seconds", "13 seconds" and "4 seconds", so forwarding to them incurs sleep latencies of 57 s, 35 s, 13 s and 4 s. ([Collection_2])

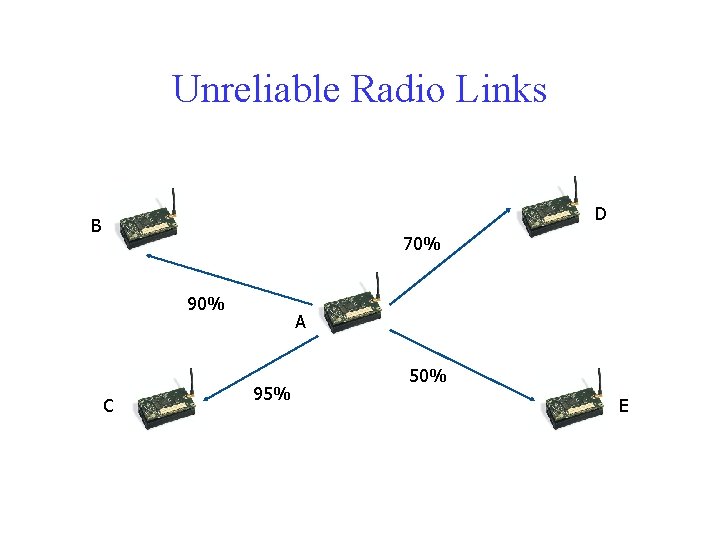

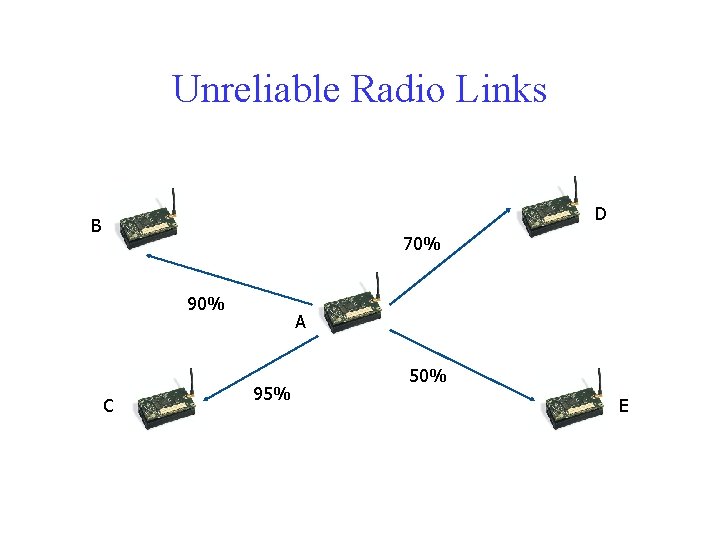

Unreliable Radio Links

Figure: a five-node network (A to E) whose links have qualities of 70%, 90%, 95% and 50%.

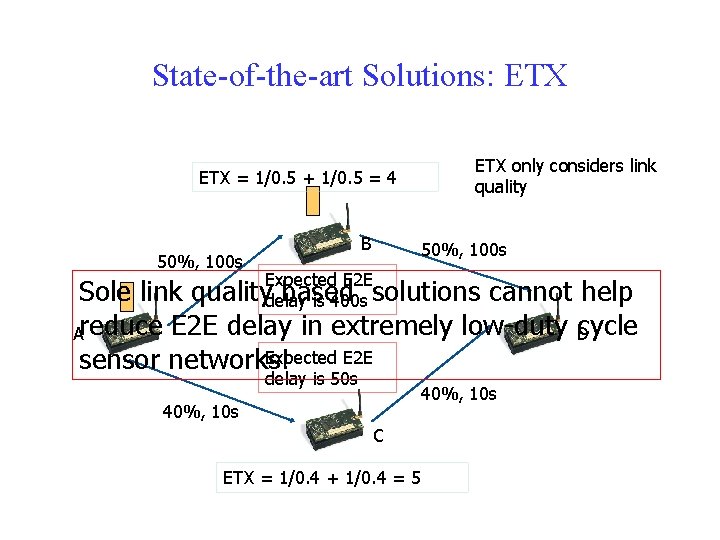

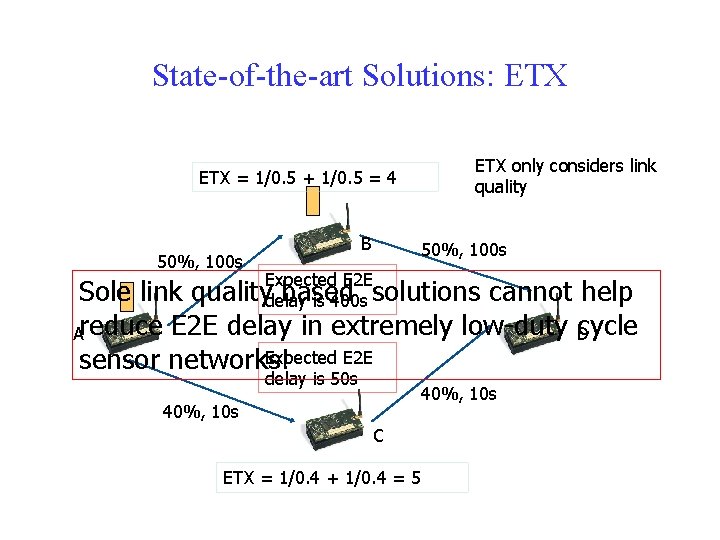

State-of-the-art Solutions: ETX

- ETX only considers link quality
- Path A → B → D: each link is 50%, 100 s
  - ETX = 1/0.5 + 1/0.5 = 4
  - Expected E2E delay is 400 s
- Path A → C → D: each link is 40%, 10 s
  - ETX = 1/0.4 + 1/0.4 = 5
  - Expected E2E delay is 50 s
- Sole link quality based solutions cannot help reduce E2E delay in extremely low duty-cycle sensor networks!
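The numbers on this slide can be reproduced with a few lines of Python. The delay model is an assumption of this sketch: each hop needs 1/q attempts on average and each attempt costs one sleep latency, which matches the 400 s and 50 s figures above. The function names are illustrative only.

```python
def etx(link_qualities):
    """Expected transmission count of a path: sum of 1/q over its links."""
    return sum(1.0 / q for q in link_qualities)


def expected_delay(hops):
    """Expected E2E delay for hops given as (link_quality, sleep_latency_s).

    Assumes 1/q attempts per hop, each costing one sleep latency.
    """
    return sum(latency / q for q, latency in hops)


via_b = [(0.5, 100), (0.5, 100)]   # A -> B -> D
via_c = [(0.4, 10), (0.4, 10)]     # A -> C -> D

for name, path in (("via B", via_b), ("via C", via_c)):
    print(name, etx(q for q, _ in path), expected_delay(path))
```

ETX prefers the path through B (4 versus 5), although the path through C delivers eight times faster.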

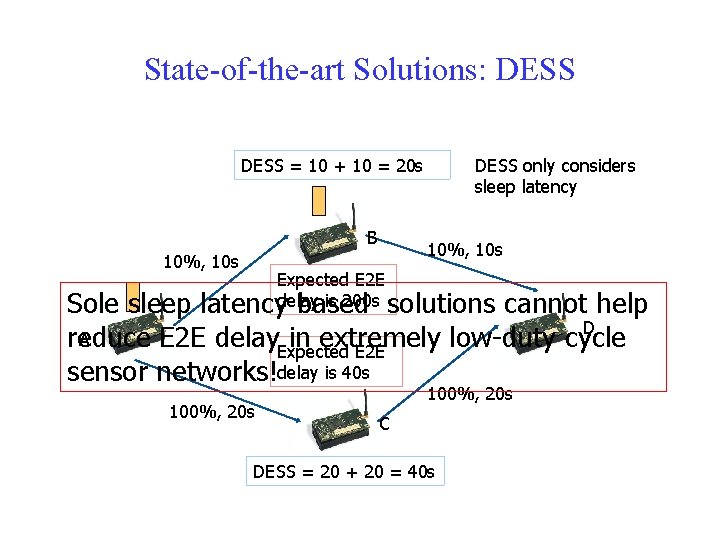

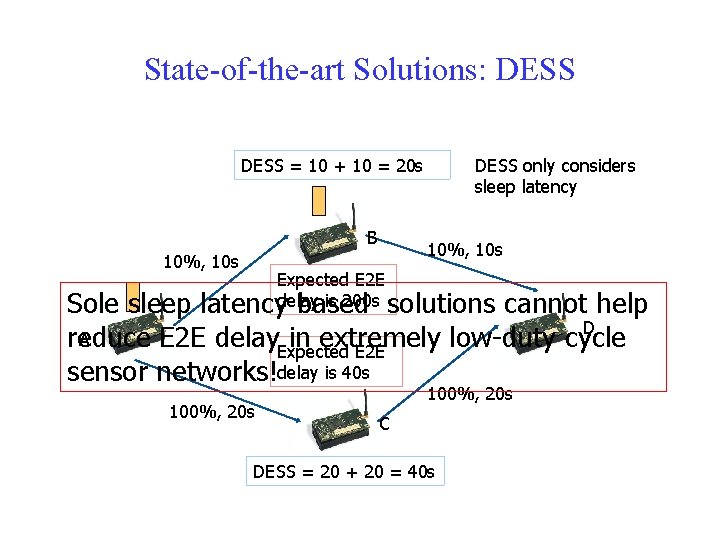

State-of-the-art Solutions: DESS

- DESS only considers sleep latency
- Path A → B → D: each link is 10%, 10 s
  - DESS = 10 + 10 = 20 s
  - Expected E2E delay is 200 s
- Path A → C → D: each link is 100%, 20 s
  - DESS = 20 + 20 = 40 s
  - Expected E2E delay is 40 s
- Sole sleep latency based solutions cannot help reduce E2E delay in extremely low duty-cycle sensor networks!
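A short sketch of why a latency-only metric picks the wrong route in this example. As an assumption of the sketch, expected delay is modeled as sleep latency divided by link quality per hop, which reproduces the 200 s and 40 s figures; `dess` and `expected_delay` are illustrative names.

```python
def dess(hops):
    """Latency-only metric: sum of sleep latencies, ignoring link quality."""
    return sum(latency for _, latency in hops)


def expected_delay(hops):
    """Assumed model: each hop costs latency / link_quality on average."""
    return sum(latency / q for q, latency in hops)


paths = {
    "via B": [(0.1, 10), (0.1, 10)],    # (link_quality, sleep_latency_s)
    "via C": [(1.0, 20), (1.0, 20)],
}

# The route each criterion would choose.
print(min(paths, key=lambda p: dess(paths[p])))            # via B
print(min(paths, key=lambda p: expected_delay(paths[p])))  # via C
```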

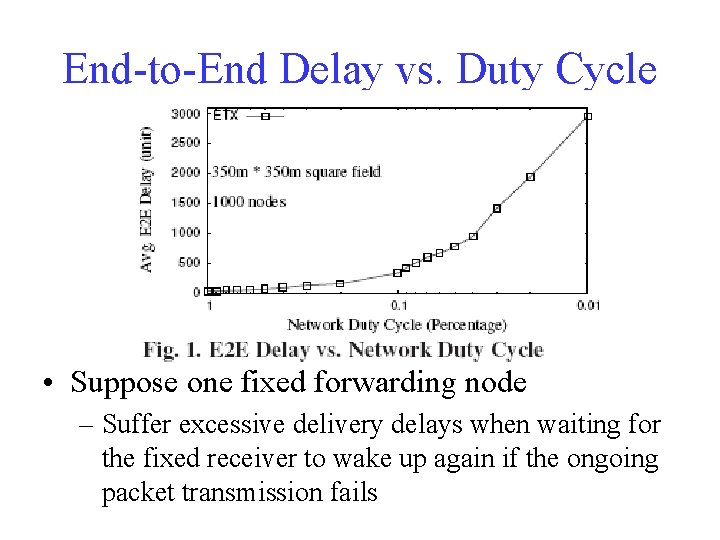

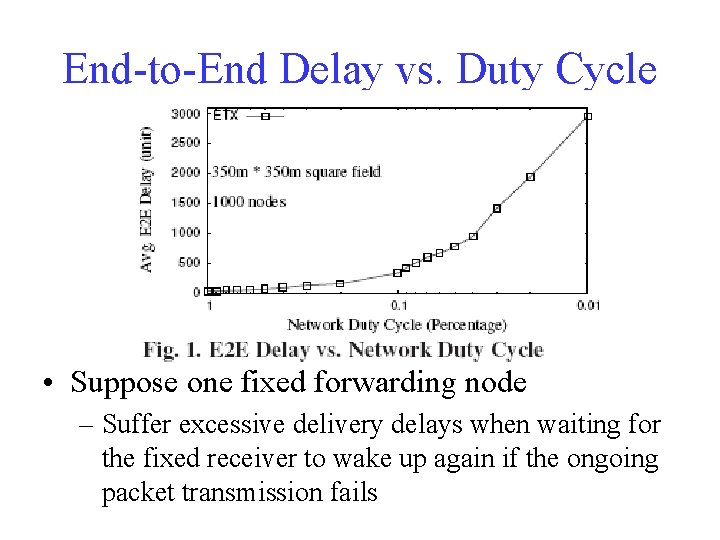

End-to-End Delay vs. Duty Cycle

- Suppose there is one fixed forwarding node
  - If the ongoing packet transmission fails, the sender must wait for the fixed receiver to wake up again, so packets suffer excessive delivery delays

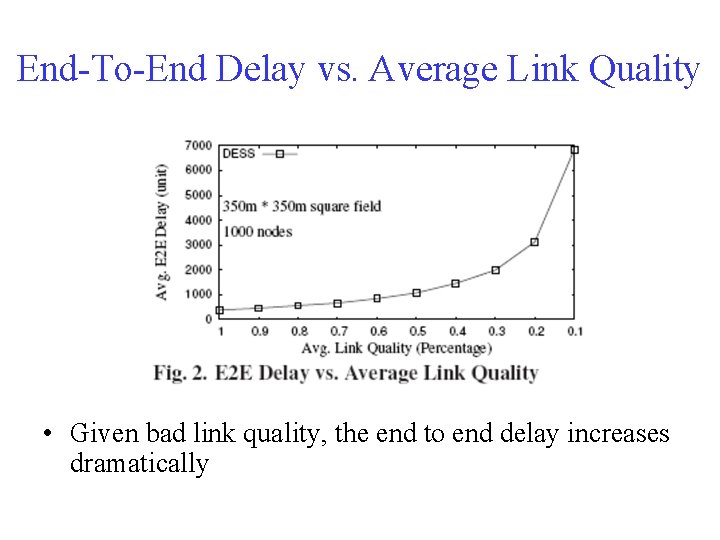

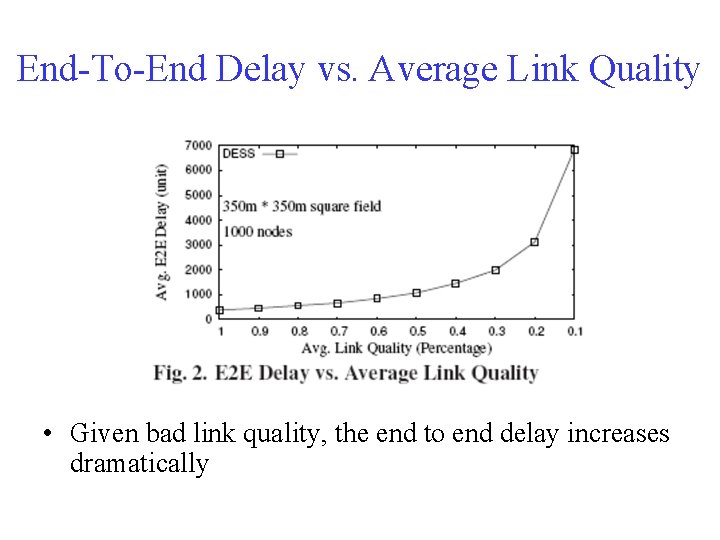

End-to-End Delay vs. Average Link Quality

- Given bad link quality, the end-to-end delay increases dramatically

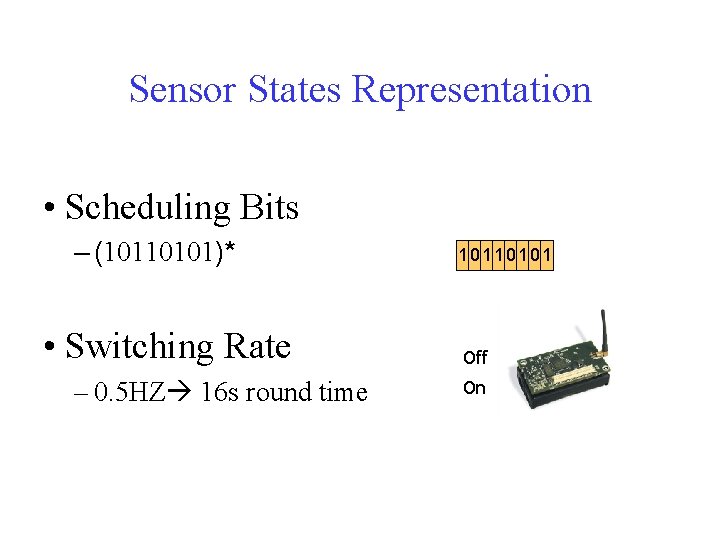

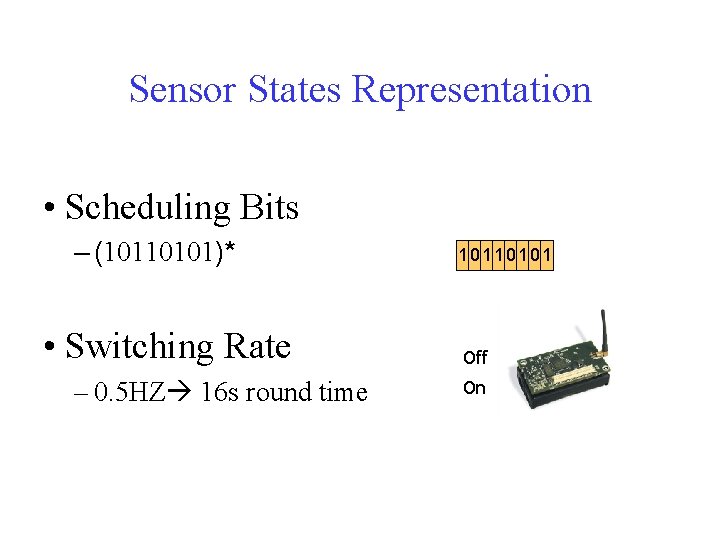

Sensor States Representation

- Scheduling bits
  - (10110101)*
- Switching rate
  - 0.5 Hz
- Round time: 16 s

Figure: the schedule 10110101 drawn as an on/off waveform.
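The relation between scheduling bits, switching rate and round time can be checked with a small sketch. It assumes that a `1` bit means the radio is on and that each bit lasts 1 / switching rate seconds; the function names are hypothetical.

```python
def round_time(schedule, switching_rate_hz):
    """Duration of one full pass over the scheduling bits, in seconds."""
    return len(schedule) / switching_rate_hz


def is_on(schedule, switching_rate_hz, t):
    """True if the node is awake at time t (seconds); assumes '1' means on."""
    slot = int(t * switching_rate_hz) % len(schedule)
    return schedule[slot] == "1"


bits = "10110101"
print(round_time(bits, 0.5))                      # 16.0
print([is_on(bits, 0.5, t) for t in (0, 2, 5, 16)])
# [True, False, True, True]
```

Eight bits at 0.5 Hz last 2 s each, which gives the 16 s round time stated above; the schedule then repeats, as the `*` indicates.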

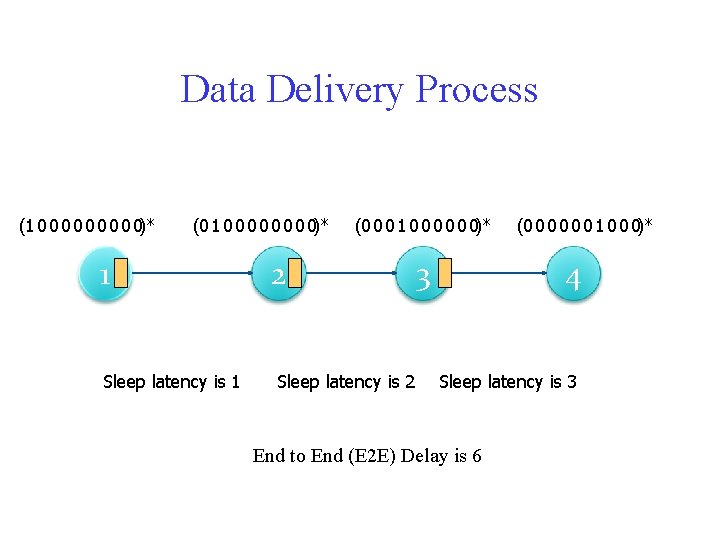

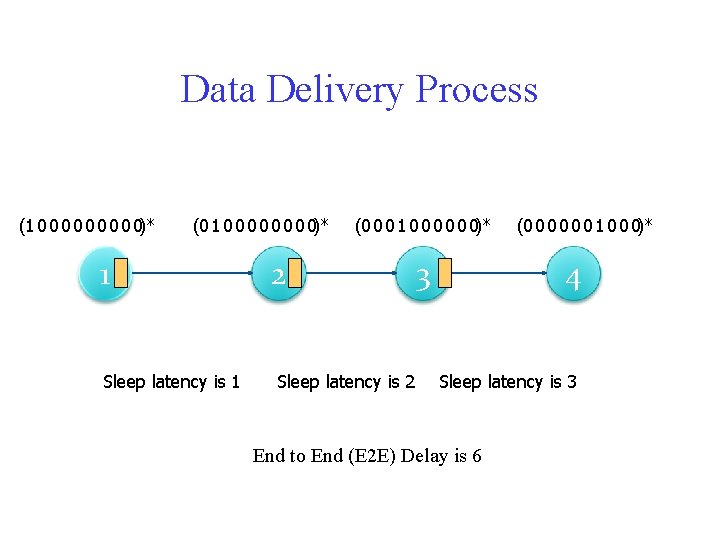

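Data Delivery Process

- Path: node 1 → node 2 → node 3 → node 4
- Schedules shown: (1 0 0 0 0 0)*, (0 1 0 0 0 0)*, (0 0 0 1 0 0 0)*, (0 0 0 1 0 0 0)*
- Hop 1 → 2: sleep latency is 1
- Hop 2 → 3: sleep latency is 2
- Hop 3 → 4: sleep latency is 3
- End-to-end (E2E) delay is 1 + 2 + 3 = 6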
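The hop-by-hop accumulation of sleep latency can be sketched as below. The wake-up slots 0, 1, 3 and 6 and the period of 10 slots are hypothetical values chosen only because they reproduce the latencies 1, 2 and 3; the bit strings on the slide are only partly legible, so they are not used directly.

```python
def sleep_latency(now, wake_slot, period):
    """Slots a sender waits from `now` until the receiver's next wake-up."""
    return (wake_slot - now) % period


def e2e_delay(wake_slots, period):
    """Sum of sleep latencies along a path, assuming every send succeeds.

    wake_slots[i] is the slot (within one period) at which node i wakes up;
    the packet starts at the first node during its own wake-up slot.
    """
    now, total = wake_slots[0], 0
    for slot in wake_slots[1:]:
        wait = sleep_latency(now % period, slot, period)
        total += wait
        now += wait
    return total


print(e2e_delay([0, 1, 3, 6], period=10))  # 6
```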

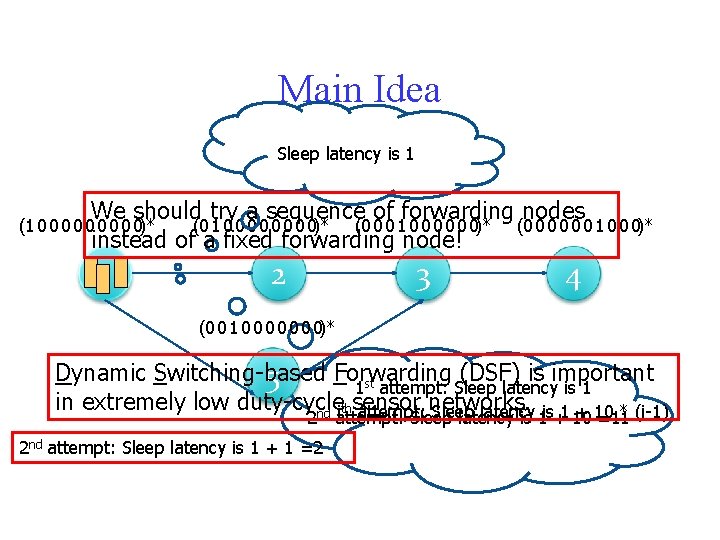

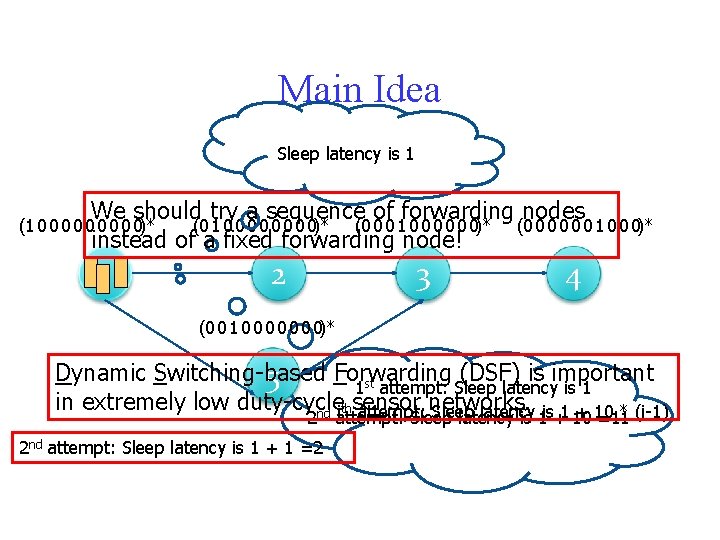

Main Idea

- We should try a sequence of forwarding nodes instead of a fixed forwarding node!
- Dynamic Switching-based Forwarding (DSF) is important in extremely low duty-cycle sensor networks.
- With a fixed forwarding node
  - 1st attempt: sleep latency is 1
  - 2nd attempt: sleep latency is 1 + 10 = 11
  - ith attempt: sleep latency is 1 + 10 * (i-1)
- With a sequence of forwarding nodes
  - 1st attempt: sleep latency is 1
  - 2nd attempt: sleep latency is 1 + 1 = 2

Figure: nodes 1 to 5 with schedules (0 1 0 0 0 0)*, (0 0 0 1 0 0 0)*, (1 0 0 0 0 0)* and (0 0 1 0 0 0 0)*.

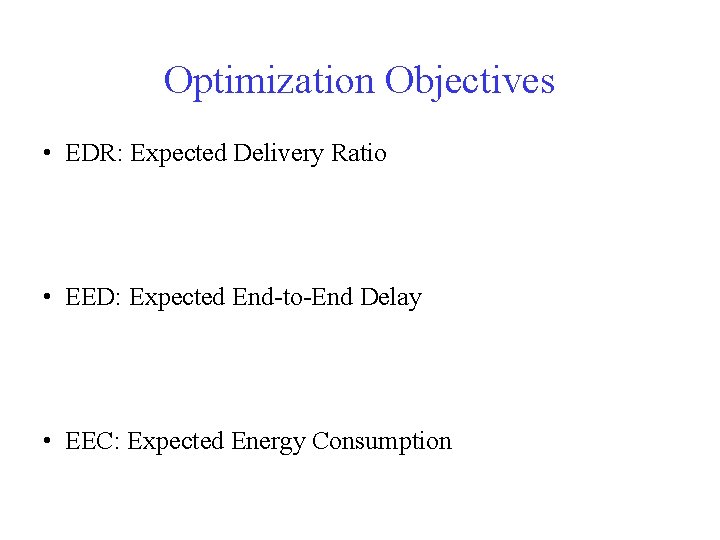

Optimization Objectives

- EDR: Expected Delivery Ratio
- EED: Expected End-to-End Delay
- EEC: Expected Energy Consumption

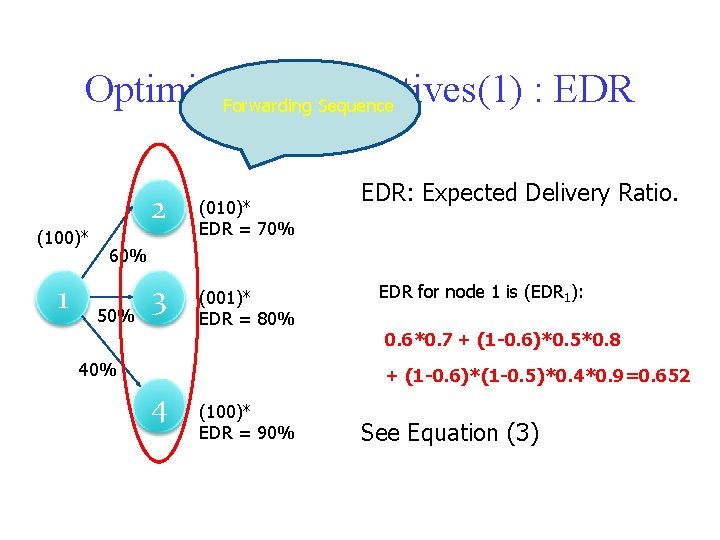

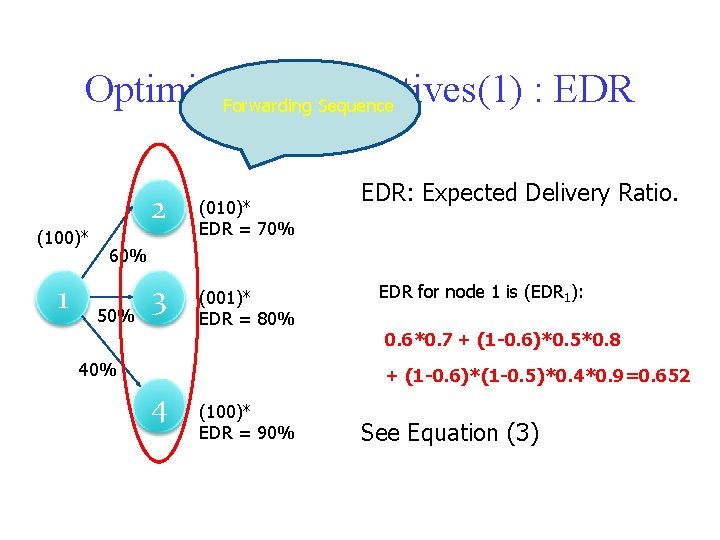

Optimization Objectives (1): EDR

- EDR: Expected Delivery Ratio
- Forwarding sequence of node 1, schedule (100)*
  - Node 2, schedule (010)*: link quality 60%, EDR = 70%
  - Node 3, schedule (001)*: link quality 50%, EDR = 80%
  - Node 4, schedule (100)*: link quality 40%, EDR = 90%
- EDR for node 1 (EDR1): 0.6*0.7 + (1-0.6)*0.5*0.8 + (1-0.6)*(1-0.5)*0.4*0.9 = 0.652
- See Equation (3)
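The EDR computation above generalizes to any forwarding sequence: a forwarder is only tried if all earlier attempts failed. The following sketch implements that sum; the function name `edr` and the tuple layout are assumptions of this example, not notation from the source.

```python
def edr(forwarders):
    """Expected delivery ratio of a node given its forwarding sequence.

    forwarders -- (link_quality, forwarder_edr) pairs in the order tried.
    """
    total = 0.0
    p_all_failed = 1.0          # probability that every earlier attempt failed
    for link_quality, forwarder_edr in forwarders:
        total += p_all_failed * link_quality * forwarder_edr
        p_all_failed *= 1.0 - link_quality
    return total


# Node 1 tries node 2, then node 3, then node 4.
print(round(edr([(0.6, 0.7), (0.5, 0.8), (0.4, 0.9)]), 3))  # 0.652
```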

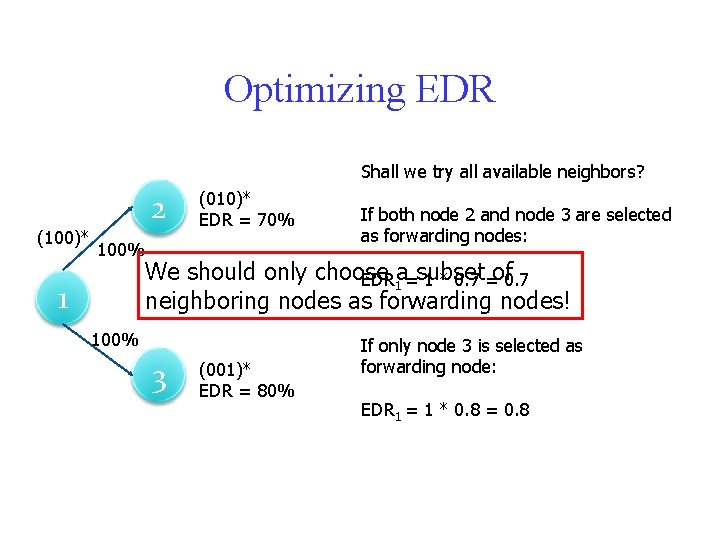

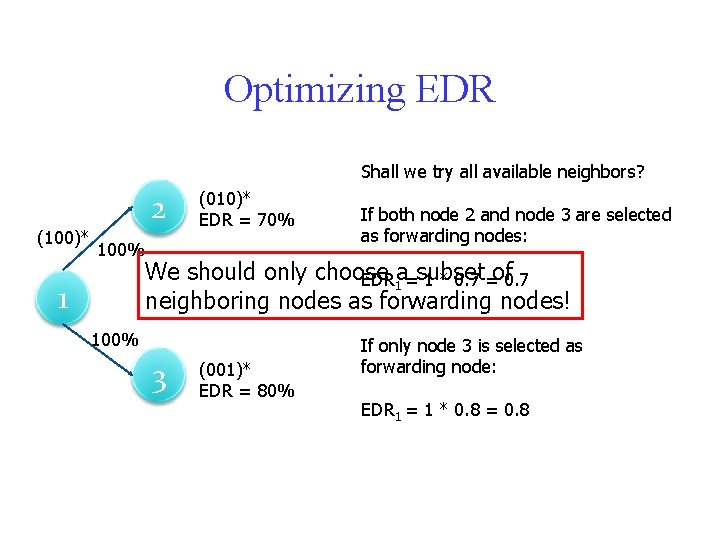

Optimizing EDR

- Shall we try all available neighbors?
- Node 1, schedule (100)*, has two neighbors
  - Node 2, schedule (010)*: link quality 100%, EDR = 70%
  - Node 3, schedule (001)*: link quality 100%, EDR = 80%
- If both node 2 and node 3 are selected as forwarding nodes: EDR1 = 1 * 0.7 = 0.7
- If only node 3 is selected as forwarding node: EDR1 = 1 * 0.8 = 0.8
- We should only choose a subset of neighboring nodes as forwarding nodes!

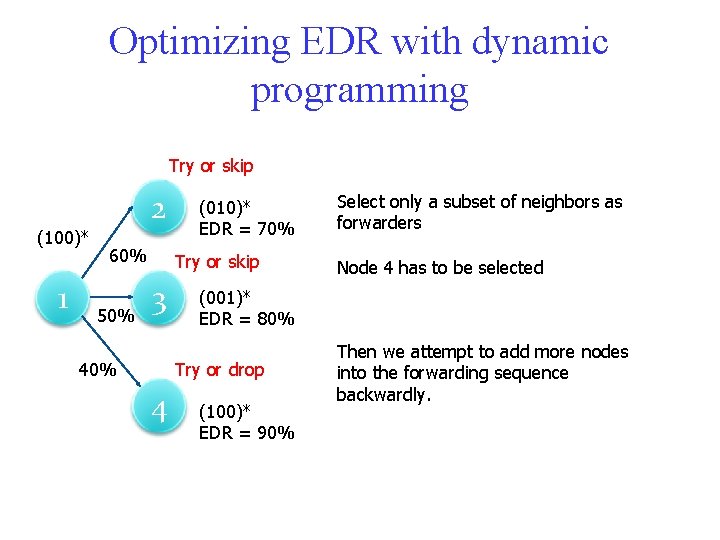

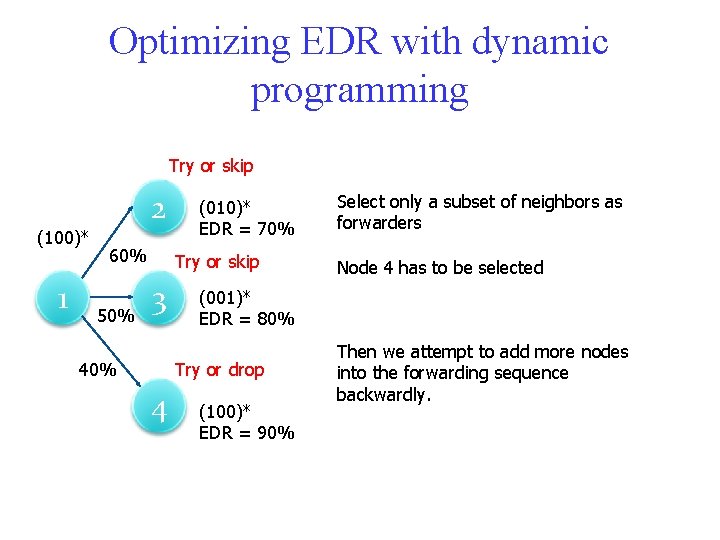

Optimizing EDR with dynamic programming

- Select only a subset of neighbors as forwarders
- Node 1, schedule (100)*, considers its neighbors
  - Node 2, schedule (010)*, link quality 60%, EDR = 70%: try or skip
  - Node 3, schedule (001)*, link quality 50%, EDR = 80%: try or skip
  - Node 4, schedule (100)*, link quality 40%, EDR = 90%: try or drop (node 4 has to be selected)
- Then we attempt to add more nodes into the forwarding sequence backwardly.
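The try-or-skip decision can be sketched as a backward pass over the neighbors in wake-up order. This is a simplified illustration that optimizes EDR only and is not necessarily the authors' exact procedure; `select_forwarders` and its tuple layout are hypothetical.

```python
def select_forwarders(candidates):
    """Choose the subset of neighbors that maximizes the sender's EDR.

    candidates -- (node_id, link_quality, node_edr) tuples in wake-up order.
    Returns (best_edr, chosen_node_ids in wake-up order).
    """
    best, chosen = 0.0, []
    # Walk backward: `best` is the EDR achievable with the later neighbors.
    for node_id, q, node_edr in reversed(candidates):
        tried = q * node_edr + (1.0 - q) * best   # try this node first
        if tried > best:                          # otherwise skip it
            best = tried
            chosen.insert(0, node_id)
    return best, chosen


print(select_forwarders([(2, 1.0, 0.7), (3, 1.0, 0.8)]))  # (0.8, [3])
best, nodes = select_forwarders([(2, 0.6, 0.7), (3, 0.5, 0.8), (4, 0.4, 0.9)])
print(round(best, 3), nodes)                              # 0.652 [2, 3, 4]
```

The last neighbor is always kept when it can deliver anything at all, which matches the slide's remark that node 4 has to be selected; earlier neighbors are added only if trying them first improves the result.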

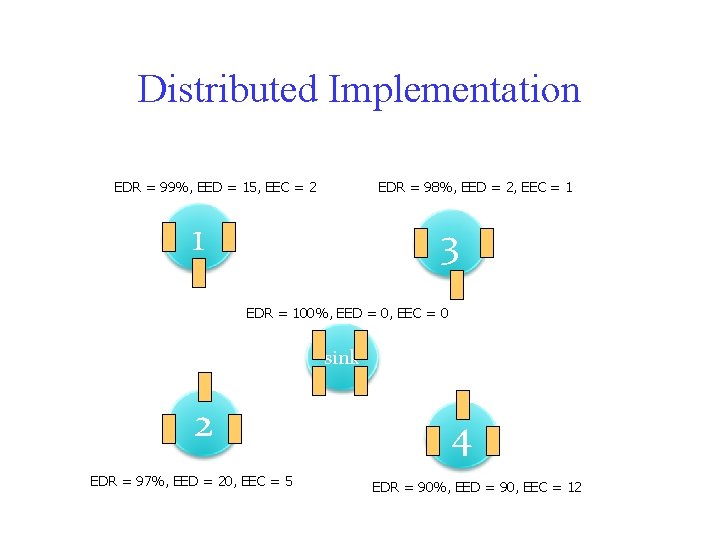

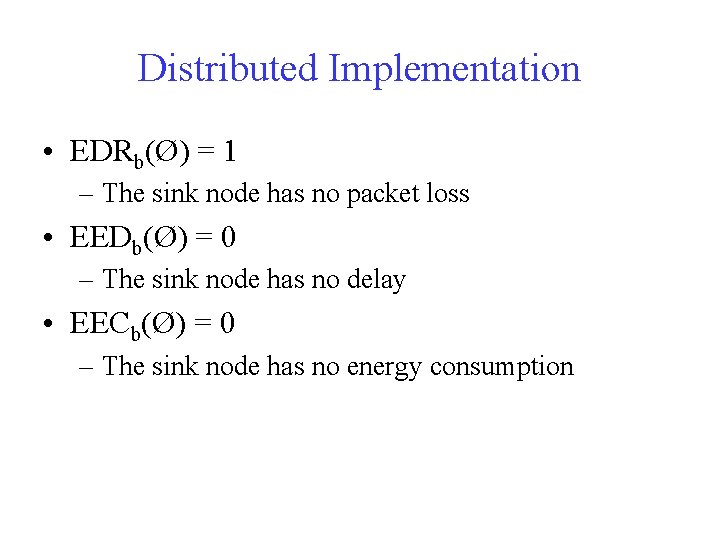

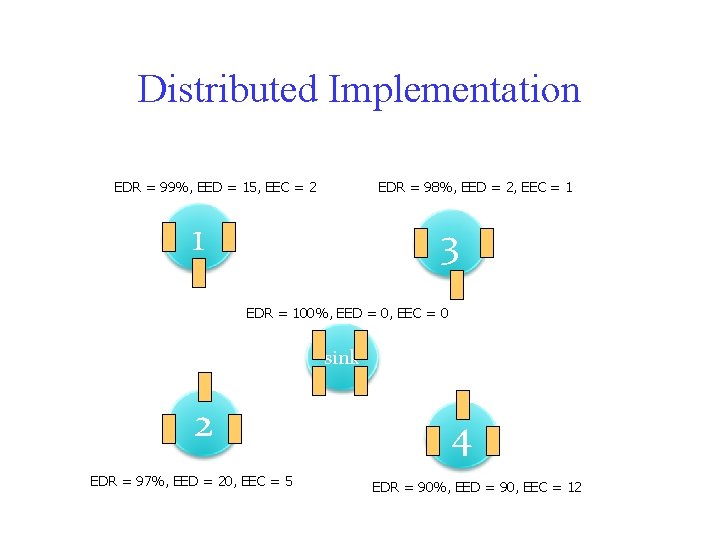

Distributed Implementation

- EDRb(Ø) = 1
  - The sink node has no packet loss
- EEDb(Ø) = 0
  - The sink node has no delay
- EECb(Ø) = 0
  - The sink node has no energy consumption

Distributed Implementation

Figure: a sink (EDR = 100%, EED = 0, EEC = 0) and nodes 1 to 4, annotated with the values EDR = 99%, EED = 15, EEC = 2; EDR = 98%, EED = 2, EEC = 1; EDR = 97%, EED = 20, EEC = 5; and EDR = 90%, EED = 90, EEC = 12.

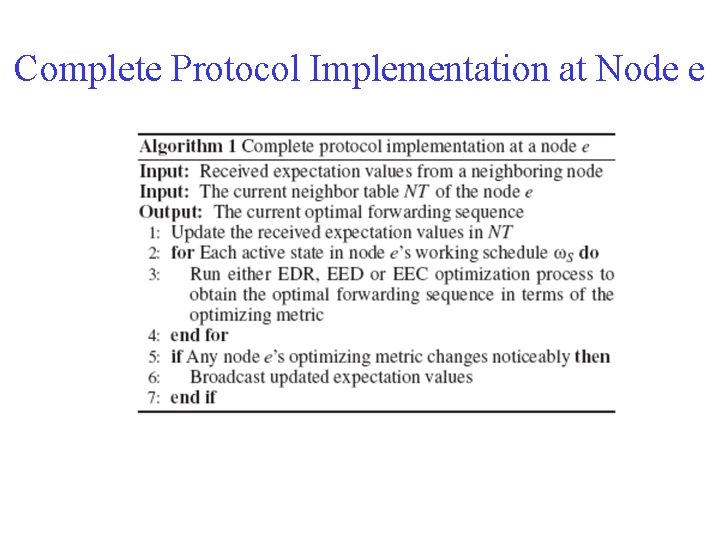

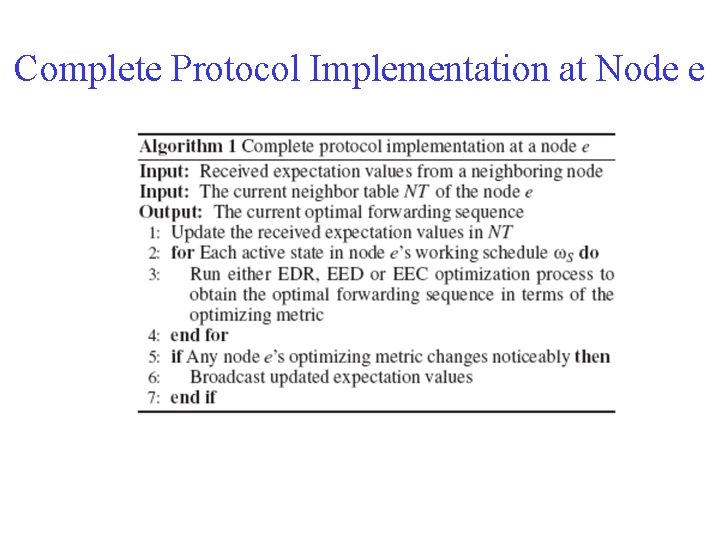

Complete Protocol Implementation at Node e