Concurrent Algorithms Summing the elements of an array

- Slides: 11

Concurrent Algorithms

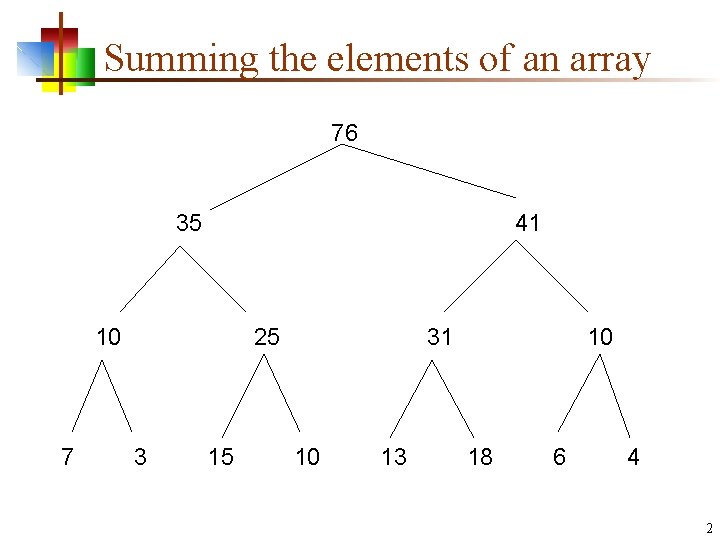

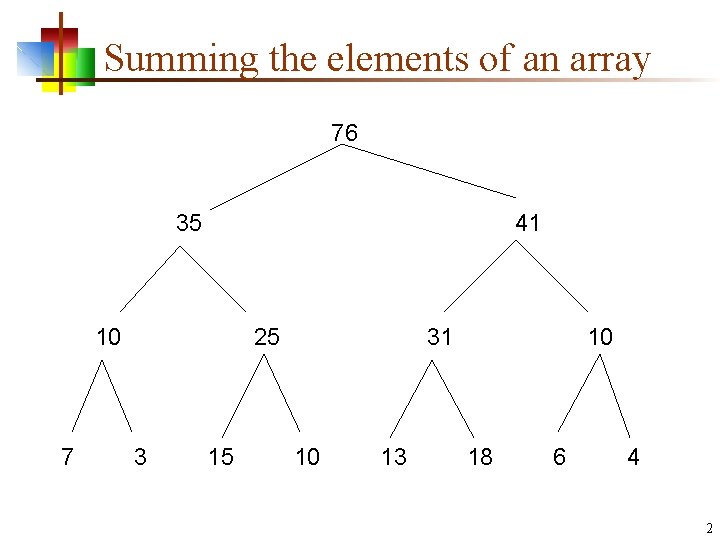

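Summing the elements of an array

(Figure: a binary tree that sums [7, 3, 15, 10, 13, 18, 6, 4] by adding pairs: 10, 25, 31, 10 on the first level, 35 and 41 on the second, and 76 at the root.)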
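The pairwise tree for summing an array can be mimicked sequentially. The following is a minimal Python sketch (the names `tree_levels` and `tree_sum` are illustrative, not from the slides) that adds adjacent pairs level by level. Every addition within one level is independent of the others, which is what a parallel machine would exploit; here they simply run one after another.

```python
def tree_levels(values):
    """Return every level of a pairwise summation tree, leaves first, root last."""
    level = list(values)
    levels = [level]
    while len(level) > 1:
        # Add neighbouring pairs; an odd element out is carried up unchanged.
        nxt = [level[i] + level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2 == 1:
            nxt.append(level[-1])
        level = nxt
        levels.append(level)
    return levels


def tree_sum(values):
    """Sum a list by pairwise tree reduction; the empty list sums to 0."""
    if not values:
        return 0
    return tree_levels(values)[-1][0]


for level in tree_levels([7, 3, 15, 10, 13, 18, 6, 4]):
    print(level)
# [7, 3, 15, 10, 13, 18, 6, 4]
# [10, 25, 31, 10]
# [35, 41]
# [76]
```

With n leaves the tree has about log2(n) levels, so with enough processors the sum takes logarithmic rather than linear time, although each level uses only half as many processors as the one below it.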

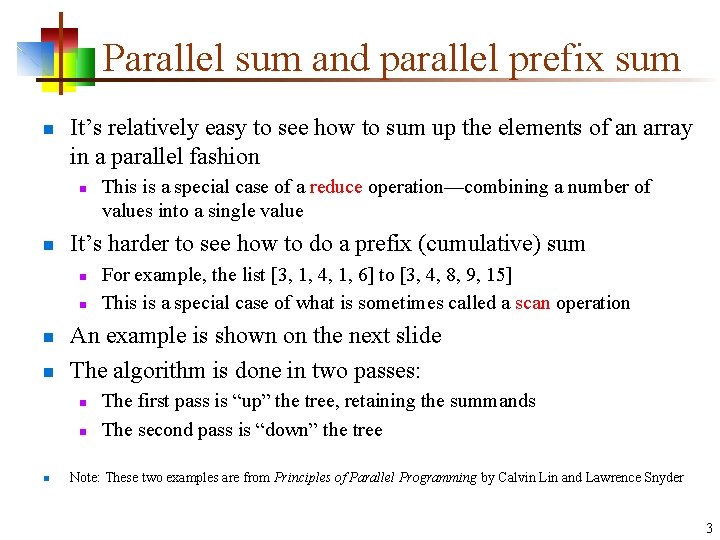

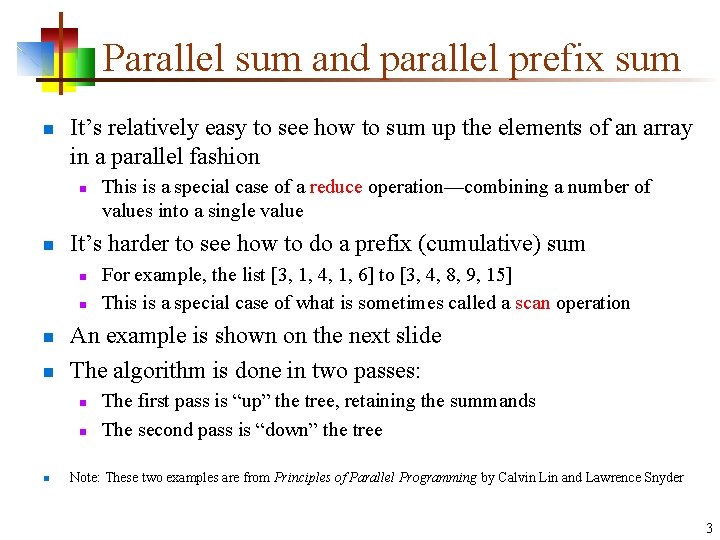

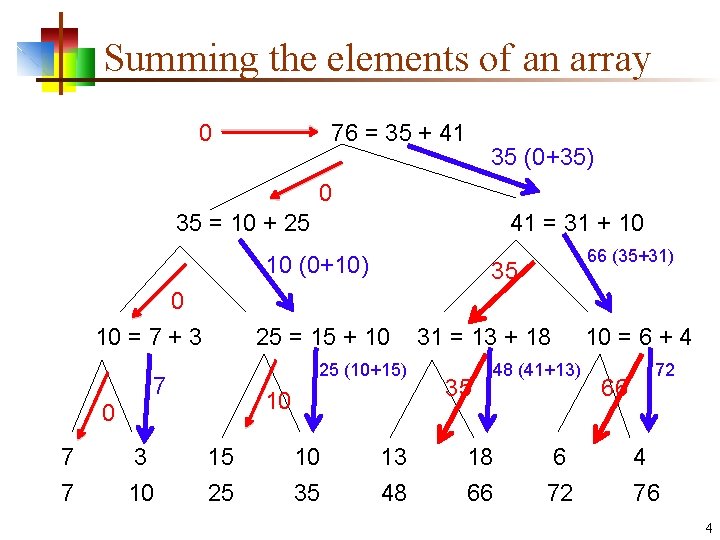

Parallel sum and parallel prefix sum

- It’s relatively easy to see how to sum up the elements of an array in a parallel fashion
  - This is a special case of a reduce operation: combining a number of values into a single value
- It’s harder to see how to do a prefix (cumulative) sum
  - For example, the list [3, 1, 4, 1, 6] becomes [3, 4, 8, 9, 15]
  - This is a special case of what is sometimes called a scan operation
- An example is shown on the next slide
- The algorithm is done in two passes:
  - The first pass is “up” the tree, retaining the summands
  - The second pass is “down” the tree
- Note: These two examples are from Principles of Parallel Programming by Calvin Lin and Lawrence Snyder
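For reference, Python's standard library already provides sequential versions of both operations: `functools.reduce` folds a list into a single value, and `itertools.accumulate` produces every running total, which is exactly a scan. These run one step at a time, so they only pin down what the parallel algorithms must compute.

```python
from functools import reduce
from itertools import accumulate
import operator

data = [3, 1, 4, 1, 6]

# Reduce: combine all the values into a single value.
total = reduce(operator.add, data)

# Scan (prefix sum): keep every intermediate running total.
prefix = list(accumulate(data, operator.add))

print(total)   # 15
print(prefix)  # [3, 4, 8, 9, 15]
```

Swapping `operator.add` for another associative operator (for example `max`) gives the corresponding reduce or scan, and associativity is the property the tree-based parallel versions rely on.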

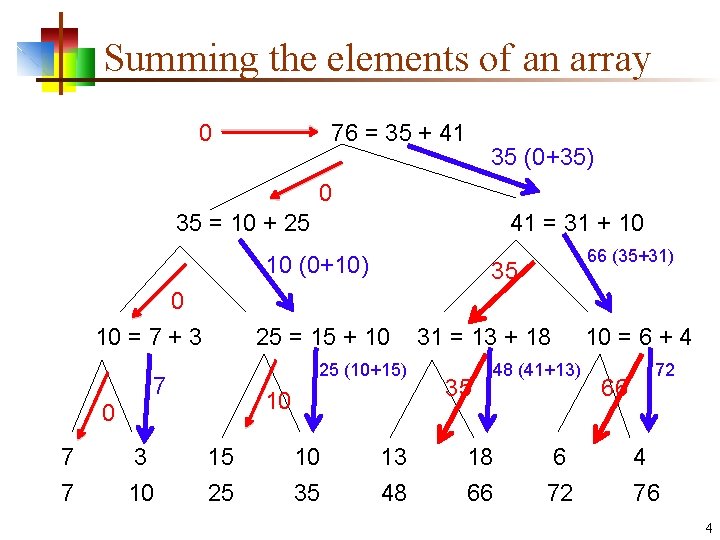

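Summing the elements of an array

(Figure: the two-pass prefix sum of [7, 3, 15, 10, 13, 18, 6, 4]. The up pass stores subtree sums: 10 = 7 + 3, 25 = 15 + 10, 31 = 13 + 18, 10 = 6 + 4, then 35 = 10 + 25, 41 = 31 + 10, and 76 = 35 + 41 at the root. The down pass sends 0 into the root; each left child receives its parent’s value, and each right child receives its parent’s value plus its left sibling’s sum, e.g. 35 (0+35), 10 (0+10), 66 (35+31), 25 (10+15), 48 (35+13). Each leaf then adds its own value, giving the prefix sums 7, 10, 25, 35, 48, 66, 72, 76.)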
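The up pass and down pass can be sketched sequentially in Python. This is a minimal sketch under its own assumptions: the tree is stored heap-style in an array (node `i` has children `2*i` and `2*i + 1`), the input is padded with zeros to a power-of-two length, and the name `prefix_sum` is illustrative. On a parallel machine, all nodes on one level would be handled at once; here the loops run sequentially, but in an order that respects the same dependencies.

```python
def prefix_sum(values):
    """Inclusive prefix sum computed with an up pass and a down pass over a binary tree."""
    n = len(values)
    if n == 0:
        return []
    size = 1
    while size < n:
        size *= 2
    # Leaves live at indices size .. 2*size - 1; zero padding leaves every prefix unchanged.
    tree = [0] * (2 * size)
    tree[size:size + n] = values
    # Up pass: each internal node stores the sum of its subtree (children before parents).
    for i in range(size - 1, 0, -1):
        tree[i] = tree[2 * i] + tree[2 * i + 1]
    # Down pass: from_left[i] is the sum of everything to the left of node i's subtree.
    from_left = [0] * (2 * size)          # the root receives 0
    for i in range(1, size):              # parents before children
        from_left[2 * i] = from_left[i]                    # left child: parent's value
        from_left[2 * i + 1] = from_left[i] + tree[2 * i]  # right child: plus left sibling's sum
    # Each leaf adds its own value to what it received.
    return [from_left[size + k] + tree[size + k] for k in range(n)]


print(prefix_sum([7, 3, 15, 10, 13, 18, 6, 4]))  # [7, 10, 25, 35, 48, 66, 72, 76]
print(prefix_sum([3, 1, 4, 1, 6]))               # [3, 4, 8, 9, 15]
```

Tracing the down pass for the eight-element input reproduces the intermediate values 35, 10, 66, 25 and 48 that the figure shows.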

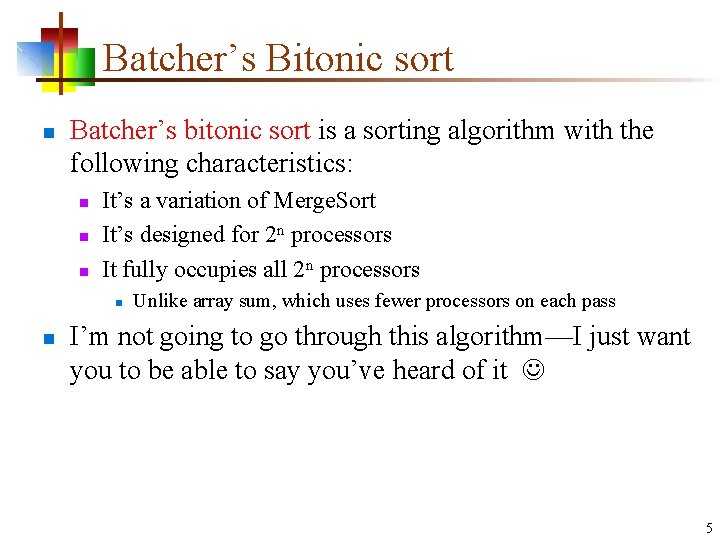

Batcher’s Bitonic sort

- Batcher’s bitonic sort is a sorting algorithm with the following characteristics:
  - It’s a variation of MergeSort
  - It’s designed for 2^n processors
  - It fully occupies all 2^n processors
    - Unlike array sum, which uses fewer processors on each pass
- I’m not going to go through this algorithm; I just want you to be able to say you’ve heard of it
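For anyone curious despite the slide skipping the details, here is a minimal sequential sketch of the usual iterative formulation, assuming the input length is a power of two (mirroring the 2^n requirement). Like merge sort, it builds sorted runs of doubling length; each merge step is a fixed pattern of compare-exchange operations, and the name `bitonic_sort` is illustrative.

```python
def bitonic_sort(values):
    """Sort a list whose length is a power of two with Batcher's bitonic network (sequential sketch)."""
    a = list(values)
    n = len(a)
    if n & (n - 1):
        raise ValueError("bitonic_sort needs a power-of-two length")
    k = 2
    while k <= n:                 # merge bitonic runs of length k
        j = k // 2
        while j > 0:              # compare elements j positions apart
            # The n/2 compare-exchanges in one (k, j) step touch disjoint pairs,
            # so a parallel machine could perform them all at the same time.
            for i in range(n):
                partner = i ^ j
                if partner > i:
                    ascending = (i & k) == 0   # direction of this block
                    if (ascending and a[i] > a[partner]) or (not ascending and a[i] < a[partner]):
                        a[i], a[partner] = a[partner], a[i]
            j //= 2
        k *= 2
    return a


print(bitonic_sort([3, 7, 4, 8, 6, 2, 1, 5]))  # [1, 2, 3, 4, 5, 6, 7, 8]
```

Every step does the same number of compare-exchanges regardless of the data, which is why the algorithm keeps all processors busy on every pass.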

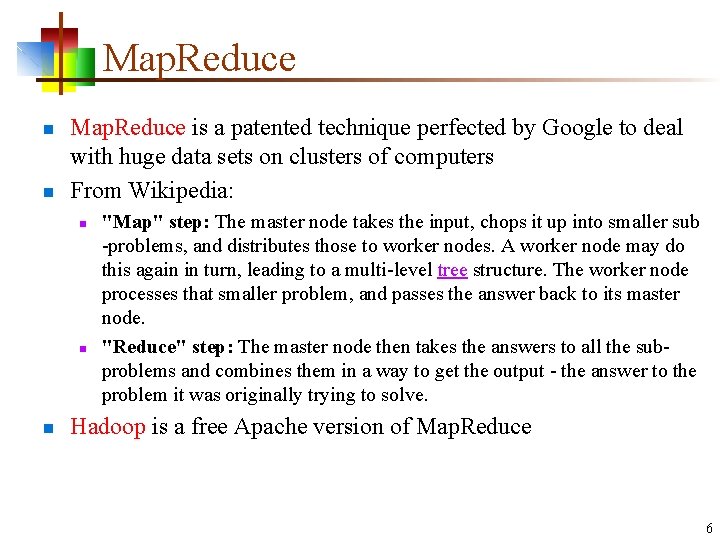

MapReduce

- MapReduce is a patented technique perfected by Google to deal with huge data sets on clusters of computers
- From Wikipedia:
  - "Map" step: The master node takes the input, chops it up into smaller sub-problems, and distributes those to worker nodes. A worker node may do this again in turn, leading to a multi-level tree structure. The worker node processes that smaller problem, and passes the answer back to its master node.
  - "Reduce" step: The master node then takes the answers to all the subproblems and combines them in a way to get the output - the answer to the problem it was originally trying to solve.
- Hadoop is a free, open-source Apache implementation of MapReduce
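The master/worker description can be sketched on a single machine. In this minimal sketch, threads from Python's `concurrent.futures.ThreadPoolExecutor` stand in for worker nodes, and the names `master`, `solve`, `combine` and `num_workers` are hypothetical: the master chops the input into chunks, workers solve each chunk, and the master combines their answers.

```python
from concurrent.futures import ThreadPoolExecutor


def master(data, solve, combine, num_workers=4):
    """Chop data into chunks, hand each chunk to a worker, then combine the answers."""
    if not data:
        return combine([])
    chunk_size = -(-len(data) // num_workers)      # ceiling division
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    with ThreadPoolExecutor(max_workers=num_workers) as workers:
        answers = list(workers.map(solve, chunks))  # "Map" step: workers solve sub-problems
    return combine(answers)                         # "Reduce" step: master combines answers


print(master([7, 3, 15, 10, 13, 18, 6, 4], sum, sum))  # 76
print(master([7, 3, 15, 10, 13, 18, 6, 4], max, max))  # 18
```

This only shows the shape of the computation: in CPython, the global interpreter lock keeps CPU-bound pure-Python threads from running truly in parallel, whereas a real cluster framework runs its workers on separate machines.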

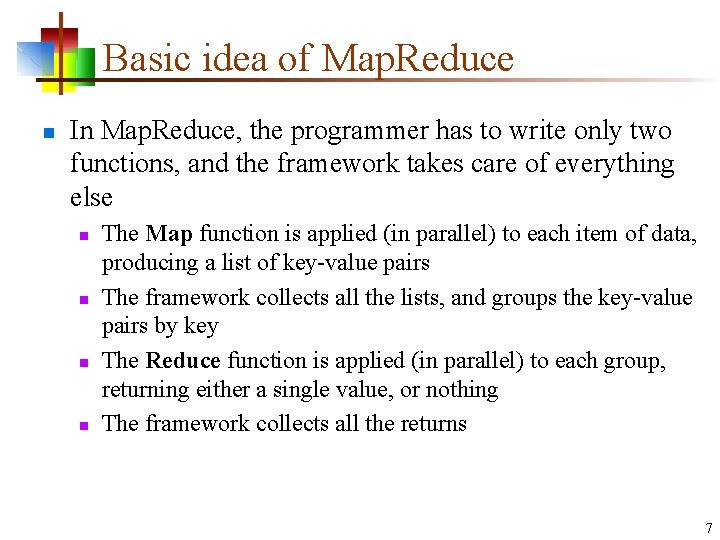

Basic idea of MapReduce

- In MapReduce, the programmer has to write only two functions, and the framework takes care of everything else
  - The Map function is applied (in parallel) to each item of data, producing a list of key-value pairs
  - The framework collects all the lists, and groups the key-value pairs by key
  - The Reduce function is applied (in parallel) to each group, returning either a single value or nothing
  - The framework collects all the returns
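The four steps above can be imitated sequentially to show what the framework does between the two user-written functions. This is a minimal sketch; `run_mapreduce`, `map_fn` and `reduce_fn` are hypothetical names, and a real framework would run the map and reduce calls in parallel across machines and do the grouping over the network.

```python
from collections import defaultdict


def run_mapreduce(records, map_fn, reduce_fn):
    """Sequential, single-machine imitation of a MapReduce job (illustrative only)."""
    # Map each record to a list of (key, value) pairs and group the values by key.
    groups = defaultdict(list)
    for record in records:
        for key, value in map_fn(record):
            groups[key].append(value)
    # Reduce each group; a reducer that returns None contributes nothing.
    results = {}
    for key, values in groups.items():
        out = reduce_fn(key, values)
        if out is not None:
            results[key] = out
    return results


lines = ["the cat sat", "the dog sat"]
counts = run_mapreduce(lines,
                       lambda line: [(w, 1) for w in line.split()],
                       lambda word, ones: sum(ones))
print(counts)    # {'the': 2, 'cat': 1, 'sat': 2, 'dog': 1}

repeated = run_mapreduce(lines,
                         lambda line: [(w, 1) for w in line.split()],
                         lambda word, ones: len(ones) if len(ones) > 1 else None)
print(repeated)  # {'the': 2, 'sat': 2}
```

The second call shows the "or nothing" case: its reducer returns `None` for words seen only once, so those groups produce no output.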

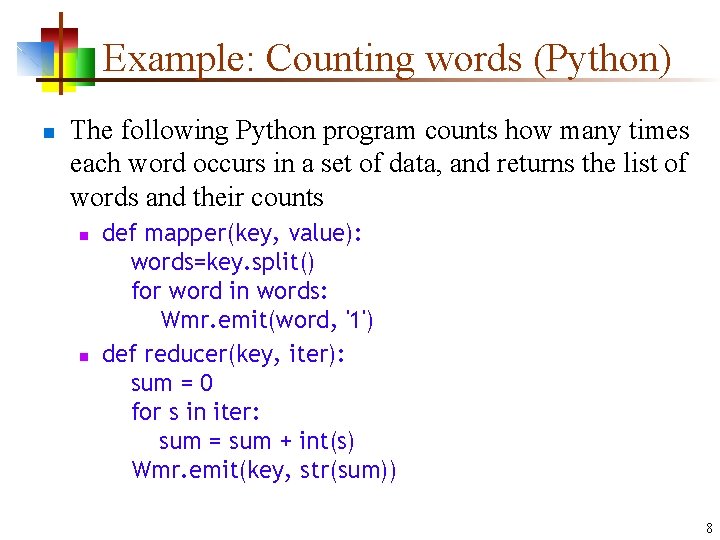

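Example: Counting words (Python)

- The following Python program counts how many times each word occurs in a set of data, and returns the list of words and their counts

```python
# Mapper: split the record's key into words and emit (word, "1") for each one.
# Wmr.emit is supplied by the MapReduce framework the slides use.
def mapper(key, value):
    words = key.split()
    for word in words:
        Wmr.emit(word, '1')

# Reducer: receives one word plus an iterator over all of its "1" values,
# adds them up, and emits (word, total).
def reducer(key, iter):
    sum = 0
    for s in iter:
        sum = sum + int(s)   # values arrive as strings
    Wmr.emit(key, str(sum))
```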
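To try the word-count functions without the framework, a small local harness can play the framework's part. This is a sketch under loud assumptions: the `Wmr` class here is a hypothetical stand-in that merely records emitted pairs, `run_local` is an illustrative name, and each input line is assumed to arrive as the mapper's key (matching the mapper's `key.split()`). The mapper and reducer are condensed copies of the ones above so the block runs on its own.

```python
from collections import defaultdict


class Wmr:
    """Hypothetical local stand-in for the framework's Wmr object: it only records emits."""
    emitted = []

    @staticmethod
    def emit(key, value):
        Wmr.emitted.append((key, value))


def run_local(mapper, reducer, lines):
    """Run map, group-by-key, and reduce sequentially; return what the reducer emitted."""
    Wmr.emitted = []
    for line in lines:
        mapper(line, '')              # assumption: each input line is passed as the key
    groups = defaultdict(list)
    for key, value in Wmr.emitted:
        groups[key].append(value)     # the framework's grouping step
    Wmr.emitted = []
    for key in sorted(groups):
        reducer(key, groups[key])     # a list works wherever the reducer iterates
    return Wmr.emitted


# Condensed copies of the word-count mapper and reducer.
def mapper(key, value):
    for word in key.split():
        Wmr.emit(word, '1')


def reducer(key, iter):
    total = 0
    for s in iter:
        total += int(s)
    Wmr.emit(key, str(total))


print(run_local(mapper, reducer, ["the cat sat", "the dog sat"]))
# [('cat', '1'), ('dog', '1'), ('sat', '2'), ('the', '2')]
```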

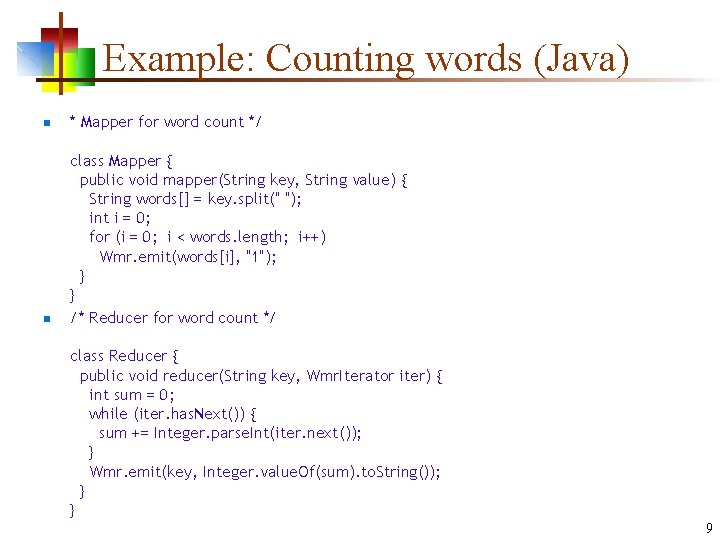

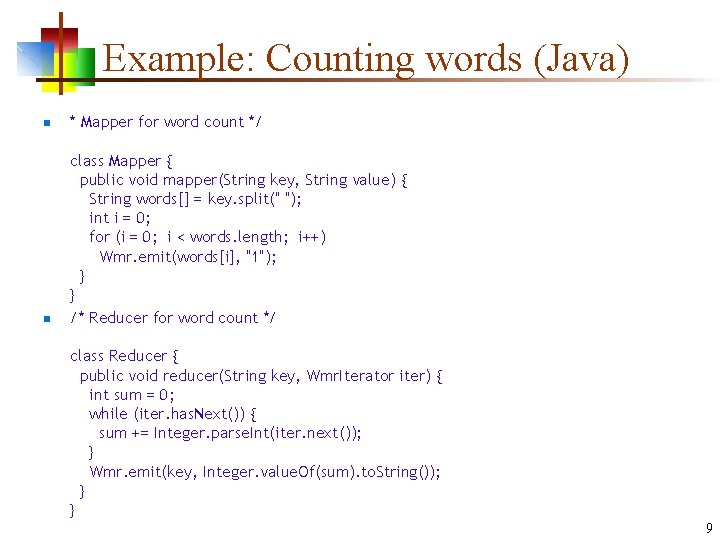

Example: Counting words (Java)

```java
/* Mapper for word count */
class Mapper {
    public void mapper(String key, String value) {
        String words[] = key.split(" ");
        int i = 0;
        for (i = 0; i < words.length; i++)
            Wmr.emit(words[i], "1");
    }
}

/* Reducer for word count */
class Reducer {
    public void reducer(String key, WmrIterator iter) {
        int sum = 0;
        while (iter.hasNext()) {
            sum += Integer.parseInt(iter.next());
        }
        Wmr.emit(key, Integer.valueOf(sum).toString());
    }
}
```

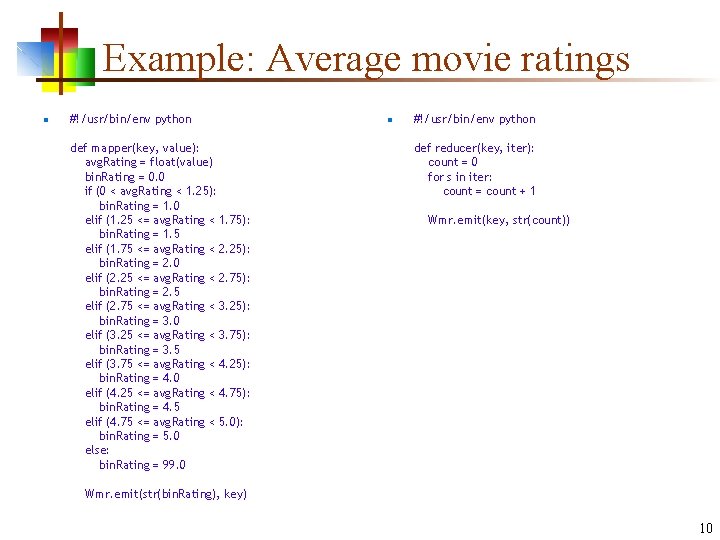

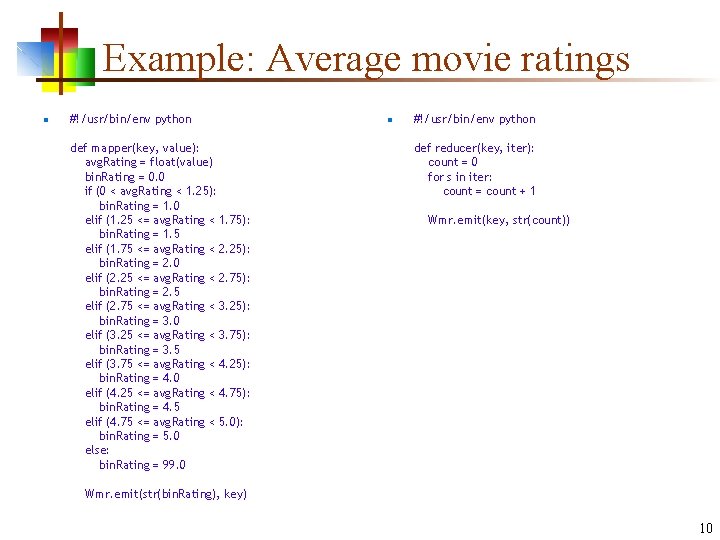

Example: Average movie ratings

- The mapper assigns each average rating to a half-star bin and emits the bin as the key; the reducer counts how many records land in each bin

```python
#!/usr/bin/env python
# Mapper: value holds an average rating; emit (bin, key) so records group by bin
def mapper(key, value):
    avgRating = float(value)
    binRating = 0.0
    if (0 < avgRating < 1.25):
        binRating = 1.0
    elif (1.25 <= avgRating < 1.75):
        binRating = 1.5
    elif (1.75 <= avgRating < 2.25):
        binRating = 2.0
    elif (2.25 <= avgRating < 2.75):
        binRating = 2.5
    elif (2.75 <= avgRating < 3.25):
        binRating = 3.0
    elif (3.25 <= avgRating < 3.75):
        binRating = 3.5
    elif (3.75 <= avgRating < 4.25):
        binRating = 4.0
    elif (4.25 <= avgRating < 4.75):
        binRating = 4.5
    elif (4.75 <= avgRating <= 5.0):   # a perfect 5.0 average belongs in the top bin
        binRating = 5.0
    else:
        binRating = 99.0               # marker for out-of-range ratings
    Wmr.emit(str(binRating), key)
```

```python
#!/usr/bin/env python
# Reducer: count how many records were emitted for each rating bin
def reducer(key, iter):
    count = 0
    for s in iter:
        count = count + 1
    Wmr.emit(key, str(count))
```
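The if/elif ladder in the mapper rounds an average rating to the nearest half star, with everything in (0, 1.25) pushed up to 1.0. A more compact equivalent is sketched below, assuming an average of exactly 5.0 should count as 5 stars and keeping 99.0 as the out-of-range marker; the name `bin_rating` is illustrative.

```python
import math


def bin_rating(avg_rating):
    """Round an average rating to the nearest half star; 99.0 marks values outside (0, 5.0]."""
    if not 0 < avg_rating <= 5.0:
        return 99.0
    # floor(2x + 0.5) / 2 rounds to the nearest 0.5 and sends midpoints such as
    # 1.25 upward, matching the ladder's "<=" lower bounds; max() lifts (0, 0.75) to 1.0.
    return max(1.0, math.floor(avg_rating * 2 + 0.5) / 2)


print(bin_rating(1.25), bin_rating(3.1), bin_rating(4.74), bin_rating(5.0), bin_rating(7))
# 1.5 3.0 4.5 5.0 99.0
```

The explicit ladder is easier to read at a glance and to adjust bin by bin; the formula is shorter but depends on the reader knowing the rounding trick.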

The End