Compilers Source language Scanner lexical analysis tokens Parser

- Slides: 27

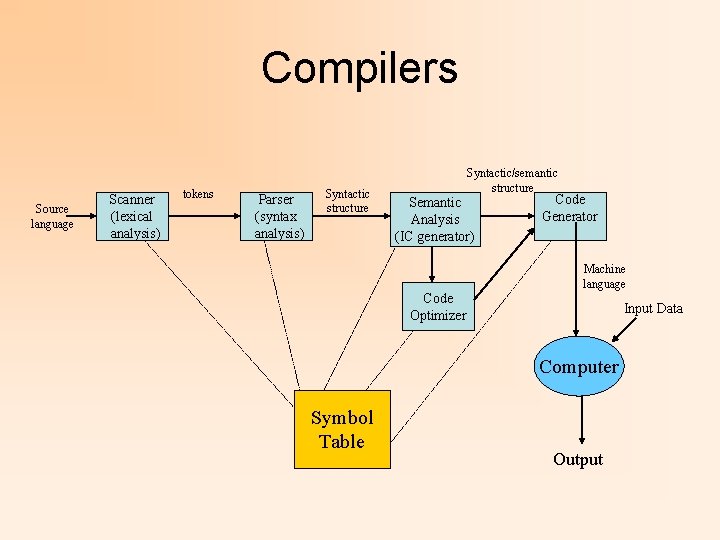

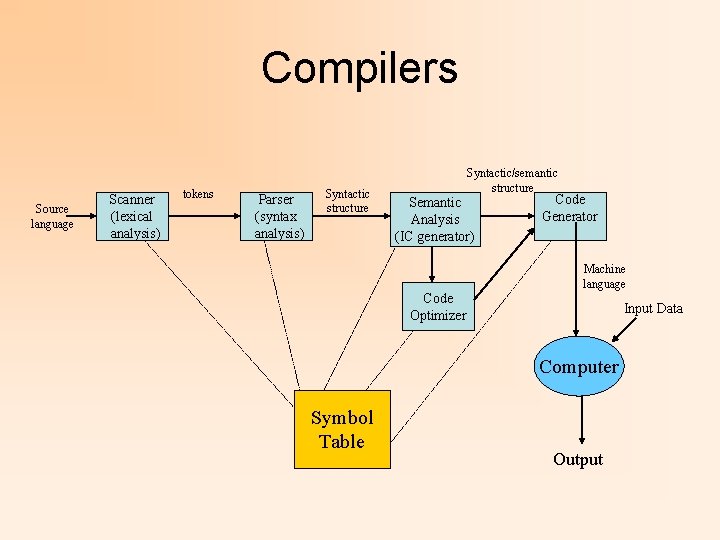

Compilers

Source language → Scanner (lexical analysis) → tokens → Parser (syntax analysis) → syntactic structure → Semantic Analysis (IC generator) → syntactic/semantic structure → Code Generator → machine language

Machine language + input data → Computer → output

Also shown in the diagram: Code Optimizer, Symbol Table

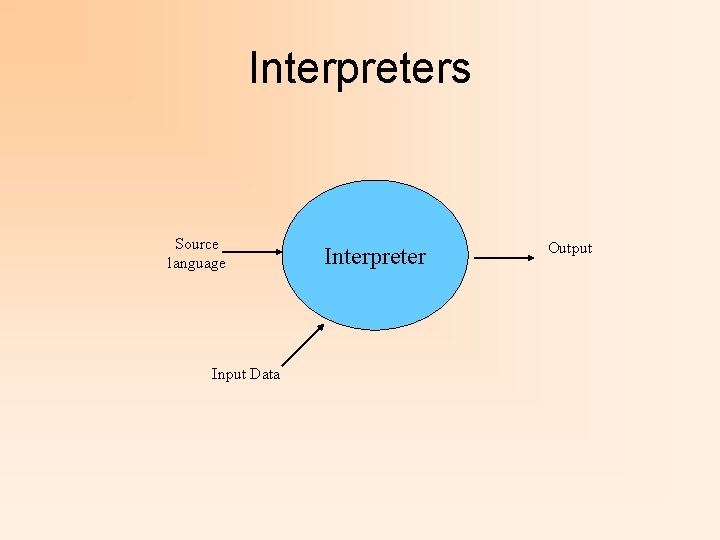

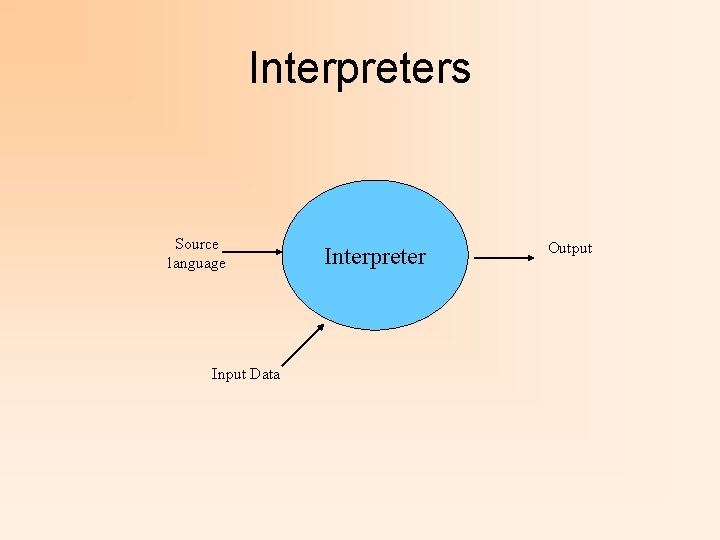

Interpreters

Source language + input data → Interpreter → output

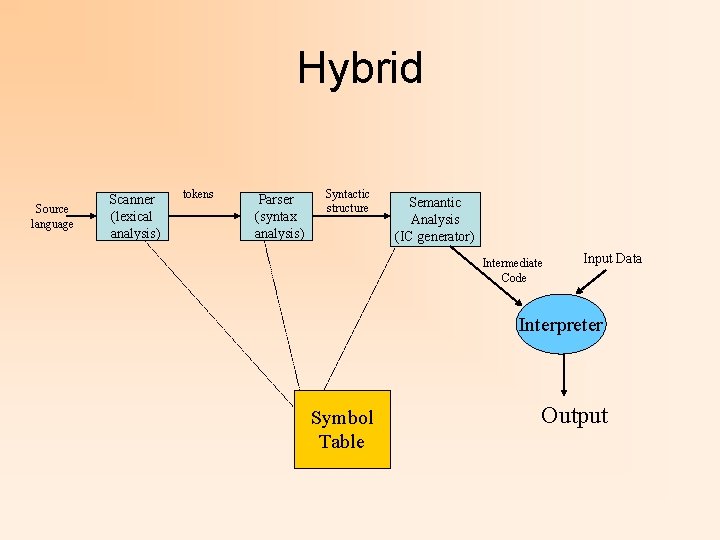

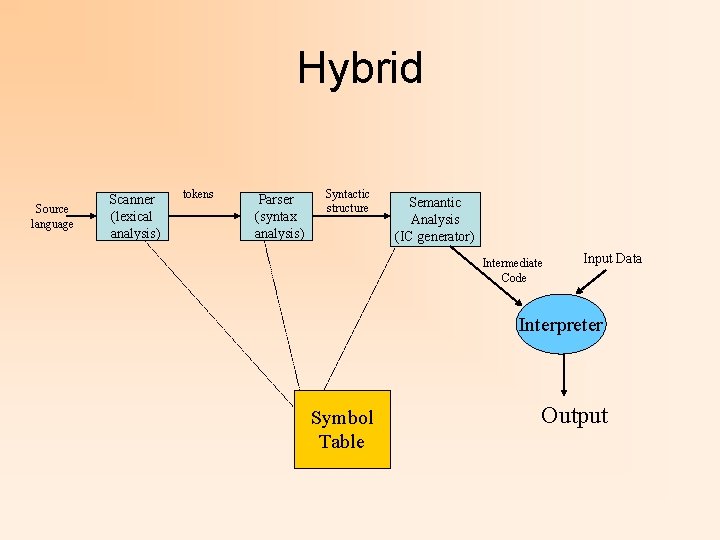

Hybrid

Source language → Scanner (lexical analysis) → tokens → Parser (syntax analysis) → syntactic structure → Semantic Analysis (IC generator) → intermediate code

Intermediate code + input data → Interpreter → output

Also shown in the diagram: Symbol Table

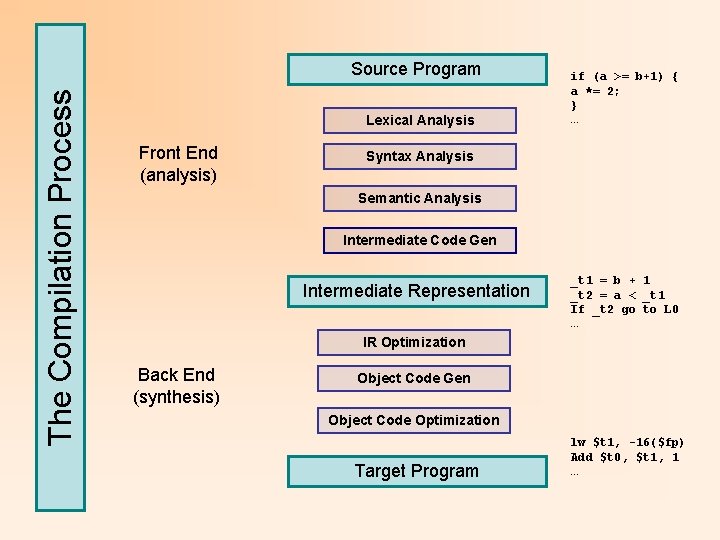

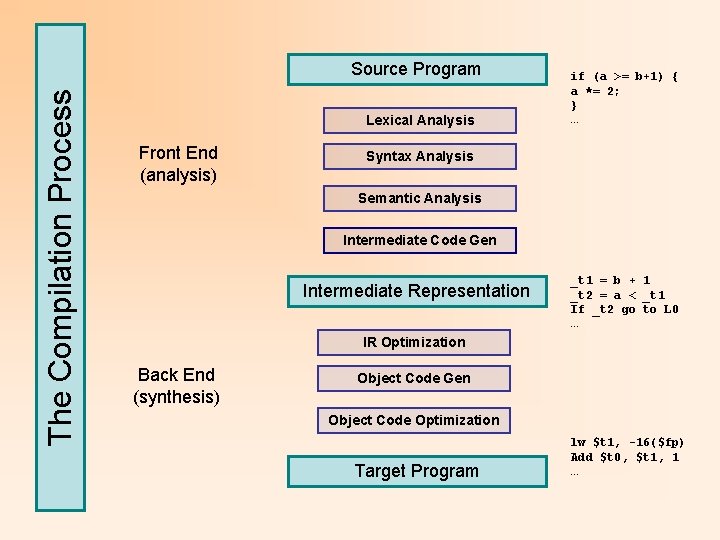

The Compilation Process

Source Program:

    if (a >= b+1) { a *= 2; } …

Front End (analysis):
• Lexical Analysis
• Syntax Analysis
• Semantic Analysis
• Intermediate Code Gen

Intermediate Representation:

    _t1 = b + 1
    _t2 = a < _t1
    if _t2 goto L0
    …

Back End (synthesis):
• IR Optimization
• Object Code Gen
• Object Code Optimization

Target Program:

    lw $t1, -16($fp)
    add $t0, $t1, 1
    …

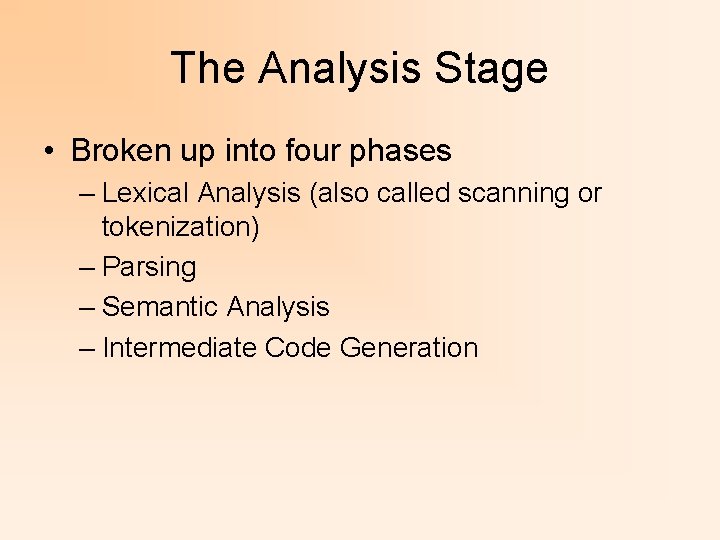

The Analysis Stage

• Broken up into four phases
– Lexical Analysis (also called scanning or tokenization)
– Parsing
– Semantic Analysis
– Intermediate Code Generation

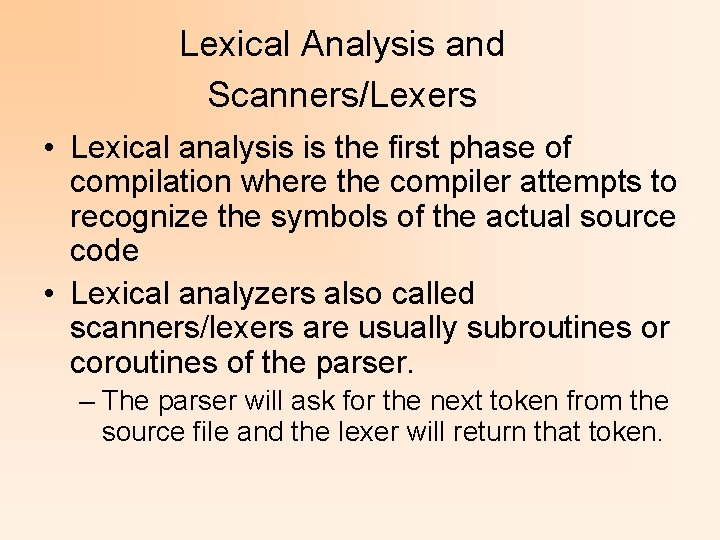

Lexical Analysis and Scanners/Lexers

• Lexical analysis is the first phase of compilation, where the compiler attempts to recognize the symbols of the actual source code.
• Lexical analyzers, also called scanners or lexers, are usually subroutines or coroutines of the parser.
– The parser asks for the next token from the source file and the lexer returns that token.
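The "parser asks, lexer returns" relationship can be sketched with a Python generator, which behaves like a coroutine of the caller. This is only an illustration: the token kinds (`NUM`, `ID`, `OP`, `EOF`) and the toy `parse_sum` "parser" are assumptions made for this sketch, not part of the slides.

```python
import re

# Hypothetical token kinds for this sketch only.
_TOKEN_RE = re.compile(r"(?P<NUM>\d+)|(?P<ID>[A-Za-z_]\w*)|(?P<OP>[-+*/=;()])")


def lex(source):
    """Yield (kind, lexeme) pairs one at a time, on demand."""
    pos = 0
    while True:
        # Skip whitespace between tokens.
        while pos < len(source) and source[pos].isspace():
            pos += 1
        if pos == len(source):
            yield ("EOF", "")
            return
        m = _TOKEN_RE.match(source, pos)
        if m is None:
            raise SyntaxError(f"unexpected character {source[pos]!r} at offset {pos}")
        pos = m.end()
        yield (m.lastgroup, m.group())


def parse_sum(source):
    """Toy parser for NUM ('+' NUM)* that pulls tokens from the lexer."""
    tokens = lex(source)
    kind, text = next(tokens)          # the parser asks for the next token
    if kind != "NUM":
        raise SyntaxError("expected a number")
    total = int(text)
    kind, text = next(tokens)
    while kind == "OP" and text == "+":
        kind, text = next(tokens)
        if kind != "NUM":
            raise SyntaxError("expected a number after '+'")
        total += int(text)
        kind, text = next(tokens)
    if kind != "EOF":
        raise SyntaxError(f"unexpected token {text!r}")
    return total
```

The lexer does no work until `next(tokens)` is called, so scanning and parsing interleave instead of running as two separate passes over the file.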

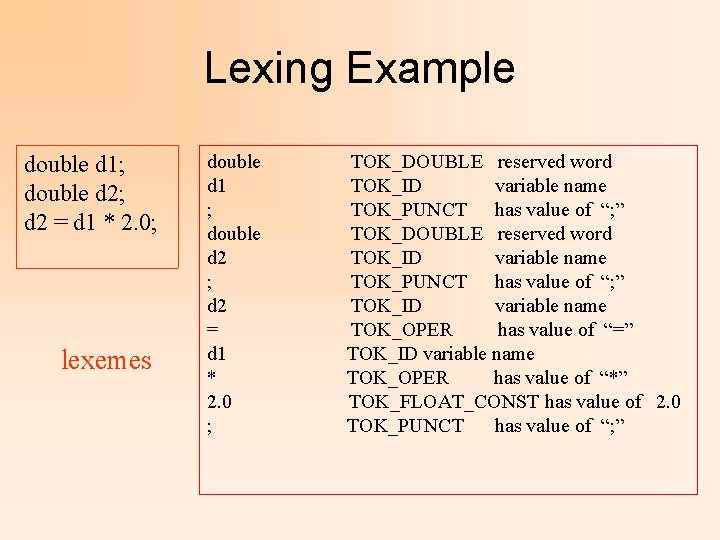

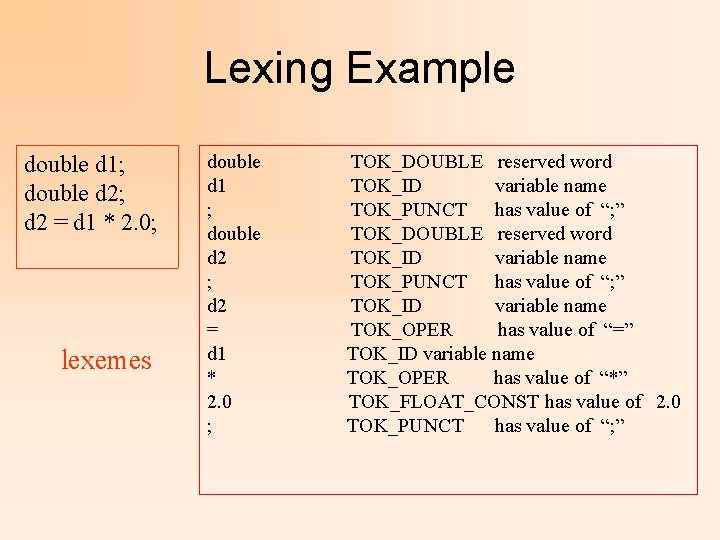

Lexing Example

Source:

    double d1;
    double d2;
    d2 = d1 * 2.0;

Lexemes: double d1 ; double d2 ; d2 = d1 * 2.0 ;

Tokens:
• TOK_DOUBLE: reserved word
• TOK_ID: variable name
• TOK_PUNCT: has value of ";"
• TOK_ID: variable name
• TOK_OPER: has value of "="
• TOK_ID: variable name
• TOK_OPER: has value of "*"
• TOK_FLOAT_CONST: has value of 2.0
• TOK_PUNCT: has value of ";"
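A minimal sketch of a lexer that produces the token names used in this example. The token names come from the slide; the regular expressions attached to them are assumptions chosen for illustration and cover only what this snippet needs.

```python
import re

# Order matters: the reserved word is tried before the identifier pattern.
# The patterns are assumptions for this sketch, not a full C lexer.
TOKEN_SPEC = [
    ("TOK_DOUBLE",      r"double\b"),
    ("TOK_FLOAT_CONST", r"[0-9]+\.[0-9]+"),
    ("TOK_ID",          r"[A-Za-z_][A-Za-z0-9_]*"),
    ("TOK_OPER",        r"[=*+\-/]"),
    ("TOK_PUNCT",       r";"),
    ("SKIP",            r"\s+"),
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(source):
    """Return a list of (token, lexeme) pairs, dropping whitespace."""
    tokens = []
    pos = 0
    while pos < len(source):
        m = MASTER.match(source, pos)
        if m is None:
            raise SyntaxError(f"unexpected character {source[pos]!r} at offset {pos}")
        if m.lastgroup != "SKIP":
            tokens.append((m.lastgroup, m.group()))
        pos = m.end()
    return tokens
```

Calling `tokenize("d2 = d1 * 2.0;")` yields the token/lexeme pairs for the third statement. Each pair carries both the token class and its value, which is what the parser consumes.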

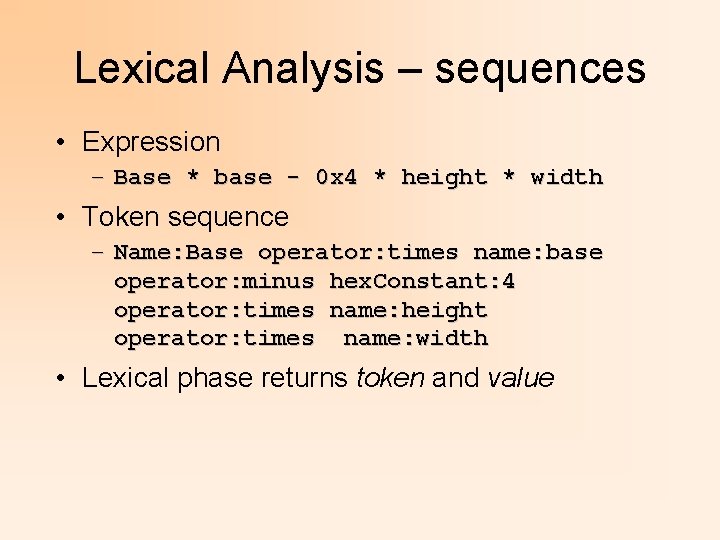

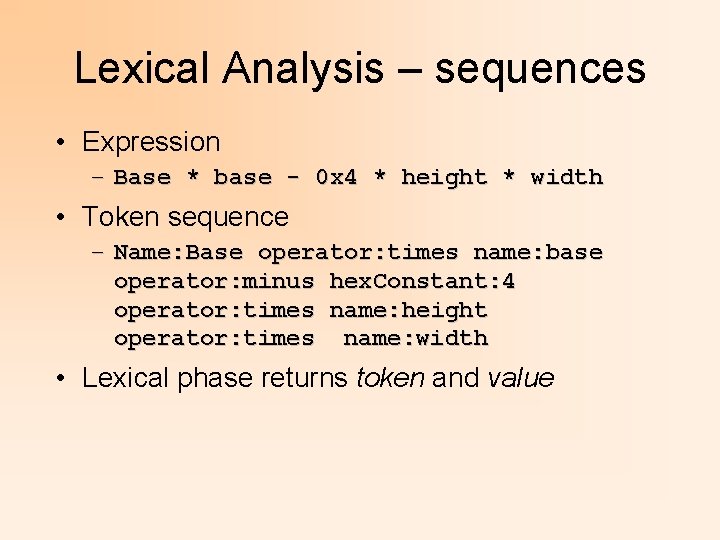

Lexical Analysis – sequences

• Expression
– base * base - 0x4 * height * width
• Token sequence
– name: base, operator: times, name: base, operator: minus, hex constant: 4, operator: times, name: height, operator: times, name: width
• Lexical phase returns token and value

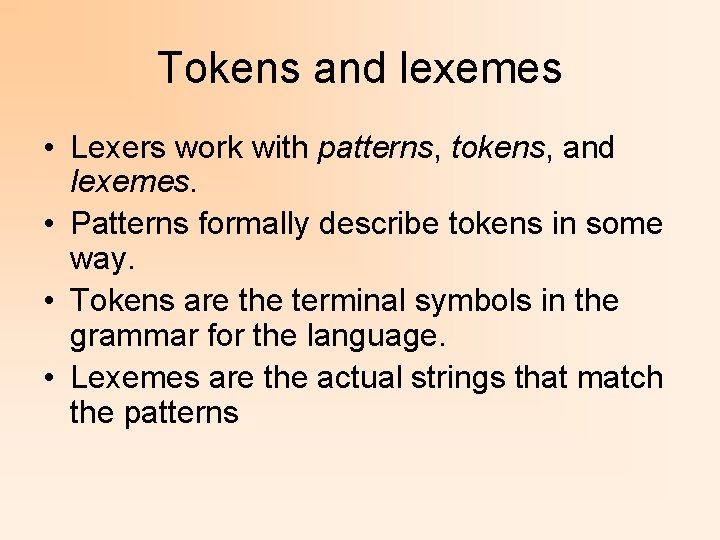

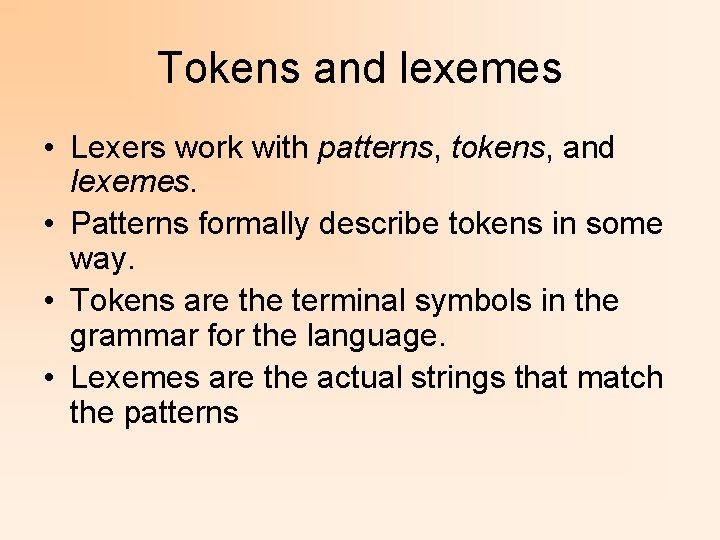

Tokens and lexemes

• Lexers work with patterns, tokens, and lexemes.
• Patterns formally describe tokens in some way.
• Tokens are the terminal symbols in the grammar for the language.
• Lexemes are the actual strings that match the patterns.

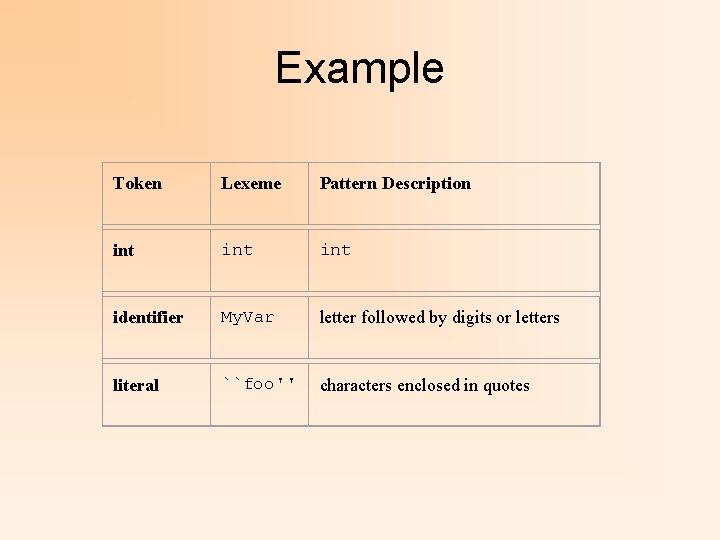

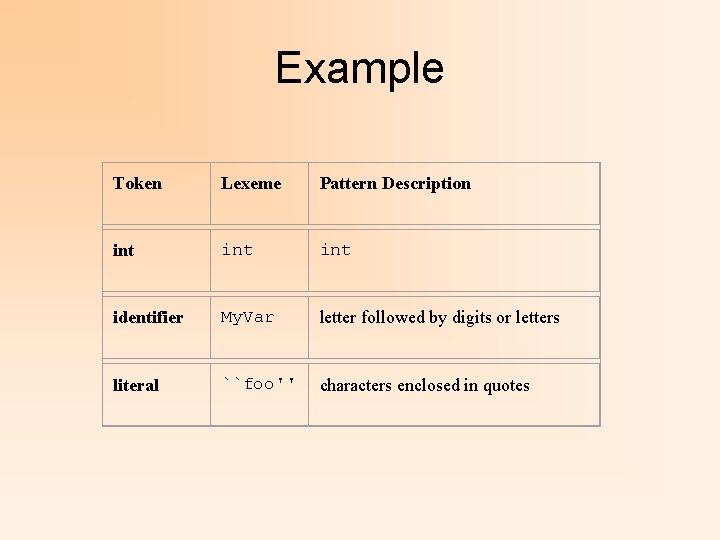

Example

| Token | Lexeme | Pattern Description |
|---|---|---|
| int | int | |
| identifier | MyVar | letter followed by digits or letters |
| literal | "foo" | characters enclosed in quotes |

Expressing Patterns for Tokens • As you may have already guessed (or know), the easiest way to specify a token is with a regular expression.

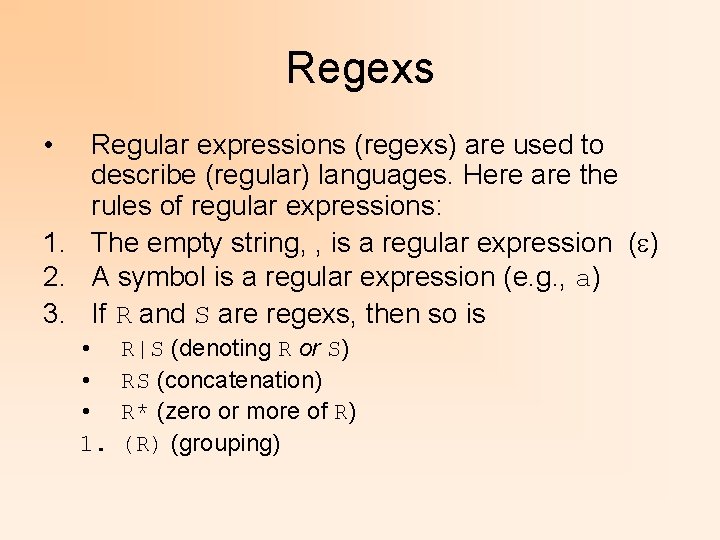

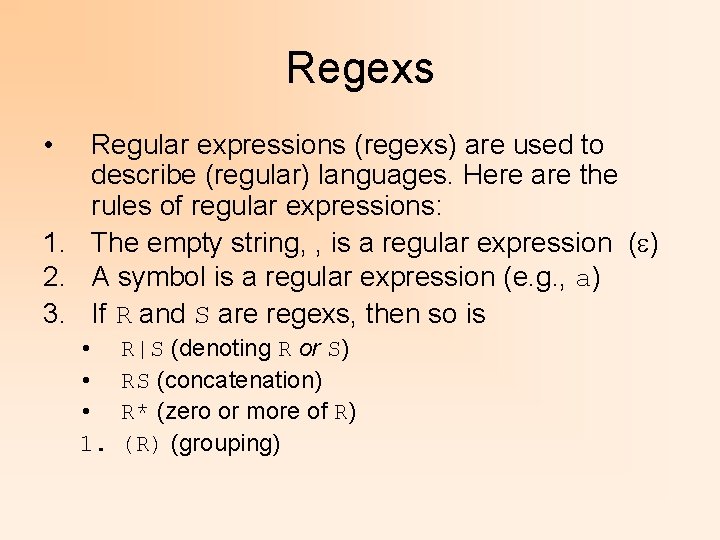

Regexes

• Regular expressions (regexes) are used to describe (regular) languages. Here are the rules of regular expressions:
1. The empty string, ε, is a regular expression.
2. A symbol is a regular expression (e.g., a).
3. If R and S are regexes, then so are:
• R|S (denoting R or S)
• RS (concatenation)
• R* (zero or more of R)
• (R) (grouping)

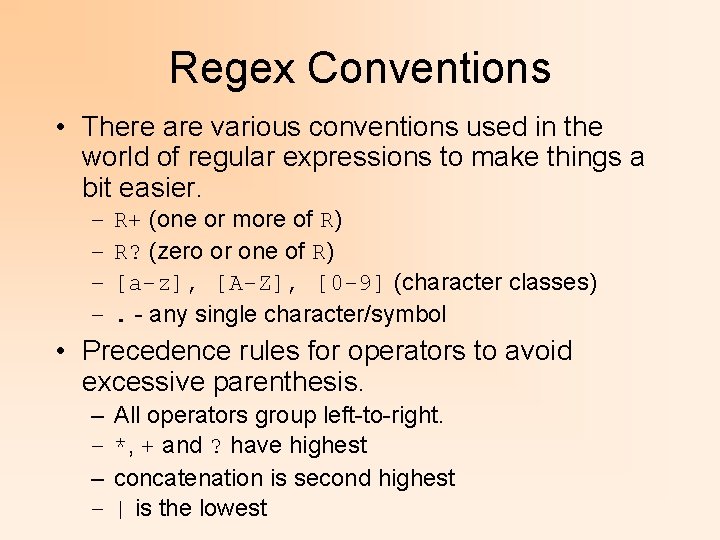

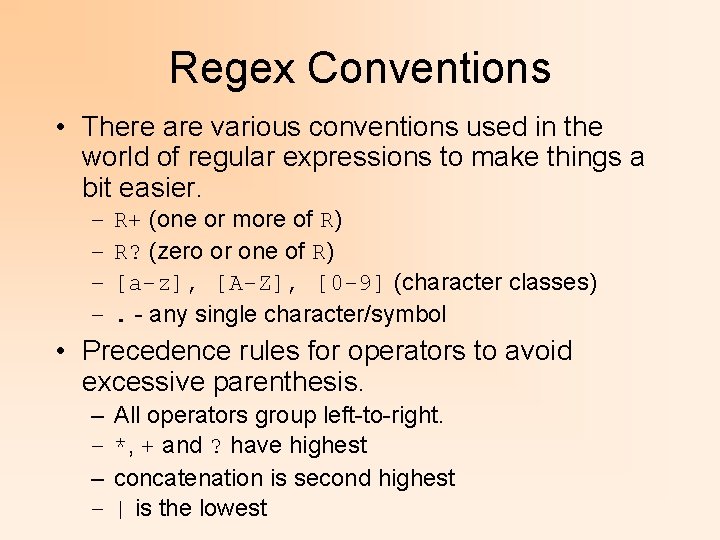

Regex Conventions

• There are various conventions used in the world of regular expressions to make things a bit easier.
– R+ (one or more of R)
– R? (zero or one of R)
– [a-z], [A-Z], [0-9] (character classes)
– . (any single character/symbol)
• Precedence rules for operators avoid excessive parentheses.
– All operators group left-to-right.
– *, + and ? have the highest precedence
– concatenation is second highest
– | is the lowest

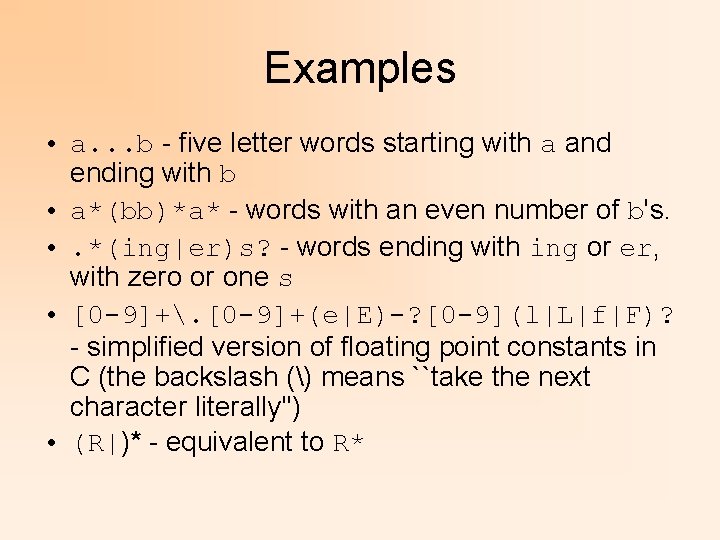

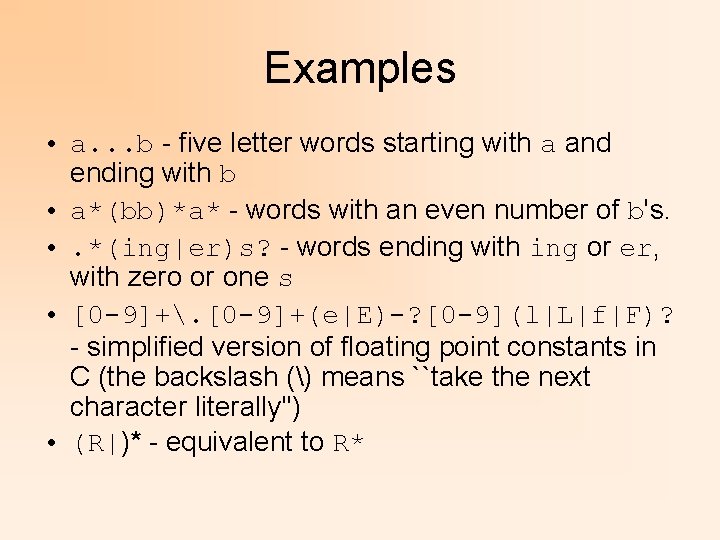

Examples

• a...b - five-letter words starting with a and ending with b
• a*(bb)*a* - words made of an even-length run of b's with optional a's on either side (this does not cover every word with an even number of b's: bab is not matched)
• .*(ing|er)s? - words ending with ing or er, optionally followed by one s
• [0-9]+\.[0-9]+(e|E)-?[0-9](l|L|f|F)? - simplified version of floating point constants in C (the backslash (\) means "take the next character literally")
• (R|ε)* - equivalent to R*
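The example patterns can be tried out directly with Python's `re` module, whose syntax agrees with the conventions above for these operators. This is a small sketch; the helper name `matches` and the sample words are assumptions chosen for illustration.

```python
import re


def matches(pattern, word):
    """True if the whole word is described by the pattern."""
    return re.fullmatch(pattern, word) is not None


# Simplified C floating point constant, transcribed from the example above.
FLOAT = r"[0-9]+\.[0-9]+(e|E)-?[0-9](l|L|f|F)?"

samples = {
    "a...b":        ["adlib", "ab"],
    "a*(bb)*a*":    ["abba", "abbba", "bab"],
    ".*(ing|er)s?": ["singers", "sing", "song"],
    FLOAT:          ["3.14e-2f", "1.5E3", "2.0"],
}

for pattern, words in samples.items():
    for word in words:
        print(f"{pattern!r:45} {word!r:12} {matches(pattern, word)}")
```

Two things stand out when running it. `a*(bb)*a*` rejects `bab` even though that word has an even number of b's, and the floating point pattern as written requires an exponent with exactly one digit, so `2.0` does not match.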

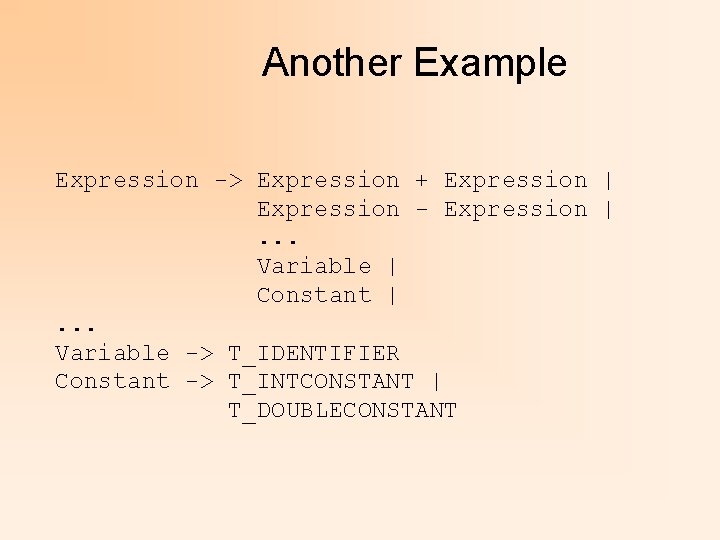

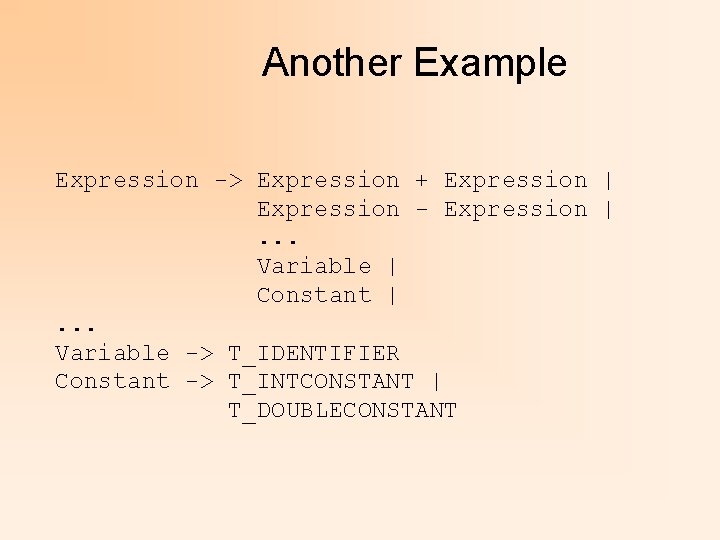

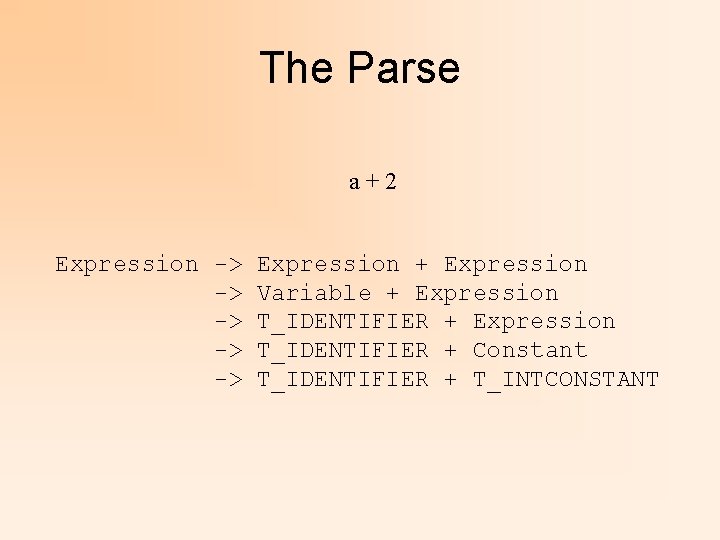

Another Example

    Expression -> Expression + Expression
                | Expression - Expression
                | ...
                | Variable
                | Constant
                | ...
    Variable   -> T_IDENTIFIER
    Constant   -> T_INTCONSTANT | T_DOUBLECONSTANT

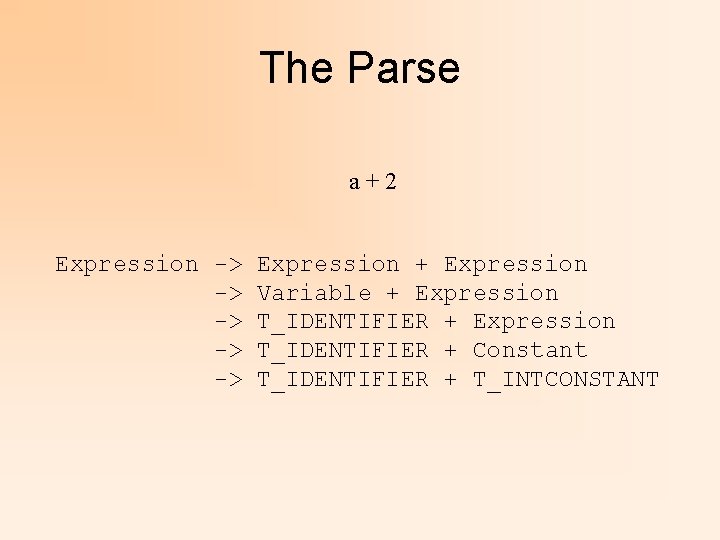

The Parse of a+2

    Expression -> Expression + Expression
               -> Variable + Expression
               -> T_IDENTIFIER + Constant
               -> T_IDENTIFIER + T_INTCONSTANT
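A minimal sketch of building the parse tree for `a+2`. The grammar above is left-recursive and ambiguous, so this sketch uses a loop over operands instead of applying the productions literally; that substitution, the tuple-based tree shape, and the function names are assumptions made for illustration.

```python
import re

# Token names follow the grammar above; the patterns are assumptions.
TOKEN_RE = re.compile(
    r"\s*(?:(?P<T_DOUBLECONSTANT>\d+\.\d+)"
    r"|(?P<T_INTCONSTANT>\d+)"
    r"|(?P<T_IDENTIFIER>[A-Za-z_]\w*)"
    r"|(?P<OP>[+-]))"
)


def tokenize(text):
    tokens, pos = [], 0
    text = text.strip()
    while pos < len(text):
        m = TOKEN_RE.match(text, pos)
        if m is None:
            raise SyntaxError(f"cannot tokenize at offset {pos}")
        tokens.append((m.lastgroup, m.group(m.lastgroup)))
        pos = m.end()
    return tokens


def parse_operand(tokens, i):
    """Variable -> T_IDENTIFIER, Constant -> T_INTCONSTANT | T_DOUBLECONSTANT."""
    kind = tokens[i][0] if i < len(tokens) else "EOF"
    if kind == "T_IDENTIFIER":
        return ("Variable", kind), i + 1
    if kind in ("T_INTCONSTANT", "T_DOUBLECONSTANT"):
        return ("Constant", kind), i + 1
    raise SyntaxError(f"expected a variable or constant, found {kind}")


def parse_expression(text):
    """Build a tree shaped like Expression -> Expression op Expression."""
    tokens = tokenize(text)
    operand, i = parse_operand(tokens, 0)
    tree = ("Expression", operand)
    while i < len(tokens) and tokens[i][0] == "OP":
        op = tokens[i][1]
        right, i = parse_operand(tokens, i + 1)
        tree = ("Expression", tree, op, ("Expression", right))
    if i != len(tokens):
        raise SyntaxError("unexpected token after expression")
    return tree


def frontier(tree):
    """Leaves of the tree, left to right: the last line of the derivation."""
    if isinstance(tree, str):
        return [tree]
    leaves = []
    for child in tree[1:]:
        leaves.extend(frontier(child))
    return leaves
```

For `a+2`, `frontier(parse_expression("a+2"))` gives the terminal string that ends the derivation.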

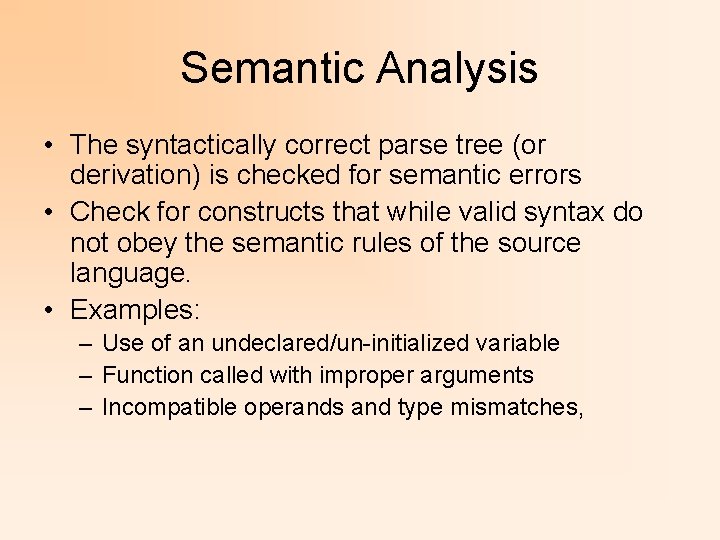

Semantic Analysis

• The syntactically correct parse tree (or derivation) is checked for semantic errors.
• This phase checks for constructs that, while syntactically valid, do not obey the semantic rules of the source language.
• Examples:
– Use of an undeclared or uninitialized variable
– Function called with improper arguments
– Incompatible operands and type mismatches

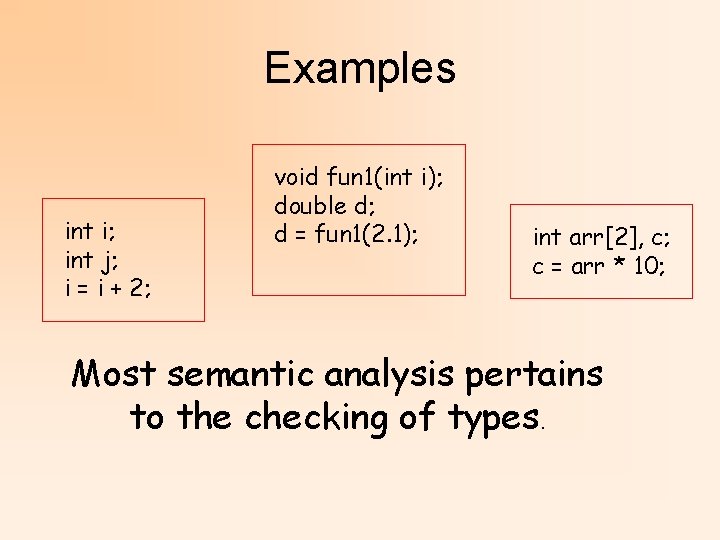

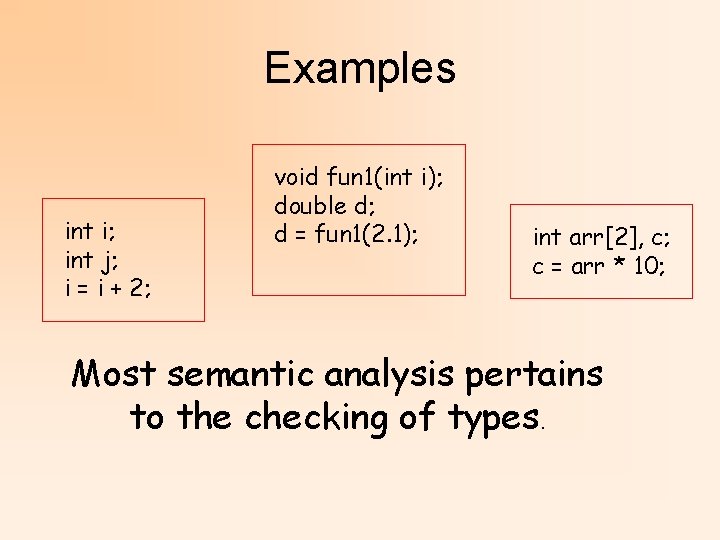

Examples

    int i;
    int j;
    i = i + 2;

    void fun1(int i);
    double d;
    d = fun1(2.1);

    int arr[2], c;
    c = arr * 10;

Most semantic analysis pertains to the checking of types.
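A rough sketch of how a symbol table supports checks like these. The statement encoding (`("decl", type, name)` and `("assign", target, operand_names)`) is a hypothetical stand-in for a real parse tree, and only two of the errors above are modelled: use before initialization and an array used as an arithmetic operand. The function-call case is left out.

```python
from dataclasses import dataclass


@dataclass
class Symbol:
    type: str
    initialized: bool = False


def check(statements):
    """Return a list of semantic error messages for a straight-line program."""
    symbols = {}   # the symbol table: name -> Symbol
    errors = []
    for stmt in statements:
        if stmt[0] == "decl":
            _, type_name, name = stmt
            symbols[name] = Symbol(type_name)
        elif stmt[0] == "assign":
            _, target, operands = stmt
            for name in operands:
                if name not in symbols:
                    errors.append(f"{name}: undeclared")
                elif symbols[name].type.endswith("[]"):
                    errors.append(f"{name}: array used as an arithmetic operand")
                elif not symbols[name].initialized:
                    errors.append(f"{name}: used before initialization")
            if target not in symbols:
                errors.append(f"{target}: undeclared")
            else:
                symbols[target].initialized = True
    return errors


# int i; int j; i = i + 2;
first = [("decl", "int", "i"), ("decl", "int", "j"), ("assign", "i", ["i"])]
# int arr[2], c; c = arr * 10;
third = [("decl", "int[]", "arr"), ("decl", "int", "c"), ("assign", "c", ["arr"])]
print(check(first))
print(check(third))
```

Both programs are syntactically fine. The errors only appear once declarations and types are looked up, which is why this work happens after parsing.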

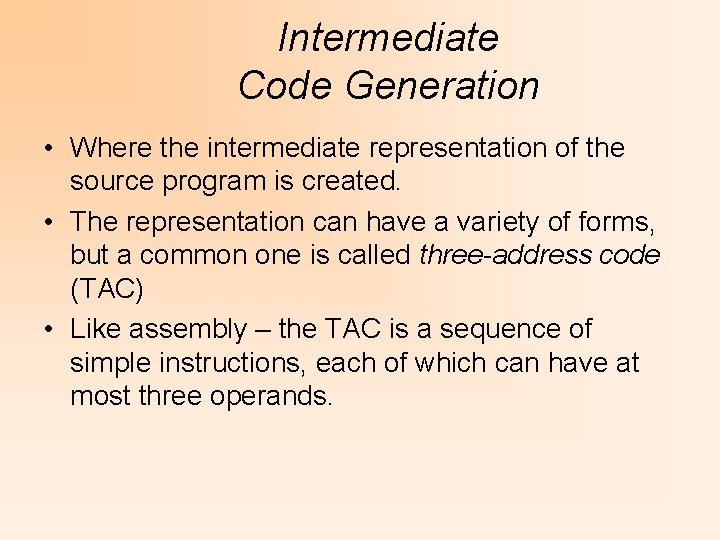

Intermediate Code Generation

• This is where the intermediate representation of the source program is created.
• The representation can have a variety of forms, but a common one is called three-address code (TAC).
• Like assembly, the TAC is a sequence of simple instructions, each of which can have at most three operands.

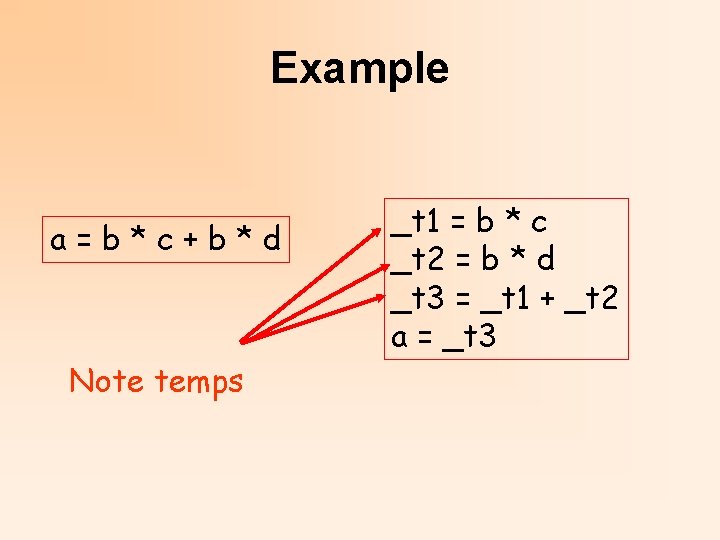

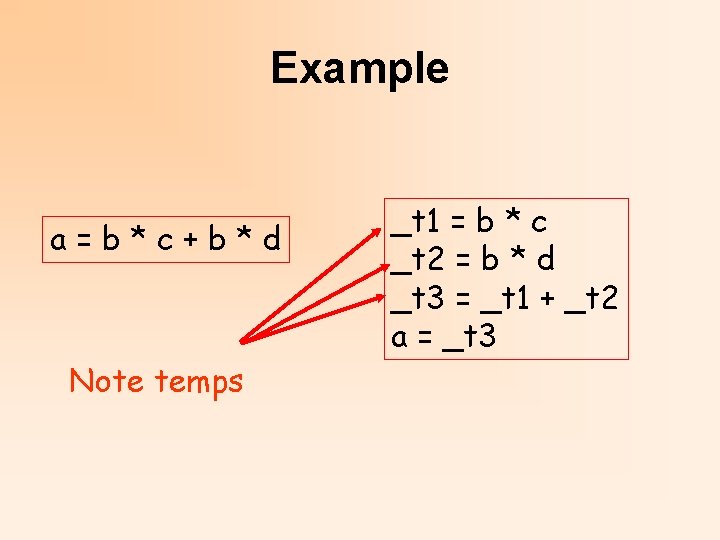

Example

    a = b * c + b * d

    _t1 = b * c
    _t2 = b * d
    _t3 = _t1 + _t2
    a = _t3

Note the temps (_t1, _t2, _t3).
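A small sketch of how TAC like this can be produced by a post-order walk over an expression tree, allocating a fresh temporary for each operator. As an assumption for brevity, it borrows Python's own `ast` module as the front end (the statement happens to be valid Python); a real compiler would walk its own parse tree. The function name `to_tac` is hypothetical.

```python
import ast
from itertools import count

OPS = {ast.Add: "+", ast.Sub: "-", ast.Mult: "*", ast.Div: "/"}


def to_tac(statement):
    """Translate 'name = expression' into a list of three-address instructions."""
    node = ast.parse(statement).body[0]
    if (not isinstance(node, ast.Assign) or len(node.targets) != 1
            or not isinstance(node.targets[0], ast.Name)):
        raise ValueError("expected a single assignment 'name = expression'")
    code = []
    fresh = count(1)  # numbering for temporaries _t1, _t2, ...

    def emit(expr):
        """Emit code for expr and return the name that holds its value."""
        if isinstance(expr, ast.Name):
            return expr.id
        if isinstance(expr, ast.Constant):
            return repr(expr.value)
        if isinstance(expr, ast.BinOp) and type(expr.op) in OPS:
            left = emit(expr.left)      # operands first (post-order)
            right = emit(expr.right)
            temp = f"_t{next(fresh)}"
            code.append(f"{temp} = {left} {OPS[type(expr.op)]} {right}")
            return temp
        raise ValueError("unsupported expression")

    result = emit(node.value)
    code.append(f"{node.targets[0].id} = {result}")
    return code


for line in to_tac("a = b*c + b*d"):
    print(line)
```

Each instruction has one operator and at most three addresses, and every intermediate value gets its own temporary.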

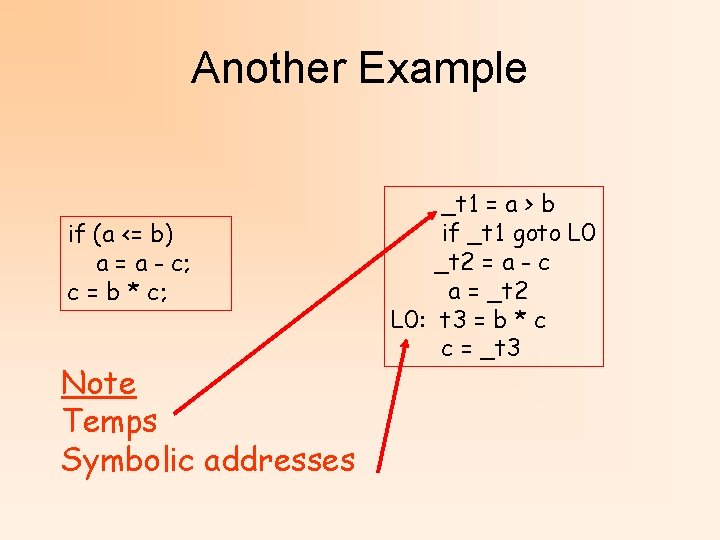

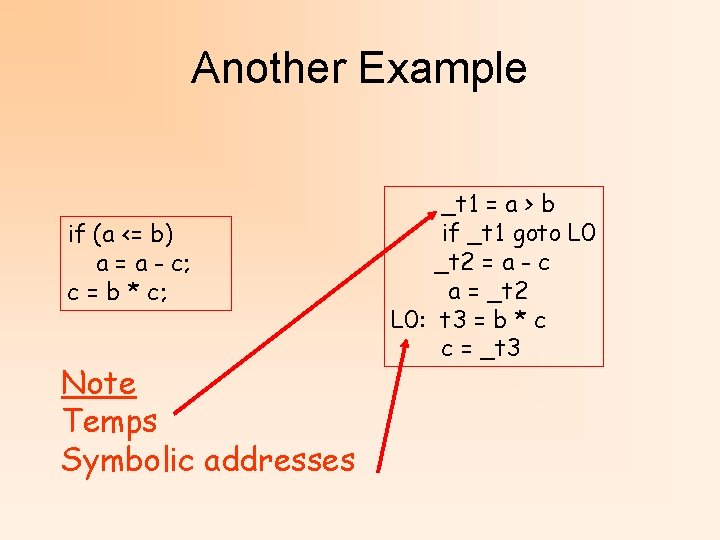

Another Example

    if (a <= b)
        a = a - c;
    c = b * c;

    _t1 = a > b
    if _t1 goto L0
    _t2 = a - c
    a = _t2
    L0: _t3 = b * c
    c = _t3

Note the temps (_t1, _t2, _t3) and the symbolic addresses (L0).

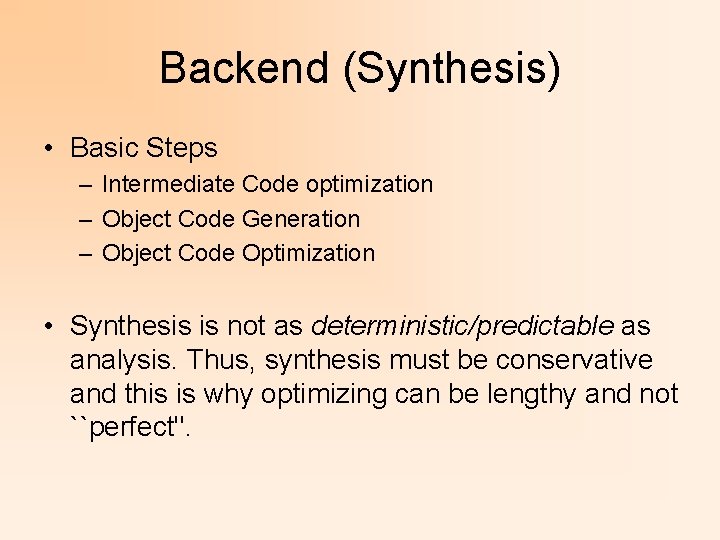

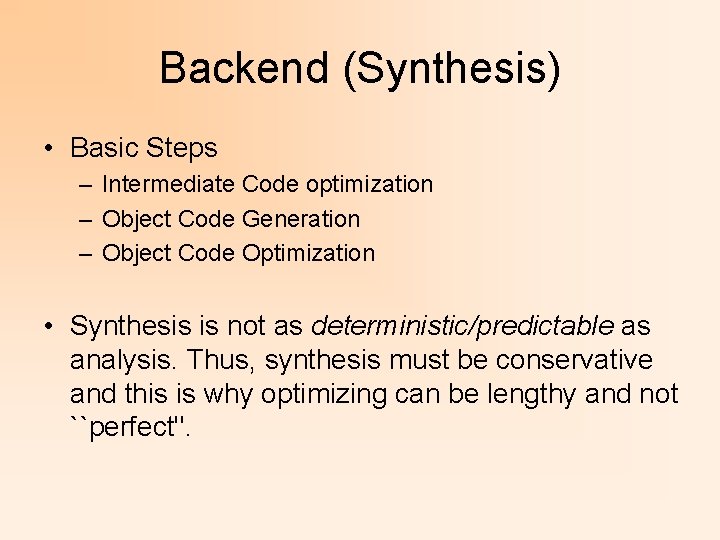

Backend (Synthesis)

• Basic Steps
– Intermediate Code Optimization
– Object Code Generation
– Object Code Optimization
• Synthesis is not as deterministic/predictable as analysis. Thus, synthesis must be conservative, which is why optimizing can be lengthy and not "perfect".

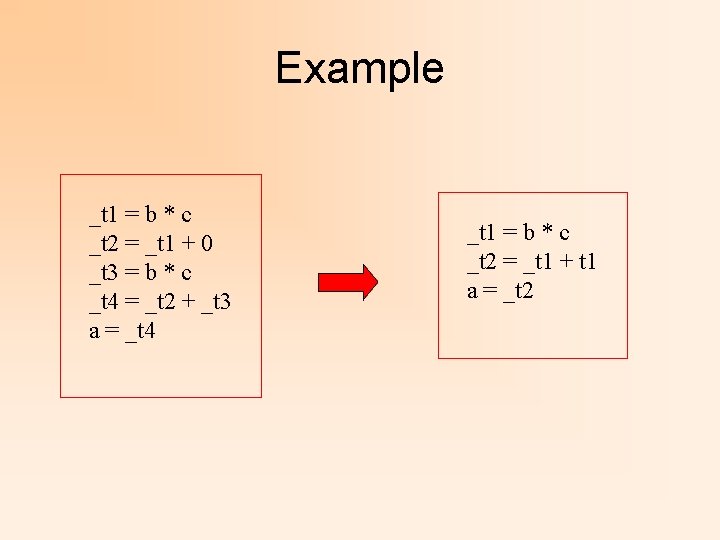

Intermediate Code Optimization

• Input is IR, output is optimized IR
• What are some of the optimizations that can be performed?
– Algebraic simplifications (*1, /1, *0, factoring, etc.)
– Moving invariant code out of loops
– Removal of isolated (unreachable) code
– Removing variables that are not used

IR Optimization

• Optimizations take place both on the IR and on the actual machine code.
• However, the optimizations done at the IR stage can be applied to any program, regardless of architecture.
• The optimizations done on machine/object code usually exploit some feature of the target architecture.
• What does this say about a JIT compilation (JITC) approach?

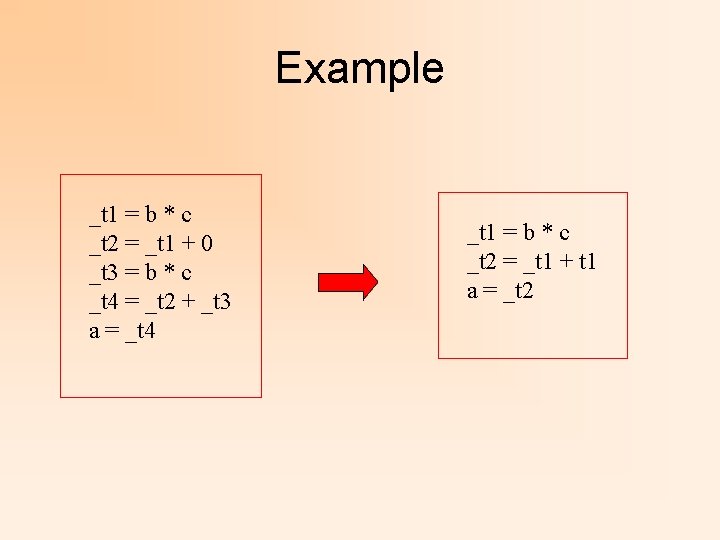

Example

Before optimization:

    _t1 = b * c
    _t2 = _t1 + 0
    _t3 = b * c
    _t4 = _t2 + _t3
    a = _t4

After optimization:

    _t1 = b * c
    _t2 = _t1 + _t1
    a = _t2
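The rewrite in this example can be sketched as one pass that combines algebraic simplification (`x + 0`, `x * 1`), common subexpression elimination, and copy propagation. This is an illustrative sketch rather than a general optimizer: it assumes straight-line code in which every name is assigned at most once, and the function names are hypothetical.

```python
import re


def is_temp(name):
    return name.startswith("_t")


def optimize(code):
    """Simplify a list of 'dst = x' / 'dst = x op y' instructions."""
    copies = {}     # temp -> the name or constant it merely duplicates
    available = {}  # (lhs, op, rhs) -> name that already holds that value
    out = []
    for line in code:
        parts = line.split()
        dst = parts[0]
        # Replace operands that are known to be copies of something else.
        operands = [copies.get(p, p) for p in parts[2:]]
        if len(operands) == 3:
            lhs, op, rhs = operands
            if (op == "+" and rhs == "0") or (op == "*" and rhs == "1"):
                operands = [lhs]                          # x + 0, x * 1
            elif (op == "+" and lhs == "0") or (op == "*" and lhs == "1"):
                operands = [rhs]                          # 0 + x, 1 * x
            elif (lhs, op, rhs) in available:
                operands = [available[(lhs, op, rhs)]]    # already computed
        if len(operands) == 1 and is_temp(dst):
            copies[dst] = operands[0]   # drop the copy, remember the alias
            continue
        if len(operands) == 3:
            available[tuple(operands)] = dst
        out.append(f"{dst} = {' '.join(operands)}")
    return out


def renumber(code):
    """Rename the surviving temporaries to _t1, _t2, ... in order of first use."""
    names = {}

    def new_name(match):
        return names.setdefault(match.group(), f"_t{len(names) + 1}")

    return [re.sub(r"_t\d+", new_name, line) for line in code]


before = [
    "_t1 = b * c",
    "_t2 = _t1 + 0",
    "_t3 = b * c",
    "_t4 = _t2 + _t3",
    "a = _t4",
]
for line in renumber(optimize(before)):
    print(line)
```

None of these rewrites depends on the target machine, which is why they can be done at the IR level. The single-assignment assumption matters: if `b` or `c` were reassigned between the two `b * c` computations, reusing `_t1` would be wrong.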

Object Code Generation

• The output of this stage is machine or assembly code.
• Variables get mapped to memory locations (variables are just a shorthand for those anyway).
• Symbolic instructions are replaced with actual machine instructions.

Object Code Optimization

• May follow code generation
– Optional: only on demand
– Variable
– Like IR optimization, may be expensive
– Levels
• Exploits machine detail
– Examples:
• Register pools
• Instruction pipelining