Chapter 21 Evaluating Systems Dr Wayne Summers Department

- Slides: 17

Chapter 21: Evaluating Systems
Dr. Wayne Summers
Department of Computer Science
Columbus State University
Summers_wayne@colstate.edu
http://csc.colstate.edu/summers

Goals of Formal Evaluation

- Provide a set of requirements defining the security functionality for the system or product.
- Provide a set of assurance requirements that delineate the steps for establishing that the system or product meets its functional requirements.
- Provide a methodology for determining that the product or system meets the functional requirements based on analysis of the assurance evidence.
- Provide a measure of the evaluation result that indicates how trustworthy the product or system is with respect to the security functional requirements defined for it.

TCSEC: 1983-1999

- Trusted Computer System Evaluation Criteria (Orange Book): D, C1, C2, B1, B2, B3, A1
  - Emphasized confidentiality
  - TCSEC functional requirements
    - Discretionary access control (DAC)
    - Object reuse requirements
    - Mandatory access control (MAC) (>= B1)
    - Label requirements (>= B1)
    - Identification and authentication requirements
    - Trusted path requirements (>= B2)
    - Audit requirements

TCSEC: 1983-1999

- TCSEC assurance requirements
  - Configuration management (>= B2)
  - Trusted distribution (A1)
  - System architecture (C1-B3): mandate modularity, minimize complexity, and keep the TCB as small and simple as possible
  - Design specification and verification (>= B1)
  - Testing requirements
  - Product documentation requirements

TCSEC: 1983-1999

- TCSEC evaluation classes
  - C1: discretionary protection
  - C2: controlled access protection
  - B1: labeled security protection
  - B2: structured protection
  - B3: security domains
  - A1: verified protection
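
The class thresholds quoted on the TCSEC slides (for example ">= B1" for mandatory access control) can be read as a lookup over an ordered list of classes. The following is a minimal sketch under that reading; the names `CLASSES`, `MIN_CLASS`, `applies`, and `thresholded_requirements` are hypothetical and are not part of the TCSEC. Only requirements that the slides give an explicit threshold for are included.

```python
# Illustrative sketch only; these names are hypothetical, not TCSEC terminology.
CLASSES = ["D", "C1", "C2", "B1", "B2", "B3", "A1"]  # weakest to strongest

# Lowest class at which each requirement applies, as stated on the slides.
# Requirements listed without a threshold on the slides are left out.
MIN_CLASS = {
    "mandatory access control": "B1",
    "labels": "B1",
    "design specification and verification": "B1",
    "trusted path": "B2",
    "configuration management": "B2",
    "trusted distribution": "A1",
}


def applies(requirement: str, tcsec_class: str) -> bool:
    """Return True if the requirement applies at the given class or above."""
    return CLASSES.index(tcsec_class) >= CLASSES.index(MIN_CLASS[requirement])


def thresholded_requirements(tcsec_class: str) -> list[str]:
    """List the thresholded requirements that apply at the given class."""
    return sorted(r for r in MIN_CLASS if applies(r, tcsec_class))
```

For instance, `thresholded_requirements("B1")` returns the three requirements marked ">= B1", while `thresholded_requirements("C2")` returns an empty list because none of the thresholded requirements start below B1.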

International Efforts and the ITSEC: 1991-2001

- Information Technology Security Evaluation Criteria: European standard since 1991 (levels E0, E1, E2, E3, E4, E5, E6)
  - Did not include tamperproof reference validation mechanisms, process isolation, the principle of least privilege, a well-defined user interface, or a requirement for system integrity
  - Did require assessment of the security measures used for the developer environment during development and maintenance, submission of code, procedures for delivery, and ease-of-use analysis

ITSEC

- E1: required a security target and an informal description of the architecture
- E2: required an informal description of the detailed design, configuration control, and a distribution control process
- E3: more stringent requirements on detailed design and on correspondence between source code and security requirements
- E4: required a formal model of the security policy, a more rigorous structured approach to architectural and detailed design, and a design-level vulnerability analysis
- E5: required correspondence between the detailed design and the source code, and a source-code-level vulnerability analysis
- E6: required extensive use of formal methods

Common Criteria: 1998-Present

- CC: de facto standard for the U.S. and many other countries; ISO Standard 15408
  - TOE (Target of Evaluation): the product or system that is the subject of the evaluation
  - TSP (TOE Security Policy): the set of rules that regulate how assets are managed, protected, and distributed
  - TSF (TOE Security Functions): the hardware, software, and firmware that must be relied on to enforce the TSP (a generalization of the TCSEC's trusted computing base (TCB))

Common Criteria

- CC Protection Profile (PP): an implementation-independent set of security requirements for a category of products or systems that meet specific consumer needs
  - Introduction (PP Identification and PP Overview)
  - Product/System Family Description
  - Product/System Family Security Environment
  - Security Objectives (product/system; environment)
  - IT Security Requirements (functional and assurance)
  - Rationale (objectives and requirements)

Common Criteria

- Security Target (ST): a set of security requirements and specifications to be used as the basis for evaluation of an identified product or system
  - Introduction (ST Identification and ST Overview)
  - Product/System Family Description
  - Product/System Family Security Environment
  - Security Objectives (product/system; environment)
  - IT Security Requirements (functional and assurance)
  - Product/System Summary Specification
  - PP Claims (claims of conformance)
  - Rationale (objectives, requirements, TOE summary specification, PP claims)

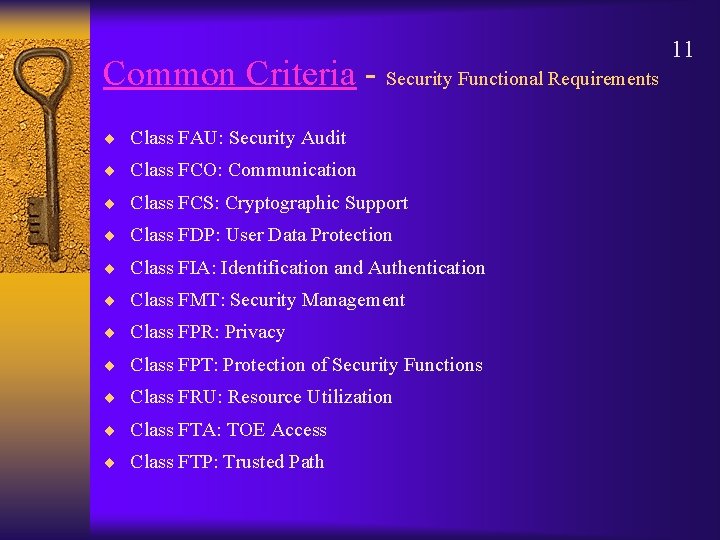

Common Criteria: Security Functional Requirements

- Class FAU: Security Audit
- Class FCO: Communication
- Class FCS: Cryptographic Support
- Class FDP: User Data Protection
- Class FIA: Identification and Authentication
- Class FMT: Security Management
- Class FPR: Privacy
- Class FPT: Protection of Security Functions
- Class FRU: Resource Utilization
- Class FTA: TOE Access
- Class FTP: Trusted Path
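
CC functional components are conventionally written as a class prefix, a family, and a component number, for example `FAU_GEN.1`. As a small sketch of how the class list above could be used, the hypothetical helper below maps such an identifier to its class name. The function name and the dictionary are illustrative assumptions, and the helper only inspects the three-letter class prefix; it does not validate the family or component number.

```python
# Class prefixes and names as listed on the slide.
FUNCTIONAL_CLASSES = {
    "FAU": "Security Audit",
    "FCO": "Communication",
    "FCS": "Cryptographic Support",
    "FDP": "User Data Protection",
    "FIA": "Identification and Authentication",
    "FMT": "Security Management",
    "FPR": "Privacy",
    "FPT": "Protection of Security Functions",
    "FRU": "Resource Utilization",
    "FTA": "TOE Access",
    "FTP": "Trusted Path",
}


def functional_class(component_id: str) -> str:
    """Return the class name for an identifier such as 'FAU_GEN.1'.

    Hypothetical helper: only the three-letter class prefix is checked.
    """
    prefix = component_id.strip().upper()[:3]
    if prefix not in FUNCTIONAL_CLASSES:
        raise ValueError(f"unknown functional class prefix: {prefix!r}")
    return FUNCTIONAL_CLASSES[prefix]
```

With this sketch, `functional_class("FAU_GEN.1")` yields "Security Audit", and an identifier with an unlisted prefix raises `ValueError`.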

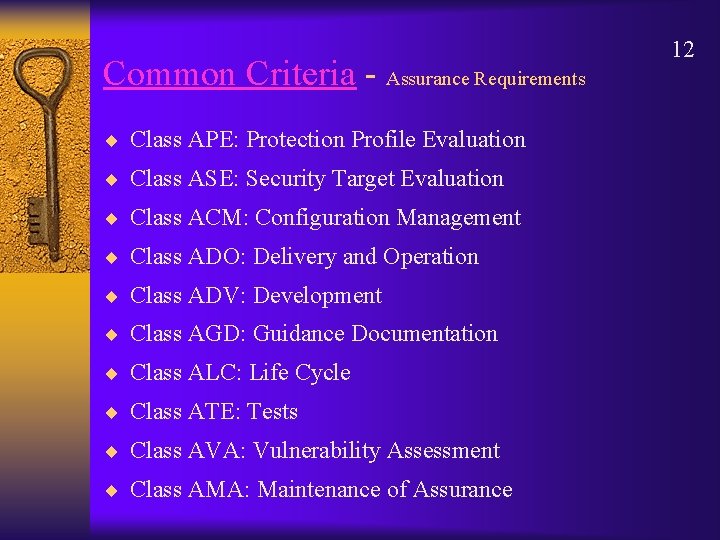

Common Criteria: Assurance Requirements

- Class APE: Protection Profile Evaluation
- Class ASE: Security Target Evaluation
- Class ACM: Configuration Management
- Class ADO: Delivery and Operation
- Class ADV: Development
- Class AGD: Guidance Documentation
- Class ALC: Life Cycle
- Class ATE: Tests
- Class AVA: Vulnerability Assessment
- Class AMA: Maintenance of Assurance

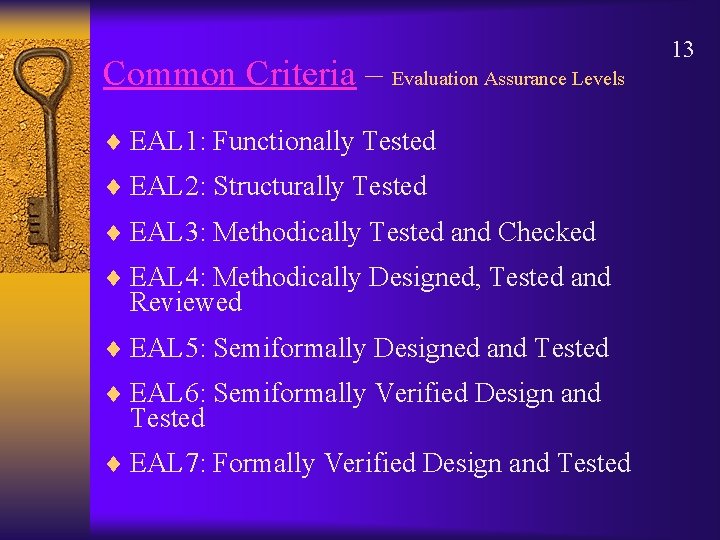

Common Criteria: Evaluation Assurance Levels

- EAL1: Functionally Tested
- EAL2: Structurally Tested
- EAL3: Methodically Tested and Checked
- EAL4: Methodically Designed, Tested and Reviewed
- EAL5: Semiformally Designed and Tested
- EAL6: Semiformally Verified Design and Tested
- EAL7: Formally Verified Design and Tested
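
Because the seven EALs form an ordered scale, a procurement check such as "does this product's evaluated level meet the level we require?" reduces to an integer comparison. The sketch below models that with `IntEnum`; the enum member names are derived from the level titles above, and `meets` is a hypothetical helper. It deliberately ignores augmented levels and says nothing about which functional requirements were evaluated.

```python
from enum import IntEnum


class EAL(IntEnum):
    """Evaluation Assurance Levels, ordered from least to most assurance."""
    FUNCTIONALLY_TESTED = 1
    STRUCTURALLY_TESTED = 2
    METHODICALLY_TESTED_AND_CHECKED = 3
    METHODICALLY_DESIGNED_TESTED_AND_REVIEWED = 4
    SEMIFORMALLY_DESIGNED_AND_TESTED = 5
    SEMIFORMALLY_VERIFIED_DESIGN_AND_TESTED = 6
    FORMALLY_VERIFIED_DESIGN_AND_TESTED = 7


def meets(claimed: EAL, required: EAL) -> bool:
    """Hypothetical check: is the claimed level at least the required one?"""
    return claimed >= required
```

For example, `meets(EAL(4), EAL(2))` is true and `meets(EAL(2), EAL(4))` is false.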

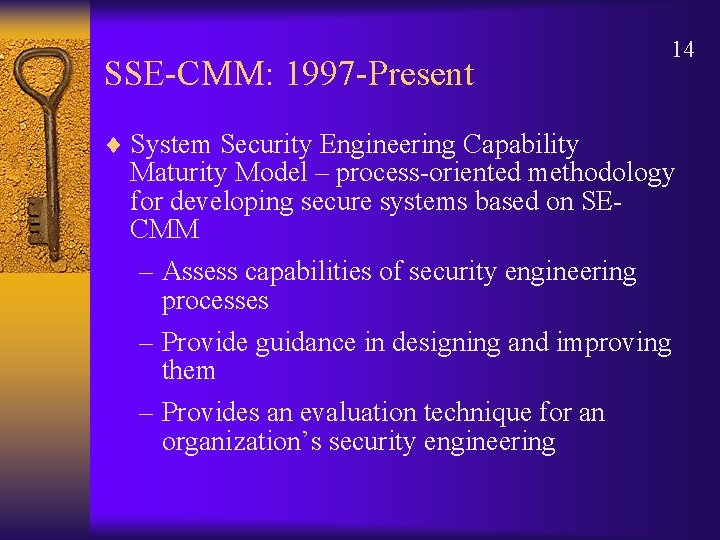

SSE-CMM: 1997-Present

- Systems Security Engineering Capability Maturity Model: a process-oriented methodology for developing secure systems, based on the SE-CMM
  - Assesses the capabilities of security engineering processes
  - Provides guidance in designing and improving them
  - Provides an evaluation technique for an organization's security engineering

SSE-CMM Model

- Process capability: the range of expected results that can be achieved by following the process
- Process performance: a measure of the actual results achieved
- Process maturity: the extent to which a process is explicitly defined, managed, measured, controlled, and effective

SSE-CMM Process Areas

- Administer Security Controls
- Assess Impact
- Assess Security Risks
- Assess Threat
- Assess Vulnerability
- Build Assurance Argument
- Coordinate Security
- Monitor System Security Posture
- Provide Security Input
- Specify Security Needs
- Verify and Validate Security

SSE-CMM Capability Maturity Levels

- Level 1, Performed Informally: base processes are performed
- Level 2, Planned and Tracked: project-level definition, planning, and performance verification issues are addressed
- Level 3, Well-Defined: focus on defining and refining standard practice and coordinating it across the organization
- Level 4, Quantitatively Controlled: focus on establishing measurable quality goals and objectively managing their performance
- Level 5, Continuously Improving: organizational capability and process effectiveness are improved
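
One way to picture an SSE-CMM assessment is as a profile that assigns a capability level to each process area, which can then be compared against a target level. The sketch below assumes the five levels are numbered 1 through 5 in the order listed above; the profile values and the helper `below_target` are hypothetical and are not taken from any real assessment.

```python
# Levels numbered 1 to 5 in the order they appear on the slide (an assumption
# of this sketch).
LEVELS = {
    1: "Performed Informally",
    2: "Planned and Tracked",
    3: "Well-Defined",
    4: "Quantitatively Controlled",
    5: "Continuously Improving",
}


def below_target(profile: dict[str, int], target: int) -> list[str]:
    """Return the process areas whose assessed level is below the target."""
    return sorted(area for area, level in profile.items() if level < target)


# Hypothetical assessment results for three of the process areas.
example_profile = {
    "Assess Threat": 3,
    "Coordinate Security": 1,
    "Assess Impact": 2,
}
```

With this example profile, `below_target(example_profile, 3)` returns `["Assess Impact", "Coordinate Security"]`, the two areas that have not yet reached the Well-Defined level.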