CHAPTER 13 ANALYSIS AND INTERPRETATION: EXPOSITION OF DATA

- Slides: 35

DESCRIPTIVE STATISTICS: WHY SUMMARIZE DATA? ■ A clear presentation of the data is necessary because it allows the reader to critically evaluate the data you are reporting ■ Before we summarize data, LOOK AT the data to identify possible omissions, errors, or other anomalies – Advantage: Make sure all errors have been removed before further analysis is conducted

DESCRIPTIVE STATISTICS: WHY SUMMARIZE DATA? ■ Two common ways to analyze a data set – Describe it – Make decisions about how to interpret it ■ Descriptive statistics – Procedures used to summarize, organize, and make sense of a set of scores or observations, typically presented graphically, in tabular form (in tables), or as summary statistics (single values) – Includes the mean, median, mode, variance, and frequencies – Can use only with quantitative, not qualitative, data

Dealing with Outliers: To Drop or Not to Drop? There is an argument to be made for retaining outliers. By virtue of simply existing, they are representative of the population from which they are drawn. However…

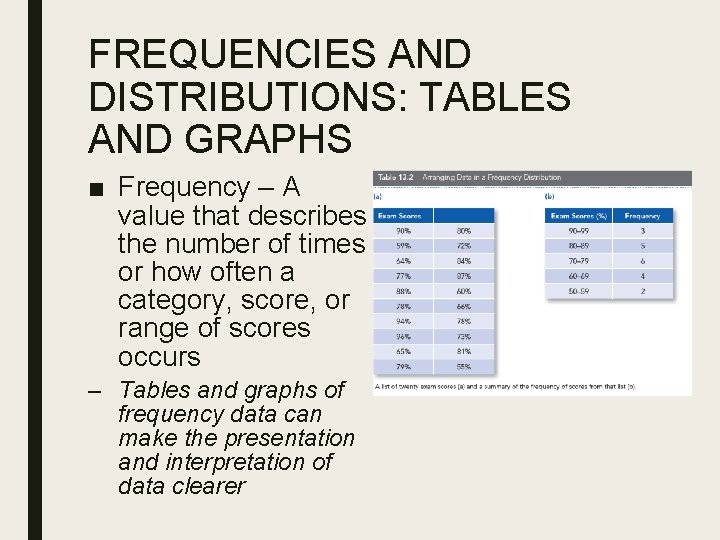

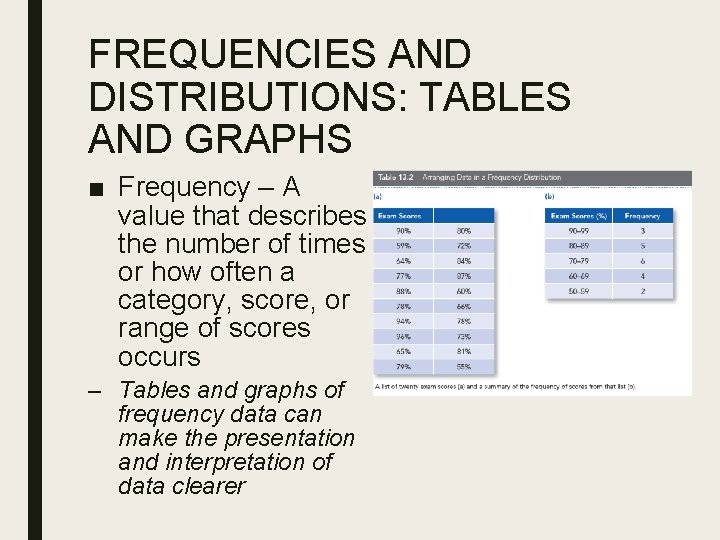

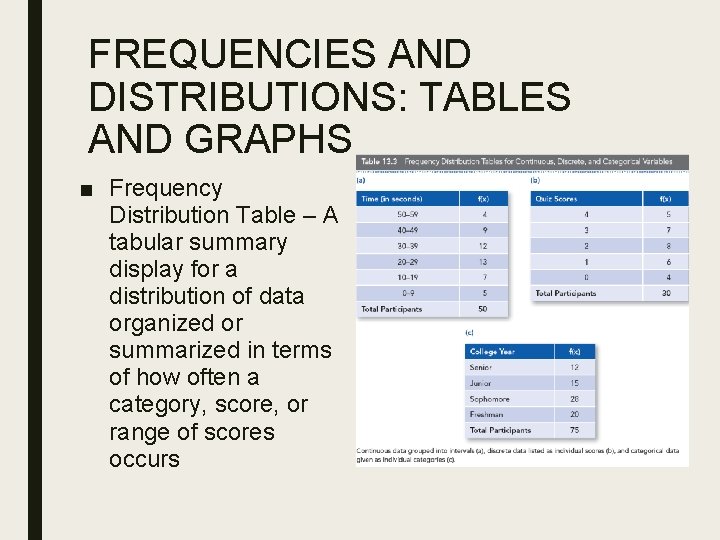

FREQUENCIES AND DISTRIBUTIONS: TABLES AND GRAPHS ■ Frequency – A value that describes the number of times or how often a category, score, or range of scores occurs – Tables and graphs of frequency data can make the presentation and interpretation of data clearer

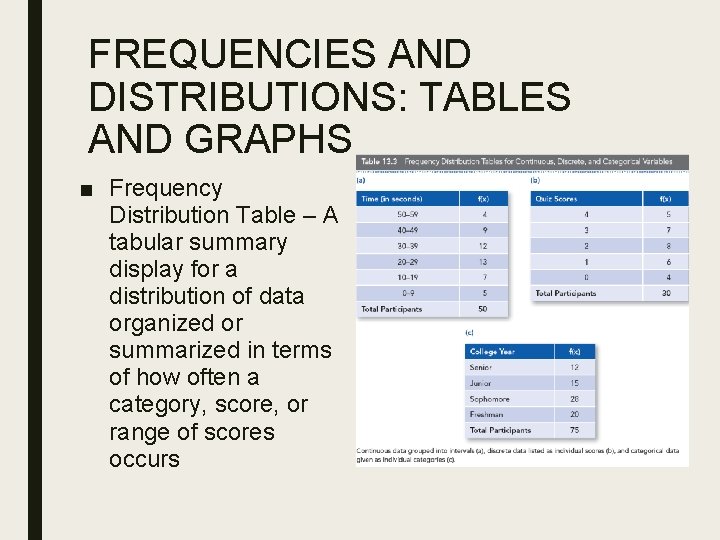

FREQUENCIES AND DISTRIBUTIONS: TABLES AND GRAPHS ■ Frequency Distribution Table – A tabular summary display for a distribution of data organized or summarized in terms of how often a category, score, or range of scores occurs
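A frequency distribution table can be sketched in a few lines of Python. This is only an illustration: the scores are invented, and `frequency_table` is a hypothetical helper name, not something from the slides.

```python
from collections import Counter


def frequency_table(scores):
    """Return (value, frequency) pairs sorted by value."""
    counts = Counter(scores)
    return sorted(counts.items())


# Hypothetical quiz scores, invented for illustration only.
scores = [3, 5, 4, 3, 5, 5, 2, 4, 5, 3]
table = frequency_table(scores)

print("Score  Frequency")
for value, freq in table:
    print(f"{value:>5}  {freq:>9}")
```

The frequencies always sum to the number of scores, which is a quick check that no observation was dropped while tallying.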

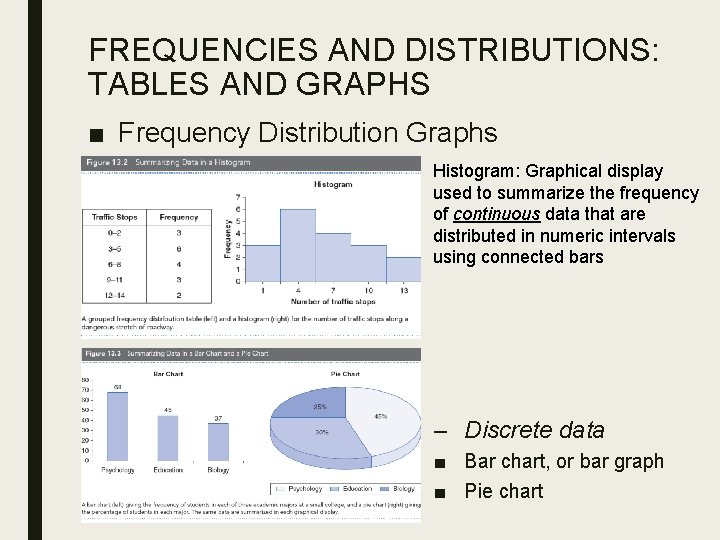

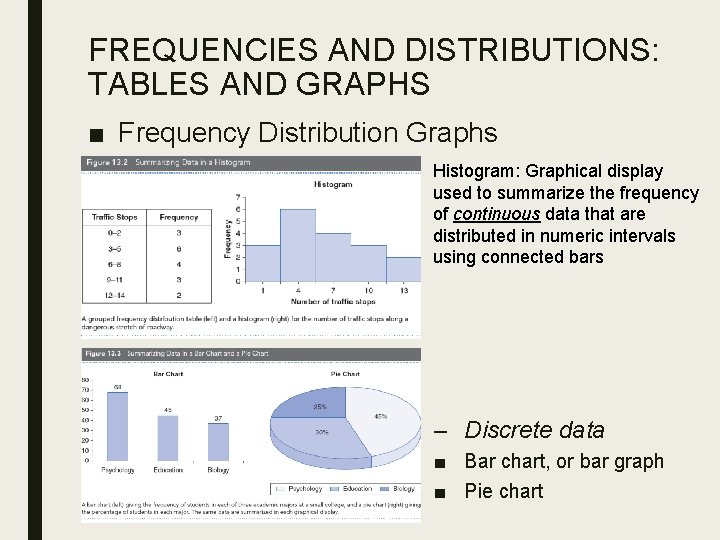

FREQUENCIES AND DISTRIBUTIONS: TABLES AND GRAPHS ■ Frequency Distribution Graphs – Continuous data ■ Histogram: Graphical display used to summarize the frequency of continuous data that are distributed in numeric intervals using connected bars – Discrete data ■ Bar chart, or bar graph ■ Pie chart

MEASURES OF CENTRAL TENDENCY ■ Central tendency – Statistical measures for locating a single score that tends to be near the center of a distribution and is more representative or descriptive of all scores in a distribution – Although we lose some meaning anytime we reduce a set of data to a single score, statistical measures of central tendency ensure that the single score meaningfully represents a data set – Three measures of central tendency: ■ The mean ■ The median ■ The mode

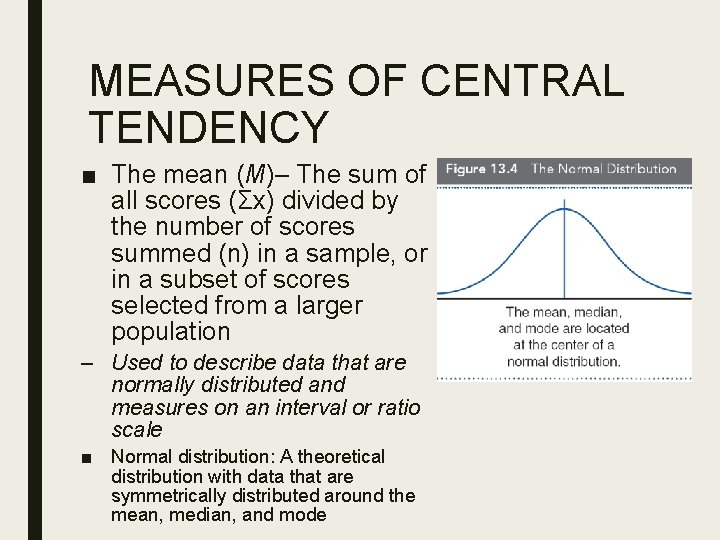

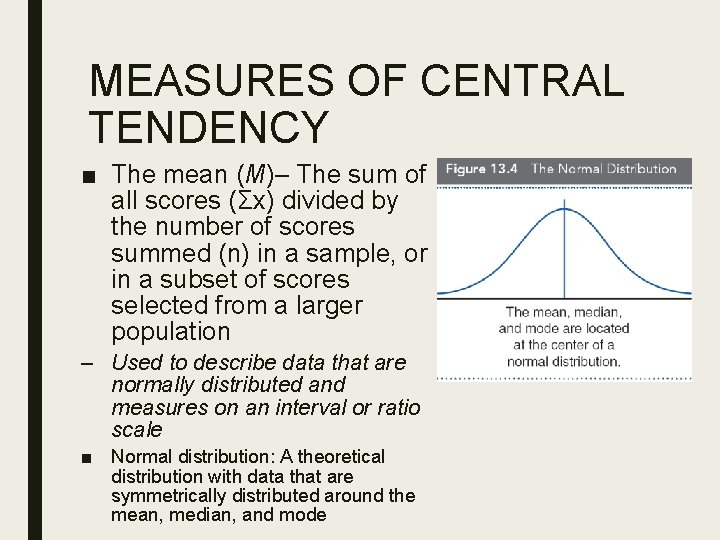

MEASURES OF CENTRAL TENDENCY ■ The mean (M) – The sum of all scores (Σx) divided by the number of scores summed (n) in a sample, or in a subset of scores selected from a larger population: M = Σx / n – Used to describe data that are normally distributed and measured on an interval or ratio scale ■ Normal distribution: A theoretical distribution with data that are symmetrically distributed around the mean, median, and mode

MEASURES OF CENTRAL TENDENCY ■ The median – The middle value in a distribution of data listed in numeric order – Used to describe data that have a skewed distribution and data measured on an ordinal scale ■ Skewed distribution: A distribution of scores that includes outliers or scores that fall substantially above or below most other scores in a data set ■ The mode – The value in a data set that occurs most often or most frequently – Can be used to describe data in any distribution, so long as one or more scores occur most often – The mode is rarely used as the sole way to describe data – Typically used to describe data in a modal distribution and data measured on a nominal scale
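The three measures of central tendency can be compared on one small data set. The sketch below uses invented scores with one deliberate outlier (40) to show why the median is preferred for skewed data; it relies only on Python's standard `statistics` module.

```python
import statistics

# Hypothetical scores, invented for illustration; 40 is a deliberate outlier.
scores = [2, 3, 3, 4, 5, 6, 40]

mean = sum(scores) / len(scores)    # M = sum of scores / number of scores
median = statistics.median(scores)  # middle value of the sorted scores
mode = statistics.mode(scores)      # value that occurs most often

print(f"mean={mean}, median={median}, mode={mode}")
```

The single outlier pulls the mean well above most of the scores, while the median and mode stay close to where the bulk of the data sit.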

MEASURES OF VARIABILITY ■ Measures of central tendency inform us only of scores that tend to be near the center of a distribution, but do not inform us of all other scores in a distribution ■ The most common procedure for locating all other scores is to identify the mean and then compute the variability of scores from the mean – Variability: A measure of the dispersion or spread of scores in a distribution that ranges from 0 to +∞ ■ Variability can never be negative ■ Two key measures of variability are: – Variance – Standard deviation

MEASURES OF VARIABILITY ■ The variance – Sample variance (s²): A measure of variability for the average squared distance that scores in a sample deviate from the sample mean ■ A deviation is a measure of distance – Sum of squares (SS): The sum of the squared deviations of scores from the mean; it is the value placed in the numerator of the sample variance formula ■ SS = Σ(x – M)² ■ To find the average squared distance of scores from the mean, divide by the number of scores ■ However, dividing by the number of scores, or sample size, will underestimate the variance of scores in a population ■ The solution is to divide by one less than the number of scores or deviations summed – Degrees of freedom (df) for sample variance: One less than the sample size, or n – 1 ■ df = n – 1 ■ s² = SS / df = Σ(x – M)² / (n – 1)
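The sum of squares and the sample variance can be worked through step by step. This is a minimal sketch on invented scores; `sum_of_squares` and `sample_variance` are hypothetical helper names, and the result is cross-checked against `statistics.variance`, which also divides by n – 1.

```python
import statistics


def sum_of_squares(scores):
    """SS: sum of squared deviations of each score from the mean."""
    m = sum(scores) / len(scores)
    return sum((x - m) ** 2 for x in scores)


def sample_variance(scores):
    """Sample variance: SS divided by the degrees of freedom (n - 1)."""
    df = len(scores) - 1
    return sum_of_squares(scores) / df


# Hypothetical scores, invented for illustration only.
scores = [2, 4, 4, 6, 9]

ss = sum_of_squares(scores)
s2 = sample_variance(scores)
divided_by_n = ss / len(scores)  # smaller value: the underestimate the slide warns about

print(f"SS={ss}, s2={s2}, SS/n={divided_by_n}")
print("statistics.variance:", statistics.variance(scores))
```

Dividing SS by n gives a smaller number than dividing by n – 1, which is the underestimation that the degrees-of-freedom correction addresses.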

MEASURES OF VARIABILITY ■ An advantage of the sample variance is that its interpretation is clear: The larger the sample variance, the farther that scores deviate from the mean on average ■ One limitation is that the average distance of scores from the mean is squared – To find the distance, and not the squared distance, of scores from the mean we need a new measure of variability called the standard deviation

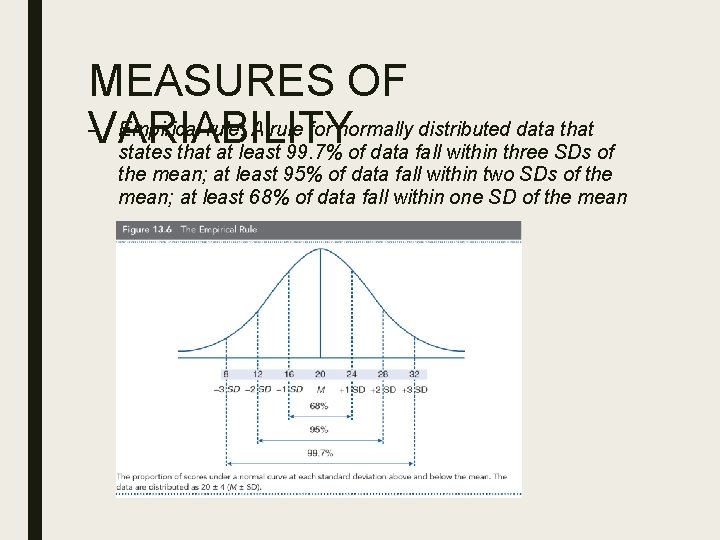

MEASURES OF VARIABILITY ■ The standard deviation – Sample standard deviation (SD): Measure of variability for the average distance that scores in a sample deviate from the sample mean; it is computed by taking the square root of the sample variance ■ The SD is most informative for the normal distribution ■ For a normal distribution, over 99% of all scores will fall within three SDs of the mean
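Because the SD is the square root of the sample variance, it is expressed in the same units as the scores themselves. A short sketch on invented scores, using only Python's standard library:

```python
import math
import statistics

# Hypothetical scores, invented for illustration only.
scores = [2, 4, 4, 6, 9]

variance = statistics.variance(scores)  # sample variance, n - 1 in the denominator
sd = math.sqrt(variance)                # SD = square root of the sample variance

print(f"variance={variance}, SD={sd:.3f}")
print(f"statistics.stdev gives {statistics.stdev(scores):.3f}")
```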

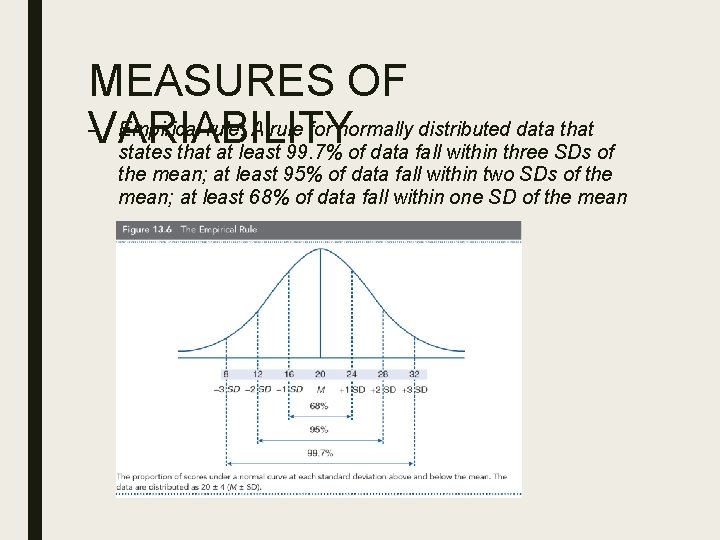

MEASURES OF VARIABILITY – Empirical rule: A rule for normally distributed data that states that at least 99.7% of data fall within three SDs of the mean; at least 95% of data fall within two SDs of the mean; at least 68% of data fall within one SD of the mean
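The percentages in the empirical rule can be checked against the theoretical normal distribution. The sketch below uses the standard identity that the proportion of a normal distribution within k SDs of the mean equals erf(k / √2); `proportion_within` is a hypothetical helper name.

```python
import math


def proportion_within(k):
    """Proportion of a normal distribution lying within k SDs of the mean."""
    return math.erf(k / math.sqrt(2))


for k in (1, 2, 3):
    print(f"within {k} SD: {proportion_within(k):.4f}")
```

The computed proportions sit just above 68%, 95%, and 99.7%, which is why the rule can be phrased as "at least" those values.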

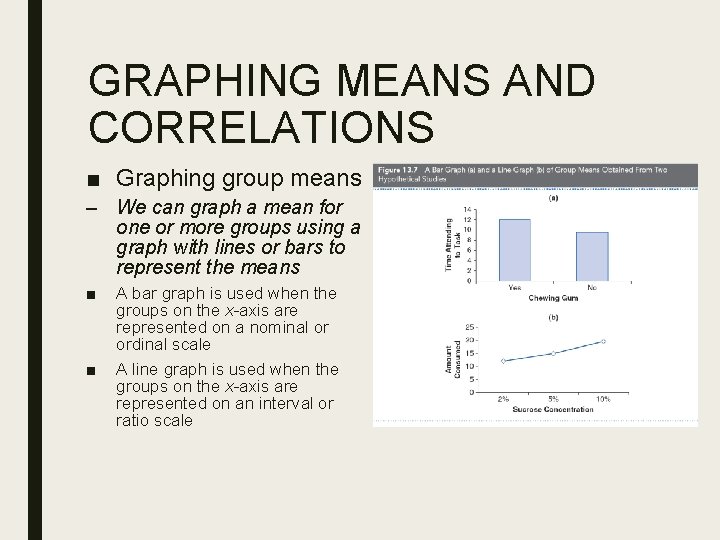

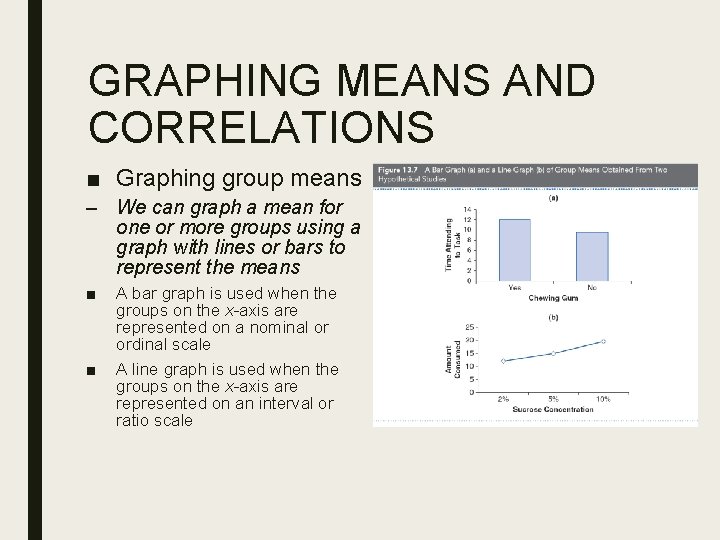

GRAPHING MEANS AND CORRELATIONS ■ Graphing group means – We can graph a mean for one or more groups using a graph with lines or bars to represent the means ■ A bar graph is used when the groups on the x-axis are represented on a nominal or ordinal scale ■ A line graph is used when the groups on the x-axis are represented on an interval or ratio scale

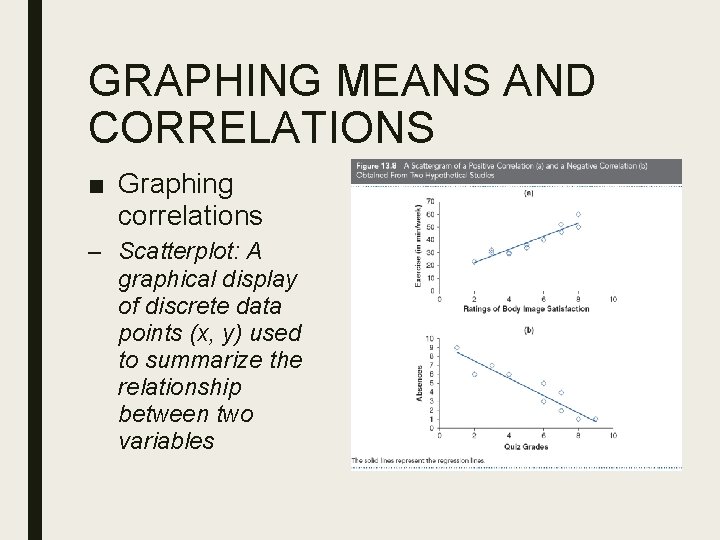

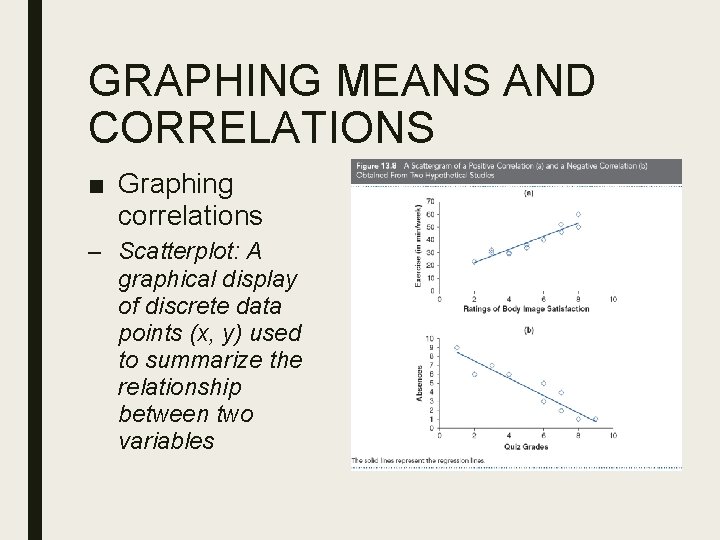

GRAPHING MEANS AND CORRELATIONS ■ Graphing correlations – Scatterplot: A graphical display of discrete data points (x, y) used to summarize the relationship between two variables

ETHICS IN FOCUS: DECEPTION DUE TO THE DISTORTION OF DATA ■ Presenting data can be an ethical concern when the data are distorted in any way ■ When a graph is distorted, it can deceive the reader into thinking differences exist, when in truth differences are negligible (Frankfort-Nachmias & Leon-Guerrero, 2006) – Common distortions to look for in graphs are: ■ Displays with an unlabeled axis ■ Displays in which the vertical axis (y-axis) does not begin with 0

ETHICS IN FOCUS: DECEPTION DUE TO THE DISTORTION OF DATA ■ Distortion can occur when presenting summary statistics – Two common distortions to look for with summary statistics: ■ When data are omitted ■ When differences are described in a way that gives the impression of larger differences than are really meaningful in the data – Some data should naturally be reported together ■ Means and SDs should be reported together; correlations and proportions should be reported with sample size; standard error should be reported anytime data are recorded in a sample

CHAPTER 14 ANALYSIS AND INTERPRETATION: MAKING DECISIONS ABOUT DATA

INFERENTIAL STATISTICS: WHAT ARE WE MAKING INFERENCES ABOUT? ■ Inferential statistics – Procedures that allow researchers to infer or generalize observations made with samples to the larger population from which they were selected – Allows us to use data measured in a sample to draw conclusions about the larger population of interest, which would not otherwise be possible

INFERENTIAL STATISTICS: WHAT ARE WE MAKING INFERENCES ABOUT? ■ Null hypothesis significance testing (NHST) – Inferential statistics include a diverse set of tests of statistical significance, more formally known as NHST – To use NHST, we begin by stating a null hypothesis ■ Null hypothesis, stated as the null: A statement about a population parameter, such as the population mean, that is assumed to be true, but contradicts the research hypothesis – After we state a null hypothesis, we set an alpha level and then determine the critical value of our test statistic, with which we will decide to retain or reject the null hypothesis

INFERENTIAL STATISTICS: WHAT ARE WE MAKING INFERENCES ABOUT? ■ Level of significance, or significance level (alpha level): A criterion of judgment upon which a decision is made regarding the value stated in a null hypothesis ■ The level of significance for most studies in the behavioral sciences is .05, or 5% ■ When the likelihood of obtaining a sample outcome is less than 5% if the null hypothesis were true, we reject the null hypothesis ■ When the likelihood of obtaining a sample outcome is greater than 5% if the null hypothesis were true, we retain the null hypothesis

INFERENTIAL STATISTICS: WHAT ARE WE MAKING INFERENCES ABOUT? – To determine the likelihood or probability of obtaining a sample outcome, if the value stated in the null hypothesis is true, we compute a test statistic ■ Test statistic: A mathematical formula that allows researchers to determine the likelihood of obtaining sample outcomes if the null hypothesis were true – The value of the test statistic can be used to make a decision regarding the null hypothesis – Used to find the p value

INFERENTIAL STATISTICS: WHAT ARE WE MAKING INFERENCES ABOUT? ■ p value: Probability of obtaining a sample outcome if the value stated in the null hypothesis were true. The p value is compared to the level of significance to make a decision about a null hypothesis – The p value is interpreted as error – When p > .05, we retain the null hypothesis and state that an effect or difference failed to reach significance – When p < .05, we reject the null hypothesis and state that an effect or difference reached significance ■ Significance, or statistical significance: Describes a decision made concerning a value stated in the null hypothesis
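The sequence of null hypothesis, test statistic, p value, and decision can be traced in a small example. The slides do not name a specific test here, so the sketch assumes a one-sample z test with a known population SD, chosen only because it needs nothing beyond Python's standard library. All numbers and function names are hypothetical.

```python
import math

ALPHA = 0.05  # level of significance used on the slides


def one_sample_z(sample_mean, null_mean, population_sd, n):
    """Test statistic: distance of the sample mean from the null mean, in standard errors."""
    standard_error = population_sd / math.sqrt(n)
    return (sample_mean - null_mean) / standard_error


def two_tailed_p(z):
    """Two-tailed p value for a z statistic under the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical study: null mean of 100, invented sample results.
z = one_sample_z(sample_mean=104.0, null_mean=100.0, population_sd=15.0, n=64)
p = two_tailed_p(z)

# Decision rule as stated on the slide: reject when p < .05, otherwise retain.
decision = "reject the null" if p < ALPHA else "retain the null"
print(f"z={z:.3f}, p={p:.4f}, decision: {decision}")
```

The decision depends only on comparing p with the alpha level set in advance; the test statistic is the intermediate step used to find p.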

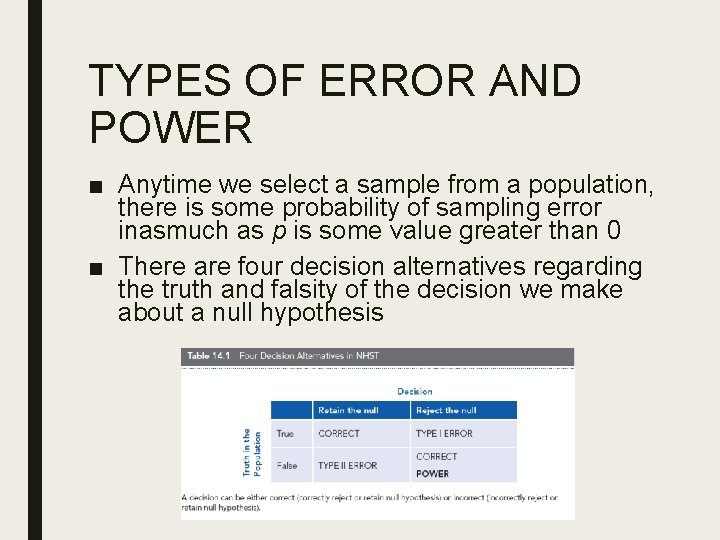

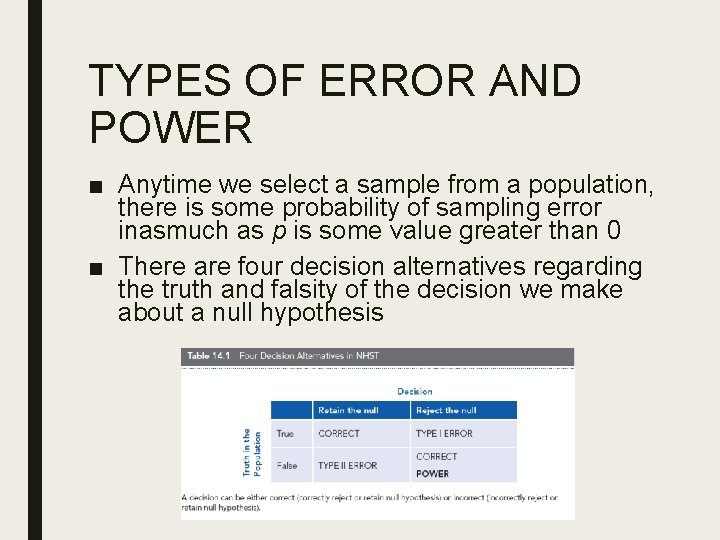

TYPES OF ERROR AND POWER ■ Anytime we select a sample from a population, there is some probability of sampling error inasmuch as p is some value greater than 0 ■ There are four decision alternatives regarding the truth and falsity of the decision we make about a null hypothesis

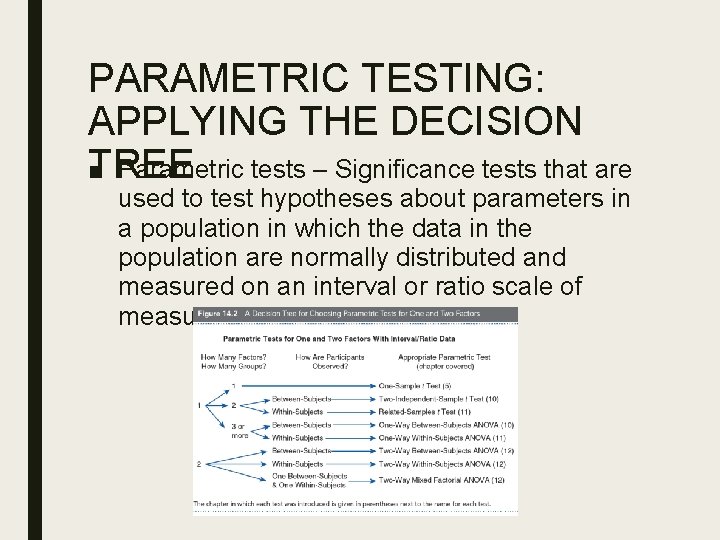

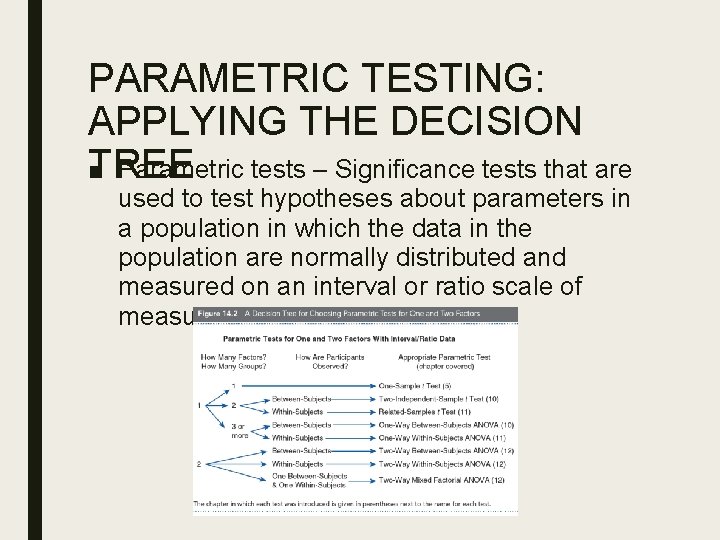

PARAMETRIC TESTING: APPLYING THE DECISION TREE ■ Parametric tests – Significance tests that are used to test hypotheses about parameters in a population in which the data in the population are normally distributed and measured on an interval or ratio scale of measurement

NONPARAMETRIC TESTS: APPLYING THE DECISION TREE ■ Nonparametric tests – Significance tests that are used to test hypotheses about data that can have any type of distribution and to analyze data on a nominal or ordinal scale of measurement – Often called distribution-free tests because the shape of the distribution in the population can be any shape

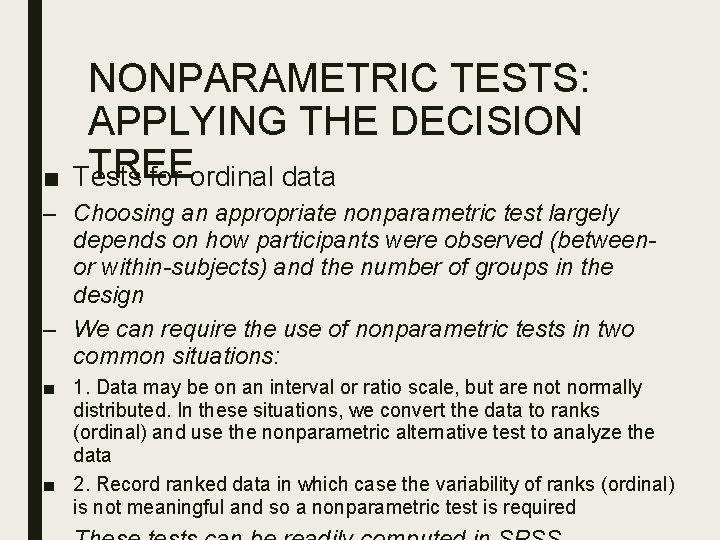

NONPARAMETRIC TESTS: APPLYING THE DECISION TREE ■ Tests for ordinal data – Choosing an appropriate nonparametric test largely depends on how participants were observed (between- or within-subjects) and the number of groups in the design – Nonparametric tests are required in two common situations: ■ 1. Data may be on an interval or ratio scale, but are not normally distributed. In these situations, we convert the data to ranks (ordinal) and use the nonparametric alternative test to analyze the data ■ 2. Data are recorded as ranks, in which case the variability of ranks (ordinal) is not meaningful and so a nonparametric test is required
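Converting interval or ratio scores to ranks, as in the first situation above, can be sketched as follows. The slides do not say how ties are handled; this example assumes the common convention of giving tied scores the average of the ranks they span. The scores and the name `average_ranks` are invented for illustration.

```python
def average_ranks(values):
    """Rank values from 1 (smallest) upward; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = ((start + 1) + (end + 1)) / 2  # mean of the 1-based positions in the run
        for i in order[start:end + 1]:
            ranks[i] = shared
        start = end + 1
    return ranks


# Hypothetical reaction times, invented for illustration; note the tie at 7.0.
scores = [12.5, 7.0, 30.1, 7.0, 18.2]
ranks = average_ranks(scores)
print(ranks)
```

Once the data are ranks, the size of the gap between 18.2 and 30.1 no longer matters, only their order, which is why extreme scores have less influence on rank-based tests.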

NONPARAMETRIC TESTS: APPLYING THE DECISION TREE

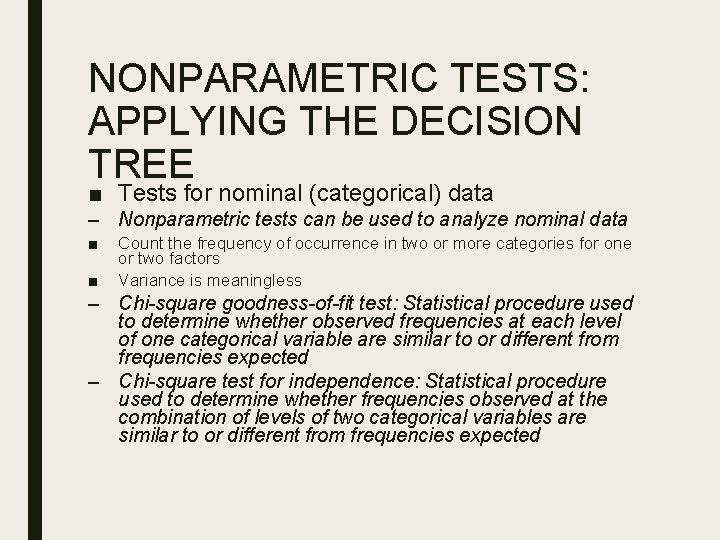

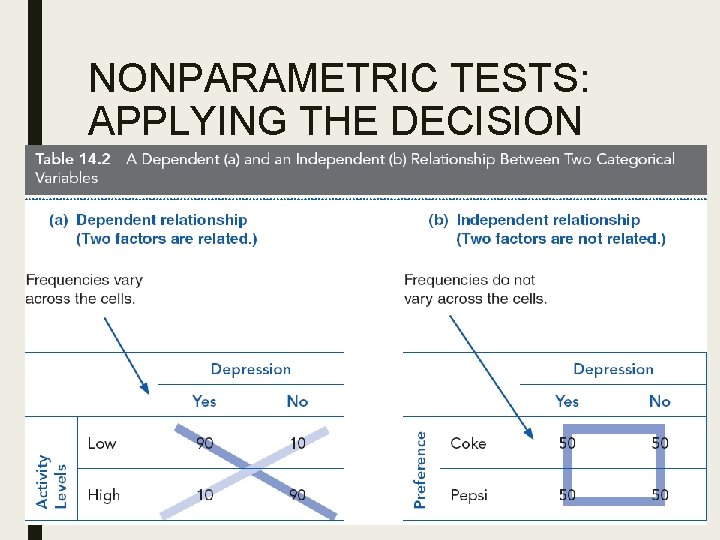

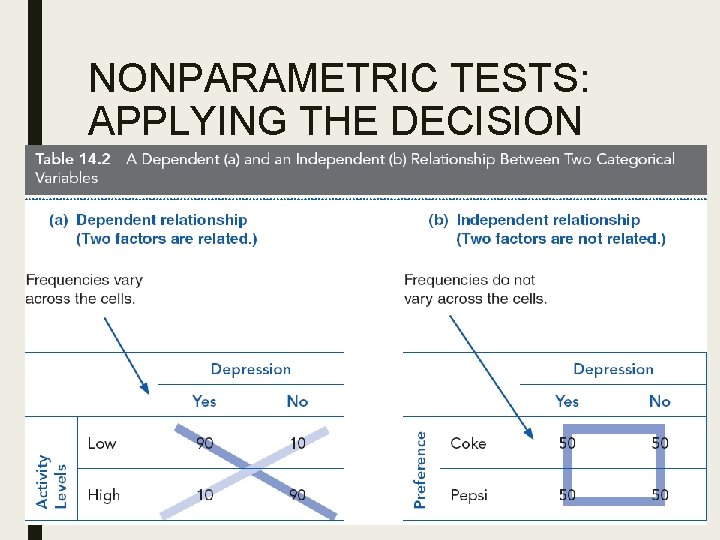

NONPARAMETRIC TESTS: APPLYING THE DECISION TREE ■ Tests for nominal (categorical) data – Nonparametric tests can be used to analyze nominal data ■ Count the frequency of occurrence in two or more categories for one or two factors ■ Variance is meaningless – Chi-square goodness-of-fit test: Statistical procedure used to determine whether observed frequencies at each level of one categorical variable are similar to or different from frequencies expected – Chi-square test for independence: Statistical procedure used to determine whether frequencies observed at the combination of levels of two categorical variables are similar to or different from frequencies expected
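The goodness-of-fit comparison of observed and expected frequencies can be illustrated with the standard chi-square statistic, the sum of (observed – expected)² / expected across categories. The formula is not shown on the slide, and the counts, the null hypothesis of equal preference, and the function name below are assumptions made for the example. The critical value 5.991 is the tabled chi-square value for df = 2 at the .05 level.

```python
def chi_square_statistic(observed, expected):
    """Sum of (observed - expected)^2 / expected across categories."""
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


# Hypothetical counts of participants choosing each of three options.
observed = [30, 50, 40]
total = sum(observed)
expected = [total / len(observed)] * len(observed)  # null: equal frequencies expected

chi2 = chi_square_statistic(observed, expected)
df = len(observed) - 1  # categories minus one for a goodness-of-fit test

CRITICAL_VALUE = 5.991  # chi-square table, df = 2, alpha = .05
decision = "reject the null" if chi2 > CRITICAL_VALUE else "retain the null"
print(f"chi-square={chi2}, df={df}, decision: {decision}")
```

Only frequencies enter the calculation, which is why the test suits nominal data where means and variances are not meaningful.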

NONPARAMETRIC TESTS: APPLYING THE DECISION TREE

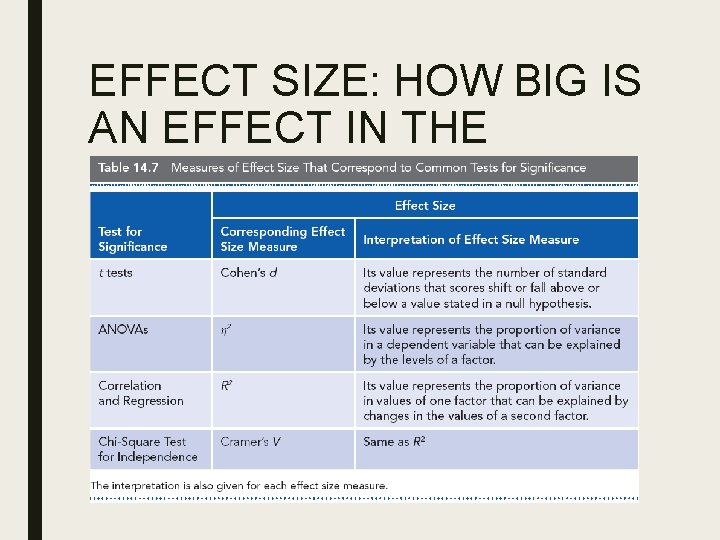

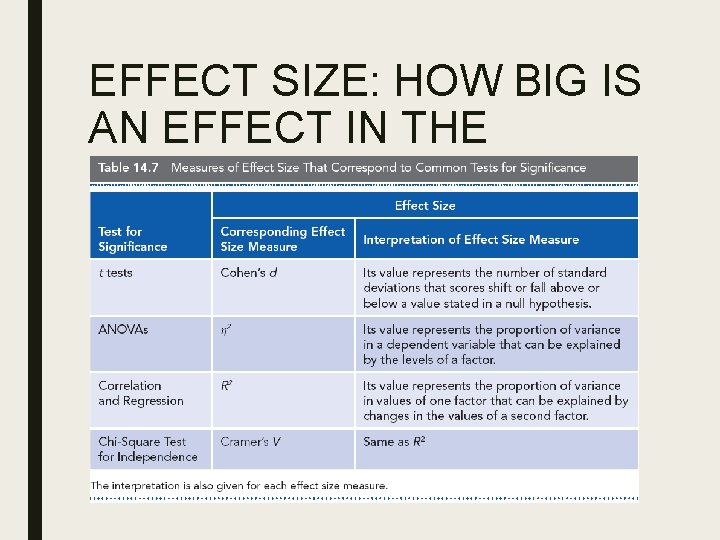

EFFECT SIZE: HOW BIG IS AN EFFECT IN THE POPULATION? ■ Effect – A mean difference or discrepancy between what was observed in a sample and what was expected to be observed in the population – The decision using NHST only indicates if an effect exists but does not inform us of the size of that effect in the population ■ Effect size – A statistical measure of the size or magnitude of an observed effect in a population, which allows researchers to describe how far scores shifted in a population, or the percent of variance in a DV that can be explained by the levels of a factor
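One way to express "how far scores shifted" is a standardized mean difference. The slides do not name a specific effect size measure, so this sketch assumes Cohen's d for a single sample: the difference between the sample mean and the null mean, divided by the sample SD. The scores, the null mean, and the function name are hypothetical.

```python
import statistics


def cohens_d_one_sample(scores, null_mean):
    """Mean difference from the null value, expressed in sample SD units."""
    return (statistics.mean(scores) - null_mean) / statistics.stdev(scores)


# Hypothetical scores and null mean, invented for illustration only.
scores = [2, 4, 4, 6, 9]
d = cohens_d_one_sample(scores, null_mean=3)
print(f"d={d:.3f}")  # the sample mean lies d standard deviations above the null mean
```

Unlike a p value, d does not shrink or grow simply because the sample size changes; it describes the size of the shift itself.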

EFFECT SIZE: HOW BIG IS AN EFFECT IN THE POPULATION?

“I’ve come to think that the most fundamental problem with p-values is that no one can really say what they are.” FiveThirtyEight ■ http://fivethirtyeight.com/features/not-even-scientists-can-easily-explain-p-values/ ■ Problems with p-values ■ 1. Easy to misrepresent/commit fraud by “data snooping” ■ 2. Easy to commit errors with multiple comparisons ■ 3. Significant difference between two samples doesn’t necessarily reflect the same difference in the parent populations • Confounded by n • A significant difference may be useless without effect size
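The point that significance is confounded by n can be made concrete. The sketch below holds a small standardized effect fixed and varies only the sample size. It assumes a one-sample z test, where the test statistic equals the effect size times √n; the effect size of 0.1 and the sample sizes are invented for illustration.

```python
import math


def two_tailed_p(effect_size, n):
    """Two-tailed p value for a one-sample z test with a fixed standardized effect."""
    z = effect_size * math.sqrt(n)  # z grows with n even though the effect does not
    return math.erfc(abs(z) / math.sqrt(2))


effect_size = 0.1  # hypothetical: the mean differs from the null by a tenth of an SD
p_values = {n: two_tailed_p(effect_size, n) for n in (25, 100, 400, 1600)}

for n, p in p_values.items():
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"n={n:>4}: p={p:.4f} ({verdict})")
```

The same tiny effect moves from "not significant" to "significant" purely by collecting more data, which is why a p value reported without an effect size can mislead.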