Azure Synapse Analytics Limitless cloud data warehouse with

- Slides: 19

Azure Synapse Analytics Limitless cloud data warehouse with unmatched time to insight Azure Synapse Analytics is Azure SQL Data Warehouse evolved—blending big data, data warehousing, and data integration into a single service for endto-end analytics at cloud scale.

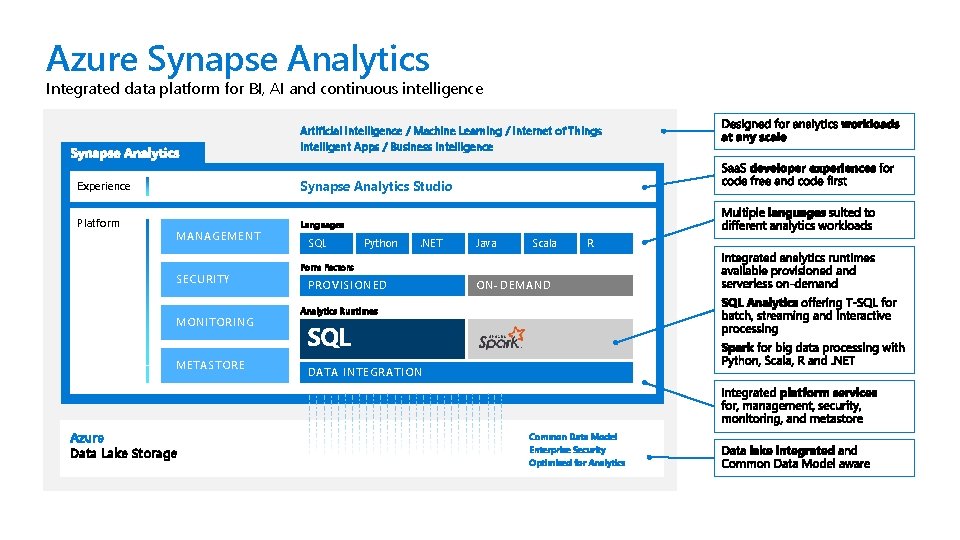

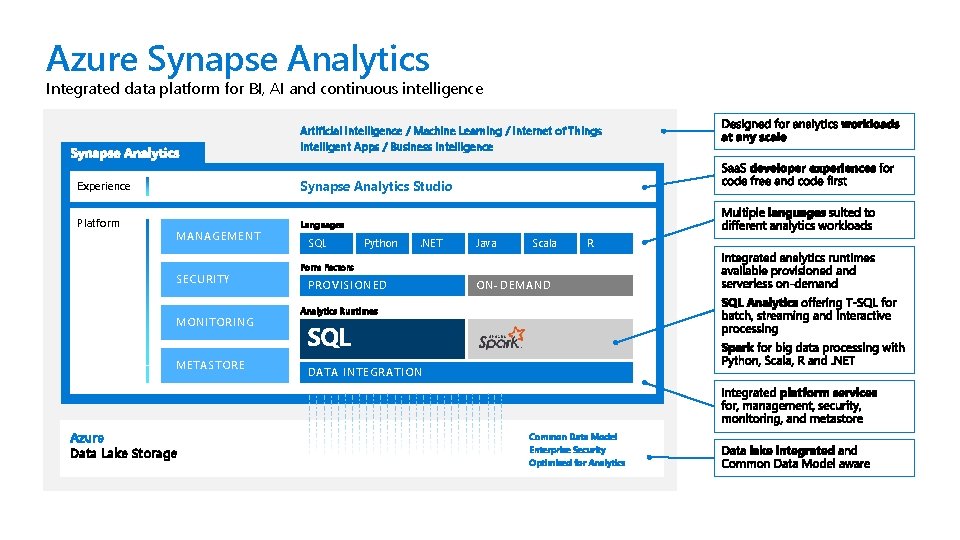

Azure Synapse Analytics Integrated data platform for BI, AI and continuous intelligence Artificial Intelligence / Machine Learning / Internet of Things Intelligent Apps / Business Intelligence Synapse Analytics Studio Experience Platform MANAGEMENT SECURITY MONITORING METASTORE Azure Data Lake Storage Languages SQL Python . NET Java Scala R Form Factors PROVISIONED ON-DEMAND Analytics Runtimes DATA INTEGRATION Common Data Model Enterprise Security Optimized for Analytics

Wear multiple hats today! Integrated data platform for BI, AI and continuous intelligence

Lab abstract Integrated data platform for BI, AI and continuous intelligence

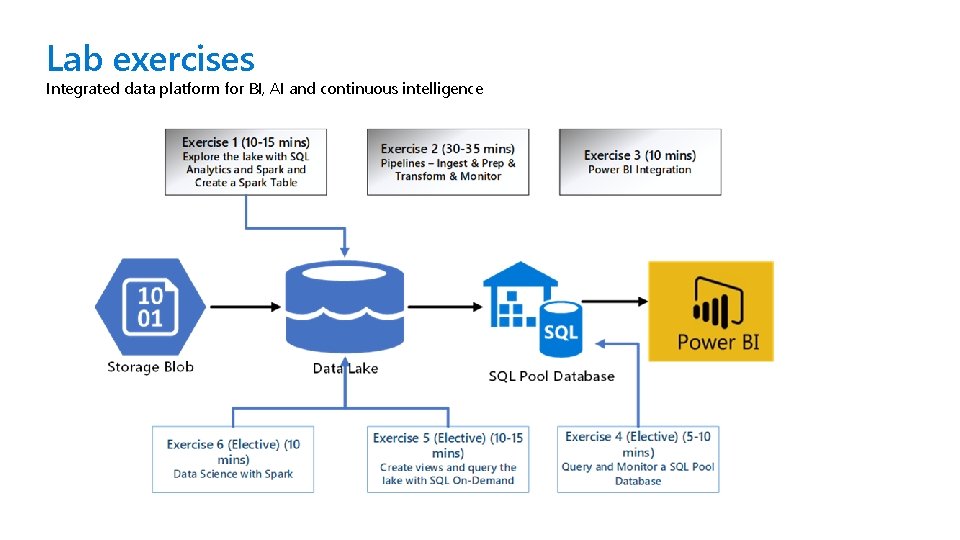

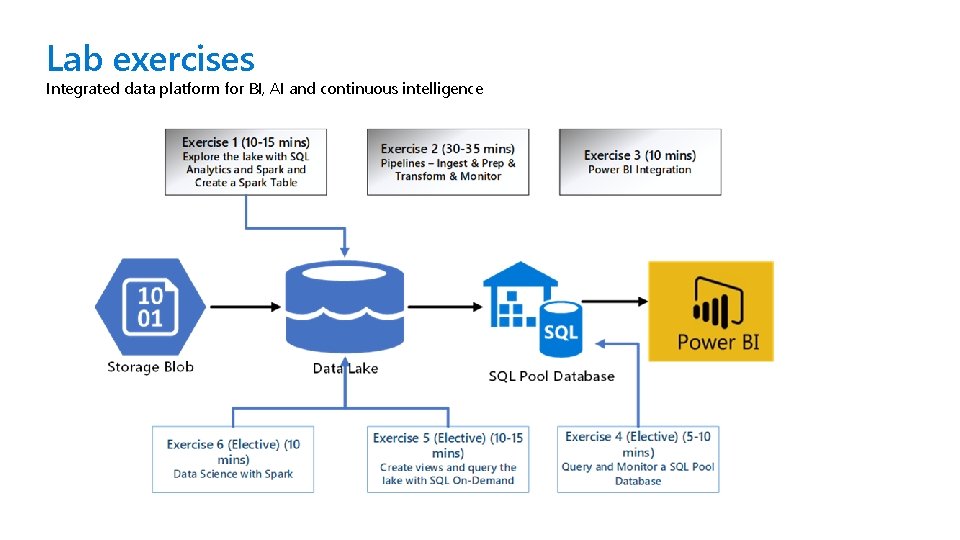

Lab exercises Integrated data platform for BI, AI and continuous intelligence

Materials Ø A workstation with a direct access to an Azure Synapse workspace and Studio with: § § § 1 ADLSg 2 account with datasets of nyc cab rides, weather, and holiday data 1 SQL Pool 500 DWUs with 3 staging (backup) tables and 3 tables 1 Spark Pool with up to 8 medium size nodes memory optimized 1 Power BI report 17 Datasets [most of them used to configure the workspace] 4 pipelines [most of them used to configure the workspace] Ø A PDF guided documentation in your workspace Ø Specifics to fill in the SQL scripts and notebooks: § § <AAD User ID> - odl_user_XXXXXX@cloudlabsaioutlook. onmicrosoft. com <ADLSg 2 Account Name> - labworkspace. XXXXXX <Primary Key> of your ADLSg 2 <SQL Pool Name> - sqlpool (for every workspace) Ø Printed map of each task Ø We will time the pace of each task with countdown of each exercise

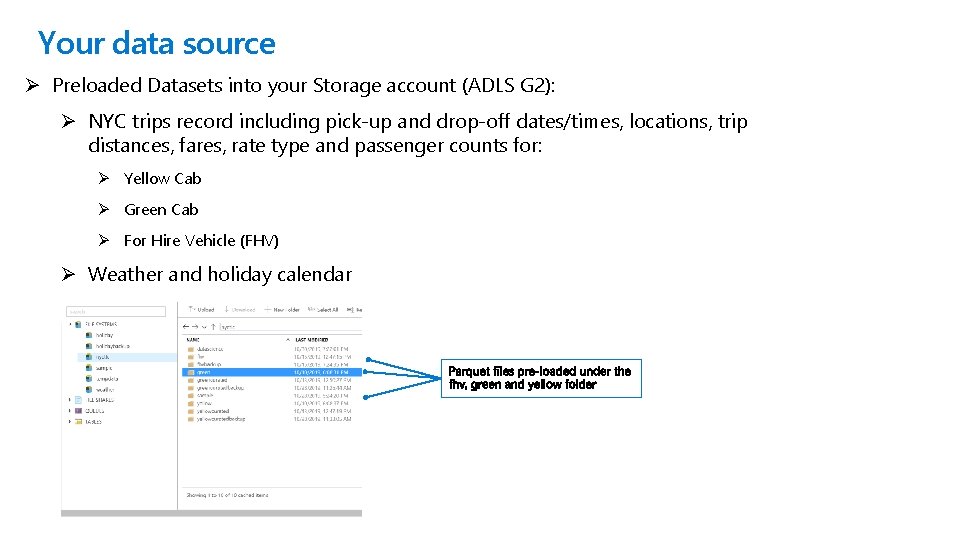

Your data source Ø Preloaded Datasets into your Storage account (ADLS G 2): Ø NYC trips record including pick-up and drop-off dates/times, locations, trip distances, fares, rate type and passenger counts for: Ø Yellow Cab Ø Green Cab Ø For Hire Vehicle (FHV) Ø Weather and holiday calendar

Exercise 1 – Data discovery and exploration Time check: 10 min In this task, you will browse your data lake using SQL On-Demand. Go into the Yellow folder inside the nyctlc folder select the year and month folders of your choice, then select a file, right click and select “New SQL script”. In this task, you will browse your data lake using Spark. Go into the Yellow. Cab folder inside the nyctlc folder select the year and month folders of your choice, then select a file, right click and select “New Notebook”. Data discovery and exploration is done from the “Data” section on the left navigation bar Explore the functionalities you can get in a notebook, SQL script and browsing the data explorer, both in the storage and database

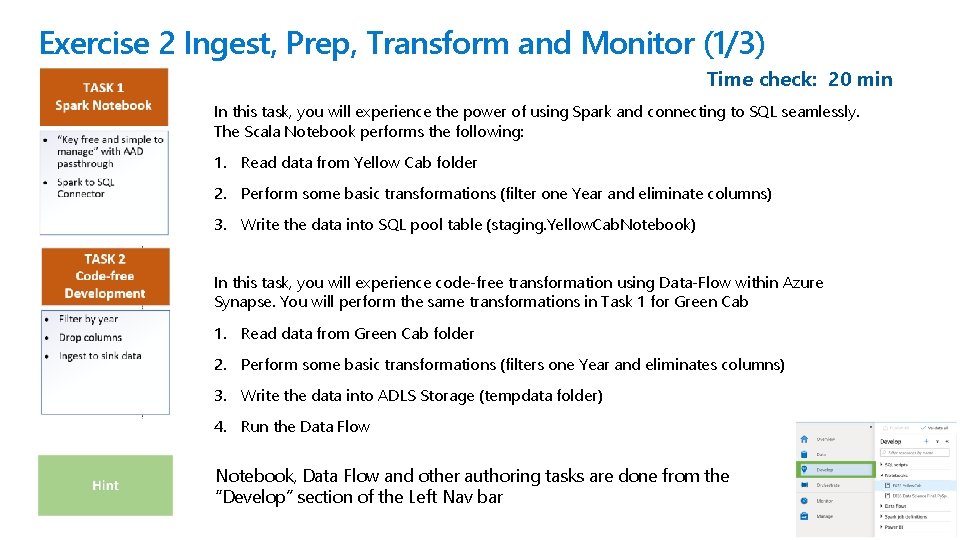

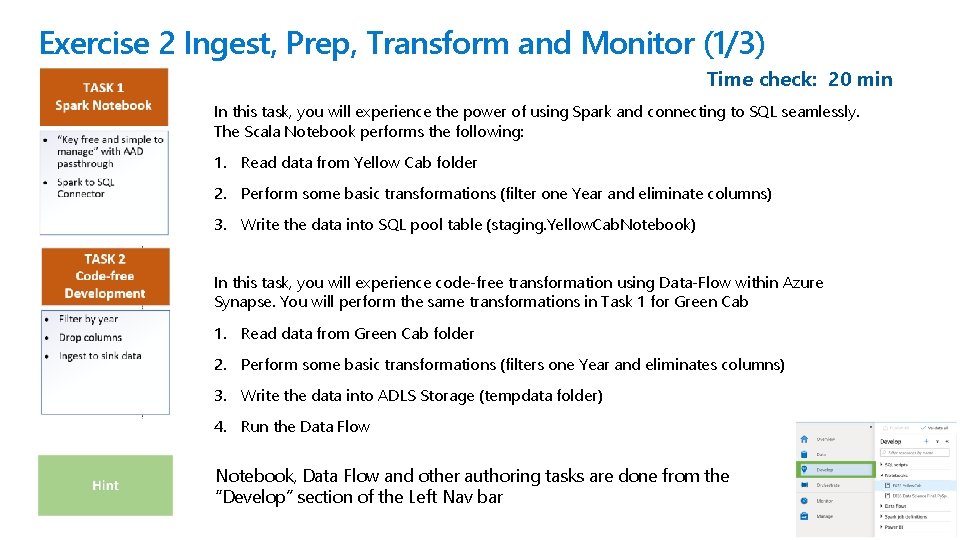

Exercise 2 Ingest, Prep, Transform and Monitor (1/3) Time check: 20 min In this task, you will experience the power of using Spark and connecting to SQL seamlessly. The Scala Notebook performs the following: 1. Read data from Yellow Cab folder 2. Perform some basic transformations (filter one Year and eliminate columns) 3. Write the data into SQL pool table (staging. Yellow. Cab. Notebook) In this task, you will experience code-free transformation using Data-Flow within Azure Synapse. You will perform the same transformations in Task 1 for Green Cab 1. Read data from Green Cab folder 2. Perform some basic transformations (filters one Year and eliminates columns) 3. Write the data into ADLS Storage (tempdata folder) 4. Run the Data Flow Notebook, Data Flow and other authoring tasks are done from the “Develop” section of the Left Nav bar

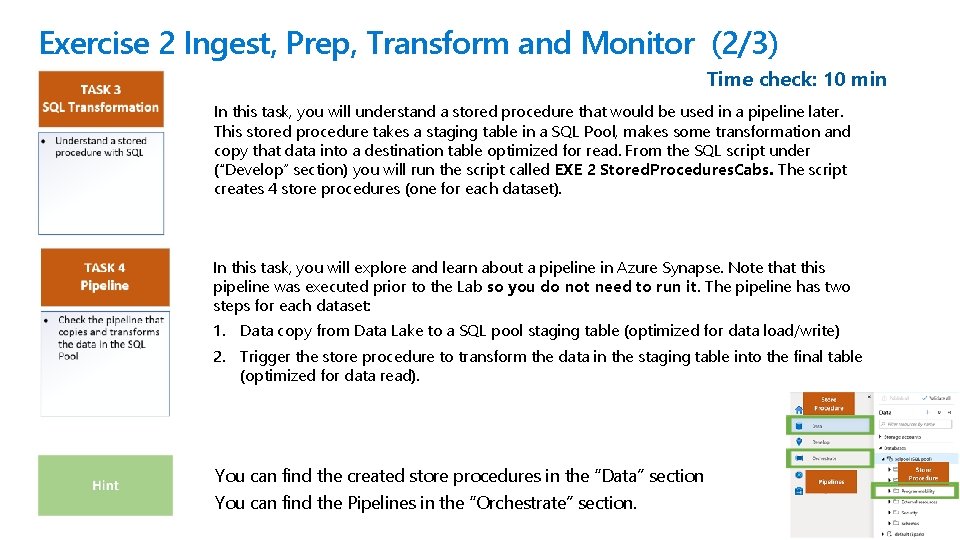

Exercise 2 Ingest, Prep, Transform and Monitor (2/3) Time check: 10 min In this task, you will understand a stored procedure that would be used in a pipeline later. This stored procedure takes a staging table in a SQL Pool, makes some transformation and copy that data into a destination table optimized for read. From the SQL script under (“Develop” section) you will run the script called EXE 2 Stored. Procedures. Cabs. The script creates 4 store procedures (one for each dataset). In this task, you will explore and learn about a pipeline in Azure Synapse. Note that this pipeline was executed prior to the Lab so you do not need to run it. The pipeline has two steps for each dataset: 1. Data copy from Data Lake to a SQL pool staging table (optimized for data load/write) 2. Trigger the store procedure to transform the data in the staging table into the final table (optimized for data read). You can find the created store procedures in the “Data” section You can find the Pipelines in the “Orchestrate” section.

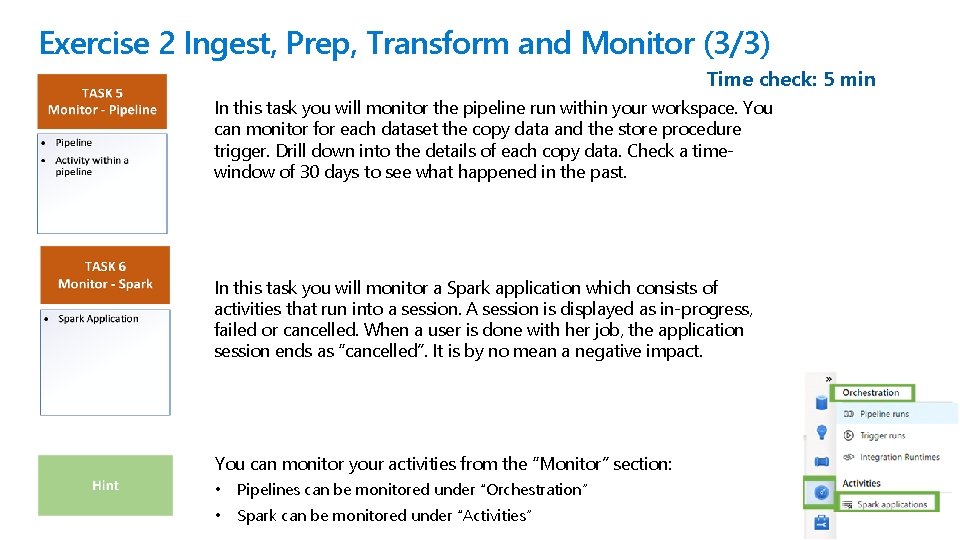

Exercise 2 Ingest, Prep, Transform and Monitor (3/3) Time check: 5 min In this task you will monitor the pipeline run within your workspace. You can monitor for each dataset the copy data and the store procedure trigger. Drill down into the details of each copy data. Check a timewindow of 30 days to see what happened in the past. In this task you will monitor a Spark application which consists of activities that run into a session. A session is displayed as in-progress, failed or cancelled. When a user is done with her job, the application session ends as “cancelled”. It is by no mean a negative impact. You can monitor your activities from the “Monitor” section: • Pipelines can be monitored under “Orchestration” • Spark can be monitored under “Activities”

Exercise 3 Power BI integration Time check: 5 min In this task you will add visualizations to a pre-created Power BI report using Azure Synapse Analytics integration. A Power BI workspace, an Azure Synapse PBI Linked service and a Dataset have been created for you. You can build your visualization within the Power BI report in Azure Synapse. You can create a Power BI dataset and a Report from the “Develop” section

Exercise 4 -5 BI with SQL pool and SQL On-Demand Time check: 10 -15 min In this exercise you will perform some high-performance query reading the SQL pool Final tables. You can compare the Market Share across time of the Yellow Cab, Green Cab and FHV over a period and chart it directly in the SQL Script. Try to build the query yourself by querying the final tables from the “Data” section of your SQL pool. Monitor and find the query by using the SQL script: “Monitor query” under the “Develop” section. In this exercise you will perform the same query you ran in the SQL Pool (EXE 4) but over the Data Lake. We will first ask you to create three views over the data lake and then query the data and visualize the results in the charts directly in SQL scripts under the “Develop” section. Performance to query the lake will not be as strong as the query performance in a SQL Pool but SQL Analytics On-Demand is a powerful and flexible capability for data exploration and low-cost BI with infrequent access to the lake. No data movement is required.

Exercise 6 Data science with Py. Spark Time check: 10 min In this exercise you will perform the tasks of a Data Scientist and build a model to predict the impact of holiday and weather for a given trip. You will import an Azure Synapse Py. Spark Notebook into your workspace. The Notebook is based on the Yellow cab data set. You will perform the following steps: data ingestion, exploration, data prep and generate test and training. Data Scientists are also Data Engineers. Ultimately you will evaluate the area under the ROC model which is close to 1 and this is an excellent result. The model includes several simplifications and train/test iterations have not been included. After importing “EXE 6 Data Science Final_Py. Spark” you will need to replace the ADLS G 2 Storage account "<Your. ADLSAccount>" with your Account name and run all cells.

Please evaluate this session Your feedback is important to us! https: //aka. ms/ignite. mobileapp https: //myignite. techcommunity. microsoft. com/evaluations

Find this session in Microsoft Tech Community