Twister4Azure: Parallel Data Analytics on Azure


Twister4Azure: Parallel Data Analytics on Azure • Judy Qiu, Thilina Gunarathne • SALSA HPC Group, http://salsahpc.indiana.edu • School of Informatics and Computing, Indiana University • CAREER Award

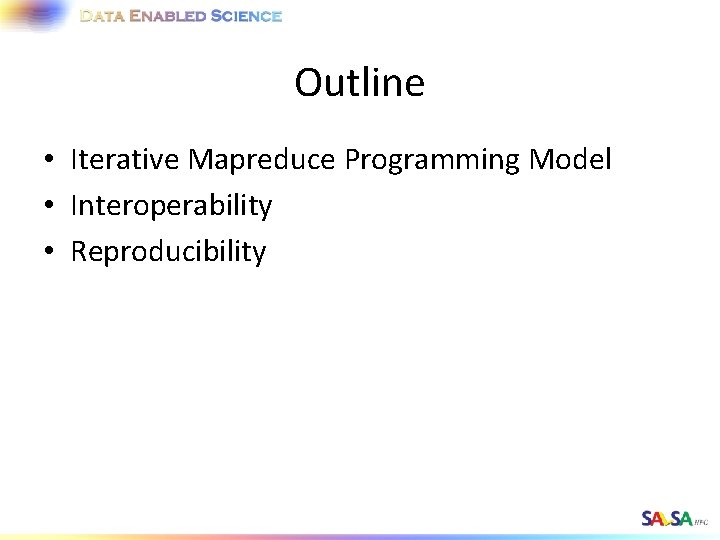

Outline • Iterative MapReduce Programming Model • Interoperability • Reproducibility

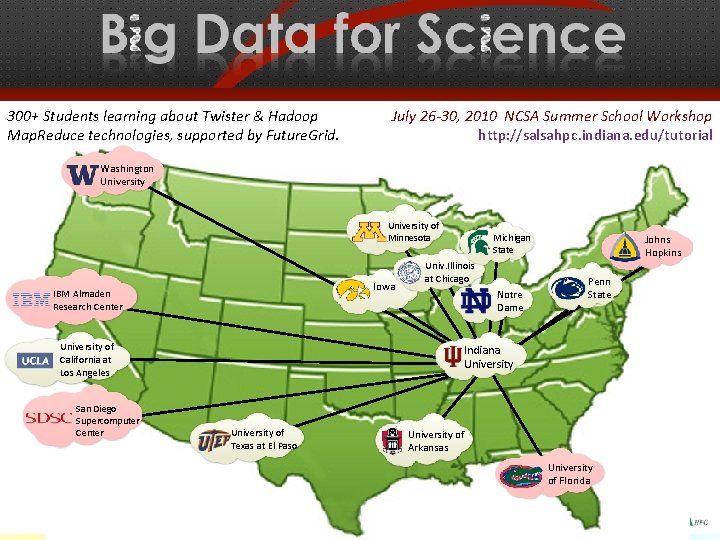

300+ students learning about Twister & Hadoop MapReduce technologies, supported by FutureGrid. July 26-30, 2010, NCSA Summer School Workshop, http://salsahpc.indiana.edu/tutorial. Washington University of Minnesota Iowa IBM Almaden Research Center University of California at Los Angeles San Diego Supercomputer Center Michigan State Univ. Illinois at Chicago Notre Dame Johns Hopkins Penn State Indiana University of Texas at El Paso University of Arkansas University of Florida

Intel’s Application Stack

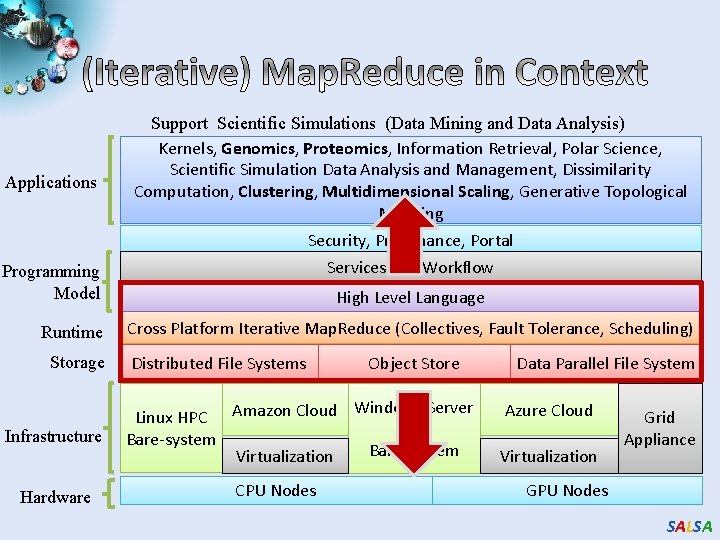

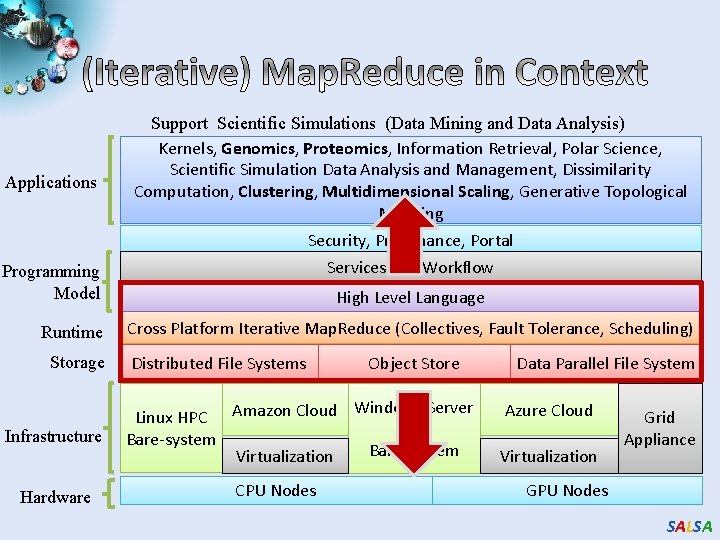

Applications • Support Scientific Simulations (Data Mining and Data Analysis) – Kernels, Genomics, Proteomics, Information Retrieval, Polar Science, Scientific Simulation Data Analysis and Management, Dissimilarity Computation, Clustering, Multidimensional Scaling, Generative Topological Mapping • Security, Provenance, Portal • Services and Workflow • Programming Model – High Level Language • Runtime – Cross Platform Iterative MapReduce (Collectives, Fault Tolerance, Scheduling) • Storage – Distributed File Systems, Object Store, Data Parallel File System • Infrastructure – Windows Server HPC Bare-system, Linux HPC Bare-system, Virtualization, Amazon Cloud, Azure Cloud, Grid Appliance • Hardware – CPU Nodes, GPU Nodes

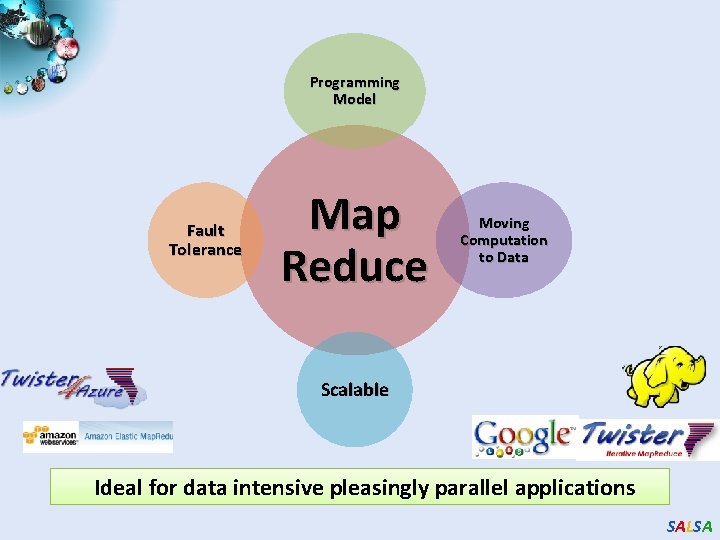

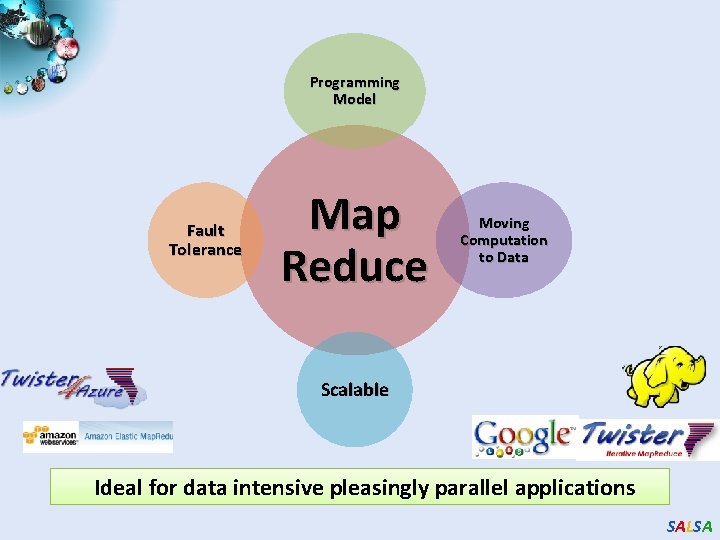

MapReduce • Programming Model • Fault Tolerance • Moving Computation to Data • Scalable • Ideal for data intensive pleasingly parallel applications

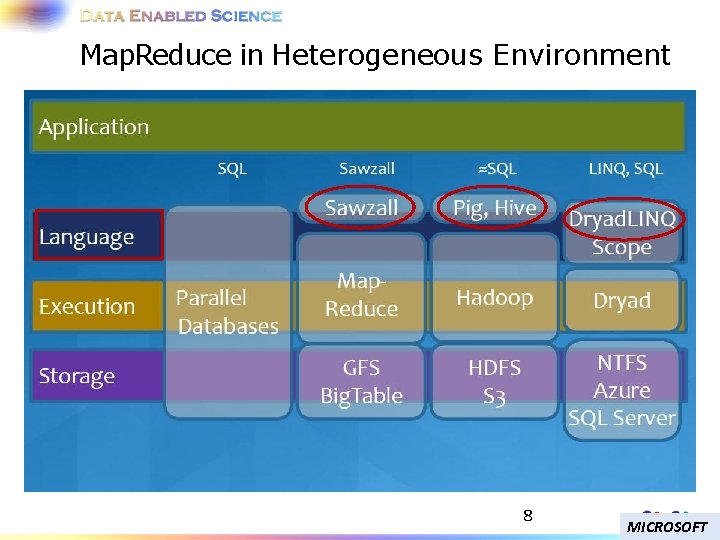

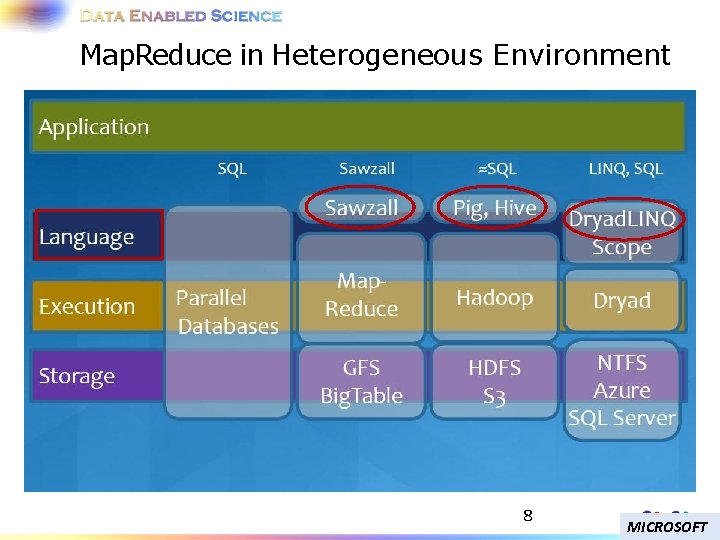

MapReduce in Heterogeneous Environment • MICROSOFT

![Iterative MapReduce Frameworks](https://slidetodoc.com/presentation_image_h2/9fe82aba73512f1a772327e419838a52/image-9.jpg)

Iterative MapReduce Frameworks • Twister[1] – Map->Reduce->Combine->Broadcast – Long running map tasks (data in memory) – Centralized driver based, statically scheduled • Daytona[3] – Iterative MapReduce on Azure using cloud services – Architecture similar to Twister • HaLoop[4] – On disk caching, Map/reduce input caching, reduce output caching • Spark[5] – Iterative MapReduce using Resilient Distributed Datasets to ensure fault tolerance
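
The Map->Reduce->Combine->Broadcast cycle can be illustrated with a small single-process sketch. This is not Twister's API: the function names (`kmeans_map`, `kmeans_reduce`, `combine`, `run_iterative_mapreduce`) and the one-dimensional KMeans workload are assumptions chosen only to show how loop-invariant partitions stay in memory while the small loop-variant value (the centroids) is re-broadcast every iteration.

```python
from collections import defaultdict


def kmeans_map(partition, centroids):
    """Map: assign each in-memory point to its nearest broadcast centroid."""
    partial = defaultdict(lambda: [0.0, 0])  # centroid index -> [sum, count]
    for x in partition:
        idx = min(range(len(centroids)), key=lambda i: abs(x - centroids[i]))
        partial[idx][0] += x
        partial[idx][1] += 1
    return dict(partial)


def kmeans_reduce(idx, partials):
    """Reduce: fold the partial sums of all map tasks for one centroid."""
    total = sum(p[0] for p in partials)
    count = sum(p[1] for p in partials)
    return idx, total / count


def combine(reduce_outputs, old_centroids):
    """Combine: gather reduce outputs into the next loop-variant value."""
    new_centroids = list(old_centroids)
    for idx, value in reduce_outputs:
        new_centroids[idx] = value
    return new_centroids


def run_iterative_mapreduce(partitions, centroids, max_iterations=20, tol=1e-9):
    """Hypothetical driver loop: partitions are loaded once and reused."""
    for _ in range(max_iterations):
        # Broadcast: every map task sees the current centroids.
        map_outputs = [kmeans_map(p, centroids) for p in partitions]
        # Shuffle: group partial results by centroid index.
        grouped = defaultdict(list)
        for output in map_outputs:
            for idx, partial in output.items():
                grouped[idx].append(partial)
        reduce_outputs = [kmeans_reduce(i, ps) for i, ps in grouped.items()]
        new_centroids = combine(reduce_outputs, centroids)
        if max(abs(a - b) for a, b in zip(new_centroids, centroids)) < tol:
            return new_centroids
        centroids = new_centroids
    return centroids


partitions = [[1.0, 2.0, 10.0], [3.0, 11.0, 12.0]]
result = run_iterative_mapreduce(partitions, [0.0, 5.0])
print(result)
```

In a real runtime the list comprehension over `partitions` would be replaced by long-running map tasks on separate workers; the control flow of the loop is the part this sketch is meant to convey.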

![Others](https://slidetodoc.com/presentation_image_h2/9fe82aba73512f1a772327e419838a52/image-10.jpg)

Others • MATE-EC2[6] – Local reduction object • Network Levitated Merge[7] – RDMA/InfiniBand based shuffle & merge • Asynchronous Algorithms in MapReduce[8] – Local & global reduce • MapReduce Online[9] – Online aggregation and continuous queries – Push data from Map to Reduce • Orchestra[10] – Data transfer improvements for MR • iMapReduce[11] – Async iterations, one-to-one map & reduce mapping, automatically joins loop-variant and invariant data • CloudMapReduce[12] & Google AppEngine MapReduce[13] – MapReduce frameworks utilizing cloud infrastructure services

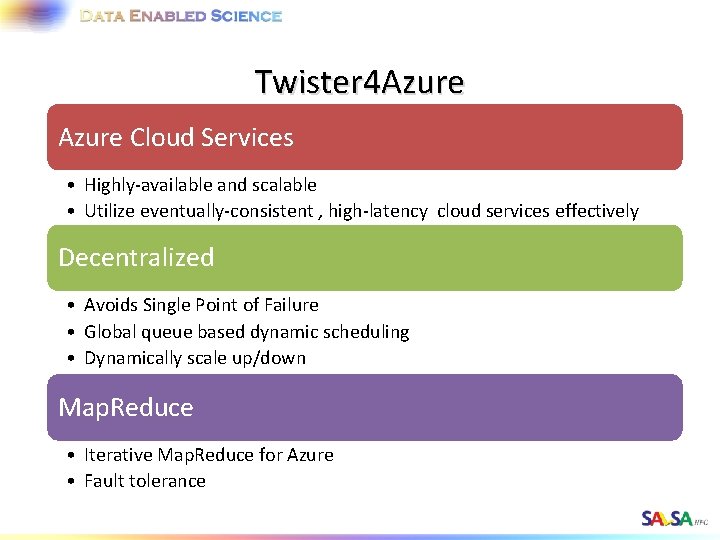

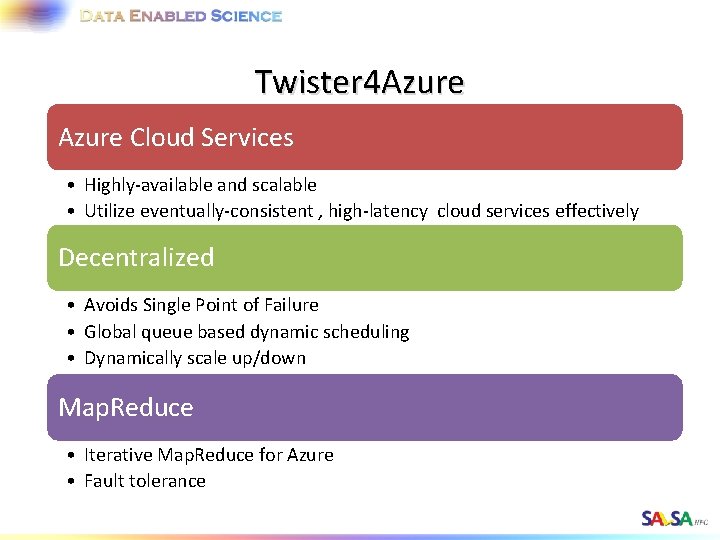

Twister4Azure • Cloud Services – Highly available and scalable – Utilize eventually consistent, high-latency cloud services effectively – Minimal maintenance and management overhead • Decentralized – Avoids single point of failure – Global queue based dynamic scheduling – Dynamically scale up/down • MapReduce – Iterative MapReduce for Azure – Fault tolerance
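
Global queue based dynamic scheduling can be sketched with an in-process queue standing in for a cloud queue service. Everything named here (`worker`, `run_job`, the task dictionaries) is hypothetical and is not the Twister4Azure API; the point is only that there is no central scheduler, so idle workers pull the next task themselves and faster workers naturally take more tasks.

```python
import queue
import threading


def worker(worker_id, task_queue, results, lock):
    """Pull tasks from the shared queue until it is empty."""
    while True:
        try:
            task = task_queue.get_nowait()
        except queue.Empty:
            return  # no work left, the worker can shut down or scale in
        output = sum(task["data"])  # stand-in for the real map computation
        with lock:
            results[task["id"]] = (worker_id, output)
        task_queue.task_done()


def run_job(tasks, num_workers):
    """Enqueue all tasks, then let the workers schedule themselves."""
    task_queue = queue.Queue()
    for task in tasks:
        task_queue.put(task)
    results = {}
    lock = threading.Lock()
    threads = [
        threading.Thread(target=worker, args=(w, task_queue, results, lock))
        for w in range(num_workers)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


tasks = [{"id": i, "data": list(range(i + 1))} for i in range(8)]
results = run_job(tasks, num_workers=3)
print(sorted(results))
```

Adding or removing workers only changes `num_workers`; the queue contents and the task definitions stay the same, which is what makes scaling up or down straightforward in this style of design.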

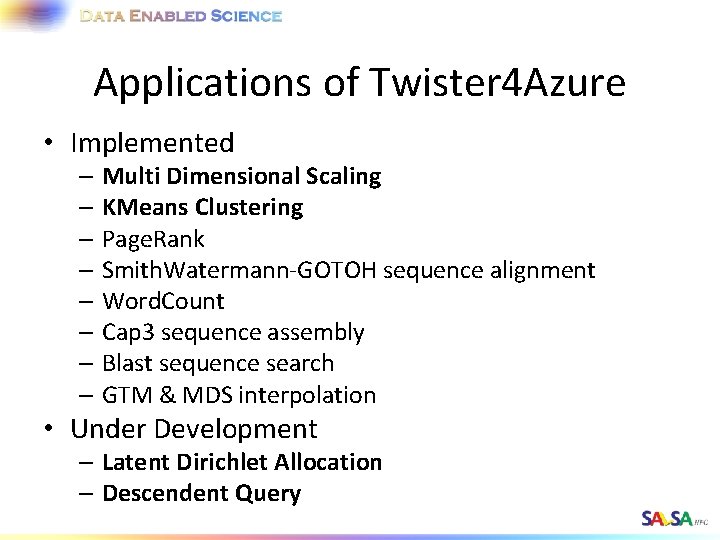

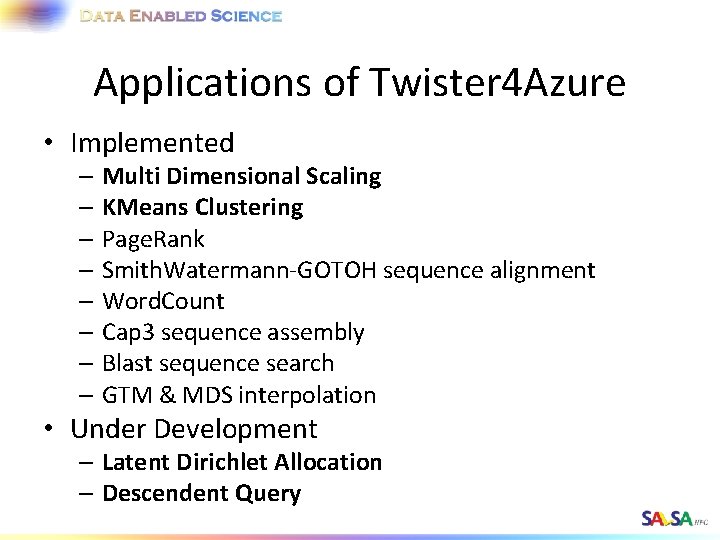

Applications of Twister4Azure • Implemented – Multi-Dimensional Scaling – KMeans Clustering – PageRank – Smith-Waterman-GOTOH sequence alignment – WordCount – Cap3 sequence assembly – BLAST sequence search – GTM & MDS interpolation • Under Development – Latent Dirichlet Allocation – Descendent Query

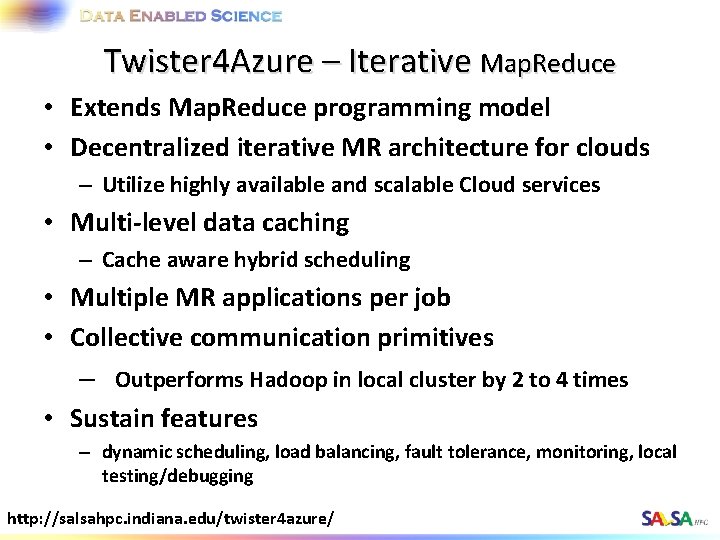

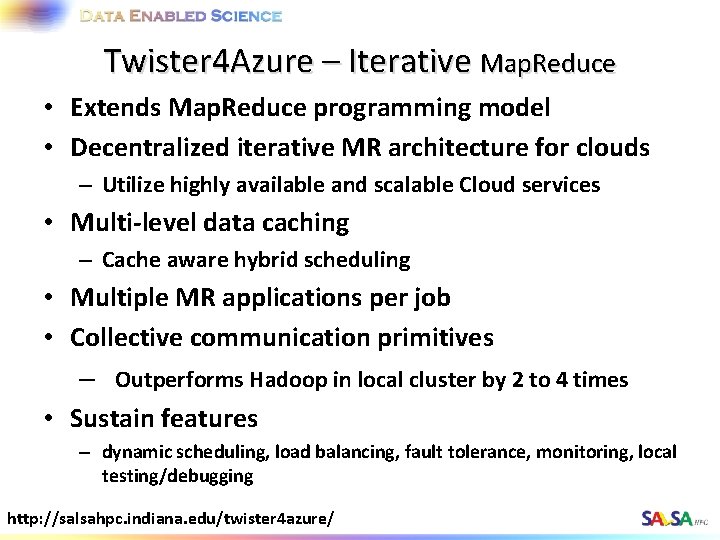

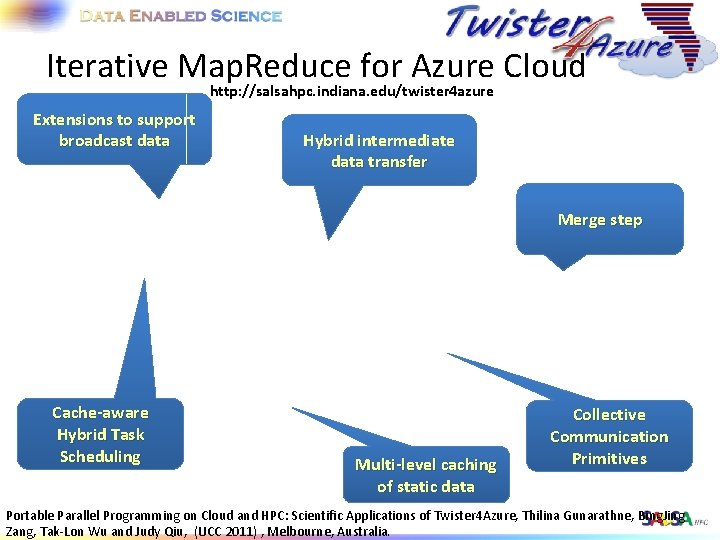

Twister4Azure – Iterative MapReduce • Extends MapReduce programming model • Decentralized iterative MR architecture for clouds – Utilize highly available and scalable Cloud services • Multi-level data caching – Cache aware hybrid scheduling • Multiple MR applications per job • Collective communication primitives – Outperforms Hadoop in local cluster by 2 to 4 times • Sustain features – dynamic scheduling, load balancing, fault tolerance, monitoring, local testing/debugging • http://salsahpc.indiana.edu/twister4azure/
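
The idea behind cache aware hybrid scheduling can be sketched as a two-pass assignment. The sketch below is a simplified, centralized simulation under stated assumptions: the function `schedule_iteration`, the worker names and the least-loaded fallback rule are all hypothetical, and the real system is decentralized. It shows only the preference order: a task goes first to a worker that already caches its input, and falls back to any worker otherwise.

```python
def schedule_iteration(task_ids, worker_caches):
    """Assign tasks for one iteration; returns {worker: [task_id, ...]}.

    Pass 1 (cache aware): a task goes to a worker that already caches its
    input, choosing the least-loaded holder when there are several.
    Pass 2 (fallback): tasks nobody caches go to the least-loaded worker,
    which then fetches and caches the data for later iterations.
    """
    assignment = {worker: [] for worker in worker_caches}
    unclaimed = []
    for task in task_ids:
        holders = [w for w, cache in worker_caches.items() if task in cache]
        if holders:
            target = min(holders, key=lambda w: len(assignment[w]))
            assignment[target].append(task)
        else:
            unclaimed.append(task)
    for task in unclaimed:
        target = min(assignment, key=lambda w: len(assignment[w]))
        assignment[target].append(task)
        worker_caches[target].add(task)  # data is cached after the fetch
    return assignment


caches = {"w0": {"t0", "t1"}, "w1": {"t2"}, "w2": set()}
plan = schedule_iteration(["t0", "t1", "t2", "t3"], caches)
print(plan)
```

After the first iteration every task's input is cached somewhere, so later iterations are served entirely by the first pass and avoid re-downloading the loop-invariant data.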

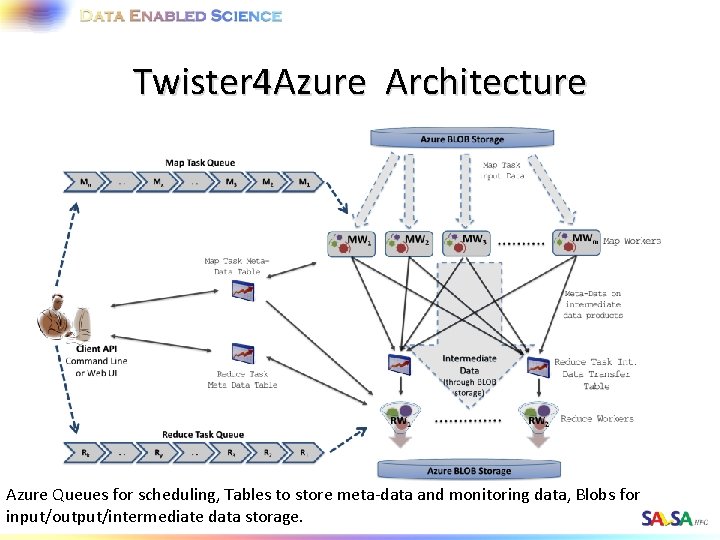

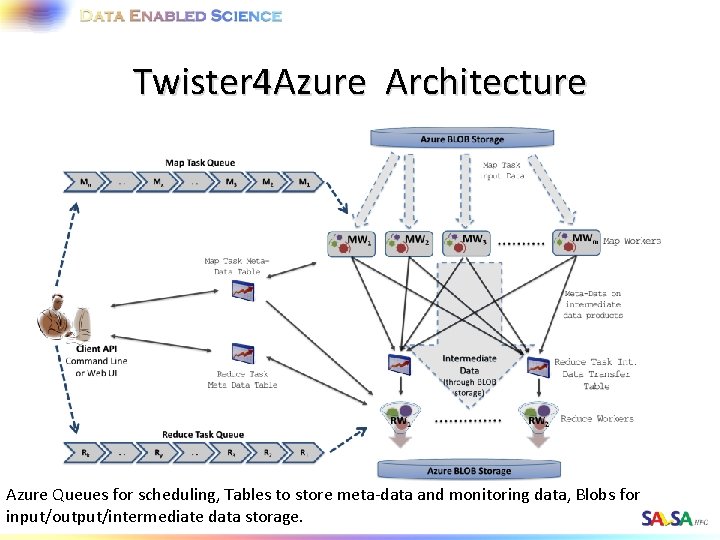

Twister4Azure Architecture: Azure Queues for scheduling, Tables to store meta-data and monitoring data, Blobs for input/output/intermediate data storage.

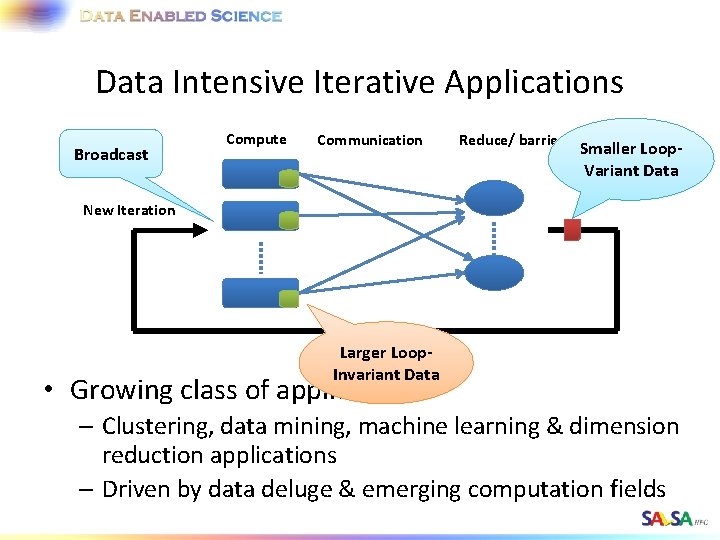

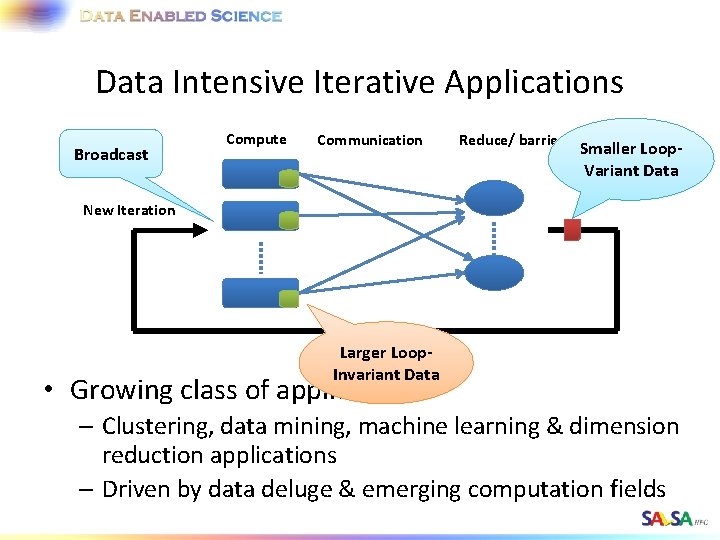

Data Intensive Iterative Applications • Growing class of applications – Clustering, data mining, machine learning & dimension reduction applications – Driven by data deluge & emerging computation fields • Figure labels: Broadcast, Compute, Communication, Reduce/barrier, New Iteration; Smaller Loop-Variant Data; Larger Loop-Invariant Data

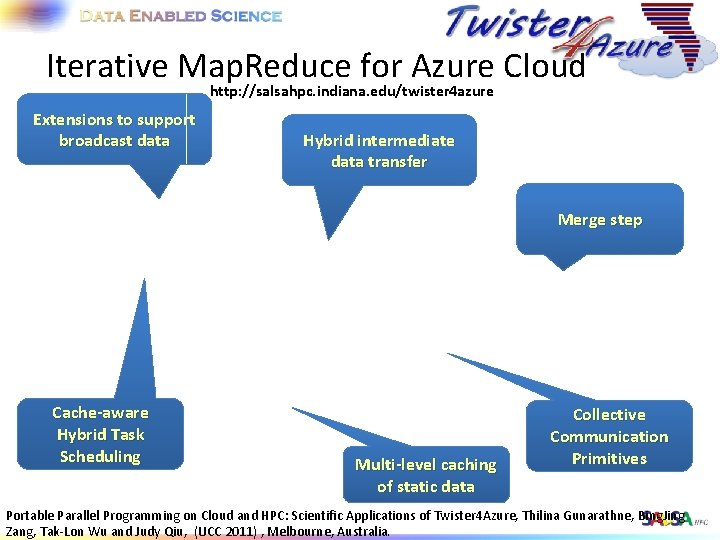

Iterative MapReduce for Azure Cloud • http://salsahpc.indiana.edu/twister4azure • Extensions to support broadcast data • Hybrid intermediate data transfer • Merge step • Cache-aware Hybrid Task Scheduling • Multi-level caching of static data • Collective Communication Primitives. Portable Parallel Programming on Cloud and HPC: Scientific Applications of Twister4Azure, Thilina Gunarathne, BingJing Zang, Tak-Lon Wu and Judy Qiu, (UCC 2011), Melbourne, Australia.

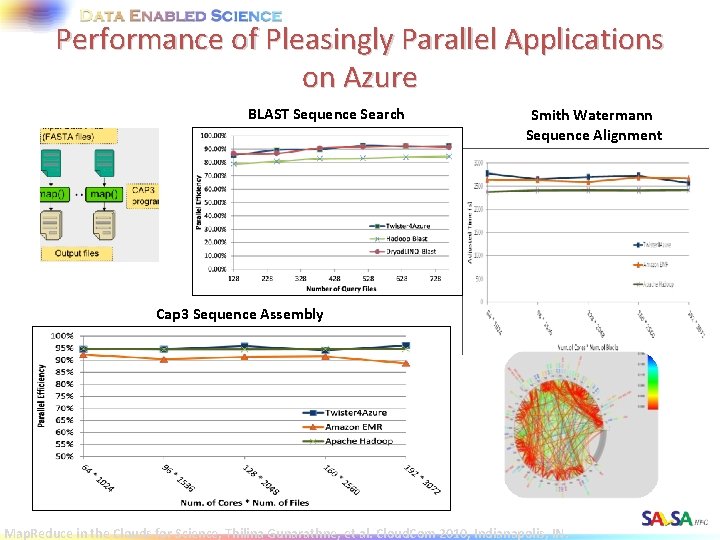

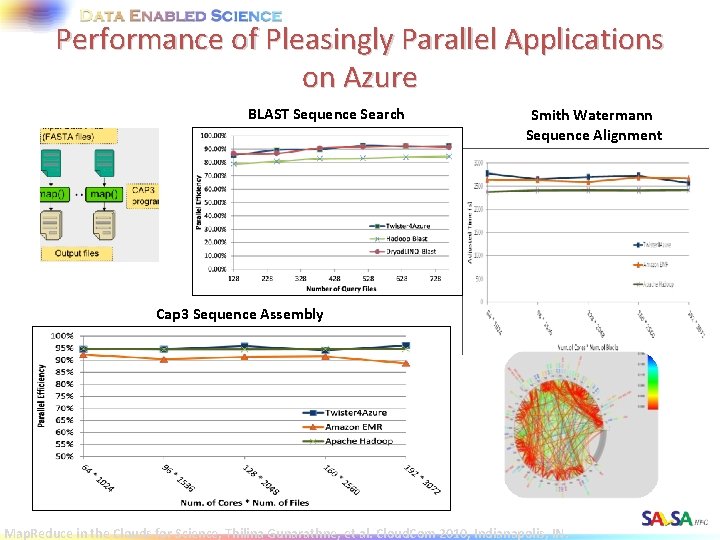

Performance of Pleasingly Parallel Applications on Azure • BLAST Sequence Search • Smith-Waterman Sequence Alignment • Cap3 Sequence Assembly. MapReduce in the Clouds for Science, Thilina Gunarathne, et al. CloudCom 2010, Indianapolis, IN.

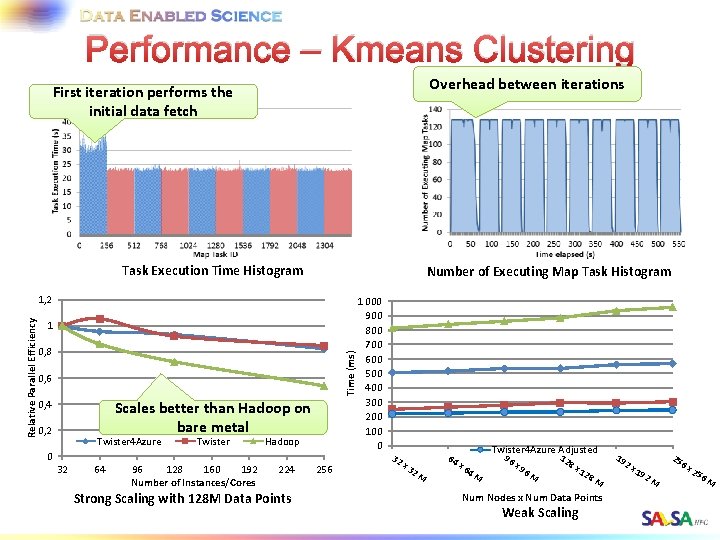

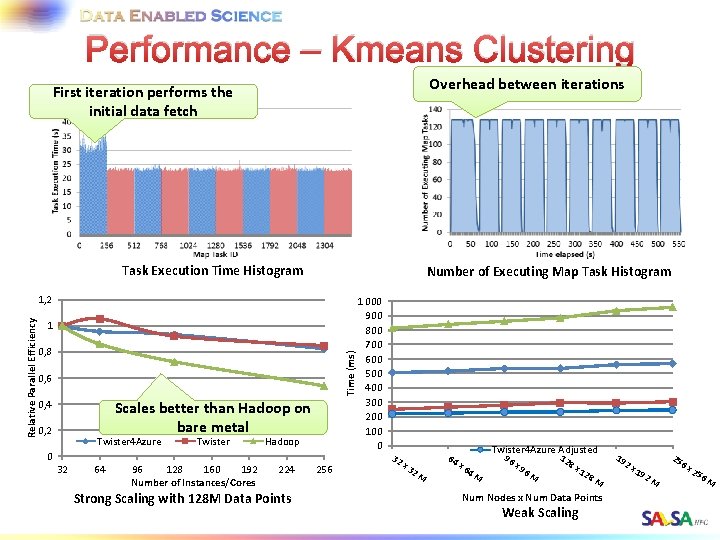

Performance – KMeans Clustering • Task Execution Time Histogram – First iteration performs the initial data fetch • Number of Executing Map Task Histogram – Overhead between iterations • Strong Scaling with 128M Data Points (chart: Relative Parallel Efficiency vs. Number of Instances/Cores, 32 to 256; series: Twister4Azure, Twister, Hadoop) • Weak Scaling (chart: Time (ms) vs. Num Nodes x Num Data Points, 32 x 32M to 256 x 256M; series include Twister4Azure Adjusted) • Scales better than Hadoop on bare metal
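
The slide does not define relative parallel efficiency. A common definition, given here as an assumption rather than as the authors' stated formula, measures efficiency relative to the smallest configuration $p_0$ (for example the 32-instance run) instead of a sequential run:

```latex
E_{\mathrm{rel}}(p) \;=\; \frac{p_0 \, T(p_0)}{p \, T(p)}
```

Here $T(p)$ is the running time on $p$ instances for a fixed problem size (strong scaling), so $E_{\mathrm{rel}}(p_0) = 1$ and values near 1 indicate near-linear speedup. For weak scaling, where the data grows with the number of nodes, the ideal outcome is simply a constant running time.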

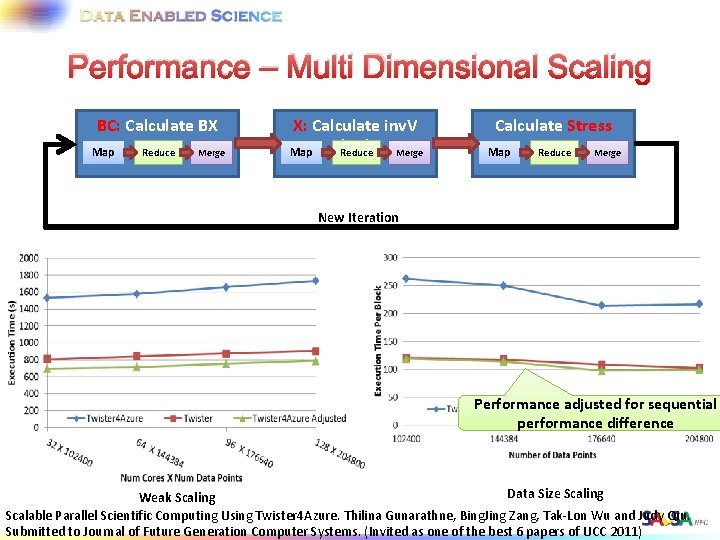

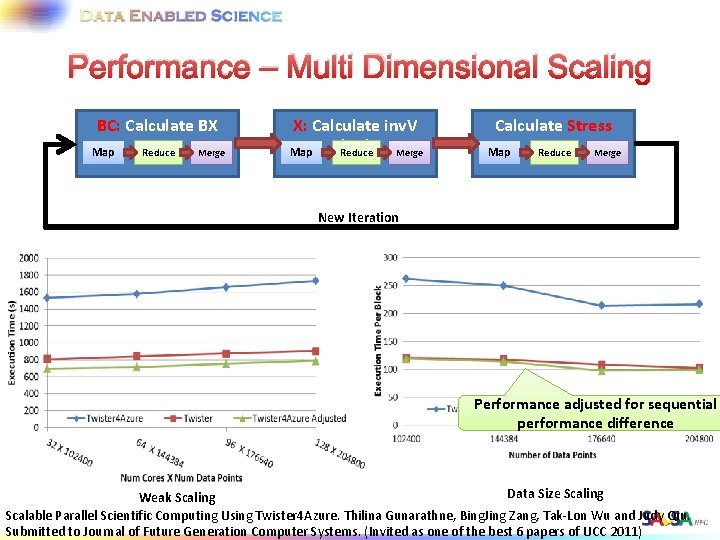

Performance – Multi-Dimensional Scaling • Each iteration: BC: Calculate BX (Map, Reduce, Merge) -> X: Calculate invV (BX) (Map, Reduce, Merge) -> Calculate Stress (Map, Reduce, Merge) -> New Iteration • Charts: Weak Scaling; Data Size Scaling • Performance adjusted for sequential performance difference. Scalable Parallel Scientific Computing Using Twister4Azure. Thilina Gunarathne, BingJing Zang, Tak-Lon Wu and Judy Qiu. Submitted to Journal of Future Generation Computer Systems. (Invited as one of the best 6 papers of UCC 2011)
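
The three step names (BX, invV, Stress) match the SMACOF algorithm for MDS. Assuming that is the method used, and using the standard SMACOF notation (which is not taken from the slide), each iteration evaluates the following quantities, one per MapReduce job:

```latex
\begin{aligned}
b_{ij}(X) &=
  \begin{cases}
    -\,w_{ij}\,\delta_{ij} / d_{ij}(X) & i \neq j,\ d_{ij}(X) \neq 0 \\
    0 & i \neq j,\ d_{ij}(X) = 0
  \end{cases}
  \qquad
  b_{ii}(X) = -\sum_{j \neq i} b_{ij}(X) \\[4pt]
X^{(k)} &= V^{+}\, B\bigl(X^{(k-1)}\bigr)\, X^{(k-1)} \\[4pt]
\sigma\bigl(X^{(k)}\bigr) &= \sum_{i<j} w_{ij}\,\bigl(d_{ij}(X^{(k)}) - \delta_{ij}\bigr)^{2}
\end{aligned}
```

Here $\delta_{ij}$ are the input dissimilarities, $d_{ij}(X)$ the Euclidean distances in the current embedding $X$, $w_{ij}$ the weights, and $V^{+}$ the pseudo-inverse of the matrix with $v_{ij} = -w_{ij}$ and $v_{ii} = \sum_{j \neq i} w_{ij}$. The first line corresponds to "Calculate BX", the second to "Calculate invV (BX)" and the third to "Calculate Stress".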

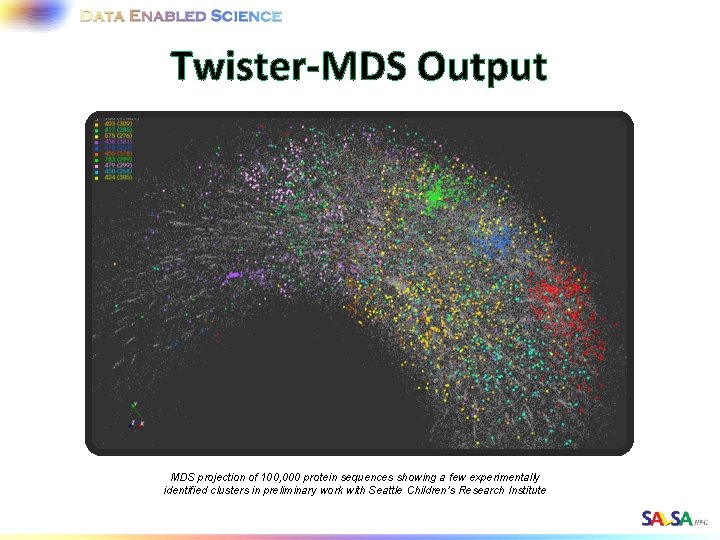

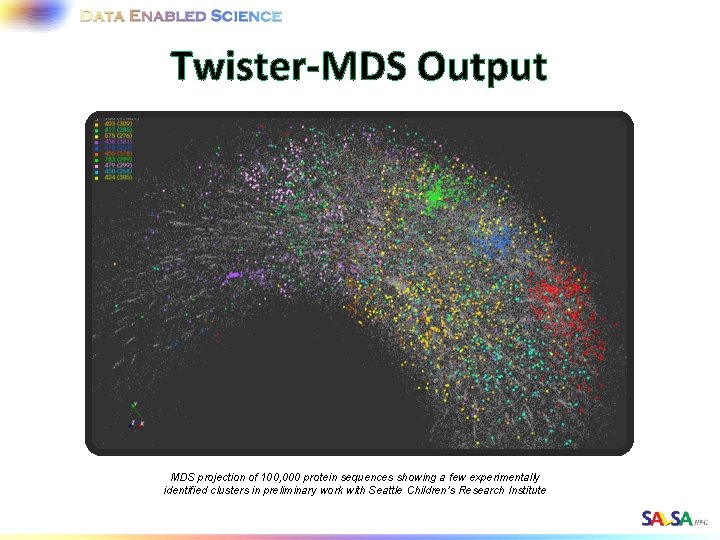

Twister-MDS Output: MDS projection of 100,000 protein sequences showing a few experimentally identified clusters in preliminary work with Seattle Children’s Research Institute

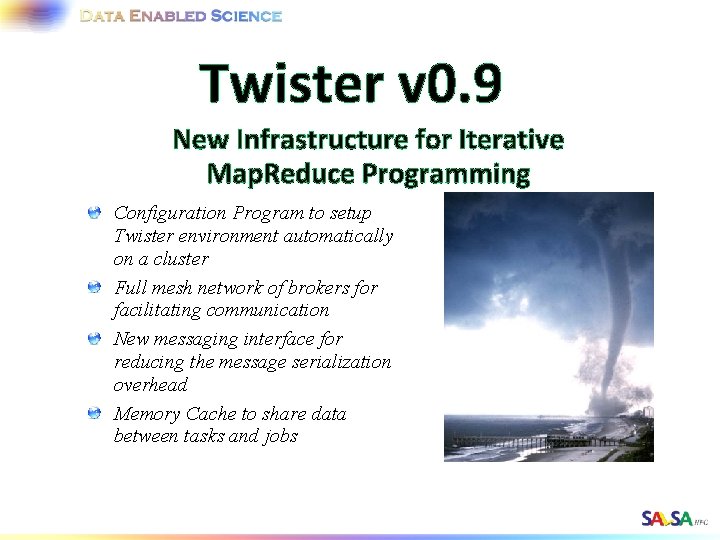

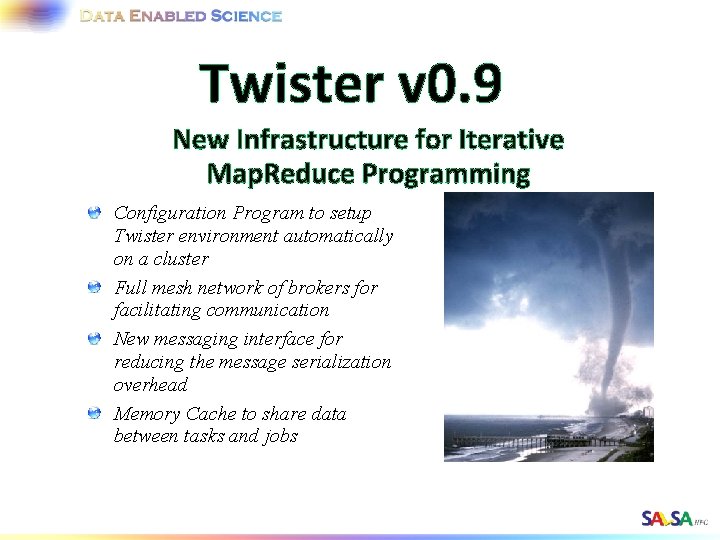

Twister v0.9: New Infrastructure for Iterative MapReduce Programming • Configuration program to set up the Twister environment automatically on a cluster • Full mesh network of brokers for facilitating communication • New messaging interface for reducing the message serialization overhead • Memory cache to share data between tasks and jobs

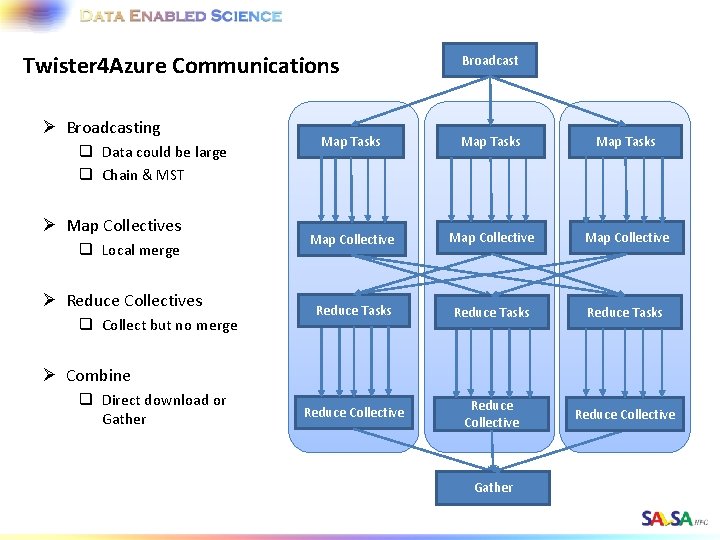

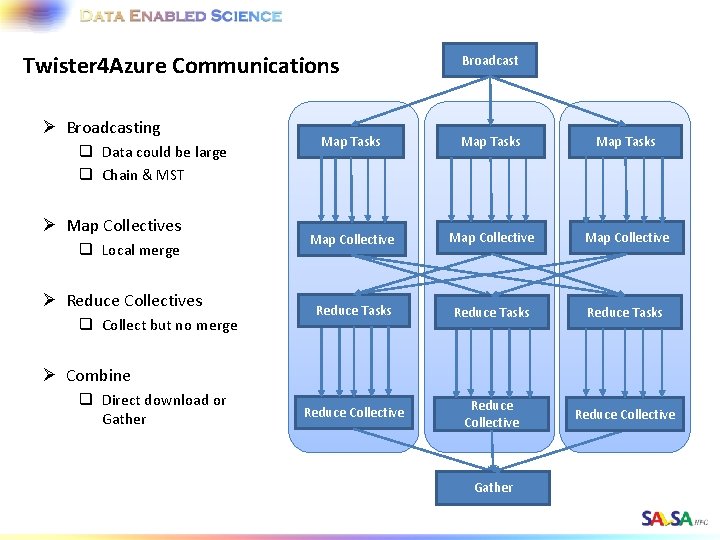

Twister4Azure Communications • Broadcasting – Data could be large – Chain & MST • Map Collectives – Local merge • Reduce Collectives – Collect but no merge • Combine – Direct download or Gather • Figure labels: Broadcast, Map Tasks, Map Collective, Reduce Tasks, Reduce Collective
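
The two broadcast options named on the slide, chain and MST, can be compared with a small schedule calculation. The sketch assumes that "MST" refers to a tree broadcast in which every node that already holds the data forwards it to one new node per round (a binomial-tree schedule), and that "chain" is a pipelined chain in which the data is cut into chunks; both function names are hypothetical.

```python
def tree_broadcast_rounds(num_nodes):
    """Return the rounds of (sender, receiver) pairs; node 0 is the root.

    In every round each node that already holds the data sends it to one
    node that does not, so the number of holders doubles per round.
    """
    holders = [0]
    rounds = []
    next_node = 1
    while next_node < num_nodes:
        sends = []
        for sender in list(holders):
            if next_node >= num_nodes:
                break
            sends.append((sender, next_node))
            holders.append(next_node)
            next_node += 1
        rounds.append(sends)
    return rounds


def chain_broadcast_steps(num_nodes, num_chunks):
    """Steps for a pipelined chain where each step moves one chunk one hop.

    The first chunk needs num_nodes - 1 hops to reach the last node and
    every later chunk arrives one step after the previous one.
    """
    return (num_nodes - 1) + (num_chunks - 1)


rounds = tree_broadcast_rounds(8)
print(len(rounds), rounds)
print(chain_broadcast_steps(8, 100))
```

The tree needs few rounds but sends the whole payload in each one, while the chain needs more steps but each step moves only one chunk. That trade-off is one plausible reason to offer both when "data could be large".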

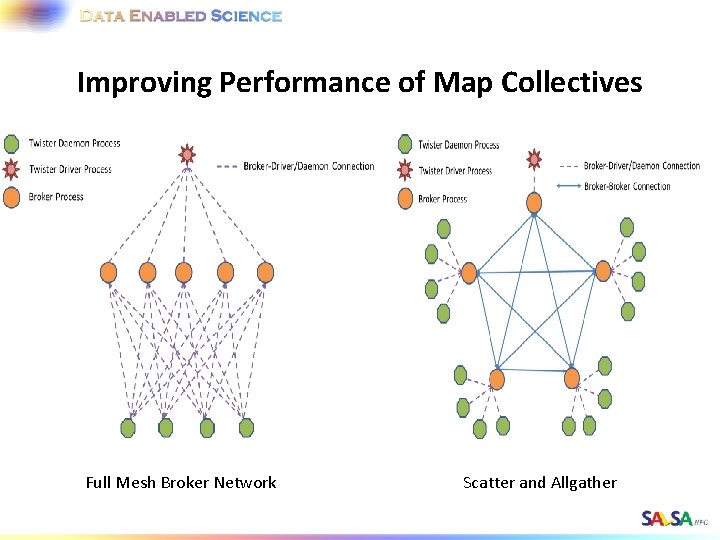

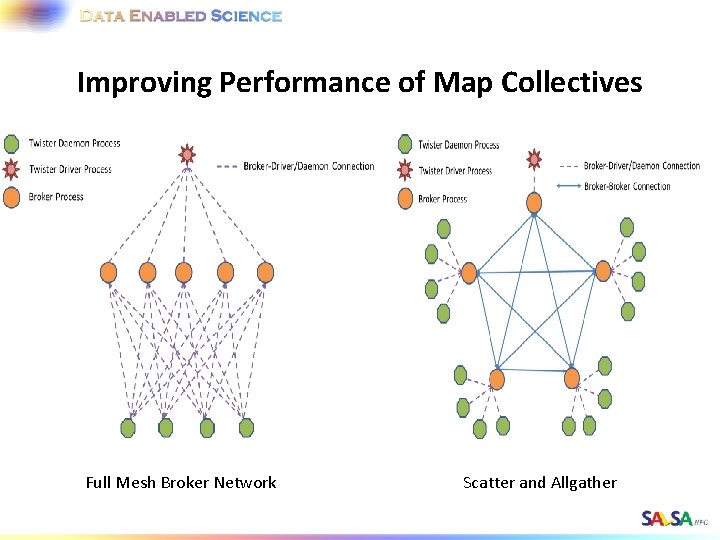

Improving Performance of Map Collectives • Full Mesh Broker Network • Scatter and Allgather
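
A broadcast built from scatter followed by allgather can be simulated in a few lines. This is an illustrative sketch, not Twister's implementation: the function name is hypothetical and the allgather is assumed to run on a ring, which is only one of several possible allgather schedules.

```python
def scatter_allgather_broadcast(data, num_nodes):
    """Simulate a broadcast of `data` (bytes) and return each node's copy."""
    # Scatter: the root cuts the payload into one chunk per node.
    chunk_size = -(-len(data) // num_nodes)  # ceiling division
    chunks = [data[i * chunk_size:(i + 1) * chunk_size] for i in range(num_nodes)]
    held = [{i: chunks[i]} for i in range(num_nodes)]

    # Ring allgather: in step s, node i forwards chunk (i - s) mod n to its
    # right-hand neighbour, so every link carries one chunk per step.
    for step in range(num_nodes - 1):
        transfers = []
        for node in range(num_nodes):
            idx = (node - step) % num_nodes
            transfers.append(((node + 1) % num_nodes, idx, held[node][idx]))
        for dest, idx, chunk in transfers:
            held[dest][idx] = chunk

    # Every node reassembles the payload from its chunks.
    return [b"".join(h[i] for i in range(num_nodes)) for h in held]


payload = b"abcdefghij"
copies = scatter_allgather_broadcast(payload, 4)
print(copies)
```

Compared with the root sending the full payload to every node, the root here sends each byte only once during the scatter, and the remaining traffic is spread evenly across all nodes.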

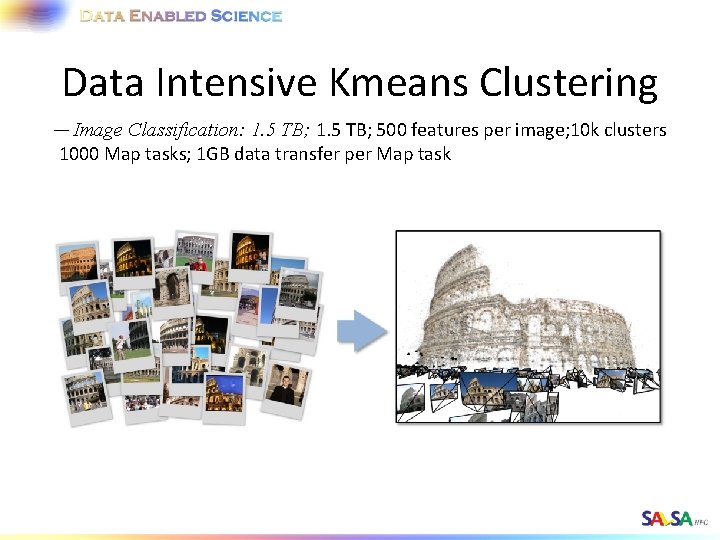

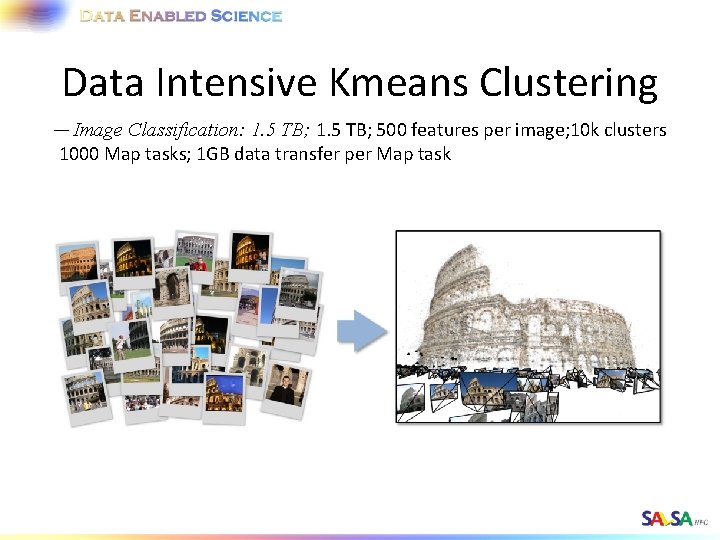

Data Intensive KMeans Clustering • Image Classification: 1.5 TB; 500 features per image; 10k clusters • 1000 Map tasks; 1 GB data transfer per Map task

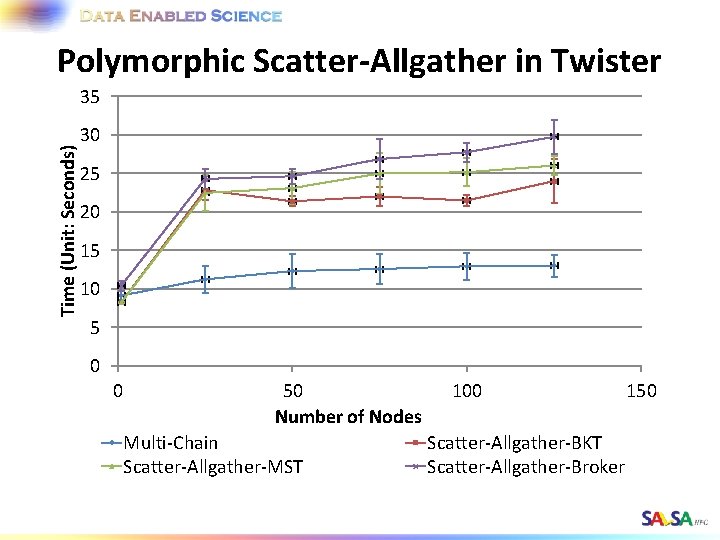

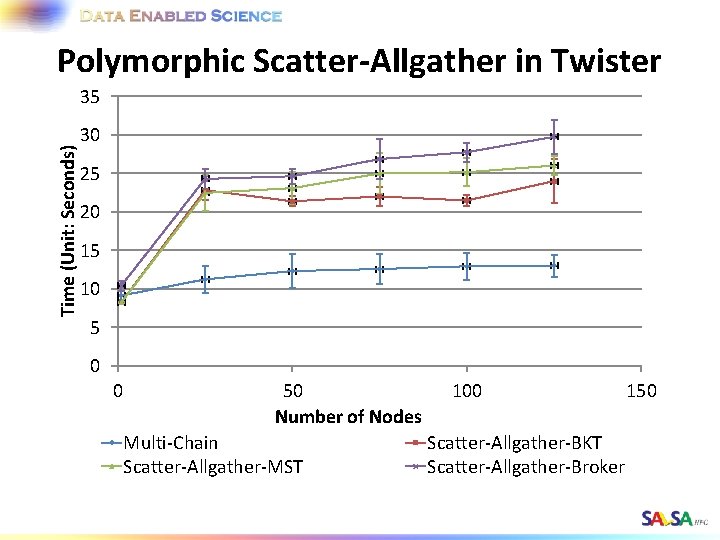

Polymorphic Scatter-Allgather in Twister (chart: Time in seconds, 0 to 35, vs. Number of Nodes, 0 to 150; series: Multi-Chain, Scatter-Allgather-MST, Scatter-Allgather-BKT, Scatter-Allgather-Broker)

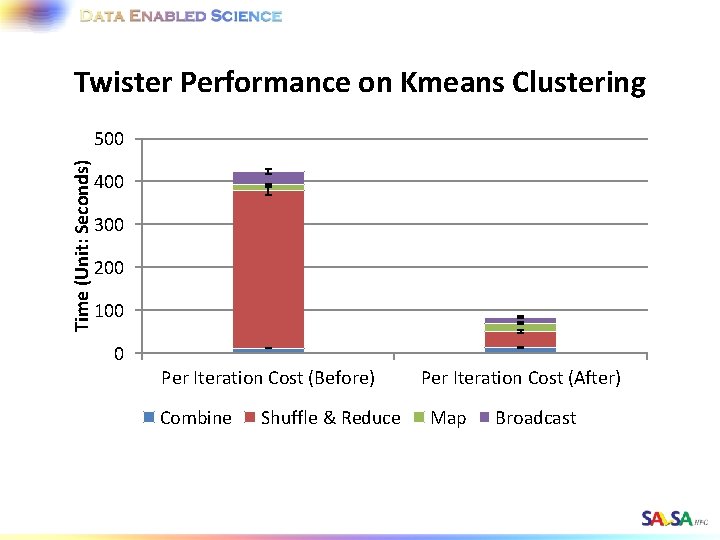

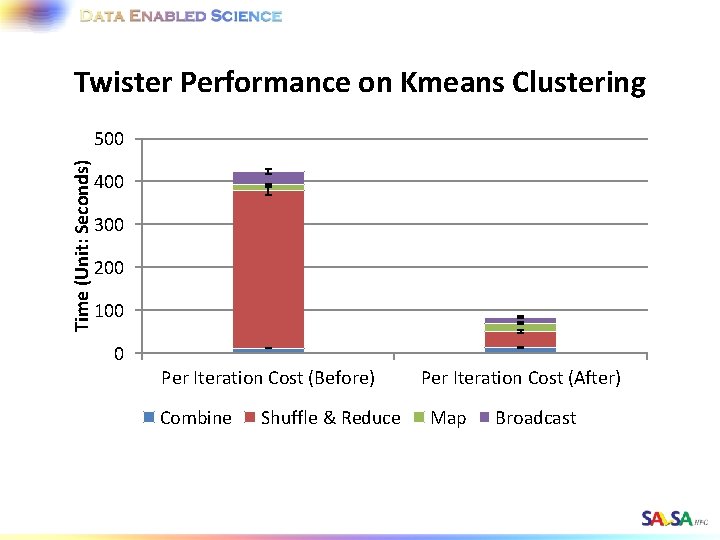

Twister Performance on KMeans Clustering (chart: Time in seconds, 0 to 500; Per Iteration Cost (Before) vs. Per Iteration Cost (After); components: Broadcast, Map, Shuffle & Reduce, Combine)

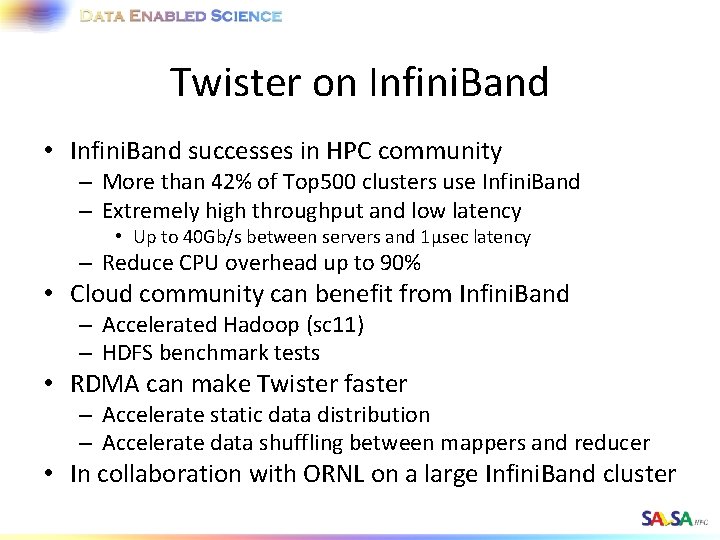

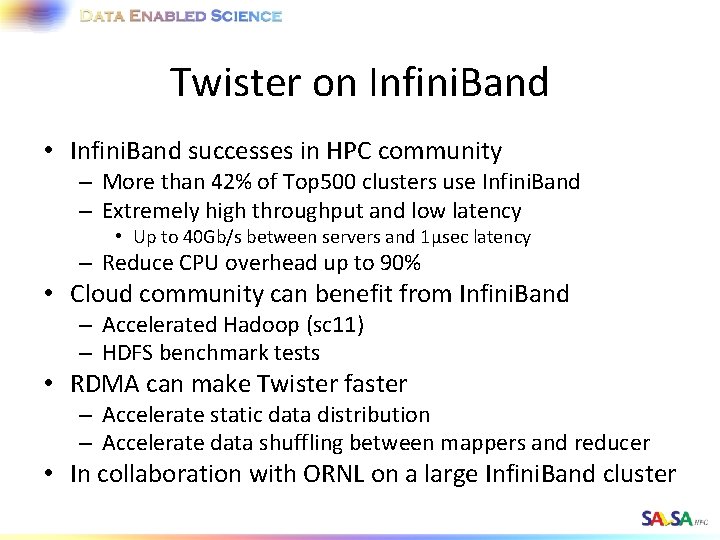

Twister on InfiniBand • InfiniBand successes in HPC community – More than 42% of Top500 clusters use InfiniBand – Extremely high throughput and low latency: up to 40 Gb/s between servers and 1 μsec latency – Reduce CPU overhead up to 90% • Cloud community can benefit from InfiniBand – Accelerated Hadoop (SC11) – HDFS benchmark tests • RDMA can make Twister faster – Accelerate static data distribution – Accelerate data shuffling between mappers and reducers • In collaboration with ORNL on a large InfiniBand cluster

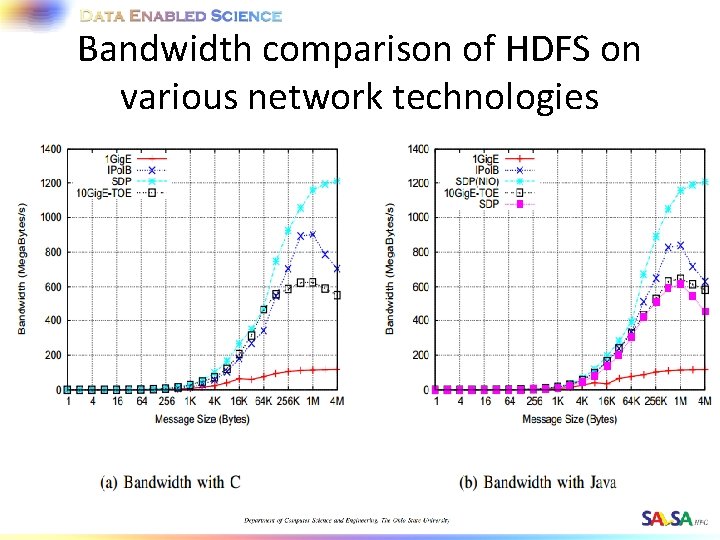

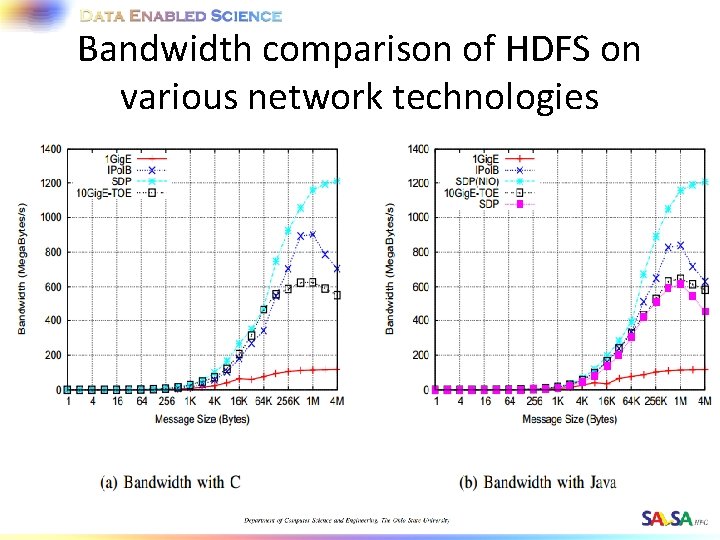

Bandwidth comparison of HDFS on various network technologies

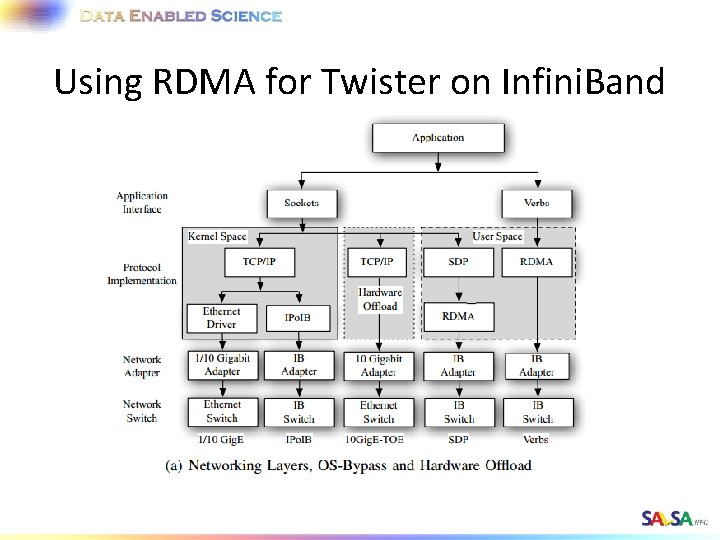

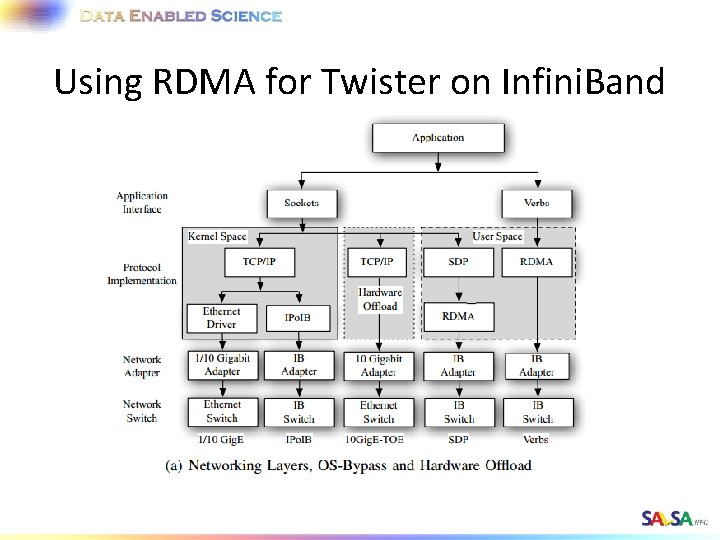

Using RDMA for Twister on InfiniBand

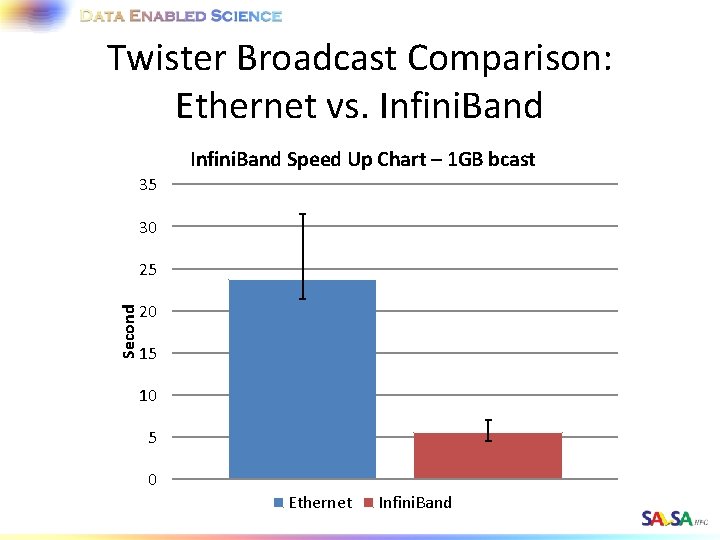

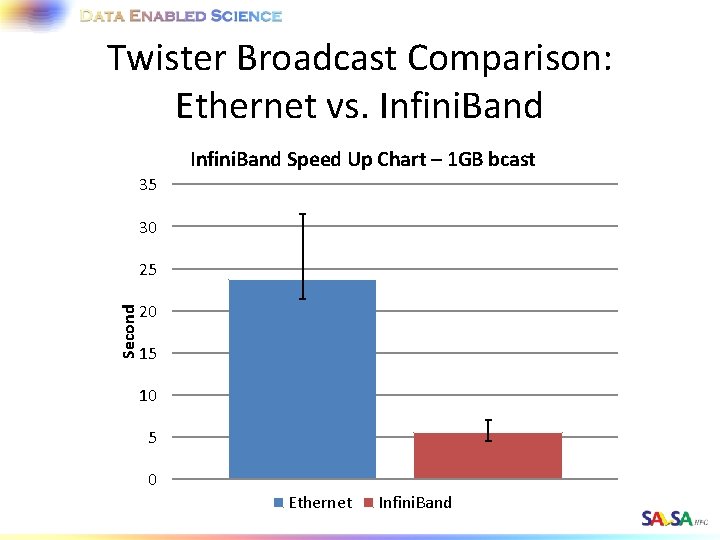

Twister Broadcast Comparison: Ethernet vs. InfiniBand (chart: InfiniBand Speed Up Chart – 1 GB bcast; Seconds, 0 to 35; bars: Ethernet, InfiniBand)

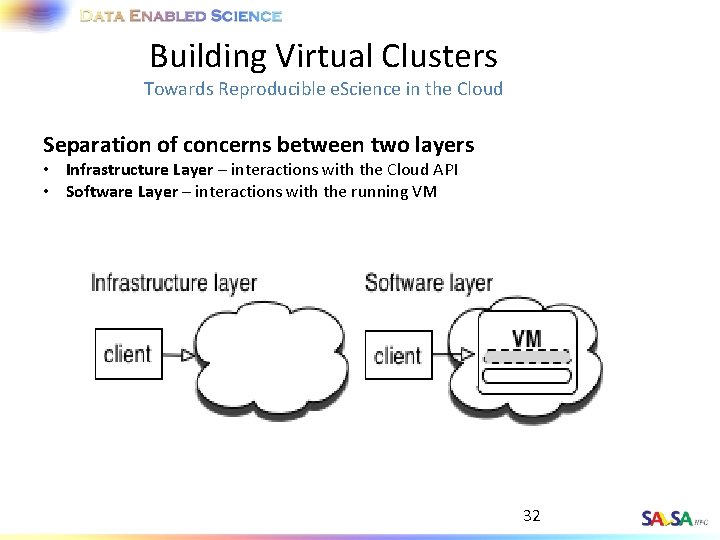

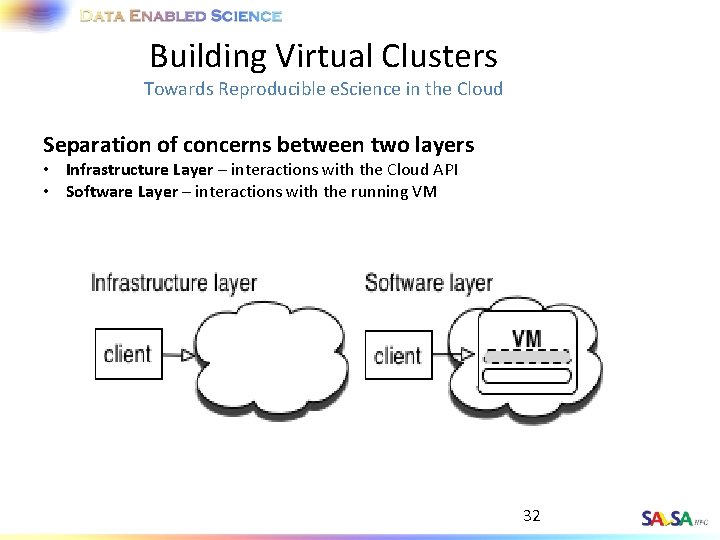

Building Virtual Clusters: Towards Reproducible eScience in the Cloud. Separation of concerns between two layers • Infrastructure Layer – interactions with the Cloud API • Software Layer – interactions with the running VM

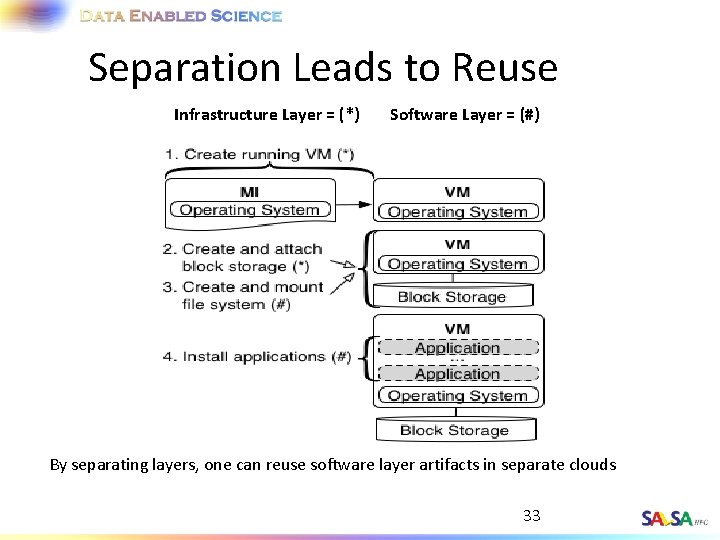

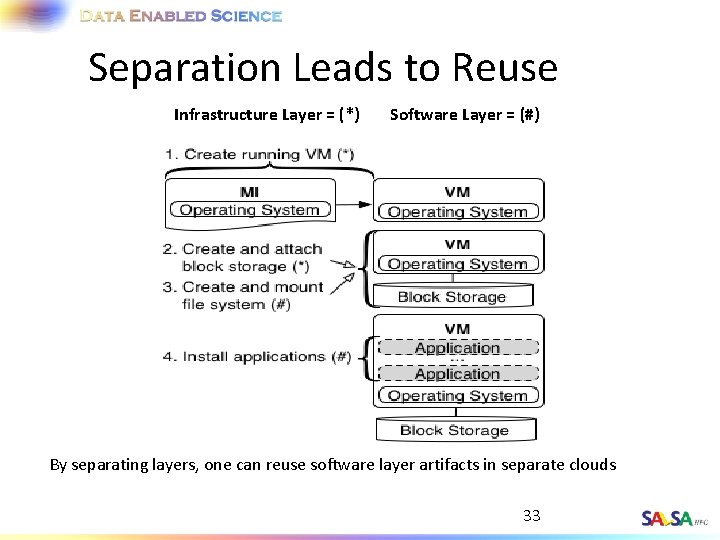

Separation Leads to Reuse • Infrastructure Layer = (*) • Software Layer = (#) • By separating layers, one can reuse software layer artifacts in separate clouds

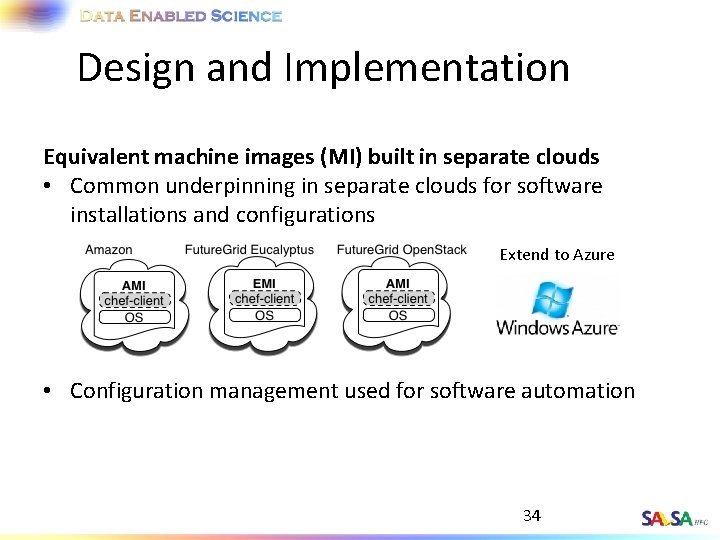

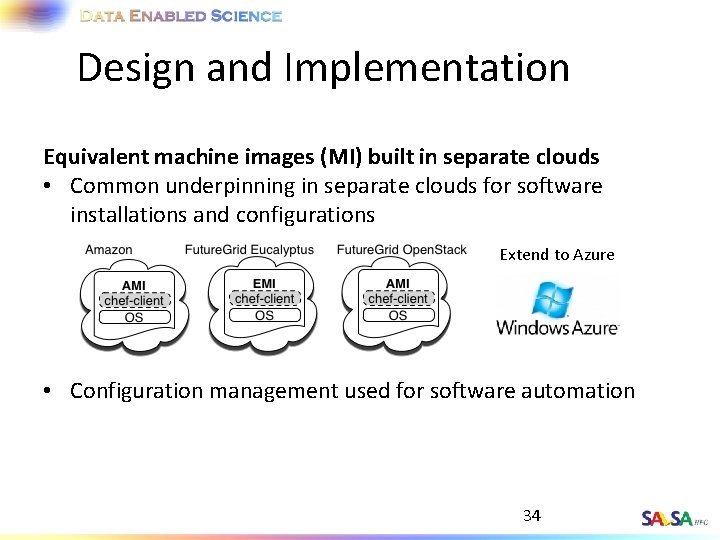

Design and Implementation. Equivalent machine images (MI) built in separate clouds • Common underpinning in separate clouds for software installations and configurations; extend to Azure • Configuration management used for software automation

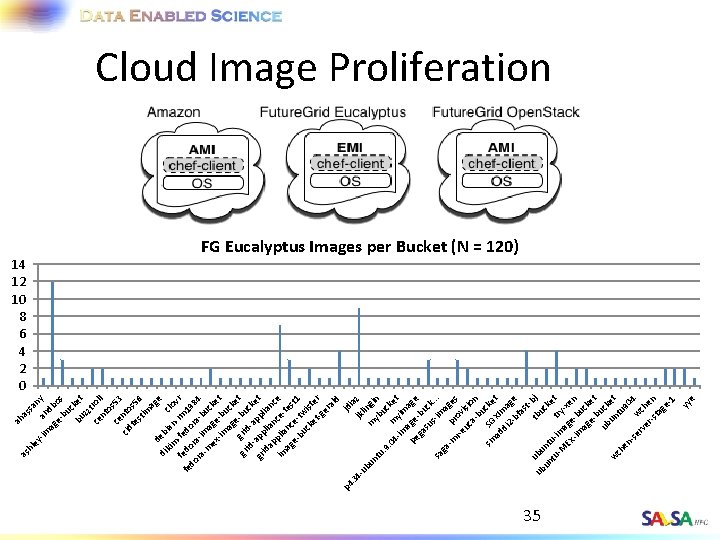

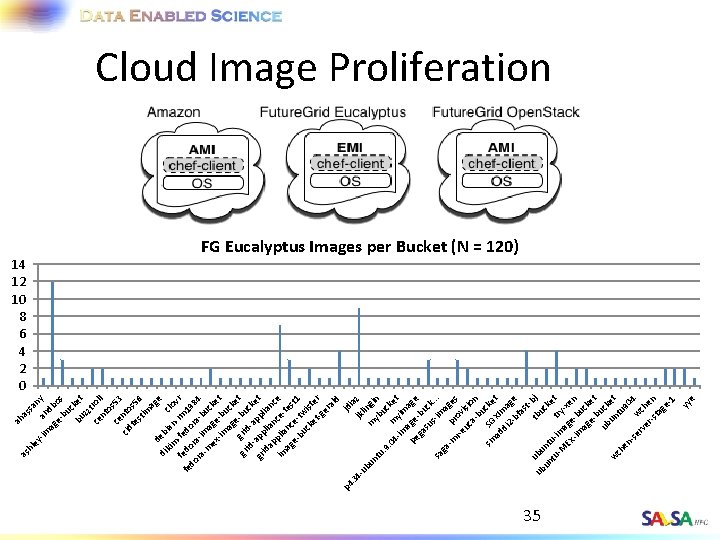

Cloud Image Proliferation (chart: FG Eucalyptus Images per Bucket, N = 120; vertical axis: image count, 0 to 14; horizontal axis: bucket names)

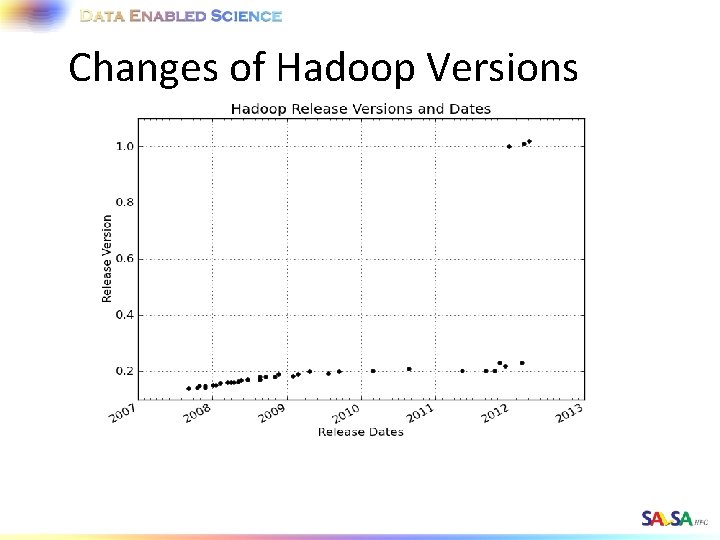

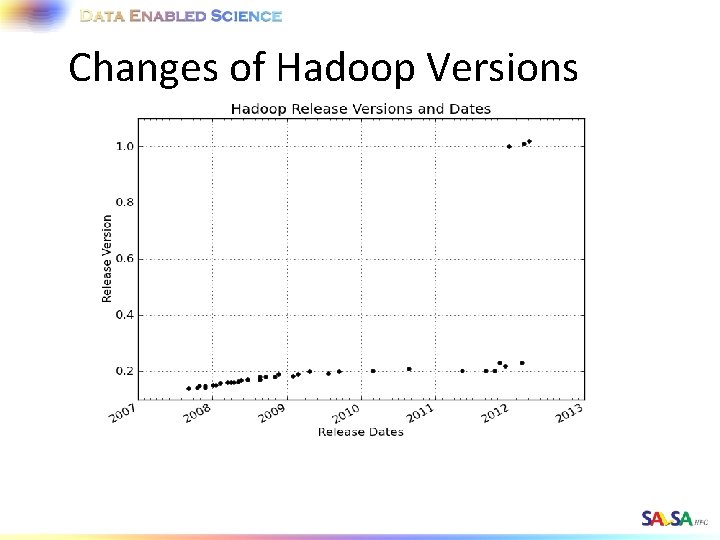

Changes of Hadoop Versions

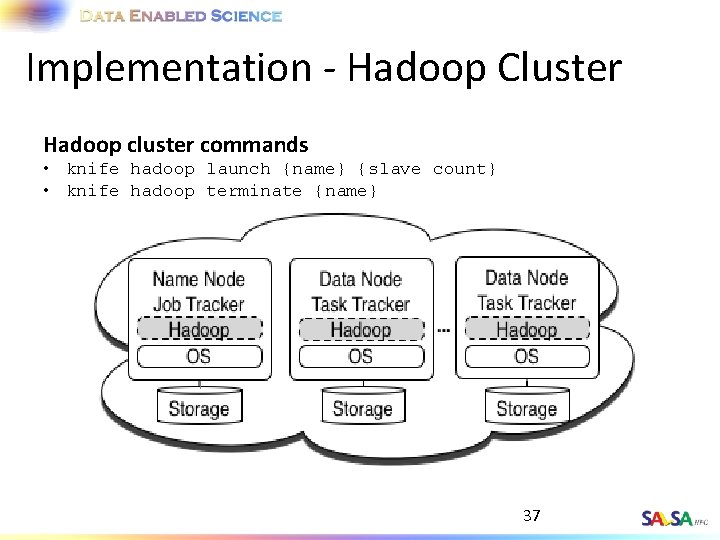

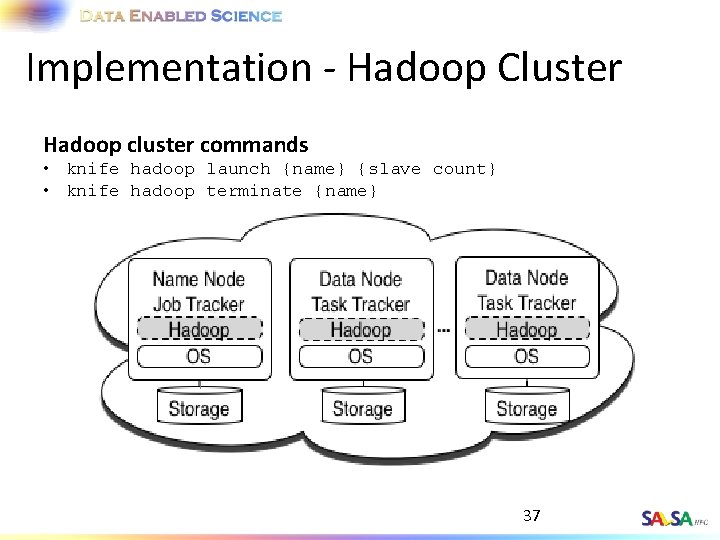

Implementation - Hadoop Cluster. Hadoop cluster commands • knife hadoop launch {name} {slave count} • knife hadoop terminate {name}

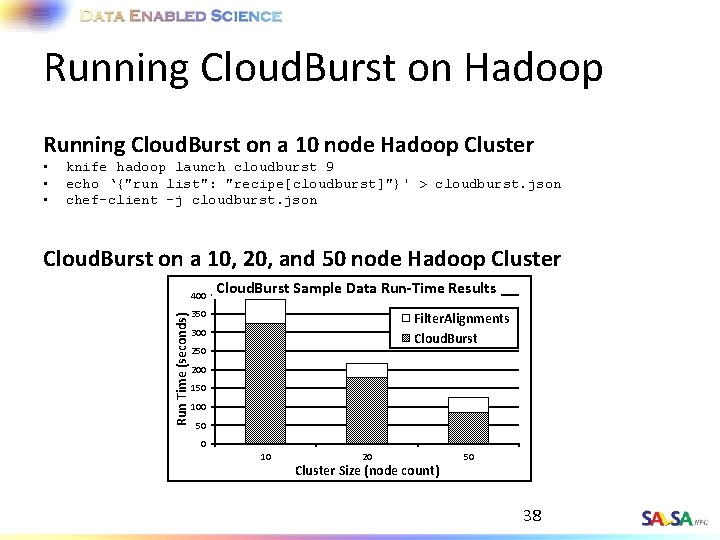

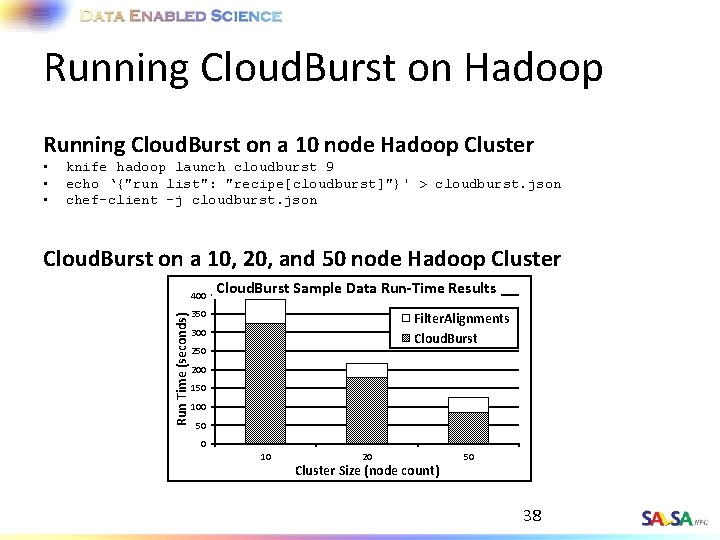

Running CloudBurst on Hadoop. Running CloudBurst on a 10 node Hadoop Cluster • knife hadoop launch cloudburst 9 • echo '{"run_list": "recipe[cloudburst]"}' > cloudburst.json • chef-client -j cloudburst.json. CloudBurst on a 10, 20, and 50 node Hadoop Cluster (chart: CloudBurst Sample Data Run-Time Results; Run Time in seconds, 0 to 400, vs. Cluster Size (node count): 10, 20, 50; series: CloudBurst, FilterAlignments)

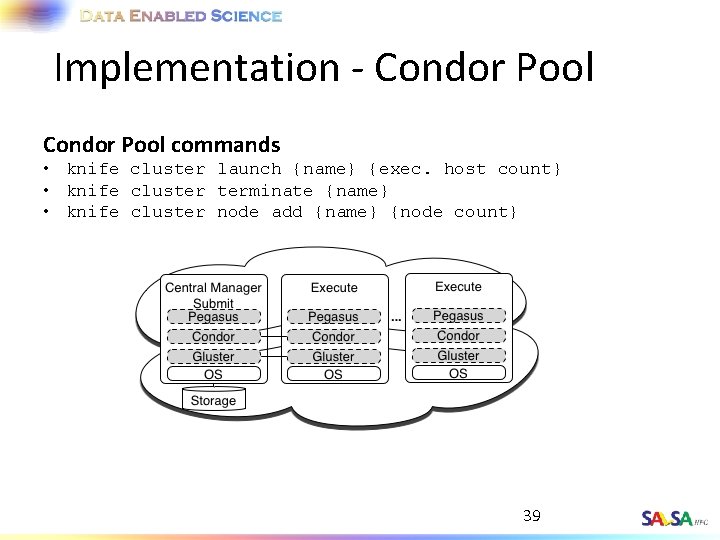

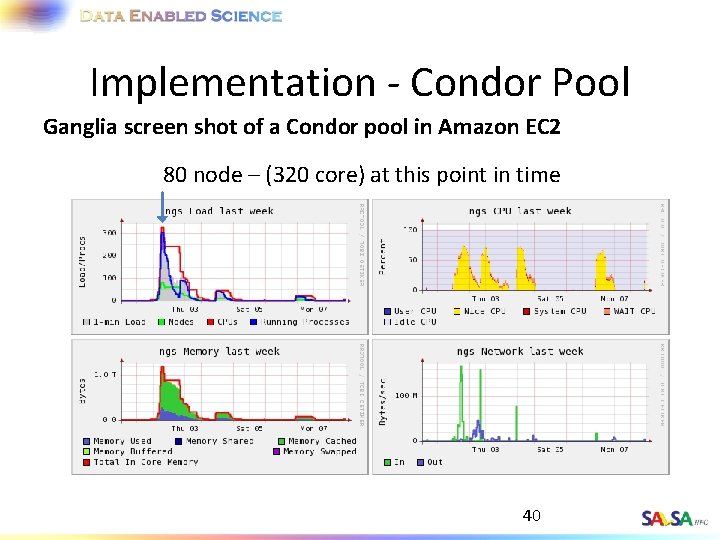

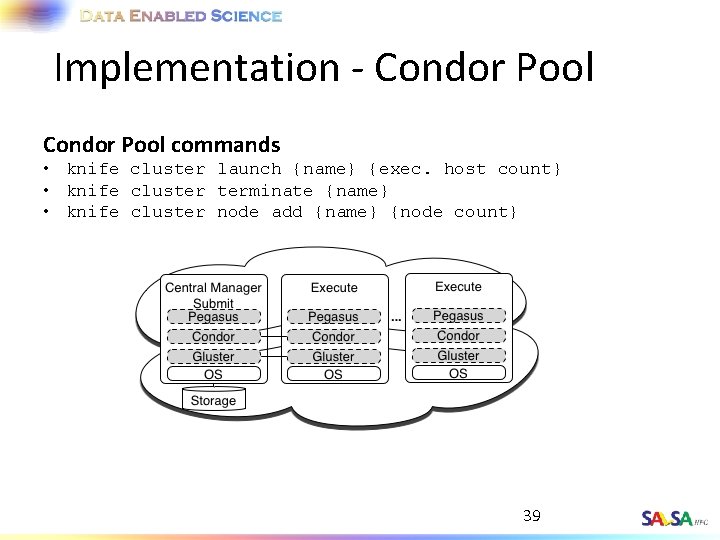

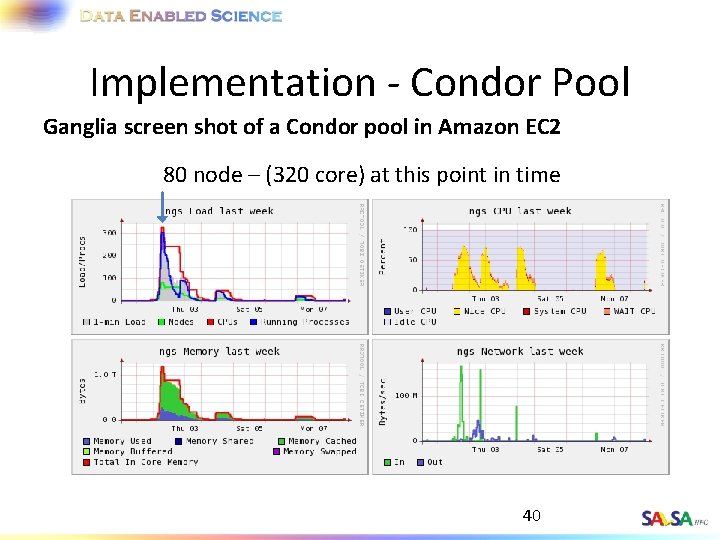

Implementation - Condor Pool commands • knife cluster launch {name} {exec host count} • knife cluster terminate {name} • knife cluster node add {name} {node count}

Implementation - Condor Pool: Ganglia screenshot of a Condor pool in Amazon EC2, 80 nodes (320 cores) at this point in time

SALSA HPC Group • http://salsahpc.indiana.edu • School of Informatics and Computing, Indiana University

References

1. M. Isard, M. Budiu, Y. Yu, A. Birrell, D. Fetterly, Dryad: Distributed data-parallel programs from sequential building blocks, in: ACM SIGOPS Operating Systems Review, ACM Press, 2007, pp. 59-72.
2. J. Ekanayake, H. Li, B. Zhang, T. Gunarathne, S. Bae, J. Qiu, G. Fox, Twister: A Runtime for iterative MapReduce, in: Proceedings of the First International Workshop on MapReduce and its Applications of ACM HPDC 2010 conference, June 20-25, 2010, ACM, Chicago, Illinois, 2010.
3. Daytona iterative map-reduce framework. http://research.microsoft.com/en-us/projects/daytona/.
4. Y. Bu, B. Howe, M. Balazinska, M. D. Ernst, HaLoop: Efficient Iterative Data Processing on Large Clusters, in: The 36th International Conference on Very Large Data Bases, VLDB Endowment, Singapore, 2010.
5. Matei Zaharia, Mosharaf Chowdhury, Michael J. Franklin, Scott Shenker, Ion Stoica, University of Berkeley. Spark: Cluster Computing with Working Sets. HotCloud'10 Proceedings of the 2nd USENIX conference on Hot topics in cloud computing. USENIX Association, Berkeley, CA, 2010.
6. Yanfeng Zhang, Qinxin Gao, Lixin Gao, Cuirong Wang, iMapReduce: A Distributed Computing Framework for Iterative Computation, Proceedings of the 2011 IEEE International Symposium on Parallel and Distributed Processing Workshops and PhD Forum, pp. 1112-1121, May 16-20, 2011.
7. Tekin Bicer, David Chiu, and Gagan Agrawal. 2011. MATE-EC2: a middleware for processing data with AWS. In Proceedings of the 2011 ACM international workshop on Many task computing on grids and supercomputers (MTAGS '11). ACM, New York, NY, USA, pp. 59-68.
8. Yandong Wang, Xinyu Que, Weikuan Yu, Dror Goldenberg, and Dhiraj Sehgal. 2011. Hadoop acceleration through network levitated merge. In Proceedings of 2011 International Conference for High Performance Computing, Networking, Storage and Analysis (SC '11). ACM, New York, NY, USA, Article 57, 10 pages.
9. Karthik Kambatla, Naresh Rapolu, Suresh Jagannathan, and Ananth Grama. Asynchronous Algorithms in MapReduce. In IEEE International Conference on Cluster Computing (CLUSTER), 2010.
10. T. Condie, N. Conway, P. Alvaro, J. M. Hellerstein, K. Elmleegy, and R. Sears. MapReduce Online. In NSDI, 2010.
11. M. Chowdhury, M. Zaharia, J. Ma, M. I. Jordan and I. Stoica, Managing Data Transfers in Computer Clusters with Orchestra, SIGCOMM 2011, August 2011.
12. M. Zaharia, M. Chowdhury, M. J. Franklin, S. Shenker and I. Stoica. Spark: Cluster Computing with Working Sets, HotCloud 2010, June 2010.
13. Huan Liu and Dan Orban. Cloud MapReduce: a MapReduce Implementation on top of a Cloud Operating System. In 11th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing, pages 464-474, 2011.
14. AppEngine MapReduce, July 25th 2011; http://code.google.com/p/appengine-mapreduce.