
- Slides: 29

Administrative Stuff

- MP 1 grades
- Friday’s class in 2, Education Building.

1/23/2022 CS 320 © U of I 2001

CS 320/ECE 392/CSE 302: Data Parallel Architectures & Message Passing Architectures

Department of Computer Science, University of Illinois at Urbana-Champaign

Contents

- Brief overview of Data Parallel Architectures
- Overview of Message Passing Architectures
- Message Passing Programming Model

References

- Culler, Singh & Gupta, Parallel Computer Architecture, Chapter 1
- Almasi & Gottlieb, Highly Parallel Computing, Chapter 4
- Pfister, In Search of Clusters

Data Parallel Programming

- Programming model
  - Operations performed in parallel on each element of a data structure
  - Logically a single thread of control that performs sequential or parallel steps
  - Conceptually, a processor is associated with each data element

Data Parallel Systems

- Architectural model
  - Array of many simple, cheap processors with little memory each
    - Processors don’t sequence through instructions
  - Attached to a control processor that issues instructions
  - Specialized and general communication, cheap global synchronization

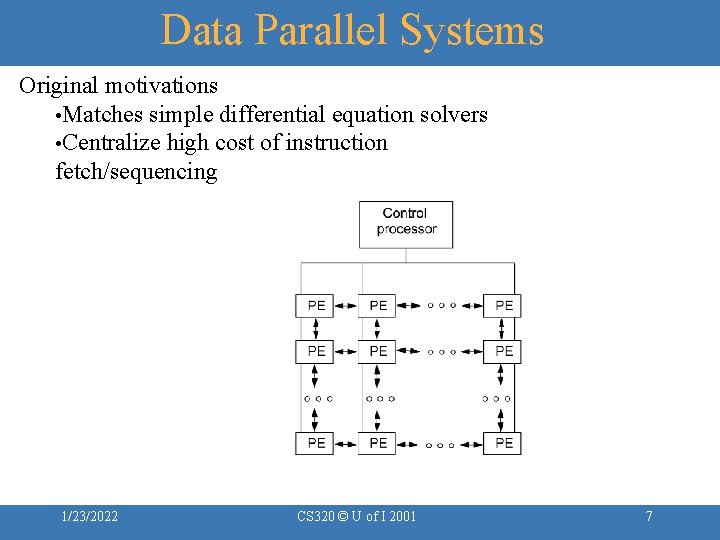

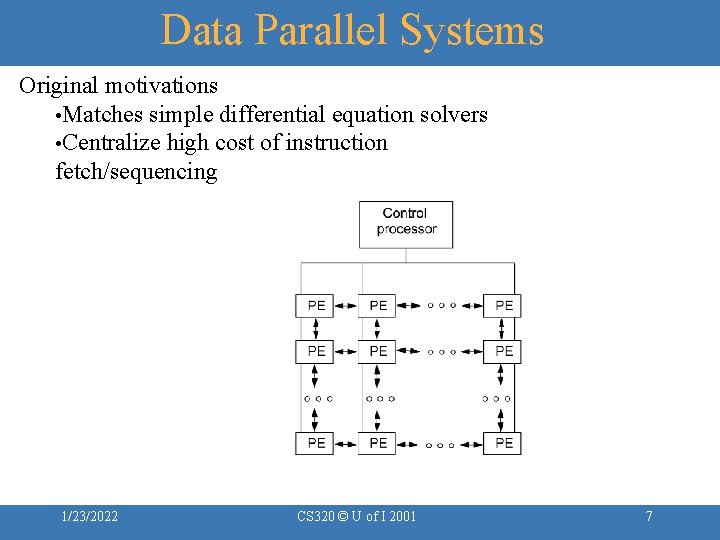

Data Parallel Systems

Original motivations:

- Matches simple differential equation solvers
- Centralizes the high cost of instruction fetch/sequencing

Application of Data Parallelism

- Each PE contains an employee record with his/her salary:
  `If salary > 100K then salary = salary * 1.05 else salary = salary * 1.10`
- Logically, the whole operation is a single step
- Some processors are enabled for the arithmetic operation, others are disabled
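
The enable/disable behavior above can be sketched in plain Python. This is only a sequential emulation under stated assumptions: each list element stands in for one PE, and the function name `raise_salaries` and the `threshold` parameter are made up for illustration.

```python
# Sequential emulation of a data-parallel conditional update.
# Each list element stands in for one processing element (PE) holding one salary.

def raise_salaries(salaries, threshold=100_000):
    # Step 1: every PE evaluates the condition, producing an enable mask.
    mask = [s > threshold for s in salaries]
    # Step 2: PEs whose mask bit is set apply the 5% raise; the rest sit idle.
    after_then = [s * 1.05 if m else s for s, m in zip(salaries, mask)]
    # Step 3: the mask is inverted and the remaining PEs apply the 10% raise.
    return [s if m else s * 1.10 for s, m in zip(after_then, mask)]


if __name__ == "__main__":
    print(raise_salaries([120_000, 80_000, 100_000]))
```

On a SIMD machine the control processor would broadcast both branches to all PEs, and the mask decides which PEs actually commit a result in each step.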

Application of Data Parallelism

- Other examples:
  - Finite differences, linear algebra, ...
  - Document searching, graphics, image processing, ...
- Some recent machines:
  - Thinking Machines CM-1, CM-2 (and CM-5)
  - MasPar MP-1 and MP-2

Example: CM-2

- The CM-2 Connection Machine from Thinking Machines Corporation was a massively parallel SIMD computing system consisting of 64K, 32K, or 16K data processors (K = 1024).
- Each data processor has 64 Kbits (8 Kbytes) of bit-addressable local memory, a bit-serial ALU that can operate on variable-length operands, four 1-bit flag registers, a router interface, a NEWS grid interface, and an I/O interface.

Example: CM-2

- Each data processor can access memory at the rate of 5 Mbits/s. A fully configured CM-2 thus has 512 Mbytes of memory that can be read or written at the rate of 300 Gbits/s.
- When 64K data processors are operating in parallel, each performing a 32-bit addition, the CM-2 parallel processing unit operates at about 2500 MIPS.
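
The aggregate figures follow from multiplying the per-processor numbers by the processor count. The short calculation below is a sketch that assumes K = 1024 for counts and memory sizes and M = 10^6 for rates; under those assumptions the bandwidth comes out near 328 Gbits/s, so the quoted 300 Gbits/s reads as a rounded figure. The function name `cm2_aggregates` is hypothetical.

```python
K = 1024


def cm2_aggregates(processors=64 * K,
                   bits_per_processor=64 * K,
                   bits_per_second_per_processor=5_000_000,
                   aggregate_mips=2500):
    """Derive machine-wide figures from per-processor figures."""
    # Total memory: processors x local memory, converted from bits to Mbytes.
    total_mbytes = processors * bits_per_processor // 8 // (K * K)
    # Aggregate memory bandwidth in Gbits/s (assumes G = 10**9).
    gbits_per_second = processors * bits_per_second_per_processor / 1e9
    # Implied 32-bit additions per second for a single bit-serial processor.
    adds_per_processor = aggregate_mips * 1_000_000 / processors
    return total_mbytes, gbits_per_second, adds_per_processor


if __name__ == "__main__":
    print(cm2_aggregates())
```

The last value shows how slow each bit-serial processor is on its own (a few tens of thousands of additions per second); the throughput comes entirely from the processor count.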

Example: CM-2

- In addition to the standard ALU, the CM-2 unit allows for optional floating point accelerator chips, each shared by a group of 32 data processors.
- Aggregate peak floating point performance of the CM-2 is 3500 MFLOPS.
- The CM-2 system was designed to operate under the programmed control of a front end computer, which may be a Sun-4 workstation, a Symbolics 3600 LISP machine, or a DEC VAX 8000 series computer.

Example: CM-2

- The front end machines provide the program development and execution environment.
- All Connection Machine programs execute in the front end; during the course of execution, the front end issues instructions to the CM-2 parallel processing unit.

Evolution and Convergence

- Rigid control structure (SIMD in Flynn taxonomy)
- Popular when cost savings of a centralized sequencer are high
  - 1960s, when a CPU was a cabinet
  - Replaced by vectors in the mid-1970s
    - More flexible w.r.t. memory layout and easier to manage
  - Revived in the mid-1980s, when 32-bit datapath slices just fit on a chip
  - No longer true with modern microprocessors

Evolution and Convergence

- Other reasons for demise
  - Simple, regular applications have good locality and can do well anyway
  - Loss of applicability due to hardwiring data parallelism
    - MIMD machines are as effective for data parallelism and more general

Evolution and Convergence

- Programming model converges with SPMD (single program, multiple data)
  - Contributes the need for fast global synchronization
  - Structured global address space, implemented with either SAS (shared address space) or MP (message passing)
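
A minimal SPMD sketch, assuming Python threads as stand-ins for processors and a shared list as the shared-address-space variant: every "processor" runs the same function, the rank only selects which data it touches, and a barrier supplies the global synchronization. The names `spmd_sum` and `program` are illustrative only.

```python
import threading


def spmd_sum(data, nprocs=4):
    partial = [0] * nprocs           # one slot per rank, shared by all ranks
    totals = [0] * nprocs
    barrier = threading.Barrier(nprocs)

    def program(rank):
        # Same program on every rank; the rank only picks the data slice.
        partial[rank] = sum(data[rank::nprocs])
        # Global synchronization: no rank proceeds until all slots are written.
        barrier.wait()
        totals[rank] = sum(partial)

    threads = [threading.Thread(target=program, args=(r,)) for r in range(nprocs)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return totals


if __name__ == "__main__":
    print(spmd_sum(list(range(10))))
```

Without the barrier, a fast rank could read `partial` before a slow rank has written its slot, which is why the model needs cheap global synchronization.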

Message Passing Architectures

- Complete computer as building block, including I/O
  - Communication via explicit I/O operations
- Programming model: directly access only the private address space (local memory); communicate via explicit messages (send/receive)

Message Passing Architectures

- High-level block diagram similar to distributed-memory SAS
  - But communication is integrated at the I/O level and needn’t be integrated into the memory system
  - Like networks of workstations (clusters), but with tighter integration
  - Easier to build than scalable SAS
- Programming model more removed from basic hardware operations
  - Library or OS intervention

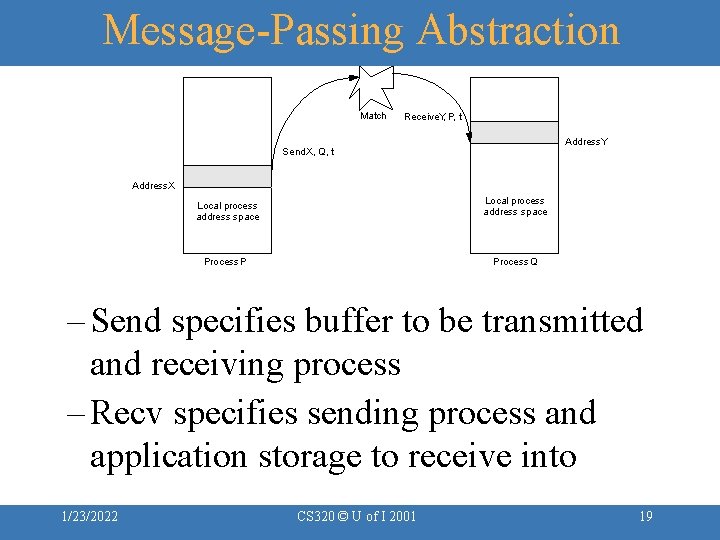

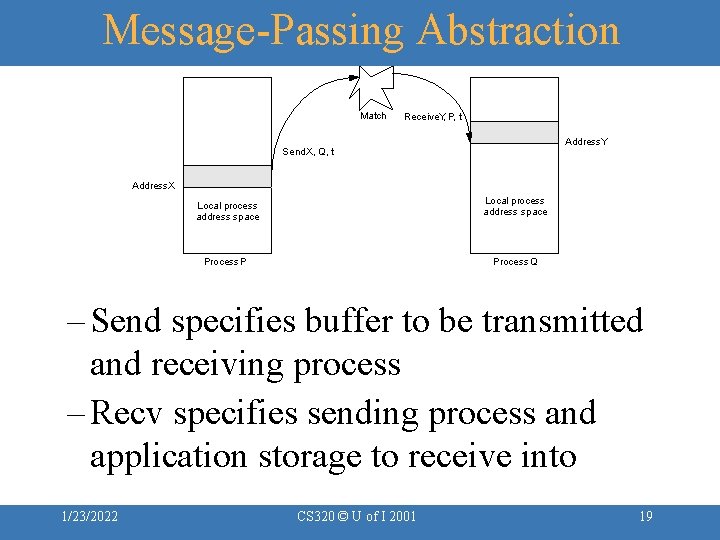

Message-Passing Abstraction

[Figure: process P executes Send X, Q, t on address X in its local process address space; process Q executes Receive Y, P, t into address Y in its own local address space; the two operations are matched.]

- Send specifies the buffer to be transmitted and the receiving process
- Recv specifies the sending process and the application storage to receive into

Message-Passing Abstraction

- Memory-to-memory copy, but need to name processes
- Optional tag on send and matching rule on receive
- User process names local data and entities in process/tag space too
- In simplest form, the send/recv match achieves a pairwise synchronization event
  - Other variants too
- Many overheads: copying, buffer management, protection
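
The matching rule can be sketched with a toy in-process message layer. This is not MPI or any real library: the `Network` class, its method signatures, and the process names are all hypothetical, threads stand in for processes, and sends are buffered so only the receive blocks.

```python
import queue
import threading
from collections import defaultdict


class Network:
    """Toy message layer: one buffered queue per (source, destination, tag)."""

    def __init__(self):
        self._lock = threading.Lock()
        self._boxes = defaultdict(queue.Queue)

    def _box(self, src, dst, tag):
        with self._lock:
            return self._boxes[(src, dst, tag)]

    def send(self, src, dst, tag, data):
        # Copy the buffer (memory-to-memory copy) so the sender can reuse it.
        self._box(src, dst, tag).put(list(data))

    def recv(self, dst, src, tag):
        # Blocks until a message from `src` with a matching tag is available.
        return self._box(src, dst, tag).get()


def demo():
    net = Network()
    received = {}

    def process_q():
        # Receive Y, P, t: storage Y, sending process P, tag t.
        received["Y"] = net.recv(dst="Q", src="P", tag=7)

    q = threading.Thread(target=process_q)
    q.start()
    x = [1, 2, 3]
    net.send(src="P", dst="Q", tag=7, data=x)   # Send X, Q, t
    q.join()
    return received["Y"]


if __name__ == "__main__":
    print(demo())
```

The copy in `send` and the per-key queues are small instances of the copying and buffer-management overheads listed above.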

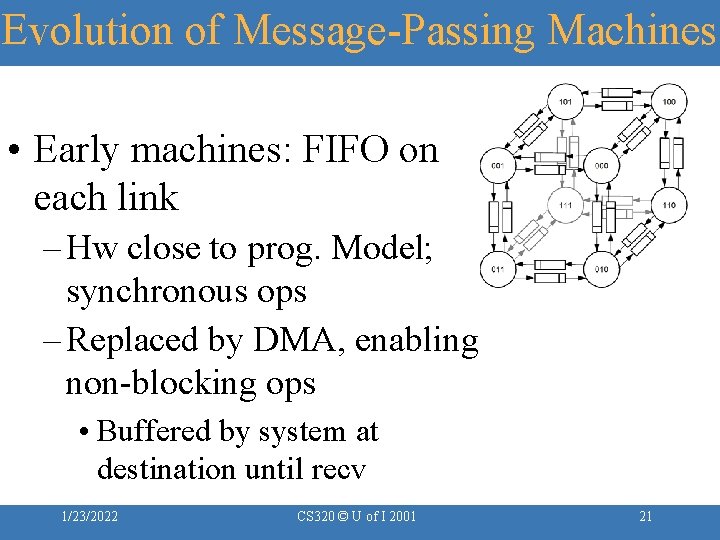

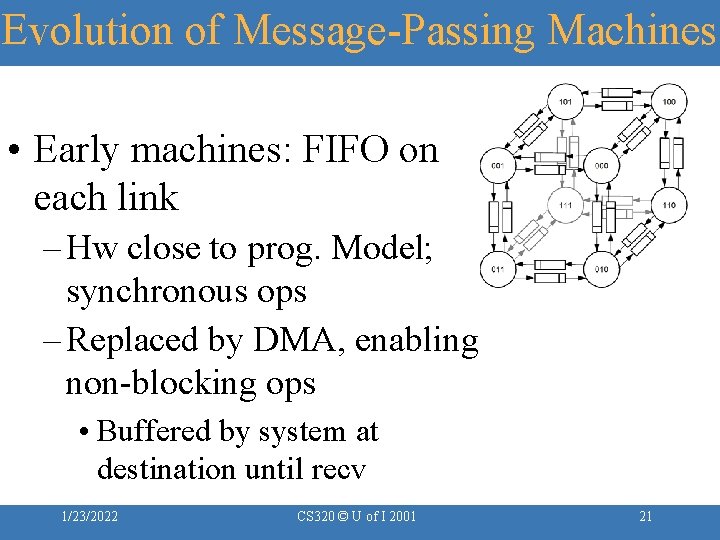

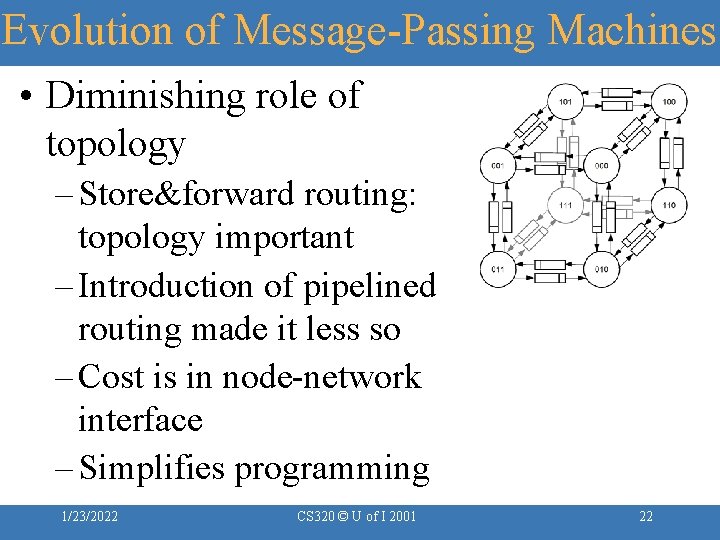

Evolution of Message-Passing Machines

- Early machines: FIFO on each link
  - Hardware close to the programming model; synchronous operations
  - Replaced by DMA, enabling non-blocking operations
    - Message buffered by the system at the destination until the recv

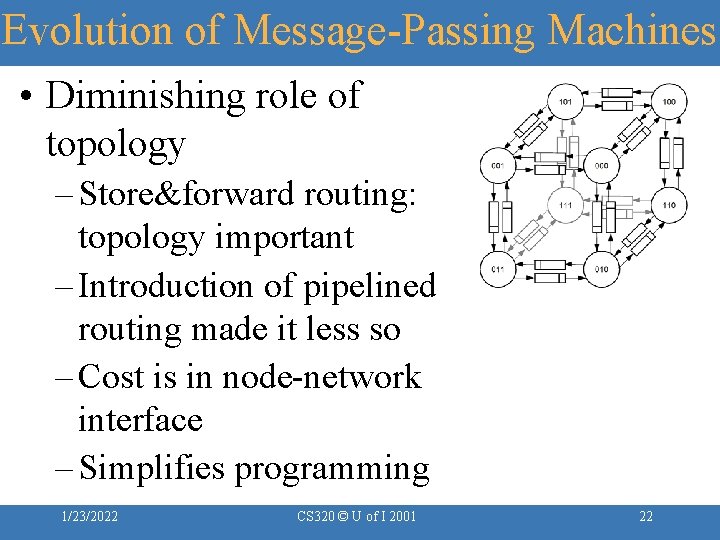

Evolution of Message-Passing Machines

- Diminishing role of topology
  - Store-and-forward routing: topology important
  - Introduction of pipelined routing made it less so
  - Cost is in the node-network interface
  - Simplifies programming
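
Why pipelined routing reduces the importance of topology can be seen in a common first-order latency model, used here as an assumption rather than something stated on the slide: store-and-forward pays the full transfer time at every hop, while pipelined (cut-through) routing pays it once plus a small per-hop delay. The function and parameter names are illustrative.

```python
def store_and_forward_time(n_bytes, hops, bandwidth, hop_delay):
    """Each hop receives the whole message before forwarding it."""
    return hops * (n_bytes / bandwidth + hop_delay)


def pipelined_time(n_bytes, hops, bandwidth, hop_delay):
    """The message streams through the switches; only the header pays per hop."""
    return n_bytes / bandwidth + hops * hop_delay


if __name__ == "__main__":
    # Arbitrary illustrative units: 1000-byte message, 100 bytes per time unit.
    for hops in (1, 10):
        print(hops,
              store_and_forward_time(1000, hops, 100, 1),
              pipelined_time(1000, hops, 100, 1))
```

Under this model the hop count multiplies the whole cost for store-and-forward but only adds a small term for pipelined routing, so where a process sits in the network matters much less.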

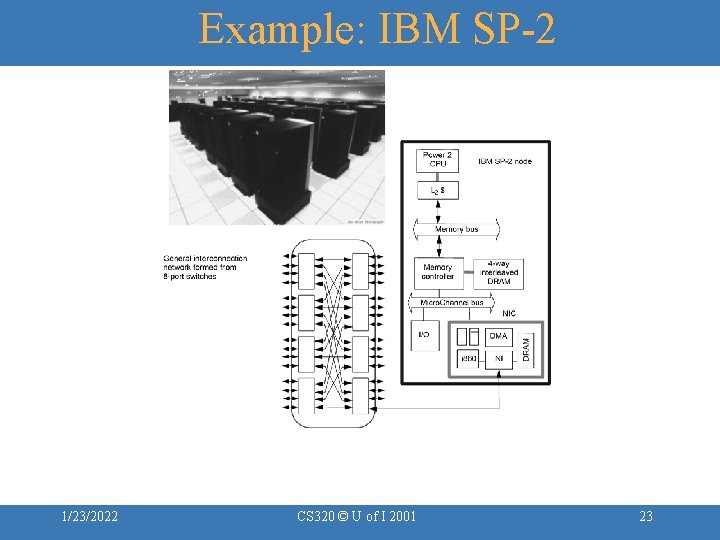

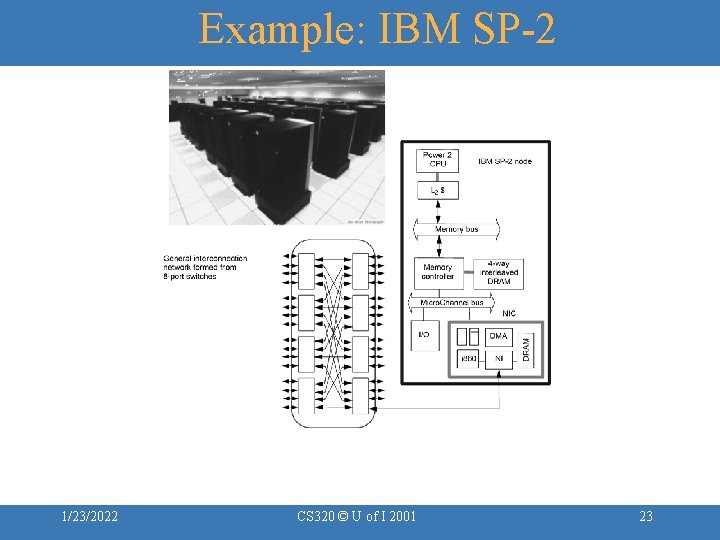

Example: IBM SP-2

Example: IBM SP-2

- Made out of essentially complete RS/6000 workstations
- Network interface integrated in the I/O bus (bandwidth limited by the I/O bus)

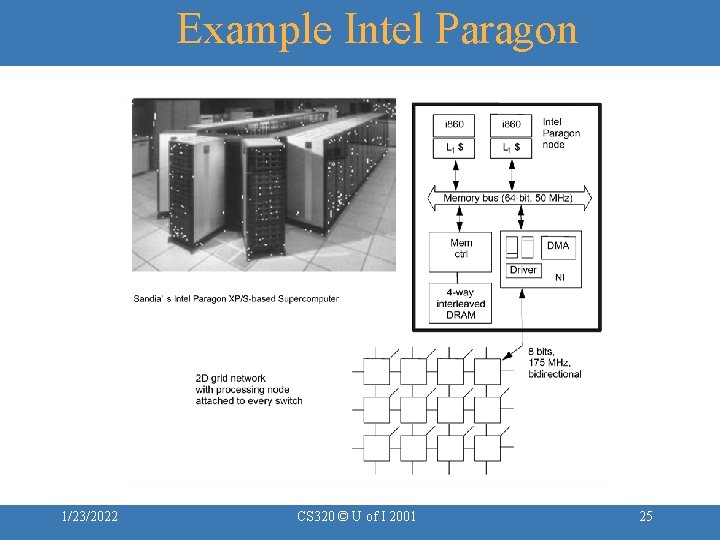

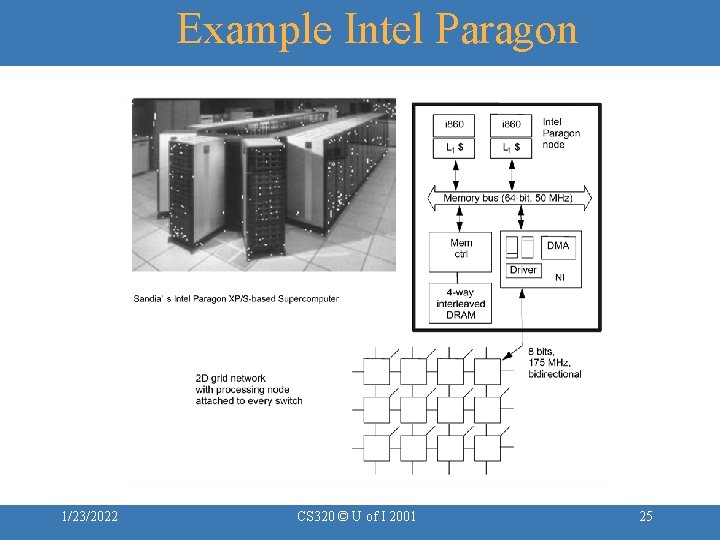

Example: Intel Paragon

Message Passing Programming Model

- Naming: processes can name private data directly
  - No shared address space
- Operations: explicit communication through send and receive
  - Send transfers data from the private address space to another process
  - Receive copies data from another process into the private address space
  - Must be able to name processes

Message Passing Programming Model

- Ordering:
  - Program order within a process
  - Send and receive can provide point-to-point synchronization between processes
  - Mutual exclusion inherent
- Can construct a global address space:
  - Process number + address within the process address space
  - But no direct operations on these names
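
One way to picture the constructed global address space is to pack the process number and the local address into a single integer. The 32-bit address width and the function names below are assumptions for illustration; the point is that such a name is only a label, and touching remote data still requires a send/receive exchange with the owning process.

```python
ADDR_BITS = 32  # assumed width of a local address


def make_global(pid, addr):
    """Build a global name from a process number and a local address."""
    if not 0 <= addr < (1 << ADDR_BITS):
        raise ValueError("local address out of range")
    return (pid << ADDR_BITS) | addr


def split_global(name):
    """Recover (process number, local address) from a global name."""
    return name >> ADDR_BITS, name & ((1 << ADDR_BITS) - 1)


def is_local(name, my_pid):
    # Only local names can be accessed directly; anything else needs a message.
    return split_global(name)[0] == my_pid


if __name__ == "__main__":
    g = make_global(3, 0x1000)
    print(split_global(g), is_local(g, 3), is_local(g, 0))
```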

Summary

- Overview of Data Parallel and Message Passing Architectures
- Message Passing Programming

What is Next?

- Message Passing Programming
- MPI
- Read chapters 2 and 3 of Using MPI