A Joint Self-Attention Model for Aspect Category Detection

- Slides: 15

A Joint Self-Attention Model for Aspect Category Detection in E-Commerce Reviews

Siyu Wang, Southwestern University of Finance and Economics, China

Ø Introduction

Aspect Category Detection (ACD) is a multi-label task that assigns aspect category labels to reviews; a single review can receive several labels. Examples:

- “The service in this restaurant is really good!” → “Service”
- “The waiters in the restaurant are very nice, but the dishes are really average!” → “Service” and “Food”
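A multi-label target like the ones in these examples is often stored as a 0/1 vector with one slot per category. The sketch below assumes the five restaurant categories commonly used with SemEval 2014; the names `CATEGORIES`, `to_multi_hot`, and `from_scores` and the 0.5 threshold are illustrative choices, not taken from the slides.

```python
# Assumed label set (not stated on the slides): five restaurant categories.
CATEGORIES = ["food", "service", "price", "ambience", "anecdotes/miscellaneous"]


def to_multi_hot(labels):
    """Encode a set of category names as a 0/1 vector over CATEGORIES."""
    unknown = set(labels) - set(CATEGORIES)
    if unknown:
        raise ValueError(f"unknown categories: {sorted(unknown)}")
    return [1 if c in labels else 0 for c in CATEGORIES]


def from_scores(scores, threshold=0.5):
    """Decode per-category scores into the set of predicted labels.

    Each category is thresholded independently, so zero, one,
    or several labels can be returned for the same review.
    """
    return {c for c, s in zip(CATEGORIES, scores) if s >= threshold}


print(to_multi_hot({"service"}))          # [0, 1, 0, 0, 0]
print(to_multi_hot({"service", "food"}))  # [1, 1, 0, 0, 0]
```

Because each slot is decided independently, the second review can carry both “Service” and “Food” at once, which a single-label softmax output could not express.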

Ø Introduction

The ACD task can be approached with lexicon-based methods or machine-learning-based methods.

- Lexicon-based methods do not require a labeled dataset, only a set of hand-designed rules. However, they cannot handle sentences with implicit semantics well.
- Machine-learning-based models usually have one of two drawbacks:
  - The word-level self-attention model is good at handling short sentences, but places a long-term memorization burden on the LSTM.
  - The sentence-level self-attention mechanism relieves the long-term memory burden, but fails to deal with short sentences.
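To make the limitation of the lexicon-based approach concrete, here is a minimal sketch of a keyword matcher. The `LEXICON` contents and the function name `detect_categories` are hypothetical; a real lexicon-based system would use far richer rules.

```python
import re

# Hypothetical hand-built lexicon: cue word -> aspect category.
LEXICON = {
    "service": "Service", "waiter": "Service", "waiters": "Service",
    "food": "Food", "dish": "Food", "dishes": "Food",
}


def detect_categories(review):
    """Return every category whose cue word appears in the review."""
    tokens = re.findall(r"[a-z]+", review.lower())
    return {LEXICON[t] for t in tokens if t in LEXICON}


explicit = "The waiters in the restaurant are very nice, but the dishes are really average!"
implicit = "We waited an hour before anyone took our order."

print(detect_categories(explicit))  # both Service and Food are found
print(detect_categories(implicit))  # empty: no cue word, although the topic is service
```

The second review is about service but contains no cue word, so the rule-based matcher returns nothing; this is the implicit-semantics case that motivates learned models.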

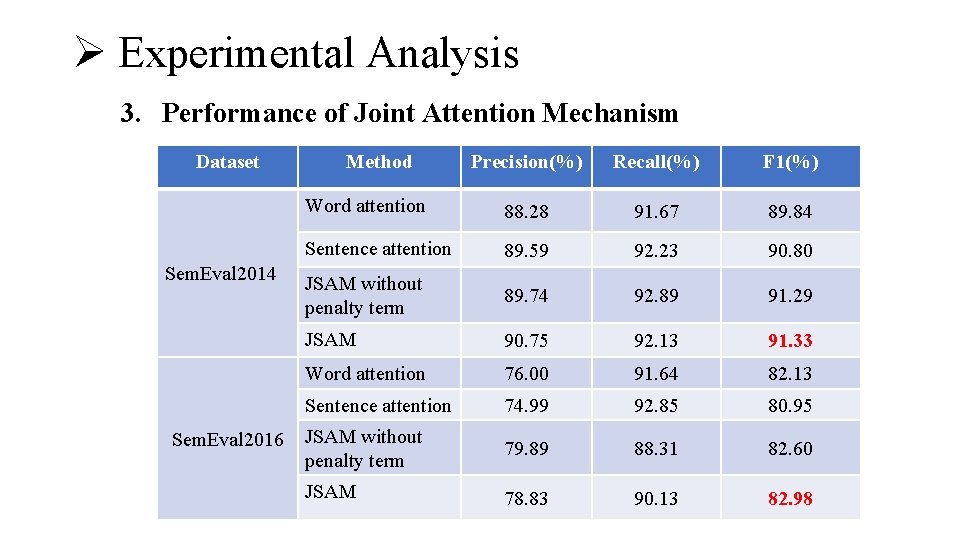

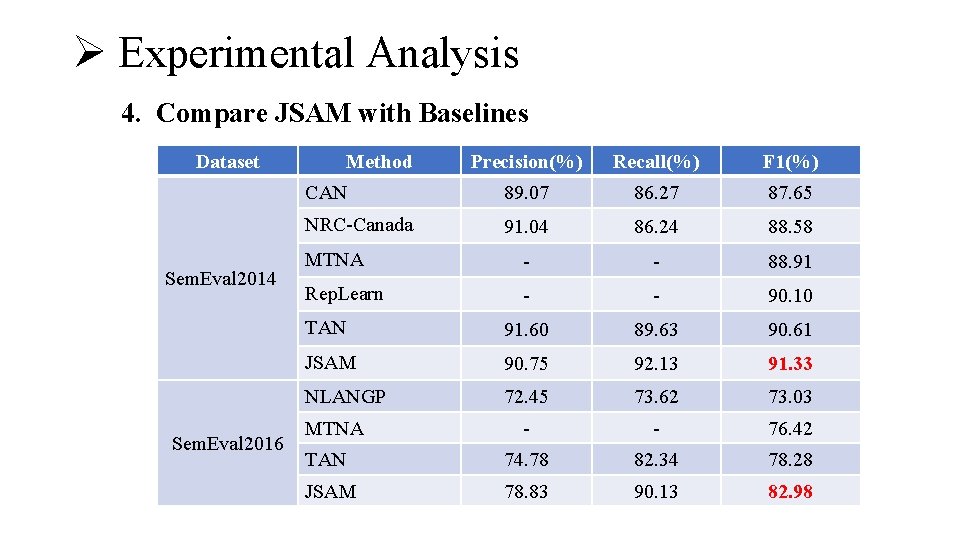

Ø Motivation

- This study develops a novel model, the Joint Self-Attention Model (JSAM), which addresses the ACD task by combining a word-level attention model with a sentence-level attention model.
- Experiments are conducted on the SemEval 2014 and SemEval 2016 datasets. JSAM reaches an F1-score of 91.33% on SemEval 2014, which is 0.72% higher than the second-best model. On SemEval 2016, its F1-score is 4.6% higher than that of the best baseline model.

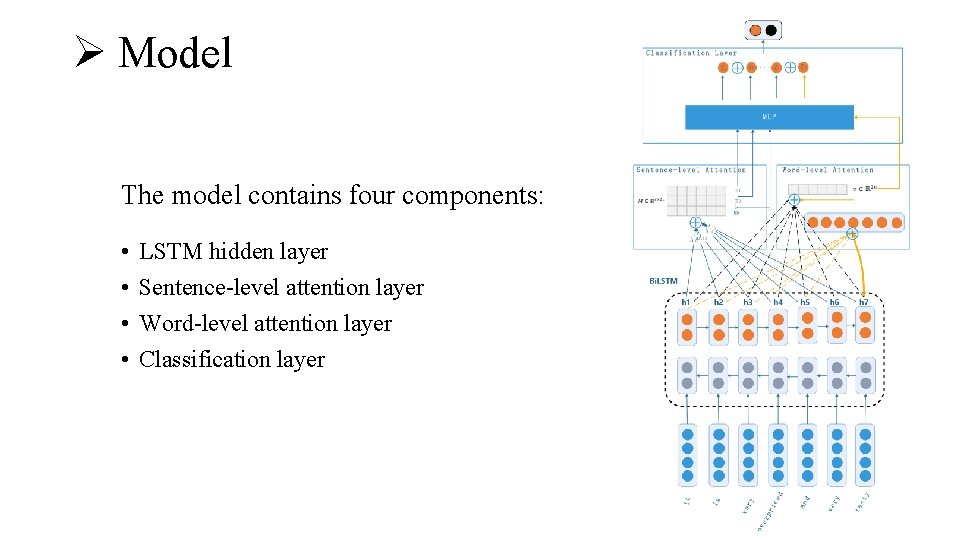

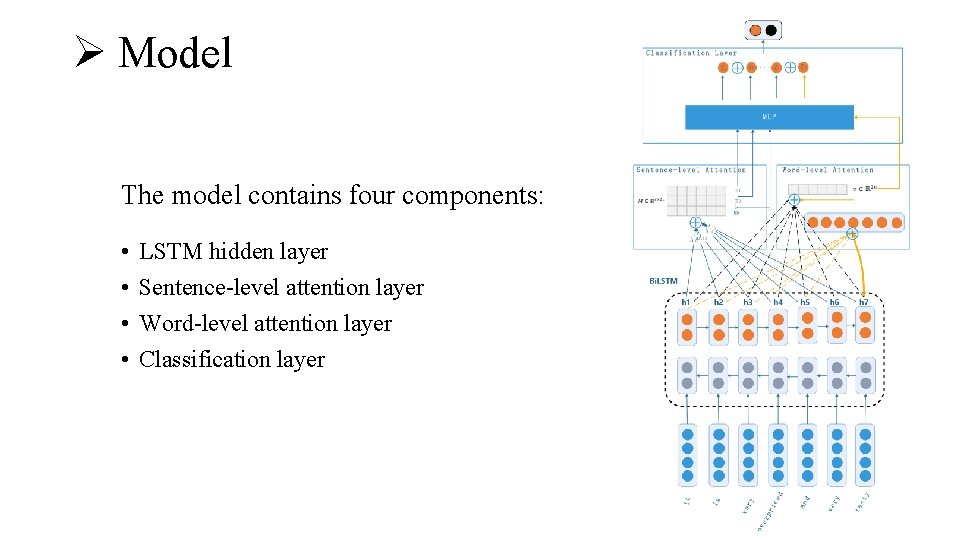

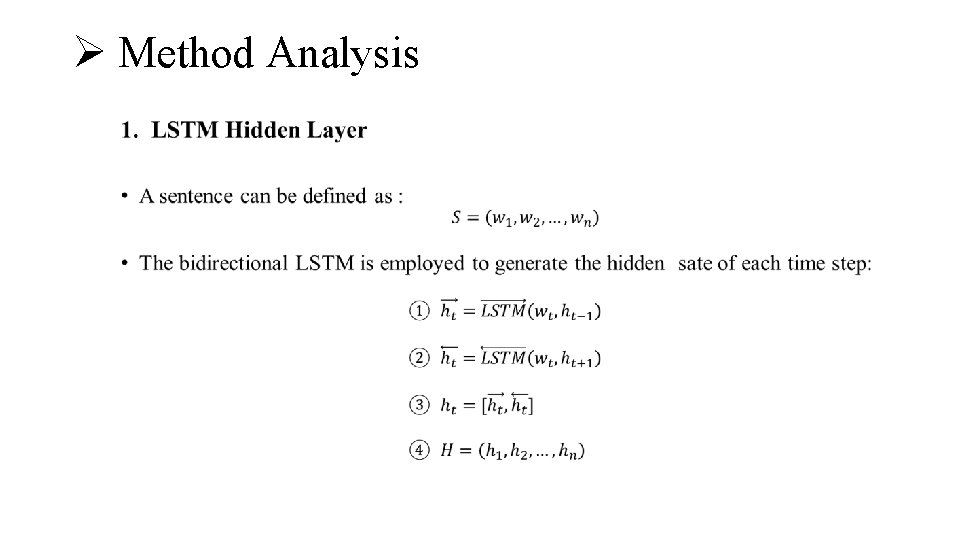

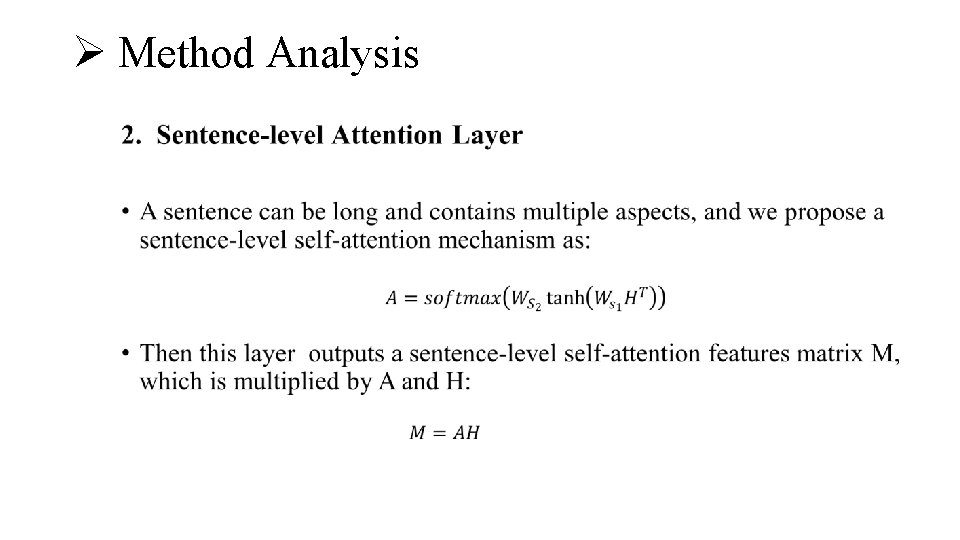

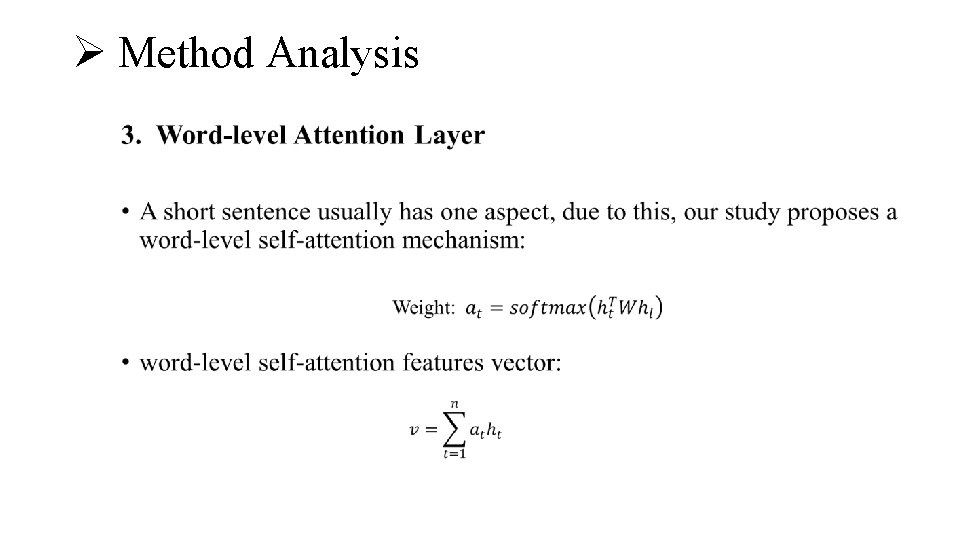

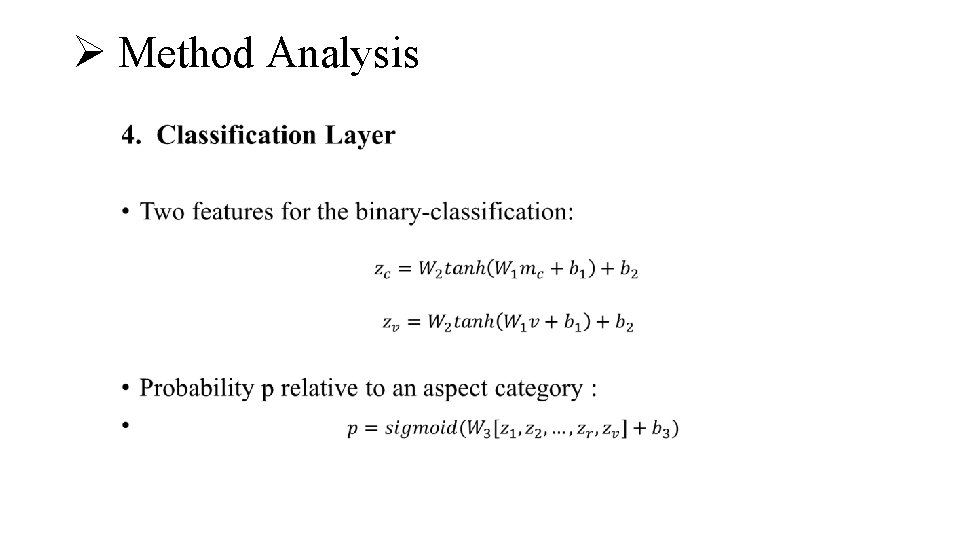

Ø Model

The model contains four components:

- LSTM hidden layer
- Sentence-level attention layer
- Word-level attention layer
- Classification layer
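The equations for the attention layers appear only in the slide images, so the exact scoring functions and the way the two attention outputs are joined cannot be recovered here. As a rough illustration of what an attention layer over LSTM hidden states does in general, the sketch below scores each hidden state, normalizes the scores with a softmax, and returns the weighted sum. The scoring function `query · tanh(h)` and the names `attention_pool`, `hidden_states`, and `query` are assumptions, not JSAM's actual formulation.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states, query):
    """Generic attention pooling (assumed form, not taken from the slides).

    hidden_states: list of T vectors, one per token (e.g. LSTM outputs).
    query: a weight vector of the same dimension (learned in a real model).
    Returns the attention weights and the weighted sum of hidden states.
    """
    scores = [sum(q * math.tanh(x) for q, x in zip(query, h)) for h in hidden_states]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    pooled = [sum(w * h[i] for w, h in zip(weights, hidden_states)) for i in range(dim)]
    return weights, pooled


# Toy input: three token states of dimension 2.
H = [[0.1, 0.0], [2.0, 1.0], [0.0, 0.3]]
q = [1.0, 1.0]
weights, pooled = attention_pool(H, q)
print(weights)  # the second token receives the largest weight
print(pooled)   # fixed-size summary vector passed on to later layers
```

In a trained model the query vector would be a learned parameter, and the pooled vector would feed the classification layer.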

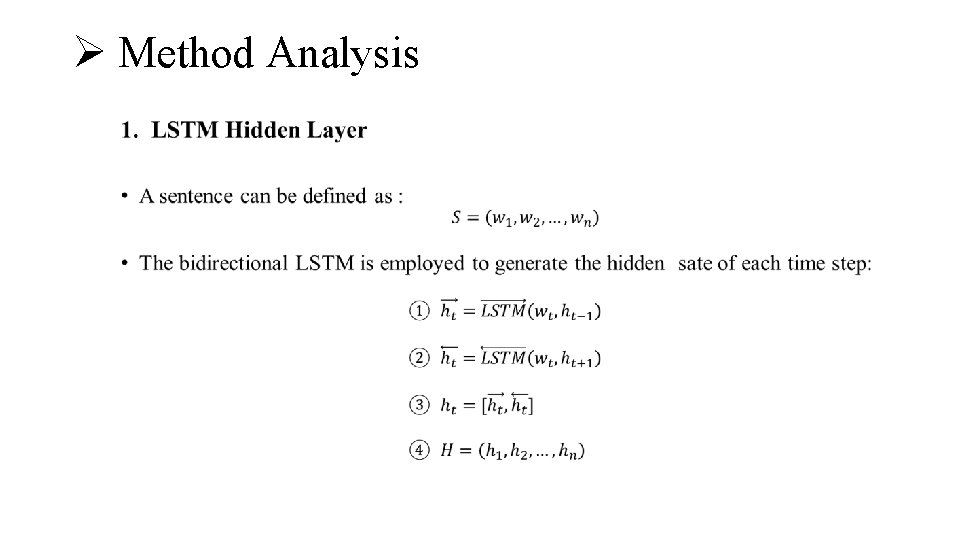

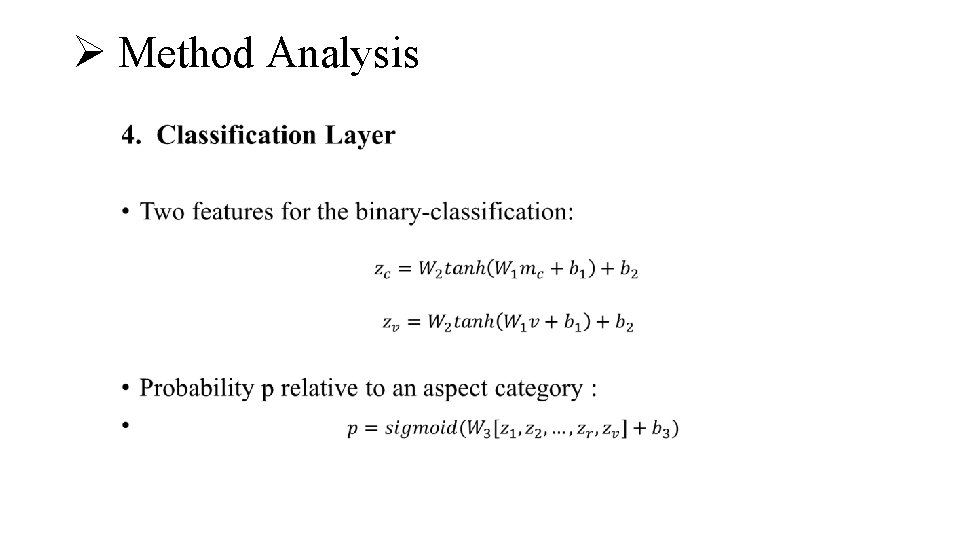

Ø Method Analysis

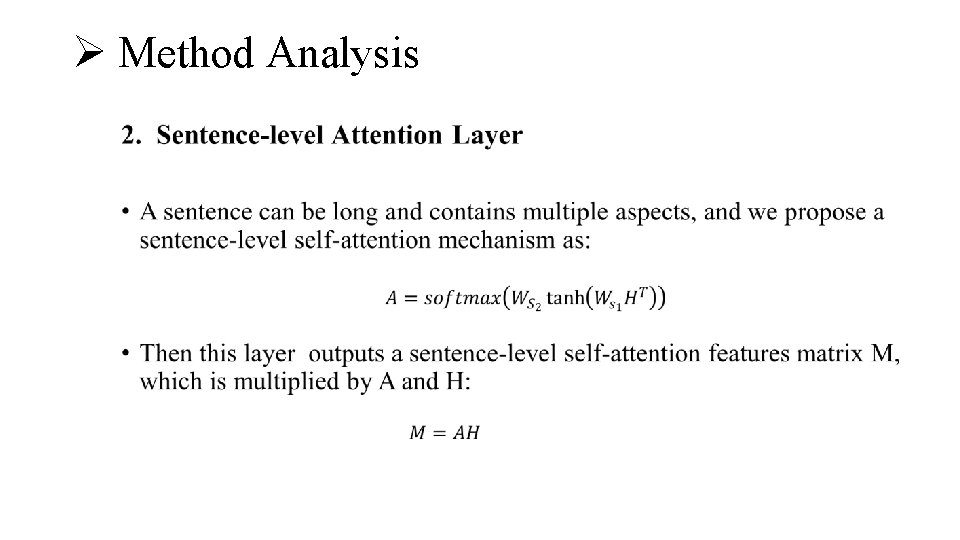

Ø Method Analysis

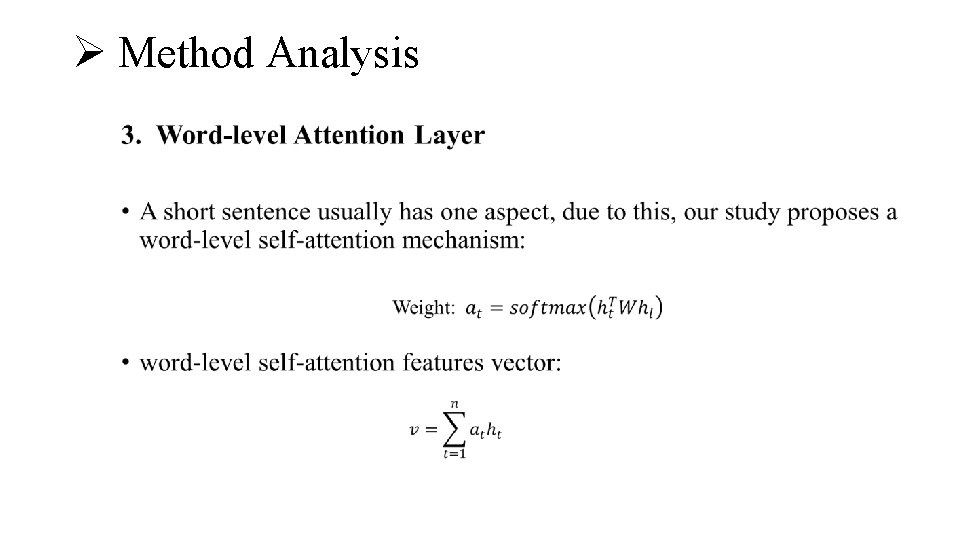

Ø Method Analysis

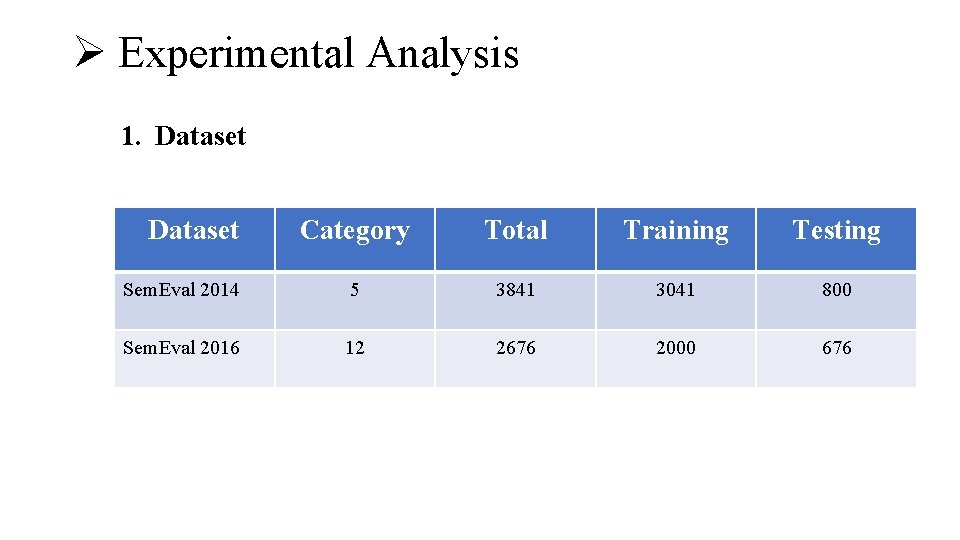

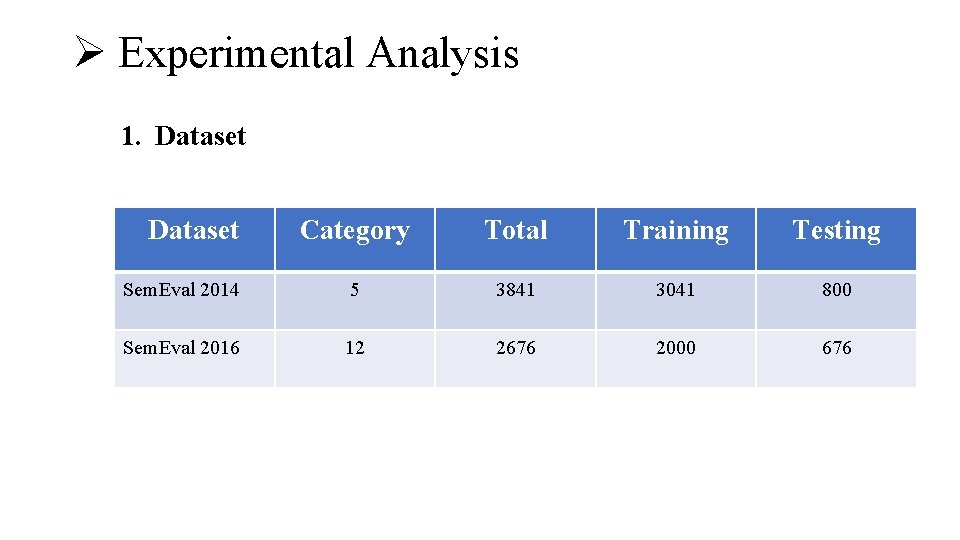

Ø Experimental Analysis

1. Dataset

| Dataset | Categories | Total | Training | Testing |
|---|---|---|---|---|
| SemEval 2014 | 5 | 3841 | 3041 | 800 |
| SemEval 2016 | 12 | 2676 | 2000 | 676 |

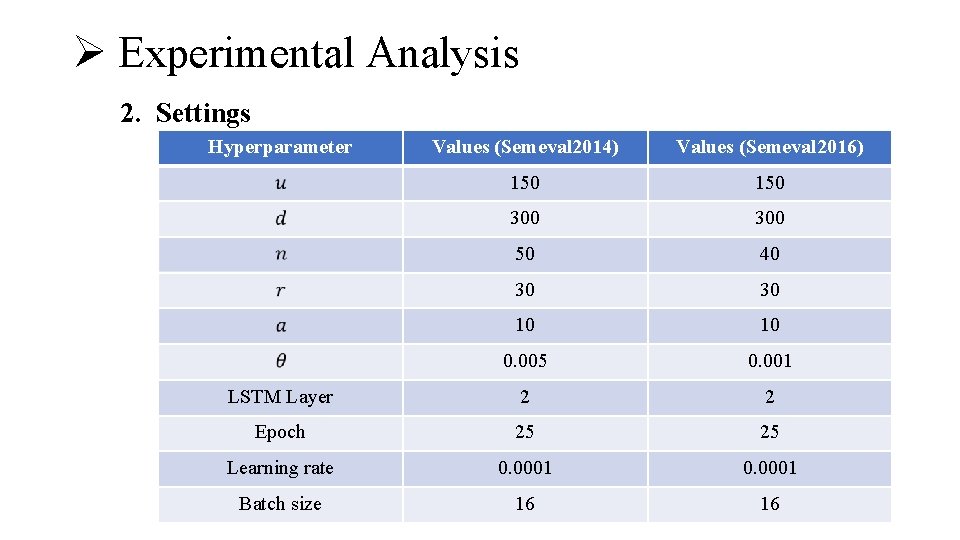

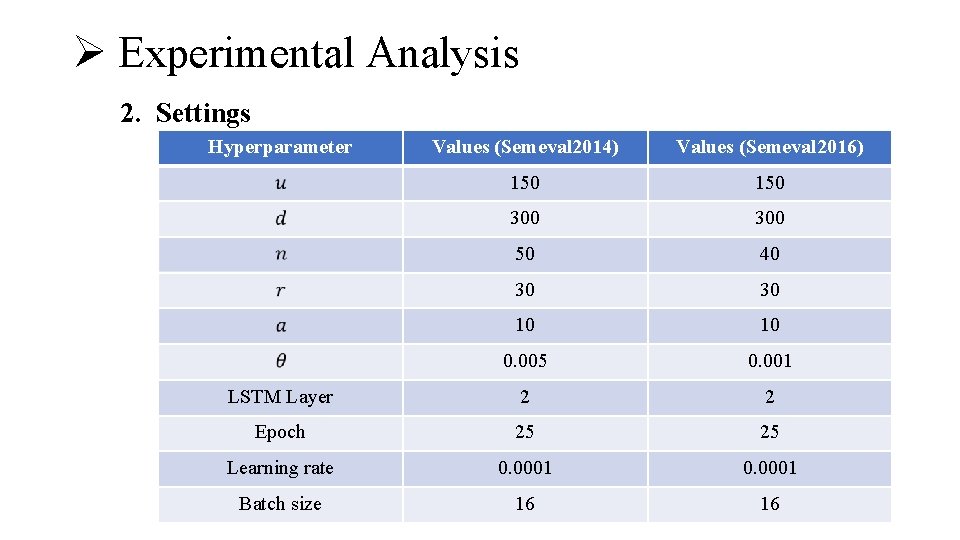

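Ø Experimental Analysis

2. Settings

| Hyperparameter | Value (SemEval 2014) | Value (SemEval 2016) |
|---|---|---|
| (label missing) | 150 | 300 |
| (label missing) | 50 | 40 |
| (label missing) | 30 | 30 |
| (label missing) | 10 | 10 |
| (label missing) | 0.005 | 0.001 |
| LSTM layers | 2 | 2 |
| Epochs | 25 | 25 |
| Learning rate | 0.0001 | |
| Batch size | 16 | 16 |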

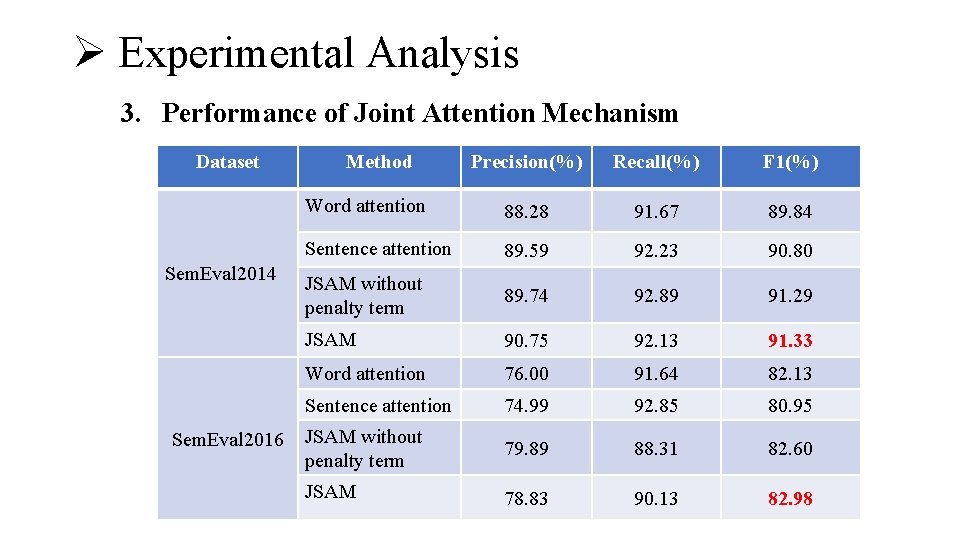

Ø Experimental Analysis

3. Performance of Joint Attention Mechanism

| Dataset | Method | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|---|
| SemEval 2014 | Word attention | 88.28 | 91.67 | 89.84 |
| SemEval 2014 | Sentence attention | 89.59 | 92.23 | 90.80 |
| SemEval 2014 | JSAM without penalty term | 89.74 | 92.89 | 91.29 |
| SemEval 2014 | JSAM | 90.75 | 92.13 | 91.33 |
| SemEval 2016 | Word attention | 76.00 | 91.64 | 82.13 |
| SemEval 2016 | Sentence attention | 74.99 | 92.85 | 80.95 |
| SemEval 2016 | JSAM without penalty term | 79.89 | 88.31 | 82.60 |
| SemEval 2016 | JSAM | 78.83 | 90.13 | 82.98 |

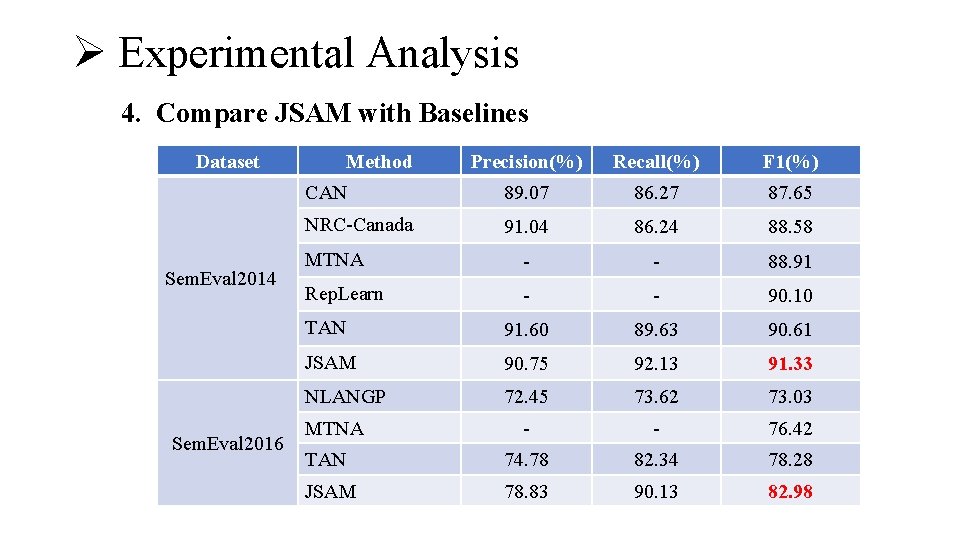

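Ø Experimental Analysis

4. Comparison of JSAM with Baselines

| Dataset | Method | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|---|
| SemEval 2014 | CAN | 89.07 | 86.27 | 87.65 |
| SemEval 2014 | NRC-Canada | 91.04 | 86.24 | 88.58 |
| SemEval 2014 | MTNA | - | - | 88.91 |
| SemEval 2014 | RepLearn | - | - | 90.10 |
| SemEval 2014 | TAN | 91.60 | 89.63 | 90.61 |
| SemEval 2014 | JSAM | 90.75 | 92.13 | 91.33 |
| SemEval 2016 | NLANGP | 72.45 | 73.62 | 73.03 |
| SemEval 2016 | MTNA | - | - | 76.42 |
| SemEval 2016 | TAN | 74.78 | 82.34 | 78.28 |
| SemEval 2016 | JSAM | 78.83 | 90.13 | 82.98 |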
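For reference, the F1-score reported alongside precision and recall is conventionally their harmonic mean. The short sketch below applies that standard formula to the CAN baseline figures; the function name `f1_score` is illustrative. Rows whose scores were averaged over categories or over runs need not reproduce exactly from their reported precision and recall.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both on the same scale)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# CAN on SemEval 2014: precision 89.07, recall 86.27.
print(round(f1_score(89.07, 86.27), 2))  # 87.65
```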

Ø Conclusion

The model leverages the advantages of two types of self-attention mechanisms to handle both long and short sentences. The experimental results demonstrate that JSAM outperforms the baselines. They also show that the penalty term added to the objective function to prevent overfitting improves performance.
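The slides do not spell out the form of the penalty term, so the following is only a generic illustration of how a penalty is added to a multi-label objective. It assumes a binary cross-entropy loss and a squared-weight (L2) penalty scaled by a coefficient `lam`; both choices, and the names `bce` and `penalized_loss`, are assumptions rather than JSAM's actual objective.

```python
import math


def bce(y_true, y_prob, eps=1e-12):
    """Mean binary cross-entropy over the category slots of one example."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


def penalized_loss(y_true, y_prob, weights, lam):
    """Data loss plus lam times an assumed L2 penalty on the weights."""
    penalty = sum(w * w for w in weights)
    return bce(y_true, y_prob) + lam * penalty


y_true = [1, 1, 0, 0, 0]
y_prob = [0.9, 0.8, 0.1, 0.2, 0.1]
weights = [0.5, -1.0, 2.0]  # stand-in for model parameters

print(penalized_loss(y_true, y_prob, weights, lam=0.0))    # plain data loss
print(penalized_loss(y_true, y_prob, weights, lam=0.005))  # data loss plus penalty
```

A larger `lam` pushes the optimizer toward smaller weights at the cost of fitting the training labels less tightly, which is the usual mechanism by which such a term limits overfitting.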

The end. Thank you!