6. Evaluation of measuring tools: validity. Psychometrics, 2012/13. Group A (English)

- Slides: 31

- The validity of the inferences that can be made from the scores subjects obtain on a test refers to the relationship that can be established between the empirical evidence obtained and the theoretical concept we hold of the construct we are trying to measure.
- No matter how reliable the measures provided by the test are, if they do not refer to what we want to measure, the scores obtained will be difficult to interpret.
- Three types of evidence are commonly used to support the validity of a test: content, construct and criterion.

Content validity

- Objective: to analyze the extent to which the items that make up the test are a relevant and representative sample of the construct about which we want to make inferences.
  - Relevant: this requires a clear and comprehensive specification of all the possible observable behaviors that are representative of the construct to be measured.
  - Representative: all of these behaviors must be represented in the test.
- It is common to use a group of experts to check:
  - That the test does not include aspects irrelevant to the domain of interest.
  - That all the important elements that define the domain are included.

- The results of the study are based on subjective judgments made by the experts.
- To specify the domain we can use a table of specifications (seen in the previous chapter).
- To assess the relevance of the items in relation to the domain (Hambleton, 1980):
  1. Present the experts with cards showing each of the items.
  2. Each expert rates, on a scale from 1 (poor fit) to 5 (excellent fit), the degree of fit of each item with its corresponding specification in the domain.
  3. Calculate the mean or median of the ratings assigned by the experts to each item. The value obtained indicates the degree of relevance of the item.
- With respect to representativeness, the ideal would be to have a bank of items related to the domain of interest and to draw a random sample of items from it.
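The averaging in step 3 can be sketched in Python as below. The item names and ratings are hypothetical, invented only to show the computation, and `item_relevance` is an illustrative helper rather than part of Hambleton's procedure.

```python
from statistics import mean, median

# Hypothetical data: for each item, the 1-5 fit ratings given by four experts
# (1 = poor fit, 5 = excellent fit with its specification in the domain).
ratings = {
    "item_1": [5, 4, 5, 5],
    "item_2": [2, 3, 1, 2],
    "item_3": [4, 4, 3, 5],
}


def item_relevance(ratings_by_item):
    """Return {item: (mean rating, median rating)} as an index of relevance."""
    return {
        item: (mean(scores), median(scores))
        for item, scores in ratings_by_item.items()
    }


for item, (avg, mid) in item_relevance(ratings).items():
    print(f"{item}: mean = {avg:.2f}, median = {mid}")
```

Low values (here `item_2`) would flag items whose fit with the domain the experts judged to be poor.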

Construct validity

- This type of validation is what gives meaning to test scores: it provides evidence that the observable behaviors chosen as indicators of the construct are valid.
- To carry out this validation we need to:
  - Carefully define the construct of interest on the basis of existing theories about it, and postulate a series of hypotheses about the nature and degree of the relationships between the construct (an unobservable latent variable) and a number of variables (directly observable behaviors), and between the construct of interest and other constructs.
  - Design an appropriate measuring instrument whose elements are relevant and representative behaviors that are specific, concrete manifestations of the construct.
  - Obtain evidence of the relationship between the test scores and the hypothesized variables.
- If the relationships postulated in the hypotheses are confirmed, as predicted by theory, we can consider both the construct and the test to be valid.

a) The multitrait-multimethod matrix

- It allows us to analyze the external structure of the test.
- Logic: the same construct is measured with different procedures, and different constructs are measured with the same procedure; once all the measures have been obtained, their intercorrelations are calculated.
- If the correlations between measures of the same construct obtained through different procedures are high, the construct is supported and there is evidence of "convergent validity".
- If the correlations between measures of different constructs obtained with the same procedure are low (lower than the convergent correlations), the construct is supported and there is evidence of "discriminant validity".

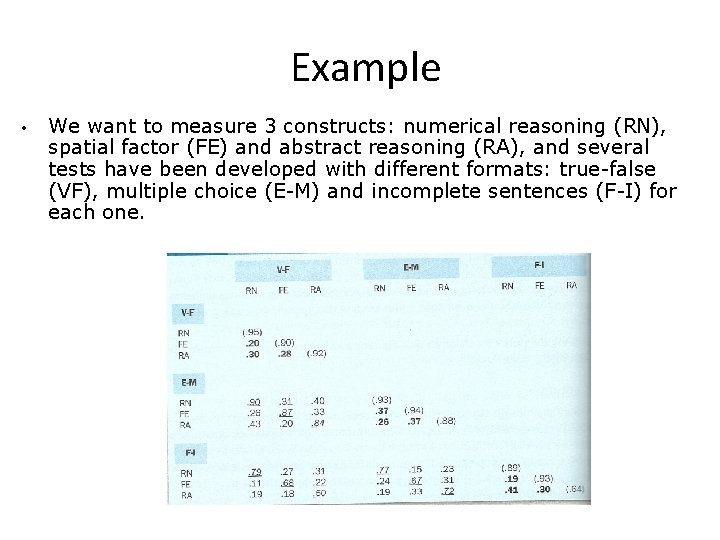

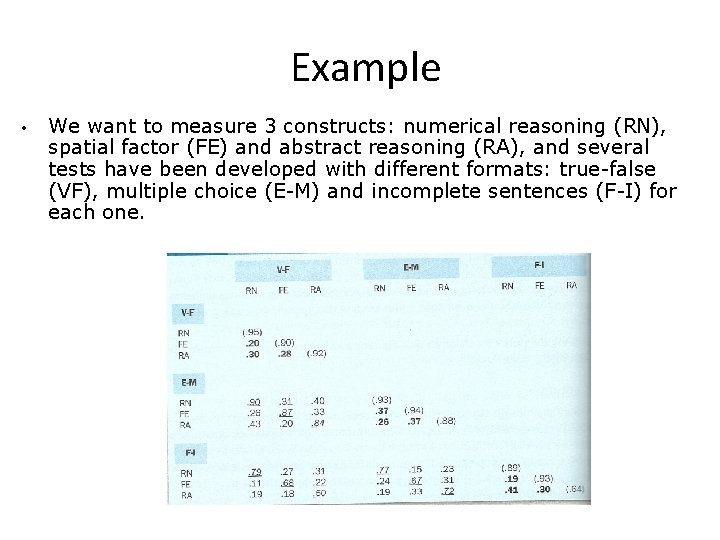

Example: we want to measure three constructs, numerical reasoning (RN), spatial factor (FE) and abstract reasoning (RA), and for each one tests have been developed in different formats: true-false (VF), multiple choice (E-M) and incomplete sentences (F-I).

- Values in parentheses on the diagonal of the matrix: reliability coefficients.
- Values in italics and underlined: correlations obtained by measuring the same construct with different procedures. The magnitude of these values provides information about convergent validity.
- Values in bold: correlations obtained by measuring different constructs with the same procedure. To see whether there is evidence of discriminant validity, we compare the convergent-validity values with those in bold. Since the former are clearly higher than the latter, there is evidence of discriminant validity.
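A minimal sketch of this comparison, assuming the matrix is stored as a list of lists and each measure is labeled with a (trait, method) pair. The correlations below are hypothetical (two traits, two methods) and do not reproduce the slide's matrix; comparing group means is a simplification of the value-by-value comparison described above.

```python
from itertools import combinations
from statistics import mean


def mtmm_summary(labels, corr):
    """Return (mean convergent r, mean heterotrait-monomethod r).

    labels: one (trait, method) pair per measure, in matrix order.
    corr:   square correlation matrix as a list of lists; the diagonal
            (reliabilities) is ignored.
    """
    convergent = []   # same trait measured with different methods
    same_method = []  # different traits measured with the same method
    for i, j in combinations(range(len(labels)), 2):
        (trait_i, method_i), (trait_j, method_j) = labels[i], labels[j]
        if trait_i == trait_j and method_i != method_j:
            convergent.append(corr[i][j])
        elif trait_i != trait_j and method_i == method_j:
            same_method.append(corr[i][j])
    return mean(convergent), mean(same_method)


# Hypothetical 2 traits x 2 methods matrix (not the slide's data).
labels = [("RN", "VF"), ("RN", "E-M"), ("FE", "VF"), ("FE", "E-M")]
corr = [
    [0.90, 0.70, 0.30, 0.10],
    [0.70, 0.88, 0.15, 0.25],
    [0.30, 0.15, 0.85, 0.65],
    [0.10, 0.25, 0.65, 0.92],
]
conv, disc = mtmm_summary(labels, corr)
print(f"convergent: {conv:.3f}, same method: {disc:.3f}")
print("evidence of discriminant validity:", conv > disc)
```

With these invented values the mean convergent correlation (0.675) exceeds the mean same-method correlation (0.275), the pattern read as evidence of discriminant validity.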

b) Factor analysis

- It allows us to test hypotheses about the internal structure of the construct and its relationships with other variables.
- It allows us to discover the structure underlying the scores obtained by subjects on the various test items or on a set of tests.
- Exploratory factor analysis: there are no prior hypotheses about the number of dimensions underlying the construct; the technique itself provides this information.
- Confirmatory factor analysis: there are prior hypotheses about the underlying structure and the number of dimensions, and the technique is used to check whether the proposed hypotheses can be accepted.

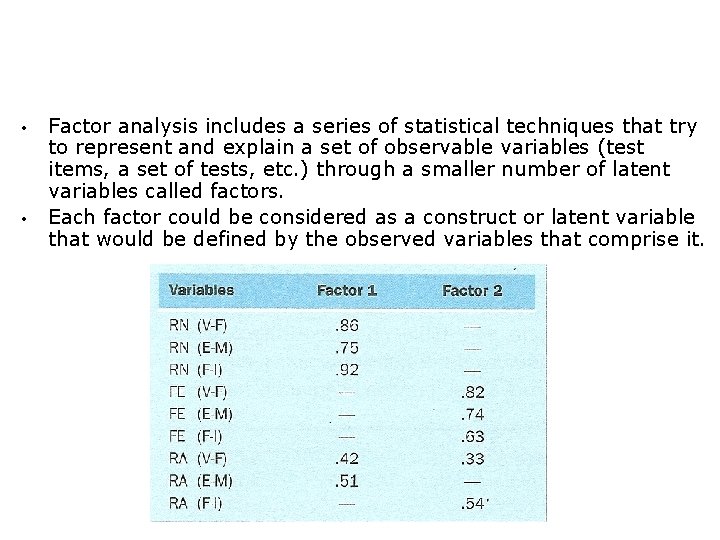

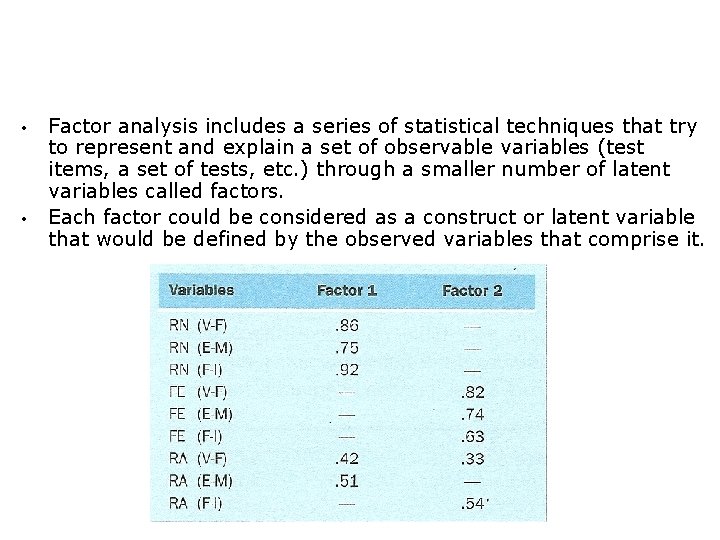

- Factor analysis comprises a series of statistical techniques that try to represent and explain a set of observable variables (test items, a set of tests, etc.) through a smaller number of latent variables called factors.
- Each factor can be considered a construct or latent variable, defined by the observed variables that make it up.
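To illustrate how many observed variables can be summarized by fewer factors, the sketch below builds the correlation matrix implied by a hypothetical two-factor model for six items and inspects its eigenvalues with NumPy. The loadings are invented, and the "eigenvalue greater than 1" rule used to count factors is a common exploratory heuristic (Kaiser's criterion), not something stated in these slides; a real analysis would estimate the loadings from observed data.

```python
import numpy as np

# Hypothetical loadings: six observed items, the first three driven by
# factor 1 and the last three by factor 2.
loadings = np.array([
    [0.8, 0.0],
    [0.8, 0.0],
    [0.8, 0.0],
    [0.0, 0.8],
    [0.0, 0.8],
    [0.0, 0.8],
])

# Correlation matrix implied by the factor model: the common part
# (loadings @ loadings.T) plus each item's unique variance on the diagonal.
common = loadings @ loadings.T
uniqueness = np.diag(1.0 - np.diag(common))
corr = common + uniqueness

# Eigenvalues of the correlation matrix, largest first.
eigenvalues = np.sort(np.linalg.eigvalsh(corr))[::-1]
print(np.round(eigenvalues, 2))  # two large values, four small ones

# Heuristic (assumption): count eigenvalues above 1 as retained factors.
n_factors = int(np.sum(eigenvalues > 1.0))
print("factors retained (eigenvalue > 1):", n_factors)
```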

Criterion validity

- This type of validation aims to obtain evidence about the extent to which test scores can be used effectively to make inferences about the subjects' actual behavior on a criterion that cannot be measured directly.
- How the relationship between the test and the criterion is analyzed depends on many factors, such as the complexity of the criterion and the difficulty of defining it clearly.
- When the criterion measure is obtained after the test: predictive validity (appropriate for selecting or classifying people, for example for jobs).
- When the criterion measure is obtained at the same time as the test: concurrent validity (appropriate for diagnosis).

Statistical procedures for criterion validity

A single predictor test and a single criterion indicator: correlation and simple linear regression

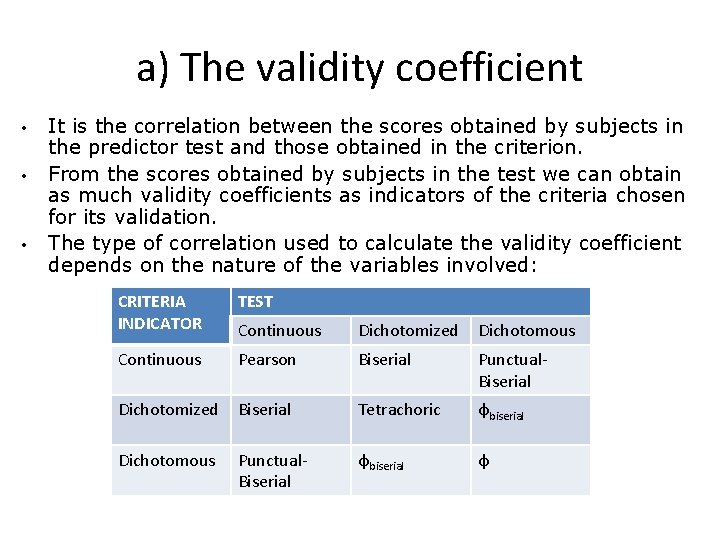

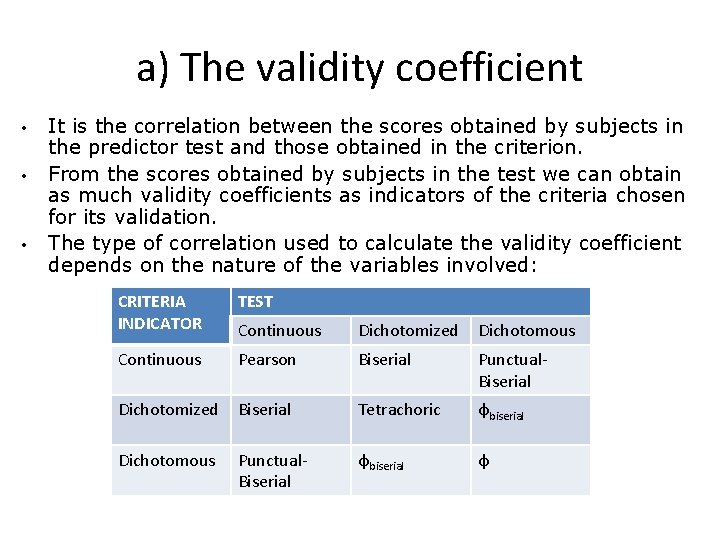

a) The validity coefficient

- It is the correlation between the scores obtained by subjects on the predictor test and those obtained on the criterion.
- From the subjects' test scores we can obtain as many validity coefficients as there are criterion indicators chosen for its validation.
- The type of correlation used to calculate the validity coefficient depends on the nature of the variables involved:

| Test (rows) / Criterion indicator (columns) | Continuous | Dichotomized | Dichotomous |
|---|---|---|---|
| Continuous | Pearson | Biserial | Point-biserial |
| Dichotomized | Biserial | Tetrachoric | φ biserial |
| Dichotomous | Point-biserial | φ biserial | φ |

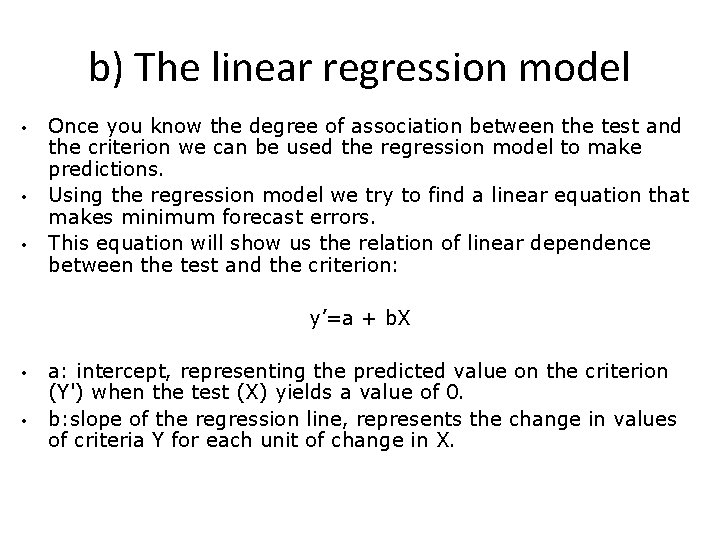
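The point-biserial coefficient (one continuous variable and one true dichotomy) is the Pearson correlation computed with the dichotomy coded 0/1. The sketch below, using hypothetical pass/fail and criterion data, checks that the formula $r_{pb} = \frac{\bar{Y}_1 - \bar{Y}_0}{S_Y}\sqrt{pq}$ (with $S_Y$ dividing by $n$) gives the same value as Pearson's formula.

```python
from math import sqrt
from statistics import fmean, pstdev


def pearson(x, y):
    """Pearson correlation computed with population (divide-by-n) moments."""
    mx, my = fmean(x), fmean(y)
    cov = fmean([(xi - mx) * (yi - my) for xi, yi in zip(x, y)])
    return cov / (pstdev(x) * pstdev(y))


def point_biserial(dichotomous, continuous):
    """Point-biserial r between a 0/1 variable and a continuous variable."""
    ones = [c for d, c in zip(dichotomous, continuous) if d == 1]
    zeros = [c for d, c in zip(dichotomous, continuous) if d == 0]
    p = len(ones) / len(continuous)  # proportion coded 1
    q = 1 - p
    return (fmean(ones) - fmean(zeros)) / pstdev(continuous) * sqrt(p * q)


# Hypothetical data: test passed (1) or failed (0), and criterion scores.
passed = [1, 1, 0, 1, 0, 0, 1, 0]
criterion = [8, 7, 4, 9, 5, 6, 7, 3]
print(round(pearson(passed, criterion), 4), round(point_biserial(passed, criterion), 4))
```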

b) The linear regression model

- Once the degree of association between the test and the criterion is known, we can use the regression model to make predictions.
- The regression model seeks the linear equation that minimizes the prediction errors (least squares: the sum of squared errors is minimized).
- This equation expresses the linear dependence of the criterion on the test: $Y' = a + bX$
  - $a$: intercept, the predicted criterion value ($Y'$) when the test score ($X$) is 0.
  - $b$: slope of the regression line, the change in the criterion $Y$ for each one-unit change in $X$.

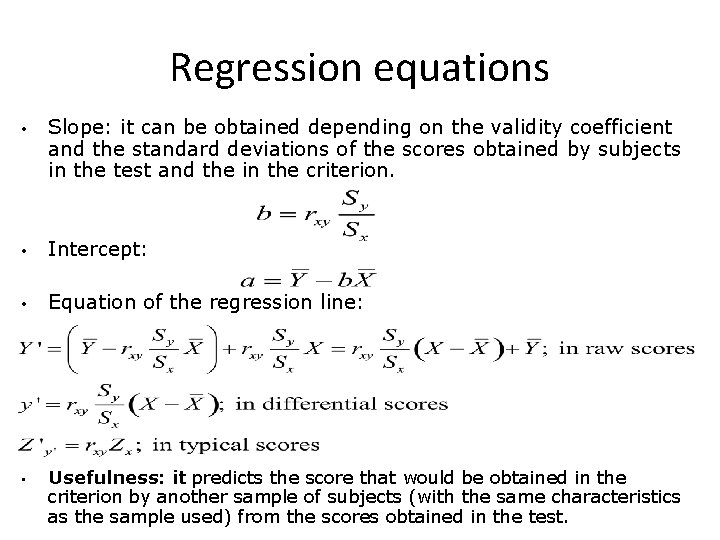

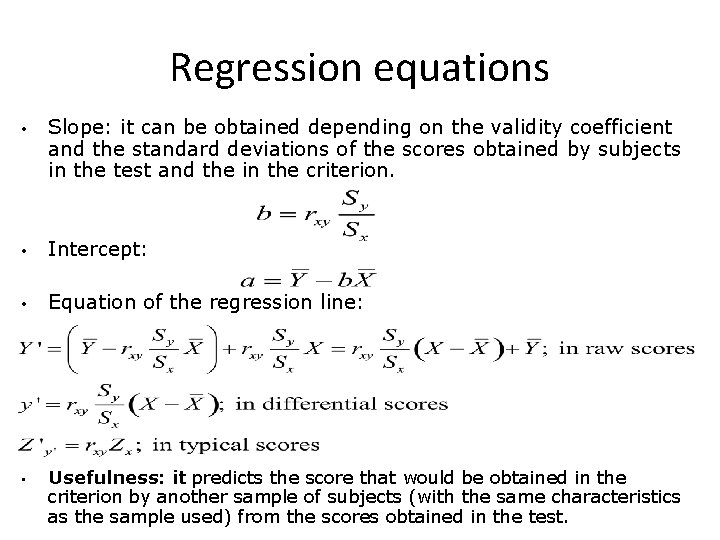

Regression equations

- Slope: obtained from the validity coefficient and the standard deviations of the subjects' scores on the test and on the criterion: $b = r_{XY}\,\dfrac{S_Y}{S_X}$
- Intercept: $a = \bar{Y} - b\,\bar{X}$
- Equation of the regression line: $Y' = a + bX$
- Usefulness: from their test scores, it predicts the criterion scores that another sample of subjects (with the same characteristics as the sample used) would obtain.

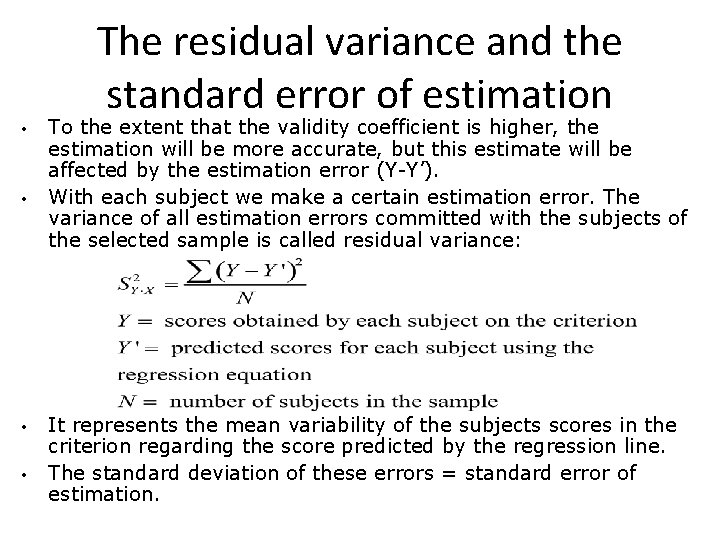

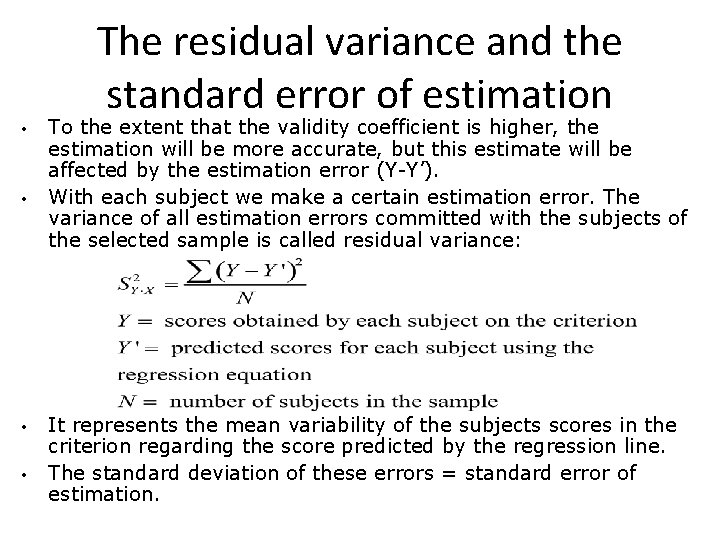
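The slope $b = r_{XY} S_Y / S_X$ and intercept $a = \bar{Y} - b\bar{X}$ translate directly into code. A minimal sketch, assuming the validity coefficient, standard deviations and means are already known; the function names and the numbers in the usage lines are hypothetical.

```python
def regression_line(r_xy, s_x, s_y, mean_x, mean_y):
    """Intercept a and slope b of the regression of Y (criterion) on X (test)."""
    b = r_xy * s_y / s_x      # slope from the validity coefficient and the SDs
    a = mean_y - b * mean_x   # the line passes through (mean_x, mean_y)
    return a, b


def predict(a, b, x):
    """Predicted criterion score Y' = a + bX."""
    return a + b * x


# Hypothetical summary statistics, chosen only to make the arithmetic easy.
a, b = regression_line(r_xy=0.5, s_x=2.0, s_y=4.0, mean_x=10.0, mean_y=20.0)
print(a, b, predict(a, b, 12))  # 10.0 1.0 22.0
```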

The residual variance and the standard error of estimation

- The higher the validity coefficient, the more accurate the estimation; however, every estimate is affected by estimation error ($Y - Y'$), and a certain error is made with each subject.
- The variance of all the estimation errors made with the subjects of the sample is called the residual variance. It represents the average variability of the subjects' criterion scores around the scores predicted by the regression line.
- The standard deviation of these errors is the standard error of estimation.

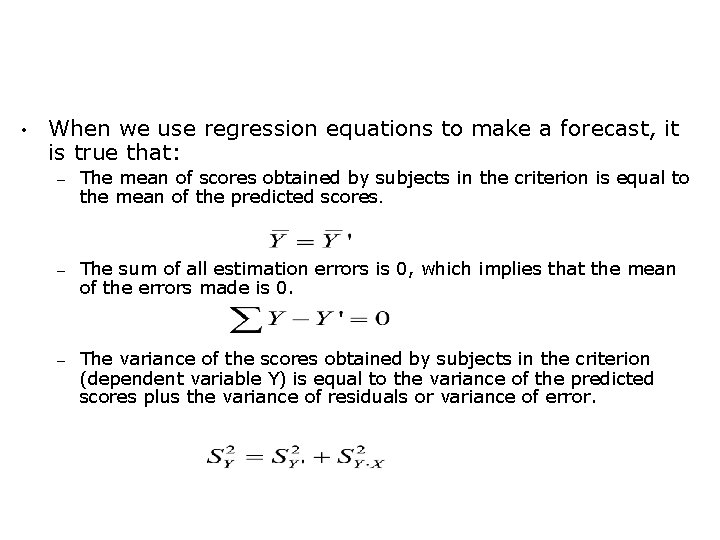

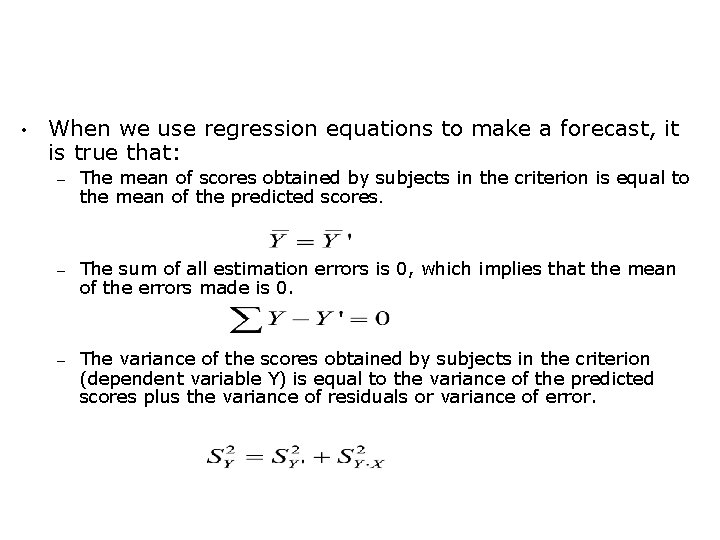

- When regression equations are used to make predictions, the following hold:
  - The mean of the subjects' criterion scores equals the mean of the predicted scores.
  - The sum of all estimation errors is 0, so the mean error is 0.
  - The variance of the subjects' criterion scores (dependent variable Y) equals the variance of the predicted scores plus the variance of the residuals (error variance).

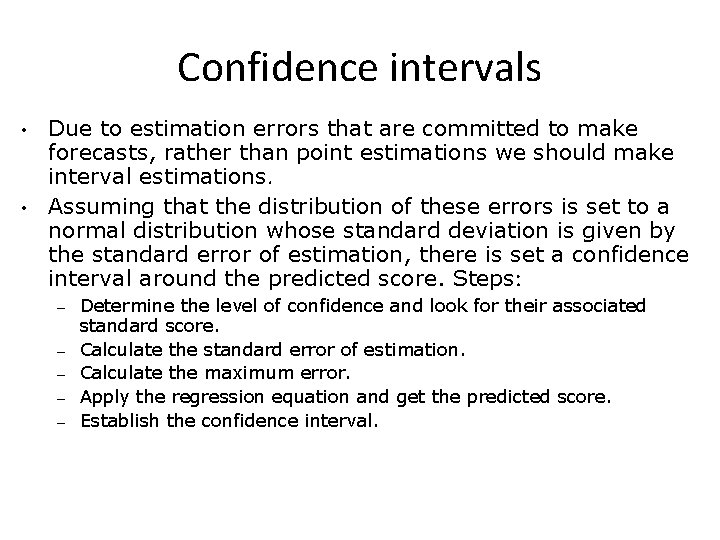

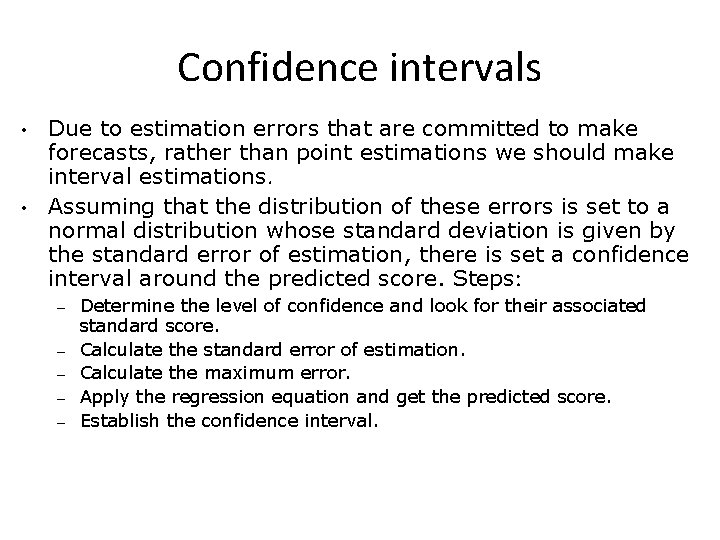
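The sketch below fits the regression line by least squares on a small hypothetical data set, computes the residuals, the residual variance and the standard error of estimation, and prints the quantities behind the three properties listed above. It assumes the residual variance divides by $n$, the convention under which the variance decomposition holds exactly with population variances; `fit_and_check` is a hypothetical helper name.

```python
from math import sqrt
from statistics import fmean, pvariance


def fit_and_check(x, y):
    """Least-squares fit of Y' = a + bX; returns predictions, residuals,
    residual variance (divided by n) and standard error of estimation."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sxy / sxx
    a = my - b * mx
    y_pred = [a + b * xi for xi in x]
    residuals = [yi - ypi for yi, ypi in zip(y, y_pred)]
    residual_var = fmean([e ** 2 for e in residuals])
    return y_pred, residuals, residual_var, sqrt(residual_var)


# Hypothetical data (test scores x, criterion scores y).
x = [1, 2, 3, 4]
y = [2, 4, 5, 4]
y_pred, residuals, residual_var, se_est = fit_and_check(x, y)

# The three properties of least-squares predictions (up to rounding error):
print(fmean(y), fmean(y_pred))                          # equal means
print(sum(residuals))                                   # about 0
print(pvariance(y), pvariance(y_pred) + residual_var)   # equal variances
print("standard error of estimation:", se_est)
```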

Confidence intervals

- Because predictions are affected by estimation error, interval estimates should be made rather than point estimates.
- Assuming that the errors are normally distributed, with standard deviation equal to the standard error of estimation, a confidence interval can be set around the predicted score.
- Steps:
  1. Determine the confidence level and look up its associated standard score ($z$).
  2. Calculate the standard error of estimation.
  3. Calculate the maximum error ($z$ times the standard error of estimation).
  4. Apply the regression equation to obtain the predicted score.
  5. Establish the confidence interval (predicted score ± maximum error).

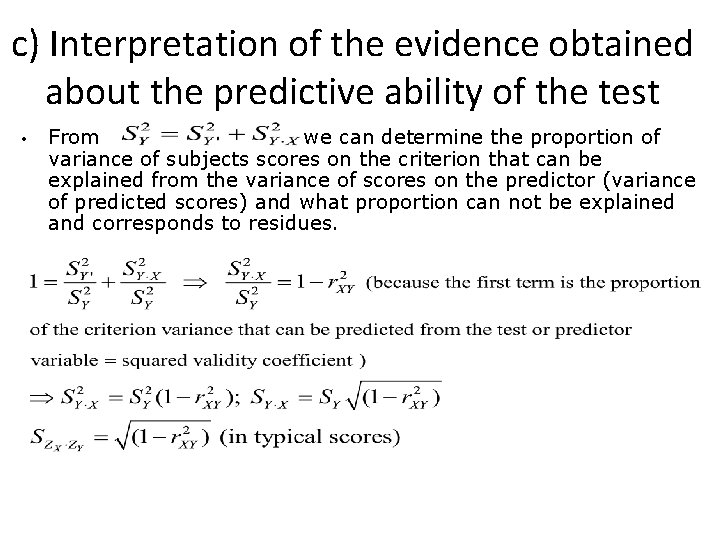

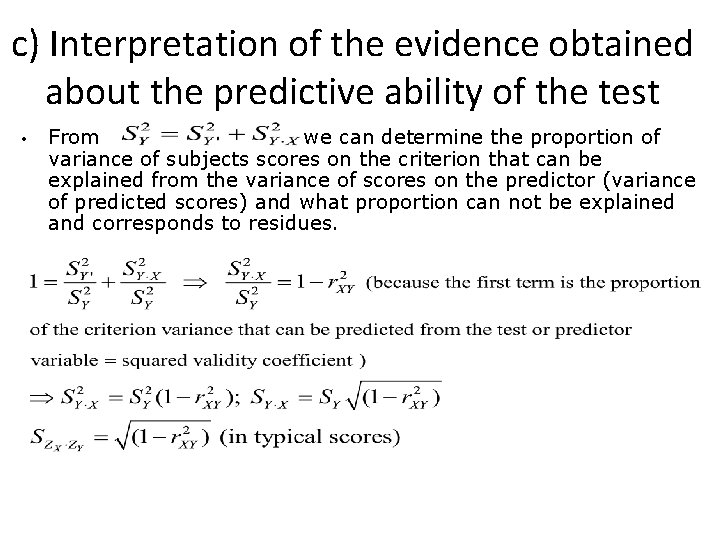
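Steps 1, 3 and 5 can be sketched as a small function, assuming the predicted score and the standard error of estimation have already been computed (steps 2 and 4). `NormalDist().inv_cdf` from Python's standard library gives the standard score for the chosen confidence level; the 95% default and the usage numbers are illustrative assumptions.

```python
from statistics import NormalDist


def prediction_interval(y_pred, se_est, confidence=0.95):
    """Confidence interval Y' ± z * S_yx around a predicted criterion score,
    assuming normally distributed estimation errors."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # step 1: two-sided z
    max_error = z * se_est                                # step 3: maximum error
    return y_pred - max_error, y_pred + max_error         # step 5: interval


# Hypothetical predicted score and standard error of estimation.
low, high = prediction_interval(y_pred=20.0, se_est=2.0)
print(f"[{low:.2f}, {high:.2f}]")  # [16.08, 23.92]
```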

c) Interpretation of the evidence obtained about the predictive ability of the test

- From the decomposition of the criterion variance into the variance of the predicted scores plus the residual variance, we can determine what proportion of the variance of the subjects' criterion scores can be explained by the variance of the scores on the predictor (the variance of the predicted scores), and what proportion cannot be explained and corresponds to the residuals.

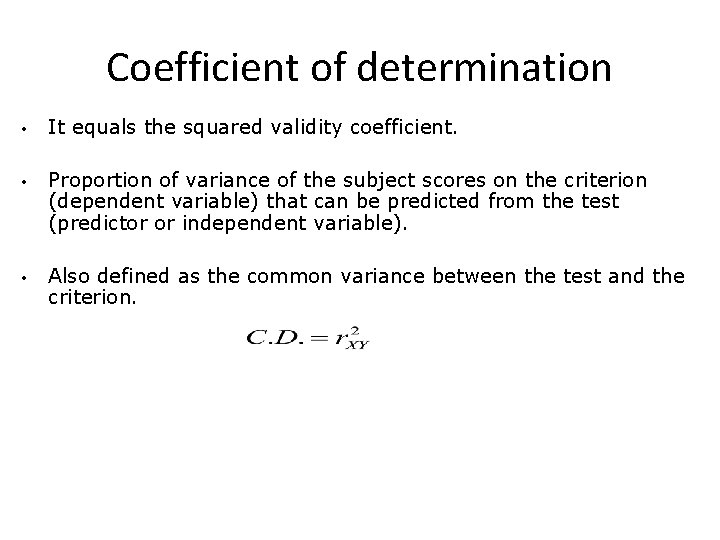

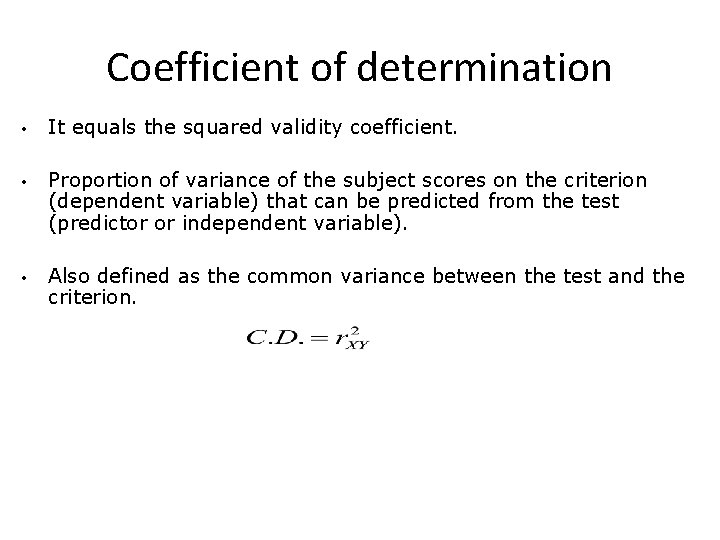

Coefficient of determination

- It equals the squared validity coefficient.
- It is the proportion of the variance of the subjects' criterion scores (dependent variable) that can be predicted from the test (predictor or independent variable).
- It is also defined as the variance shared by the test and the criterion.

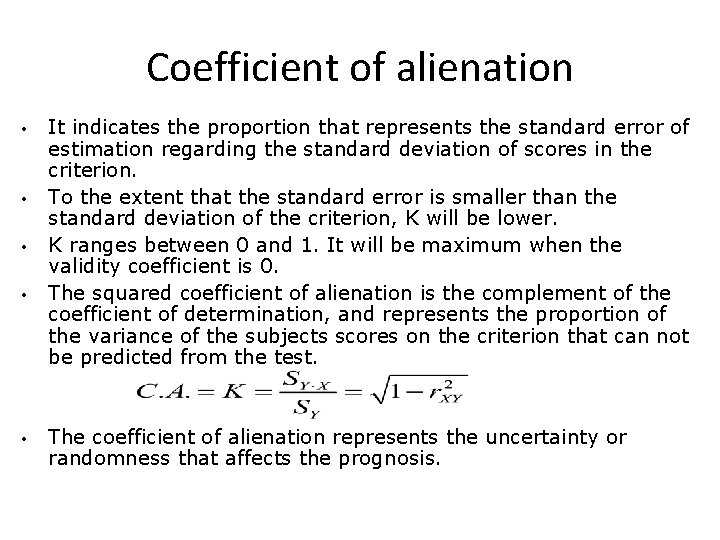

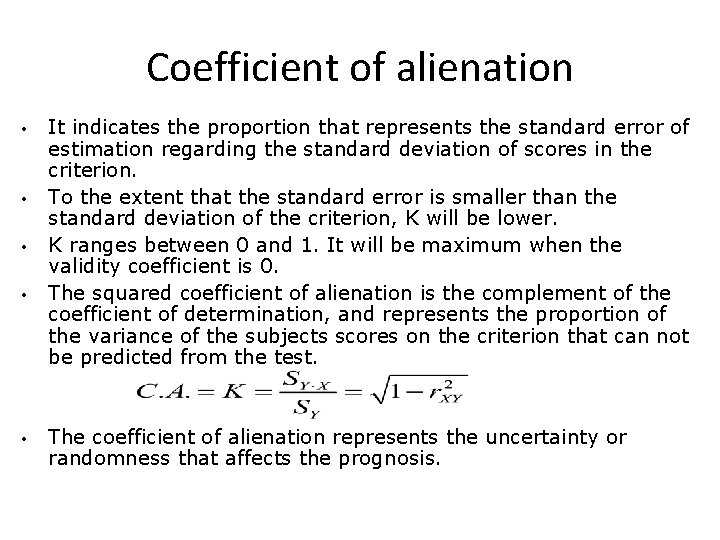

Coefficient of alienation

- It is the ratio of the standard error of estimation ($S_{Y \cdot X}$) to the standard deviation of the criterion scores ($S_Y$): $K = S_{Y \cdot X} / S_Y$.
- The smaller the standard error relative to the standard deviation of the criterion, the lower K. K ranges between 0 and 1, and it reaches its maximum (1) when the validity coefficient is 0.
- The squared coefficient of alienation is the complement of the coefficient of determination ($K^2 = 1 - r_{XY}^2$) and represents the proportion of the variance of the subjects' criterion scores that cannot be predicted from the test.
- The coefficient of alienation represents the uncertainty or randomness that affects the prediction.

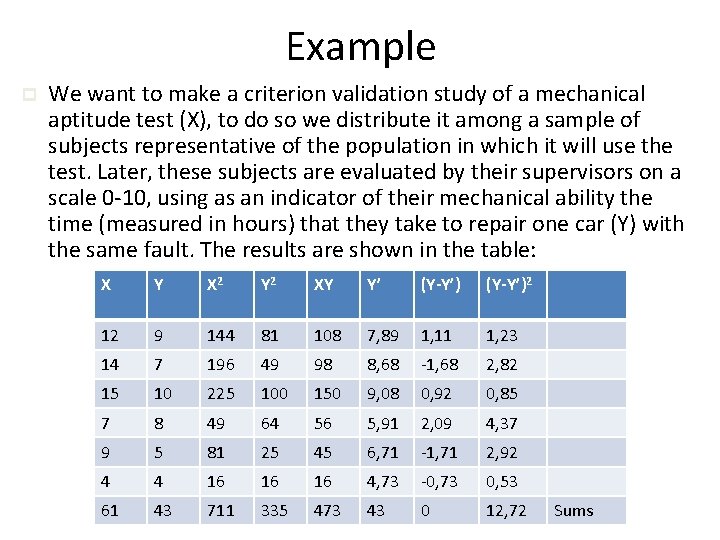

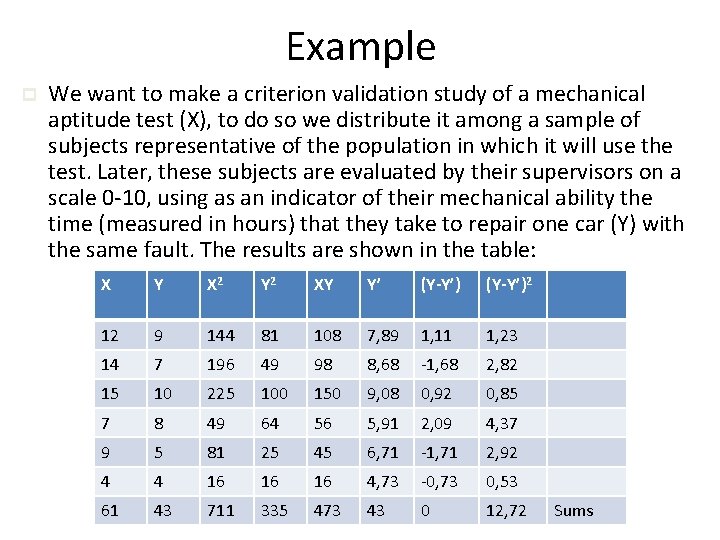
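Both coefficients follow from the validity coefficient alone. A minimal sketch (the function name is hypothetical): the coefficient of determination is $r_{XY}^2$, and the coefficient of alienation is $K = \sqrt{1 - r_{XY}^2}$, which equals $S_{Y\cdot X}/S_Y$ when the residual variance and the criterion variance are both divided by $n$.

```python
from math import sqrt


def determination_and_alienation(r_xy):
    """Return (coefficient of determination, coefficient of alienation)."""
    r2 = r_xy ** 2    # share of criterion variance predictable from the test
    k = sqrt(1 - r2)  # S_yx / S_y: error relative to the criterion SD
    return r2, k


for r in (0.0, 0.6, 0.9):
    r2, k = determination_and_alienation(r)
    print(f"r = {r:.1f}: r^2 = {r2:.2f}, K = {k:.2f}, r^2 + K^2 = {r2 + k * k:.2f}")
```

With $r_{XY} = 0$ the test predicts nothing and K takes its maximum value of 1.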

Example

We want to carry out a criterion validation study of a mechanical aptitude test (X). To do so, we administer it to a sample of subjects representative of the population in which the test will be used. Later, these subjects are evaluated by their supervisors on a 0-10 scale, using as an indicator of their mechanical ability the time (in hours) they take to repair a car (Y) with the same fault. The results are shown in the table (the last row gives the column sums):

| X | Y | X² | Y² | XY | Y' | Y - Y' | (Y - Y')² |
|---|---|---|---|---|---|---|---|
| 12 | 9 | 144 | 81 | 108 | 7.89 | 1.11 | 1.23 |
| 14 | 7 | 196 | 49 | 98 | 8.68 | -1.68 | 2.82 |
| 15 | 10 | 225 | 100 | 150 | 9.08 | 0.92 | 0.85 |
| 7 | 8 | 49 | 64 | 56 | 5.91 | 2.09 | 4.37 |
| 9 | 5 | 81 | 25 | 45 | 6.71 | -1.71 | 2.92 |
| 4 | 4 | 16 | 16 | 16 | 4.73 | -0.73 | 0.53 |
| **61** | **43** | **711** | **335** | **473** | **43** | **0** | **12.72** |

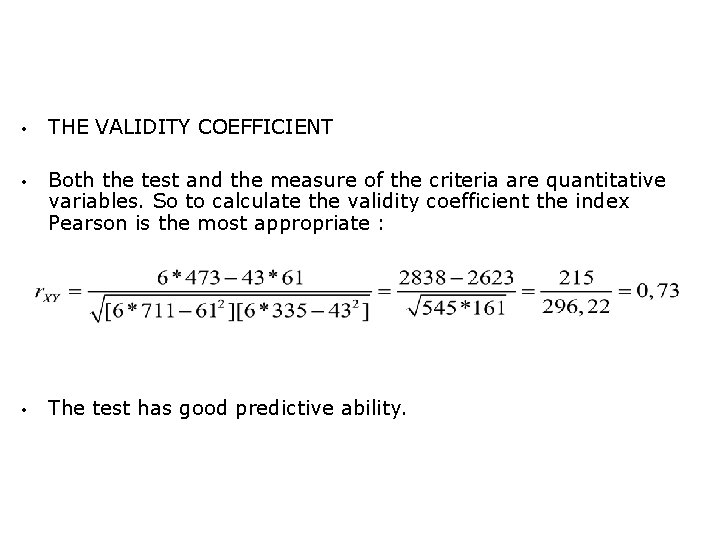

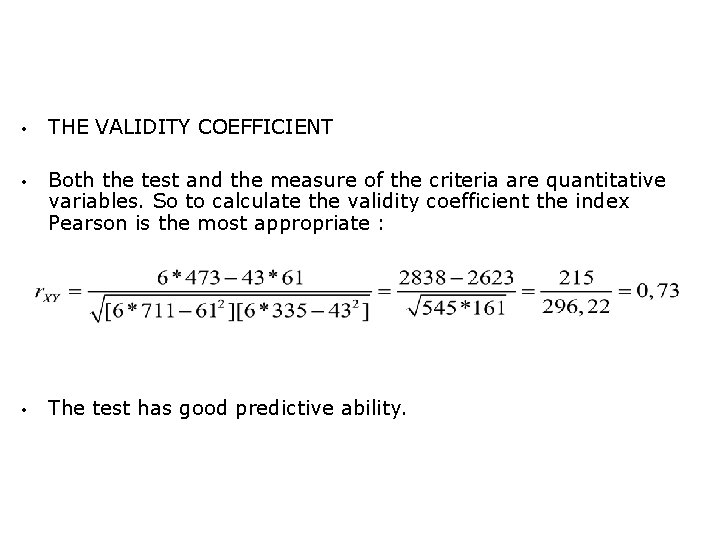
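The table can be reproduced from the raw X and Y columns with a few lines of Python. This sketch refits the line from the column sums; its predictions may differ from the table in the second decimal (for example 9.07 rather than 9.08 for X = 15), which suggests the slide rounded intermediate values. The unrounded sum of squared errors is about 12.70, against 12.72 in the table.

```python
# Data from the mechanical aptitude example: test scores (X) and criterion (Y).
X = [12, 14, 15, 7, 9, 4]
Y = [9, 7, 10, 8, 5, 4]
n = len(X)

sum_x, sum_y = sum(X), sum(Y)
sum_x2 = sum(x * x for x in X)
sum_y2 = sum(y * y for y in Y)
sum_xy = sum(x * y for x, y in zip(X, Y))

# Least-squares slope and intercept from the column sums.
b = (n * sum_xy - sum_x * sum_y) / (n * sum_x2 - sum_x ** 2)
a = sum_y / n - b * sum_x / n

y_pred = [a + b * x for x in X]                    # column Y'
errors = [y - yp for y, yp in zip(Y, y_pred)]      # column Y - Y'
ss_errors = sum(e * e for e in errors)             # sum of column (Y - Y')^2

print(sum_x, sum_y, sum_x2, sum_y2, sum_xy)  # 61 43 711 335 473
for x, y, yp, e in zip(X, Y, y_pred, errors):
    print(f"{x:>3} {y:>3} {yp:6.2f} {e:6.2f} {e * e:6.2f}")
print(f"sum of squared errors: {ss_errors:.2f}")
```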

- THE VALIDITY COEFFICIENT
- Both the test and the criterion measure are quantitative variables, so Pearson's correlation coefficient is the most appropriate index for calculating the validity coefficient:
- The test has good predictive ability.

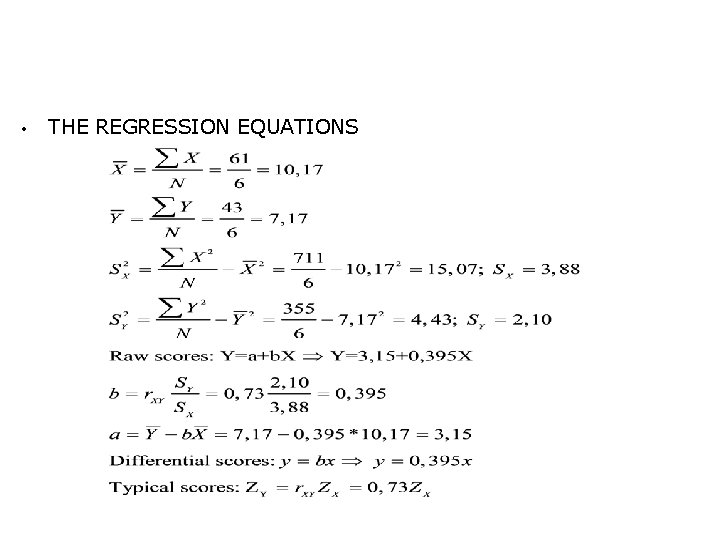

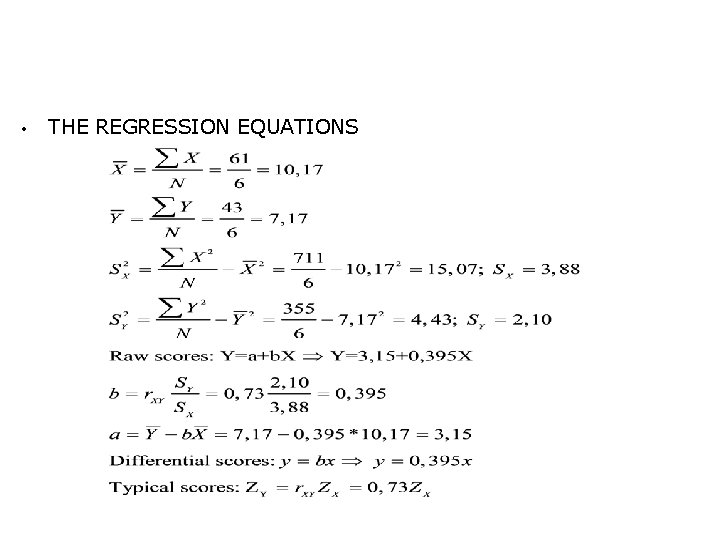

- THE REGRESSION EQUATIONS

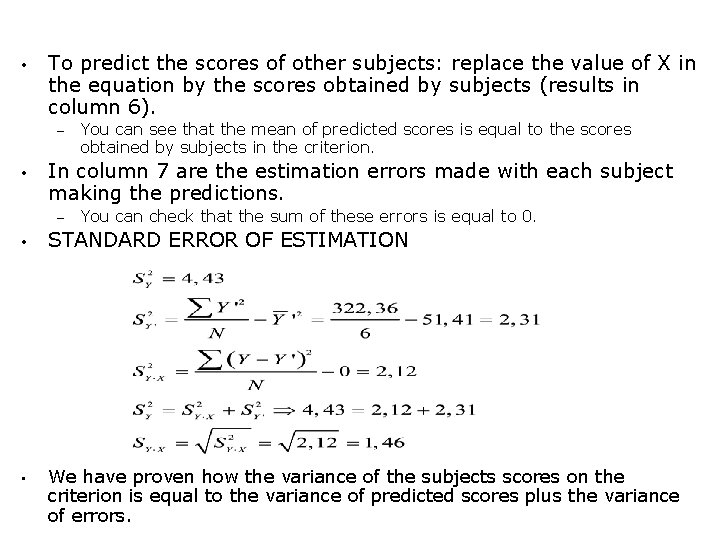

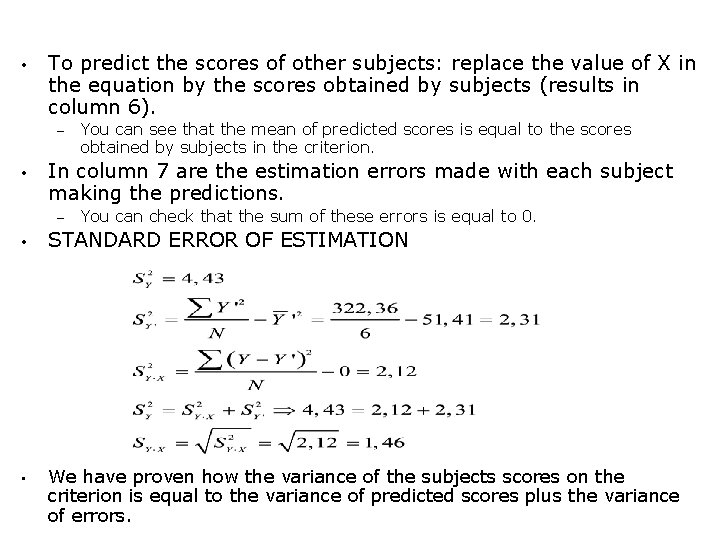

- To predict the scores of other subjects, substitute their test scores for X in the equation (results in column 6).
  - The mean of the predicted scores is equal to the mean of the scores obtained by the subjects on the criterion.
- Column 7 contains the estimation error made with each subject.
  - You can check that the sum of these errors is 0.
- STANDARD ERROR OF ESTIMATION
- We have verified that the variance of the subjects' criterion scores equals the variance of the predicted scores plus the variance of the errors.

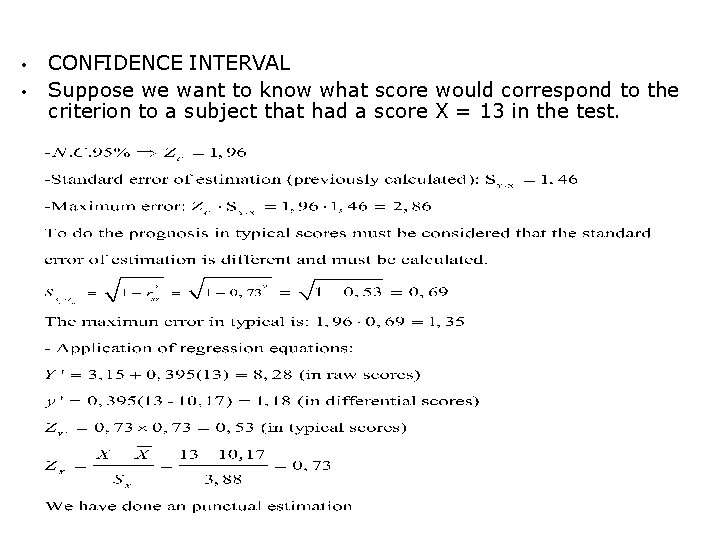

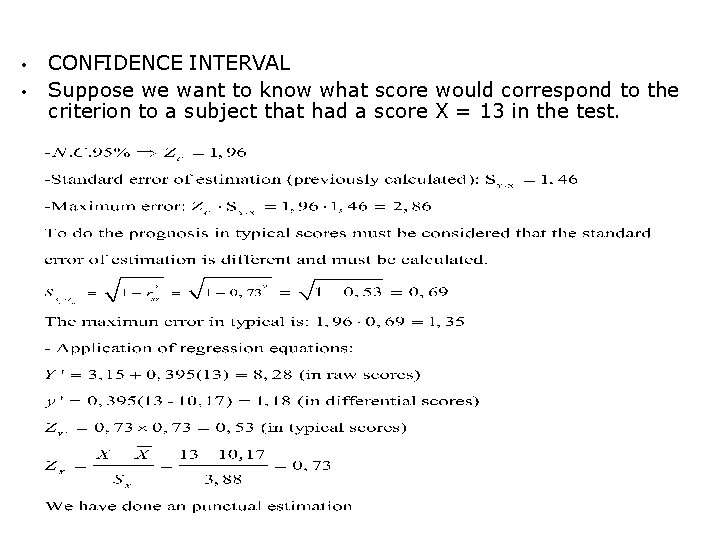

- CONFIDENCE INTERVAL
- Suppose we want to know what criterion score would correspond to a subject who obtained X = 13 on the test.

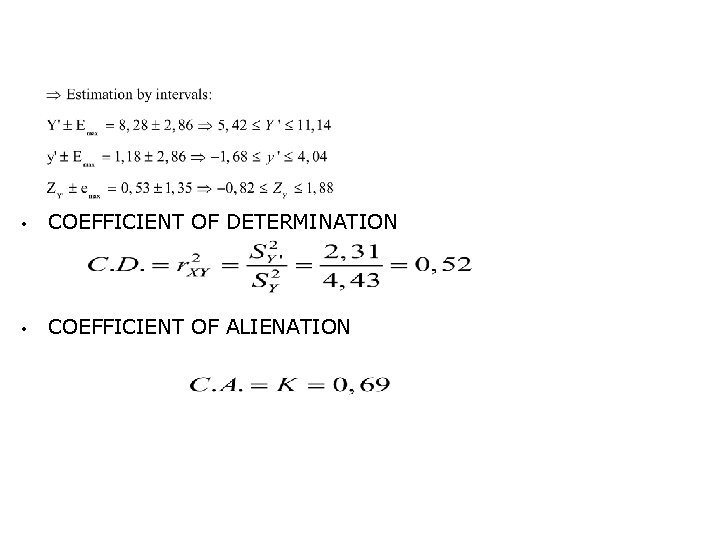

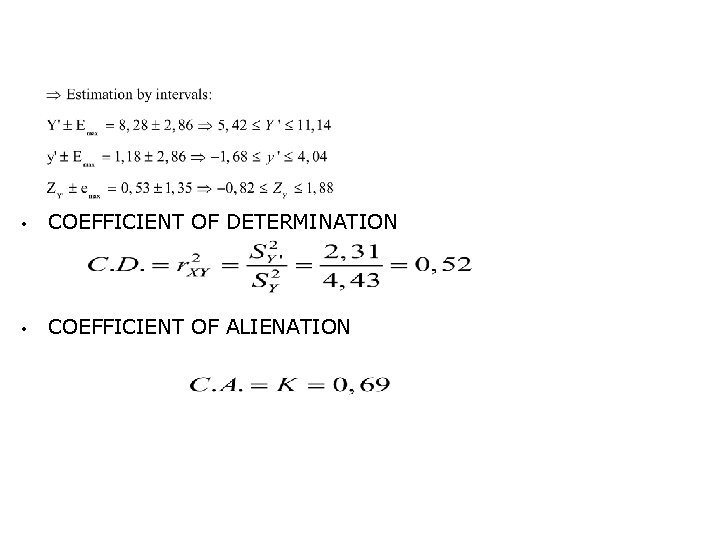
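The interval for X = 13 can be sketched from the table's column sums. The confidence level is not stated in the text, so 95% is assumed here, and the standard error of estimation is taken as $S_Y\sqrt{1 - r_{XY}^2}$ with variances divided by $n$.

```python
from math import sqrt
from statistics import NormalDist

# Column sums from the mechanical aptitude example (n = 6).
n, sum_x, sum_y = 6, 61, 43
sum_x2, sum_y2, sum_xy = 711, 335, 473

# Regression line and validity coefficient from the sums.
b = (n * sum_xy - sum_x * sum_y) / (n * sum_x2 - sum_x ** 2)
a = (sum_y - b * sum_x) / n
r = (n * sum_xy - sum_x * sum_y) / sqrt(
    (n * sum_x2 - sum_x ** 2) * (n * sum_y2 - sum_y ** 2)
)

# Standard error of estimation: S_y * sqrt(1 - r^2), with S_y dividing by n.
s_y = sqrt(sum_y2 / n - (sum_y / n) ** 2)
se_est = s_y * sqrt(1 - r ** 2)

confidence = 0.95  # assumed level; the slide's choice may differ
z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
x_new = 13
y_pred = a + b * x_new
max_error = z * se_est
print(f"Y' = {y_pred:.2f}, interval = [{y_pred - max_error:.2f}, {y_pred + max_error:.2f}]")
```

Under these assumptions the predicted score is about 8.28 and the interval runs from roughly 5.43 to 11.14.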

- COEFFICIENT OF DETERMINATION
- COEFFICIENT OF ALIENATION

- The test and the criterion share 52% of their variance: from the variation in the subjects' test scores we can predict 52% of the variance of the same subjects' criterion scores, leaving 48% of the criterion variance unexplained by the test (48% error variance).
- The standard error of estimation is 69% of the standard deviation of the criterion scores, so there is a high degree of uncertainty in the predictions.
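Both percentages can be checked from the table's column sums. A minimal sketch: the unrounded coefficient of determination is about 0.527 (reported as 52% on the slide), and the coefficient of alienation is about 0.688, i.e. 69%.

```python
from math import sqrt

# Column sums from the mechanical aptitude example (n = 6).
n, sum_x, sum_y = 6, 61, 43
sum_x2, sum_y2, sum_xy = 711, 335, 473

r = (n * sum_xy - sum_x * sum_y) / sqrt(
    (n * sum_x2 - sum_x ** 2) * (n * sum_y2 - sum_y ** 2)
)
determination = r ** 2         # common variance between test and criterion
alienation = sqrt(1 - r ** 2)  # S_yx as a fraction of S_y

print(f"r = {r:.4f}")
print(f"r^2 = {determination:.4f} ({determination:.1%} of criterion variance explained)")
print(f"K = {alienation:.4f}")
```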