10. Datamining Clustering Methods

- Slides: 20

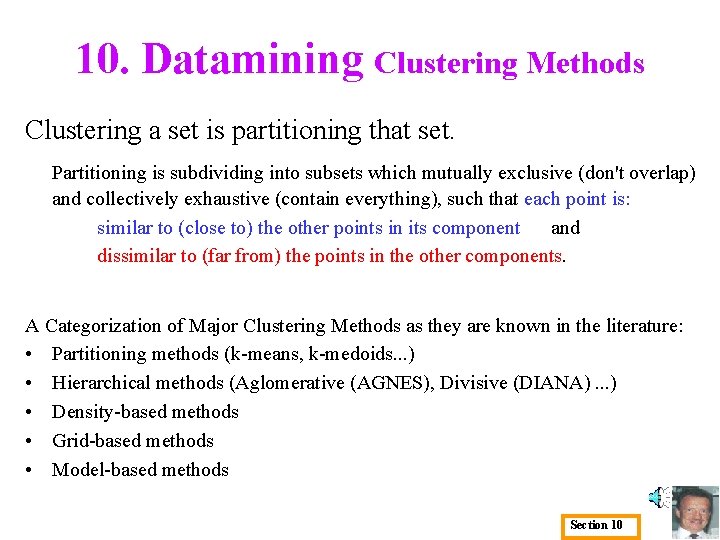

10. Datamining Clustering Methods

Clustering a set is partitioning that set. Partitioning is subdividing into subsets which are mutually exclusive (don't overlap) and collectively exhaustive (contain everything), such that each point is similar to (close to) the other points in its component and dissimilar to (far from) the points in the other components.

A categorization of major clustering methods as they are known in the literature:

- Partitioning methods (k-means, k-medoids, ...)
- Hierarchical methods (Agglomerative (AGNES), Divisive (DIANA), ...)
- Density-based methods
- Grid-based methods
- Model-based methods

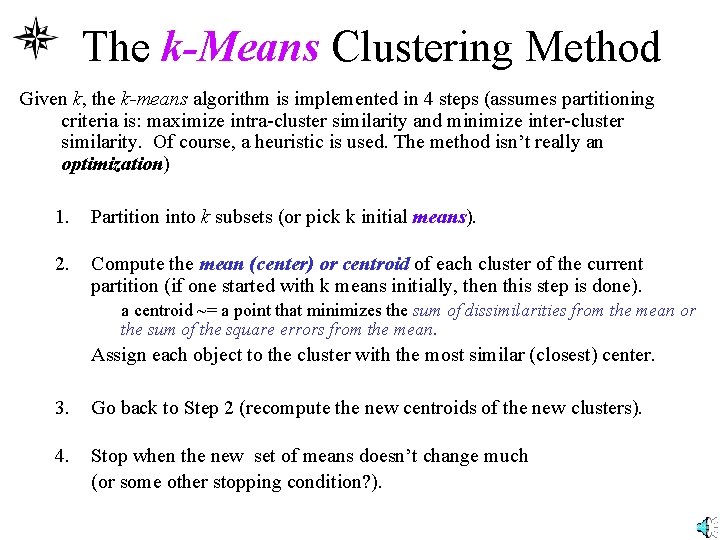

The k-Means Clustering Method

Given k, the k-means algorithm is implemented in 4 steps. It assumes the partitioning criterion is to maximize intra-cluster similarity and minimize inter-cluster similarity. A heuristic is used; the method isn't really an optimization.

1. Partition the objects into k subsets (or pick k initial means).
2. Compute the mean (center), or centroid, of each cluster of the current partition (if one started with k initial means, this step is already done). A centroid is approximately a point that minimizes the sum of dissimilarities, or the sum of the square errors, between itself and the points of its cluster. Then assign each object to the cluster with the most similar (closest) center.
3. Go back to Step 2 (recompute the centroids of the new clusters).
4. Stop when the new set of means doesn't change much (or when some other stopping condition holds).
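The four steps can be sketched in a few lines of Python. This is a minimal illustration, not the slides' own implementation: the function names `kmeans` and `sq_dist`, the choice of squared Euclidean distance, the random choice of initial means, and the `tol` and `max_iter` parameters are all assumptions made for the example.

```python
import random

def sq_dist(p, q):
    """Squared Euclidean distance between two equal-length tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans(points, k, max_iter=100, tol=1e-9, seed=0):
    """Heuristic k-means on a list of numeric tuples (illustrative sketch)."""
    rng = random.Random(seed)
    # Step 1: pick k initial means (assumption: k distinct random points).
    means = rng.sample(points, k)
    clusters = []
    for _ in range(max_iter):
        # Assign each point to the cluster with the closest mean.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: sq_dist(p, means[i]))
            clusters[nearest].append(p)
        # Step 2: recompute the mean of each cluster
        # (an empty cluster keeps its previous mean).
        new_means = [
            tuple(sum(coord) / len(cl) for coord in zip(*cl)) if cl else means[i]
            for i, cl in enumerate(clusters)
        ]
        # Step 4: stop when the means no longer change much.
        shift = max(sq_dist(a, b) for a, b in zip(means, new_means))
        means = new_means
        if shift <= tol:
            break
        # Step 3: otherwise loop back and repeat with the new means.
    return means, clusters

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
means, clusters = kmeans(points, k=2)
```

On this small example the two well-separated groups of three points end up in separate clusters. With less cleanly separated data the result depends on the initial means, which is one reason the method is called a heuristic.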

k-Means Clustering animated

[Figure: animated scatter plot on a 0 to 10 grid; centroids are red, set points are blue.]

- Step 1: assign each point to the closest centroid.
- Step 2: recalculate the centroids.
- Step 3: re-assign each point to the closest centroid.
- Step 4: repeat Steps 2 and 3 until Stop_Condition=true.

What are the strengths of k-means clustering? It is relatively efficient: O(tkn), where n is the number of objects, k the number of clusters, and t the number of iterations. Normally k, t << n.

Weaknesses?

- It is applicable only when a mean is defined (e.g., in a vector space or similarity space).
- k, the number of clusters, must be specified in advance.
- It is sensitive to noisy data and outliers.
- It can converge slowly, or stop at a poor local optimum instead of the best clustering.

The K-Medoids Clustering Method

- Find representative objects, called medoids, each of which must be an actual object from the set (whereas the means are seldom points of the set itself).
- PAM (Partitioning Around Medoids, 1987):
  - Choose an initial set of k medoids.
  - Iteratively replace one of the medoids by a non-medoid.
  - If the replacement improves the aggregate similarity measure, retain it. Do this over all medoid/non-medoid pairs.
  - PAM works for small data sets, but it does not scale well to large data sets.
- Later modifications of PAM:
  - CLARA (Clustering LARge Applications) (Kaufman & Rousseeuw, 1990): sub-samples, then applies PAM.
  - CLARANS (Clustering Large Applications based on RANdom Search) (Ng & Han, 1994): randomizes the sampling of CLARA.
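The PAM swap loop can be sketched as follows. This is a simplified illustration rather than the published algorithm in full: the names `pam` and `total_cost` are hypothetical, the initial medoids are simply the first k points (an assumption), and the aggregate measure is taken to be the sum of each point's distance to its nearest medoid.

```python
from itertools import product

def total_cost(points, medoids, dist):
    """Sum over all points of the distance to the nearest medoid."""
    return sum(min(dist(p, m) for m in medoids) for p in points)

def pam(points, k, dist):
    """Greedy medoid/non-medoid swapping (illustrative sketch of PAM)."""
    medoids = list(points[:k])  # assumption: naive initial medoids
    best = total_cost(points, medoids, dist)
    improved = True
    while improved:
        improved = False
        # Try replacing each medoid position with each non-medoid point.
        for i, candidate in product(range(k), points):
            if candidate in medoids:
                continue
            trial = medoids[:i] + [candidate] + medoids[i + 1:]
            cost = total_cost(points, trial, dist)
            if cost < best:  # retain the replacement only if it improves
                medoids, best, improved = trial, cost, True
    return medoids, best

points = [1, 2, 3, 11, 12, 13]
medoids, cost = pam(points, k=2, dist=lambda a, b: abs(a - b))
```

Every pass evaluates all medoid/non-medoid pairs and recomputes the full cost for each, which makes the poor scaling to large data sets easy to see.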

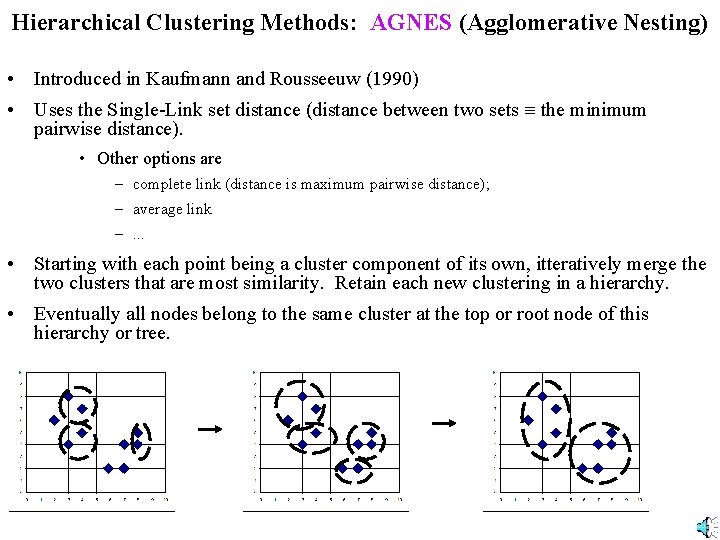

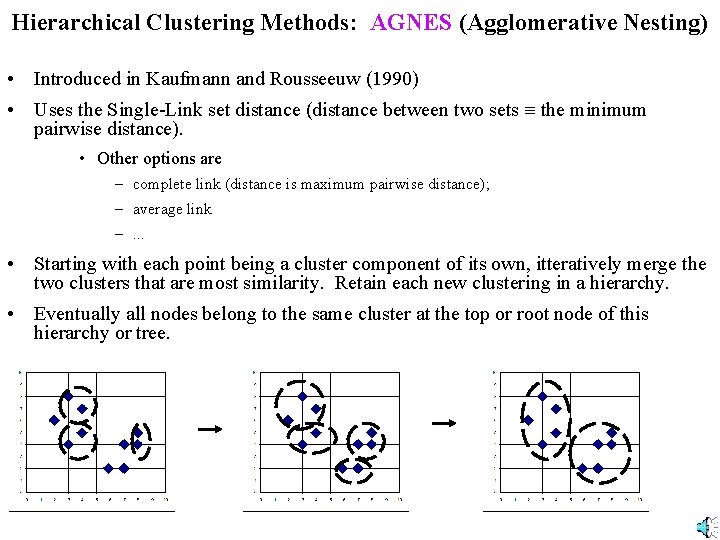

Hierarchical Clustering Methods: AGNES (Agglomerative Nesting)

- Introduced in Kaufman and Rousseeuw (1990).
- Uses the single-link set distance (the distance between two sets is the minimum pairwise distance).
- Other options are:
  - complete link (distance is the maximum pairwise distance);
  - average link;
  - ...
- Starting with each point being a cluster component of its own, iteratively merge the two clusters that are most similar. Retain each new clustering in a hierarchy.
- Eventually all nodes belong to the same cluster at the top, or root node, of this hierarchy or tree.
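A short sketch of single-link agglomeration, assuming a user-supplied distance function; the names `agnes` and `single_link` and the 1-D sample points are illustrative only. It records the clustering after every merge, which is the hierarchy described above.

```python
def single_link(a, b, dist):
    """Single-link set distance: the minimum pairwise distance."""
    return min(dist(x, y) for x in a for y in b)

def agnes(points, dist):
    """Bottom-up merging; returns the list of clusterings, one per step."""
    clusters = [frozenset([p]) for p in points]
    hierarchy = [list(clusters)]
    while len(clusters) > 1:
        # Find the pair of clusters with the smallest single-link distance.
        i, j = min(
            ((i, j) for i in range(len(clusters))
                    for j in range(i + 1, len(clusters))),
            key=lambda ij: single_link(clusters[ij[0]], clusters[ij[1]], dist),
        )
        merged = clusters[i] | clusters[j]
        clusters = [c for n, c in enumerate(clusters) if n not in (i, j)]
        clusters.append(merged)
        hierarchy.append(list(clusters))
    return hierarchy

points = [0, 1, 5, 6, 20]
hierarchy = agnes(points, dist=lambda a, b: abs(a - b))
```

Swapping `min` for `max` inside `single_link` would give complete link instead.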

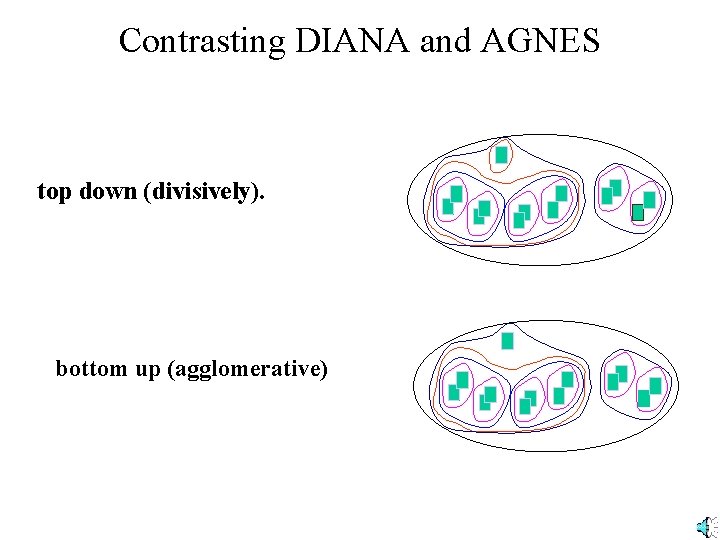

DIANA (Divisive Analysis)

- Introduced in Kaufman and Rousseeuw (1990).
- The reverse of AGNES: initially all objects are in one cluster; then iteratively split cluster components into two components according to some criterion (e.g., again maximizing some aggregate measure of pairwise dissimilarity).
- Eventually each node forms a cluster on its own.

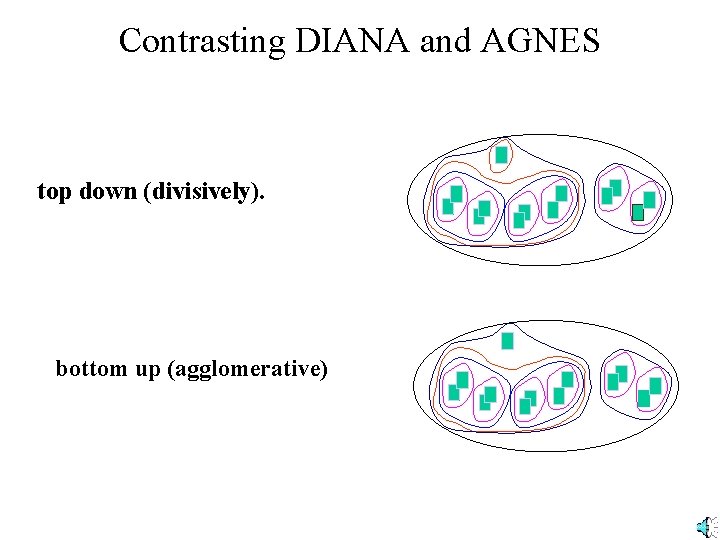

Contrasting DIANA and AGNES: DIANA works top down (divisively); AGNES works bottom up (agglomeratively).

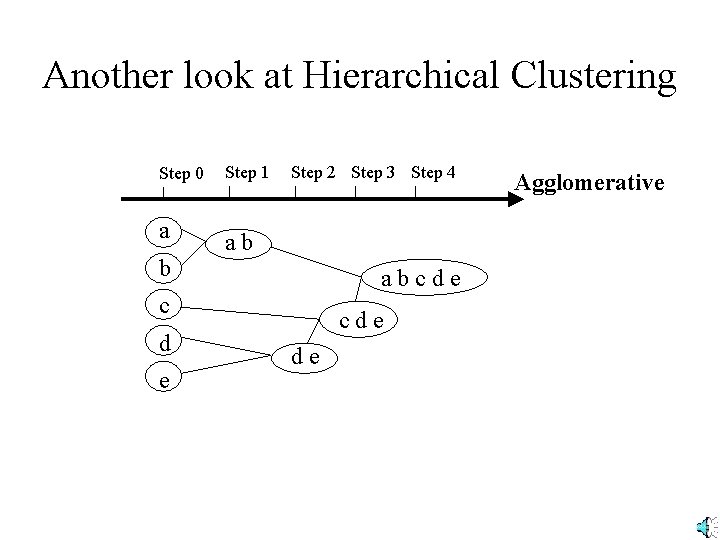

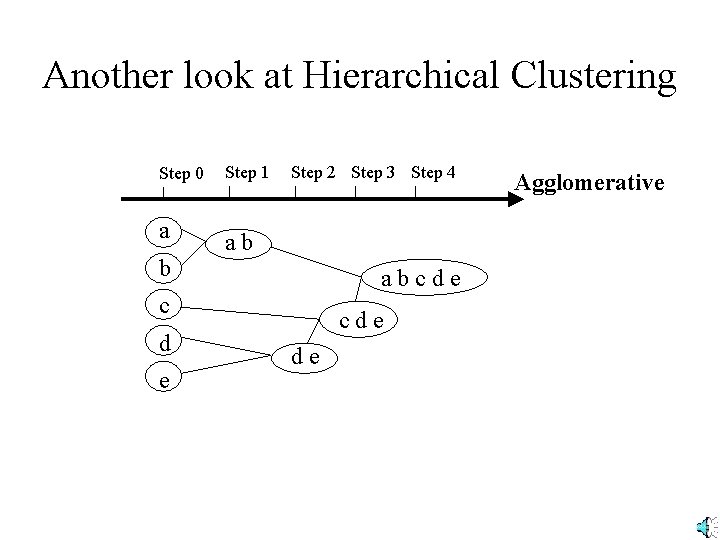

Another look at Hierarchical Clustering (Agglomerative)

[Figure: dendrogram over the points a, b, c, d, e with merged nodes ab, de, cde and abcde, built bottom up from Step 0 to Step 4.]

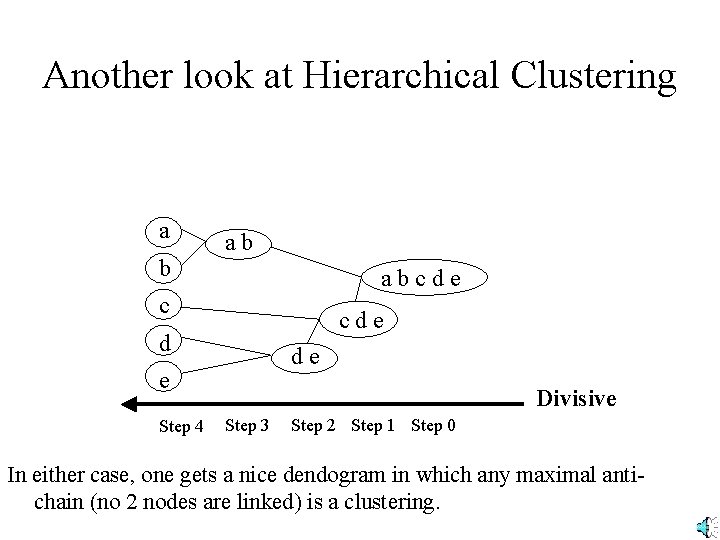

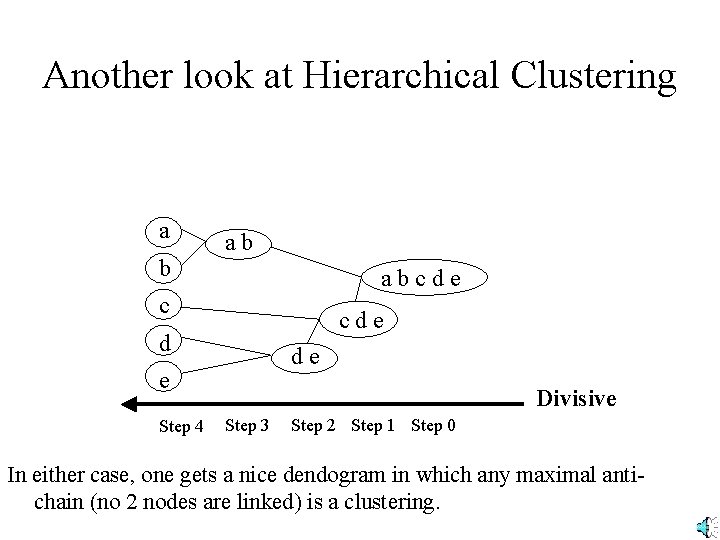

Another look at Hierarchical Clustering (Divisive)

[Figure: dendrogram over the points a, b, c, d, e with internal nodes ab, de, cde and abcde, built top down from Step 0 to Step 4.]

In either case, one gets a nice dendrogram in which any maximal antichain (a maximal set of nodes, no two of which are linked) is a clustering.

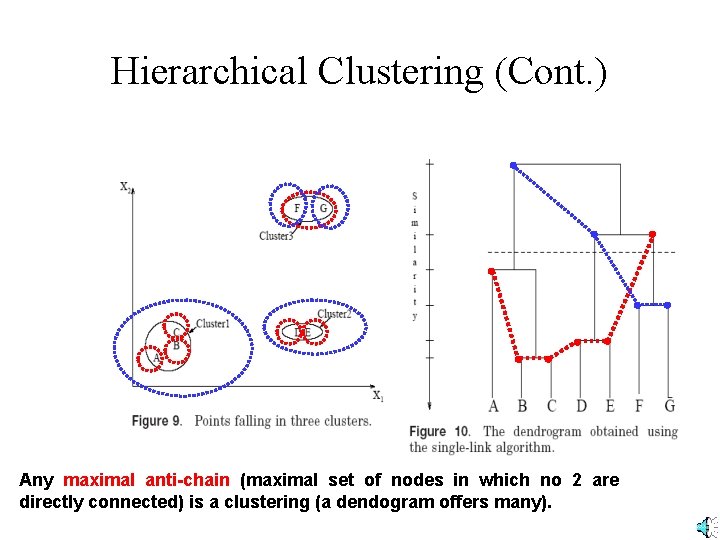

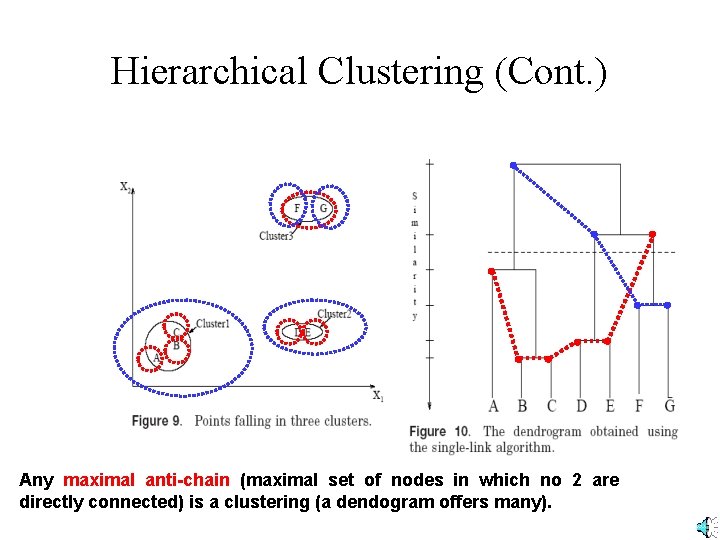

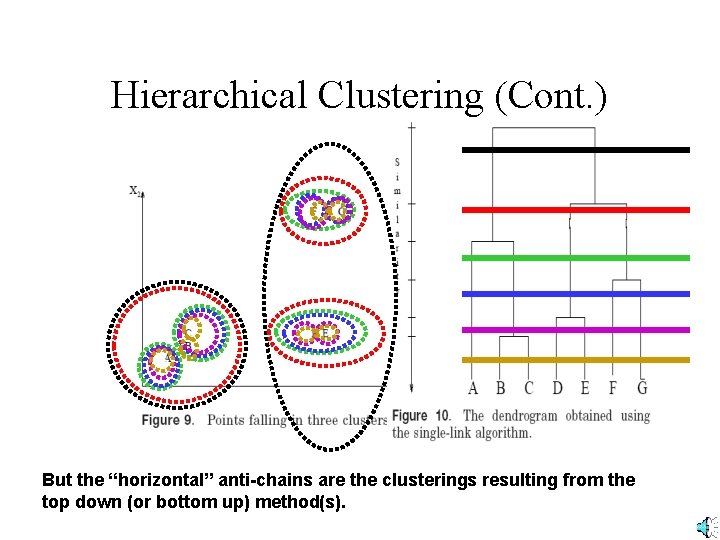

Hierarchical Clustering (Cont.)

Any maximal anti-chain (a maximal set of nodes in which no node is an ancestor of another) is a clustering; a dendrogram offers many.
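To see how many clusterings one dendrogram offers, the sketch below enumerates them for the a, b, c, d, e example. The tree structure is inferred from the node names (ab, de, cde, abcde), and the dictionary `TREE` and function `clusterings` are hypothetical names for illustration. A node either stands as one cluster or is replaced by one clustering of each of its children.

```python
from itertools import product

# Assumed dendrogram: each internal node maps to its two children.
TREE = {
    "abcde": ["ab", "cde"],
    "ab": ["a", "b"],
    "cde": ["c", "de"],
    "de": ["d", "e"],
}

def clusterings(node, tree):
    """All clusterings of the points below `node`, each as a list of nodes."""
    result = [[node]]  # the node taken as a single cluster
    children = tree.get(node, [])
    if children:
        # Otherwise combine one clustering from every child subtree.
        for combo in product(*(clusterings(c, tree) for c in children)):
            result.append([n for part in combo for n in part])
    return result

all_clusterings = clusterings("abcde", TREE)
```

For this five-point tree the enumeration yields seven clusterings, ranging from the single cluster abcde down to the five singletons.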

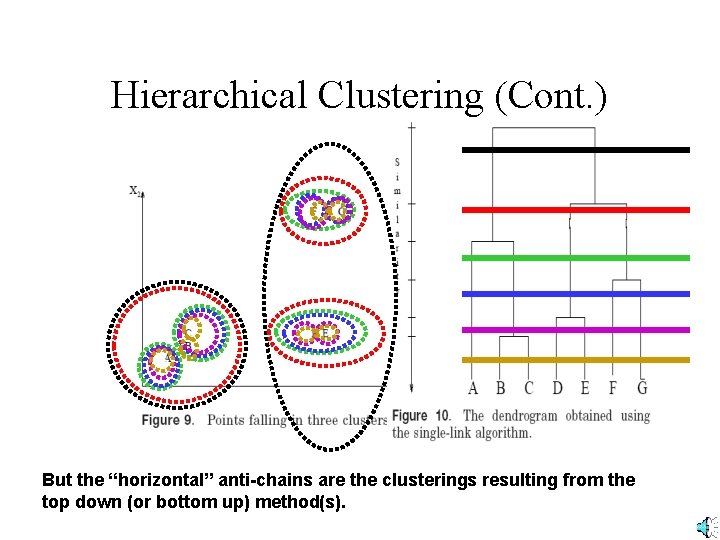

Hierarchical Clustering (Cont.)

But the "horizontal" anti-chains are the clusterings resulting from the top-down (or bottom-up) methods.

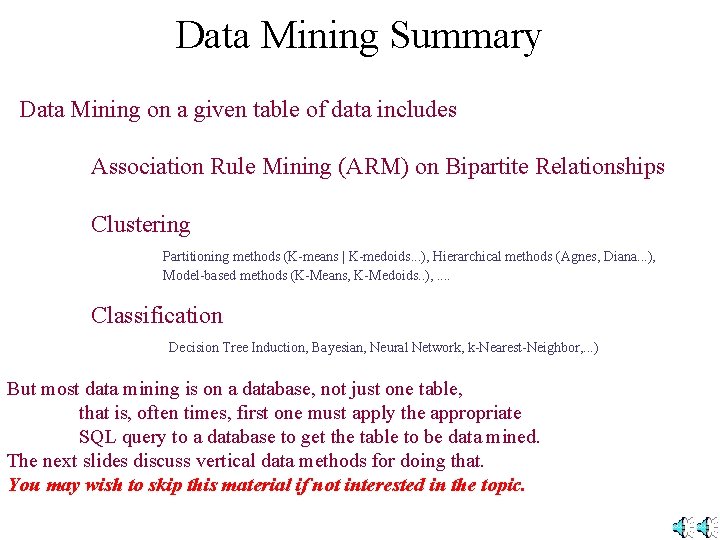

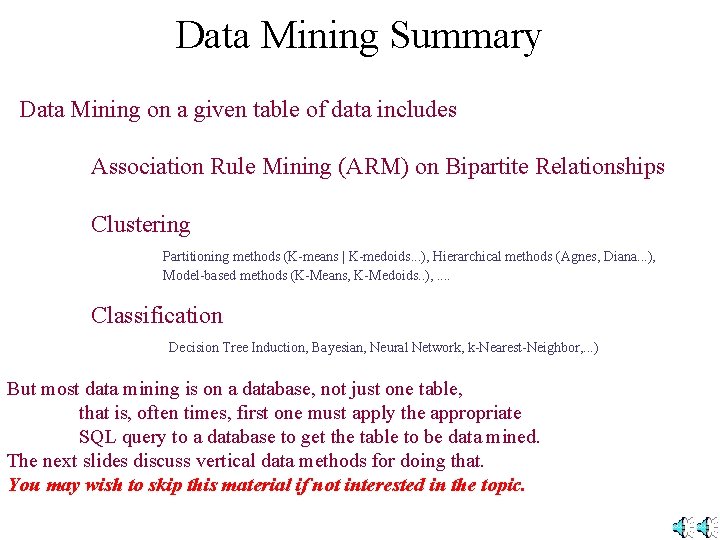

Data Mining Summary

Data mining on a given table of data includes:

- Association Rule Mining (ARM) on bipartite relationships
- Clustering: partitioning methods (K-means, K-medoids, ...), hierarchical methods (AGNES, DIANA, ...), model-based methods (K-Means, K-Medoids, ...), ...
- Classification: Decision Tree Induction, Bayesian, Neural Network, k-Nearest-Neighbor, ...

But most data mining is on a database, not just one table. That is, one must often first apply the appropriate SQL query to a database to get the table to be data mined. The next slides discuss vertical data methods for doing that. You may wish to skip this material if not interested in the topic.

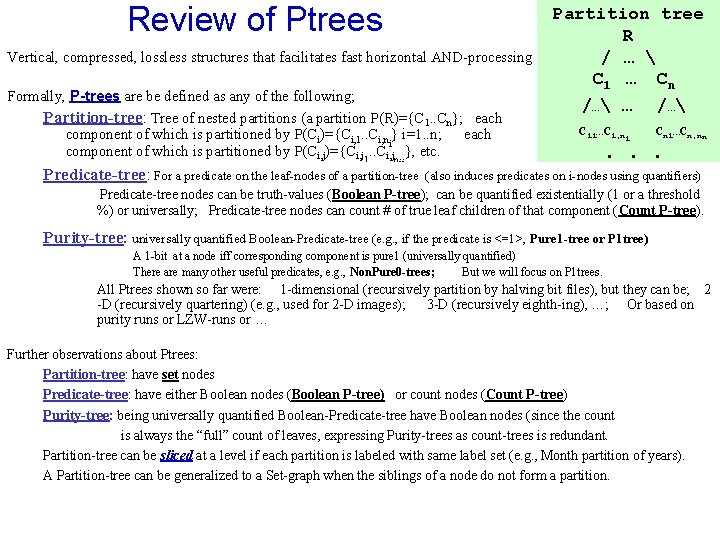

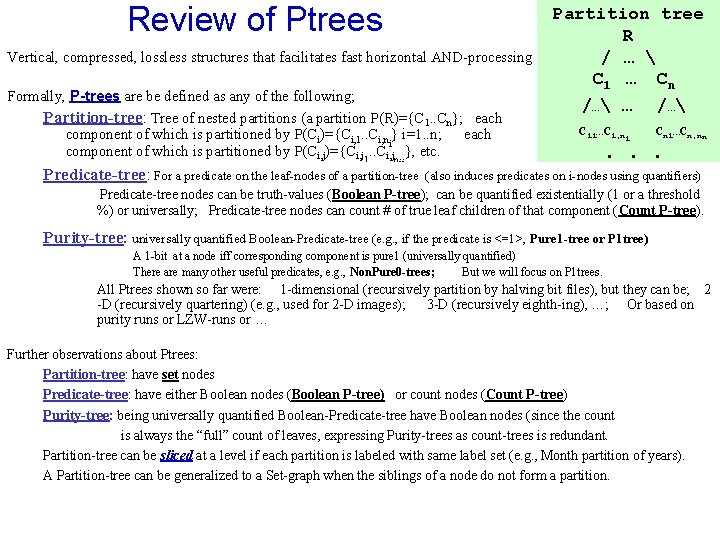

Review of Ptrees

P-trees are vertical, compressed, lossless structures that facilitate fast horizontal AND-processing. Formally, P-trees can be defined as any of the following:

- Partition-tree: a tree of nested partitions. A partition $P(R)=\{C_1,\dots,C_n\}$; each component of which is partitioned by $P(C_i)=\{C_{i,1},\dots,C_{i,n_i}\}$, $i=1..n$; each component of which is partitioned by $P(C_{i,j})=\{C_{i,j,1},\dots,C_{i,j,n_{ij}}\}$, etc.
- Predicate-tree: defined for a predicate on the leaf nodes of a partition-tree (which also induces predicates on interior nodes using quantifiers). Predicate-tree nodes can be truth values (Boolean P-tree), quantified existentially (1 or a threshold %) or universally; or they can count the number of true leaf children of that component (Count P-tree).
- Purity-tree: a universally quantified Boolean Predicate-tree (e.g., if the predicate is <=1>, a Pure1-tree or P1-tree). A node holds a 1-bit iff the corresponding component is pure 1 (universally quantified).

[Figure: partition tree with root R, children $C_1 \dots C_n$, and grandchildren $C_{1,1} \dots C_{1,n_1}$ through $C_{n,1} \dots C_{n,n_n}$.]

There are many other useful predicates, e.g., NonPure0-trees, but we will focus on P1-trees.

All Ptrees shown so far were 1-dimensional (recursively partitioning by halving bit files), but they can also be:

- 2-D (recursively quartering), e.g., used for 2-D images;
- 3-D (recursively eighth-ing), and so on;
- based on purity runs or LZW-runs or ...

Further observations about Ptrees:

- A Partition-tree has set nodes.
- A Predicate-tree has either Boolean nodes (Boolean P-tree) or count nodes (Count P-tree).
- A Purity-tree, being a universally quantified Boolean Predicate-tree, has Boolean nodes (since the count is always the "full" count of leaves, expressing Purity-trees as count-trees is redundant).
- A Partition-tree can be sliced at a level if each partition is labeled with the same label set (e.g., a Month partition of years).
- A Partition-tree can be generalized to a Set-graph when the siblings of a node do not form a partition.
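A 1-dimensional P1-tree built by recursive halving can be sketched as below. The representation is an assumption chosen for brevity: a pure-1 component is stored as `1`, a pure-0 component as `0`, and a mixed component as a pair of subtrees. The function names `build_p1`, `p_and` and `expand` are hypothetical.

```python
def build_p1(bits):
    """Build a P1-tree over a bit list by recursive halving."""
    if all(bits):
        return 1            # pure-1 component: no need to descend
    if not any(bits):
        return 0            # pure-0 component: no need to descend
    half = len(bits) // 2
    return (build_p1(bits[:half]), build_p1(bits[half:]))

def p_and(x, y):
    """AND two P1-trees built over bit lists of the same length."""
    if x == 0 or y == 0:
        return 0
    if x == 1:
        return y
    if y == 1:
        return x
    left, right = p_and(x[0], y[0]), p_and(x[1], y[1])
    return 0 if left == 0 and right == 0 else (left, right)

def expand(node, n):
    """Decompress a P1-tree back into its n-bit list (lossless)."""
    if node in (0, 1):
        return [node] * n
    half = n // 2
    return expand(node[0], half) + expand(node[1], n - half)

a = [1, 1, 1, 1, 0, 0, 1, 0]
b = [1, 1, 0, 1, 0, 0, 1, 1]
anded = expand(p_and(build_p1(a), build_p1(b)), len(a))
```

The AND stops descending as soon as either operand is pure at a node, which is where the speed-up over bit-by-bit processing comes from in this sketch.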

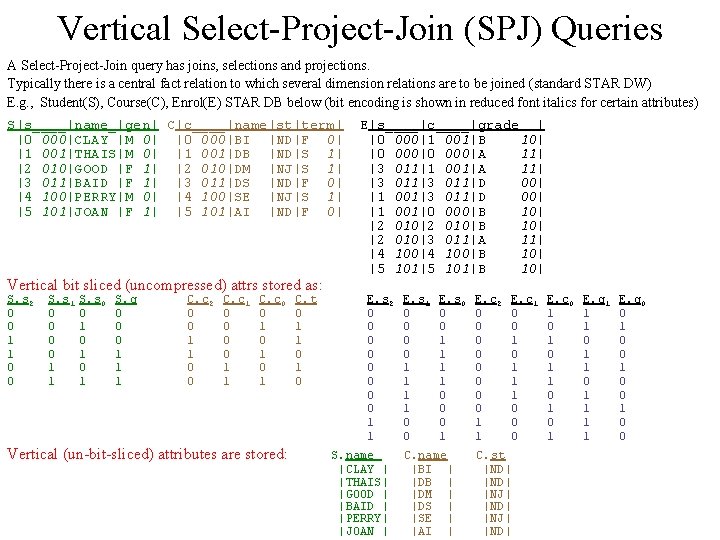

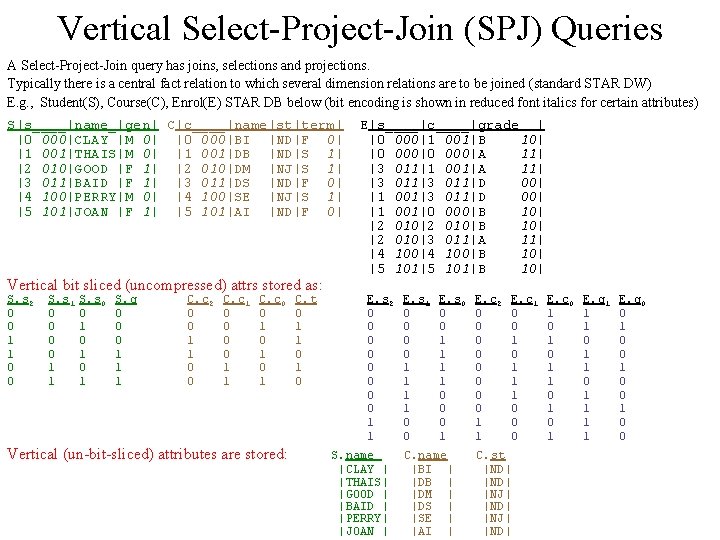

Vertical Select-Project-Join (SPJ) Queries

A Select-Project-Join query has joins, selections and projections. Typically there is a central fact relation to which several dimension relations are to be joined (standard STAR DW). E.g., the Student (S), Course (C), Enrol (E) STAR DB below (bit encodings are shown next to certain attributes).

S:

| s | s bits | name | gen | gen bit |
|---|--------|-------|-----|---------|
| 0 | 000 | CLAY | M | 0 |
| 1 | 001 | THAIS | M | 0 |
| 2 | 010 | GOOD | F | 1 |
| 3 | 011 | BAID | F | 1 |
| 4 | 100 | PERRY | M | 0 |
| 5 | 101 | JOAN | F | 1 |

C:

| c | c bits | name | st | term | term bit |
|---|--------|------|----|------|----------|
| 0 | 000 | BI | ND | F | 0 |
| 1 | 001 | DB | ND | S | 1 |
| 2 | 010 | DM | NJ | S | 1 |
| 3 | 011 | DS | ND | F | 0 |
| 4 | 100 | SE | NJ | S | 1 |
| 5 | 101 | AI | ND | F | 0 |

E (blank c cells are missing in the extracted slide text):

| s | s bits | c | c bits | grade | grade bits |
|---|--------|---|--------|-------|------------|
| 0 | 000 | 1 | 001 | B | 10 |
| 0 | 000 |   |     | A | 11 |
| 3 | 011 | 1 | 001 | A | 11 |
| 3 | 011 |   |     | D | 00 |
| 1 | 001 | 3 | 011 | D | 00 |
| 1 | 001 | 0 | 000 | B | 10 |
| 2 | 010 |   |     | B | 10 |
| 2 | 010 | 3 | 011 | A | 11 |
| 4 | 100 |   |     | B | 10 |
| 5 | 101 |   |     | B | 10 |

Bit-encoded attributes are stored as vertical, bit-sliced (uncompressed) columns: S.s2, S.s1, S.s0, S.g0, C.c2, C.c1, C.c0, C.t0, E.s2, E.s1, E.s0, E.c2, E.c1, E.c0, E.g1, E.g0. The remaining attributes are stored as vertical (un-bit-sliced) columns: S.name, C.name, C.st.

[Figure: the bit-sliced and un-bit-sliced columns listed above, one vertical file per column.]
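Bit slicing a numeric attribute can be illustrated with the student ids 0 to 5 and their 3-bit codes from the S relation. The helper names `bit_slices` and `reassemble` are hypothetical; the sketch only shows the storage idea (one vertical bit column per bit position) and that it is lossless.

```python
def bit_slices(values, width):
    """Return `width` bit columns, most significant bit first."""
    return [[(v >> b) & 1 for v in values] for b in range(width - 1, -1, -1)]

def reassemble(slices):
    """Rebuild the original values from their bit columns."""
    width = len(slices)
    return [
        sum(bit << (width - 1 - i) for i, bit in enumerate(row_bits))
        for row_bits in zip(*slices)
    ]

student_ids = [0, 1, 2, 3, 4, 5]          # S.s with codes 000 .. 101
s2, s1, s0 = bit_slices(student_ids, 3)   # vertical columns S.s2, S.s1, S.s0
restored = reassemble([s2, s1, s0])
```

Each column is a plain bit vector, so it can be compressed into a P-tree or ANDed with other columns independently of the rest of the row.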

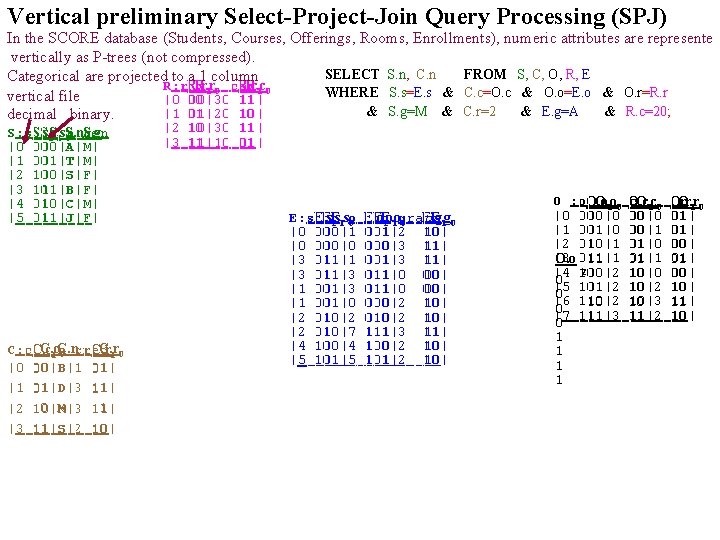

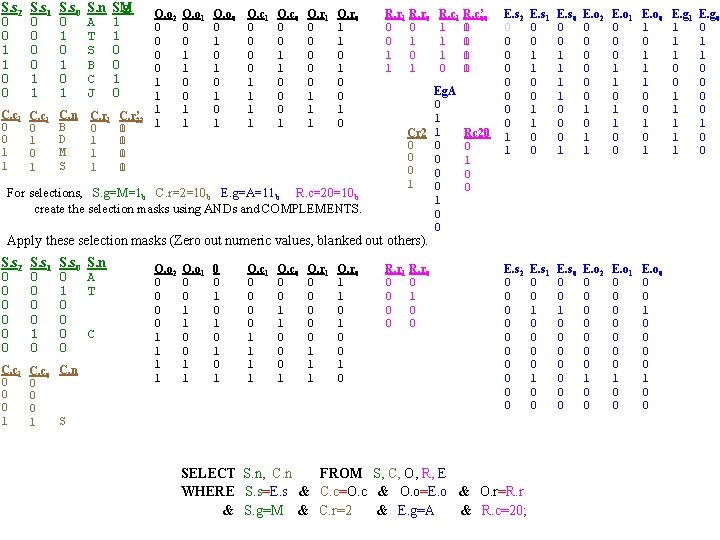

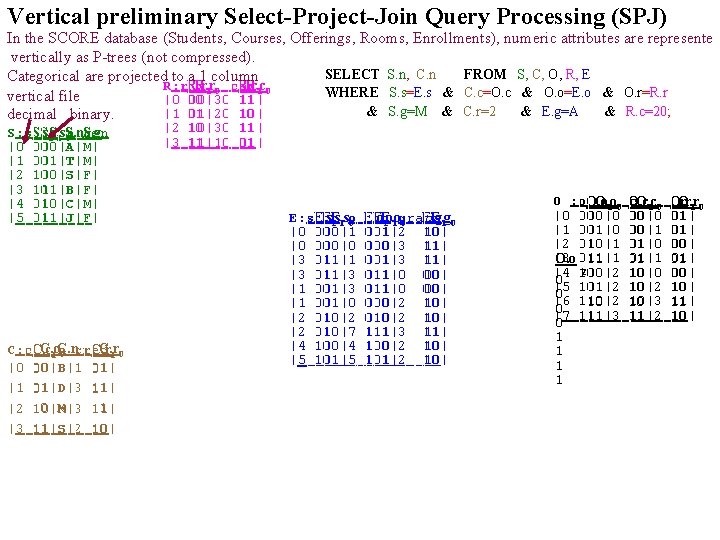

Vertical preliminary Select-Project-Join (SPJ) Query Processing

In the SCORE database (Students, Courses, Offerings, Rooms, Enrollments), numeric attributes are represented vertically as P-trees (not compressed). Categorical attributes are projected to a 1-column vertical file.

The query processed in this example is:

SELECT S.n, C.n FROM S, C, O, R, E WHERE S.s=E.s & C.c=O.c & O.o=E.o & O.r=R.r & S.g=M & C.r=2 & E.g=A & R.c=20;

The relations, shown on the slide in both decimal and binary form:

- S(s, n, gen): six students with names A, T, S, B, C, J and genders M, M, F, F, M, F.
- C(c, n, cred): c = 0..3 with names B, D, M, S and credits (C.r) 1, 3, 3, 2.
- R(r, cap): r = 0..3 with capacities (R.c) 30, 20, 30, 10, encoded as 11, 10, 11, 01.
- O(o, c, r): eight offerings, o = 0..7.
- E(s, o, grade): ten enrollment rows.

[Figure: decimal and bit-sliced listings of S, C, R, O and E.]

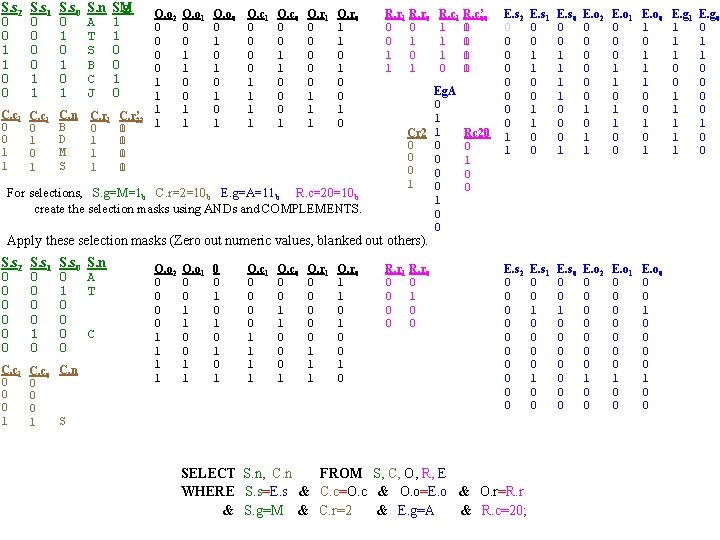

For the selections S.g=M (1b), C.r=2 (10b), E.g=A (11b) and R.c=20 (10b), create the selection masks using ANDs and COMPLEMENTS:

- SM (S.g=M): 1 1 0 0 1 0
- Cr2 (C.r=2): 0 0 0 1
- Rc20 (R.c=20): 0 1 0 0
- EgA (E.g=A): E.g1 AND E.g0

Then apply these selection masks (zeroing out numeric values, blanking out others). After masking, S.n retains A, T and C, and C.n retains S.

[Figure: bit slices of S, C, O, R and E before and after the selection masks are applied.]
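Building a selection mask from bit slices with ANDs and complements can be sketched as follows. The helper names (`bit_slices`, `complement`, `and_all`, `equality_mask`) are hypothetical, and the bit columns are plain uncompressed lists. The data are the course credits 1, 3, 3, 2 and the room capacity codes 11, 10, 11, 01 from the SCORE example.

```python
def bit_slices(values, width):
    """Return `width` bit columns, most significant bit first."""
    return [[(v >> b) & 1 for v in values] for b in range(width - 1, -1, -1)]

def complement(bits):
    return [1 - b for b in bits]

def and_all(columns):
    """Row-wise AND of several bit columns."""
    return [int(all(row)) for row in zip(*columns)]

def equality_mask(values, width, target):
    """Mask of rows equal to `target`: AND each slice, complemented
    wherever the corresponding bit of `target` is 0."""
    chosen = []
    for i, column in enumerate(bit_slices(values, width)):
        target_bit = (target >> (width - 1 - i)) & 1
        chosen.append(column if target_bit else complement(column))
    return and_all(chosen)

course_credits = [1, 3, 3, 2]                 # C.r
room_cap_codes = [0b11, 0b10, 0b11, 0b01]     # R.c codes for 30, 20, 30, 10
cr2 = equality_mask(course_credits, 2, 0b10)  # C.r=2:  C.r1 AND NOT C.r0
rc20 = equality_mask(room_cap_codes, 2, 0b10) # R.c=20: R.c1 AND NOT R.c0
```

Both results agree with the masks Cr2 and Rc20 in the example: only the last course has 2 credits, and only room 1 has capacity 20.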

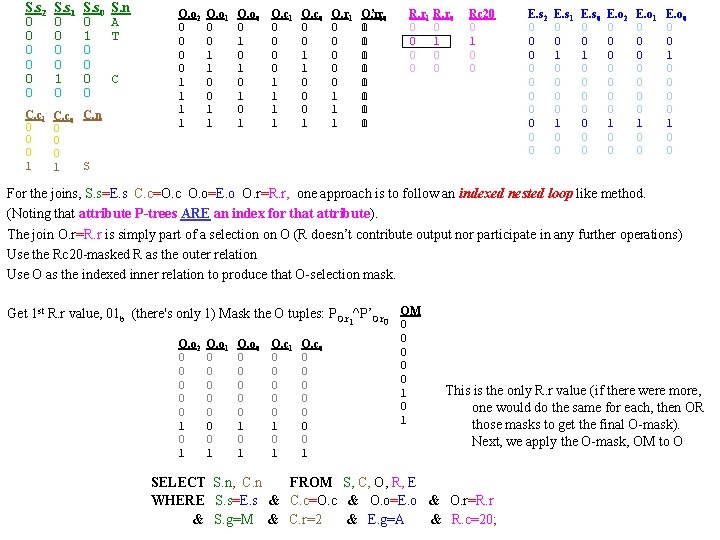

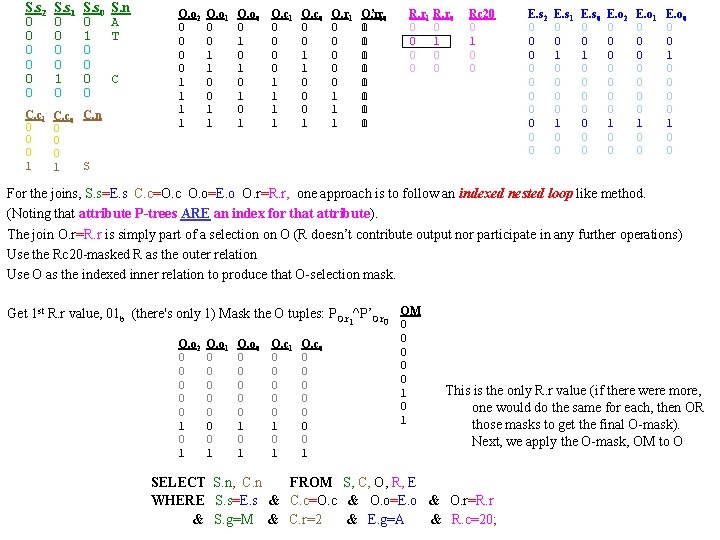

For the joins S.s=E.s, C.c=O.c, O.o=E.o and O.r=R.r, one approach is to follow an indexed-nested-loop-like method (noting that attribute P-trees ARE an index for that attribute).

The join O.r=R.r is simply part of a selection on O (R doesn't contribute output nor participate in any further operations):

- Use the Rc20-masked R as the outer relation.
- Use O as the indexed inner relation to produce that O-selection mask.
- Get the 1st R.r value, 01b (there is only one).
- Mask the O tuples: $P'_{O.r_1} \wedge P_{O.r_0}$, giving the O-mask OM.

This is the only R.r value (if there were more, one would do the same for each, then OR those masks to get the final O-mask). Next, we apply the O-mask OM to O.

[Figure: selection-masked bit slices of S, C, O, R and E, and the resulting O-mask OM.]
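The O.r=R.r step, treated as a selection on O, can be sketched as a loop over the masked outer values with the per-value masks ORed together. The function names are hypothetical, and the `offering_rooms` column is illustrative data (the O.r values of the example are not reproduced here); R.r and the Rc20 mask are taken from the example.

```python
def bit_slices(values, width):
    """Return `width` bit columns, most significant bit first."""
    return [[(v >> b) & 1 for v in values] for b in range(width - 1, -1, -1)]

def equality_mask(values, width, target):
    """AND the bit slices, complementing those where `target` has a 0 bit."""
    columns = []
    for i, column in enumerate(bit_slices(values, width)):
        if (target >> (width - 1 - i)) & 1:
            columns.append(column)
        else:
            columns.append([1 - b for b in column])
    return [int(all(row)) for row in zip(*columns)]

def semijoin_mask(inner, width, outer, outer_mask):
    """Mask of inner rows whose value matches some masked-in outer value."""
    mask = [0] * len(inner)
    surviving = {v for v, keep in zip(outer, outer_mask) if keep}
    for value in sorted(surviving):
        per_value = equality_mask(inner, width, value)
        mask = [m | e for m, e in zip(mask, per_value)]  # OR the masks
    return mask

room_ids = [0, 1, 2, 3]                     # R.r
rc20 = [0, 1, 0, 0]                         # selection mask for R.c=20
offering_rooms = [0, 0, 2, 3, 2, 0, 3, 1]   # illustrative O.r column
om = semijoin_mask(offering_rooms, 2, room_ids, rc20)
```

With a single surviving R.r value the loop runs once; with several, the OR accumulates one equality mask per value, as described above.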

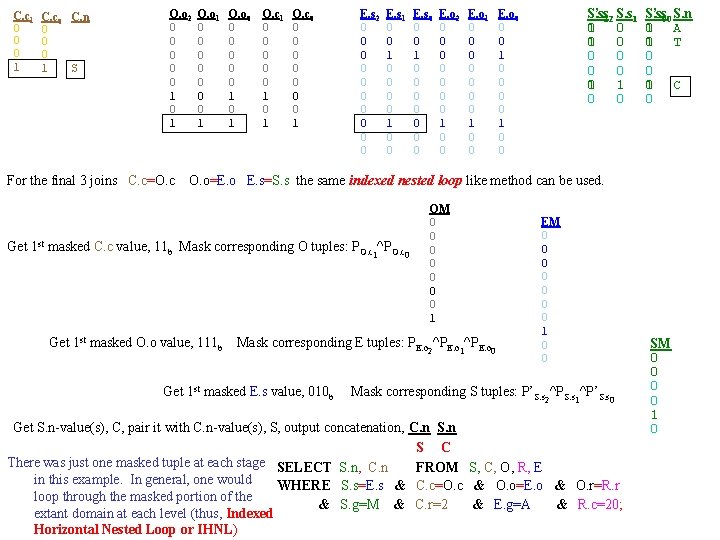

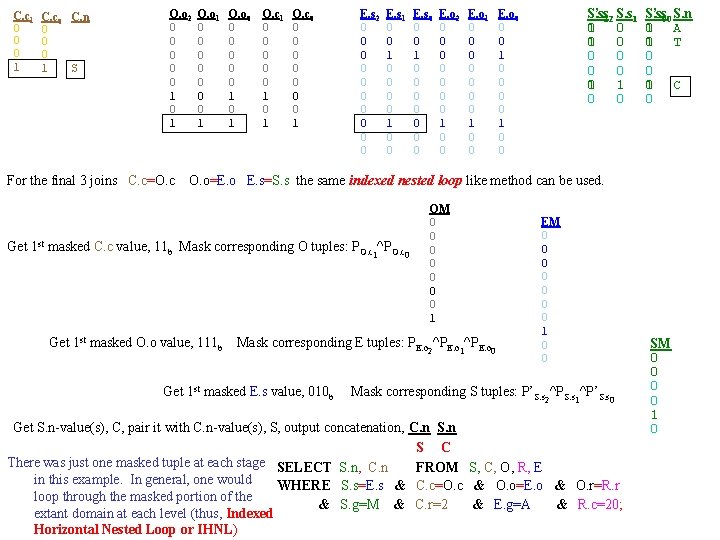

For the final 3 joins, C.c=O.c, O.o=E.o and E.s=S.s, the same indexed-nested-loop-like method can be used:

- Get the 1st masked C.c value, 11b. Mask the corresponding O tuples: $P_{O.c_1} \wedge P_{O.c_0}$.
- Get the 1st masked O.o value, 111b. Mask the corresponding E tuples: $P_{E.o_2} \wedge P_{E.o_1} \wedge P_{E.o_0}$.
- Get the 1st masked E.s value, 010b. Mask the corresponding S tuples: $P'_{S.s_2} \wedge P_{S.s_1} \wedge P'_{S.s_0}$.
- Get the S.n value(s), C, pair them with the C.n value(s), S, and output the concatenation.

There was just one masked tuple at each stage in this example. In general, one would loop through the masked portion of the extant domain at each level (thus, Indexed Horizontal Nested Loop, or IHNL).

[Figure: masked bit slices of C, O, E and S at each join stage, with the masks OM, EM and SM.]

Vertical Select-Project-Join-Classification Query

Given the previous SCORE training database (not presented as just one training table), predict what course a male student will register for, given that he got an A in a previous course held in a room with a capacity of 20. This is a matter of first applying the previous complex SPJ query to get the pertinent training table and then classifying the above unclassified sample (e.g., using 1-nearest-neighbour classification). The result of the SPJ is the single-row training set (S, C), and so the prediction is course=C.

Thank you.