Scoring our scoring: How do we know we are scoring well?

- Slides: 16

Scoring our scoring: How do we know we are scoring well?
Mike Taylor, BABS Singing Category Director
September 2017

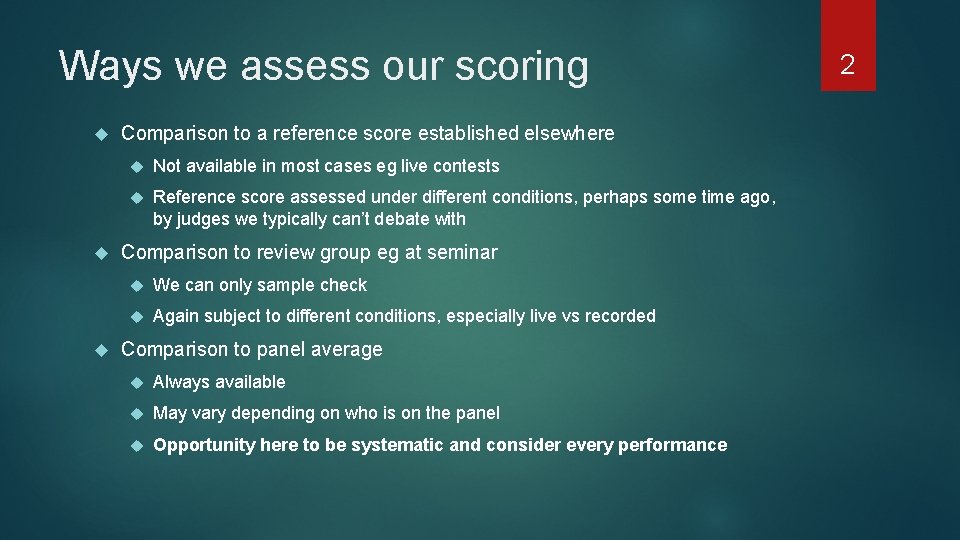

Ways we assess our scoring
- Comparison to a reference score established elsewhere
  - Not available in most cases, e.g. live contests
  - Reference score assessed under different conditions, perhaps some time ago, by judges we typically can't debate with
- Comparison to a review group, e.g. at a seminar
  - We can only sample check
  - Again subject to different conditions, especially live vs recorded
- Comparison to the panel average
  - Always available
  - May vary depending on who is on the panel
  - Opportunity here to be systematic and consider every performance

Comparison to the panel average is the best approach we have.
- We are used to using this.
- We currently flag a score with an asterisk if there is a 10-point spread, or if it is 5 points away from the panel average.
- But this only highlights performances where the score spread exceeds a predetermined threshold.
- It is possible to have a quality indicator for every performance: how close together the panel judges were.
- "Standard deviation" is a way to measure how close together our scores are on a performance.
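A minimal sketch of the current asterisk check, to show why it only catches performances over a threshold. The function name `asterisk_check` and its parameters are hypothetical. It assumes the spread is the highest minus the lowest score, that the panel average includes every judge, and that reaching a limit (not just exceeding it) triggers the flag. The slide does not say which of these applies.

```python
def asterisk_check(scores, spread_limit=10, distance_limit=5):
    """Threshold check for one performance's panel scores (hypothetical sketch).

    Assumes the spread is highest minus lowest score, that the panel average
    includes every judge, and that reaching a limit (>=) triggers the flag.
    """
    panel_avg = sum(scores) / len(scores)
    wide_spread = max(scores) - min(scores) >= spread_limit
    # Scores sitting at least distance_limit points from the panel average
    far_scores = [s for s in scores if abs(s - panel_avg) >= distance_limit]
    return wide_spread, far_scores

print(asterisk_check([60, 61, 65]))  # (False, [])
print(asterisk_check([60, 61, 71]))  # (True, [71])
```

Either way the answer is yes or no. A performance just under both limits looks the same as a perfectly tight one, which is the gap a per-performance measure such as standard deviation is meant to fill.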

What is standard deviation?
- The mathematical definition is of interest to some but not essential to know. Look it up here: https://en.wikipedia.org/wiki/Standard_deviation
- For our purposes, think of it as a sort of average distance from the panel average.
- Here's an example…
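For those who want the formula, the calculation in the worked examples can be written as follows. Here $x_i$ is the score from judge $i$, $n$ is the number of judges on the panel, and $\bar{x}$ is the panel average. This is the "population" form, which divides by $n$.

```latex
\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i,
\qquad
\sigma = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^2}
```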

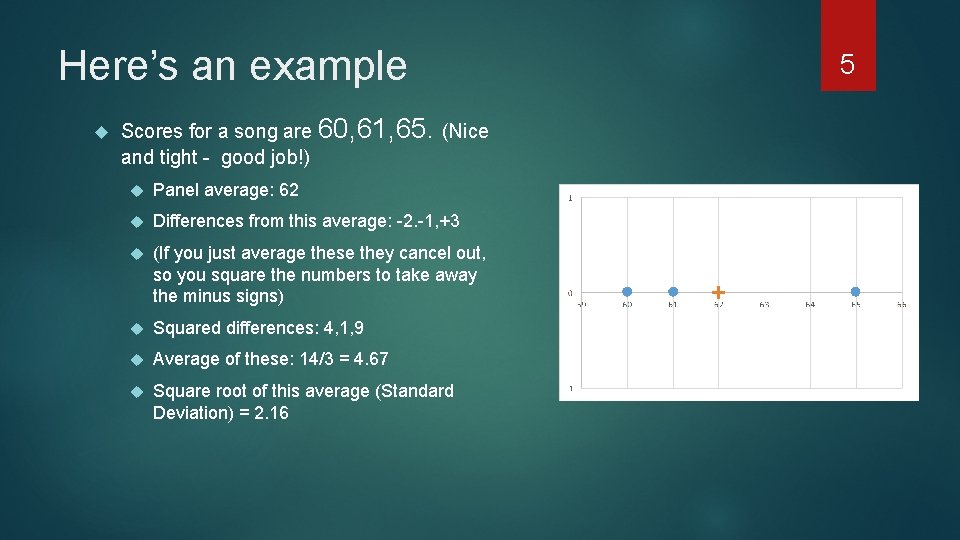

Here's an example
- Scores for a song are 60, 61, 65. (Nice and tight: good job!)
- Panel average: 62
- Differences from this average: -2, -1, +3 (if you just average these they cancel out, so you square the numbers to take away the minus signs)
- Squared differences: 4, 1, 9
- Average of these: 14/3 = 4.67
- Square root of this average (standard deviation) = 2.16
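The steps above can be sketched in a few lines of Python. The function name `panel_std_dev` is illustrative only. Each line mirrors one step of the worked example, including dividing by the number of judges.

```python
import math

def panel_std_dev(scores):
    """Standard deviation of one performance's panel scores, step by step."""
    n = len(scores)
    panel_avg = sum(scores) / n               # panel average
    diffs = [s - panel_avg for s in scores]   # differences from the average
    squared = [d * d for d in diffs]          # squaring removes the minus signs
    avg_squared = sum(squared) / n            # average of the squared differences
    return math.sqrt(avg_squared)             # square root = standard deviation

print(round(panel_std_dev([60, 61, 65]), 2))  # 2.16
print(round(panel_std_dev([60, 61, 71]), 2))  # 4.97
```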

Another example
- Scores for a song are 60, 61, 71. (We might want to worry about this one.)
- Panel average: 64
- Differences from this average: -4, -3, +7
- Squared differences: 16, 9, 49
- Average of these: 74/3 = 24.67
- Square root of this average (standard deviation) = 4.97
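If you reproduce these figures with a library or spreadsheet, check which version of standard deviation it uses. The examples divide by the number of judges, which is the "population" version. In Python's standard `statistics` module that is `pstdev`. The more commonly quoted `stdev` divides by one fewer than the number of scores, so for three judges it gives a noticeably larger figure.

```python
from statistics import pstdev, stdev

scores = [60, 61, 71]
# pstdev divides the summed squared differences by n (3 judges), as in the examples
print(round(pstdev(scores), 2))  # 4.97
# stdev divides by n - 1 (the "sample" version), so the figure is larger
print(round(stdev(scores), 2))   # 6.08
```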

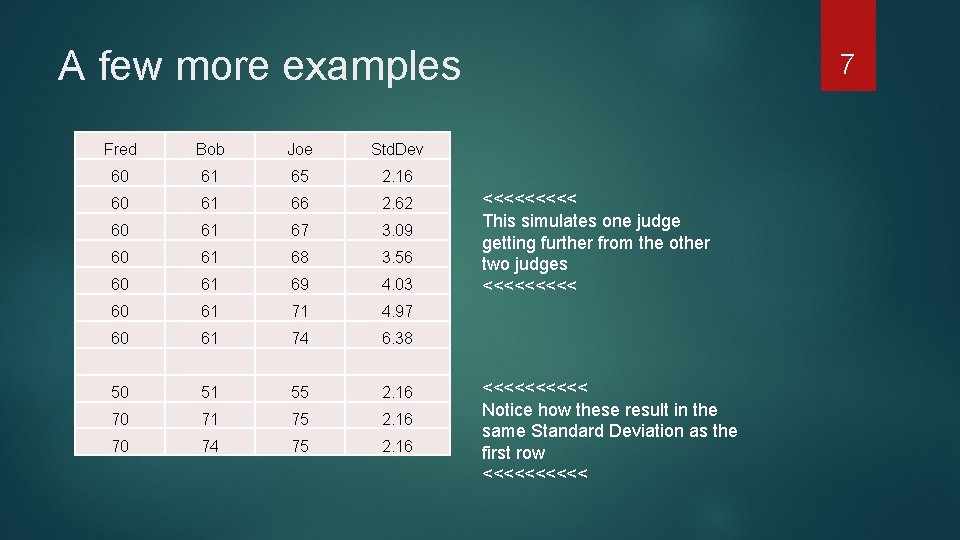

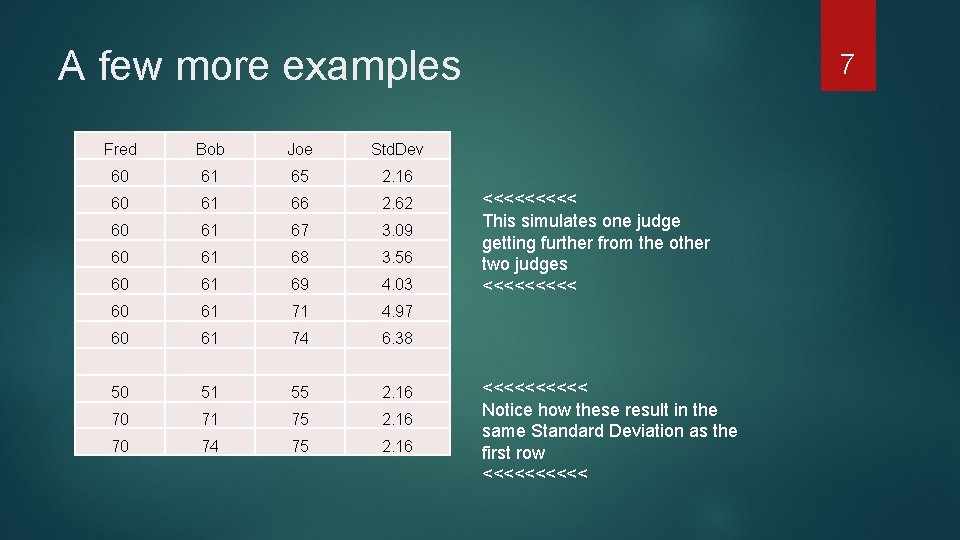

A few more examples

| Fred | Bob | Joe | Std. Dev. |
|------|-----|-----|-----------|
| 60 | 61 | 65 | 2.16 |
| 60 | 61 | 66 | 2.62 |
| 60 | 61 | 67 | 3.09 |
| 60 | 61 | 68 | 3.56 |
| 60 | 61 | 69 | 4.03 |
| 60 | 61 | 71 | 4.97 |
| 60 | 61 | 74 | 6.38 |
| 50 | 51 | 55 | 2.16 |
| 70 | 71 | 75 | 2.16 |
| 70 | 74 | 75 | 2.16 |

- The first seven rows simulate one judge (Joe) getting further from the other two judges.
- Notice how the last three rows result in the same standard deviation as the first row.
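A short check of the table using Python's `statistics.pstdev`. This reproduces every row and shows why the last three rows match the first. Adding the same amount to all three scores (50, 51, 55 and 70, 71, 75) leaves the gaps between judges unchanged. The row 70, 74, 75 is a mirror image of 70, 71, 75: its gaps are 4 and 1 instead of 1 and 4. Its differences from the average are -3, +1, +2 instead of -2, -1, +3, so the squared differences are the same.

```python
from statistics import pstdev

# Rows from the table: (Fred, Bob, Joe)
rows = [
    (60, 61, 65), (60, 61, 66), (60, 61, 67), (60, 61, 68),
    (60, 61, 69), (60, 61, 71), (60, 61, 74),
    (50, 51, 55), (70, 71, 75), (70, 74, 75),
]
for row in rows:
    print(row, round(pstdev(row), 2))
```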

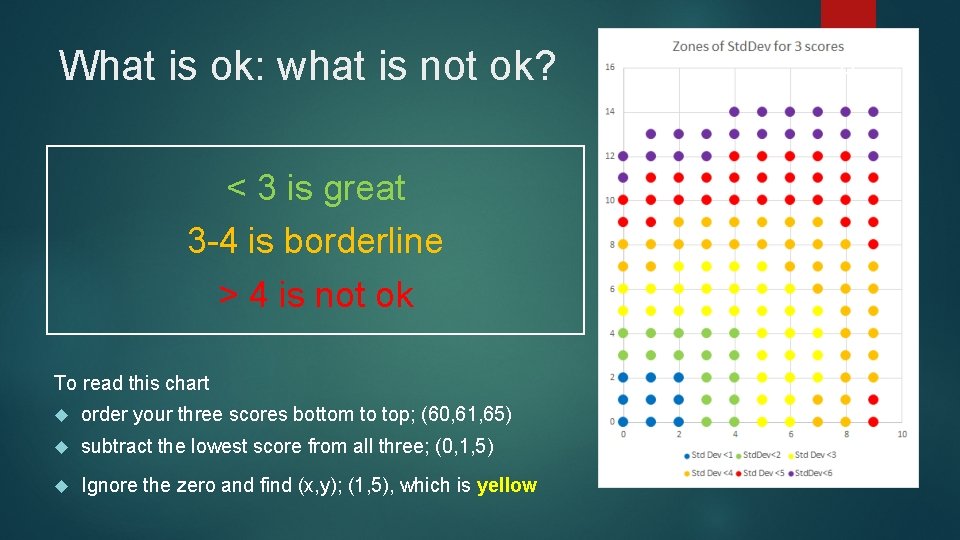

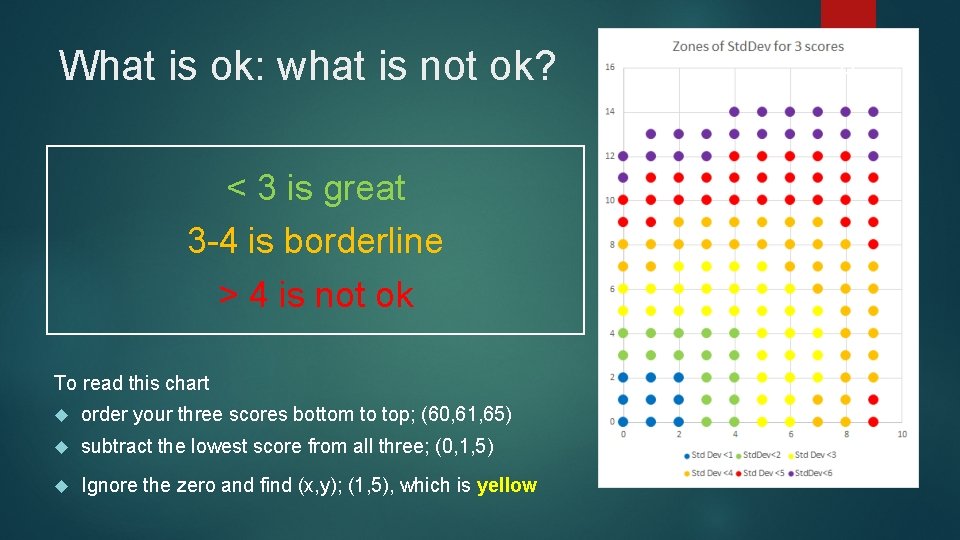

What is OK, and what is not OK?
- < 3 is great
- 3 to 4 is borderline
- > 4 is not OK

To read this chart, order your three scores from lowest to highest (60, 61, 65), subtract the lowest score from all three (0, 1, 5), then ignore the zero and find the point (x, y) = (1, 5), which is yellow.
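The chart can get away with two numbers because subtracting the lowest score does not change the standard deviation. The helpers below are a hypothetical sketch. `chart_point` follows the reading steps, and `rating` applies the bands listed above. Treating exactly 3 and exactly 4 as borderline is an assumption, because the slide does not say which band the boundaries belong to.

```python
from statistics import pstdev

def chart_point(scores):
    """Reduce three scores to the (x, y) point used to read the chart."""
    low, mid, high = sorted(scores)   # order the scores from lowest to highest
    return (mid - low, high - low)    # subtract the lowest and drop the zero

def rating(sd):
    """Bands from the slide; putting exactly 3 and 4 in 'borderline' is an assumption."""
    if sd < 3:
        return "great"
    if sd <= 4:
        return "borderline"
    return "not ok"

x, y = chart_point((60, 61, 65))
sd = pstdev((0, x, y))  # same value as pstdev((60, 61, 65)), since shifting changes nothing
print((x, y), round(sd, 2), rating(sd))
```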

So what?
- We can look at the standard deviation for each performance and see how we do overall in a whole contest.
- We can take the average of the standard deviations for all the performances and have a single figure to represent that panel's judging closeness.
- We can see whether we do better year on year for "closeness of scoring".
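A minimal sketch of the single contest figure, assuming each performance is stored as a list of the panel's scores for one song. The function name `contest_closeness` and the three-performance contest are hypothetical. The scores are reused from the examples rather than taken from a real contest.

```python
from statistics import mean, pstdev

def contest_closeness(performances):
    """Average of the per-performance standard deviations for one contest."""
    return mean(pstdev(scores) for scores in performances)

# Hypothetical contest built from the example scores
contest = [[60, 61, 65], [60, 61, 71], [70, 74, 75]]
print(round(contest_closeness(contest), 2))
```

Because each performance contributes one standard deviation, a single very loose performance raises the contest figure without hiding which performance caused it.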

How well do we do?

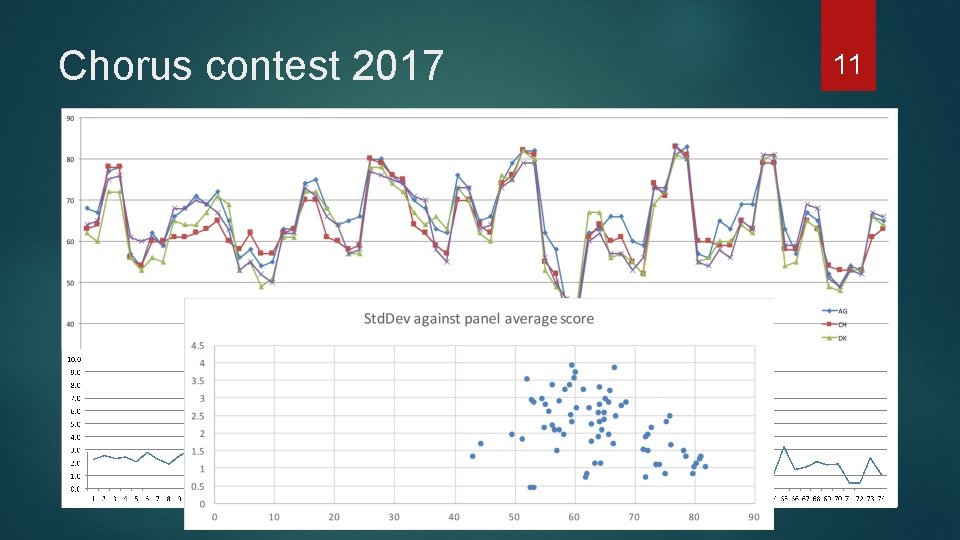

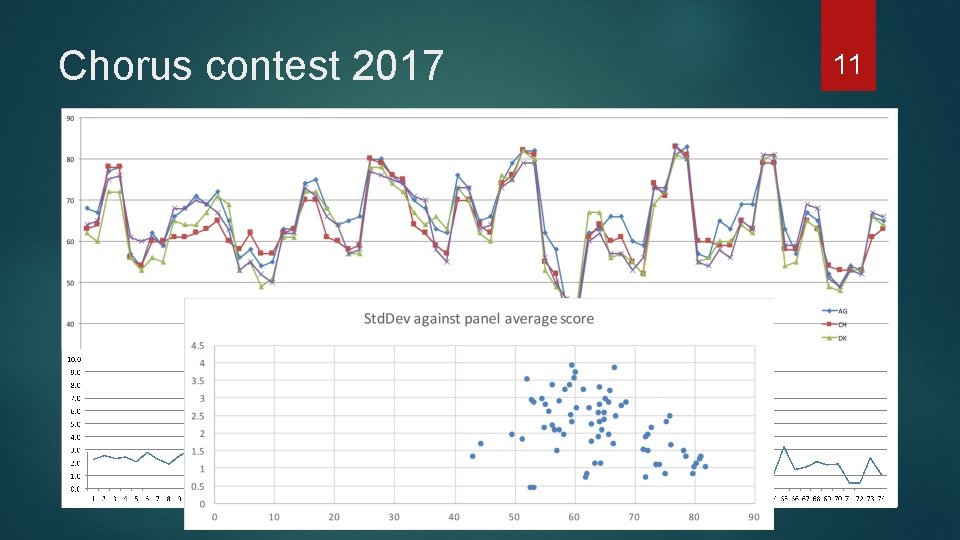

Chorus contest 2017

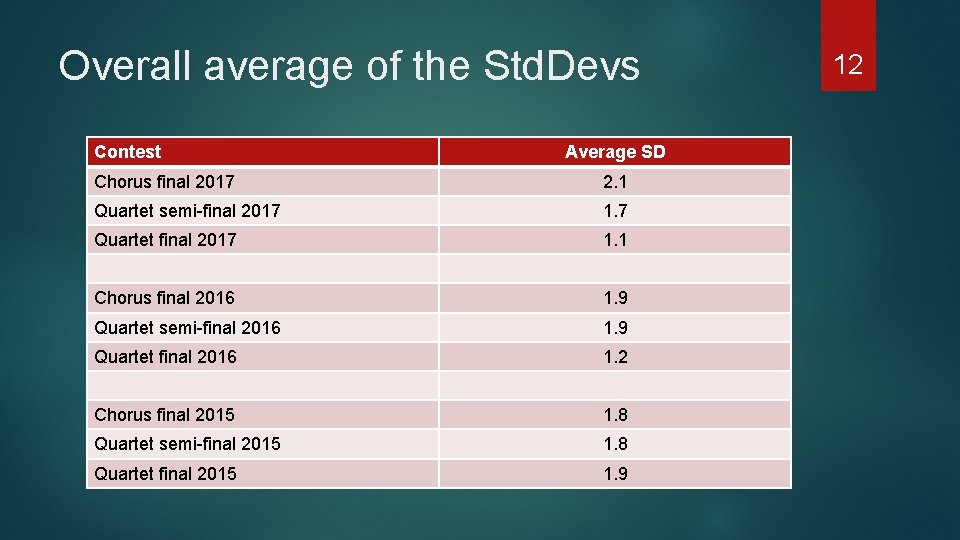

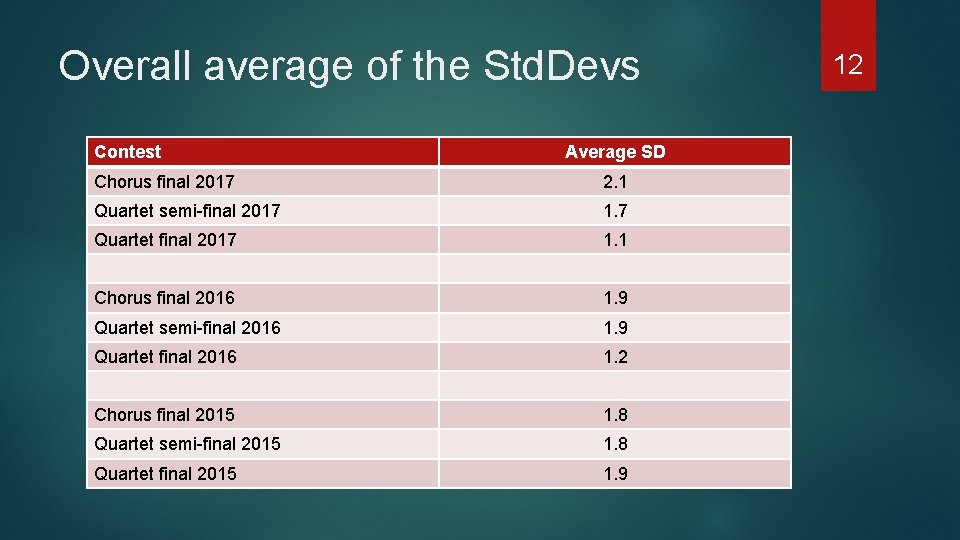

Overall average of the standard deviations

| Contest | Average SD |
|---------|------------|
| Chorus final 2017 | 2.1 |
| Quartet semi-final 2017 | 1.7 |
| Quartet final 2017 | 1.1 |
| Chorus final 2016 | 1.9 |
| Quartet semi-final 2016 | 1.9 |
| Quartet final 2016 | 1.2 |
| Chorus final 2015 | 1.8 |
| Quartet semi-final 2015 | 1.8 |
| Quartet final 2015 | 1.9 |

Observations
- We are usually better on quartet finals: some evidence that we are tighter at judging higher-scoring groups.
- We were slightly worse this year on choruses than previously. This was a four-person panel, so we are not sure how much of a change in this figure is significant.
- Quartets this year had the tightest scoring in the last three years.
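The observations can be checked directly against the table of average standard deviations. This sketch copies those figures into a dictionary. It lists the years in which the quartet final was tighter than the quartet semi-final, and finds the lowest-SD year for each contest.

```python
# Average SD per contest, copied from the table of results
avg_sd = {
    ("Chorus final", 2017): 2.1, ("Quartet semi-final", 2017): 1.7, ("Quartet final", 2017): 1.1,
    ("Chorus final", 2016): 1.9, ("Quartet semi-final", 2016): 1.9, ("Quartet final", 2016): 1.2,
    ("Chorus final", 2015): 1.8, ("Quartet semi-final", 2015): 1.8, ("Quartet final", 2015): 1.9,
}
years = [2015, 2016, 2017]

# Years in which the quartet final was tighter (lower SD) than the semi-final
finals_tighter = [y for y in years
                  if avg_sd[("Quartet final", y)] < avg_sd[("Quartet semi-final", y)]]
print(finals_tighter)

# Tightest year for each contest
for contest in ("Chorus final", "Quartet semi-final", "Quartet final"):
    best = min(years, key=lambda y: avg_sd[(contest, y)])
    print(contest, best)
```

The check only ranks the figures. It cannot say whether a difference such as 2.1 against 1.9 is significant, which is the caution raised about the four-person chorus panel.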

What about individual judge performance?
- Each judge has a distance from the panel average for each song.
- You can take an average of the magnitude of these distances across a contest and see how well the judge did (e.g. on average I was 1.6 points away from the panel average in the 2017 chorus final).
- You can also take a signed average to see whether the judge was overall kinder or meaner than the panel as a whole. This would be interesting to track across contests, to see whether a judge is always kinder or meaner.
- Having measured how "generous" a judge is in a contest, you can correct for this before measuring the average distance to the panel mean. That way you are measuring more accurately just the tendency to differ from the others.
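A minimal sketch of the three judge measures, assuming each song is stored as a dictionary of judge name to score. The function name `judge_summary`, the data layout and the scores are hypothetical. The bias correction shown is one possible reading of "correct for this": it subtracts the judge's average signed distance from each song's distance before averaging the magnitudes. The actual analysis may do it differently.

```python
from statistics import mean

def judge_summary(songs, judge):
    """Compare one judge with the panel average across a contest.

    songs: list of dicts mapping judge name -> score for one song (hypothetical layout).
    Returns (average distance, signed average, bias-corrected average distance).
    """
    # Signed distance from the panel average for each song (the average includes this judge)
    diffs = [song[judge] - mean(song.values()) for song in songs]
    bias = mean(diffs)                               # > 0 kinder than the panel, < 0 meaner
    avg_distance = mean(abs(d) for d in diffs)       # average magnitude of the distances
    corrected = mean(abs(d - bias) for d in diffs)   # same, after removing the overall bias
    return avg_distance, bias, corrected

songs = [
    {"Fred": 60, "Bob": 61, "Joe": 65},
    {"Fred": 70, "Bob": 71, "Joe": 75},
    {"Fred": 70, "Bob": 74, "Joe": 75},
]
for judge in ("Fred", "Bob", "Joe"):
    dist, bias, corrected = judge_summary(songs, judge)
    print(judge, round(dist, 2), round(bias, 2), round(corrected, 2))
```

In this made-up contest Joe is consistently about 2.7 points above the panel. Once that generosity is removed, his remaining distance is small. This is the separation between "kinder" and "different" that the slide describes.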

Caveats
- All this presupposes that being close together is a sign of quality. You can argue that we have multiple judges to accept and allow for variation. However, if you don't presuppose that closeness = goodness, you have no way to measure how well we are doing.
- Assessing an individual judge's performance depends on the other judges on the panel: one might have been right every time and the other two skewed the outcome. Over time we all judge with each other, and those who are consistently "in the middle" would emerge.

Acknowledgements
- The scoring analysis has been developed and produced after each contest by David King. He has done an incredible amount of work. He merits great praise, and the work merits greater visibility.