


WLCG Service Report Maria.Girone@cern.ch ~~~ WLCG Management Board, 22nd February 2011

Introduction

- This report covers the two weeks since the last MB report
- GGUS-SNOW testing & go-live
- Service Incident Reports (SIRs) received from IN2P3
  - Shared area issues; storage degradation issues
- SIRs also for PIC ZFS corruption, CERN DB upgrade & KIT batch
- Update on CNAF-BNL network issue (GGUS:61440)
- Some other issues that might require SIRs, e.g. GGUS unavailability over the past weekend

IN2P3 Issues (from 2010)

- Shared area issues: AFS latency
  - 8 July 2010 to 7 January 2011
  - A few % of LHCb jobs timed out (600 s) while setting up the LHCb environment, which indicates slow AFS response
  - The environment setup phase of LHCb jobs has been shown to be very intensive in terms of AFS access operations.
  - The number of concurrent jobs on all 24-core machines was reduced from 28 (24 grid jobs) to 21 (18 grid jobs). A development release (1.5.78) of the AFS client is also now deployed on the whole cluster; it was shown to be much more efficient than the latest public release (1.4.12), and reliable enough according to our local tests.
- dCache degradation
  - 23 September 2010 to 22 November 2010
  - Change implemented in checksum calculation
  - On the advice of dCache.org, a new feature of 1.9.5-22 was enabled to make better use of the public network interface (and not the OPN)

Recent Issues (CERN, PIC, KIT)

- CERN DB upgrade of LCGR
  - The LCGR database was down on Tuesday 25.01 from 09:30 to 17:00 due to problems encountered during the database upgrade to 10.2.0.5 (the scheduled and expected downtime was 2.5 hours).
  - Excessive messages in the AQ tables: bugs 7379282 and 7494199 (not yet fixed)
  - Some delays in updating the CERN site status board
- PIC ZFS corruption
  - 250 TB of ATLAS data partially unavailable (2 pool servers)
  - 21 Jan to 8 Feb
  - 60% of files available a few days later (R/O)
  - Two-week recovery period, after which a few hundred files could not be recovered (corrupted)
  - Still some issues to be resolved: h/w failures during scheduled power-off and the ZFS corruption itself
- KIT batch degradation
  - One of two GridKa subclusters was unavailable or degraded for several days
  - KIT hit a bug introduced in the latest version of PBSPro, which uses a new license system
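The size of the LCGR overrun can be worked out from the figures on this slide. The following is a minimal sketch, not part of the original report; the year 2011 is an assumption inferred from the report date, and the variable names are illustrative.

```python
from datetime import datetime, timedelta

# Times quoted in the report: LCGR down on Tuesday 25.01 from 09:30 to 17:00.
# The year (2011) is an assumption based on the date of the MB report.
outage_start = datetime(2011, 1, 25, 9, 30)
outage_end = datetime(2011, 1, 25, 17, 0)

# Scheduled and expected downtime, as stated in the report.
scheduled = timedelta(hours=2.5)

actual = outage_end - outage_start   # real length of the outage
overrun = actual - scheduled         # time beyond the scheduled window
ratio = actual / scheduled           # actual downtime relative to the plan

print(f"actual downtime: {actual}")
print(f"overrun:         {overrun}")
print(f"actual / scheduled: {ratio:.1f}")
```

Under these assumptions the outage lasted 7.5 hours, which is 5 hours longer than planned.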

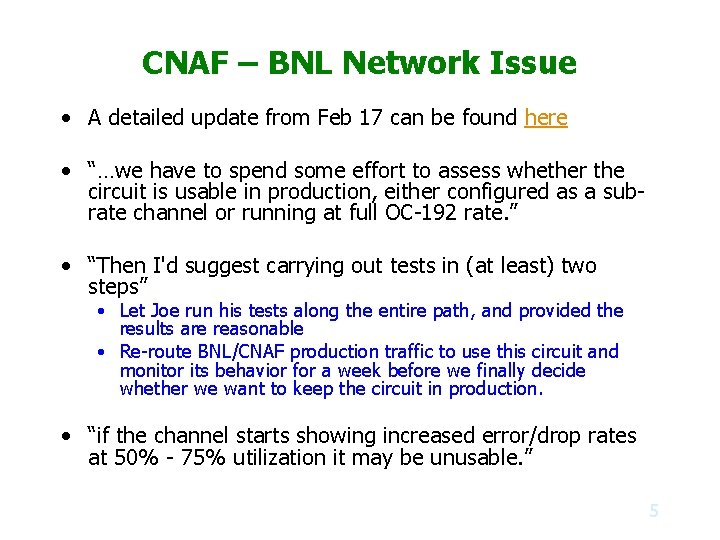

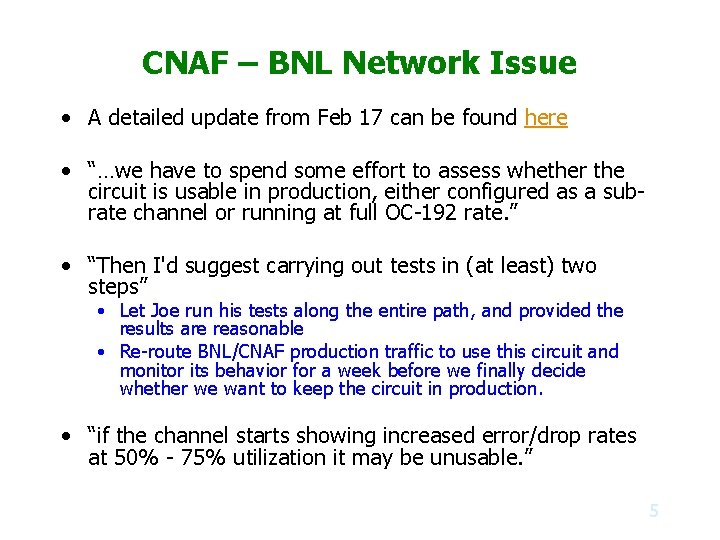

CNAF-BNL Network Issue

- A detailed update from Feb 17 can be found here
- “…we have to spend some effort to assess whether the circuit is usable in production, either configured as a subrate channel or running at full OC-192 rate.”
- “Then I'd suggest carrying out tests in (at least) two steps”
  - Let Joe run his tests along the entire path, and provided the results are reasonable
  - Re-route BNL/CNAF production traffic to use this circuit and monitor its behavior for a week before we finally decide whether we want to keep the circuit in production.
- “if the channel starts showing increased error/drop rates at 50% - 75% utilization it may be unusable.”

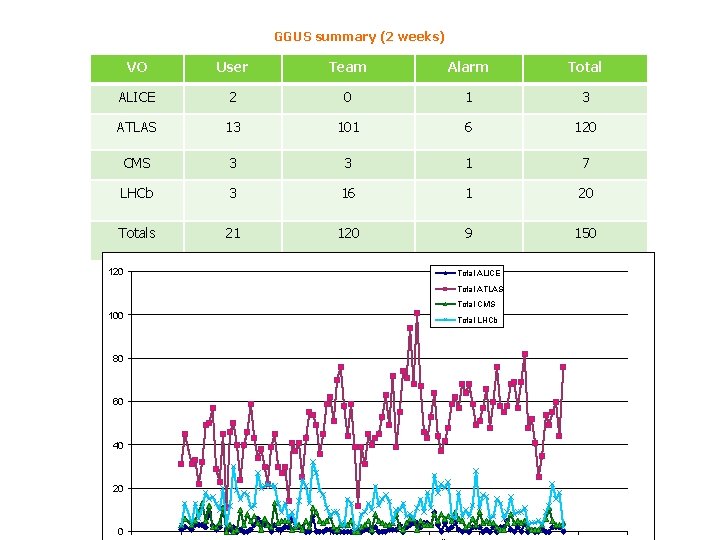

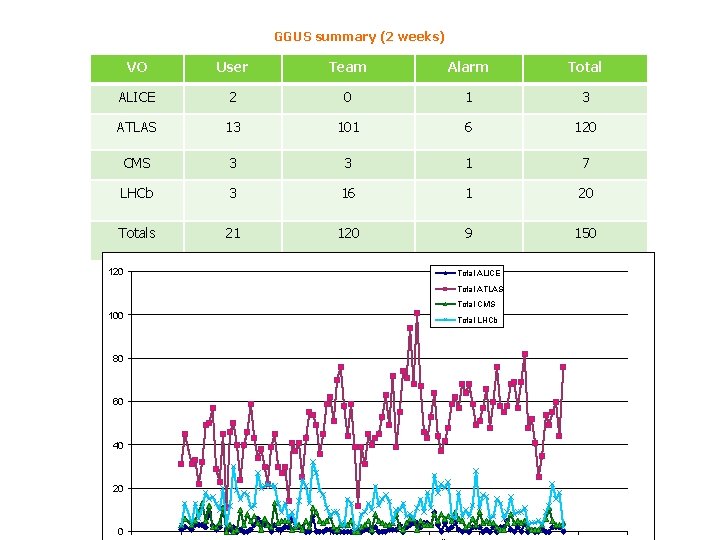

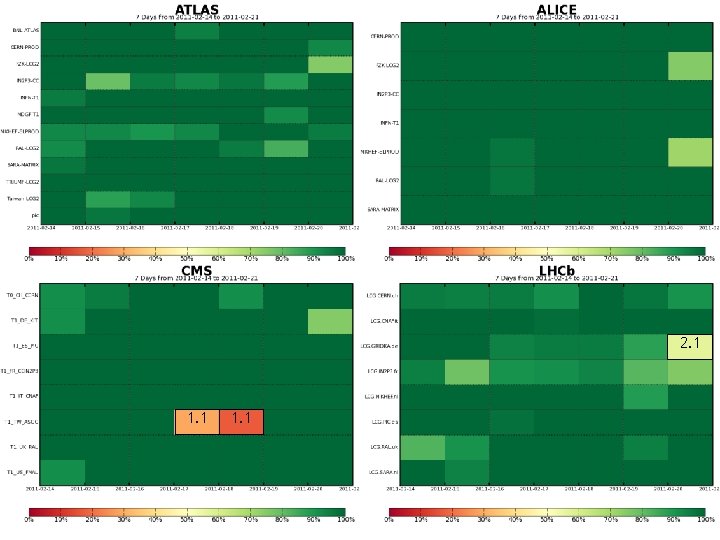

GGUS summary (2 weeks)

| VO | User | Team | Alarm | Total |
| --- | --- | --- | --- | --- |
| ALICE | 2 | 0 | 1 | 3 |
| ATLAS | 13 | 101 | 6 | 120 |
| CMS | 3 | 3 | 1 | 7 |
| LHCb | 3 | 16 | 1 | 20 |
| Totals | 21 | 120 | 9 | 150 |

[Bar chart: total tickets per VO (ALICE, ATLAS, CMS, LHCb)]
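The row and column totals in the GGUS summary can be cross-checked with a few lines of Python. This is only an illustrative sketch using the numbers from the table; the dictionary layout and variable names are assumptions, not part of any GGUS tooling.

```python
# Ticket counts per VO and ticket type, copied from the GGUS summary table.
tickets = {
    "ALICE": {"User": 2, "Team": 0, "Alarm": 1},
    "ATLAS": {"User": 13, "Team": 101, "Alarm": 6},
    "CMS": {"User": 3, "Team": 3, "Alarm": 1},
    "LHCb": {"User": 3, "Team": 16, "Alarm": 1},
}

# Total per VO (the "Total" column of the table).
row_totals = {vo: sum(counts.values()) for vo, counts in tickets.items()}

# Total per ticket type (the "Totals" row of the table).
col_totals = {
    kind: sum(counts[kind] for counts in tickets.values())
    for kind in ("User", "Team", "Alarm")
}

grand_total = sum(row_totals.values())
atlas_share = row_totals["ATLAS"] / grand_total

print(row_totals)
print(col_totals)
print(f"grand total: {grand_total}, ATLAS share: {atlas_share:.0%}")
```

The computed totals agree with the table, and they show that ATLAS accounts for 120 of the 150 tickets, almost all of them TEAM tickets.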

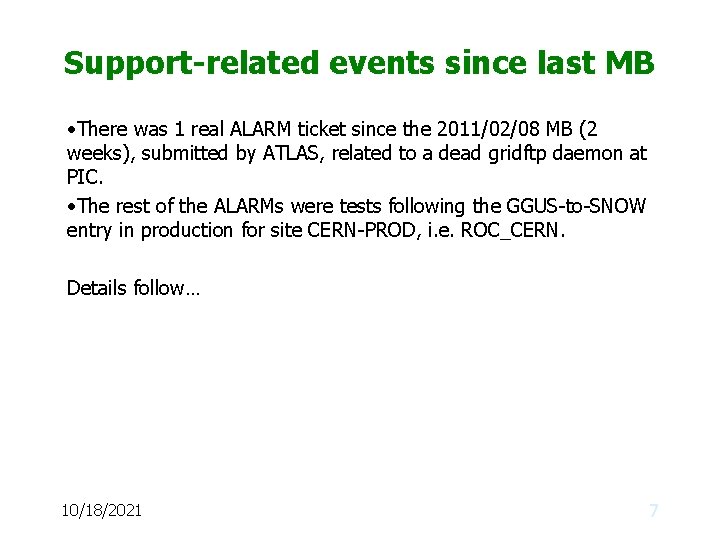

Support-related events since last MB

- There was 1 real ALARM ticket since the 2011/02/08 MB (2 weeks), submitted by ATLAS, related to a dead gridftp daemon at PIC.
- The rest of the ALARMs were tests following the GGUS-to-SNOW entry in production for site CERN-PROD, i.e. ROC_CERN. Details follow…

ATLAS ALARM -> TRANSFERS TO/FROM PIC FAIL

| Time (UTC) | What happened |
| --- | --- |
| 2011/02/10 05:44 | GGUS ALARM ticket, automatic email notification to tier1-alarms@pic.es AND automatic assignment to NGI_Ibergrid. |
| 2011/02/10 06:34 | Site admin finds gridftp daemons dead and restarts them. |
| 2011/02/10 06:52 | Site sets ticket to ‘solved’ as transfers restarted. Related TEAM ticket GGUS:67200 is solved around 11 am. |
| 2011/02/10 07:15 | Submitter sets the ticket to status ‘verified’. |

- https://gus.fzk.de/ws/ticket_info.php?ticket=67205
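The response times implied by the ALARM timeline above can be derived directly from the timestamps. The snippet below is a small sketch for that arithmetic only; the event labels are shortened paraphrases and the variable names are illustrative.

```python
from datetime import datetime

FMT = "%Y/%m/%d %H:%M"  # timestamp format used in the timeline (UTC)

# Events copied from the ALARM ticket timeline; labels are paraphrased.
events = [
    ("ALARM ticket opened", "2011/02/10 05:44"),
    ("gridftp daemons restarted", "2011/02/10 06:34"),
    ("ticket set to solved", "2011/02/10 06:52"),
    ("ticket set to verified", "2011/02/10 07:15"),
]

opened = datetime.strptime(events[0][1], FMT)

# Minutes elapsed between ticket submission and each later event.
elapsed_minutes = {
    label: int((datetime.strptime(stamp, FMT) - opened).total_seconds() // 60)
    for label, stamp in events[1:]
}

for label, minutes in elapsed_minutes.items():
    print(f"{label}: {minutes} min after the ALARM was opened")
```

On these timestamps the daemons were restarted 50 minutes after the ALARM was raised, and the ticket was verified by the submitter after 91 minutes.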

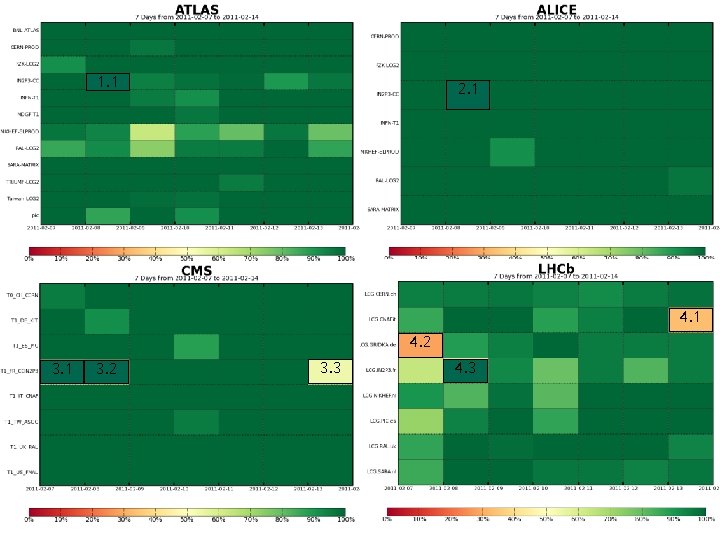

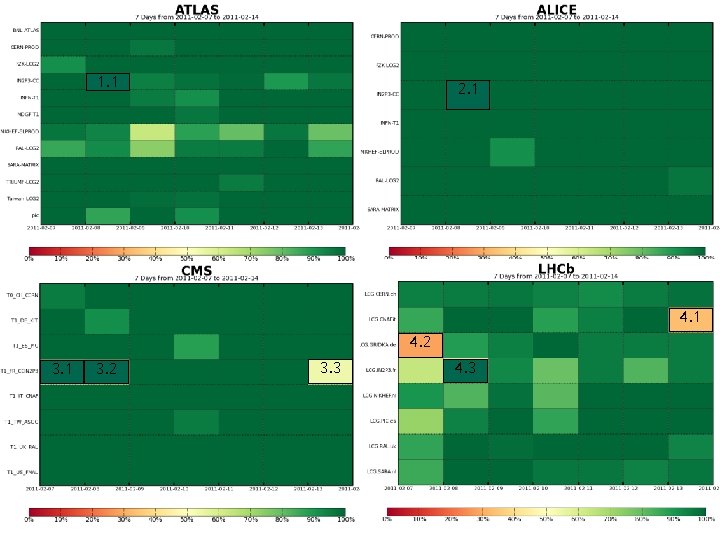

[Availability plot annotations: 1.1, 2.1, 4.2, 3.1, 3.2, 3.3, 4.3]

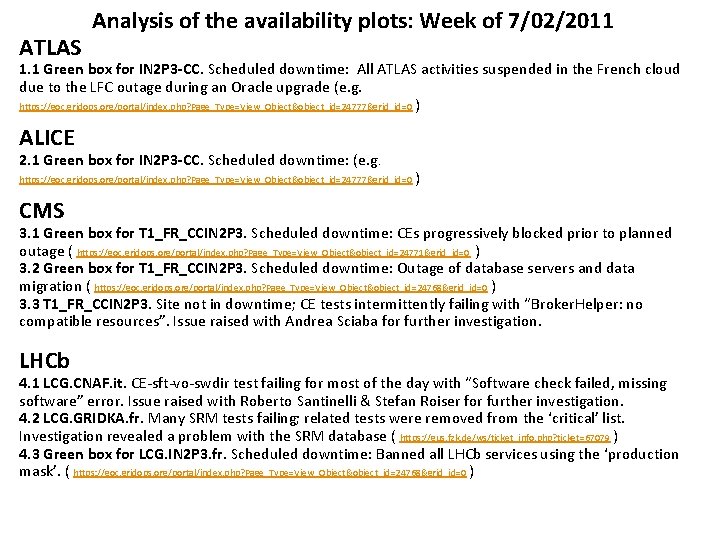

Analysis of the availability plots: Week of 7/02/2011

ATLAS

- 1.1 Green box for IN2P3-CC. Scheduled downtime: all ATLAS activities suspended in the French cloud due to the LFC outage during an Oracle upgrade (e.g. https://goc.gridops.org/portal/index.php?Page_Type=View_Object&object_id=24777&grid_id=0 )

ALICE

- 2.1 Green box for IN2P3-CC. Scheduled downtime (e.g. https://goc.gridops.org/portal/index.php?Page_Type=View_Object&object_id=24777&grid_id=0 )

CMS

- 3.1 Green box for T1_FR_CCIN2P3. Scheduled downtime: CEs progressively blocked prior to planned outage ( https://goc.gridops.org/portal/index.php?Page_Type=View_Object&object_id=24771&grid_id=0 )
- 3.2 Green box for T1_FR_CCIN2P3. Scheduled downtime: outage of database servers and data migration ( https://goc.gridops.org/portal/index.php?Page_Type=View_Object&object_id=24768&grid_id=0 )
- 3.3 T1_FR_CCIN2P3. Site not in downtime; CE tests intermittently failing with “BrokerHelper: no compatible resources”. Issue raised with Andrea Sciaba for further investigation.

LHCb

- 4.1 LCG.CNAF.it. CE-sft-vo-swdir test failing for most of the day with “Software check failed, missing software” error. Issue raised with Roberto Santinelli & Stefan Roiser for further investigation.
- 4.2 LCG.GRIDKA.de. Many SRM tests failing; related tests were removed from the ‘critical’ list. Investigation revealed a problem with the SRM database ( https://gus.fzk.de/ws/ticket_info.php?ticket=67079 )
- 4.3 Green box for LCG.IN2P3.fr. Scheduled downtime: all LHCb services banned using the ‘production mask’ ( https://goc.gridops.org/portal/index.php?Page_Type=View_Object&object_id=24768&grid_id=0 )

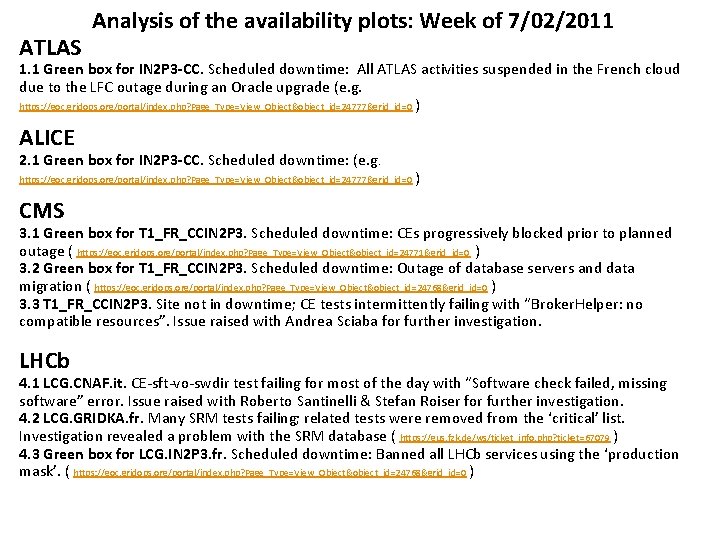

[Availability plot annotations: 2.1, 1.1]

![Analysis of the availability plots Week of 14022011 ATLAS No significant failures to report Analysis of the availability plots: Week of 14/02/2011 ATLAS [No significant failures to report]](https://slidetodoc.com/presentation_image_h2/d1278de4e29d2f4053148d7f21719eae/image-12.jpg)

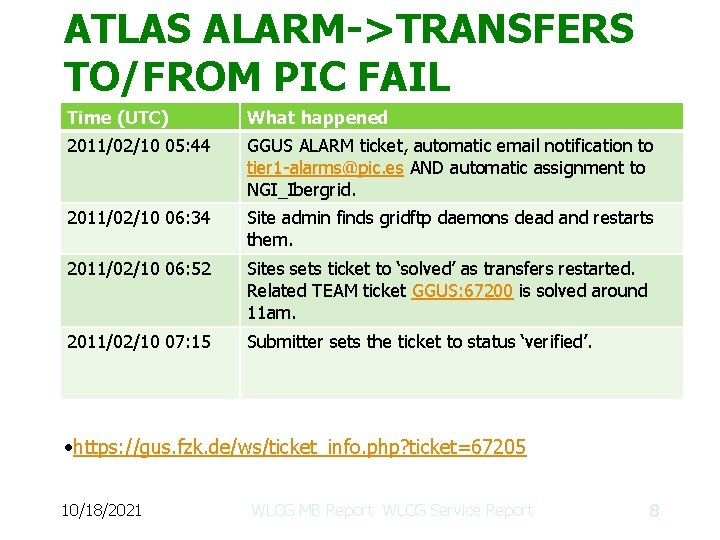

Analysis of the availability plots: Week of 14/02/2011

ATLAS

- [No significant failures to report]

ALICE

- [No significant failures to report]

CMS

- 1.1 T1_TW_ASGC. No downtime registered. SAM tests persistently failing due to a CASTOR overload. Issue resolved 2011-02-19 at 10.42 CET ( https://gus.fzk.de/ws/ticket_info.php?ticket=67573 ).

LHCb

- 2.1 LCG.GRIDKA.de. No downtime registered. SAM tests intermittently failing on CEs with the following errors:
  - “authentication failed: GSS Major Status: Authentication Failed GSS Minor Status Error Chain: init.c:499: globus_gss_assist_init_sec_context_async: Error during context initialization init_sec_contex”
  - “the ls –R command on a subdirectory of the shared area took more than 150 seconds while are expected less than 60 seconds”
- Note that all VOs experienced similar GSS errors at GRIDKA during the late morning/early afternoon of 2011-02-20, though the LHCb availability suffered most.

Conclusions

- Rather smooth running, as shown by GGUS tickets and Site Usability plots
- The number of SIRs received does not represent problems during the past two weeks; they refer to incidents in previous periods
- GGUS-SNOW migration on-going
- First beam splash events reported; looking forward to another successful year of LHC data taking

Oración a chiquitunga para pedir un milagro

Oración a chiquitunga para pedir un milagro Difference between progress report and status report

Difference between progress report and status report Sperling 1960

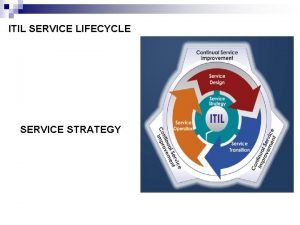

Sperling 1960 Itil service lifecycle service transition

Itil service lifecycle service transition Itil service lifecycle

Itil service lifecycle Csi 7 step improvement process

Csi 7 step improvement process Desired service and adequate service

Desired service and adequate service Service provider and service consumer

Service provider and service consumer Mpls class of service

Mpls class of service New service development process cycle

New service development process cycle Service owner vs service manager

Service owner vs service manager Service improvement plan for service desk

Service improvement plan for service desk Adp self service

Adp self service Top management and middle management

Top management and middle management