
- Slides: 11

Validity in Testing
“Are we testing what we think we’re testing?”

Components of test validity
• Construct validity – Are we measuring the theoretical language ‘construct’ that we claim to measure? This may be a general construct such as ‘reading ability’ or a specific one such as ‘pronoun referent awareness’.
• Content validity – Are the test items representative of all the skills or structures in question?
• Criterion-related validity – Does the test give similar results to a more thorough assessment?

Content validity
• The items test the targeted skill.
• The selection of items is appropriate for the skills: important skills get more items, and some less important skills may not be tested at all.
• The test accurately reflects the test specifications.
• Item selection is principled, not based on which items are easiest to create or score.
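One way to check whether important skills get more items and whether the test reflects its specifications is to compare the number of items per skill with the weights in the specifications. The sketch below is a minimal illustration in Python. The skill names, the weights in `SPEC_WEIGHTS`, the 5-percentage-point tolerance, and the function `coverage_report` are all hypothetical and serve only to show the idea.

```python
# Hypothetical test specification: the intended share of items for each skill.
# Skill names and weights are illustrative assumptions, not taken from the slides.
SPEC_WEIGHTS = {
    "main idea": 0.4,
    "vocabulary in context": 0.3,
    "pronoun reference": 0.2,
    "inference": 0.1,
}


def coverage_report(item_skills, spec_weights, tolerance=0.05):
    """Compare the actual share of items per skill with the specified share.

    item_skills: the target skill of each test item, in any order.
    Returns (report, unplanned). The report maps each specified skill to
    (actual_share, target_share, within_tolerance). The unplanned list holds
    skills that appear in the test but not in the specification.
    """
    total = len(item_skills)
    report = {}
    for skill, target in spec_weights.items():
        actual = item_skills.count(skill) / total if total else 0.0
        report[skill] = (actual, target, abs(actual - target) <= tolerance)
    unplanned = sorted(set(item_skills) - set(spec_weights))
    return report, unplanned


if __name__ == "__main__":
    # A hypothetical 20-item reading test.
    items = (["main idea"] * 8 + ["vocabulary in context"] * 6
             + ["pronoun reference"] * 2 + ["inference"] * 4)
    report, unplanned = coverage_report(items, SPEC_WEIGHTS)
    for skill, (actual, target, ok) in report.items():
        status = "ok" if ok else "CHECK"
        print(f"{skill:<22} actual {actual:.0%}  target {target:.0%}  {status}")
    print("skills not in the specification:", unplanned or "none")
```

A flagged skill is not automatically a validity problem. The check only makes the gap between the specifications and the actual test visible, so that someone can make a principled decision about it.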

Criterion-related validity
• Concurrent validity – the test and a more thorough assessment take place at the same time.
  – Example: 100 students take a 5-minute mini-interview to assess speaking skills, and 5 of them also take a more complete 45-minute interview. Do the scores of the 5 who took both match? The degree of agreement is called the validity coefficient. It is a correlation between the two sets of scores, where 1 means perfect agreement and 0 means no agreement.
  – If the scores agree closely, the short test has a degree of concurrent validity; if they do not, it lacks concurrent validity.
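The validity coefficient described above is usually computed as a correlation between the two sets of scores. Below is a minimal sketch in Python, assuming Pearson's correlation and using made-up scores for the five students who took both interviews. The function name `validity_coefficient` and all the numbers are hypothetical.

```python
import math


def validity_coefficient(test_scores, criterion_scores):
    """Pearson correlation between scores on a short test and on a fuller
    criterion assessment taken by the same students."""
    if len(test_scores) != len(criterion_scores) or len(test_scores) < 2:
        raise ValueError("need two equal-length score lists with at least two students")
    n = len(test_scores)
    mean_x = sum(test_scores) / n
    mean_y = sum(criterion_scores) / n
    # Sum of co-deviations, and sums of squared deviations around each mean.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(test_scores, criterion_scores))
    ss_x = sum((x - mean_x) ** 2 for x in test_scores)
    ss_y = sum((y - mean_y) ** 2 for y in criterion_scores)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("scores must vary to compute a correlation")
    return cov / math.sqrt(ss_x * ss_y)


if __name__ == "__main__":
    # Hypothetical scores for the 5 students who took both interviews.
    mini_interview = [3, 4, 6, 2, 5]   # 5-minute interview
    full_interview = [3, 5, 5, 2, 6]   # 45-minute interview
    r = validity_coefficient(mini_interview, full_interview)
    print(f"validity coefficient: {r:.2f}")  # 0.87
```

Pearson's r can in principle be negative. For a short test and a fuller assessment of the same skill, however, values close to 1 are what indicate strong agreement. On Python 3.10 or later, `statistics.correlation` returns the same Pearson value.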

Validity Coefficients Student Short Version 2 3 TWE Mario Short Version 1 6 Ana 3 3 3 Jenny 4 5 4 Asuka 6 5 5 Igor 4 6

Criterion-related validity
• Predictive validity – Can the test predict future success?
  – Have you noticed whether students who pass a test consistently do well later on?
  – In practice, this is often a subjective, anecdotal measure, built up by teachers who observe many students over a long period of time.
  – Reality check: there are MANY other factors that affect success (motivation, background knowledge, etc.).
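A teacher who wants to go beyond anecdote could compare how often students who passed and students who did not pass went on to do well. The sketch below assumes a hypothetical record format of (passed the test, did well later) pairs. The function name `success_rates` and the data are illustrative only. A clear gap between the two rates is consistent with predictive validity but does not prove it, because of the other factors listed above.

```python
import math


def success_rates(records):
    """Return (success rate of students who passed, success rate of those who did not).

    records: iterable of (passed_test, succeeded_later) boolean pairs.
    A group with no students gets NaN instead of raising ZeroDivisionError.
    """
    records = list(records)  # allow any iterable; we traverse it twice
    passed = [later for did_pass, later in records if did_pass]
    failed = [later for did_pass, later in records if not did_pass]

    def rate(outcomes):
        # True counts as 1 and False as 0, so the sum is the number of successes.
        return sum(outcomes) / len(outcomes) if outcomes else math.nan

    return rate(passed), rate(failed)


if __name__ == "__main__":
    # Hypothetical follow-up data for 9 students: (passed the test, did well later).
    records = ([(True, True)] * 4 + [(True, False)]
               + [(False, True)] + [(False, False)] * 3)
    pass_rate, fail_rate = success_rates(records)
    print(f"later success after passing: {pass_rate:.0%}")      # 80%
    print(f"later success after not passing: {fail_rate:.0%}")  # 25%
```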

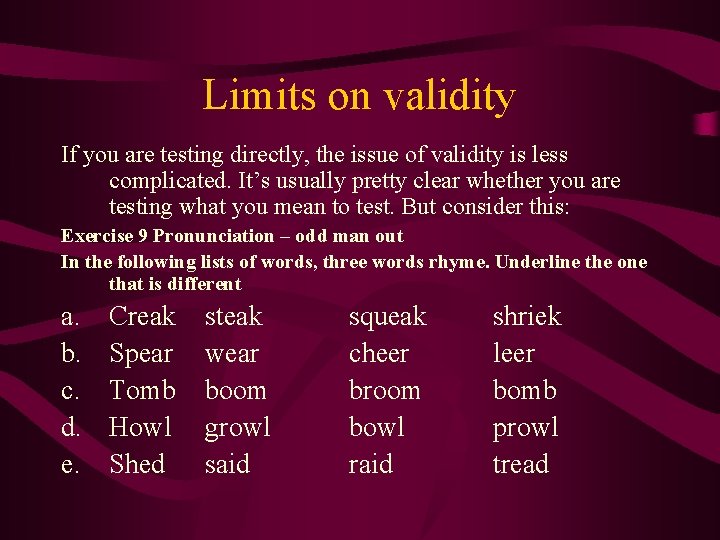

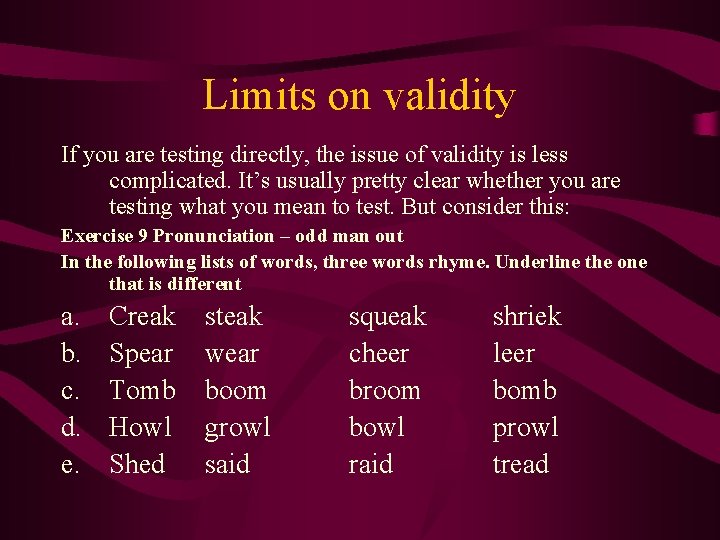

Limits on validity
If you are testing directly, the issue of validity is less complicated. It’s usually pretty clear whether you are testing what you mean to test. But consider this:

Exercise 9 Pronunciation – odd man out
In the following lists of words, three words rhyme. Underline the one that is different.
a. Creak, steak, squeak, shriek
b. Spear, wear, cheer, leer
c. Tomb, boom, broom, bomb
d. Howl, growl, bowl, prowl
e. Shed, said, raid, tread

Validity in scoring
• How items are scored affects their validity.
• If the construct is reading, scores on short answers that deduct points for punctuation and grammar are not valid measures of reading.
• An item that measures more than one construct is likely to be less valid.
How can you address this kind of problem?
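One way to keep a reading score focused on reading is to award points only for the key ideas an answer contains, after setting aside capitalization and punctuation. The sketch below is a deliberately simple illustration in Python. The question, the key-idea lists, the single-word matching, and the function names are hypothetical, and word matching is only a crude stand-in for a human rater's judgment of comprehension.

```python
import string

# Translation table that deletes ASCII punctuation, so mechanics cannot change the score.
_STRIP_PUNCTUATION = str.maketrans("", "", string.punctuation)


def normalize(answer):
    """Lower-case an answer and remove punctuation."""
    return answer.lower().translate(_STRIP_PUNCTUATION)


def score_reading_answer(answer, key_ideas):
    """Give one point per key idea the answer mentions.

    key_ideas: one list of acceptable single-word alternatives per idea,
    e.g. [["rain", "storm"], ["traffic"]]. Grammar, capitalization and
    punctuation do not affect the score.
    """
    words = set(normalize(answer).split())
    return sum(1 for alternatives in key_ideas if any(word in words for word in alternatives))


if __name__ == "__main__":
    # Hypothetical item: "Why did the bus arrive late?"
    key = [["rain", "storm"], ["traffic"]]
    print(score_reading_answer("Because of the heavy rain and the traffic.", key))  # 2
    print(score_reading_answer("the storm. and traffic", key))                      # 2
```

The second answer is ungrammatical and badly punctuated but still earns full credit, because the score depends only on the ideas it contains. A scheme that also deducted points for punctuation or grammar would mix a second construct into the score.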

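Face validity
A test has face validity if it looks, to the people who take and use it, as if it measures what it claims to measure. Teachers, students and administrators usually believe that:
• A test of grammar should test… grammar!
• A test of pronunciation requires… speaking!
• A test of writing means that a student must… write!
Do you agree?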

Making tests more valid
• Write detailed test specs
• Use direct testing whenever possible
• Check that scoring is based only on the target skill
• Keep tests reliable
How important is it to check for validity?

Considering Validity
• Find a test given at UABC (if possible, one you wrote or one you are familiar with).
• Make a judgment on its validity, considering content, criterion-related, scoring, and face validity.
• Discuss your judgment with several other people and present a short summary of the discussion to the whole group.

John Bunting (2004), presentation in the course “Testing, Assessment and Teaching: A Program for EFL Teachers at UABC”, Facultad de Idiomas, UABC.