Reliability and Validity Testing

Definitions
- Validity: the extent to which a test measures what it is designed to measure
- Reliability: the extent to which a test or measure is reproducible

Validity
- Logical (face): how much the measure obviously involves the performance
- Construct: how well the measure relates to theory
- Content: how well the outcome evaluates the intervention
- Criterion: how well the test measures against a set standard

Assessment of Validity
- Criterion validity
  - Concurrent (equivalence)
  - Predictive (prediction)
  - Prescriptive (guidance)

Bland-Altman
- Bias
- Dispersion of the bias
- Relationship of bias to value

M = experimental measured value
GS = gold standard measured value
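The three quantities named on this slide can be sketched in a few lines. This is a minimal illustration, not the lecturer's own calculation: the function name `bland_altman`, the sample values, the 1.96 multiplier for 95% limits, and the choice to regress the difference on the mean of the two methods are all assumptions made for the example.

```python
import numpy as np

def bland_altman(m, gs):
    """Return bias, SD of the differences, 95% limits, and slope of bias vs value."""
    m = np.asarray(m, dtype=float)    # M: experimental measured values
    gs = np.asarray(gs, dtype=float)  # GS: gold standard measured values
    diff = m - gs                     # per-subject difference
    pair_mean = (m + gs) / 2.0        # value plotted on the x-axis
    bias = diff.mean()                # systematic difference (bias)
    sd = diff.std(ddof=1)             # dispersion of the bias
    # Slope of difference against mean: does the bias change with the value?
    slope = np.polyfit(pair_mean, diff, 1)[0]
    return {
        "bias": bias,
        "sd": sd,
        "lower": bias - 1.96 * sd,
        "upper": bias + 1.96 * sd,
        "slope": slope,
    }

# Hypothetical paired measurements, for illustration only
m_values = [10.2, 11.5, 9.8, 12.1, 10.9]
gs_values = [10.0, 11.0, 10.0, 12.0, 10.5]
result = bland_altman(m_values, gs_values)
print(result)
```

A bias close to zero with narrow limits suggests the experimental measure agrees with the gold standard; a slope clearly different from zero suggests the bias depends on the size of the measurement.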

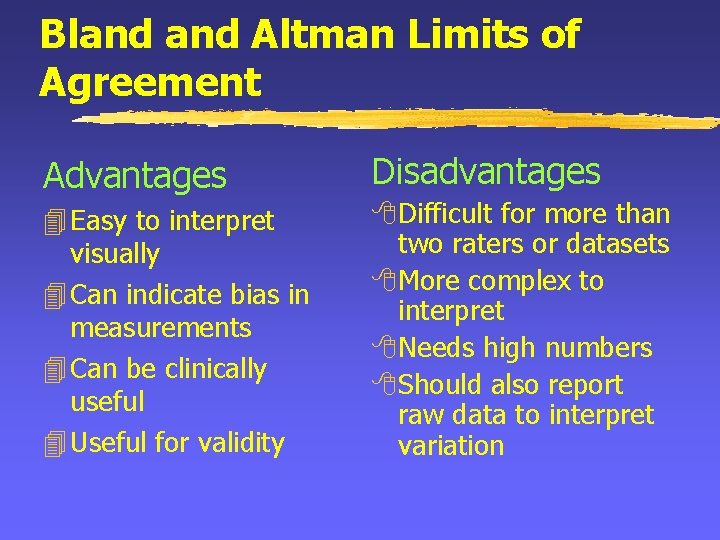

Bland-Altman Limits of Agreement
Advantages
- Easy to interpret visually
- Can indicate bias in measurements
- Can be clinically useful
- Useful for validity

Disadvantages
- Difficult for more than two raters or datasets
- More complex to interpret
- Needs high numbers
- Should also report raw data to interpret variation

Reliability
- A measure CANNOT be valid if it is NOT reliable
- However, a measure CAN BE reliable but NOT valid

Reliability
- Observed score = True score + Error score
- The true score is hard to evaluate, but we can estimate the error score
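A small simulation may help show why the error score can be estimated even though the true score is never observed. This is only a sketch under stated assumptions: the score distributions (true scores with SD 10, errors with SD 5), the two-trial design, and all variable names are invented for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 10_000

# Simulated scores: in real data the true score is unknown
true_score = rng.normal(50.0, 10.0, size=n)
trial_1 = true_score + rng.normal(0.0, 5.0, size=n)  # observed = true + error
trial_2 = true_score + rng.normal(0.0, 5.0, size=n)

# The true score cancels in the difference between trials, leaving only error.
# Var(trial_1 - trial_2) = 2 * Var(error), hence the division by sqrt(2).
diff = trial_1 - trial_2
error_sd_estimate = diff.std(ddof=1) / np.sqrt(2.0)

# Test-retest correlation approximates Var(true) / Var(observed)
reliability_estimate = np.corrcoef(trial_1, trial_2)[0, 1]

print(round(error_sd_estimate, 2), round(reliability_estimate, 2))
```

With these assumed variances the expected reliability is 100 / (100 + 25) = 0.8, and the estimated error SD should fall close to the simulated value of 5.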

Sources of Error: The Participants

Sources of Error: The Testing
- Poor directions
- Additional motivation
- Inconsistent protocol

Sources of Error: The Scoring
- The scorers
- Type of scoring system

Sources of Error: The Instrumentation
- Calibration
- Inaccuracies
- Sensitivity

Statistical techniques
- Pearson's r
- ICC
- Limits of agreement
- Cronbach's alpha
- Kappa statistic
- Weighted kappa statistic

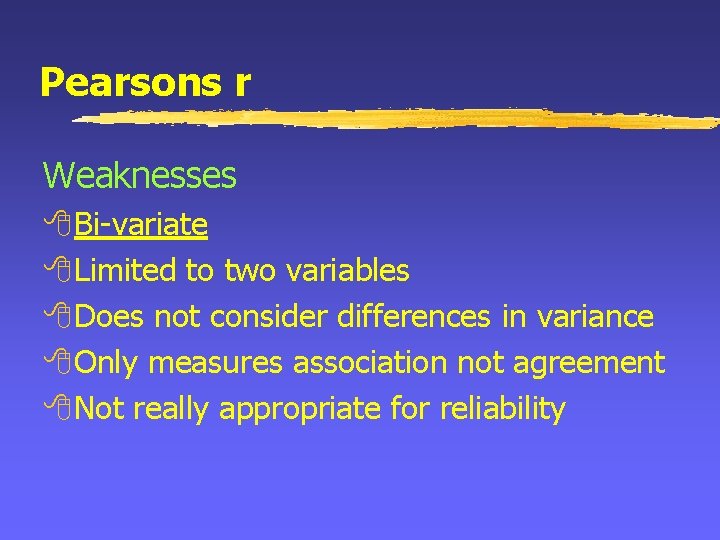

Pearson's r
Weaknesses
- Bi-variate
- Limited to two variables
- Does not consider differences in variance
- Only measures association, not agreement
- Not really appropriate for reliability
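The point that Pearson's r measures association rather than agreement can be illustrated with two hypothetical raters whose scores are perfectly correlated but never equal. The rater values below are invented for the example.

```python
import numpy as np

# Hypothetical scores: rater B always reports double rater A's score plus 5
rater_a = np.array([10.0, 12.0, 14.0, 16.0, 18.0])
rater_b = 2.0 * rater_a + 5.0

# Pearson's r is perfect because the relationship is exactly linear
r = np.corrcoef(rater_a, rater_b)[0, 1]

# Yet the raters disagree on every subject, by a large and growing amount
mean_difference = (rater_b - rater_a).mean()

print(r, mean_difference)
```

Here r equals 1 even though the two raters differ by 19 units on average, which is why a correlation alone says little about reliability.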

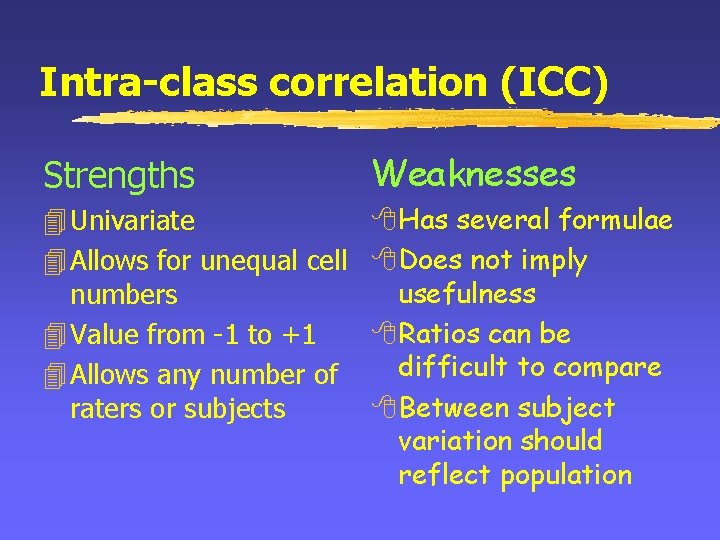

Intra-class correlation (ICC)
Strengths
- Univariate
- Allows for unequal cell numbers
- Value from -1 to +1
- Allows any number of raters or subjects

Weaknesses
- Has several formulae
- Does not imply usefulness
- Ratios can be difficult to compare
- Between-subject variation should reflect the population

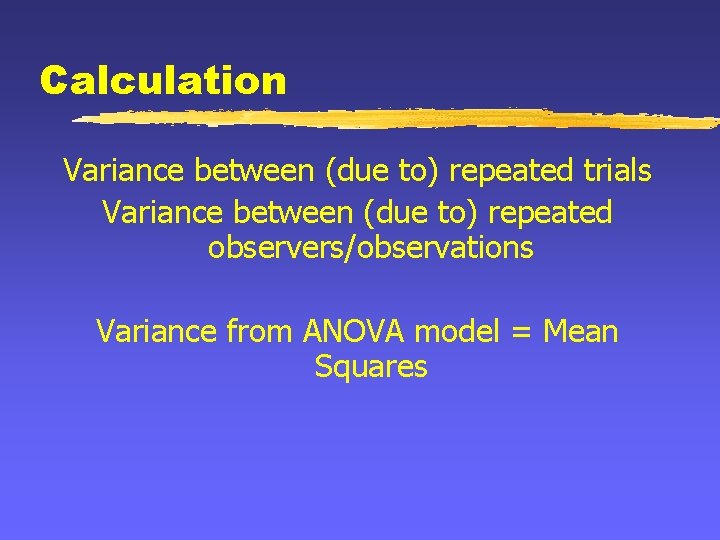

Calculation
- Variance between (due to) repeated trials
- Variance between (due to) repeated observers/observations
- Variance from ANOVA model = Mean Squares
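As a rough sketch of where the mean squares come from, the function below partitions a subjects-by-raters table in the way a two-way ANOVA without replication would. The function name, the labels BMS, JMS, EMS and WMS (between-subjects, between-raters, residual and within-subjects mean squares), and the ratings themselves are assumptions for illustration; columns could equally be repeated trials.

```python
import numpy as np

def anova_mean_squares(ratings):
    """Mean squares for an n-subjects by k-raters table (no missing cells)."""
    x = np.asarray(ratings, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_total = ((x - grand) ** 2).sum()
    ss_subjects = k * ((x.mean(axis=1) - grand) ** 2).sum()  # rows
    ss_raters = n * ((x.mean(axis=0) - grand) ** 2).sum()    # columns
    ss_error = ss_total - ss_subjects - ss_raters            # residual
    return {
        "BMS": ss_subjects / (n - 1),
        "JMS": ss_raters / (k - 1),
        "EMS": ss_error / ((n - 1) * (k - 1)),
        "WMS": (ss_raters + ss_error) / (n * (k - 1)),
    }

# Illustrative ratings: 6 subjects (rows) scored by 4 raters (columns)
ratings = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
ms = anova_mean_squares(ratings)
print({name: round(value, 2) for name, value in ms.items()})
```

A large between-raters mean square relative to the residual, as in this toy table, indicates that raters differ systematically from one another.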

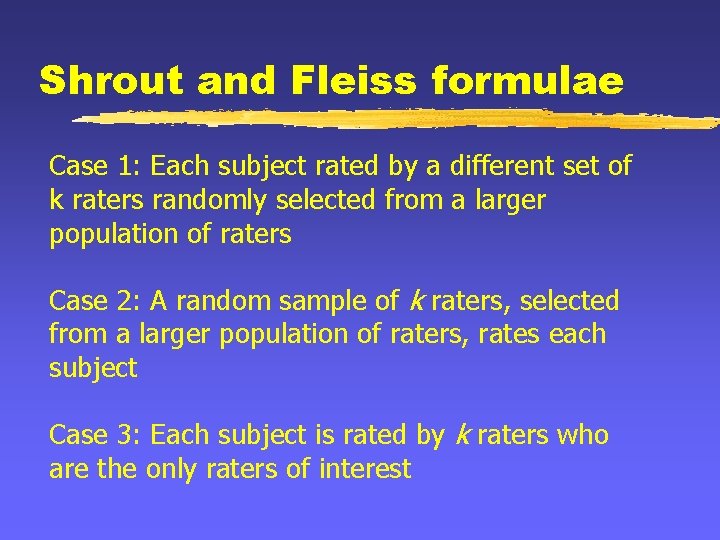

Shrout and Fleiss formulae
- Case 1: Each subject is rated by a different set of k raters, randomly selected from a larger population of raters
- Case 2: A random sample of k raters, selected from a larger population of raters, rates each subject
- Case 3: Each subject is rated by k raters who are the only raters of interest

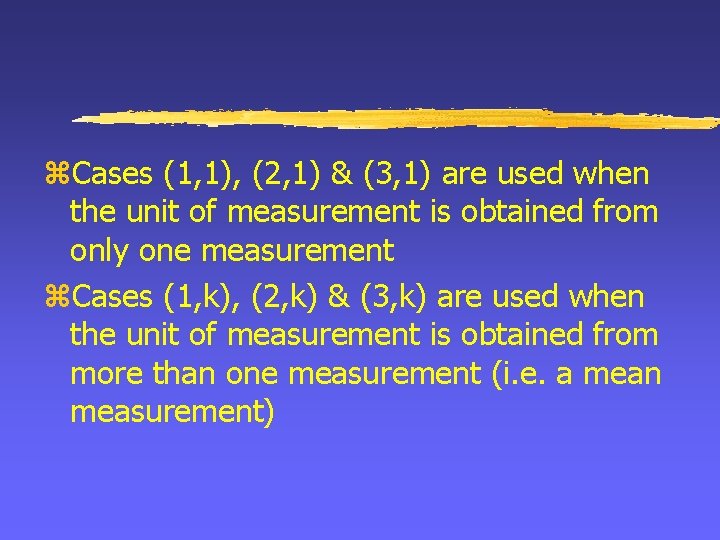

- Cases (1,1), (2,1) and (3,1) are used when the unit of measurement is obtained from only one measurement
- Cases (1,k), (2,k) and (3,k) are used when the unit of measurement is obtained from more than one measurement (i.e. a mean measurement)
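The six cases can be written as functions of the ANOVA mean squares. The sketch below uses the formulae as they are usually quoted from Shrout and Fleiss; the function name, the argument names (bms, wms, jms, ems for the between-subjects, within-subjects, between-raters and residual mean squares) and the example mean squares are assumptions for illustration and should be checked against the original paper before use.

```python
def icc_all_cases(bms, wms, jms, ems, n, k):
    """Single-measure and mean-of-k ICCs for n subjects and k raters."""
    return {
        # Case 1: one-way random effects
        "ICC(1,1)": (bms - wms) / (bms + (k - 1) * wms),
        "ICC(1,k)": (bms - wms) / bms,
        # Case 2: two-way random effects (raters sampled from a population)
        "ICC(2,1)": (bms - ems) / (bms + (k - 1) * ems + k * (jms - ems) / n),
        "ICC(2,k)": (bms - ems) / (bms + (jms - ems) / n),
        # Case 3: two-way mixed effects (these raters are the only ones of interest)
        "ICC(3,1)": (bms - ems) / (bms + (k - 1) * ems),
        "ICC(3,k)": (bms - ems) / bms,
    }

# Illustrative mean squares for 6 subjects and 4 raters
iccs = icc_all_cases(bms=11.24, wms=6.26, jms=32.49, ems=1.02, n=6, k=4)
for name, value in iccs.items():
    print(name, round(value, 2))
```

The same data can give very different coefficients depending on the case chosen, so a reported ICC is only interpretable when the case is stated alongside it.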

How to calculate
- Use equations and values obtained from ANOVAs (Rankin and Stokes, 1998)
- Use macros downloaded from SPSS.com (may not work with all versions of SPSS)

Cronbach's Alpha
- Generalised measure of reliability
- Easy to interpret
- Similar to intraclass correlation
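For reference, Cronbach's alpha is commonly computed from the item variances and the variance of the summed score. The following is a minimal sketch of that standard formula; the function name and the score table are hypothetical.

```python
import numpy as np

def cronbach_alpha(scores):
    """Alpha for a subjects-by-items table of scores."""
    x = np.asarray(scores, dtype=float)
    k = x.shape[1]                          # number of items (or raters)
    item_vars = x.var(axis=0, ddof=1)       # variance of each item
    total_var = x.sum(axis=1).var(ddof=1)   # variance of the summed score
    return (k / (k - 1)) * (1.0 - item_vars.sum() / total_var)

# Hypothetical scores: 5 subjects (rows) on 3 items (columns)
scores = [
    [2, 3, 3],
    [4, 4, 5],
    [1, 2, 2],
    [5, 5, 4],
    [3, 3, 4],
]
alpha = cronbach_alpha(scores)
print(round(alpha, 3))
```

Values near 1 indicate that the items rank subjects consistently; the coefficient rises with the number of items, so it should be read alongside the test length.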

Kappa statistics
- Kappa statistic: nominal data
- Weighted kappa statistic: ordinal data
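The difference between the two statistics can be sketched with one function. The unweighted form treats every disagreement as equally serious (suitable for nominal categories), while the weighted form penalises disagreements by how far apart the categories are (suitable for ordinal categories). The function name, the linear weighting scheme and the rating data are assumptions chosen for illustration; quadratic weights are another common choice.

```python
import numpy as np

def kappa(rater_1, rater_2, n_categories, weighted=False):
    """Cohen's kappa for two raters; categories are coded 0..n_categories-1."""
    observed = np.zeros((n_categories, n_categories))
    for a, b in zip(rater_1, rater_2):
        observed[a, b] += 1
    observed /= observed.sum()                       # observed proportions
    # Proportions expected by chance from the two raters' marginals
    expected = np.outer(observed.sum(axis=1), observed.sum(axis=0))
    i, j = np.indices(observed.shape)
    if weighted:
        weights = np.abs(i - j) / (n_categories - 1)  # linear disagreement weights
    else:
        weights = (i != j).astype(float)              # any disagreement counts fully
    return 1.0 - (weights * observed).sum() / (weights * expected).sum()

# Hypothetical ratings of 10 subjects on a 3-point scale (0, 1, 2)
rater_1 = [0, 0, 1, 1, 2, 2, 0, 1, 2, 2]
rater_2 = [0, 0, 1, 2, 2, 2, 0, 1, 1, 2]
k_plain = kappa(rater_1, rater_2, 3)
k_weighted = kappa(rater_1, rater_2, 3, weighted=True)
print(round(k_plain, 3), round(k_weighted, 3))
```

In this toy example the two disagreements are between adjacent categories, so the weighted kappa is higher than the unweighted one.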

Generating ICCs
Need:
- Correct macro
- Data laid out appropriately
- Two lines of syntax to run macros
- All files resident in the same directory

References
- Sim J (1993) Measurement validity in Physical Therapy research. Physical Therapy, 73 (2); 48-55.
- Rankin G, Stokes M (1998) Reliability of assessment tools in rehabilitation: an illustration of appropriate statistical analyses. Clinical Rehabilitation, 12; 187.
- Bland JM, Altman DG (1986) Statistical methods for assessing agreement between two methods of clinical measurement. Lancet, Feb 8; 307-310.
- Krebs DE (1984) Intraclass correlation coefficients: Use and calculation. Physical Therapy, 64 (10); 1581-1582.
- Thomas JR, Nelson JK (2001) Research Methods in Physical Activity, 4th Ed. Human Kinetics, Leeds.
- George K, Batterham A, Sullivan I (2000) Validity in clinical research: a review of basic concepts and definitions. Physical Therapy in Sport, 1; 19-27.

More references
- Eliasziw M, Young SL, Woodbury MG, Fryday-Field K (1994) Statistical methodology for the concurrent assessment of interrater and intrarater reliability: Using goniometric measurements as an example. Physical Therapy, 74 (8); 777-788.
- Keating J, Matyas T (1998) Unreliable inferences from reliable measurements. Australian Journal of Physiotherapy, 44 (1); 5-10.
- Greenfield MLH, Kuhn JE, Wojtys EM (1998) Validity and Reliability. American Journal of Sports Medicine, 26 (3); 483-485.
- Batterham AM, George KP (2000) Reliability in evidence-based clinical practice: a primer for allied health professionals. Physical Therapy in Sport, 1; 54-61.
