Understanding Virtualization Overhead to Optimize VM Mechanisms and Configurations

- Slides: 10

Understanding Virtualization Overhead to Optimize VM Mechanisms and Configurations

Fabricio Benevenuto (Souza), Jose Renato Santos, Yoshio Turner, G. (John) Janakiraman

ISSL, HP Labs

© 2004 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice.

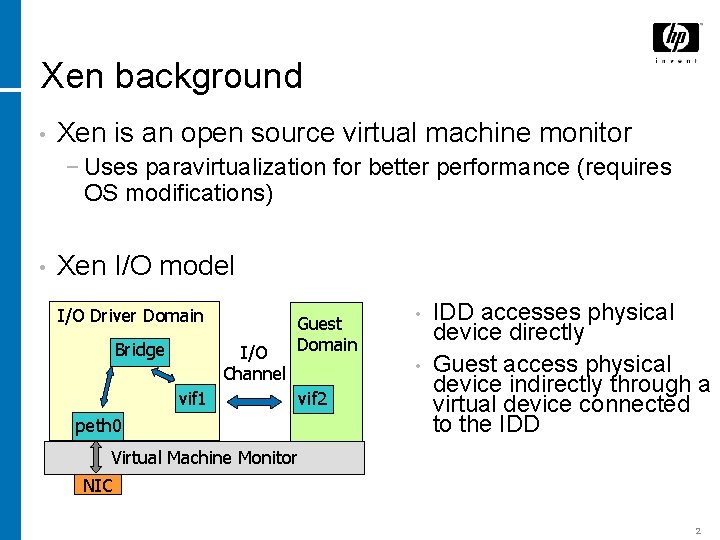

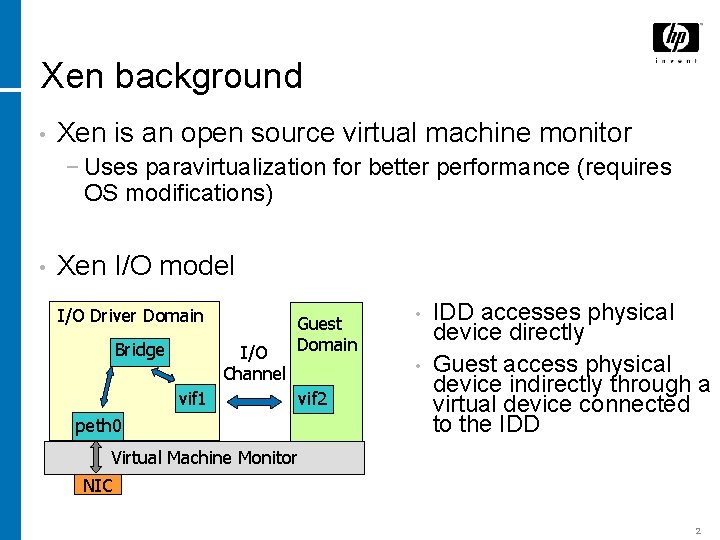

Xen background

- Xen is an open source virtual machine monitor
  - Uses paravirtualization for better performance (requires OS modifications)
- Xen I/O model
  - The IDD accesses the physical device directly
  - Guests access the physical device indirectly, through a virtual device connected to the IDD

Diagram labels: I/O Driver Domain, Bridge, I/O Channel, Guest Domain, vif1, vif2, peth0, Virtual Machine Monitor, NIC.

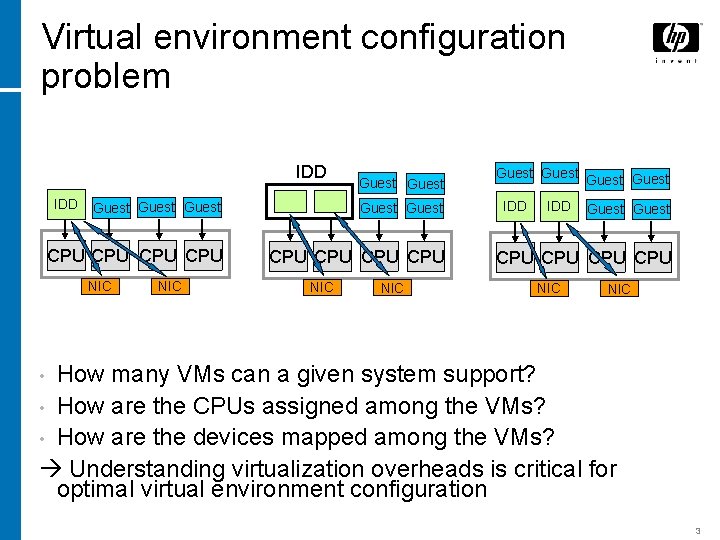

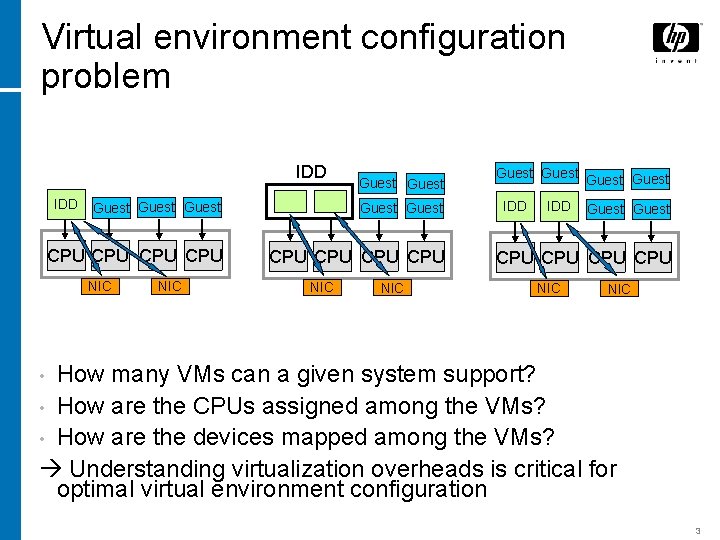

Virtual environment configuration problem

Diagram labels: IDD, Guest, CPU, NIC.

- How many VMs can a given system support?
- How are the CPUs assigned among the VMs?
- How are the devices mapped among the VMs?
- Understanding virtualization overheads is critical for optimal virtual environment configuration

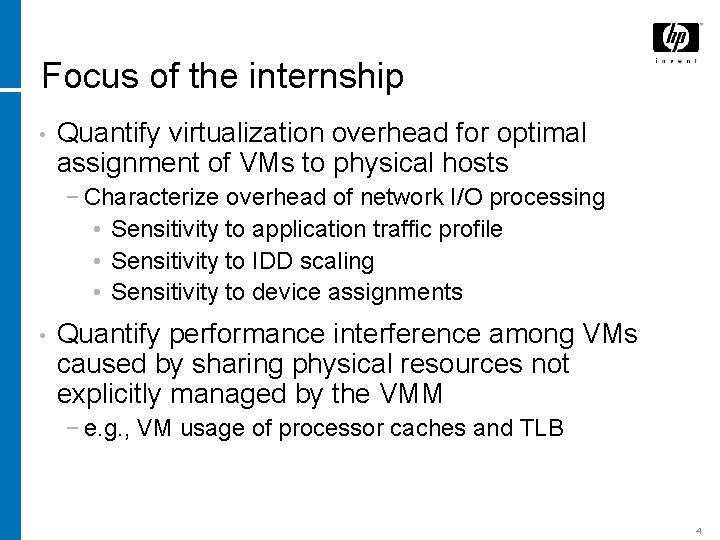

Focus of the internship

- Quantify virtualization overhead for optimal assignment of VMs to physical hosts
  - Characterize overhead of network I/O processing
    - Sensitivity to application traffic profile
    - Sensitivity to IDD scaling
    - Sensitivity to device assignments
- Quantify performance interference among VMs caused by sharing physical resources not explicitly managed by the VMM
  - e.g., VM usage of processor caches and TLB

Experimental setup

- 4-way SMP (ProLiant DL-580)
  - Two GigE NICs (Intel E1000) connected to two client machines
- Benchmarks
  - TCP stream benchmark: single connection
  - Linux kernel compilation
- Used Xenoprof to measure CPU utilization, cache misses, and TLB misses
  - Uses hardware counters to measure events such as cache misses, TLB misses, and clock cycles

I/O processing overhead in Xen: Single NIC

- What CPU allocation is needed to support I/O processing?
- 3 to 5 times higher CPU utilization (IDD + guest) for the same throughput compared to Linux

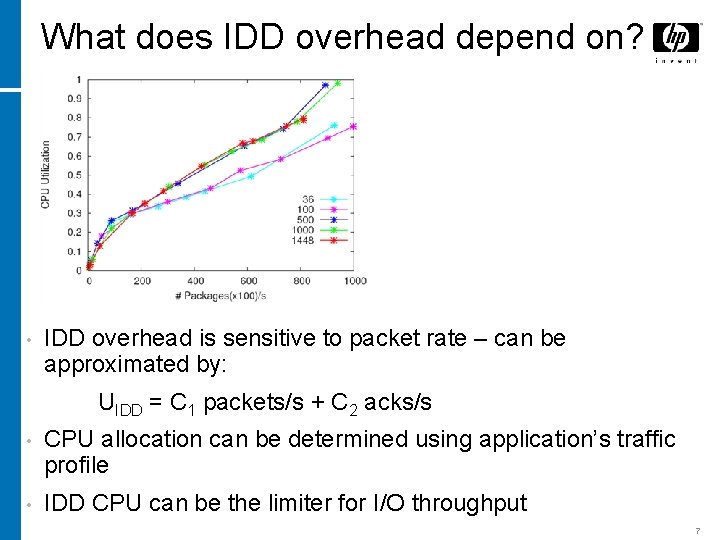

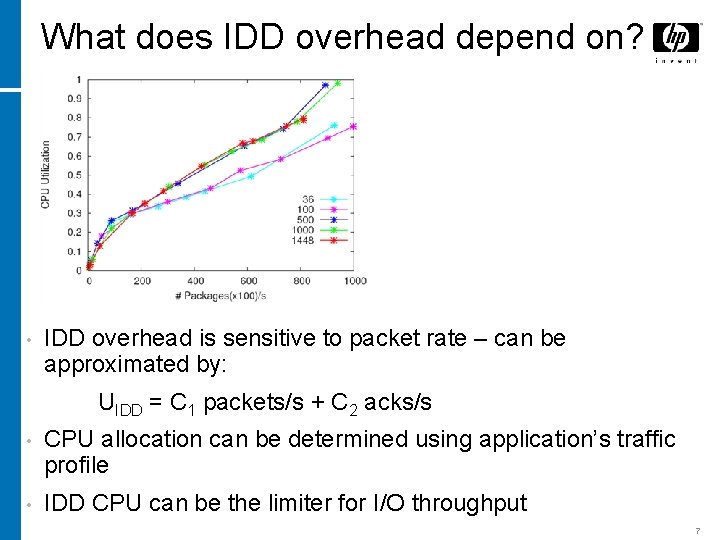

What does IDD overhead depend on?

- IDD overhead is sensitive to packet rate and can be approximated by: $U_{\text{IDD}} = C_1 \cdot \text{packets/s} + C_2 \cdot \text{acks/s}$
- CPU allocation can be determined using the application’s traffic profile
- IDD CPU can be the limiter for I/O throughput
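The linear approximation above can be sketched in a few lines of Python. This is only an illustration: the slides give no values for the coefficients, so `C1` and `C2` below are hypothetical placeholders, as are the names `TrafficProfile`, `idd_utilization`, and `max_packet_rate`. The second function assumes a fixed ratio of acks to data packets, which is an assumption made here and not something the slides state.

```python
from dataclasses import dataclass


@dataclass
class TrafficProfile:
    """Application traffic profile (hypothetical structure for illustration)."""
    packets_per_s: float
    acks_per_s: float


def idd_utilization(profile: TrafficProfile, c1: float, c2: float) -> float:
    """Estimate IDD CPU utilization as U_IDD = C1 * packets/s + C2 * acks/s.

    The result is a fraction of one CPU (1.0 means one fully busy CPU).
    """
    return c1 * profile.packets_per_s + c2 * profile.acks_per_s


def max_packet_rate(c1: float, c2: float, acks_per_packet: float,
                    cpu_capacity: float = 1.0) -> float:
    """Packet rate at which the IDD CPU saturates.

    Assumes acks/s = acks_per_packet * packets/s, so that
    cpu_capacity = (c1 + c2 * acks_per_packet) * packets/s.
    """
    return cpu_capacity / (c1 + c2 * acks_per_packet)


if __name__ == "__main__":
    # Hypothetical coefficients (CPU fraction per packet and per ack);
    # real values would have to be fitted from measurements.
    C1 = 1.0e-5
    C2 = 5.0e-6

    profile = TrafficProfile(packets_per_s=40_000, acks_per_s=20_000)
    print(f"Estimated IDD utilization: {idd_utilization(profile, C1, C2):.2f}")
    print(f"Saturating packet rate: {max_packet_rate(C1, C2, 0.5):.0f} packets/s")
```

With measured coefficients, the first function would give the CPU share to allocate to the IDD for a given application, and the second would indicate where the IDD CPU becomes the throughput limiter.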

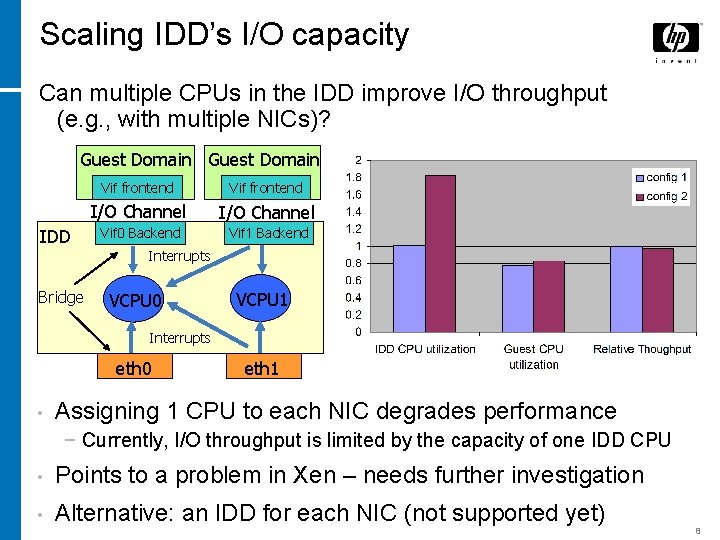

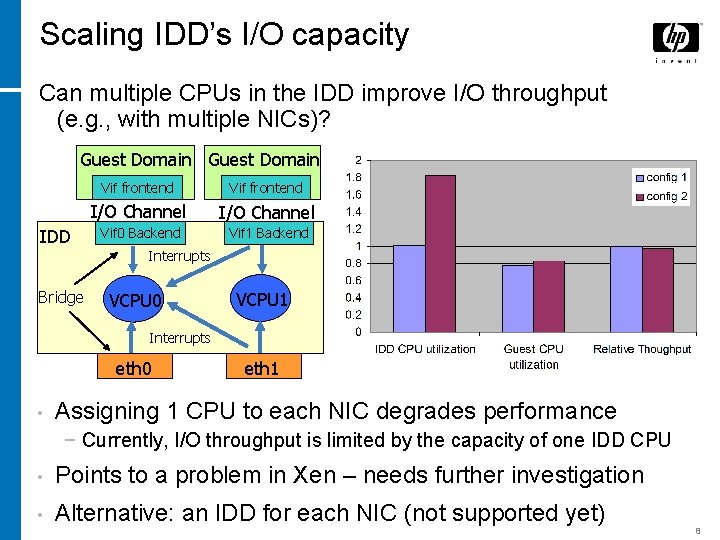

Scaling IDD’s I/O capacity

Can multiple CPUs in the IDD improve I/O throughput (e.g., with multiple NICs)?

Diagram labels: Guest Domain, IDD, Vif frontend, I/O Channel, Vif 0 Backend, Vif 1 Backend, Bridge, Interrupts, VCPU 0, VCPU 1, eth0, eth1.

- Assigning 1 CPU to each NIC degrades performance
  - Currently, I/O throughput is limited by the capacity of one IDD CPU
- Points to a problem in Xen that needs further investigation
- Alternative: an IDD for each NIC (not supported yet)

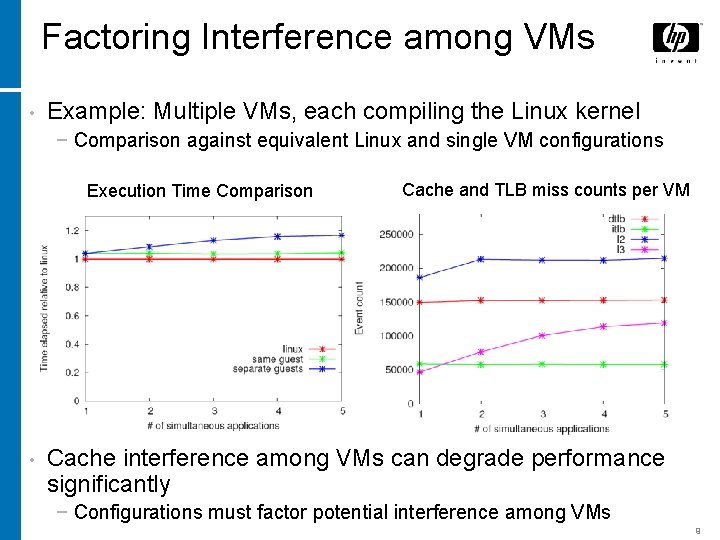

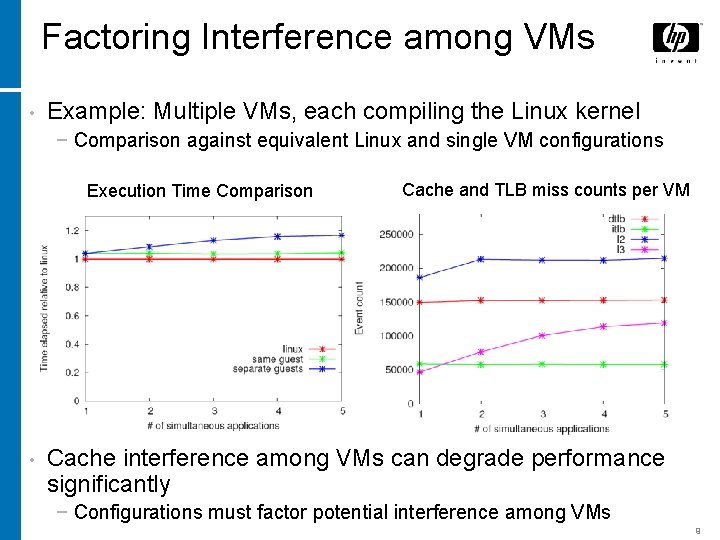

Factoring Interference among VMs

- Example: Multiple VMs, each compiling the Linux kernel
  - Comparison against equivalent Linux and single VM configurations
- Cache interference among VMs can degrade performance significantly
  - Configurations must factor potential interference among VMs

Chart titles: Execution Time Comparison; Cache and TLB miss counts per VM.

Summary and Future Work

Summary

- Findings provide guidance to configure Xen:
  - CPU requirement for I/O processing can be estimated from the application traffic profile
  - I/O processing is limited by the capacity of a single CPU
  - Must account for degradation due to the sharing of resources that are not explicitly managed by the VMM
- Exposed scalability and efficiency problems in Xen’s I/O model, which needs improvement

Future work

- Extend study to workloads and platforms of typical customer environments (e.g., HP-IT)
- Develop an automated configuration tool
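As a rough illustration of how the first two findings might feed a configuration check, the sketch below tests whether the estimated I/O processing load of a set of guests fits within the single CPU that currently bounds IDD throughput. It is not the automated tool mentioned under future work. The function name `fits_single_idd_cpu` and the `headroom` margin are assumptions introduced here, and the per-guest utilization figures are made-up inputs.

```python
from typing import Sequence, Tuple


def fits_single_idd_cpu(guest_idd_utilizations: Sequence[float],
                        cpu_capacity: float = 1.0,
                        headroom: float = 0.1) -> Tuple[bool, float]:
    """Check whether the guests' combined IDD load fits on one CPU.

    guest_idd_utilizations: estimated IDD CPU fraction needed per guest,
        e.g. derived from each guest's traffic profile.
    headroom: fraction of the CPU kept free as a safety margin
        (an assumption of this sketch, not taken from the slides).
    Returns (fits, total_estimated_utilization).
    """
    budget = cpu_capacity * (1.0 - headroom)
    total = sum(guest_idd_utilizations)
    return total <= budget, total


if __name__ == "__main__":
    # Made-up per-guest estimates, for illustration only.
    ok, total = fits_single_idd_cpu([0.3, 0.4])
    print(f"fits={ok}, total IDD utilization={total:.2f}")
```

A check like this only covers I/O processing; interference through caches and the TLB would still have to be accounted for separately.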