The Presidency Department of Planning Monitoring and Evaluation

- Slides: 29

MANAGEMENT PERFORMANCE ASSESSMENT TOOL (MPAT)

MPAT 2013 (1.3) RESULTS

Why assess management practices?

- A capable and developmental state is a prerequisite to achieving NDP objectives
- Weak administration is a recurring theme and is leading to poor service delivery, e.g.
  - Shortages of ARVs in some provinces
  - Non-payment of suppliers within 30 days
- MPAT measures whether things are being done right or better
- Departments must also be assessed against the outcomes and their strategic and annual plans to determine if they are doing the right things

Background

- DPME, together with the Offices of the Premier and transversal policy departments, has been assessing the quality of management practices since 2011
- The 2012 results show improvements across most departments; in some areas of management, however, there has not been significant improvement
- DPME has documented good practices since 2011 to assist departments to improve their management practices

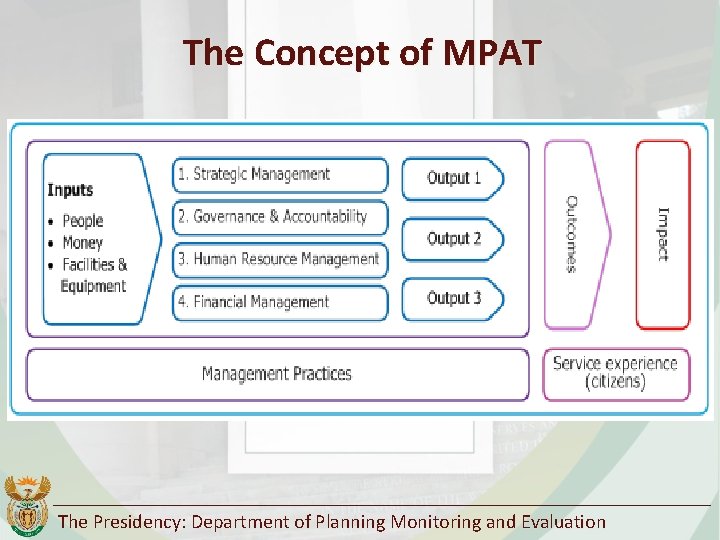

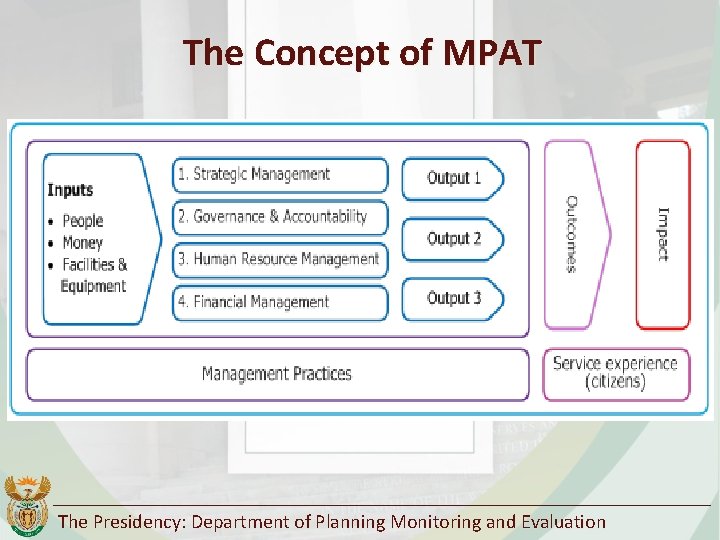

The Concept of MPAT

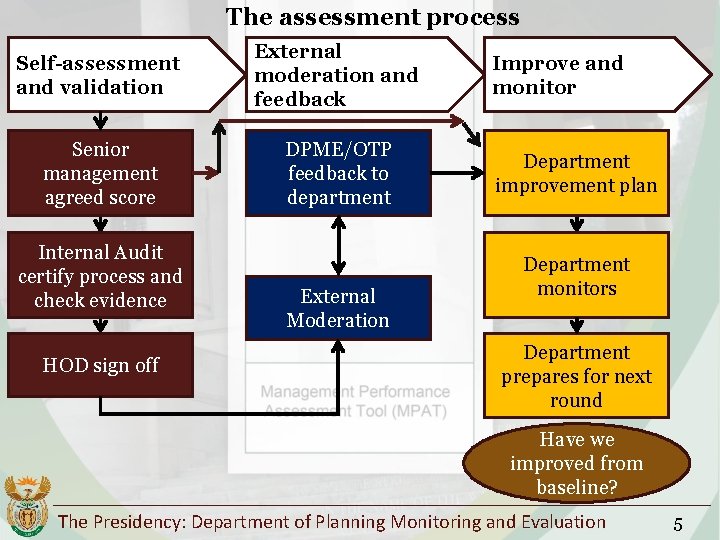

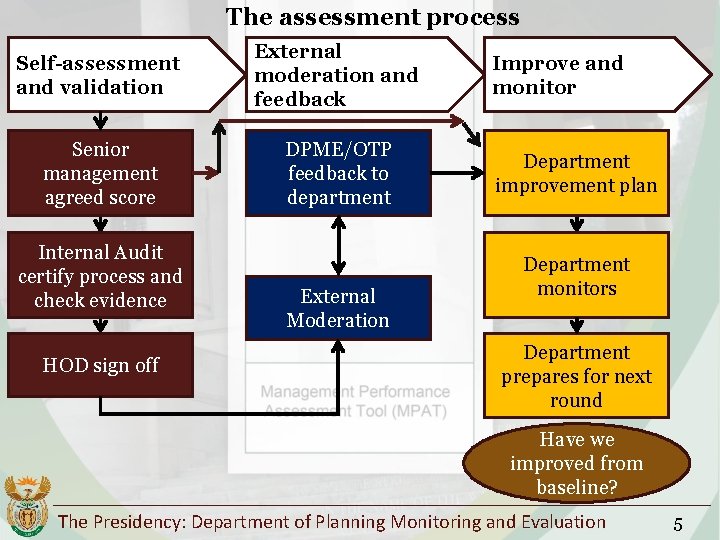

The assessment process

- Self-assessment and validation: senior management agreed score; Internal Audit certifies process and checks evidence; HOD sign-off
- External moderation and feedback: external moderation; DPME/OTP feedback to department
- Improve and monitor: department improvement plan; department monitors; department prepares for next round

Have we improved from baseline?

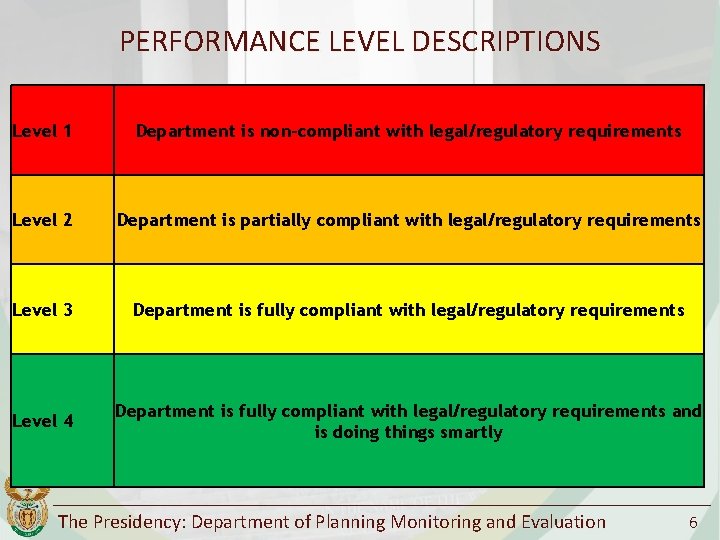

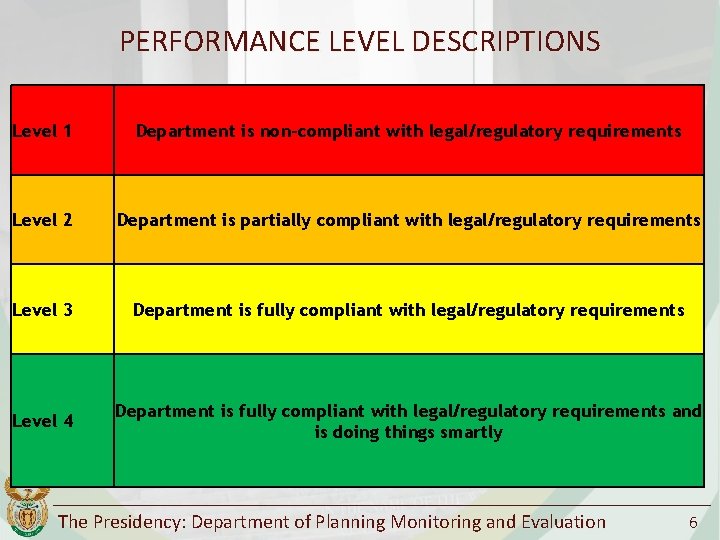

PERFORMANCE LEVEL DESCRIPTIONS

- Level 1: Department is non-compliant with legal/regulatory requirements
- Level 2: Department is partially compliant with legal/regulatory requirements
- Level 3: Department is fully compliant with legal/regulatory requirements
- Level 4: Department is fully compliant with legal/regulatory requirements and is doing things smartly
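The four-level scale above can be captured in a few lines of code. The following is a minimal sketch, not part of MPAT itself: the names `MpatLevel`, `is_fully_compliant` and `share_fully_compliant` are hypothetical, chosen only to illustrate how scores on this scale might be summarised.

```python
from enum import IntEnum


class MpatLevel(IntEnum):
    """The four performance levels described on the slide (names are illustrative)."""
    NON_COMPLIANT = 1
    PARTIALLY_COMPLIANT = 2
    FULLY_COMPLIANT = 3
    FULLY_COMPLIANT_AND_SMART = 4


def is_fully_compliant(level: MpatLevel) -> bool:
    """Levels 3 and 4 both mean full legal/regulatory compliance."""
    return level >= MpatLevel.FULLY_COMPLIANT


def share_fully_compliant(levels: list[MpatLevel]) -> float:
    """Fraction of scores at level 3 or above (assumed summary, not an official metric)."""
    if not levels:
        raise ValueError("at least one score is required")
    return sum(is_fully_compliant(lv) for lv in levels) / len(levels)
```

For example, a department scored at levels 1, 2, 3 and 4 on four standards would have half of its standards at full compliance or better.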

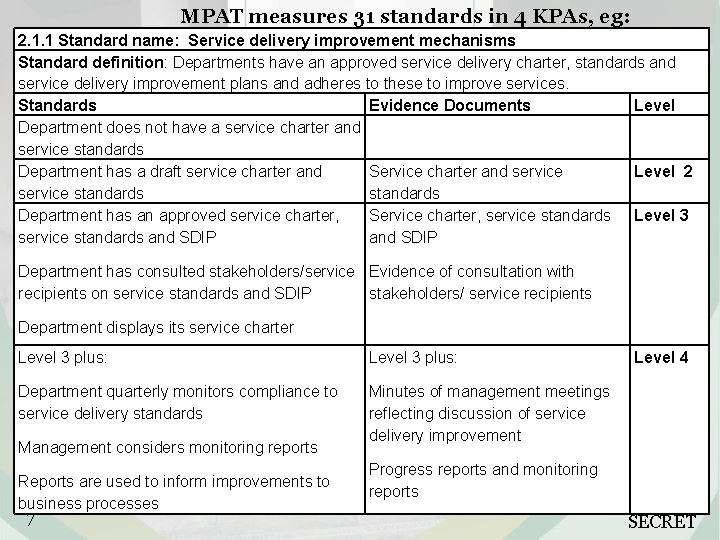

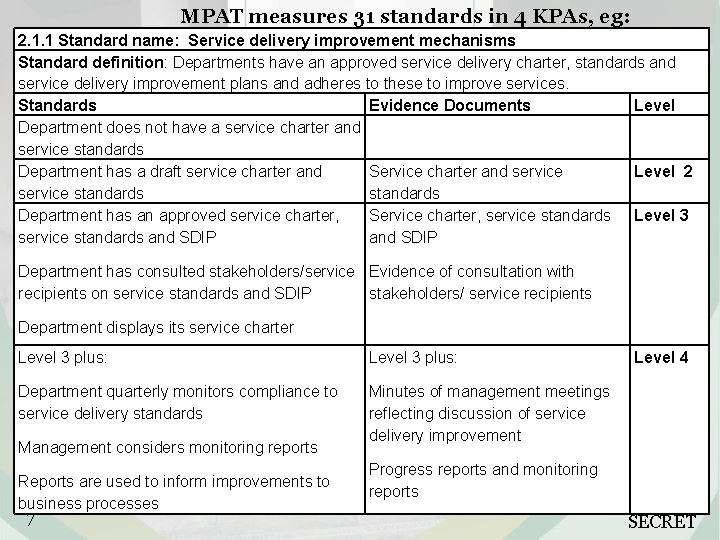

MPAT measures 31 standards in 4 KPAs, e.g.:

2.1.1 Standard name: Service delivery improvement mechanisms

Standard definition: Departments have an approved service delivery charter, standards and service delivery improvement plans and adhere to these to improve services.

| Level | Standards | Evidence Documents |
| --- | --- | --- |
| Level 1 | Department does not have a service charter and service standards | |
| Level 2 | Department has a draft service charter and service standards | Service charter and service |
| Level 3 | Department has an approved service charter, service standards and SDIP; Department has consulted stakeholders/service recipients on service standards and SDIP; Department displays its service charter | Service charter, service standards; Evidence of consultation with stakeholders/service recipients |
| Level 4 | Level 3 plus: Department quarterly monitors compliance to service delivery standards; Management considers monitoring reports; Reports are used to inform improvements to business processes | Minutes of management meetings reflecting discussion of service delivery improvement; Progress reports and monitoring reports |
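The level criteria for standard 2.1.1 are cumulative, which lends itself to a simple rule-based check. The sketch below is an illustration under stated assumptions, not the MPAT scoring procedure: the criterion keys are hypothetical labels for the rows above, and the choice to score a department with an approved charter but incomplete level 3 criteria as level 2 is an assumption.

```python
# Hypothetical criterion keys for standard 2.1.1 (labels invented for this sketch).
LEVEL_3_CRITERIA = {
    "approved_charter_standards_sdip",
    "stakeholders_consulted",
    "charter_displayed",
}
LEVEL_4_EXTRA_CRITERIA = {
    "quarterly_monitoring",
    "management_considers_reports",
    "reports_inform_improvements",
}


def score_standard_2_1_1(criteria_met: set[str]) -> int:
    """Return an illustrative level (1-4) from the set of criteria a department meets."""
    met = set(criteria_met)
    if LEVEL_3_CRITERIA <= met:
        # Level 4 is "Level 3 plus" all monitoring and improvement criteria.
        return 4 if LEVEL_4_EXTRA_CRITERIA <= met else 3
    # Assumption: a draft, or an approved charter without the other level 3
    # criteria, counts as partial compliance.
    if "draft_charter_and_standards" in met or "approved_charter_standards_sdip" in met:
        return 2
    return 1
```

In the actual process the score also depends on the evidence documents being supplied and moderated, which this sketch does not model.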

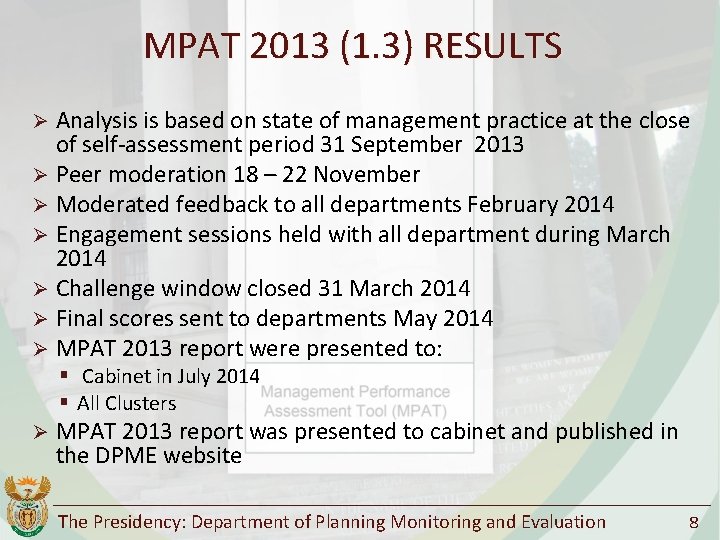

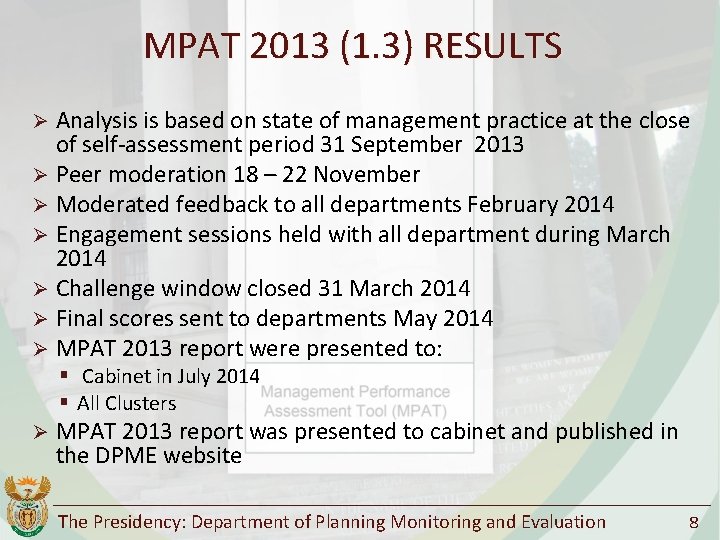

MPAT 2013 (1.3) RESULTS

- Analysis is based on the state of management practice at the close of the self-assessment period at the end of September 2013
- Peer moderation: 18-22 November
- Moderated feedback to all departments: February 2014
- Engagement sessions held with all departments during March 2014
- Challenge window closed 31 March 2014
- Final scores sent to departments May 2014
- MPAT 2013 report was presented to:
  - Cabinet in July 2014
  - All Clusters
- MPAT 2013 report was published on the DPME website

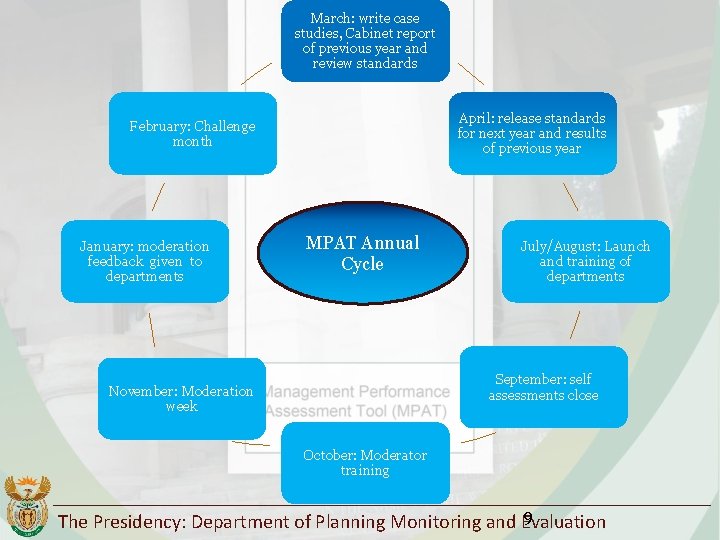

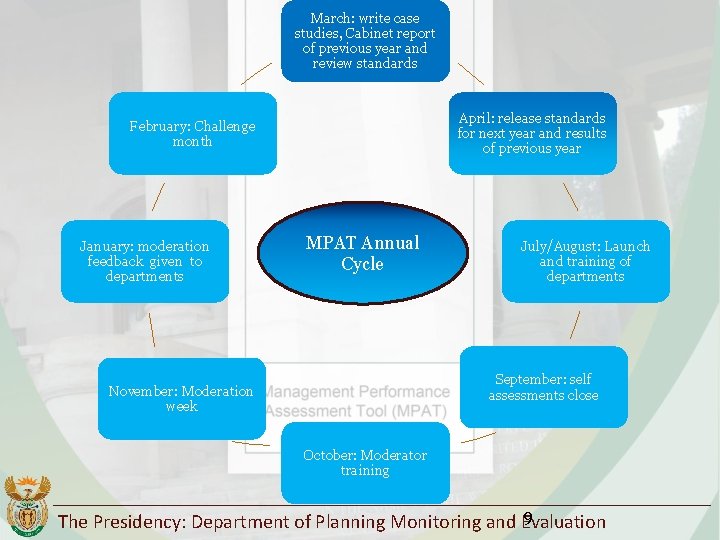

MPAT Annual Cycle

- July/August: Launch and training of departments
- September: Self-assessments close
- October: Moderator training
- November: Moderation week
- January: Moderation feedback given to departments
- February: Challenge month
- March: Write case studies, Cabinet report of previous year and review standards
- April: Release standards for next year and results of previous year

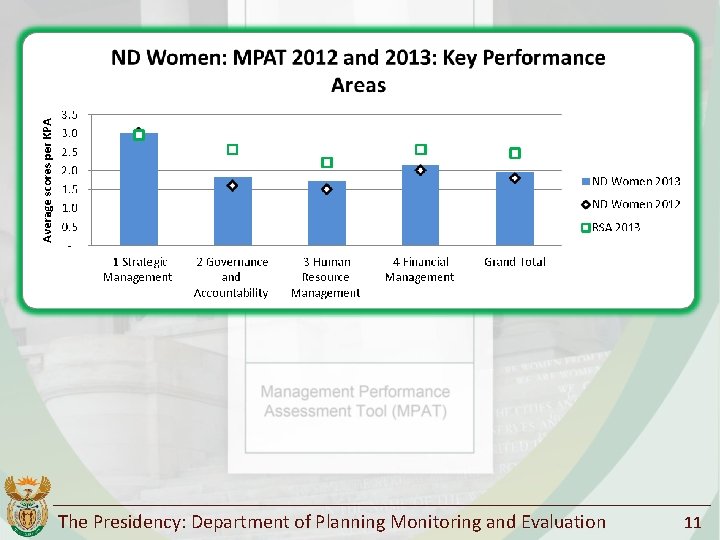

MPAT Result

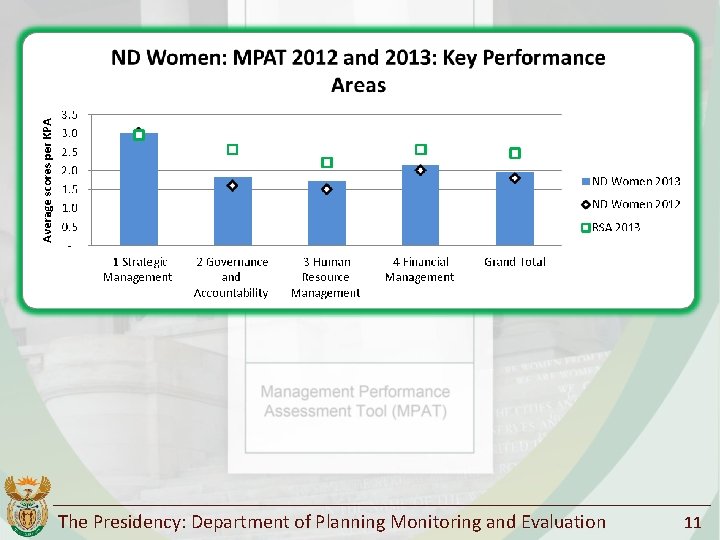

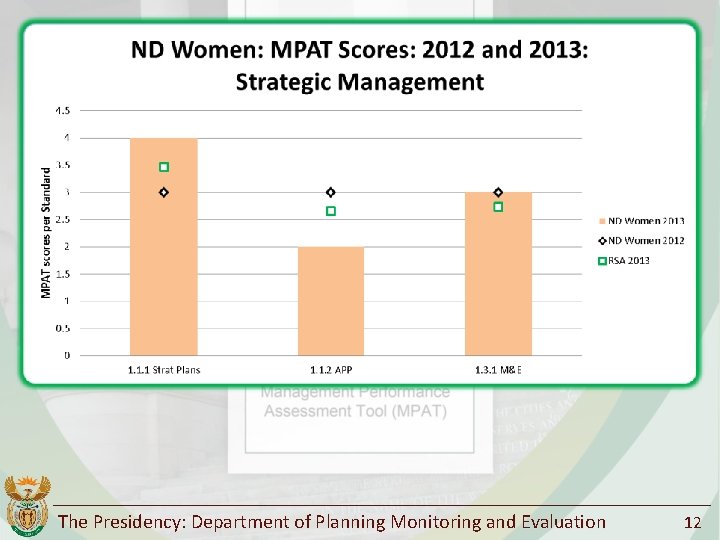

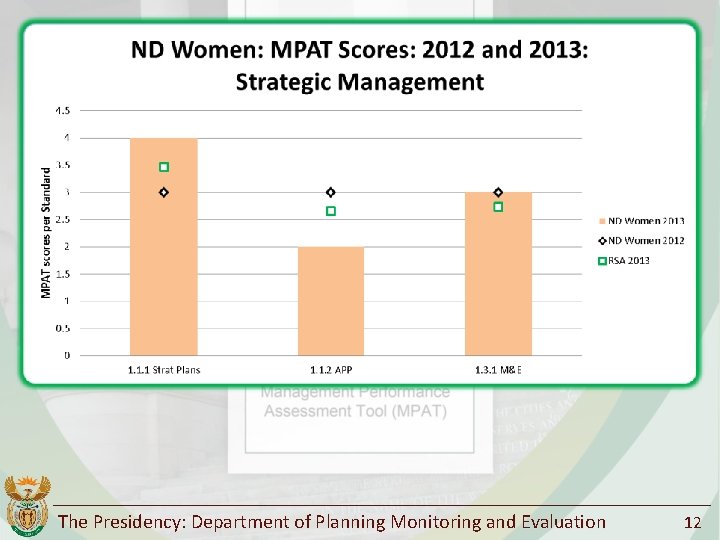

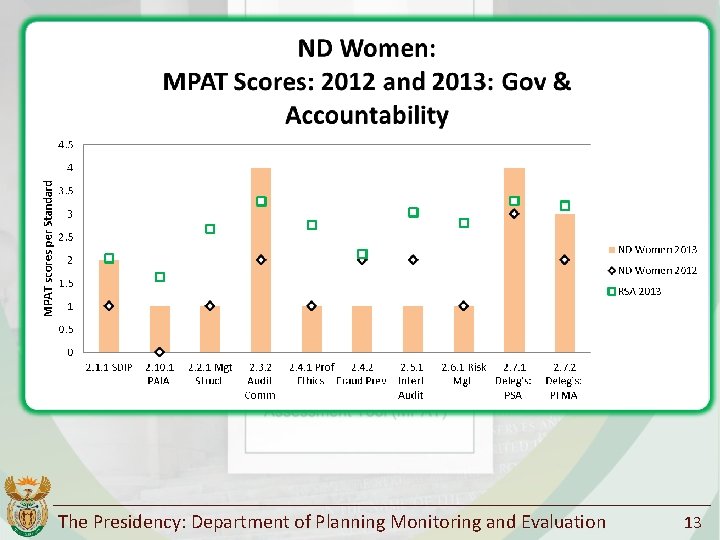

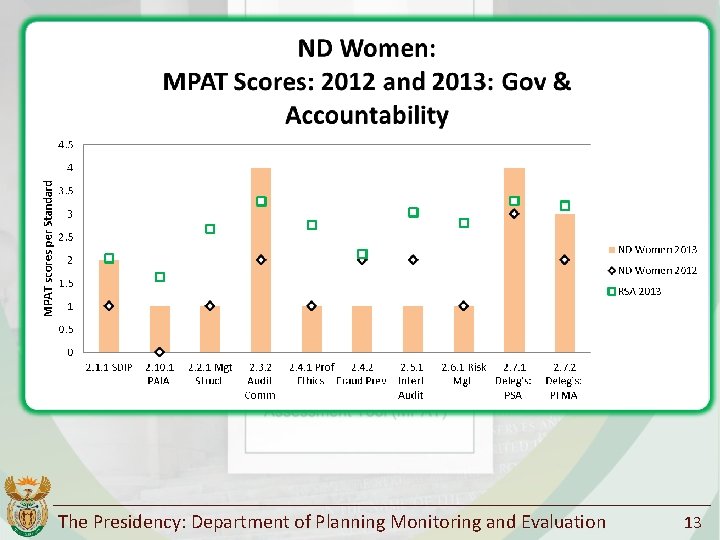

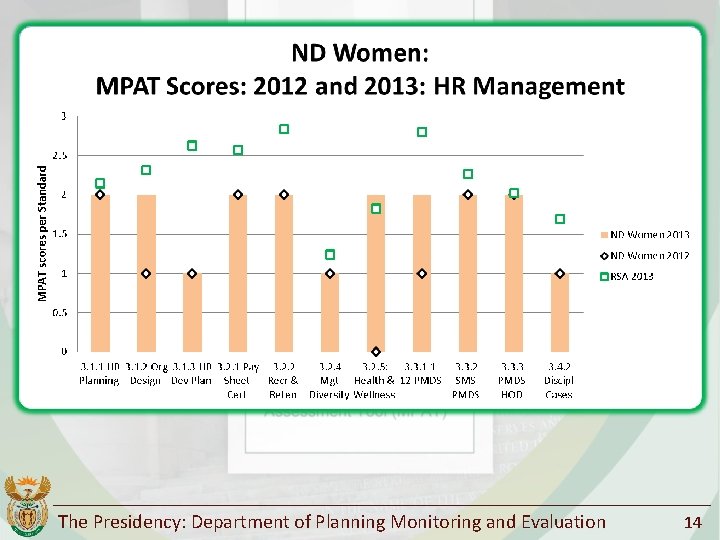

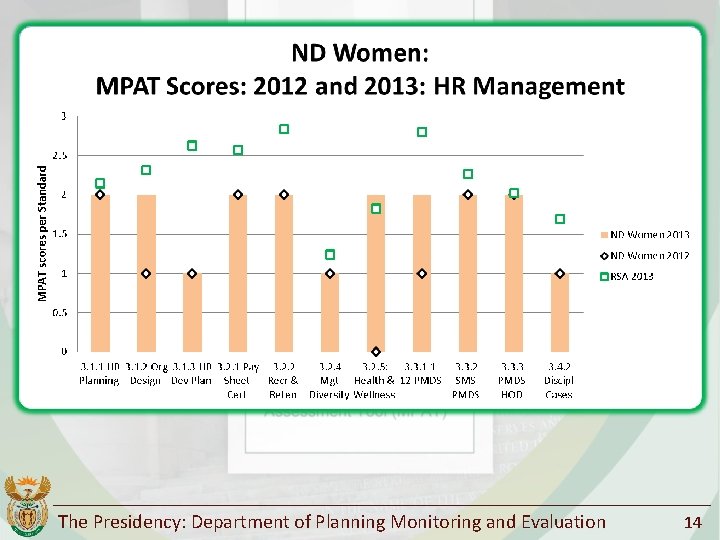

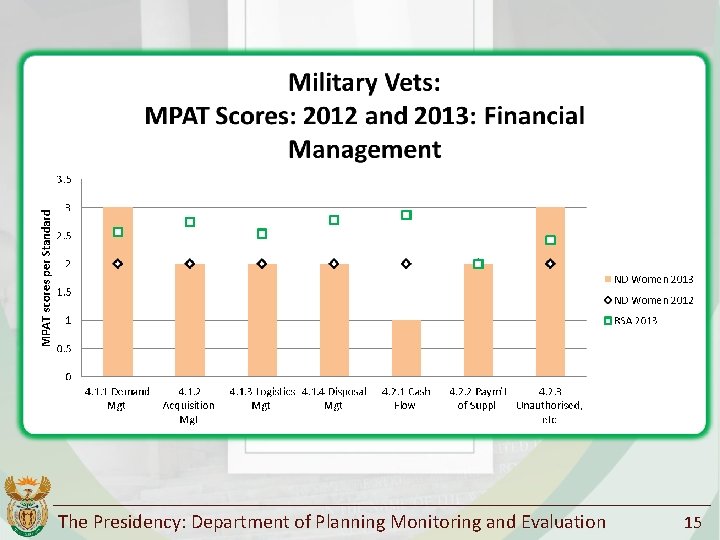

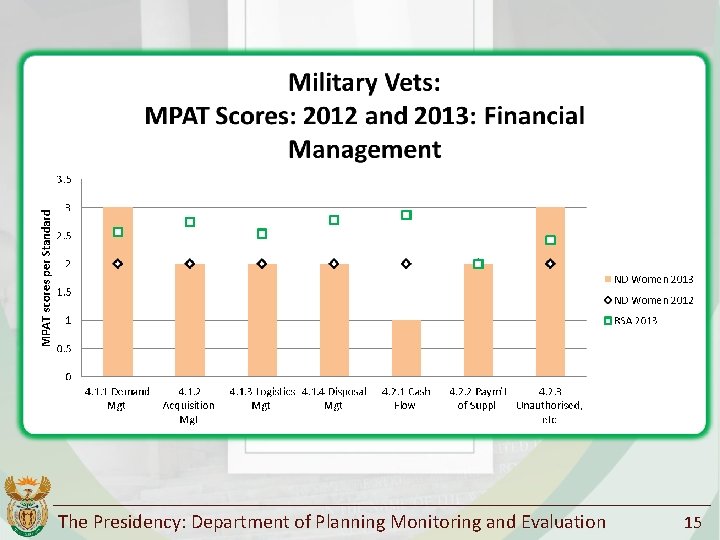

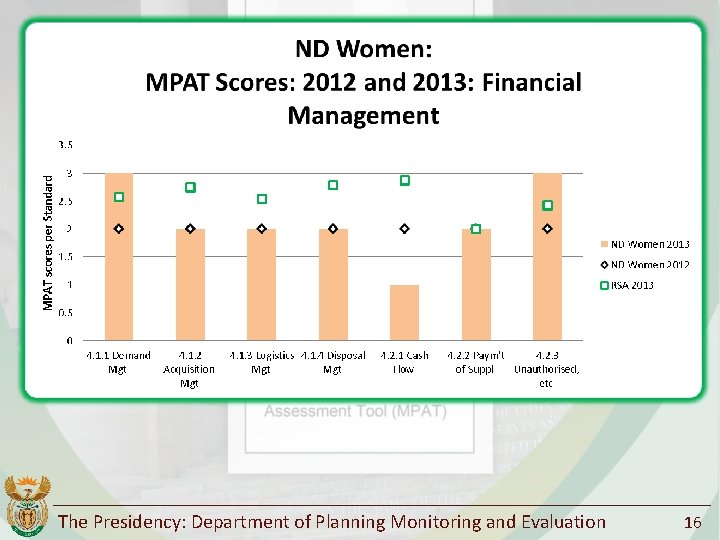

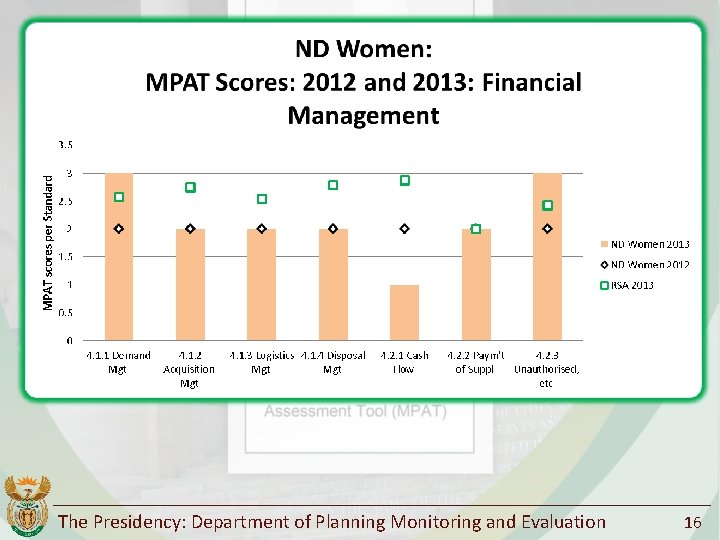

Analysis against external criteria

- Statistical analysis of results, together with data on certain external criteria, indicated that:
  - HR-related standards are particularly important for achieving results in terms of the Auditor-General's indicator of meeting more than 80% of performance targets in the APP
  - Senior Management Service (SMS) stability (the proportion of DGs and DDGs in office for more than three years) correlated frequently with a range of MPAT standards
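The two external criteria named above are both simple ratios. The following is a minimal sketch of how they could be computed from the definitions given on the slide; the `SeniorManager` record, the function names and the input shapes are assumptions made for illustration, and the analysis itself may have been done differently.

```python
from dataclasses import dataclass


@dataclass
class SeniorManager:
    rank: str               # e.g. "DG" or "DDG" (hypothetical record layout)
    years_in_office: float


def sms_stability(managers: list[SeniorManager], min_years: float = 3.0) -> float:
    """Proportion of DGs and DDGs in office for more than `min_years` years."""
    senior = [m for m in managers if m.rank in ("DG", "DDG")]
    if not senior:
        raise ValueError("no DGs or DDGs in the input")
    stable = sum(1 for m in senior if m.years_in_office > min_years)
    return stable / len(senior)


def meets_app_indicator(targets_met: int, targets_planned: int) -> bool:
    """True when more than 80% of APP performance targets were met."""
    if targets_planned <= 0:
        raise ValueError("targets_planned must be positive")
    return targets_met / targets_planned > 0.8
```

Note that both thresholds are strict: exactly three years in office, or exactly 80% of targets met, does not count.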

Lessons from Data Analysis

- Statistical analysis of results, together with data on certain external criteria, indicated that:
  - No clear indication that Strategic Planning and HR Management are related
  - Senior Management Service (SMS) stability (the proportion of DGs and DDGs in office for more than three years) correlated frequently across a range of MPAT standards

Lessons from Data Analysis

- The following standards correlate most with departments achieving the APP targets as measured by the Auditor-General:
  - Planning and monitoring under Strategy
  - Integrity and risk management under Governance
  - Good organisational design and planning under HR, plus administrative-level performance management
  - Control of unauthorised expenditure under Finance

Case Studies

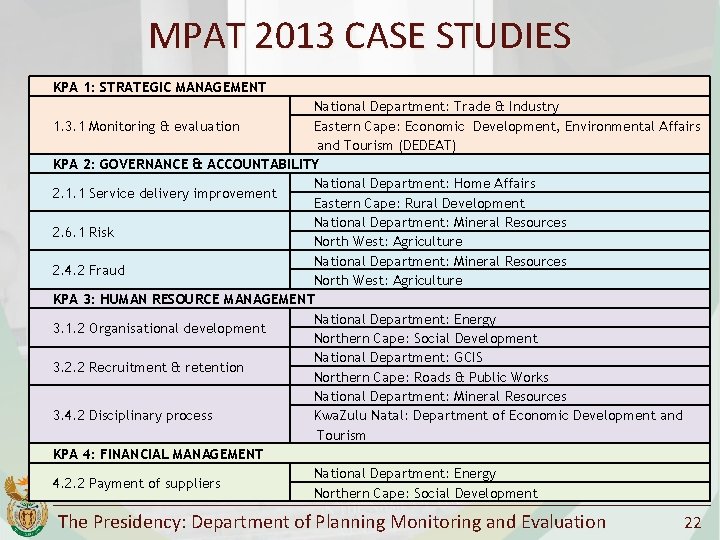

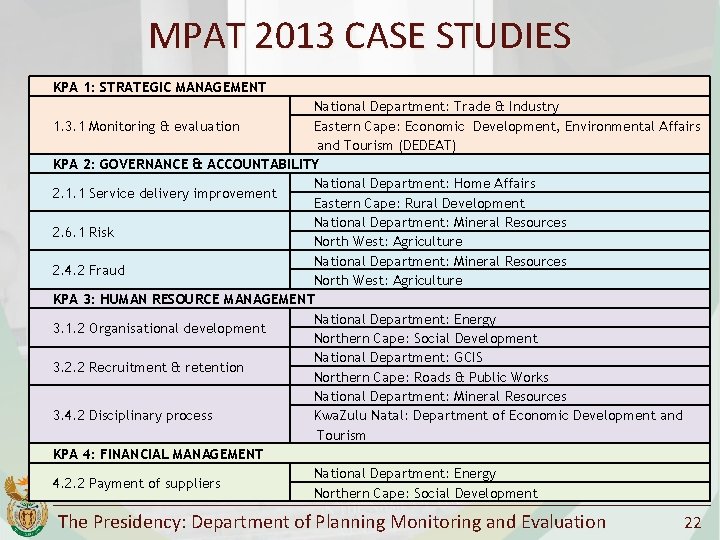

MPAT 2013 CASE STUDIES

| KPA | Standard | National department | Provincial department |
| --- | --- | --- | --- |
| KPA 1: Strategic Management | 1.3.1 Monitoring & evaluation | Trade & Industry | Eastern Cape: Economic Development, Environmental Affairs and Tourism (DEDEAT) |
| KPA 2: Governance & Accountability | 2.1.1 Service delivery improvement | Home Affairs | Eastern Cape: Rural Development |
| KPA 2: Governance & Accountability | 2.6.1 Risk | Mineral Resources | North West: Agriculture |
| KPA 2: Governance & Accountability | 2.4.2 Fraud | Mineral Resources | North West: Agriculture |
| KPA 3: Human Resource Management | 3.1.2 Organisational development | Energy | Northern Cape: Social Development |
| KPA 3: Human Resource Management | 3.2.2 Recruitment & retention | GCIS | Northern Cape: Roads & Public Works |
| KPA 3: Human Resource Management | 3.4.2 Disciplinary process | Mineral Resources | KwaZulu-Natal: Department of Economic Development and Tourism |
| KPA 4: Financial Management | 4.2.2 Payment of suppliers | Energy | Northern Cape: Social Development |

Lessons from Case Studies

- National departments still do not comply with DPSA requirements for SDIPs: DHA scored only level 1 despite intense interventions to improve service delivery
- Northern Cape Social Development made effective use of the DPSA Guide and Toolkit on Organisational Design, which ensured that the department was positioned to implement its War on Poverty Programme; foetal alcohol syndrome was reduced by 30% in De Aar

Lessons from Case Studies

- Effective monitoring and tracking of disciplinary cases by the Department of Mineral Resources ensures that management runs disciplinary processes and that all disciplinary cases are resolved within 90 days
- Northern Cape Social Development, through leadership commitment, not only implemented effective decentralised delegations but also managed to pay suppliers within 5 days

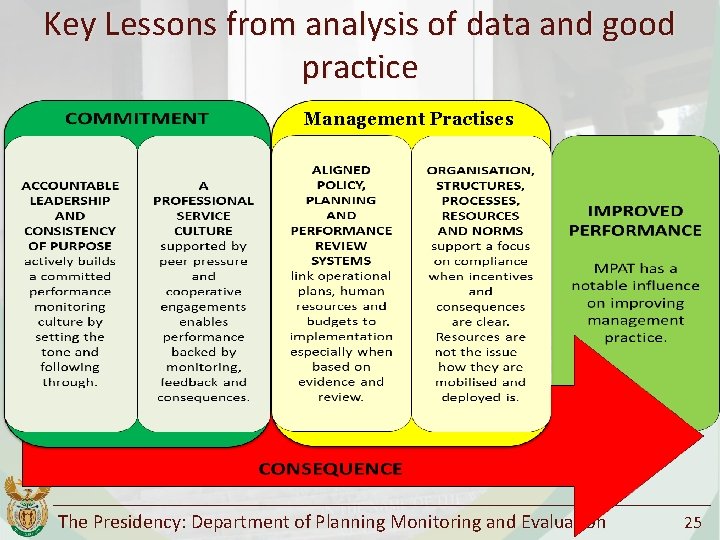

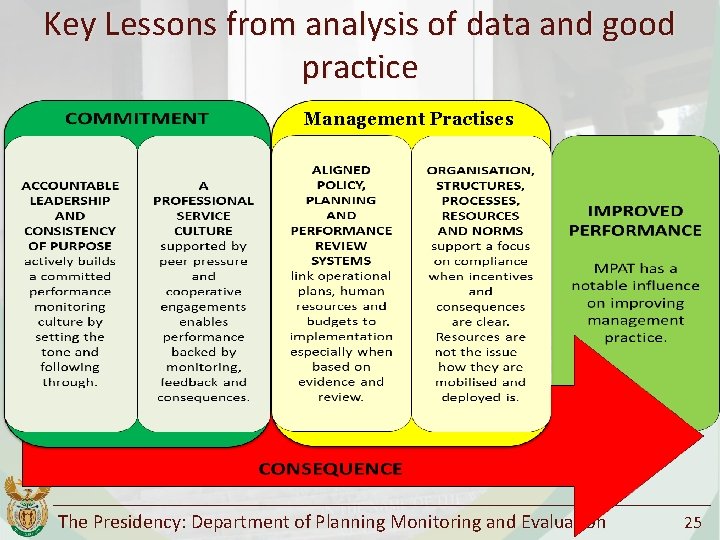

Key Lessons from analysis of data and good practice Management Practices

Ke ya leboga, Ke a leboha, Ke a leboga, Ngiyabonga, Ndiyabulela, Ngiyathokoza, Ngiyabonga, Inkomu, Ndi khou livhuha, Dankie, Thank you

Additional Slides

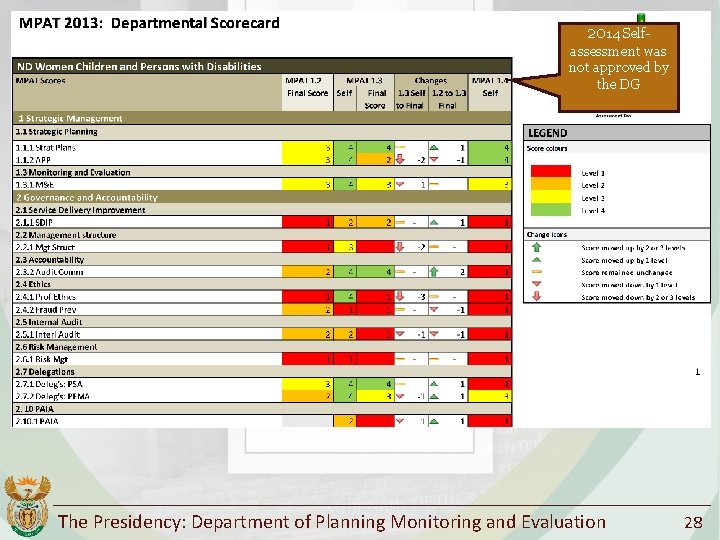

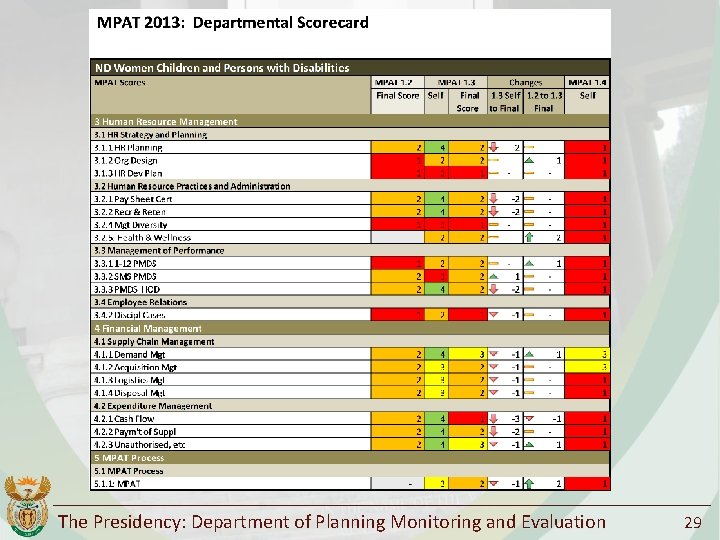

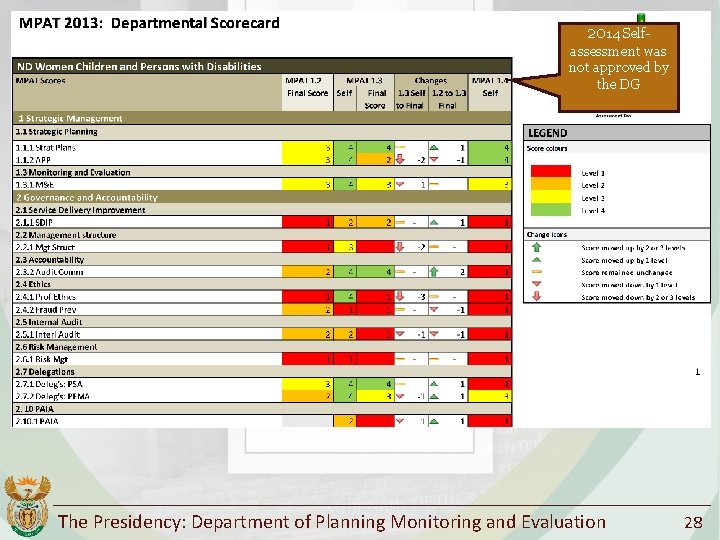

2014 self-assessment was not approved by the DG

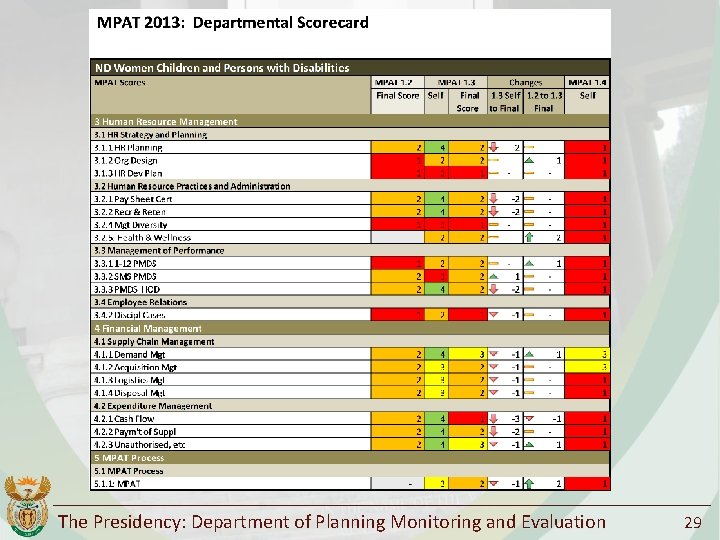

