
- Slides: 10

Sheffield -- Victims of Mad Cow Disease?

Or is it really possible to develop a named entity recognition system in 4 days on a surprise language with no native speakers and no training data?

Named Entity Recognition

- Decided to work on resource collection and development for NE
- Test our claims about ANNIE being easy to adapt to new languages and tasks
- Rule-based meant we didn’t need training data
- But could we write rules without even knowing any Cebuano?

Adapting ANNIE for Cebuano

- Default IE system is for English, but some modules can be used directly
- Used tokeniser, splitter, POS tagger, gazetteer, NE grammar, orthomatcher
- Splitter and orthomatcher unmodified
- Added tokenisation post-processing, new lexicon for POS tagger and new gazetteers
- Modified POS tagger implementation and NE grammars

Gazetteer

- Perhaps surprisingly, very little info on Web
- Mined English texts about Philippines for names of cities, first names, organisations...
- Used bilingual dictionaries to create “finite” lists such as days of week, months of year...
- Mined Cebuano texts for “clue words” by combination of bootstrapping, guessing and bilingual dictionaries
- Kept English gazetteer because many English proper nouns and little ambiguity
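
To make the gazetteer idea concrete, here is a minimal sketch of list lookup over tokens in Python. It is not the GATE gazetteer implementation: the entries, the type names and the `lookup` function are all illustrative assumptions, and the real lists built for the system are not shown in the slides.

```python
from typing import Dict, List, Tuple

# Illustrative entries only (assumption): keys are lower-cased token sequences,
# values are the entity type the list stands for.
GAZETTEER: Dict[Tuple[str, ...], str] = {
    ("cebu",): "location",
    ("cebu", "city"): "location",
    ("mandaue",): "location",
    ("lunes",): "day",
    ("martes",): "day",
}
MAX_LEN = max(len(key) for key in GAZETTEER)


def lookup(tokens: List[str]) -> List[Tuple[int, int, str]]:
    """Return (start, end, type) spans, taking the longest match at each position."""
    spans: List[Tuple[int, int, str]] = []
    i = 0
    while i < len(tokens):
        match = None
        # Try the longest candidate first so "Cebu City" beats "Cebu".
        for n in range(min(MAX_LEN, len(tokens) - i), 0, -1):
            key = tuple(t.lower() for t in tokens[i:i + n])
            if key in GAZETTEER:
                match = (i, i + n, GAZETTEER[key])
                break
        if match:
            spans.append(match)
            i = match[1]  # continue after the matched span
        else:
            i += 1
    return spans
```

With these sample entries, `lookup(["Cebu", "City", "Lunes"])` returns `[(0, 2, 'location'), (2, 3, 'day')]`. Lower-casing the keys is a simplification; a case-sensitive lookup may suit proper nouns better.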

NE grammars

- Most English JAPE rules based on POS tags and gazetteer lookup
- Grammars can be reused for languages with similar word order, orthography etc.
- Most of the rules left as for English, with a few minor adjustments
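
The kind of rule described here can be approximated in a few lines of Python. This is only a sketch in the spirit of a JAPE rule, not JAPE itself: it marks a run of capitalised tokens as a Person when it follows a clue word. The clue words and the `tag_persons` function are hypothetical; the slides do not list the rules or clue words actually used.

```python
from typing import List, Tuple

# Hypothetical clue words (assumption); in the real system these would come
# from gazetteer lists rather than a hard-coded set.
PERSON_CLUES = {"mayor", "gov.", "atty."}


def is_upper_initial(token: str) -> bool:
    """Orthographic test: does the token start with an upper-case letter?"""
    return token[:1].isupper()


def tag_persons(tokens: List[str]) -> List[Tuple[int, int, str]]:
    """Return (start, end, "Person") spans for capitalised runs after a clue word."""
    spans: List[Tuple[int, int, str]] = []
    i = 0
    while i < len(tokens):
        if tokens[i].lower() in PERSON_CLUES:
            j = i + 1
            # Extend the span over consecutive capitalised tokens.
            while j < len(tokens) and is_upper_initial(tokens[j]):
                j += 1
            if j > i + 1:
                spans.append((i + 1, j, "Person"))
                i = j
                continue
        i += 1
    return spans
```

For example, `tag_persons(["Mayor", "Juan", "Cruz", "said"])` returns `[(1, 3, 'Person')]`. A rule like this relies only on word order and capitalisation, which is why it can carry over to a language with similar orthography.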

Evaluation (1)

- System annotated 10 news texts and output as colour-coded HTML
- Evaluation on paper by native Cebuano speaker from UMD
- Evaluation not perfect due to lack of annotator training
- 85.1% Precision, 58.2% Recall, 71.65% F-measure
- Non-reusable

Evaluation (2)

- 2nd evaluation used 21 news texts, hand-tagged on paper and converted to GATE annotations later
- System annotations compared with “gold standard”
- Reusable
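
Comparing system annotations with a gold standard can be sketched as a set comparison. The version below assumes strict matching (same offsets and same type) and a balanced F-measure; the slides do not say how partial matches were treated or how the F-measure was weighted, so the `score` function is an illustration rather than the evaluation actually used.

```python
from typing import Dict, Set, Tuple

# An annotation is (start offset, end offset, entity type).
Annotation = Tuple[int, int, str]


def score(system: Set[Annotation], gold: Set[Annotation]) -> Dict[str, float]:
    """Strict-match precision, recall and balanced F-measure (assumed scheme)."""
    correct = len(system & gold)  # identical span and type in both sets
    precision = correct / len(system) if system else 0.0
    recall = correct / len(gold) if gold else 0.0
    if precision + recall == 0:
        f1 = 0.0
    else:
        f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}
```

Keeping the gold standard as machine-readable annotations is what makes a comparison like this repeatable after every change to the rules or gazetteers.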

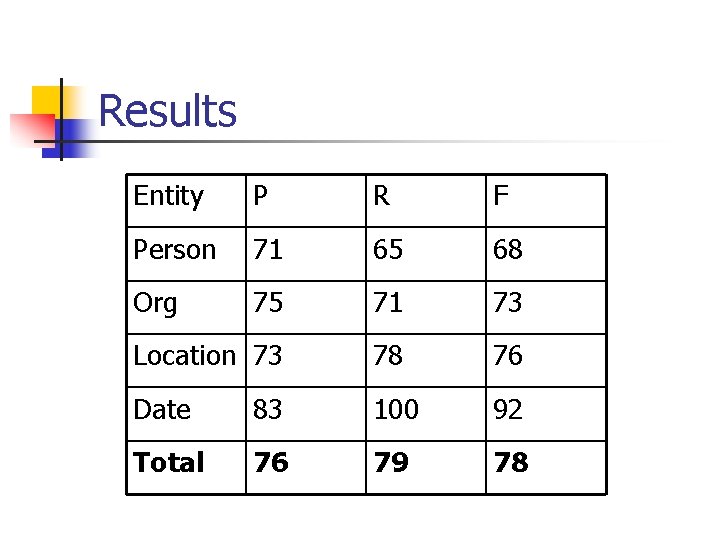

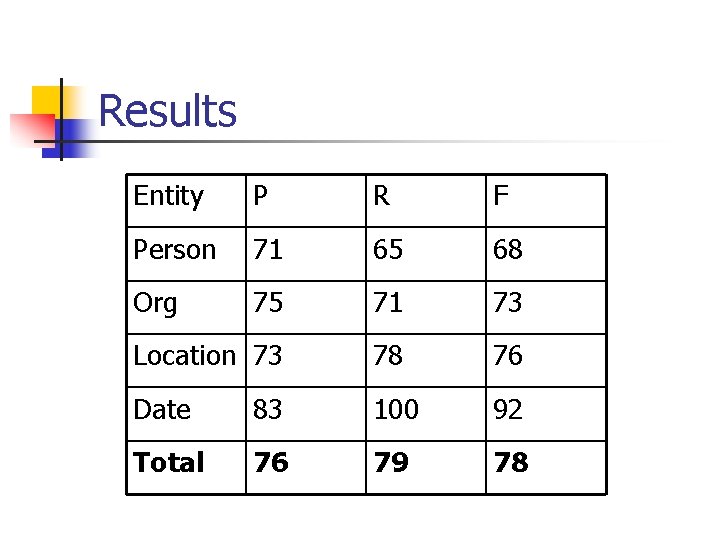

Results

| Entity   | P  | R   | F  |
|----------|----|-----|----|
| Person   | 71 | 65  | 68 |
| Org      | 75 | 71  | 73 |
| Location | 73 | 78  | 76 |
| Date     | 83 | 100 | 92 |
| Total    | 76 | 79  | 78 |

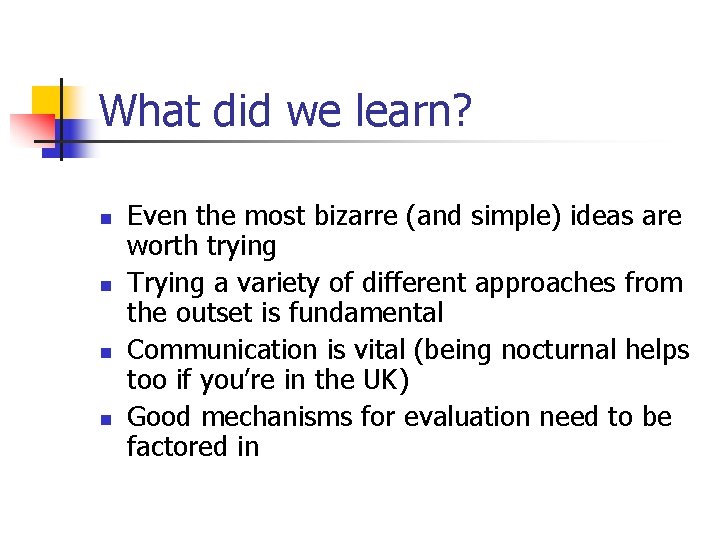

What did we learn?

- Even the most bizarre (and simple) ideas are worth trying
- Trying a variety of different approaches from the outset is fundamental
- Communication is vital (being nocturnal helps too if you’re in the UK)
- Good mechanisms for evaluation need to be factored in
