Reactive Scala HW 1: ScalaCheck, used w/ScalaTest


- Slides: 13

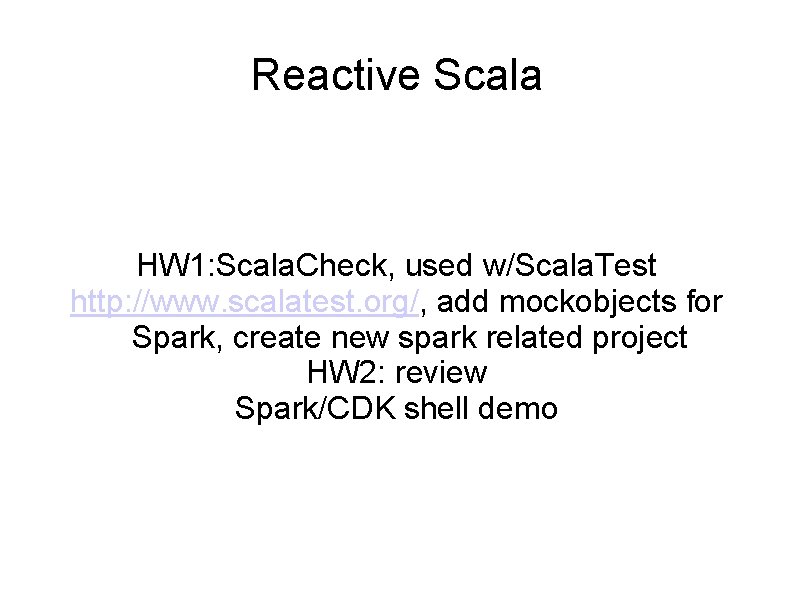

Reactive Scala

- HW 1: ScalaCheck, used w/ScalaTest (http://www.scalatest.org/); add mock objects for Spark; create a new Spark-related project.
- HW 2: review the Spark/CDK shell demo.

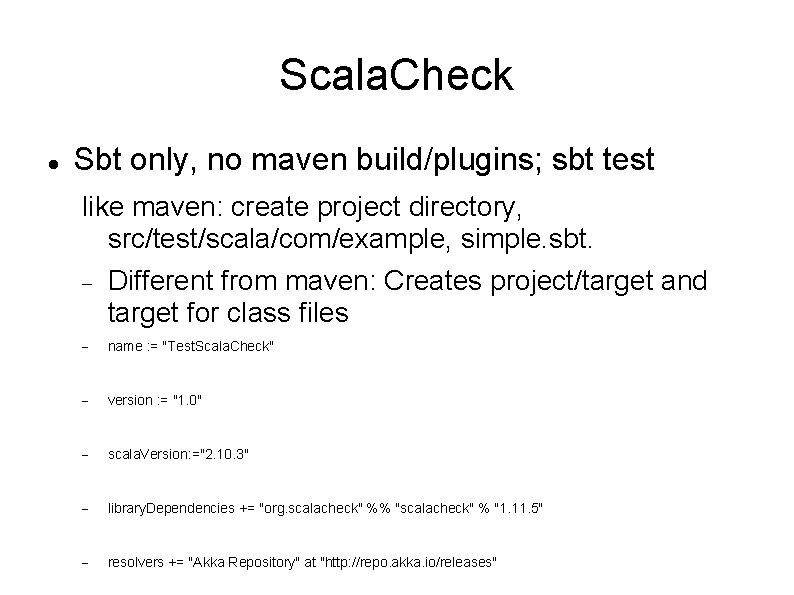

ScalaCheck

- Sbt only; no maven build/plugins.
- sbt test works like maven: create the project directory, src/test/scala/com/example, and simple.sbt.
- Different from maven: creates project/target and target for class files.

simple.sbt:

    name := "TestScalaCheck"

    version := "1.0"

    scalaVersion := "2.10.3"

    libraryDependencies += "org.scalacheck" %% "scalacheck" % "1.11.5"

    resolvers += "Akka Repository" at "http://repo.akka.io/releases"

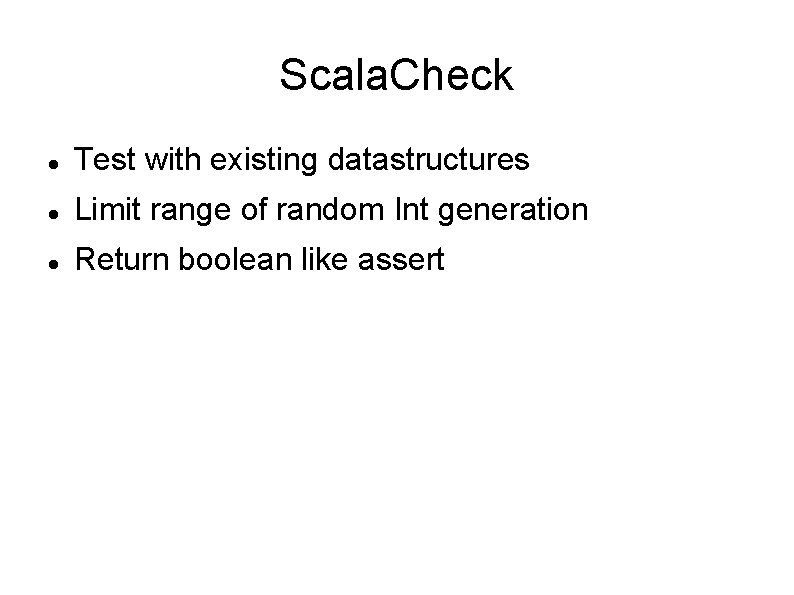

ScalaCheck

- Test with existing data structures.
- Limit the range of random Int generation.
- Return a boolean, like assert.
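The three points above can be sketched as one small specification. This is a minimal sketch, not code from the slides: the object name `ListSpecification` and both property names are hypothetical, and it assumes the ScalaCheck dependency from simple.sbt is on the test classpath.

```scala
import org.scalacheck.{Gen, Properties}
import org.scalacheck.Prop.forAll

// Hypothetical specification exercising an existing data structure (List).
object ListSpecification extends Properties("List") {
  // Limit random Int generation to the range 1..10 (inclusive).
  val smallInt: Gen[Int] = Gen.choose(1, 10)

  // The body returns a Boolean, much like the condition passed to assert.
  property("reverseTwice") = forAll(Gen.listOf(smallInt)) { (xs: List[Int]) =>
    xs.reverse.reverse == xs
  }

  // Every generated value stays inside the requested range.
  property("inRange") = forAll(smallInt) { (n: Int) =>
    n >= 1 && n <= 10
  }
}
```

Placed under src/test/scala, a file like this should be picked up by sbt test.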

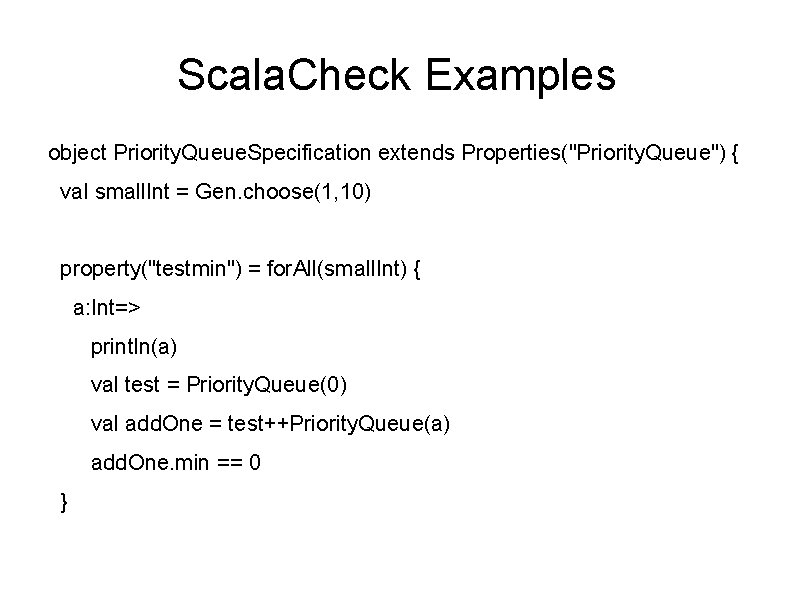

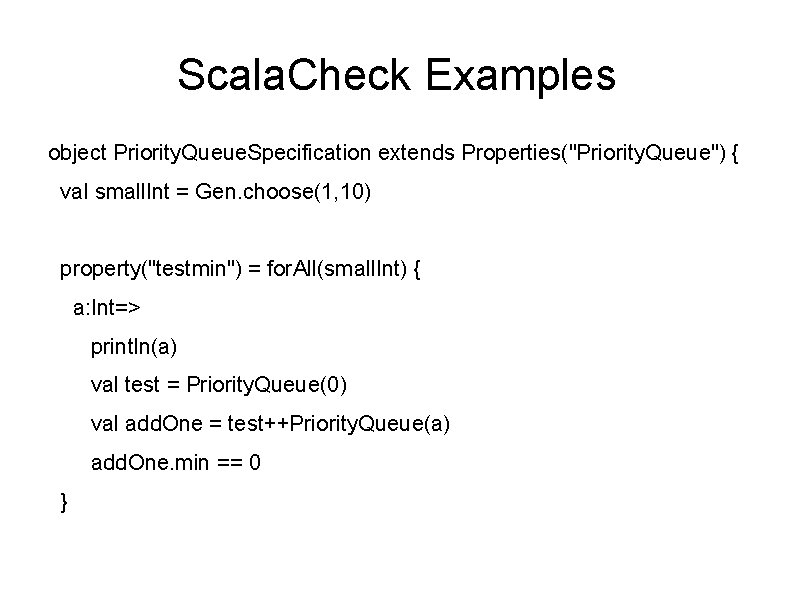

ScalaCheck Examples

    object PriorityQueueSpecification extends Properties("PriorityQueue") {
      // Generate Ints in the range 1..10 only
      val smallInt = Gen.choose(1, 10)

      property("testmin") = forAll(smallInt) { a: Int =>
        println(a)
        val test = PriorityQueue(0)
        val addOne = test ++ PriorityQueue(a)
        // 0 is smaller than every generated value, so it must stay the minimum
        addOne.min == 0
      }
    }

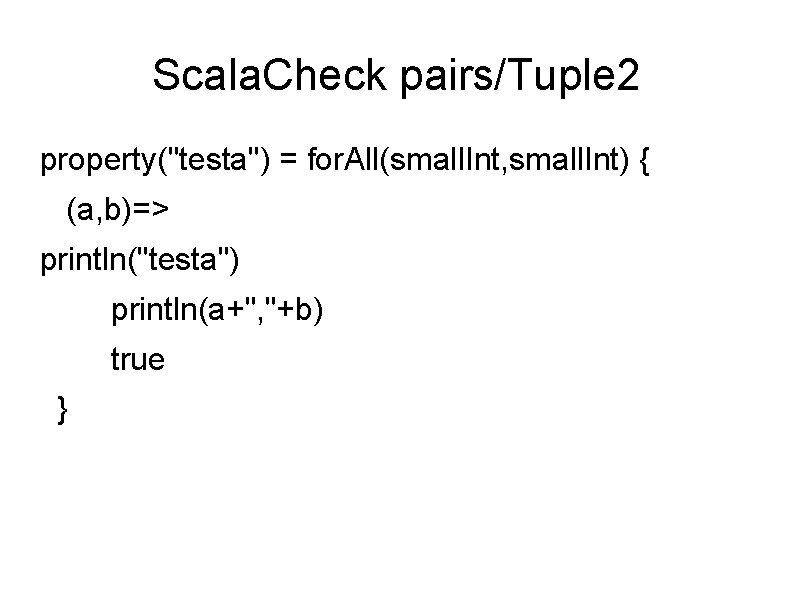

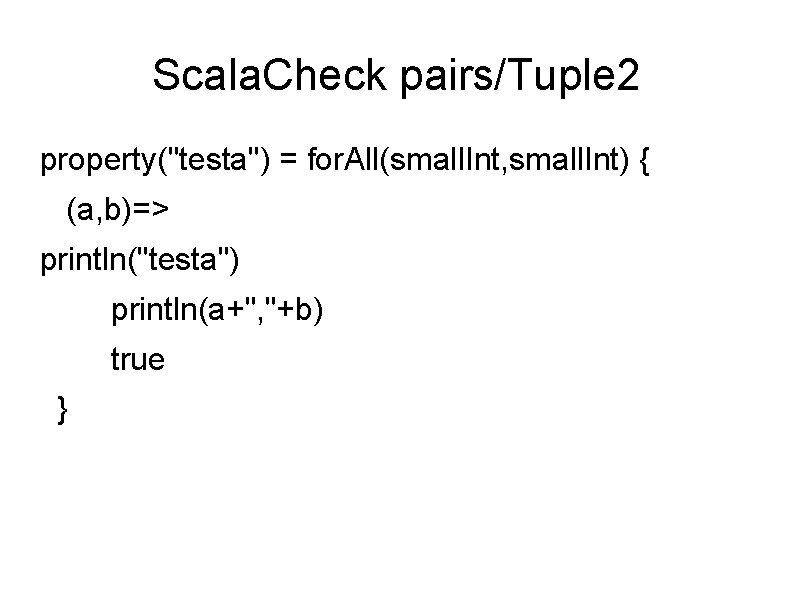

ScalaCheck pairs/Tuple2

    property("testa") = forAll(smallInt, smallInt) { (a, b) =>
      println("testa")
      println(a + ", " + b)
      true
    }


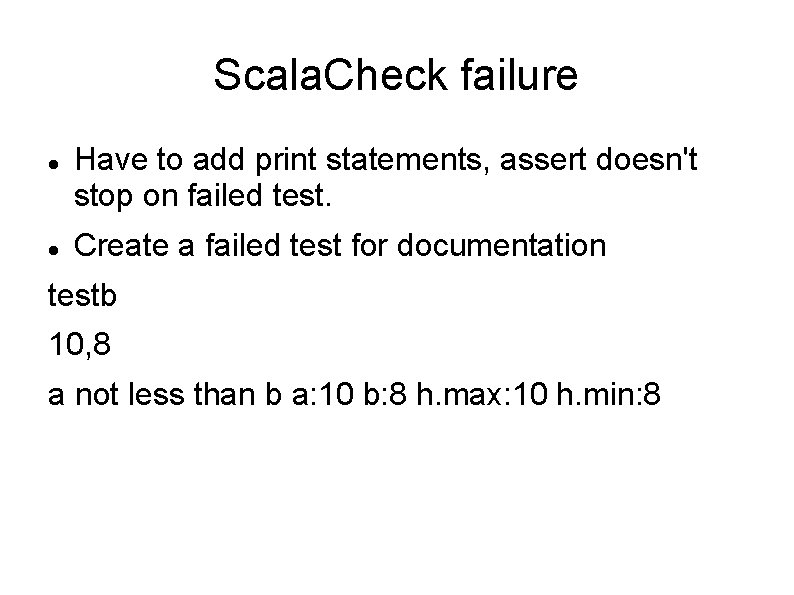

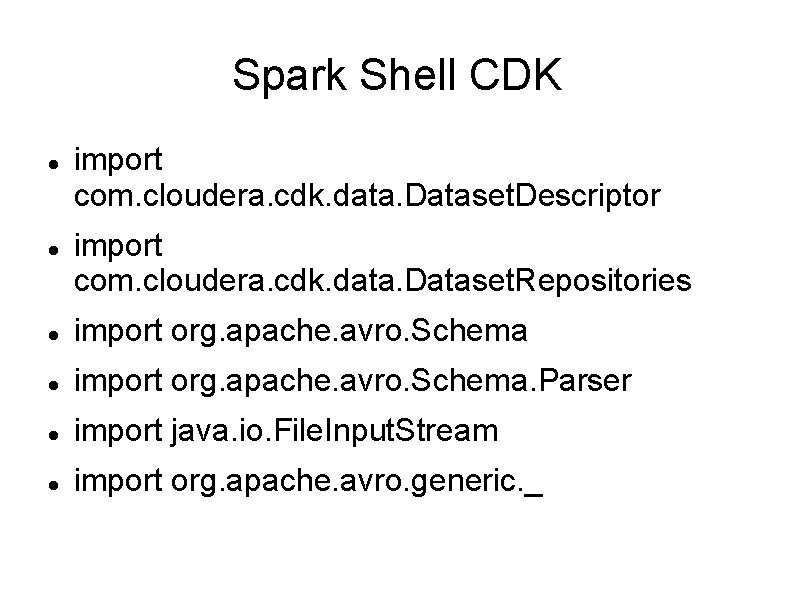

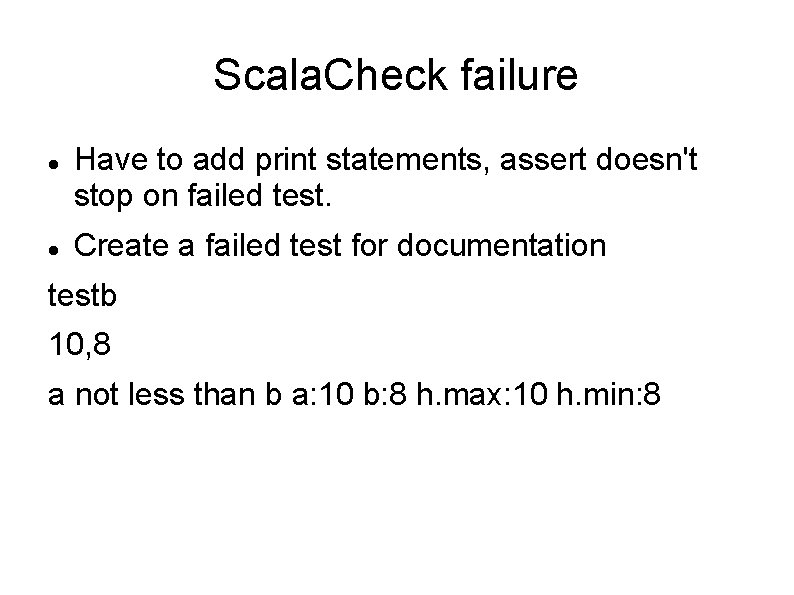

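ScalaCheck failure

    [info] ! PriorityQueue.testb: Falsified after 0 passed tests.
    [info] > ARG_0: 1
    [info] > ARG_0_ORIGINAL: 10
    [info] > ARG_1: 2
    [info] > ARG_1_ORIGINAL: 8

This means the generated pair 10, 8 failed on property "testb". The ARG_n_ORIGINAL lines show the values that were generated first; the ARG_n lines show the smaller failing values (1, 2) that ScalaCheck found by shrinking them.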
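To see the difference between the ARG_n and ARG_n_ORIGINAL lines, it can help to run the same failing check with and without shrinking. The sketch below is not from the slides: `ShrinkDemo` and its deliberately false property `a <= b` are hypothetical. `forAllNoShrink` is ScalaCheck's variant of `forAll` that reports the generated values as they are.

```scala
import org.scalacheck.{Gen, Properties}
import org.scalacheck.Prop.{forAll, forAllNoShrink}

// Hypothetical demo: both properties are deliberately false so that they fail.
object ShrinkDemo extends Properties("ShrinkDemo") {
  val smallInt: Gen[Int] = Gen.choose(1, 10)

  // With shrinking: a failure may be reported with smaller ARG_n values
  // next to the ARG_n_ORIGINAL values that were generated first.
  property("withShrink") = forAll(smallInt, smallInt) { (a: Int, b: Int) =>
    a <= b
  }

  // Without shrinking: the reported arguments are the generated ones.
  property("noShrink") = forAllNoShrink(smallInt, smallInt) { (a: Int, b: Int) =>
    a <= b
  }
}
```

Since both properties fail by design, this object is only useful for inspecting the failure report and should not stay in a real test suite.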

ScalaCheck failure

- Have to add print statements; assert doesn't stop on a failed test.
- Create a failed test for documentation.

Printed output for testb with input 10, 8:

    a not less than b a: 10 b: 8 h.max: 10 h.min: 8

ScalaCheck debug in steps

    if (a < b) {
      println("a less than b a: " + a + " , b: " + b + "h.min: " + h.min + " h.max: " + h.max)
      a == h.min && b == h.max
    } else if (a > b) {
      println("a not less than b a: " + a + " b: " + b + " h.max: " + h.max + " h.min: " + h.min)
      a == h.max && b == h.min
    } else
      //println("equal a: " + a + " b: " + b + " h.max: " + h.max + " h.min: " + h.min)
      //(a == h.max) && (b == h.max) && (a == h.min) && (b == h.min)
      true
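An alternative to print statements is to attach labels to each condition, so that ScalaCheck includes the label of the failing part in its report. The following is a minimal sketch under stated assumptions: the heap `h` from the slide is not shown in the source, so a plain `List(a, b)` stands in for it, and the names `LabelledPairSpecification` and `minMax` are hypothetical.

```scala
import org.scalacheck.{Gen, Prop, Properties}
import org.scalacheck.Prop.forAll

object LabelledPairSpecification extends Properties("LabelledPair") {
  val smallInt: Gen[Int] = Gen.choose(1, 10)

  property("minMax") = forAll(smallInt, smallInt) { (a: Int, b: Int) =>
    // Assumption: a List stands in for the heap `h` used on the slide.
    val h = List(a, b)
    val lo = math.min(a, b)
    val hi = math.max(a, b)
    // Each condition carries its own label; a failing condition's label
    // appears in the failure report instead of on standard output.
    (Prop(h.min == lo) :| s"a: $a b: $b h.min: ${h.min}") &&
    (Prop(h.max == hi) :| s"a: $a b: $b h.max: ${h.max}")
  }
}
```

Labels keep the diagnostic text next to the failing arguments in the report, whereas println output is emitted for every generated case, passing or not.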

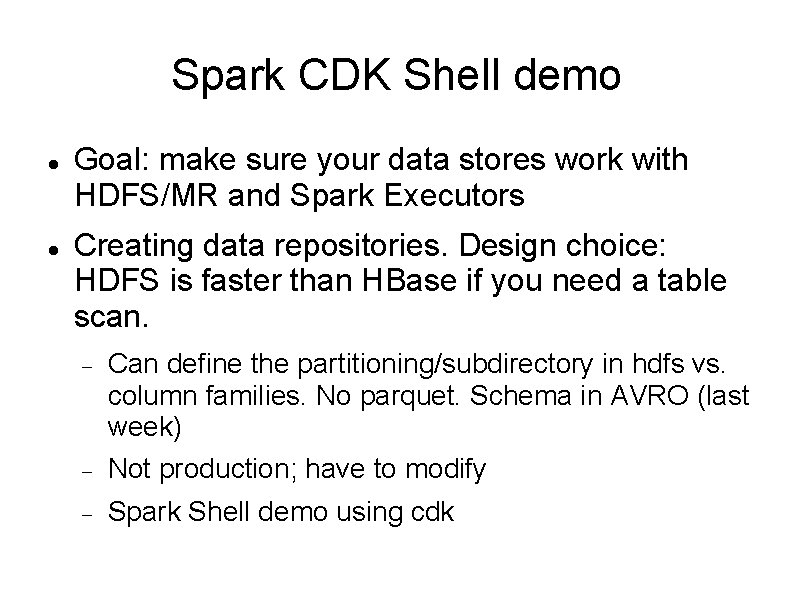

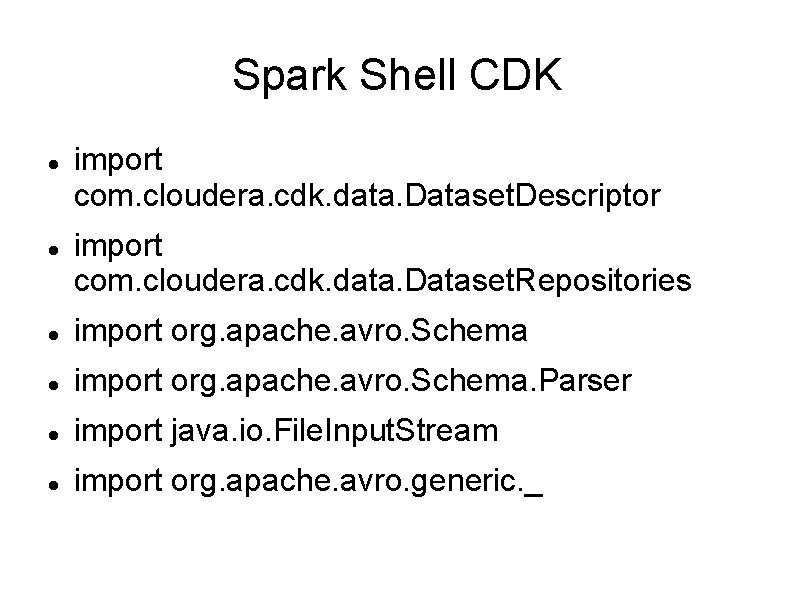

Spark CDK Shell demo

- Goal: make sure your data stores work with HDFS/MR and Spark executors.
- Creating data repositories. Design choice: HDFS is faster than HBase if you need a table scan.
- Can define the partitioning/subdirectory in HDFS vs. column families in HBase.
- No Parquet. Schema in Avro (last week).
- Not production; have to modify Spark. Shell demo using CDK.

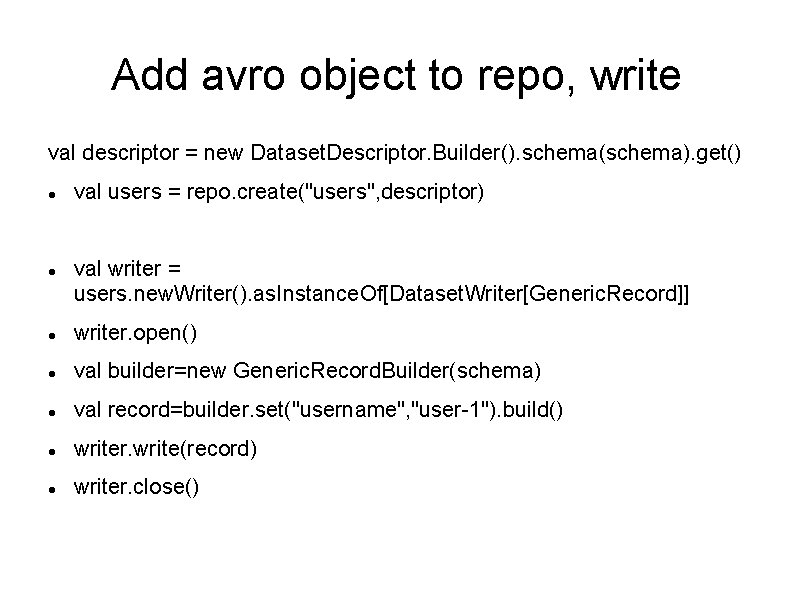

Modifications

- Create the directory /usr/lib/spark/cdk/lib.
- Copy the avro and cdk-data-set jars manually into /usr/lib/spark/cdk/lib.
- Modify compute-classpath.sh to pick up the jars in this new directory.
- Start the Spark shell.
- Add imports.

Spark Shell CDK

    import com.cloudera.cdk.data.DatasetDescriptor
    import com.cloudera.cdk.data.DatasetRepositories
    // Needed for the DatasetWriter cast used when writing records
    import com.cloudera.cdk.data.DatasetWriter
    import org.apache.avro.Schema.Parser
    import java.io.FileInputStream
    import org.apache.avro.generic._

Create repo

    val repo = DatasetRepositories.open("repo:file:/tmp/testscalashellcdk");
    case class User(username: String)
    val schema = new Parser().parse(new FileInputStream("/usr/lib/spark/user.avsc"))

Note: creating the schema in code doesn't work.

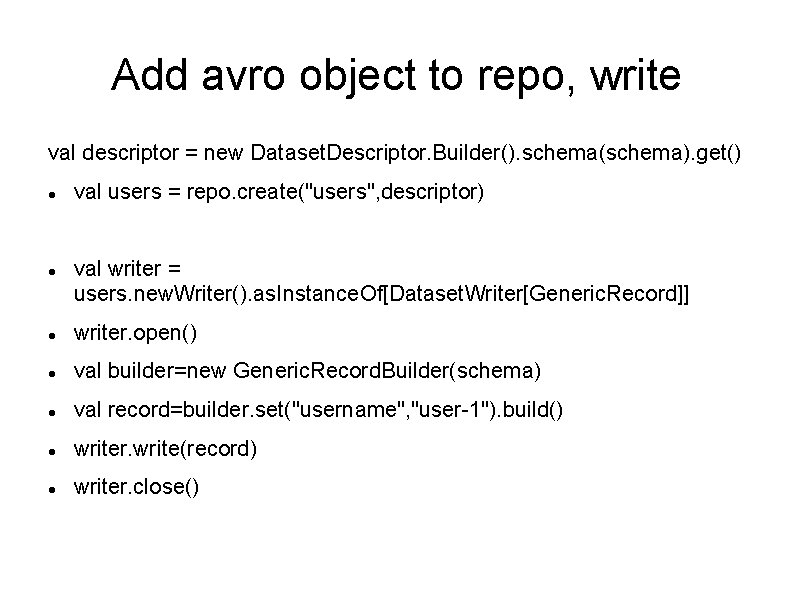

Add avro object to repo, write

    val descriptor = new DatasetDescriptor.Builder().schema(schema).get()
    val users = repo.create("users", descriptor)
    val writer = users.newWriter().asInstanceOf[DatasetWriter[GenericRecord]]
    writer.open()
    val builder = new GenericRecordBuilder(schema)
    val record = builder.set("username", "user-1").build()
    writer.write(record)
    writer.close()

Wscala

Wscala Baziller membran

Baziller membran Cavitas tympani arka duvarı

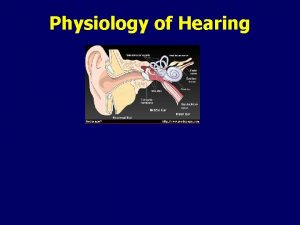

Cavitas tympani arka duvarı Scala vestibuli and scala tympani

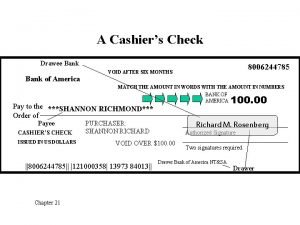

Scala vestibuli and scala tympani Cashiers check bank of america

Cashiers check bank of america Quickchek menu

Quickchek menu Check in check out forms

Check in check out forms Advantages and disadvantages of boundary fill algorithm

Advantages and disadvantages of boundary fill algorithm Cash control systems

Cash control systems Check in check out behavior intervention

Check in check out behavior intervention Check in check out pbis

Check in check out pbis Check your progress 1

Check your progress 1 Check in check out system for students

Check in check out system for students Check in and check out intervention

Check in and check out intervention