ProtoDUNE Joint Data Challenge Report, Steven Timm

- Slides: 12

ProtoDUNE Joint Data Challenge Report
Steven Timm, for the ProtoDUNE data challenge team
ProtoDUNE data exploitation review, 10 May 2018

ProtoDUNE Joint Data Challenge

- The joint data challenge was executed April 9-13, 2018.
- Participating groups:
  - ProtoDUNE-SP DAQ (Savage, Lehmann)
  - ProtoDUNE-SP DQM (Potekhin)
  - DUNE data movement (Timm, Fuess, Mandrichenko, Illingworth)
  - ProtoDUNE-DP DAQ (Pennachio)
  - CENF Neutrino Platform Support (Benekos)
  - DUNE Processing/Production (Herner/Furic)
  - DUNE Software Management (Junk, Yang)
  - DUNE Databases (Buchanan, Allen, etc.)
  - DUNE Software and Computing leadership (Norman, Schellman)
  - Operations lead (Ding)
  - Monitoring (Retzke)
- Contributions shown in orange on the original slide have been covered in detail in other talks.

Data Challenge Goals

- Data Movement / DAQ
  - Run data movement without disrupting DAQ operation.
  - Sustain the full 20 Gbit/s bandwidth from EHN-1 to EOS to Fermilab.
  - Transfer at full rate to Fermilab without knocking dCache over.
- DQM: Test Data Quality Monitoring at full rate.
- Processing: Do keep-up processing as data comes in.
- Database: Read CERN beam hardware data, transmit it to Fermilab, and read Fermilab constants in offline processing.
- Monitoring: Have all of the above systems in a central monitoring dashboard.

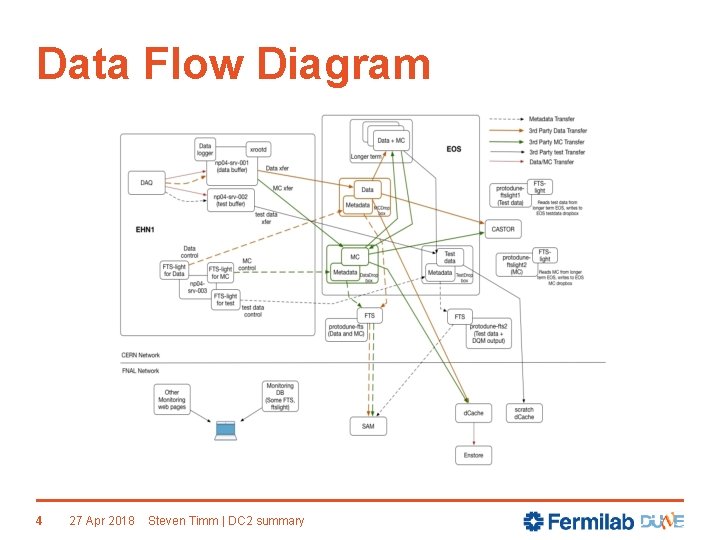

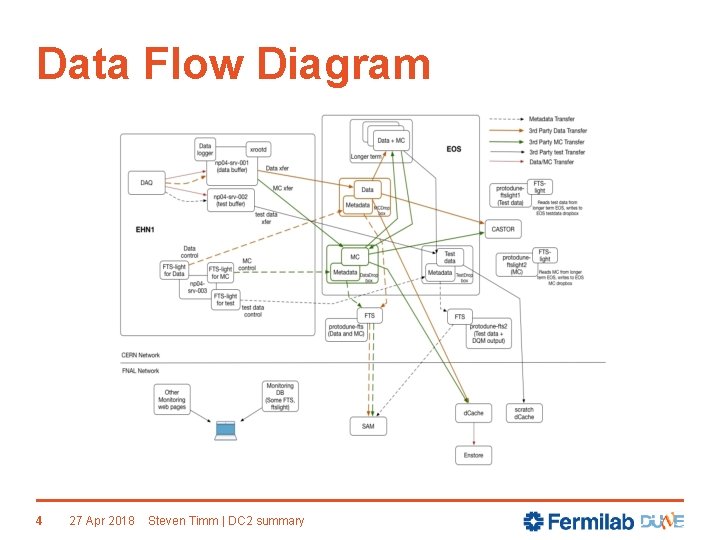

Data Flow Diagram (Steven Timm, DC2 summary, 27 Apr 2018)

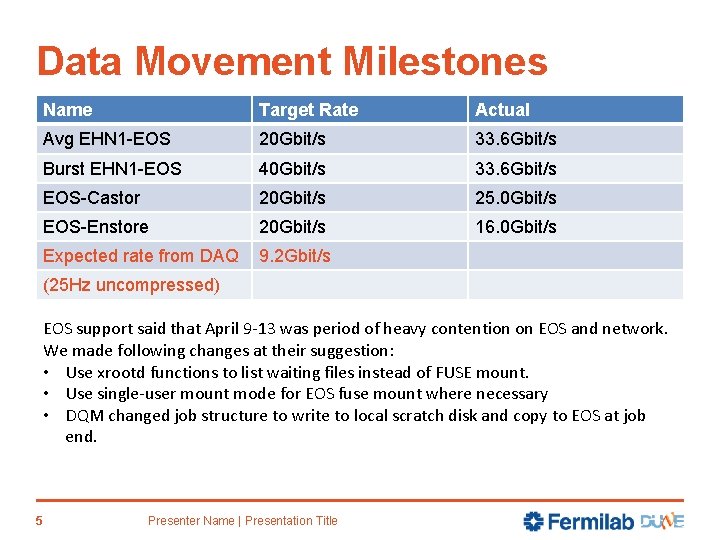

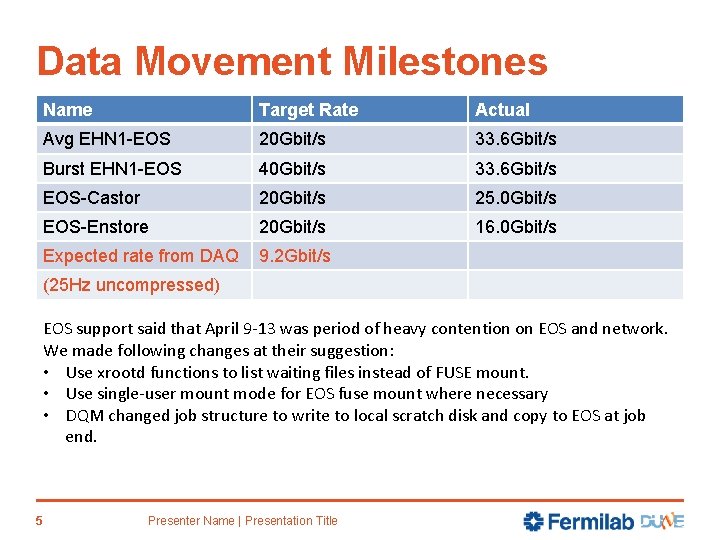

Data Movement Milestones

| Name | Target Rate | Actual |
|---|---|---|
| Avg EHN1-EOS | 20 Gbit/s | 33.6 Gbit/s |
| Burst EHN1-EOS | 40 Gbit/s | 33.6 Gbit/s |
| EOS-Castor | 20 Gbit/s | 25.0 Gbit/s |
| EOS-Enstore | 20 Gbit/s | 16.0 Gbit/s |

Expected rate from DAQ: 9.2 Gbit/s (25 Hz uncompressed).

EOS support said that April 9-13 was a period of heavy contention on EOS and the network. We made the following changes at their suggestion:

- Use xrootd functions to list waiting files instead of the FUSE mount.
- Use single-user mount mode for the EOS FUSE mount where necessary.
- DQM changed its job structure to write to local scratch disk and copy to EOS at job end.
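
The DQM change (write to local scratch, copy to EOS once at job end) can be illustrated with a minimal Python sketch. This is not the DQM team's code: `run_job_with_stage_out` and the `produce` callable are hypothetical names, and a plain local directory with `shutil.copy2` stands in for the EOS area and for whatever copy tool a real job would use.

```python
import shutil
import tempfile
from pathlib import Path


def run_job_with_stage_out(produce, dest_dir):
    """Run produce(scratch_dir) on local scratch, then copy outputs to dest_dir.

    `produce` is a hypothetical callable that writes its output files into
    the scratch directory it is given. `dest_dir` is a stand-in for the EOS
    area; a real job would swap shutil.copy2 for its site's copy tool.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    copied = []
    with tempfile.TemporaryDirectory(prefix="dqm_scratch_") as scratch:
        scratch_path = Path(scratch)
        produce(scratch_path)  # all intermediate I/O stays on local disk
        for item in sorted(scratch_path.iterdir()):
            if item.is_file():
                shutil.copy2(item, dest / item.name)  # one copy per file, at job end
                copied.append(item.name)
    return copied  # the scratch directory is removed when the block exits
```

The point of the pattern is that the shared storage sees one sequential write per output file instead of many small writes during the job, which is presumably why it helped under contention.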

Milestones

- Keep-up Production
  - Successfully ran reconstruction on both coldbox test “data” files and copied ProtoDUNE MC files.
  - Ran reco jobs at a variety of sites, including CERN Tier 0, other European EGI sites, and Open Science Grid, in addition to FNAL.
  - Submitted and kept track of all jobs using the “POMS” system.
  - Results stored to tape.
  - Used the “IFBeam” module to read the database.
- DQM (see previous talk)
  - Demonstrated availability and stability of core DQM web services.
  - Ran above expected scale in production: 600 simultaneous jobs.
  - Successful interface with Data Handling to get input and store results.
  - Validated operation of the payloads available at the time.
  - Modified file handling of EOS files for better stability.
- Database (see previous talk today)
  - Successfully read data from DIP devices at CERN and transmitted it to FNAL for storage in IFBEAM.

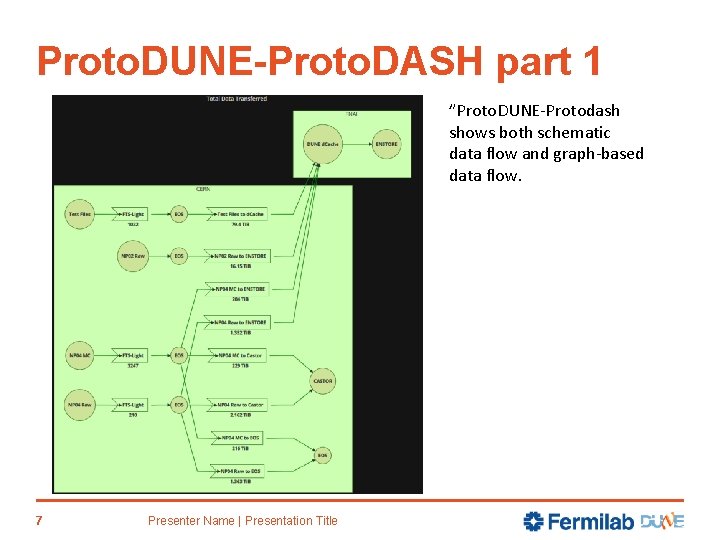

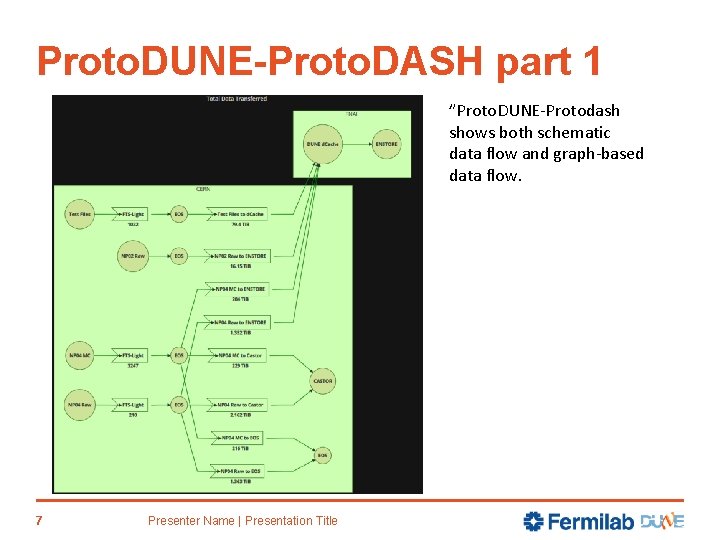

ProtoDUNE-ProtoDASH part 1

ProtoDUNE-ProtoDASH shows both a schematic data flow and a graph-based data flow.

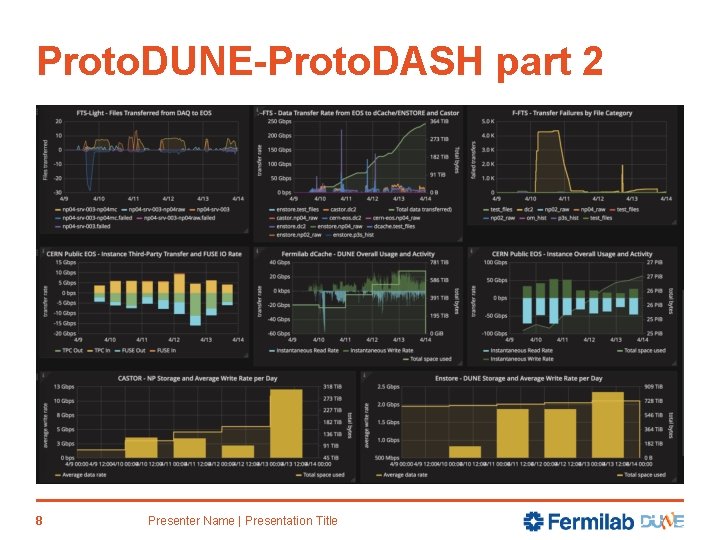

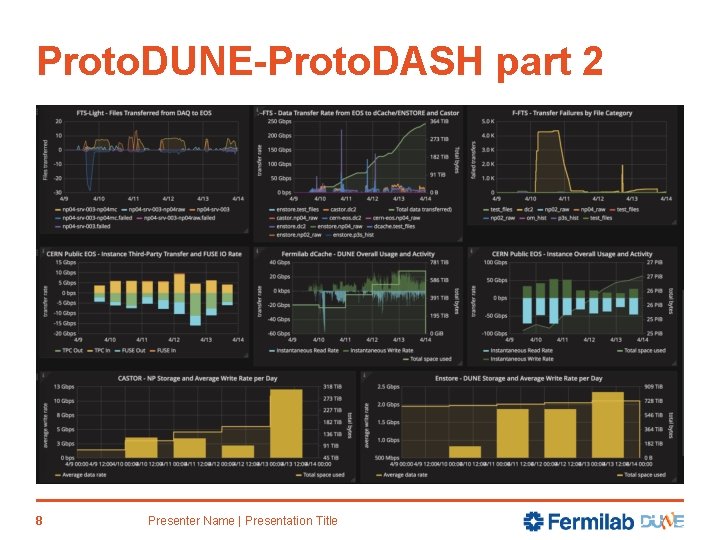

ProtoDUNE-ProtoDASH part 2

DAQ Buffer testing

- Wrote junk data with “fio” as fast as we could (500 MByte/s x 4 10-spindle RAID 5 arrays).
- Read it via xrootd with FTS-Light.
- Didn’t crash the DAQ or the data buffer machines.
- Got full read and write bandwidth at the same time.
- No significant difference between the stock kernel and a bleeding-edge version 4 kernel.
- Conclusion: the two data buffer machines are performant enough in both disk and network to be the data loggers for the DAQ and to copy that data to the rest of the world.
- Data movement from the experiment hall to CERN central computing is ready to go.
- Much more detail and graphs on this are in the full data challenge report; can show afterwards if needed.
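
For scale, the fio figure can be converted to network units. The sketch below assumes the parenthetical means four arrays in total, each written at 500 MByte/s, and uses decimal prefixes; whether the four arrays are per machine or across both machines is not stated on the slide. The 9.2 Gbit/s figure is the expected DAQ rate quoted on the data movement milestones slide.

```python
def mbyte_per_s_to_gbit_per_s(mbyte_per_s):
    """Convert MByte/s to Gbit/s with decimal prefixes (1 MByte = 8e6 bit)."""
    return mbyte_per_s * 8 / 1000


PER_ARRAY_MBYTE_S = 500     # fio write rate per array, from the slide
N_ARRAYS = 4                # assumption: four RAID 5 arrays in total
EXPECTED_DAQ_GBIT_S = 9.2   # expected DAQ rate from the milestones slide

aggregate_gbit_s = mbyte_per_s_to_gbit_per_s(PER_ARRAY_MBYTE_S * N_ARRAYS)
headroom = aggregate_gbit_s / EXPECTED_DAQ_GBIT_S

print(f"aggregate fio write rate: {aggregate_gbit_s:.1f} Gbit/s")
print(f"ratio to expected DAQ rate: {headroom:.2f}")
```

Under these assumptions the aggregate write rate is 16 Gbit/s, comfortably above the expected DAQ rate.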

Action Items / Lessons Learned 1

- Need to boost the EOS->Fermilab dCache data rate; we are hitting a plateau.
  - Dedicated “read pool” nodes for ProtoDUNE are currently being commissioned.
  - Talking to CMS people to see what they do differently.
  - Investigating CERN FTS3.
- Need to boost tape write speed at Fermilab.
  - We had access to 2 of 13 T10000D drives during the challenge.
  - Average tape write rate was about 16 TB a day (two 8 TB tapes).
  - A new tape robot is on its way to FNAL with more, bigger, and faster LTO-8 drives, scheduled to be deployed and commissioned before the run.
  - CASTOR cache-to-tape latency is faster, with a peak of 2 hours to get a file to tape.
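
To put the tape figure in the same units as the network rates, the following sketch converts between TB/day and Gbit/s. It assumes decimal prefixes and, for the DAQ comparison, continuous running at the 9.2 Gbit/s expected rate quoted on the milestones slide, with no duty-cycle or compression factor applied.

```python
SECONDS_PER_DAY = 86_400


def tb_per_day_to_gbit_per_s(tb_per_day):
    """TB/day -> Gbit/s (1 TB = 1e12 bytes, 1 Gbit = 1e9 bit)."""
    return tb_per_day * 1e12 * 8 / SECONDS_PER_DAY / 1e9


def gbit_per_s_to_tb_per_day(gbit_per_s):
    """Gbit/s -> TB/day, the inverse of the conversion above."""
    return gbit_per_s * 1e9 / 8 * SECONDS_PER_DAY / 1e12


tape_gbit_s = tb_per_day_to_gbit_per_s(16)    # average tape write rate from the slide
daq_tb_day = gbit_per_s_to_tb_per_day(9.2)    # assumes continuous running at 9.2 Gbit/s

print(f"tape write rate: {tape_gbit_s:.2f} Gbit/s")
print(f"expected DAQ volume: {daq_tb_day:.1f} TB/day")
```

This gives roughly 1.5 Gbit/s to tape with the two drives available, against roughly 99 TB/day if the DAQ ran continuously at its expected rate.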

Action Items / Lessons Learned 2

- Will need at least 30% of the EOS allocation just to buffer files in transit (just for SP; the allocation is shared with DP, and they will need buffer space too).
- Therefore: need a policy on what files will be available on EOS for analysis, a good/bad run flag to inform it, and caching software to move files in and out.
- DUNE data management is actively investigating Rucio and CERN FTS3 for these purposes.
- Also: the more real information we have on what the data rate will be, the better we can plan. A wide range of numbers is still out there.
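
As an illustration only of what such a policy might look like, the sketch below picks files to remove from disk until usage falls to a target. The policy itself is not defined on the slide: the `EosFile` fields, the rule that only files already on tape may be removed, and the choice to remove bad-run files first are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class EosFile:
    name: str
    size_tb: float
    on_tape: bool    # hypothetical flag: file is already archived to tape
    good_run: bool   # hypothetical field carrying the good/bad run flag


def plan_eviction(files, used_tb, target_tb):
    """Return names of files to remove from disk until usage <= target_tb.

    Only files already on tape are candidates. Bad-run files are removed
    first, then good-run files, each group in the order given.
    """
    candidates = [f for f in files if f.on_tape]
    candidates.sort(key=lambda f: f.good_run)  # False sorts first; sort is stable
    evict = []
    for f in candidates:
        if used_tb <= target_tb:
            break
        evict.append(f.name)
        used_tb -= f.size_tb
    return evict
```

A production system would more likely express this as rules in a data management tool than as hand-written code; the sketch only shows how a run-quality flag could feed the decision.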

Conclusions

- Demonstrated successful operations of Data Acquisition, Data Movement, Data Quality Monitoring, Offline Reconstruction, Monitoring, and Beam Database.
- Moved and processed 300 TB of “data”, roughly equal to 1 week of ProtoDUNE-SP running.
- Built automated structures for data movement, keep-up processing, data quality monitoring, regular monitoring, and other systems that can also be used for the run.
- Identified action items now being addressed in preparation for the run.