Pat Reco EstimationTraining Alexandros Potamianos School of ECE

- Slides: 15

Pat. Reco: Estimation/Training Alexandros Potamianos School of ECE, NTUA Fall 2014 -15

Estimation/Training w Goal: Given observed data (re-)estimate the parameters of the model e. g. , for a Gaussian model estimate the mean and variance for each class

Supervised-Unsupervised w Supervised training: All data has been (manually) labeled, i. e. , assigned to classes w Unsupervised training: Data is not assigned a class label

Observable data w Fully observed data: all information necessary for training is available (features, class labels etc. ) w Partially observed data: some of the features or some of the class labels are missing

Supervised Training (fully observable data) w Maximum likelihood estimation (ML) w Maximum a posteriori estimation (MAP) w Bayesian estimation (BE)

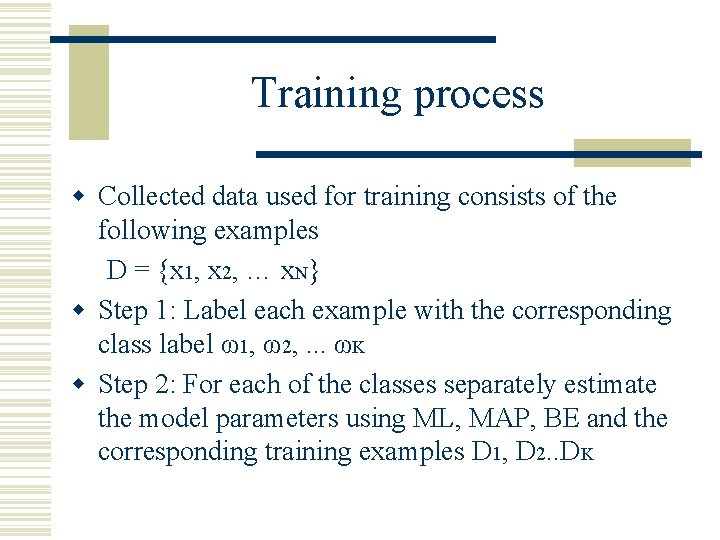

Training process w Collected data used for training consists of the following examples D = {x 1, x 2, … x. N} w Step 1: Label each example with the corresponding class label ω1, ω2, . . . ωΚ w Step 2: For each of the classes separately estimate the model parameters using ML, MAP, BE and the corresponding training examples D 1, D 2. . DK

Training Process: Step 1 D = {x 1, x 2, x 3, x 4, x 5, … x. N} Label manually ω1, ω2, . . . ωΚ D 1 = {x 11, x 12, x 13, … x 1 N 1} D 2 = {x 21, x 22, x 23, … x 2 N 2} ………… DK = {x. K 1, x. K 2, x. K 3, … x. KNk}

Training Process: Step 2 w Maximum Likelihood θ 1 = argmaxΘ P(D 1|θ 1) w Maximum-a-posteriori θ 1 = argmaxΘ P(D 1|θ 1) P(θ 1) w Bayesian estimation P (x|D 1) = P(x|θ 1)P(θ 1|D 1) dθ 1

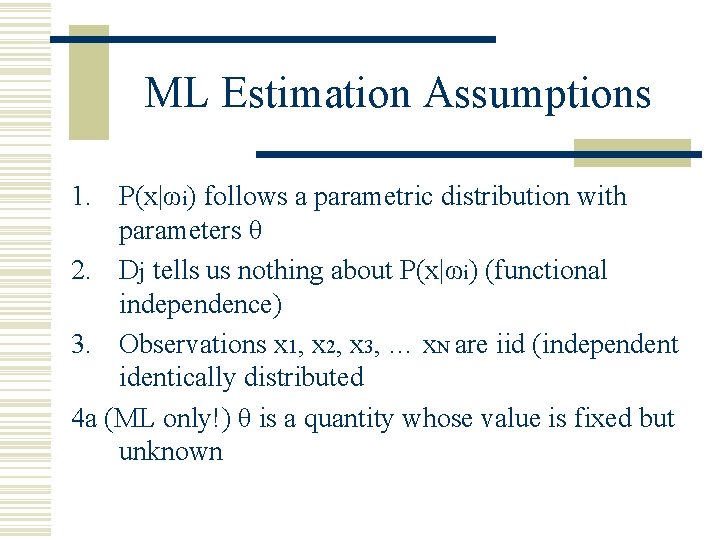

ML Estimation Assumptions 1. P(x|ωi) follows a parametric distribution with parameters θ 2. Dj tells us nothing about P(x|ωi) (functional independence) 3. Observations x 1, x 2, x 3, … x. N are iid (independent identically distributed 4 a (ML only!) θ is a quantity whose value is fixed but unknown

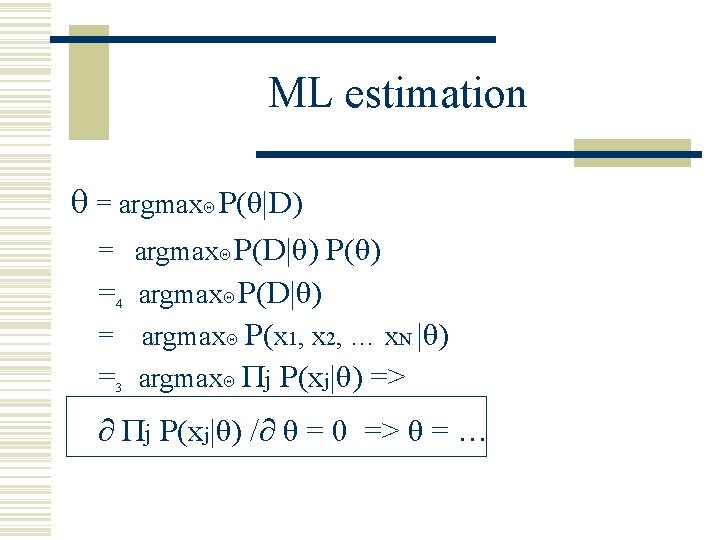

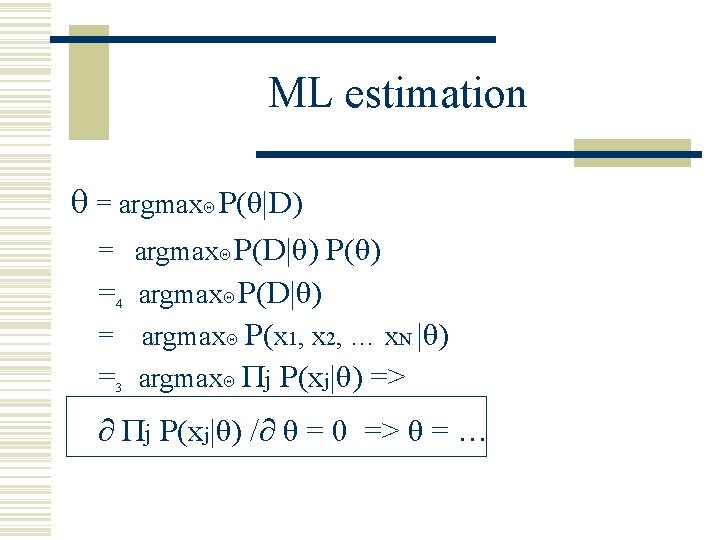

ML estimation θ = argmaxΘ P(θ|D) = argmaxΘ P(D|θ) P(θ) =4 argmaxΘ P(D|θ) = argmaxΘ P(x 1, x 2, … x. N |θ) =3 argmaxΘ Πj P(xj|θ) => Πj P(xj|θ) / θ = 0 => θ = …

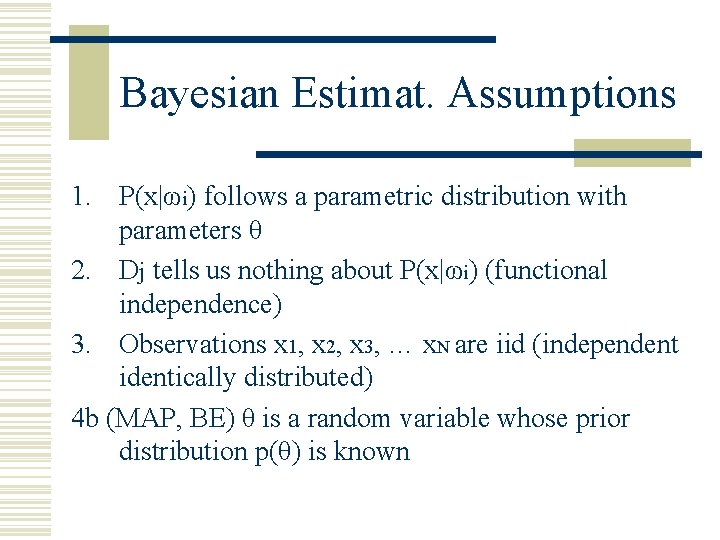

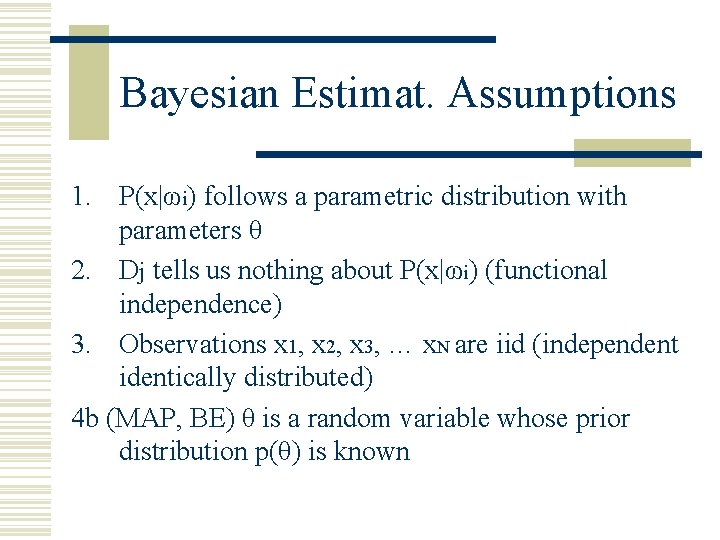

Bayesian Estimat. Assumptions 1. P(x|ωi) follows a parametric distribution with parameters θ 2. Dj tells us nothing about P(x|ωi) (functional independence) 3. Observations x 1, x 2, x 3, … x. N are iid (independent identically distributed) 4 b (MAP, BE) θ is a random variable whose prior distribution p(θ) is known

Bayesian Estimation P (x|D) = P(x, θ|D) dθ = P(x|θ, D)P(θ|D) dθ = P(x|θ)P(θ|D) dθ STEP 1: P(θ) P(θ|D) = P(D|θ)P(θ)/P(D) STEP 2: P(x|θ) P (x|D)

Bayesian Estimate for Gaussian pdf and priors If P(x|θ) = Ν(μ, σ2) and p(θ) = Ν(μ 0, σ02) then STEP 1: P(θ|D)=Ν(μn, σn 2) STEP 2: P(x|D)=N(μn, σ2+σn 2 ) μn = σ 2 /(n σ 2 + σ2) (Σj xj) + σ2 /(n σ 2 + σ2) μ 0 0 σn 2 = σ2 σ02 /(n σ02 + σ2) For large n (number of training samples) maximum likelihood and Bayesian estimation equivalent!!!

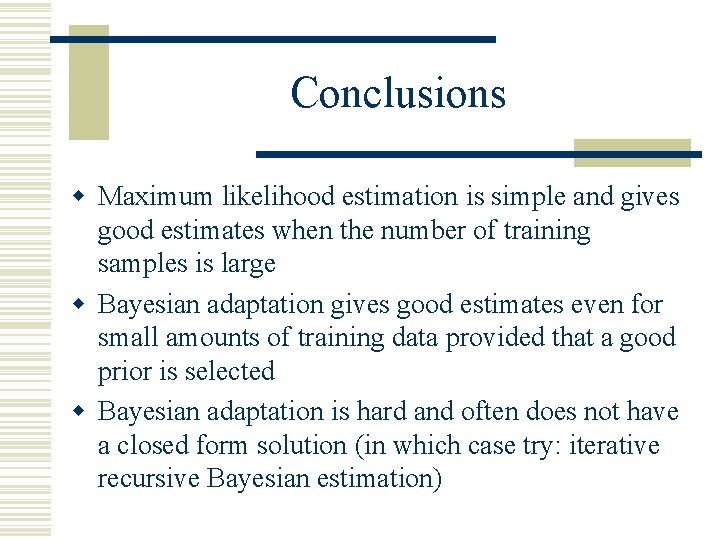

Conclusions w Maximum likelihood estimation is simple and gives good estimates when the number of training samples is large w Bayesian adaptation gives good estimates even for small amounts of training data provided that a good prior is selected w Bayesian adaptation is hard and often does not have a closed form solution (in which case try: iterative recursive Bayesian estimation)

Alexandros potamianos

Alexandros potamianos Alexandros potamianos

Alexandros potamianos Reco reco portugal

Reco reco portugal Pat pat seguimiento

Pat pat seguimiento Potamianos ntua

Potamianos ntua Alexandros spyridonidis

Alexandros spyridonidis Reco cement

Reco cement Oracle mman

Oracle mman Reco condusef

Reco condusef Dlaha atlanta

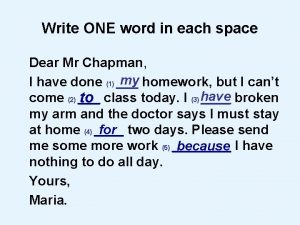

Dlaha atlanta Complete the text. write one word in each space

Complete the text. write one word in each space Pat oliphant cartoon archive

Pat oliphant cartoon archive Phonetically definition

Phonetically definition Pat coso

Pat coso Princess pat lyrics

Princess pat lyrics Same song pat mora

Same song pat mora