Language Model in Turkish IR
Melih Kandemir, F. Melih Özbekoğlu, Can Şardan, Ömer S. Uğurlu

Outline
• Indexing problem and proposed solution
• Previous Work
• System Architecture
• Language Modeling Concept
• Evaluation of the System
• Conclusion

Indexing Problem
• “A Language Modeling Approach to Information Retrieval”, Jay M. Ponte and W. Bruce Croft, 1998
• The indexing model is an important part of a probabilistic retrieval model
• Current indexing models do not lead to improved retrieval results

Indexing Problem
• Current models fail because of unwarranted assumptions:
– 2-Poisson model: relies on the notion of “elite” documents
– N-Poisson model: a mixture of more than 2 Poisson distributions

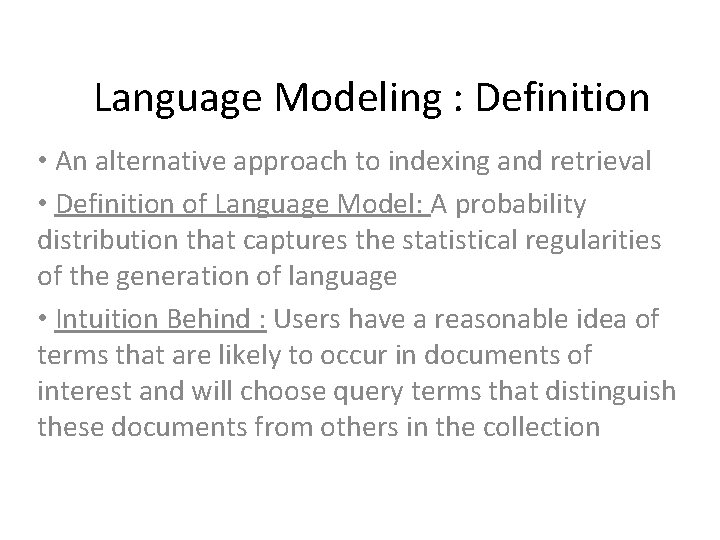

Proposed Solution
• Retrieval based on probabilistic language modeling
• A language model is a probability distribution that captures the statistical regularities of the generation of language
• A language model is inferred for each document

Proposed Solution
• Estimate the probability of generating the query from each document's language model
• Documents are ranked according to these probabilities
• Users have a reasonable idea of the terms likely to occur in documents of interest
• tf and idf are integral parts of the language model

Previous Work
• Robertson–Sparck Jones model and Croft–Harper model
– They focus on relevance
• Fuhr integrated indexing and retrieval models
– Used statistics as heuristics
• Wong and Yao used utility theory and information theory

Previous Work
• Kalt’s approach is the most similar
– A maximum likelihood estimator is used
– Collection statistics are integral parts of the model
– Documents are members of language classes

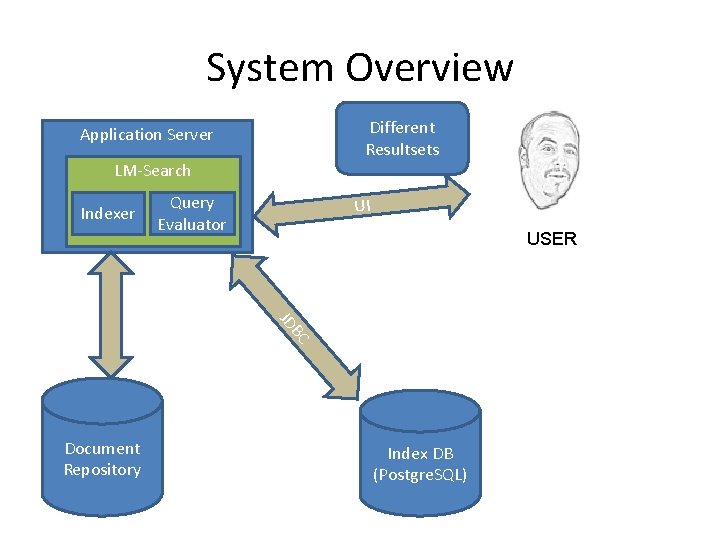

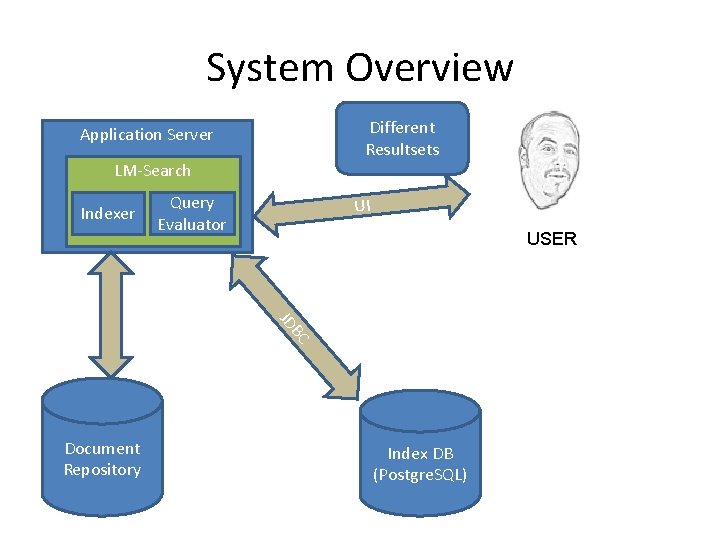

System Overview (diagram). Components: User, UI, Application Server, LM-Search (Indexer, Query Evaluator), Different Resultsets, Document Repository, Index DB (PostgreSQL), JDBC.

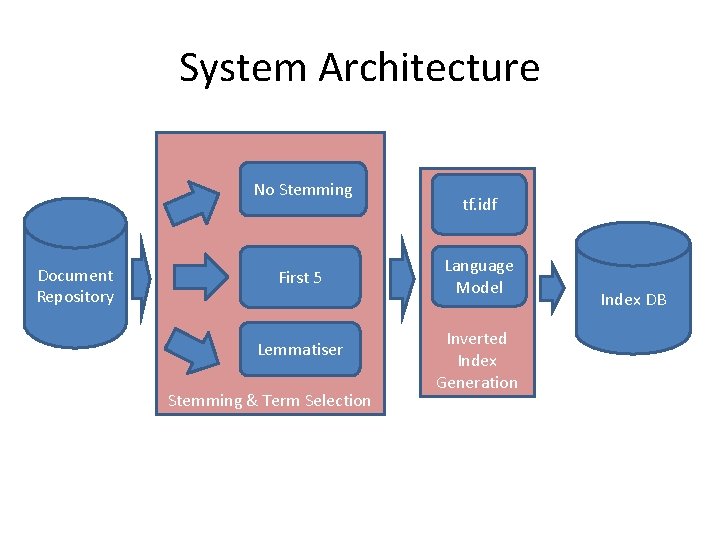

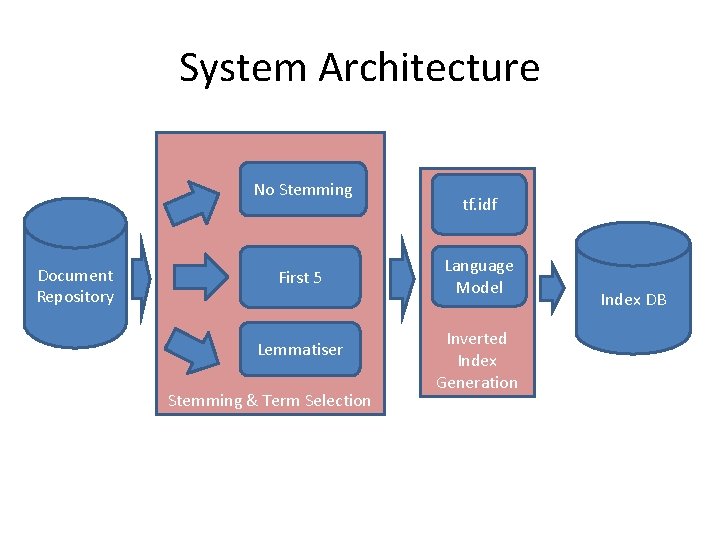

System Architecture (diagram): Document Repository → Stemming & Term Selection (No Stemming, First 5, Lemmatiser) → Inverted Index Generation (tf.idf, Language Model) → Index DB

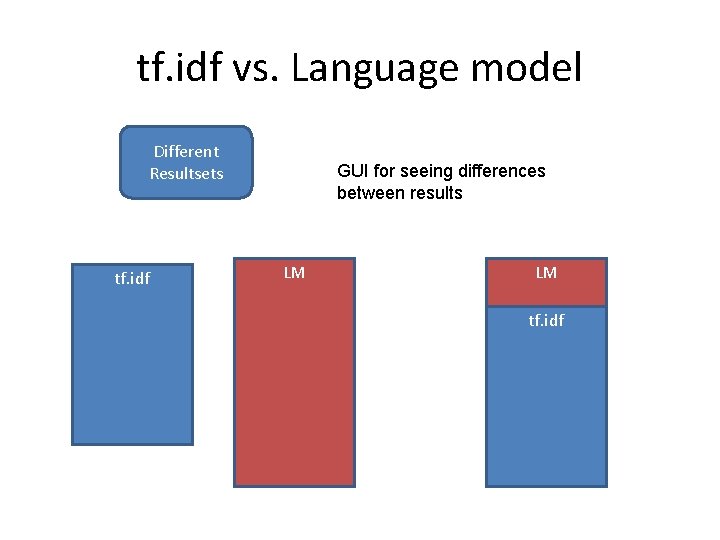

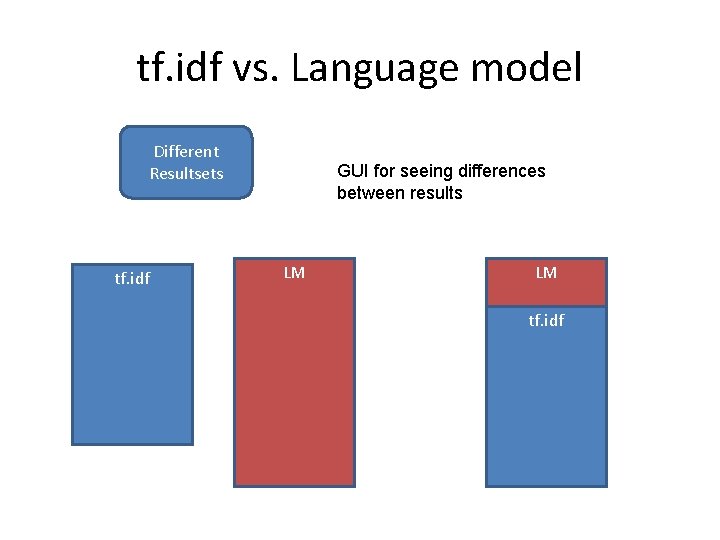

tf.idf vs. Language Model
• Different result sets for tf.idf and LM
• GUI for seeing the differences between the results

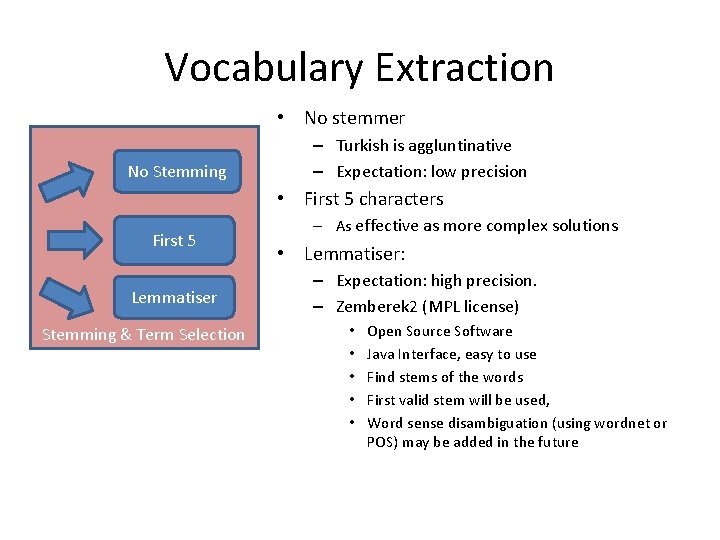

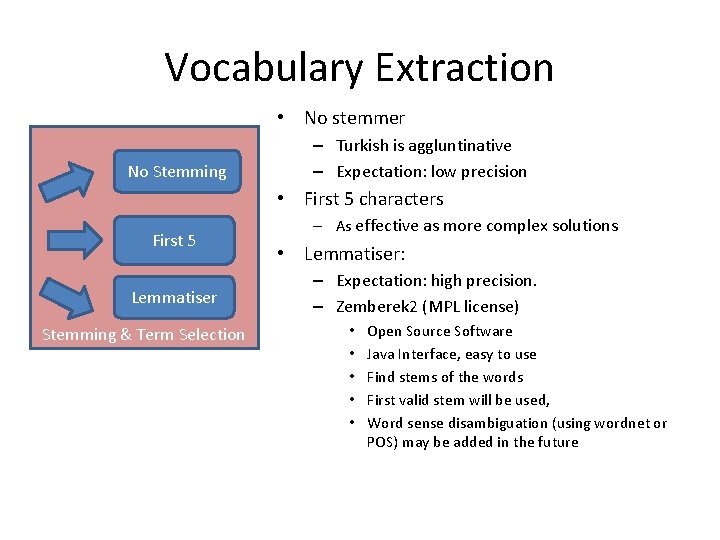

Vocabulary Extraction
• No stemmer
– Turkish is agglutinative
– Expectation: low precision
• First 5 characters
– As effective as more complex solutions
• Lemmatiser
– Expectation: high precision
– Zemberek 2 (MPL license)
– Open source software
– Java interface, easy to use
– Finds the stems of words; the first valid stem will be used
– Word sense disambiguation (using WordNet or POS) may be added in the future
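The three term selection options above can be sketched as follows. This is a minimal illustration, not the system's code: the function names are hypothetical, and `stem_candidates` is a stand-in callable for whatever the lemmatiser returns (the Zemberek API itself is not reproduced here). Note also that Python's `str.lower()` does not apply Turkish casing rules for I/ı and İ/i, so a real indexer would need locale-aware lowercasing.

```python
def no_stemming(token):
    """Option 1: index the surface form as-is (only lowercased)."""
    return token.lower()


def first_five(token):
    """Option 2: keep only the first 5 characters of the token."""
    return token.lower()[:5]


def lemmatise(token, stem_candidates):
    """Option 3: use the first valid stem proposed by a lemmatiser.

    stem_candidates is a hypothetical callable that takes a word and
    returns a list of candidate stems (possibly empty).
    """
    word = token.lower()
    candidates = stem_candidates(word)
    # Fall back to the surface form when no stem is found.
    return candidates[0] if candidates else word


if __name__ == "__main__":
    print(first_five("kitaplarımızdan"))            # kitap
    print(lemmatise("evlerde", lambda w: ["ev"]))   # ev
```

Truncation needs no linguistic resources, which is why it is a natural middle ground between indexing raw surface forms and running a full lemmatiser.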

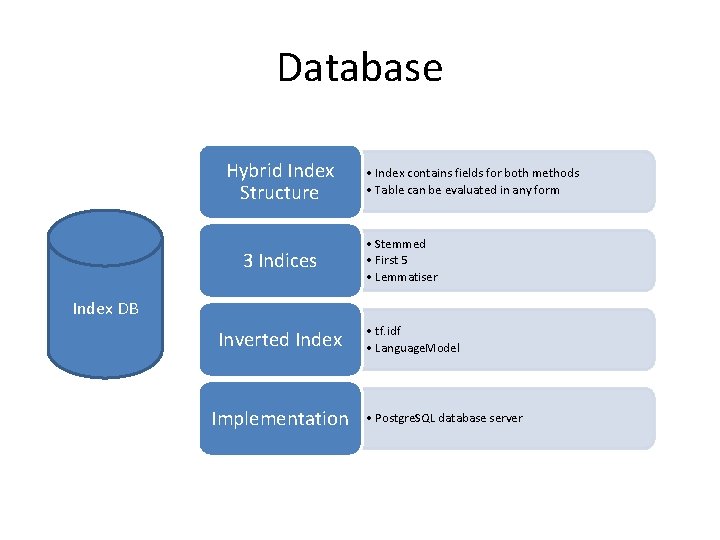

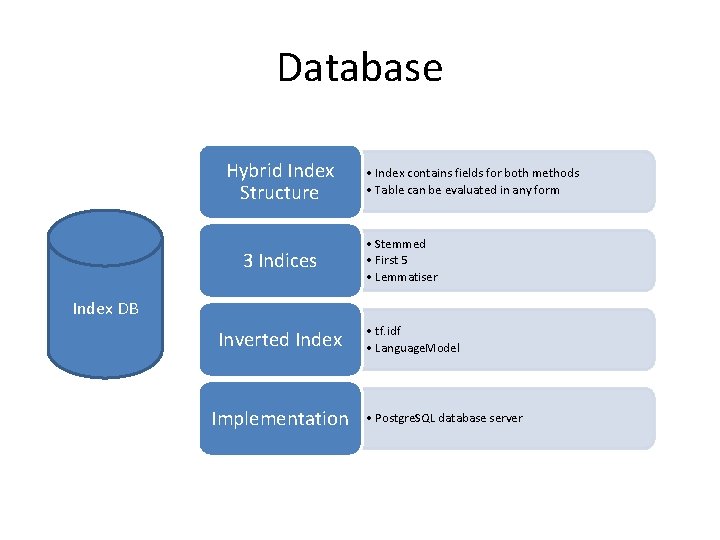

Database
• Hybrid index structure
– Index contains fields for both methods
– Table can be evaluated in any form
• 3 indices
– Stemmed
– First 5
– Lemmatiser
• Inverted index implementation
– tf.idf
– Language Model
– PostgreSQL database server

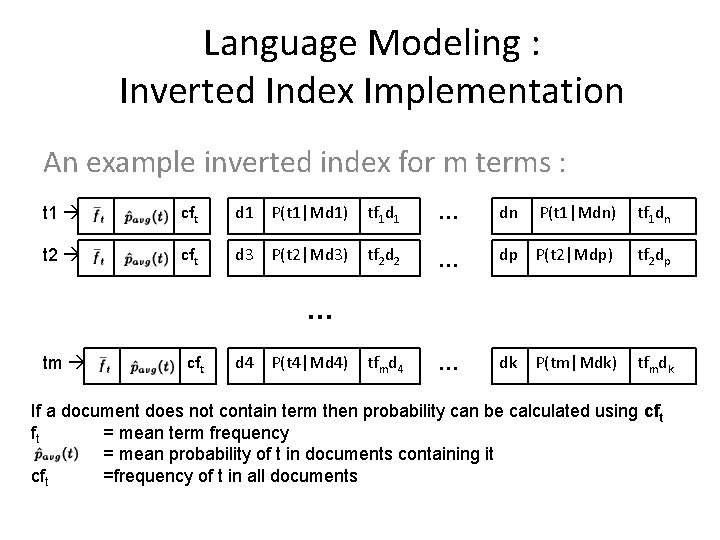

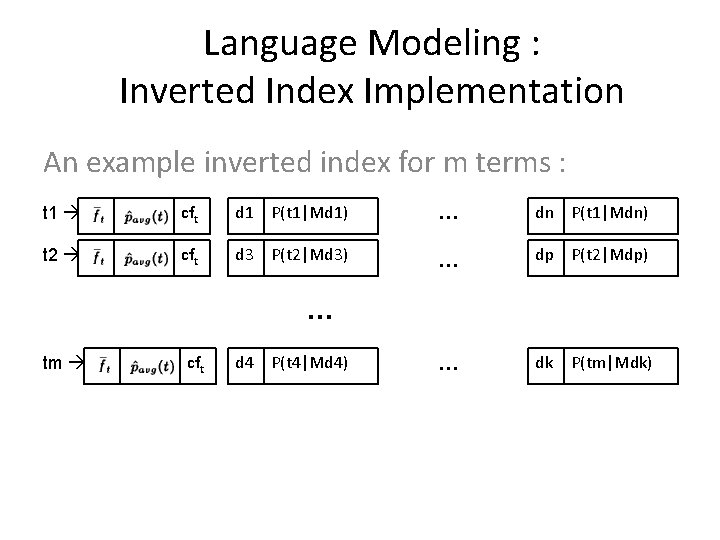

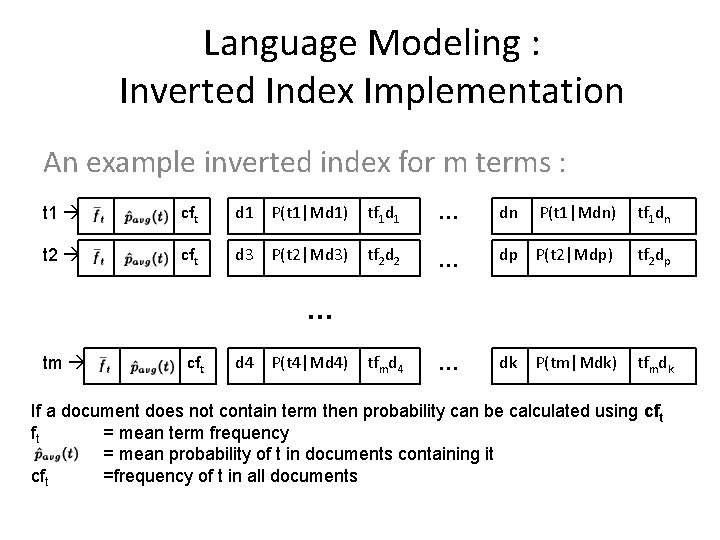

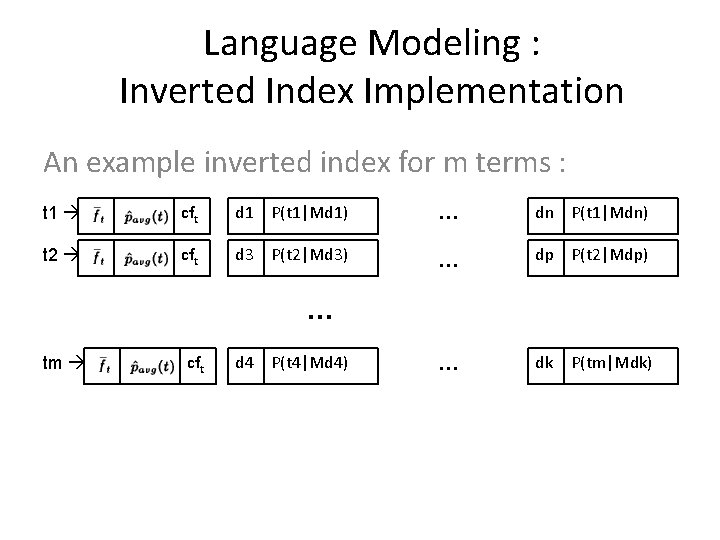

Language Modeling: Inverted Index Implementation
An example inverted index for m terms:
t1 → cft, [d1: P(t1|Md1), tf1d1] … [dn: P(t1|Mdn), tf1dn]
t2 → cft, [d3: P(t2|Md3), tf2d3] … [dp: P(t2|Mdp), tf2dp]
…
tm → cft, [d4: P(tm|Md4), tfmd4] … [dk: P(tm|Mdk), tfmdk]
If a document does not contain a term, the probability can be calculated using cft.
• ft = mean term frequency of t in documents containing it
• pavg = mean probability of t in documents containing it
• cft = frequency of t in all documents
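The index layout above can be sketched as an in-memory structure. This is only an illustration under stated assumptions: the class and function names are hypothetical, the stored probability is filled with the plain ratio tf / document length as a placeholder for P(t|Md), and the actual system stores its index in PostgreSQL rather than in Python objects.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field


@dataclass
class Posting:
    doc_id: str
    prob: float  # placeholder for P(t | Md); here simply tf / document length
    tf: int      # raw term frequency of the term in this document


@dataclass
class TermEntry:
    cf: int = 0                                   # frequency of the term in all documents
    postings: list = field(default_factory=list)  # one Posting per containing document


def build_index(docs):
    """docs: dict mapping doc_id -> list of (already normalised) terms."""
    index = defaultdict(TermEntry)
    for doc_id, terms in docs.items():
        dl = len(terms)
        for term, tf in Counter(terms).items():
            entry = index[term]
            entry.cf += tf
            entry.postings.append(Posting(doc_id, tf / dl, tf))
    return dict(index)


def fallback_prob(index, term, collection_size):
    """Probability used when a document does not contain the term: cf_t / cs."""
    return index[term].cf / collection_size


if __name__ == "__main__":
    docs = {"d1": ["a", "b", "a"], "d2": ["b"]}
    index = build_index(docs)
    print(index["a"].cf, [p.doc_id for p in index["b"].postings])
    print(fallback_prob(index, "a", collection_size=4))
```

Storing cft once per term is what makes the fallback cheap: a document missing from a term's posting list needs no per-document lookup.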

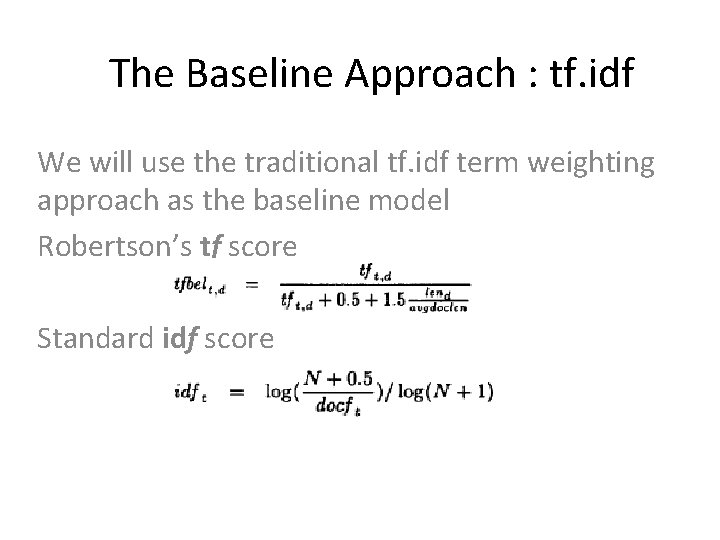

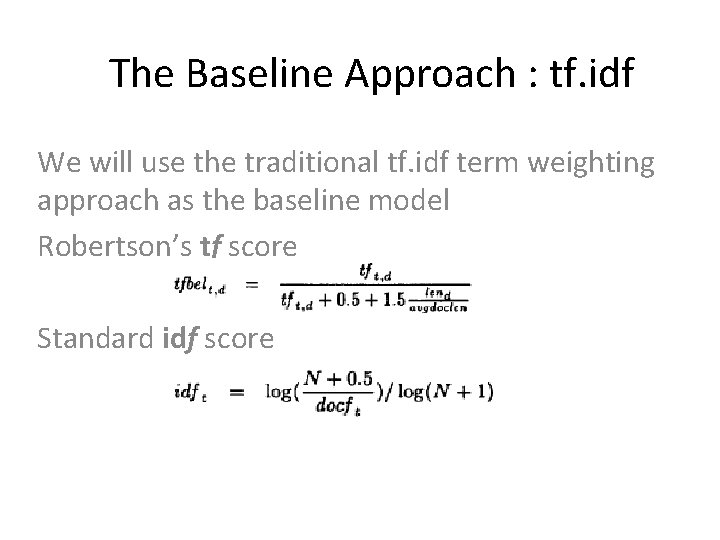

The Baseline Approach: tf.idf
We will use the traditional tf.idf term weighting approach as the baseline model:
• Robertson’s tf score
• Standard idf score
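The two score formulas on this slide did not survive as text. As a hedged sketch, the code below assumes the forms used for the tf.idf baseline in Ponte and Croft (1998): tf / (tf + 0.5 + 1.5 · dl / avg_dl) for the tf score and log((N + 0.5) / df) / log(N + 1) for the idf score. Whether the slides used exactly these constants is an assumption, and all function and parameter names are hypothetical.

```python
import math


def robertson_tf(tf, dl, avg_dl):
    """Length-normalised tf score (assumed form, see lead-in)."""
    return tf / (tf + 0.5 + 1.5 * dl / avg_dl)


def idf(n_docs, df):
    """Normalised idf score (assumed form, see lead-in)."""
    return math.log((n_docs + 0.5) / df) / math.log(n_docs + 1.0)


def tfidf_score(query_terms, doc_tf, dl, avg_dl, n_docs, df):
    """Sum of tf * idf over the query terms that occur in the document.

    doc_tf: dict term -> raw frequency in this document
    df:     dict term -> number of documents containing the term
    """
    score = 0.0
    for t in query_terms:
        tf = doc_tf.get(t, 0)
        if tf == 0 or df.get(t, 0) == 0:
            continue  # term absent from the document or the collection
        score += robertson_tf(tf, dl, avg_dl) * idf(n_docs, df[t])
    return score


if __name__ == "__main__":
    print(tfidf_score(["ankara"], {"ankara": 2, "haber": 1}, dl=3,
                      avg_dl=3.0, n_docs=3, df={"ankara": 1, "haber": 3}))
```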

Language Modeling: Definition
• An alternative approach to indexing and retrieval
• Definition of a language model: a probability distribution that captures the statistical regularities of the generation of language
• Intuition: users have a reasonable idea of the terms that are likely to occur in documents of interest, and will choose query terms that distinguish these documents from others in the collection

Language Modeling: The Approach
The following assumptions are not made:
• Term distributions in the documents are parametric
• Documents are members of pre-defined classes
The model estimates the “query generation probability” rather than the “probability of relevance”.

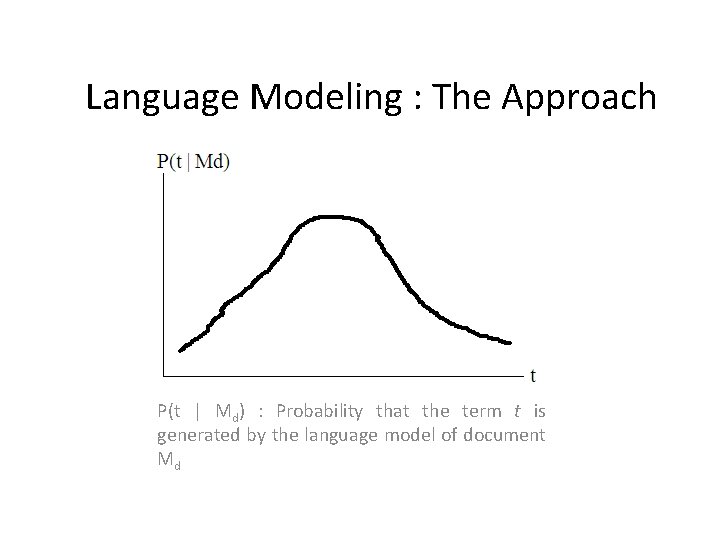

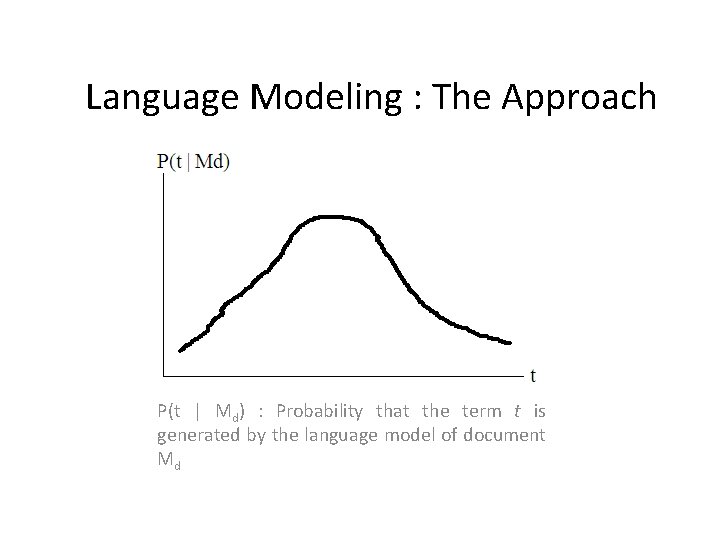

Language Modeling: The Approach
P(t | Md): probability that the term t is generated by the language model Md of document d

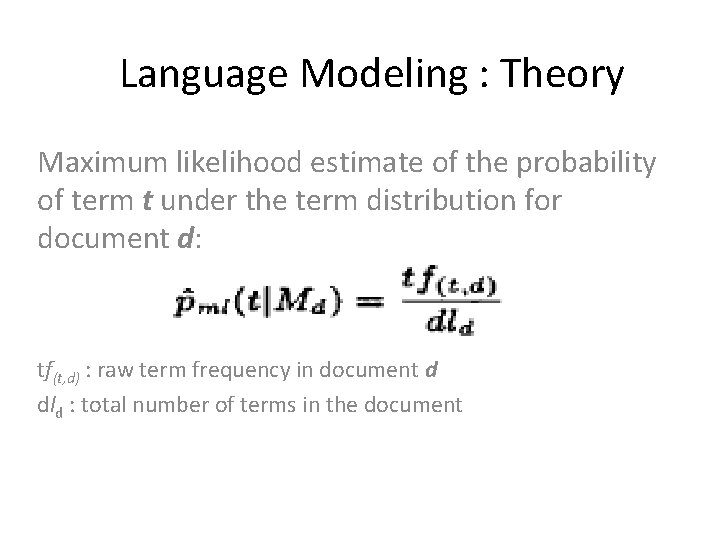

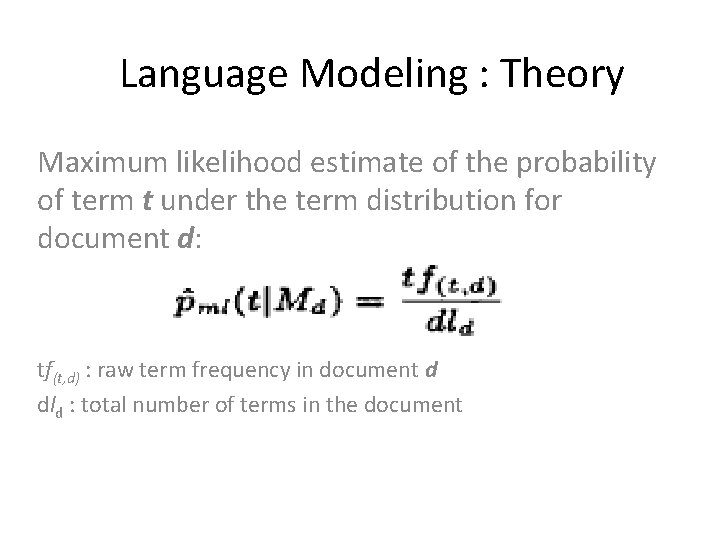

Language Modeling: Theory
Maximum likelihood estimate of the probability of term t under the term distribution for document d:
• tf(t, d): raw term frequency of term t in document d
• dld: total number of terms in document d
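Written out from the two quantities defined on this slide, the maximum likelihood estimate is the ratio below. The symbol p_ml is an assumption borrowed from Ponte and Croft (1998); the slide's own formula did not survive as text.

```latex
p_{ml}(t \mid M_d) = \frac{tf(t, d)}{dl_d}
```

For example, a term that occurs 4 times in a document of 200 terms gets an estimate of 4 / 200 = 0.02.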

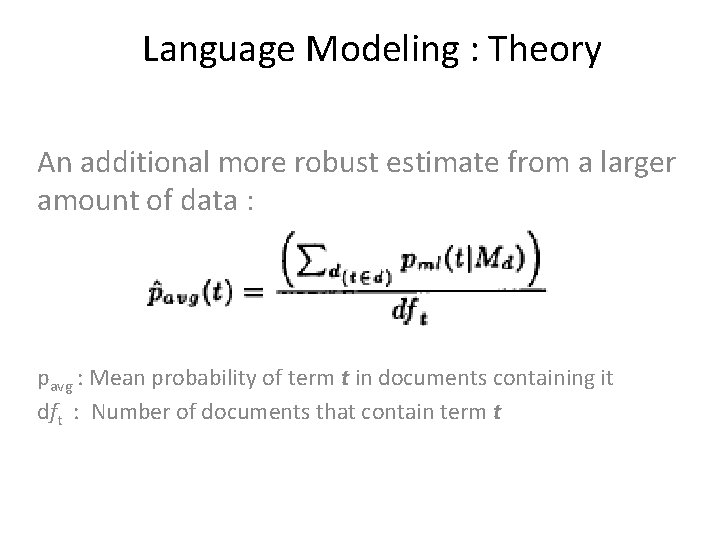

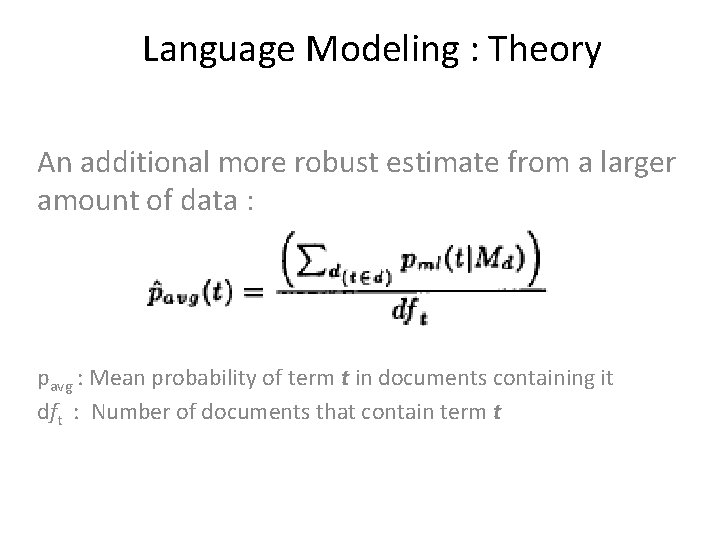

Language Modeling: Theory
An additional, more robust estimate from a larger amount of data:
• pavg: mean probability of term t in documents containing it
• dft: number of documents that contain term t
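From these two definitions, the averaged estimate can be written as follows. The notation is an assumption following Ponte and Croft (1998), with p_ml(t | M_d) = tf(t, d) / dl_d denoting the maximum likelihood estimate for a single document.

```latex
p_{avg}(t) = \frac{\sum_{d \,:\, t \in d} p_{ml}(t \mid M_d)}{df_t}
```

For example, if a term occurs in two documents with per-document estimates 0.02 and 0.04, then p_avg = (0.02 + 0.04) / 2 = 0.03.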

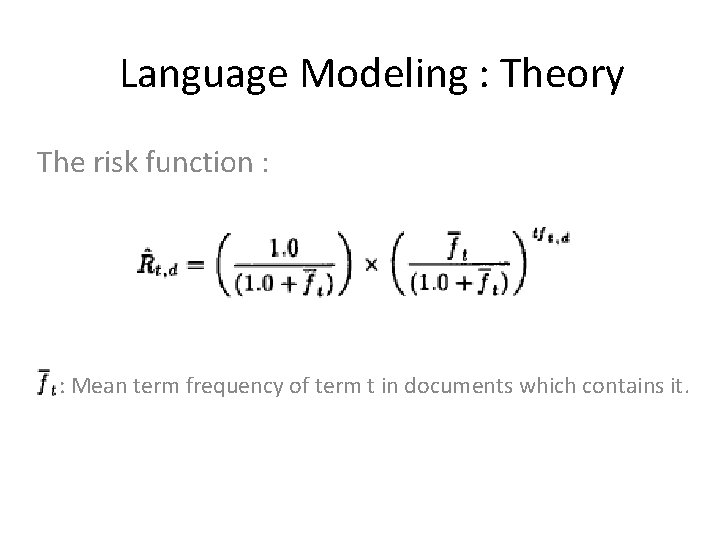

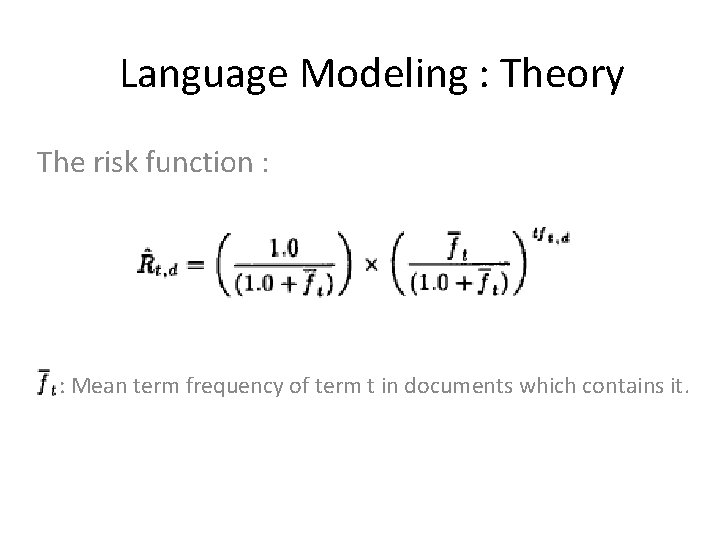

Language Modeling: Theory
The risk function:
• ft: mean term frequency of term t in documents that contain it
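The risk formula itself is not recoverable from the slide text. As a hedged reconstruction, the form below is the geometric-distribution risk from Ponte and Croft (1998), the paper these slides follow; it is an assumption that the slides use it unchanged. In that paper the mean term frequency is scaled to the document's length, f_t = p_avg(t) · dl_d.

```latex
\hat{R}_{t,d} = \frac{1}{1 + f_t} \left( \frac{f_t}{1 + f_t} \right)^{tf(t, d)}
```

The risk shrinks as tf(t, d) grows, so frequently observed terms rely more on the document's own maximum likelihood estimate and less on the collection-wide average.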

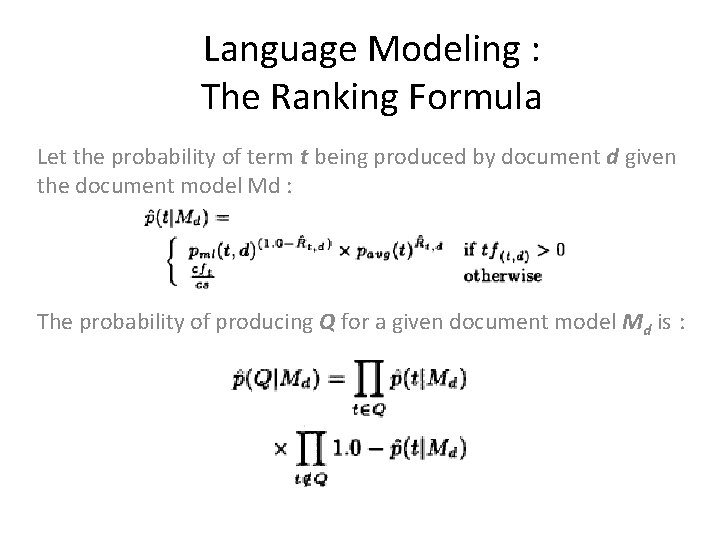

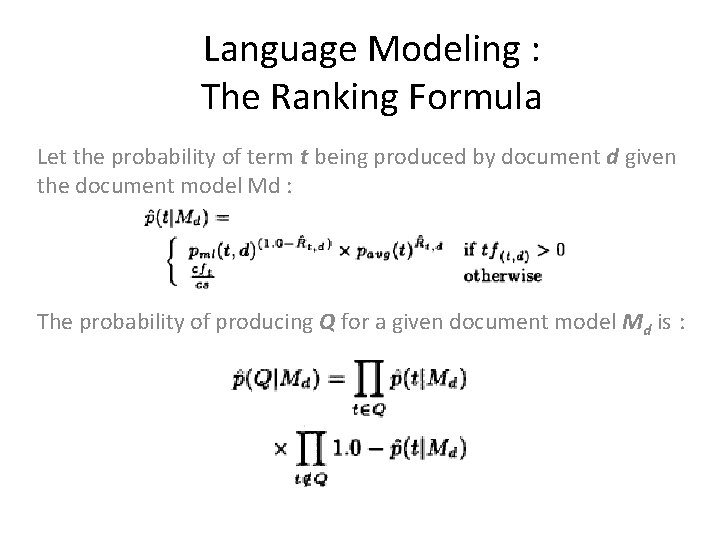

Language Modeling: The Ranking Formula
• P(t | Md): the probability of term t being produced by document d, given the document model Md
• P(Q | Md): the probability of producing the query Q for a given document model Md
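The ranking formulas did not survive as text, so the sketch below assumes the forms given in Ponte and Croft (1998): for a term present in the document, P(t|Md) = p_ml^(1-R) · p_avg^R with R the geometric risk; for an absent term, P(t|Md) = cf_t / cs, where cs is the total number of tokens in the collection; and P(Q|Md) is the product of P(t|Md) over query terms times the product of (1 - P(t|Md)) over the remaining vocabulary. Function names and the toy documents are hypothetical, and scores are computed in log space to avoid underflow.

```python
import math
from collections import Counter


def build_stats(docs):
    """docs: dict doc_id -> list of terms. Returns (tf, dl, cf, cs, p_avg)."""
    tf = {d: Counter(terms) for d, terms in docs.items()}
    dl = {d: len(terms) for d, terms in docs.items()}
    cf = Counter()
    for counts in tf.values():
        cf.update(counts)
    cs = sum(dl.values())
    p_avg = {}
    for t in cf:
        containing = [d for d in docs if tf[d][t] > 0]
        # Mean of the per-document ML estimates over documents containing t.
        p_avg[t] = sum(tf[d][t] / dl[d] for d in containing) / len(containing)
    return tf, dl, cf, cs, p_avg


def term_prob(t, d, stats):
    """P(t | Md) under the assumed Ponte-Croft estimate."""
    tf, dl, cf, cs, p_avg = stats
    if tf[d][t] == 0:
        return cf[t] / cs  # back off to the collection frequency
    p_ml = tf[d][t] / dl[d]
    f_t = p_avg[t] * dl[d]
    risk = (1.0 / (1.0 + f_t)) * (f_t / (1.0 + f_t)) ** tf[d][t]
    return p_ml ** (1.0 - risk) * p_avg[t] ** risk


def query_log_prob(query, d, stats):
    """log P(Q | Md); -inf if a query term never occurs in the collection."""
    cf = stats[2]
    q = set(query)
    if any(cf[t] == 0 for t in q):
        return float("-inf")
    score = 0.0
    for t in cf:
        # Clamp to keep the logarithms finite in degenerate cases.
        p = min(max(term_prob(t, d, stats), 1e-12), 1.0 - 1e-12)
        score += math.log(p) if t in q else math.log(1.0 - p)
    return score


def rank(query, docs):
    stats = build_stats(docs)
    return sorted(docs, key=lambda d: query_log_prob(query, d, stats), reverse=True)


if __name__ == "__main__":
    docs = {
        "d1": ["ankara", "haber", "ankara"],
        "d2": ["istanbul", "haber"],
        "d3": ["spor", "haber", "spor", "mac"],
    }
    print(rank(["ankara"], docs))
```

In the toy collection only d1 contains the query term, so it is ranked first; the other documents receive the collection-level fallback probability for that term.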

Language Modeling: Inverted Index Implementation
An example inverted index for m terms:
t1 → cft, [d1: P(t1|Md1)] … [dn: P(t1|Mdn)]
t2 → cft, [d3: P(t2|Md3)] … [dp: P(t2|Mdp)]
…
tm → cft, [d4: P(tm|Md4)] … [dk: P(tm|Mdk)]

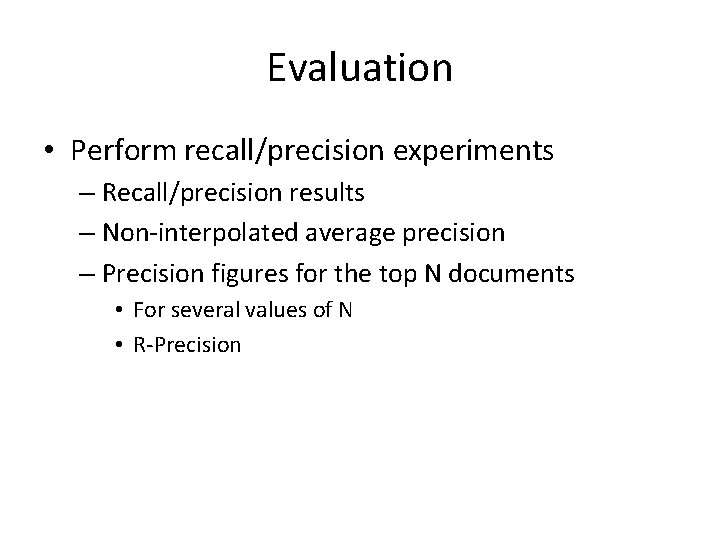

Evaluation
• Perform recall/precision experiments
– Recall/precision results
– Non-interpolated average precision
– Precision figures for the top N documents, for several values of N
– R-Precision
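The measures listed above have standard definitions, sketched here for a single query. The function names are illustrative; `ranked` is the system's ordered result list and `relevant` is the set of documents judged relevant.

```python
def precision_at(ranked, relevant, n):
    """Fraction of the top n results that are relevant."""
    return sum(1 for d in ranked[:n] if d in relevant) / n


def r_precision(ranked, relevant):
    """Precision at rank R, where R is the number of relevant documents."""
    r = len(relevant)
    return precision_at(ranked, relevant, r) if r else 0.0


def average_precision(ranked, relevant):
    """Non-interpolated average precision: mean of the precision values
    at each rank where a relevant document is retrieved."""
    hits, total = 0, 0.0
    for rank, d in enumerate(ranked, start=1):
        if d in relevant:
            hits += 1
            total += hits / rank
    return total / len(relevant) if relevant else 0.0


if __name__ == "__main__":
    ranked = ["d1", "d2", "d3", "d4"]
    relevant = {"d1", "d3"}
    print(precision_at(ranked, relevant, 2))    # 0.5
    print(r_precision(ranked, relevant))        # 0.5
    print(average_precision(ranked, relevant))
```

In the example, relevant documents appear at ranks 1 and 3, so the average precision is (1/1 + 2/3) / 2.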

Other Metrics
• Compare the baseline (tf.idf) results to our language model
– Percent change between the two result sets
– I/D
  • I: count of queries for which performance improved
  • D: count of queries for which performance changed
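These comparison figures can be computed from per-query scores of the two methods. The sketch below assumes the scores are aligned lists (one value per query, for example average precision); the function names are hypothetical.

```python
def percent_change(baseline, new):
    """Relative change of the new score over the baseline, in percent."""
    return 100.0 * (new - baseline) / baseline


def improved_over_different(baseline_scores, new_scores):
    """Return (I, D): I counts queries where the new method scored higher,
    D counts queries where the two methods scored differently."""
    pairs = list(zip(baseline_scores, new_scores))
    improved = sum(1 for b, n in pairs if n > b)
    different = sum(1 for b, n in pairs if n != b)
    return improved, different


if __name__ == "__main__":
    tfidf = [0.10, 0.20, 0.30]
    lm = [0.20, 0.20, 0.10]
    print(improved_over_different(tfidf, lm))  # (1, 2)
```

Reporting I against D rather than against the total number of queries leaves ties out of the comparison.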

Document Repository
Document source:
• Milliyet (2001-2005)
• XML file (1.1 GB)
• 408304 news articles
• Ready for indexing
• XML Schema ... (FIXME)

Summary
• Indexing and stemming
– Zemberek 2 lemmatiser
– Java environment
• Data
– News archive from 2001 to 2005, from Milliyet
• Evaluation
– Methods will be compared according to their recall/precision performance

Conclusion
• First language modeling approach to Turkish IR
• The LM approach
– Non-parametric
– Fewer assumptions
– Relaxed
• Expected to perform better than the baseline tf.idf method

Thanks for listening … Any Questions?
