IE 590 D Applied Ergonomics Lecture 26 Ergonomics


IE 590 D Applied Ergonomics, Lecture 26 – Ergonomics in Manufacturing & Automation. Vincent G. Duffy, Associate Prof., School of IE and ABE. Thursday, April 13, 2006

IE 486 Work Analysis & Design II. Instructor: Vincent Duffy, Ph.D., Associate Prof. Lecture 19 – Automation in Work Design. Thursday, April 5, 2007

Automation (Chapter 16 in Wickens et al.)

Initial example: China Airlines. The automation compensated for a failing engine so well at first that the pilots were unaware of the problem.

- Danger of automation: on long flights, the flight crew can be lulled into 'complacency' and become slow to recognize the need to respond.
- Automation is sometimes trusted more than it should be.

QOTD

- Address the similarities between the discussions of automation and decision making.
- Why automate?
- Briefly describe the different levels of automation.
- What are some potential problems in automated systems?
- Briefly discuss automation in complex systems.
- How should one manage failure of automation?
- What is the goal of automation?
- What are the characteristics of human-centered automation?

Similarities between the discussions of automation and decision making

- Note that the discussions of automation say a lot about decision making.
- Essentially, automation affects how decisions are made, so you see references to Rasmussen and discussions of how automation can SUPPORT perception, cognition, and control.

Automation & decision making

- Perception: one challenge is to ensure appropriate, reliable 'sensitivity' so as not to cause a 'loss of trust' (e.g., ground proximity warning systems in aircraft).
- Cognition: decision making is considered more complex than perception, and so is the development of automated devices that replace or assist in functions related to intelligent reasoning.
- Control: sensing position and trend (e.g., cruise control in driving controls the pedal, but steering is still manual).

Why automate?

1. The task is either dangerous or impossible for humans to perform.
2. Humans may carry out the task poorly; examples include fatigue on long flights, and humans are not good at 'vigilant monitoring'.
3. To aid the human in doing things; recall that human memory is vulnerable to forgetting.
4. Because the technology is available and inexpensive; e.g., a computer operator may be less expensive over time.

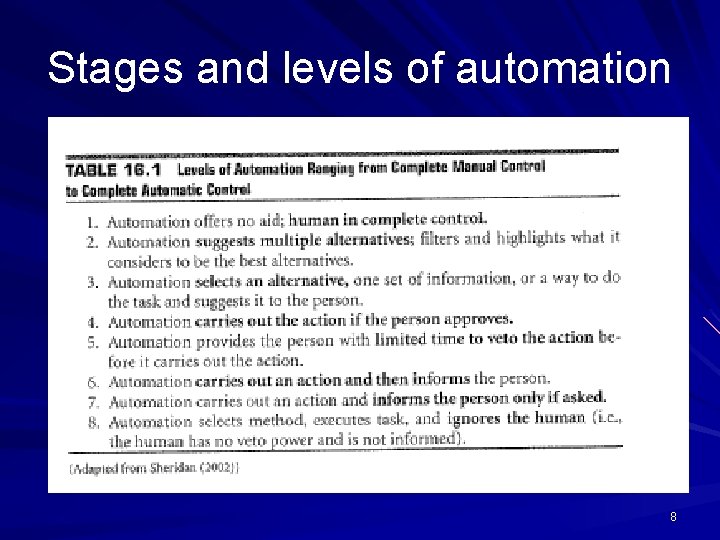

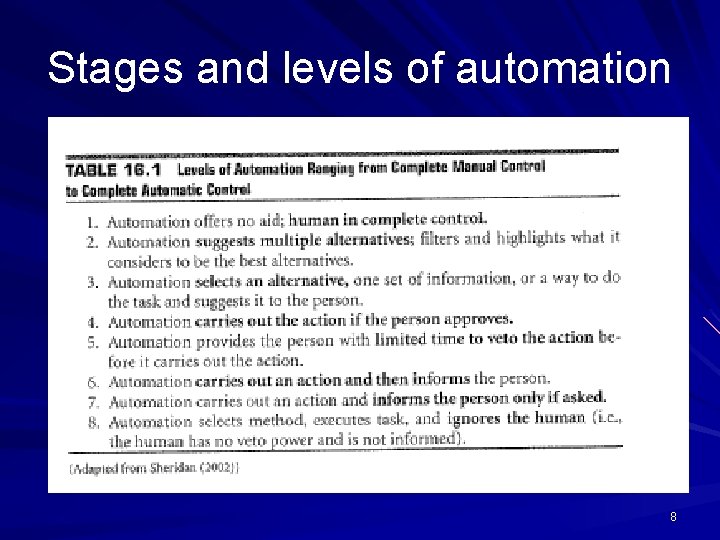

Stages and levels of automation

Problems in automation

1. It may be unreliable: key parts may fail.
2. The human operator may make an error in use. Example: Korean Air (shot down over Soviet airspace); the pilots misprogrammed the navigation system, and the automation was 'dumb and dutiful'.
3. It does what it should but APPEARS to be in error (e.g., a sudden shift in airspeed or altitude); the operator may then make an inappropriate intervention.

Automation in complex systems

- Combinations of lags and complexity, plus hazardous processes, increase the need for automation in some cases, particularly for when things go wrong (e.g., Three Mile Island, Bhopal, Chernobyl).
- It is important to keep the operator involved while monitoring the automation, so that situation awareness stays high.
- How can this be done when there is little to do? This is an important challenge for the designer.

Managing failure

1. Ensure safety.
2. Minimize damage to the plant.
3. Identify the nature of the fault (diagnosis).

- Too much concern for one goal or another may cause additional problems, so the manager needs to balance them.
- Keep in mind that good 'displays' can help in diagnosis: information should be presented in a way that is consistent with the operator's 'mental model' of the plant.

Integrated displays may help the manager understand the relationships between key plant variables.

- Example: in a nuclear accident, the display showed that a signal had been given to close a valve. However, the valve was stuck open, so the decision makers misunderstood the current status of system 'cooling'.

Goal

- Keep the human in touch with the automated process.
- Give the human more authority over the automation.
- Choose a level of involvement for the human that leads to maximum performance and creates maximum satisfaction.

These may not all be compatible, especially when we consider that a human who has been 'out of the loop' for some time may lose skills!

Human-centered automation

Wickens suggests:

- Keep the human informed with good displays.
- Keep the human trained as long as there is a possibility that they may have to revert to manual operation.
- Keep the operator in the loop: maintain situation awareness without giving back the gains of automation (i.e., without reverting totally to manual control).
- Make the automation flexible and adaptive. Flexible: operators can choose whether to use the automated control (one operator may choose it, another may not). Adaptive: the level of automation may change with conditions.

Trust

- Mistrust: inefficiency or ineffectiveness can lead to mistrust (a lack of trust).
- Too much trust: good automation can lead to poor detection; rare signal events may be missed, and detection gets poorer over time.
- Poor situation awareness: in many cases, SA is better when operators monitor a system than when they perform the task manually. However, they are less likely to intervene if they lack confidence or don't think they understand how the system really works.
- Skill loss: skills can degrade over time without participation in decisions.