FSI Next Gen Stack Requirements Moiz Kohari VP

- Slides: 15

FSI Next Gen Stack Requirements
- Moiz Kohari, VP Engineering
- Patrick Mullaney, Architect
- April 21, 2008

What FSI wants
- Zero latency stack
- Deterministic latency in the presence of max load
- Scaling of throughput as the number of cores increases
- Sockets-like API
- Memory based API, direct access
- Identity integration at the device level
- Enterprise Support

© Novell Inc, Confidential & Proprietary

Bandwidth Issues and possible solution

Memory Bandwidth Issue
- I/O bandwidth is increasing at a faster rate than memory bandwidth (PCI Express "Lane" vs I/O device slots)

The I/O Bottleneck
- Each application manages its own connected sockets
- An I/O operation (send or recv) results in a context switch and a memcpy

Possible Solution
- Create an I/O processing engine that manages "connected" sockets on applications' behalf
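The "Possible Solution" bullet can be pictured as a single component that owns every connected socket while applications only exchange messages with it. The following is a minimal sketch of that ownership model, not the engine proposed in the slides: the `IOEngine` class, its methods, and the use of Python queues are all assumptions made for illustration. A real engine would presumably hand data over through shared memory rather than queues.

```python
import queue
import selectors
import socket
import threading


class IOEngine:
    """Hypothetical engine: one thread owns all attached sockets."""

    def __init__(self):
        self._sel = selectors.DefaultSelector()
        self._cmds = queue.Queue()  # commands submitted by applications
        # Socket pair used only to wake the selector when a command arrives.
        self._wake_r, self._wake_w = socket.socketpair()
        self._sel.register(self._wake_r, selectors.EVENT_READ, None)
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def attach(self, sock):
        """Hand a connected socket to the engine; returns its receive queue."""
        rx = queue.Queue()
        self._submit(("attach", sock, rx))
        return rx

    def send(self, sock, payload):
        """Ask the engine to transmit payload on an attached socket."""
        self._submit(("send", sock, payload))

    def stop(self):
        self._submit(("stop", None, None))
        self._thread.join()
        self._sel.close()
        self._wake_r.close()
        self._wake_w.close()

    def _submit(self, cmd):
        self._cmds.put(cmd)
        self._wake_w.send(b"\0")  # wake the engine loop

    def _run(self):
        while True:
            for key, _ in self._sel.select():
                if key.data is None:  # wake-up socket: process commands
                    self._wake_r.recv(4096)
                    if not self._drain_commands():
                        return
                else:  # an application socket is readable
                    data = key.fileobj.recv(65536)
                    if not data:  # peer closed the connection
                        self._sel.unregister(key.fileobj)
                    key.data.put(data)

    def _drain_commands(self):
        """Run queued commands; returns False once a stop was requested."""
        while True:
            try:
                op, sock, arg = self._cmds.get_nowait()
            except queue.Empty:
                return True
            if op == "attach":
                self._sel.register(sock, selectors.EVENT_READ, arg)
            elif op == "send":
                sock.sendall(arg)
            elif op == "stop":
                return False
```

An application would call `engine.attach(sock)` once, then use `engine.send(sock, data)` and read incoming data from the returned queue instead of issuing its own `send` and `recv` calls.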

Memory Based Messaging Scheme
- Certain applications may want to leverage memory based messaging
- Requires access to atomic operations over the fabric, for high performance synchronization schemes (provided under OFA)
- Access to RDMA over the fabric (provided under OFA)
- Standard APIs for distributed application development
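To make "memory based messaging" concrete, here is a single-host analogy: a single-producer, single-consumer ring laid out in a flat buffer, where sending a message is a memory write rather than a socket call. This is only a sketch under stated assumptions. The `Ring` class, the slot layout, and the sizes are hypothetical, and plain Python stores stand in for the atomic operations and RDMA access that the slide says the fabric would provide.

```python
import struct
from multiprocessing import shared_memory

HEADER = struct.Struct("<QQ")  # head (next slot to read), tail (next slot to write)
LEN = struct.Struct("<I")      # per-slot length prefix
SLOT_SIZE = 64                 # hypothetical fixed slot size in bytes
SLOTS = 8                      # hypothetical ring depth


class Ring:
    """Single-producer/single-consumer message ring over a writable buffer."""

    def __init__(self, buf):
        self.buf = buf

    def push(self, payload):
        """Producer side: returns False if the ring is full or payload too big."""
        head, tail = HEADER.unpack_from(self.buf, 0)
        if tail - head == SLOTS or len(payload) > SLOT_SIZE - LEN.size:
            return False
        off = HEADER.size + (tail % SLOTS) * SLOT_SIZE
        LEN.pack_into(self.buf, off, len(payload))
        start = off + LEN.size
        self.buf[start:start + len(payload)] = payload
        # Publish the slot only after its payload is written.
        struct.pack_into("<Q", self.buf, 8, tail + 1)
        return True

    def pop(self):
        """Consumer side: returns the next message, or None if the ring is empty."""
        head, tail = HEADER.unpack_from(self.buf, 0)
        if head == tail:
            return None
        off = HEADER.size + (head % SLOTS) * SLOT_SIZE
        (n,) = LEN.unpack_from(self.buf, off)
        start = off + LEN.size
        payload = bytes(self.buf[start:start + n])
        struct.pack_into("<Q", self.buf, 0, head + 1)  # release the slot
        return payload


def create_shared_ring():
    """Back a ring with a shared memory segment that another process can map."""
    shm = shared_memory.SharedMemory(create=True, size=HEADER.size + SLOTS * SLOT_SIZE)
    return shm, Ring(shm.buf)
```

Because only the producer writes `tail` and only the consumer writes `head`, this layout needs no lock on one host. Over a fabric, the same index updates are where atomic operations would be required.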

What we currently see

Native Stack
- latency due to scheduling (-rt addresses this)
- latency due to lock contention (huge)
- context switches (app->softirq->driver)
- excess queuing (qdisc)
- latency due to protocol overhead
- latency due to feature bloat

OFED
- verbs API provides excellent latency
- ULPs introduce latency and COS contention

What we currently see

Native Linux Stack
- Benchmarks that depend on UDP throughput have shown only marginal scalability as the number of processors increases

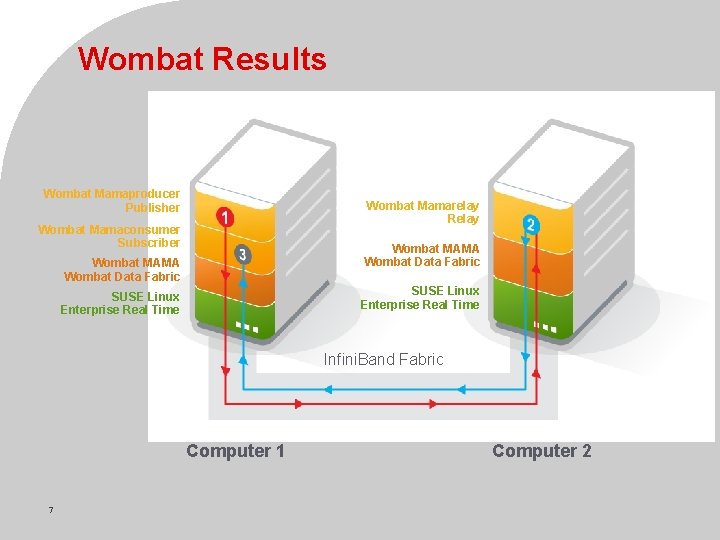

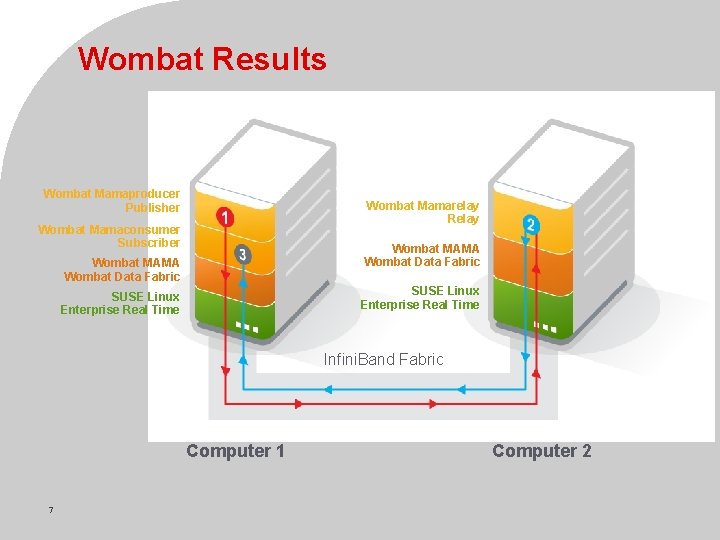

Wombat Results

[Diagram: test setup spanning Computer 1 and Computer 2. Components shown: Wombat Mamaproducer (publisher), Wombat Mamarelay (relay), Wombat Mamaconsumer (subscriber), Wombat MAMA, Wombat Data Fabric, SUSE Linux Enterprise Real Time, InfiniBand fabric.]

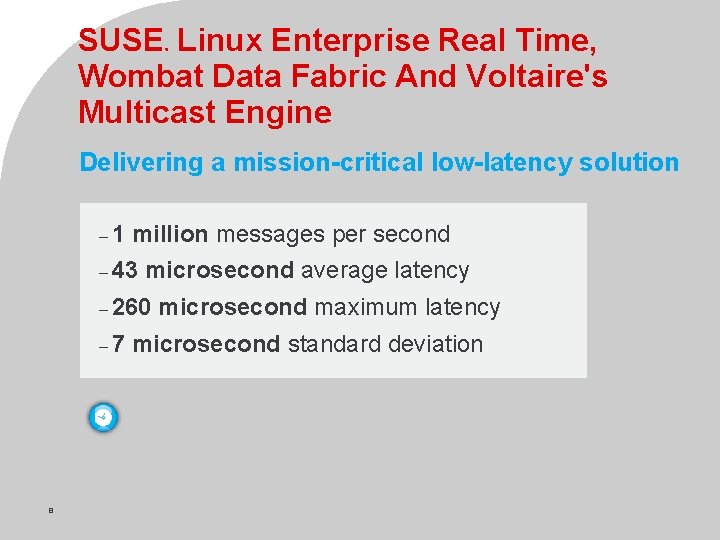

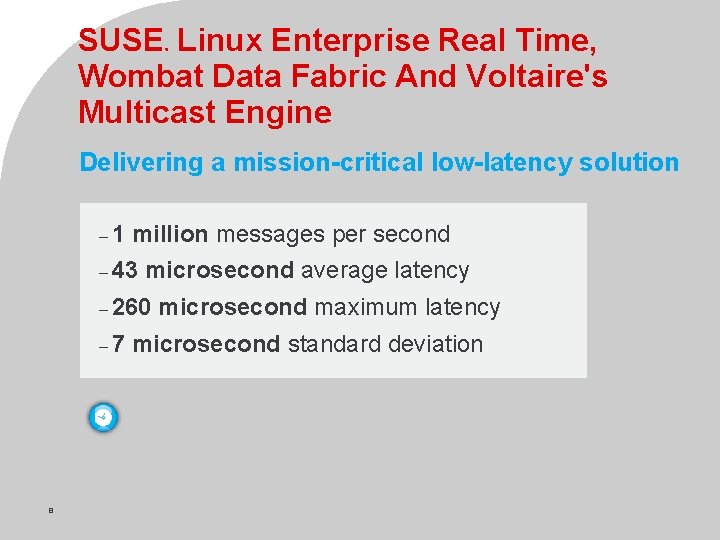

SUSE Linux Enterprise Real Time, Wombat Data Fabric and Voltaire's Multicast Engine ®

Delivering a mission-critical low-latency solution
- 1 million messages per second
- 43 microsecond average latency
- 260 microsecond maximum latency
- 7 microsecond standard deviation
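The three latency figures above (average, maximum, standard deviation) are summary statistics over per-message latency samples. As a minimal sketch of how such a summary could be computed, the hypothetical helper below takes a list of latencies in microseconds. The sample values in the usage note are made up for illustration and are not the Wombat measurements.

```python
import statistics


def summarize_latency(samples_us):
    """Summarize per-message latencies given in microseconds."""
    return {
        "count": len(samples_us),
        "mean_us": statistics.fmean(samples_us),
        "max_us": max(samples_us),
        # Population standard deviation over all recorded samples.
        "stdev_us": statistics.pstdev(samples_us),
    }
```

For example, `summarize_latency([10, 20, 30, 40])` reports a mean of 25 and a maximum of 40. Reporting the maximum alongside the mean matters for the determinism goal: a low average can hide rare, very slow messages.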

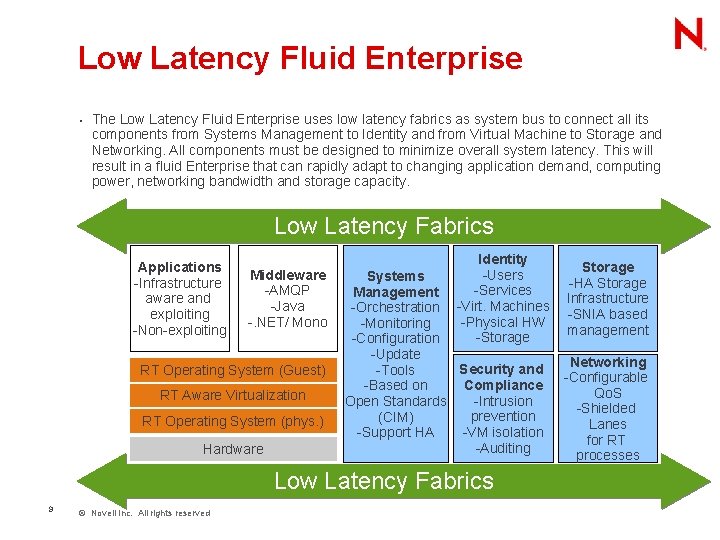

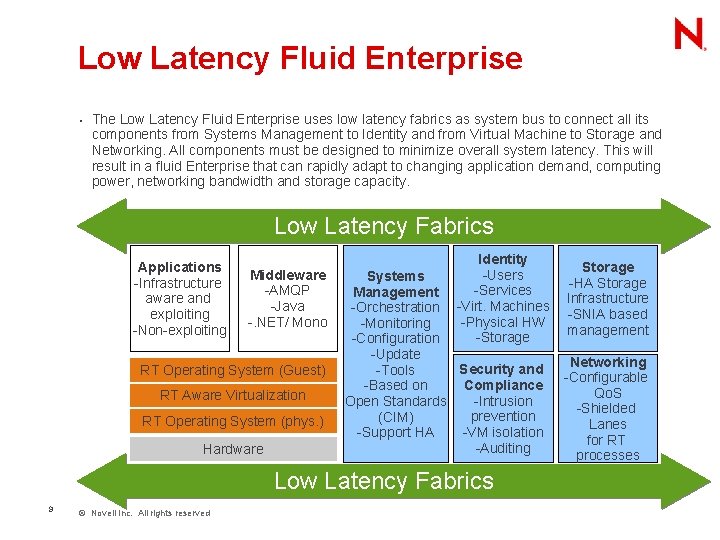

Low Latency Fluid Enterprise
- The Low Latency Fluid Enterprise uses low latency fabrics as a system bus to connect all its components, from Systems Management to Identity and from Virtual Machine to Storage and Networking. All components must be designed to minimize overall system latency. This will result in a fluid Enterprise that can rapidly adapt to changing application demand, computing power, networking bandwidth and storage capacity.

[Diagram: components connected by Low Latency Fabrics]
- Applications: infrastructure aware and exploiting; non-exploiting
- Middleware: AMQP, Java, .NET/Mono
- RT Operating System (Guest)
- RT Aware Virtualization
- RT Operating System (phys.)
- Hardware
- Identity: users, services, virtual machines, physical HW, storage
- Systems Management: orchestration, monitoring, configuration, update tools; based on open standards (CIM); support HA
- Security and Compliance: intrusion prevention, VM isolation, auditing
- Storage: HA storage infrastructure, SNIA based management
- Networking: configurable QoS, shielded lanes for RT processes

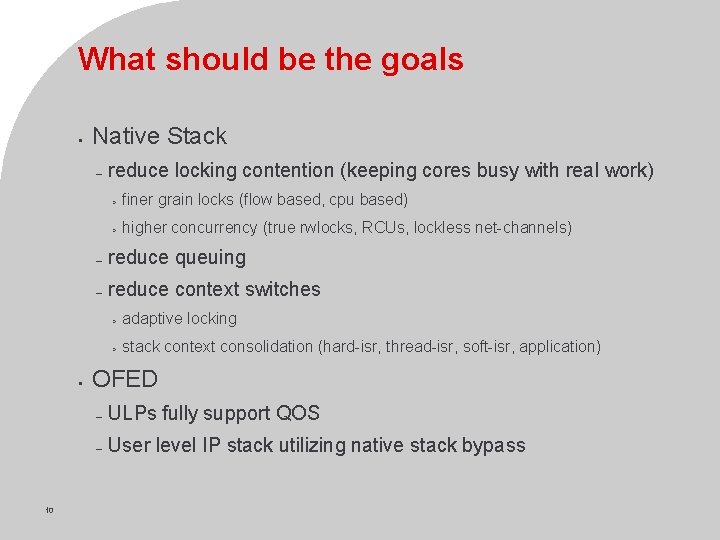

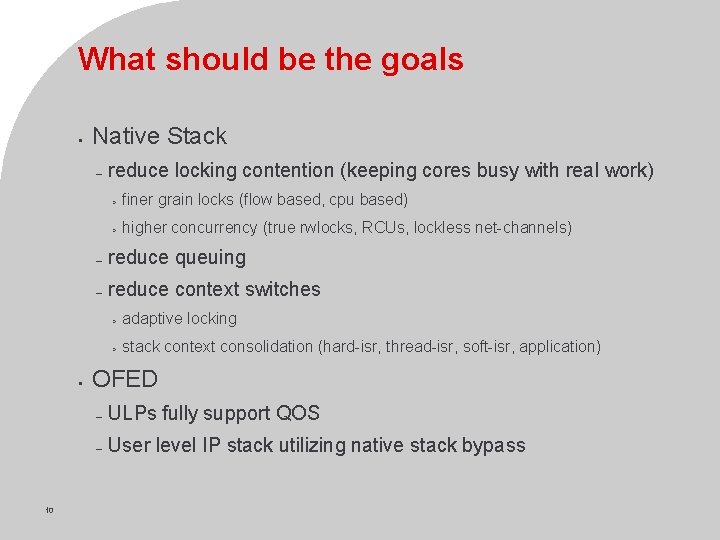

What should be the goals

Native Stack
- reduce locking contention (keeping cores busy with real work)
  - finer grain locks (flow based, cpu based)
  - higher concurrency (true rwlocks, RCUs, lockless net-channels)
- reduce queuing
- reduce context switches
  - adaptive locking
  - stack context consolidation (hard-isr, thread-isr, soft-isr, application)

OFED
- ULPs fully support QOS
- User level IP stack utilizing native stack bypass

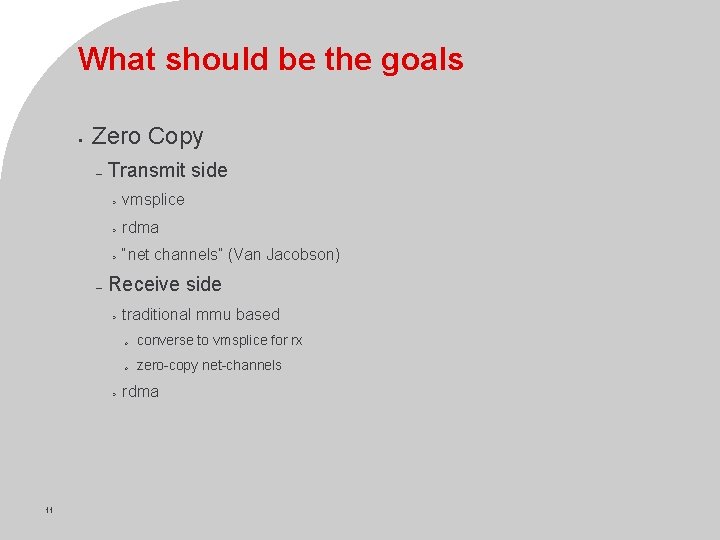

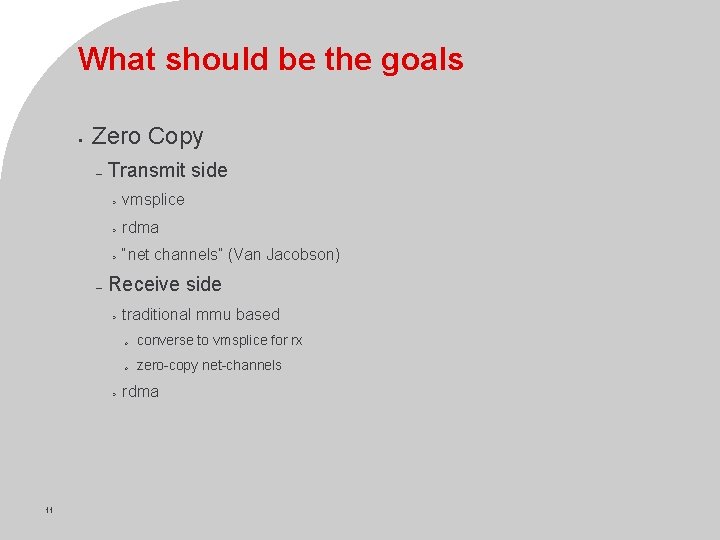

What should be the goals

Zero Copy
- Transmit side
  - vmsplice
  - rdma
  - "net channels" (Van Jacobson)
- Receive side
  - traditional mmu based
    - converse to vmsplice for rx
    - zero-copy net-channels
  - rdma
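As a rough illustration of the transmit-side goal, the sketch below sends a file over a stream socket without staging the data in a user-space buffer. Note the substitution: Python's standard library does not expose `vmsplice`, so this uses `socket.sendfile`, which relies on the kernel's `sendfile` path where available and silently falls back to ordinary `send` otherwise. The receive helper uses `recv_into` on a preallocated buffer, which avoids extra allocations but still incurs one kernel-to-user copy, so it is not zero copy. The function names and the `demo` helper are hypothetical.

```python
import socket
import tempfile


def send_file_zero_copy(sock, fileobj):
    """Send a whole binary file over a connected stream socket.

    Returns the number of bytes sent. Where os.sendfile is usable, the kernel
    moves the data to the socket without a user-space buffer.
    """
    return sock.sendfile(fileobj)


def recv_exact_into(sock, buf):
    """Fill a preallocated buffer from the socket; returns bytes received."""
    view = memoryview(buf)
    got = 0
    while got < len(view):
        n = sock.recv_into(view[got:])
        if n == 0:  # peer closed before the buffer was full
            break
        got += n
    return got


def demo():
    """Round-trip a small payload through a local socket pair."""
    payload = b"tick" * 1024
    a, b = socket.socketpair()
    with tempfile.TemporaryFile() as f:
        f.write(payload)
        f.flush()
        sent = send_file_zero_copy(a, f)
    buf = bytearray(len(payload))
    received = recv_exact_into(b, buf)
    a.close()
    b.close()
    return sent, received, bytes(buf) == payload
```

The receive side is the harder half, which is consistent with the slide listing a "converse to vmsplice for rx" as a goal rather than an existing facility.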

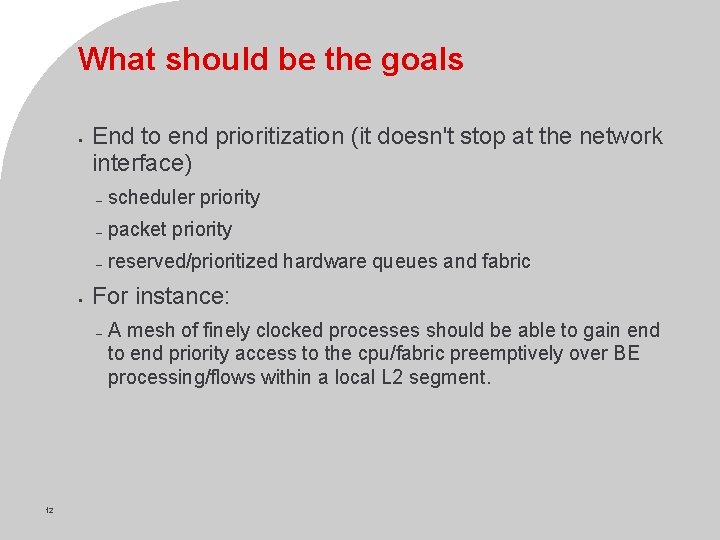

What should be the goals
- End to end prioritization (it doesn't stop at the network interface)
  - scheduler priority
  - packet priority
  - reserved/prioritized hardware queues and fabric
- For instance:
  - A mesh of finely clocked processes should be able to gain end to end priority access to the cpu/fabric, preempting best-effort (BE) processing/flows within a local L2 segment.
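Two of the priority layers listed above can already be requested from user space on Linux, which helps show what "end to end" would have to tie together. The sketch below is an assumption-laden illustration, not the mechanism the slides propose: the helper names and the chosen values are hypothetical. It sets a packet priority on a UDP socket (`SO_PRIORITY`, plus `IP_TOS` with the low-delay value `0x10`) and tries to move the calling process into the `SCHED_FIFO` real-time scheduling class, which normally requires elevated privileges.

```python
import os
import socket

# Fall back to the Linux constant if this Python build does not export it.
SO_PRIORITY = getattr(socket, "SO_PRIORITY", 12)


def make_priority_socket(sk_priority=6, tos=0x10):
    """Create a UDP socket whose packets carry a raised priority.

    sk_priority: per-socket priority that queuing disciplines can use to
                 classify packets (0 to 6 needs no special capability).
    tos:         IP type-of-service byte; 0x10 is the low-delay value.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    # Set TOS first: on Linux, setting IP_TOS also adjusts the socket priority.
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, tos)
    sock.setsockopt(socket.SOL_SOCKET, SO_PRIORITY, sk_priority)
    return sock


def try_realtime(priority=10):
    """Try to put the calling process into SCHED_FIFO; returns True on success."""
    try:
        os.sched_setscheduler(0, os.SCHED_FIFO, os.sched_param(priority))
        return True
    except OSError:  # typically PermissionError when not privileged
        return False
```

Neither call reserves hardware queues or fabric lanes, so on its own this covers only the scheduler and packet layers of the list.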

Join the Lizard Blizzard!

Questions?

Unpublished Work of Novell, Inc. All Rights Reserved.

This work is an unpublished work and contains confidential, proprietary, and trade secret information of Novell, Inc. Access to this work is restricted to Novell employees who have a need to know to perform tasks within the scope of their assignments. No part of this work may be practiced, performed, copied, distributed, revised, modified, translated, abridged, condensed, expanded, collected, or adapted without the prior written consent of Novell, Inc. Any use or exploitation of this work without authorization could subject the perpetrator to criminal and civil liability.

General Disclaimer

This document is not to be construed as a promise by any participating company to develop, deliver, or market a product. Novell, Inc., makes no representations or warranties with respect to the contents of this document, and specifically disclaims any express or implied warranties of merchantability or fitness for any particular purpose. Further, Novell, Inc., reserves the right to revise this document and to make changes to its content, at any time, without obligation to notify any person or entity of such revisions or changes. All Novell marks referenced in this presentation are trademarks or registered trademarks of Novell, Inc. in the United States and other countries. All third-party trademarks are the property of their respective owners.