Fine-Grained Failover Using Connection Migration, Alex C. Snoeren

- Slides: 20

Fine-Grained Failover Using Connection Migration Alex C. Snoeren, David G. Andersen, Hari Balakrishnan MIT Laboratory for Computer Science

The Problem: Servers fail. More often than users want to know… (diagram labels: Client, Content server)

Solution: Server Redundancy Use a healthy one at all times.

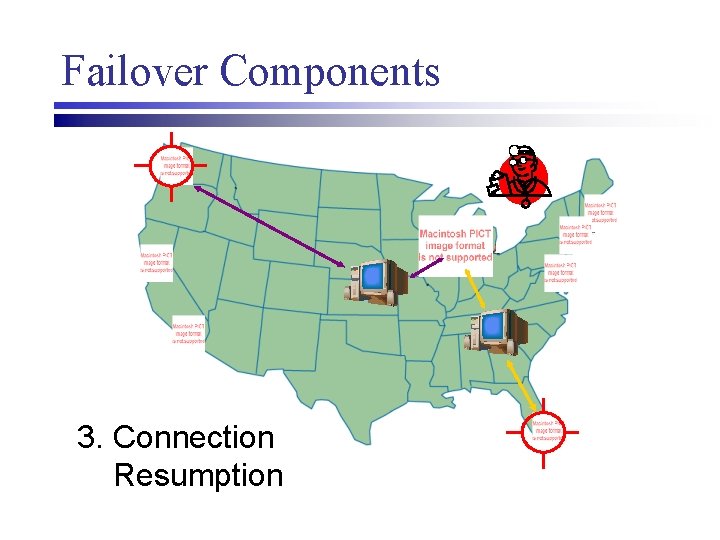

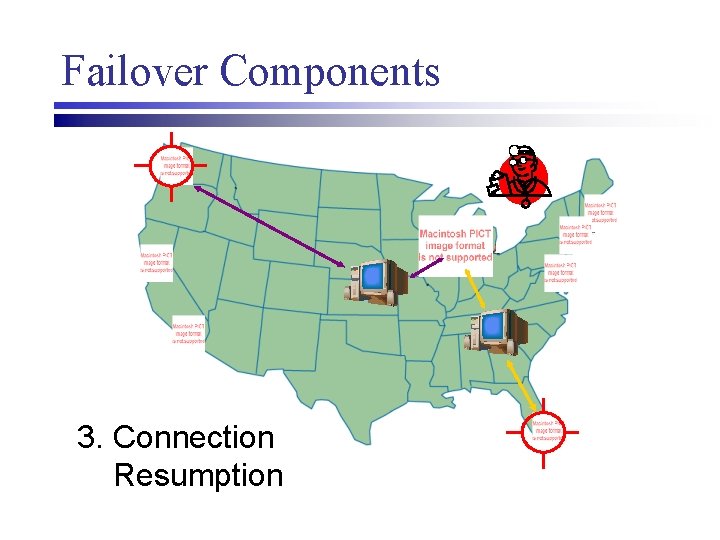

Failover Components 1. Health Monitoring 2. Server Selection 3. Connection Resumption
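The three components can be read as one control loop. The sketch below is only an illustration of that reading: the function `fail_over` and its callback parameters `is_healthy` and `resume` are hypothetical names, not part of the Migrate software.

```python
from typing import Callable, Iterable, Optional


def fail_over(
    current: str,
    candidates: Iterable[str],
    is_healthy: Callable[[str], bool],
    resume: Callable[[str], None],
) -> Optional[str]:
    """Return the server that should carry the connection from now on."""
    # 1. Health monitoring: nothing to do while the current server is fine.
    if is_healthy(current):
        return current
    # 2. Server selection: pick the first healthy alternative.
    for server in candidates:
        if server != current and is_healthy(server):
            # 3. Connection resumption: hand the connection to that server.
            resume(server)
            return server
    # No healthy replica is available.
    return None
```

Called as `fail_over("A", ["A", "B", "C"], is_healthy, resume)`, the loop leaves the connection on A while A is healthy and otherwise resumes it on the first healthy alternative.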

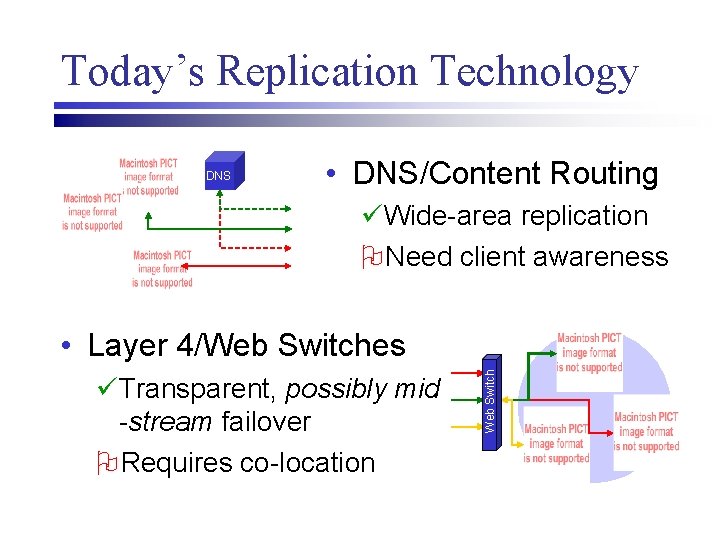

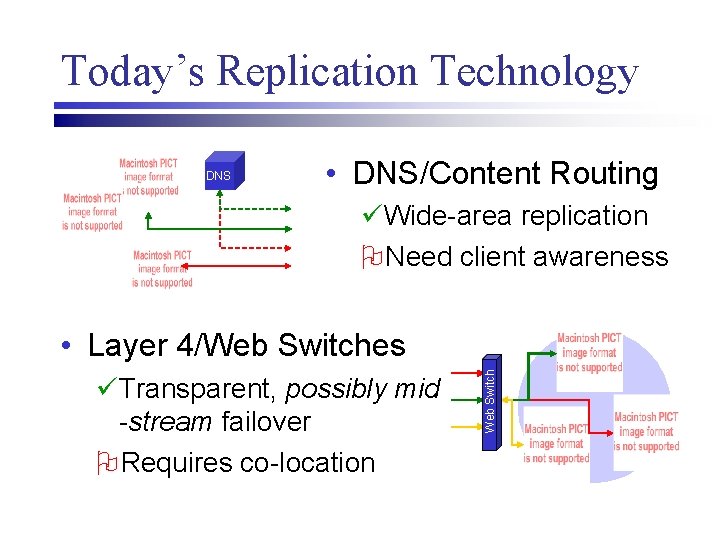

Today’s Replication Technology • DNS/Content Routing § ✓ Wide-area replication § ✗ Need client awareness • Layer 4/Web Switches § ✓ Transparent, possibly mid-stream failover § ✗ Requires co-location

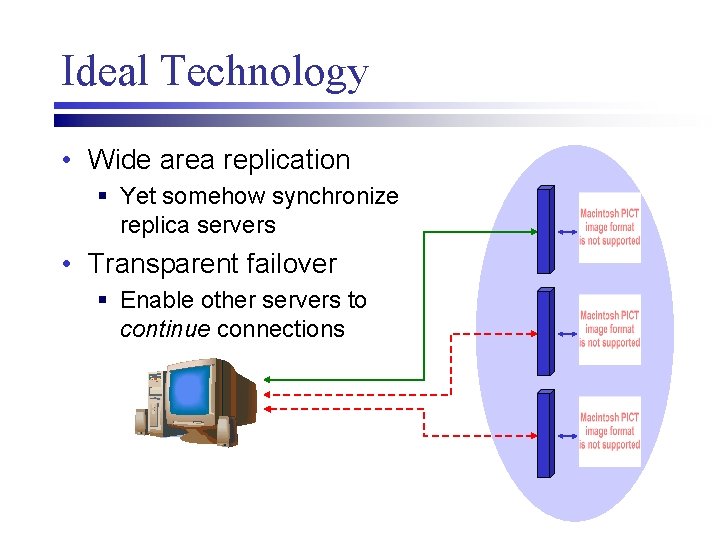

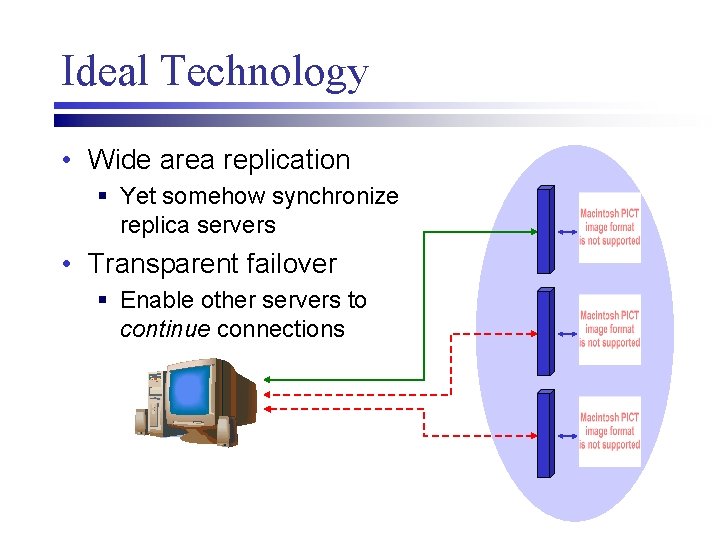

Ideal Technology • Wide area replication § Yet somehow synchronize replica servers • Transparent failover § Enable other servers to continue connections

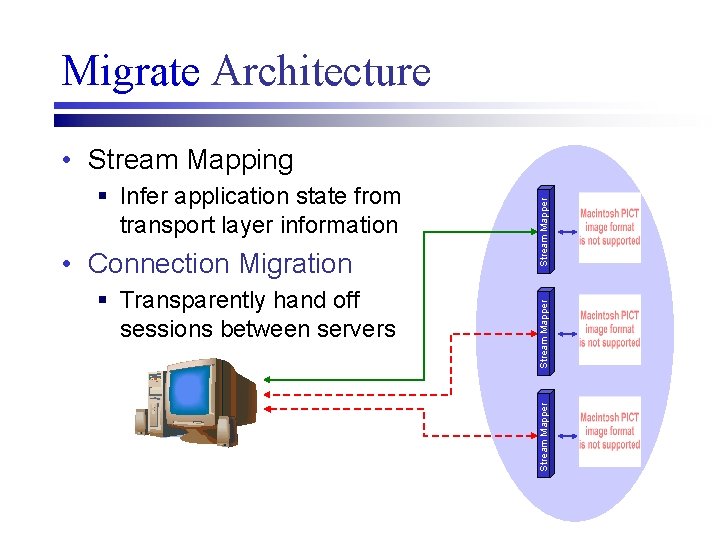

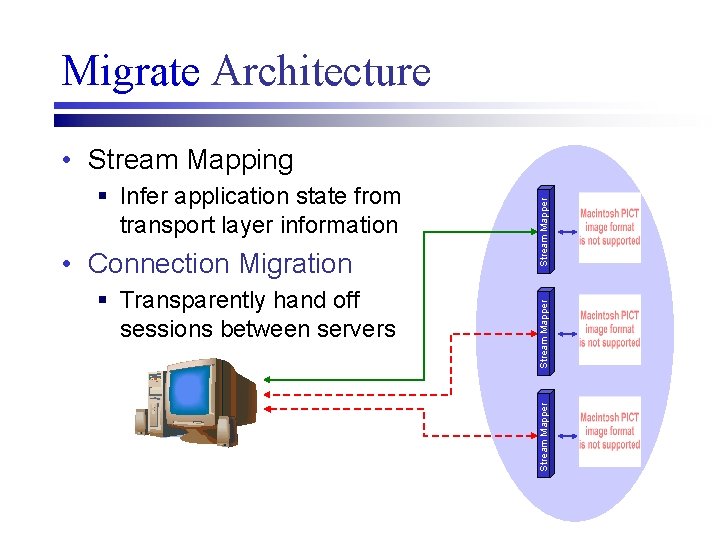

Migrate Architecture • Connection Migration § Transparently hand off sessions between servers • Stream Mapping § Infer application state from transport layer information (diagram labels: Stream Mapper on each server)

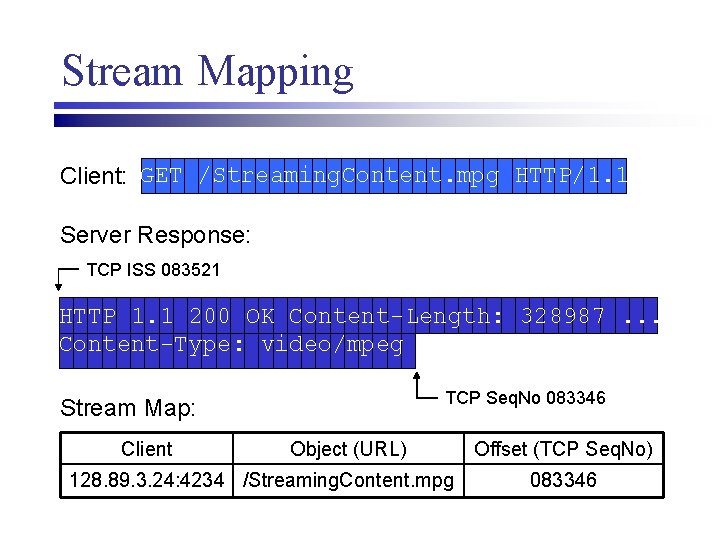

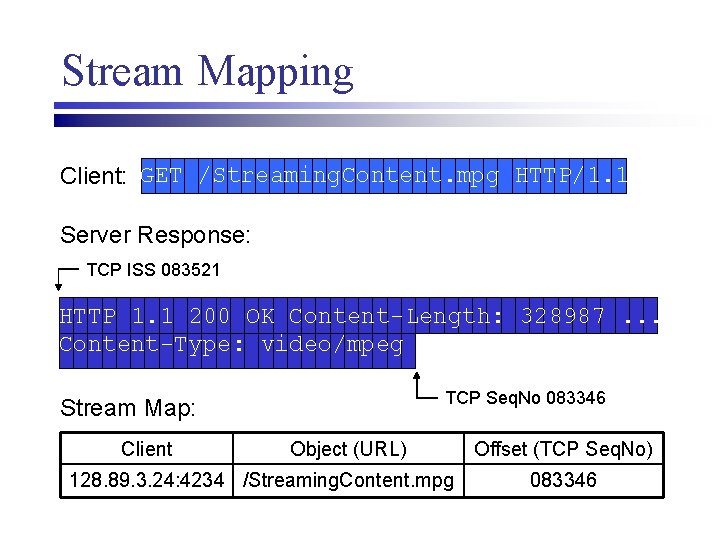

Stream Mapping • Client: GET /StreamingContent.mpg HTTP/1.1 • Server Response (TCP ISS 083521): HTTP/1.1 200 OK, Content-Length: 328987, . . ., Content-Type: video/mpeg (TCP Seq. No 083346) • Stream Map: Client 128.89.3.24:4234, Object (URL) /StreamingContent.mpg, Offset (TCP Seq. No) 083346
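A stream map entry like the one above lets a server turn a transport-layer sequence number back into a position within the requested object. The following is a minimal sketch of that arithmetic, not the Migrate implementation. The names `StreamMapEntry` and `object_offset` are hypothetical, and treating the recorded sequence number as the position of the first byte of the object body is an assumption.

```python
from dataclasses import dataclass

SEQ_MOD = 2 ** 32  # TCP sequence numbers wrap modulo 2^32


@dataclass(frozen=True)
class StreamMapEntry:
    client: str          # client endpoint, "address:port"
    url: str             # object the client requested
    body_start_seq: int  # assumed: sequence number of the first object byte


def object_offset(entry: StreamMapEntry, next_seq: int) -> int:
    """Number of object bytes that precede sequence number next_seq."""
    # Modular subtraction keeps the result correct across sequence wraparound.
    return (next_seq - entry.body_start_seq) % SEQ_MOD


# Values reuse the slide's example entry for illustration only.
entry = StreamMapEntry("128.89.3.24:4234", "/StreamingContent.mpg", 83346)
resume_at = object_offset(entry, 83346 + 1000)  # 1000 bytes into the object
```

Because only the starting sequence number is stored, the current offset never has to be exchanged between servers; it can be recomputed from the sequence number at which the connection resumes.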

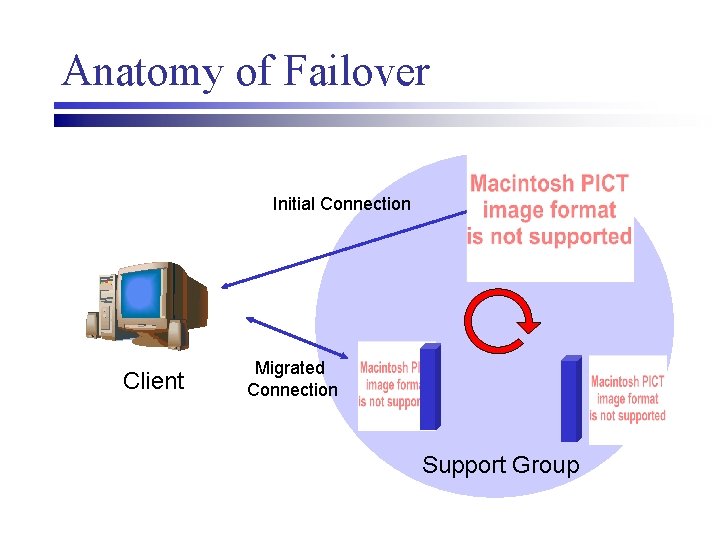

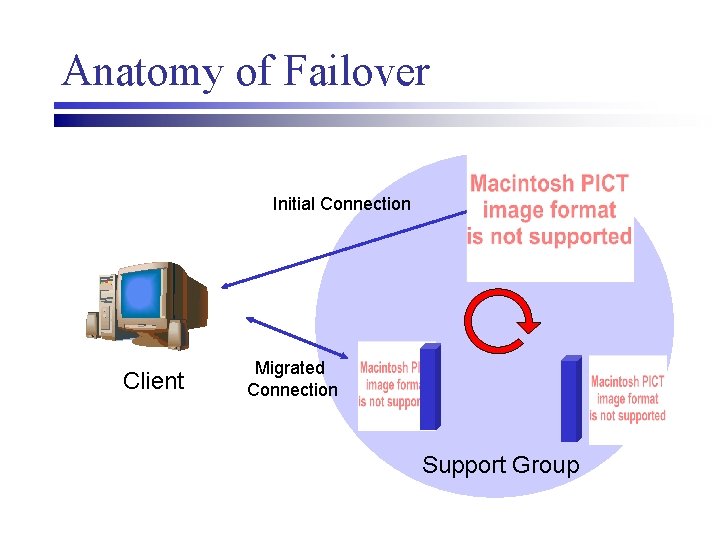

Anatomy of Failover (diagram labels: Client, Initial Connection, Migrated Connection, Support Group)

Support Groups • Set of partially mirrored servers § All servers able to provide same content § Can be topologically diverse • Synchronize on per-connection basis § Servers need not be complete mirrors § Connections from a failed server can be handled by a different support server § Connections may have distinct support groups

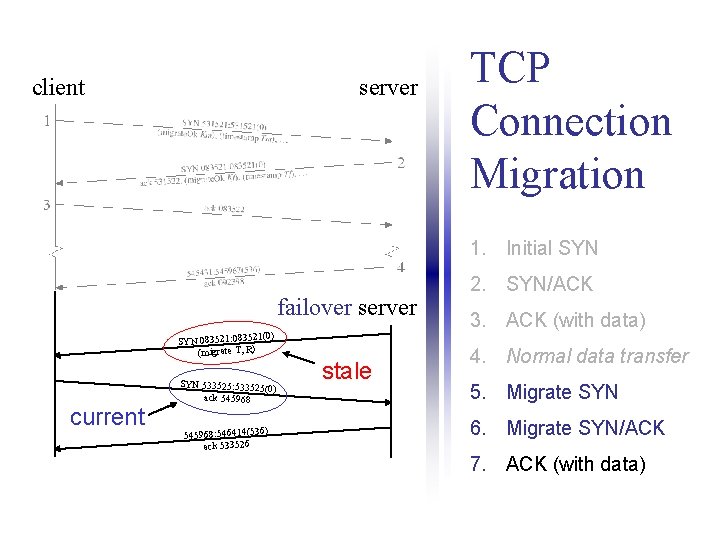

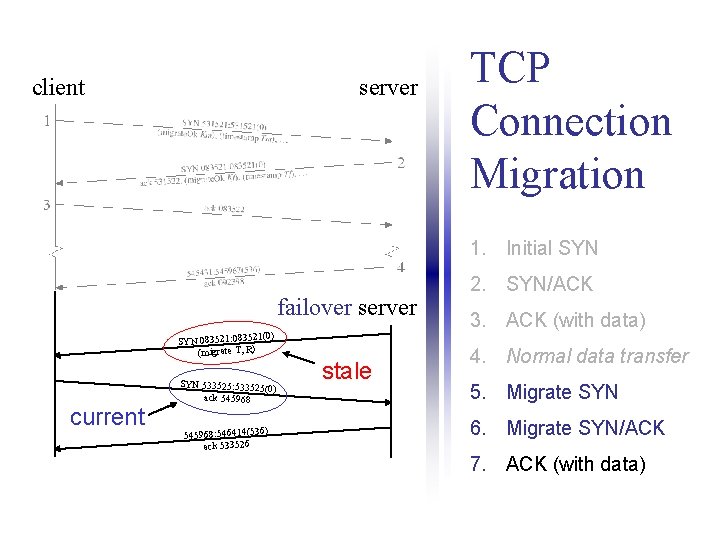

Soft State Synchronization • Synchronize within support groups § Periodic advertisements § Advertise client application object requests § Communicate initial transport layer state • Only initial state need be communicated § Current info inferred from transport layer § Clients will reject redundant migrates from stale support servers
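Soft state means that an entry persists only as long as advertisements keep refreshing it. The sketch below illustrates that idea under stated assumptions: the class names, the `lifetime` parameter, and the use of a single sequence number as the "initial transport layer state" are all hypothetical and chosen for brevity.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Advertisement:
    client: str       # client endpoint the connection belongs to
    url: str          # application object the client requested
    initial_seq: int  # initial transport-layer state (simplified)


class SoftStateTable:
    """One server's view of connections advertised by its support group."""

    def __init__(self, lifetime: float) -> None:
        self.lifetime = lifetime  # seconds an entry survives without refresh
        self._entries: Dict[str, Tuple[Advertisement, float]] = {}

    def receive(self, ad: Advertisement, now: float) -> None:
        # A periodic advertisement installs the entry or refreshes its timer.
        self._entries[ad.client] = (ad, now + self.lifetime)

    def lookup(self, client: str, now: float) -> Optional[Advertisement]:
        # Entries that were not refreshed in time are dropped silently.
        self._entries = {
            c: (ad, expiry)
            for c, (ad, expiry) in self._entries.items()
            if expiry > now
        }
        entry = self._entries.get(client)
        return entry[0] if entry else None
```

No explicit teardown message is needed in this scheme: when a server stops advertising a connection, its peers forget it once the lifetime elapses.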

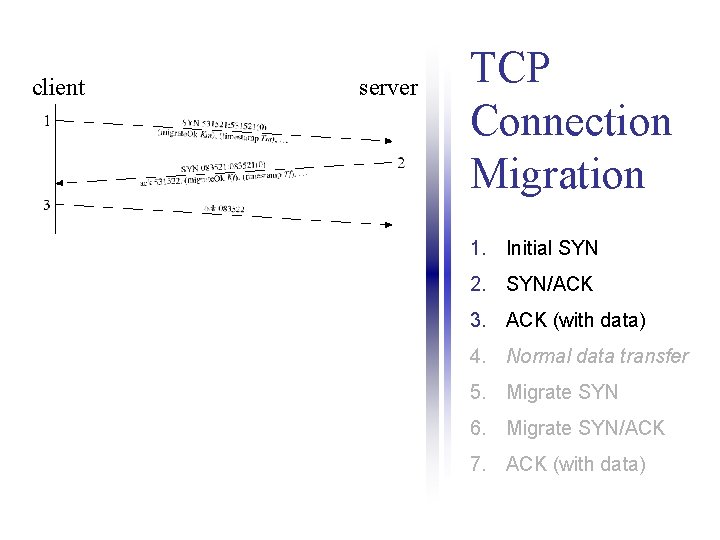

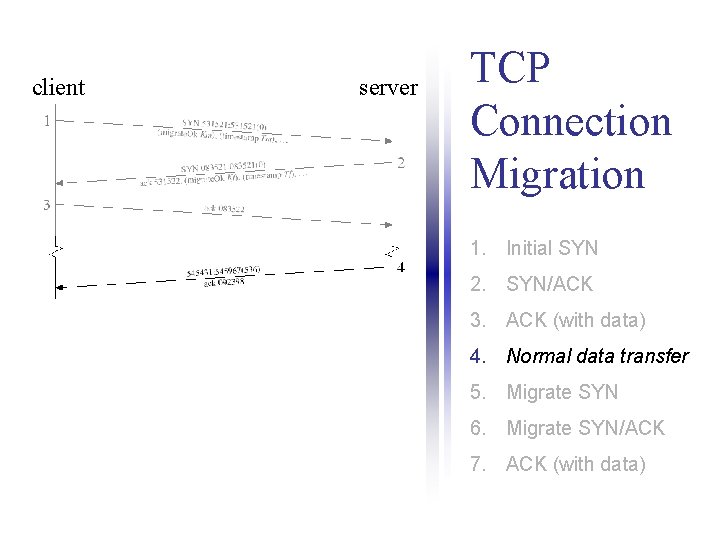

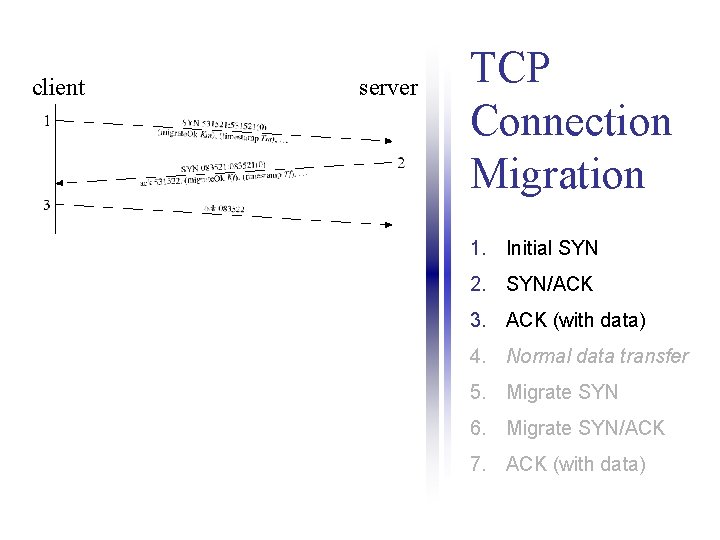

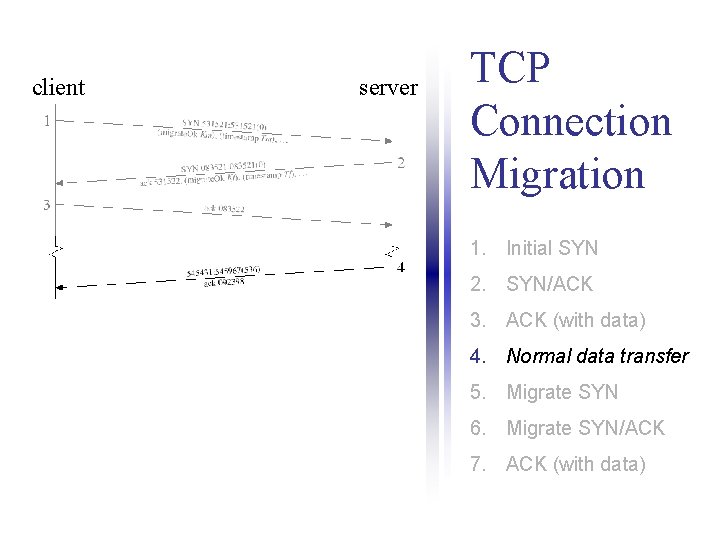

TCP Connection Migration (between client and server) 1. Initial SYN 2. SYN/ACK 3. ACK (with data) 4. Normal data transfer 5. Migrate SYN 6. Migrate SYN/ACK 7. ACK (with data)


TCP Connection Migration, packet trace between the client and the failover server (segment labels: current, stale): SYN 083521:083521(0) (migrate T, R); SYN 533525:533525(0) ack; 545968:546414(536) ack 533526
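The "(migrate T, R)" annotation and the current/stale labels suggest how a client can tell a fresh migrate request from a redundant one. The sketch below assumes that T is a token identifying the connection and R is a request number that increases with each migration; both interpretations, and the class `MigratableConnection`, are assumptions made for illustration rather than details stated on the slides.

```python
from typing import Optional


class MigratableConnection:
    """Client-side bookkeeping for one migratable connection (sketch)."""

    def __init__(self, token: int) -> None:
        self.token = token                # T: assumed connection identifier
        self.last_request = 0             # highest R accepted so far
        self.peer: Optional[str] = None   # server currently carrying the stream

    def handle_migrate_syn(self, token: int, request: int, sender: str) -> bool:
        """Accept a Migrate SYN only if it is for this connection and fresh."""
        if token != self.token:
            return False  # not a request for this connection
        if request <= self.last_request:
            return False  # stale or redundant request: reject
        self.last_request = request
        self.peer = sender
        return True
```

With this check, two support servers that react to the same failure cannot both take over the connection: whichever request arrives second carries a request number the client has already accepted.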

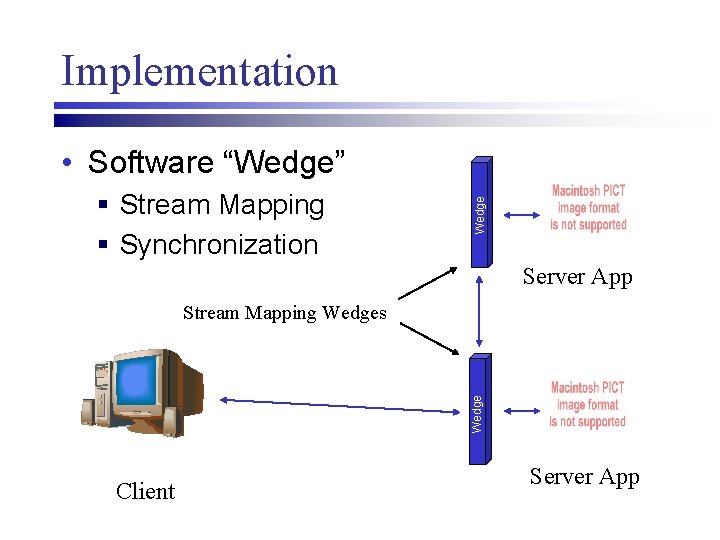

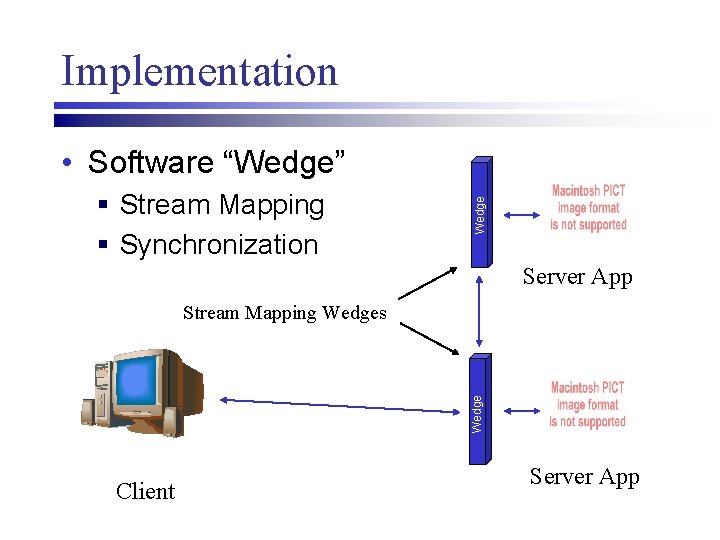

Implementation • Software “Wedge” § Stream Mapping § Synchronization (diagram labels: Client, Wedge, Server App)

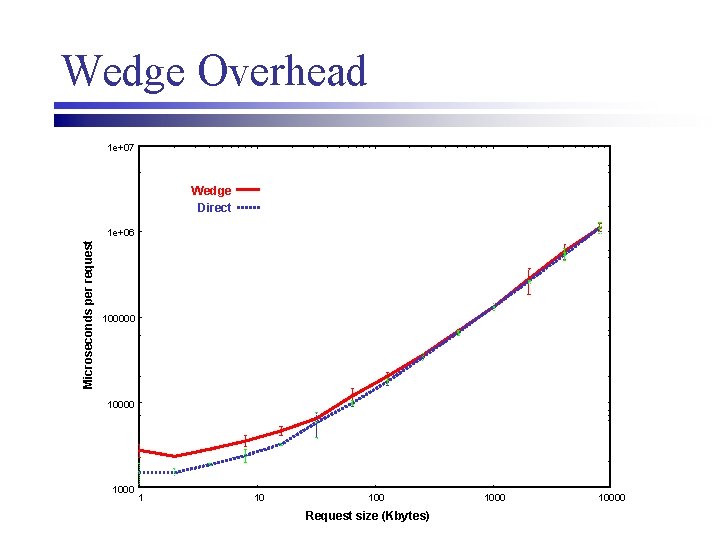

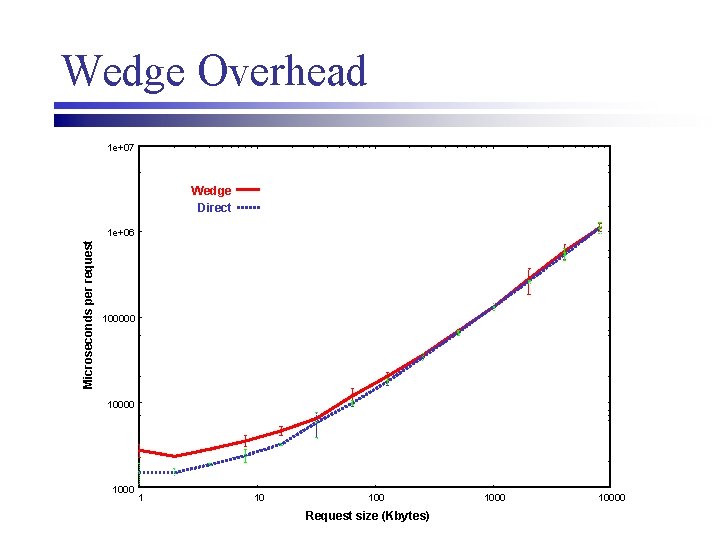

Wedge Overhead (plot: microseconds per request vs. request size in Kbytes; series: Wedge, Direct)

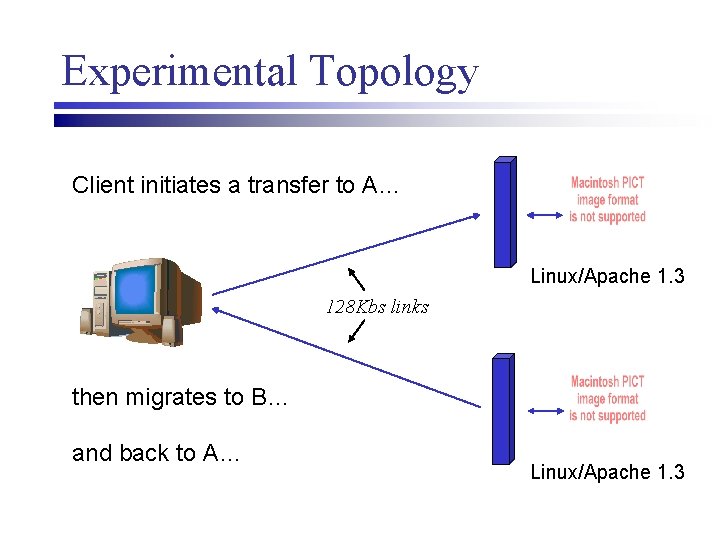

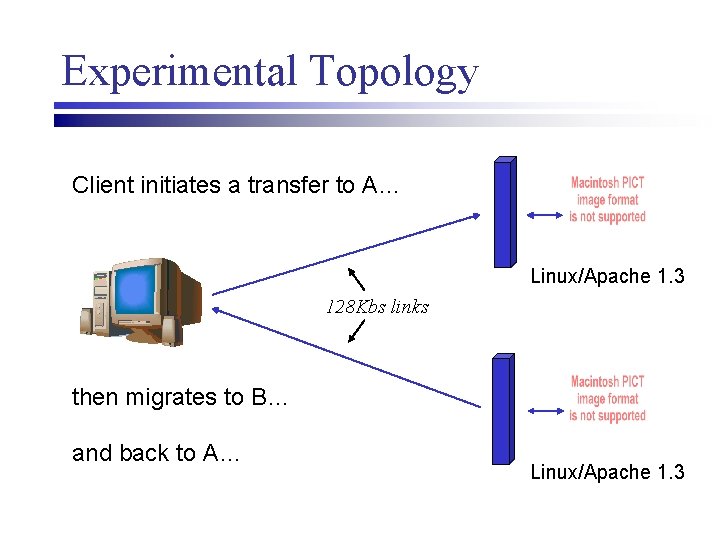

Experimental Topology: Client initiates a transfer to A… then migrates to B… and back to A… (diagram labels: two Linux/Apache 1.3 servers, 128 Kb/s links)

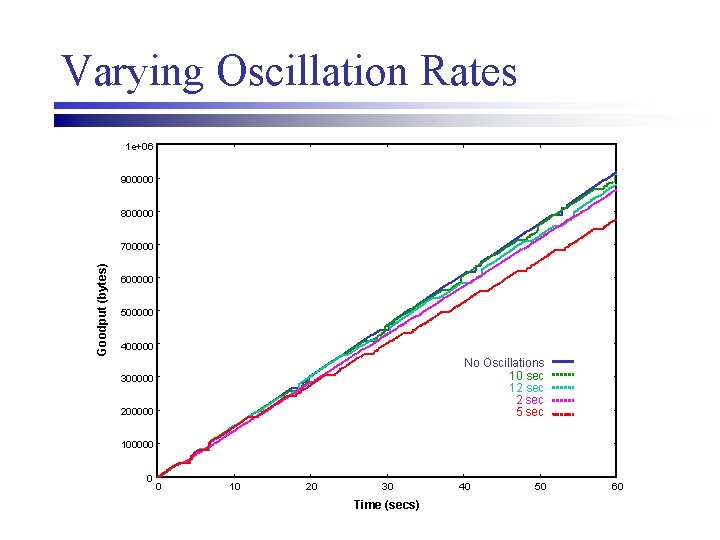

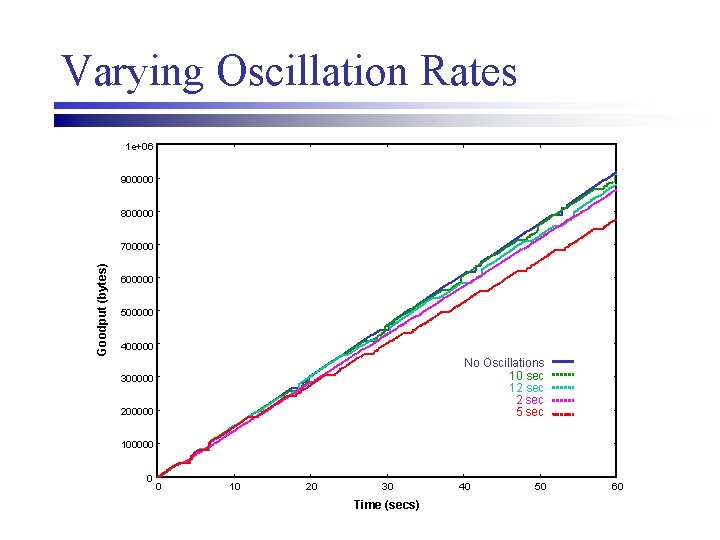

Varying Oscillation Rates (plot: goodput in bytes vs. time in seconds; series: No Oscillations, 10 sec, 12 sec, 5 sec)

Benefits & Limitations • Enable wide area server replication § Low server synchronization overhead § Infer current state from transport layer • Robust even under adverse loads § Health monitors can be overly reactive § Gracefully handle cascaded failures • Leverages connection migration § Requires modern transport stack

Networks and Mobile Systems. Software available on the web: http://nms.lcs.mit.edu/software/migrate