European Language Resources Association Evaluations and Language resources

- Slides: 16

European Language Resources Association
Evaluations and Language resources Distribution Agency

An Exit Strategy to Capitalise on the CLEF Evaluation Campaigns

Kevin McTait
ELRA/ELDA, 75013 Paris, France
mctait@elda.fr
http://www.elda.fr/

CLEF 2003 KM/1 ELRA/ELDA

Objectives of CLEF Workpackage 5:
1. Capitalise on the data collection efforts made during the CLEF campaigns
2. Enable reproduction of experimental conditions, i.e. make the same reusable training and test data available to other players in the R&D community for benchmarking purposes

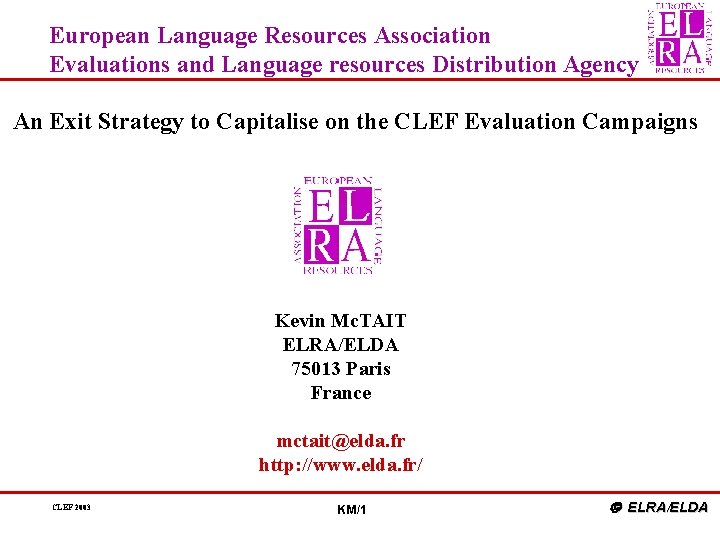

Implementation Plan

Implementation in 3 stages:
1. Simplify negotiation of distribution rights for the data collections
   • Secure rights for distribution post-CLEF
2. Produce an Evaluation Package
   • Data, scoring tools, methodologies, protocols and metrics on DVD/CD
   • Documentation, specifications, validation reports, quality stamp
   • Enable the CLIR R&D community to benchmark CLIR systems (invaluable)
   • Fix costing arrangements (distribution costs etc.)
3. Exploit ELRA/ELDA's distribution and promotion procedures
   • ELRA catalogue
   • Long-term availability and a wide audience (all LE areas, even outside CLIR)
   • Communication: website, newsletter, members' news, conferences (LREC, Lang. Tech, ACL etc.)

The task is similar to LR distribution (the raison d'être of ELRA/ELDA): ELRA/ELDA acts as a clearing house for HLT evaluation and evaluation resources.

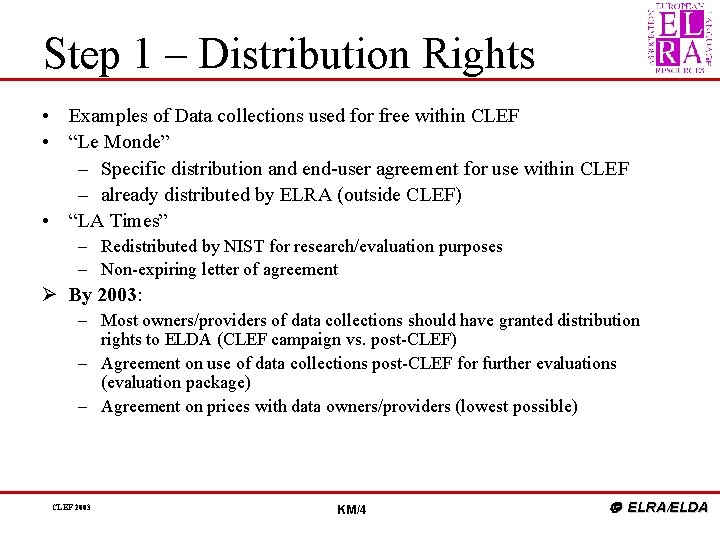

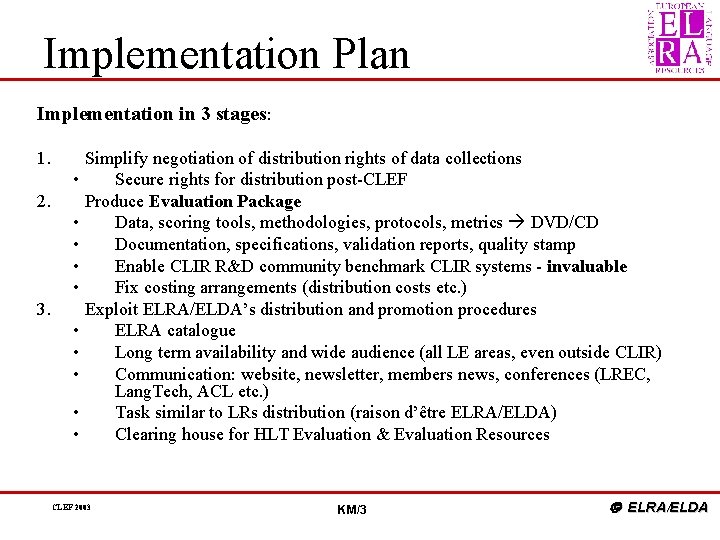

Step 1 – Distribution Rights
• Examples of data collections used free of charge within CLEF:
  – "Le Monde": specific distribution and end-user agreement for use within CLEF; already distributed by ELRA (outside CLEF)
  – "LA Times": redistributed by NIST for research/evaluation purposes; non-expiring letter of agreement
• By 2003:
  – Most owners/providers of data collections should have granted distribution rights to ELDA (CLEF campaign vs. post-CLEF)
  – Agreement on the use of data collections post-CLEF for further evaluations (evaluation package)
  – Agreement on prices with data owners/providers (lowest possible)

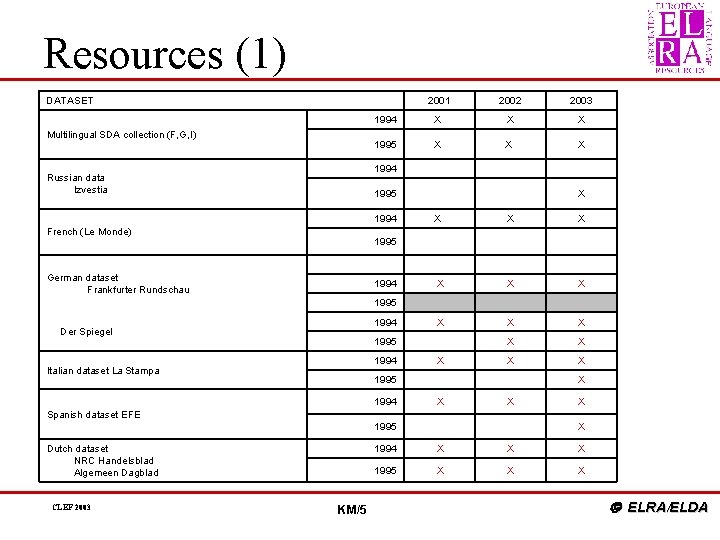

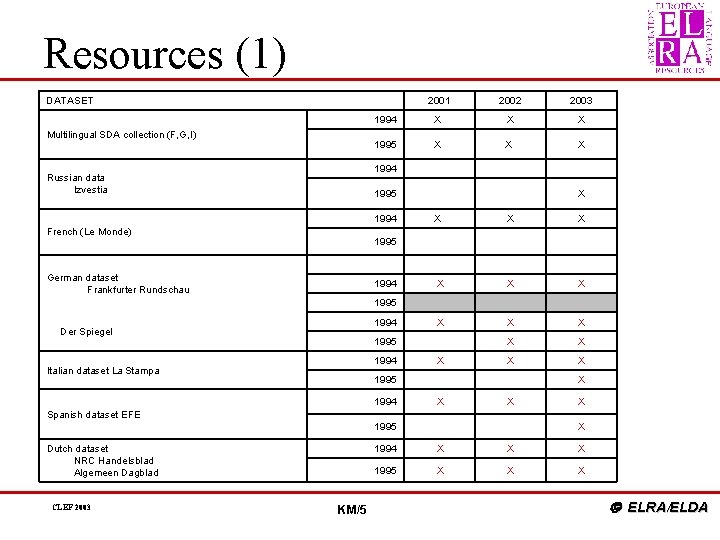

Resources (1)

Datasets (columns: collection year; use in CLEF 2001, 2002, 2003):
• Multilingual SDA collection (French, German, Italian)
• Russian dataset: Izvestia
• French dataset: Le Monde
• German dataset: Frankfurter Rundschau, Der Spiegel
• Italian dataset: La Stampa
• Spanish dataset: EFE
• Dutch dataset: NRC Handelsblad, Algemeen Dagblad

Year/campaign marks: 1994 X X X · 1995 X X X · 1994 · 1995 X · 1994 X X X · 1995 · 1994 X X X · 1995 X X · 1994 X X X · 1995 X · 1994 X X X · 1995 X X X

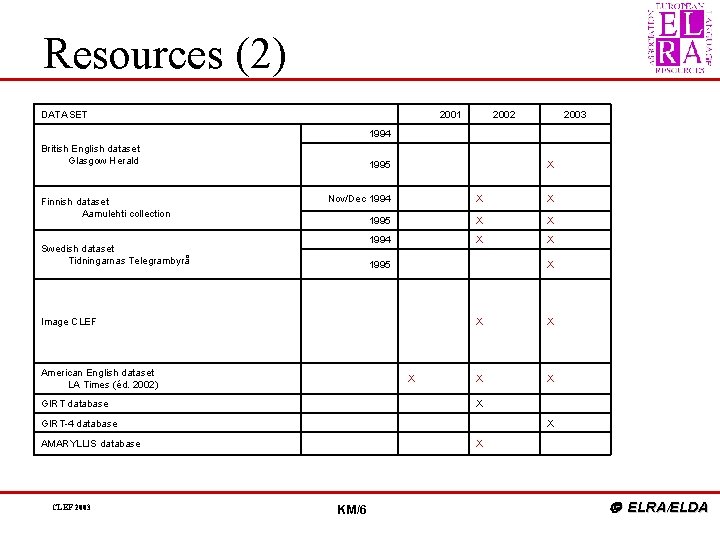

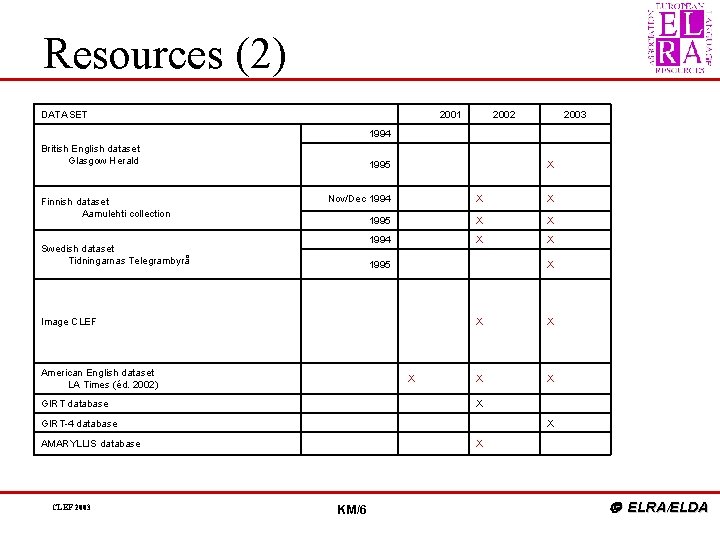

Resources (2)

Datasets (columns: collection year; use in CLEF 2001, 2002, 2003):
• Swedish dataset: Tidningarnas Telegrambyrå
• ImageCLEF
• British English dataset: Glasgow Herald
• Finnish dataset: Aamulehti collection
• American English dataset: LA Times (2002 edition)
• GIRT database
• GIRT-4 database
• AMARYLLIS database

Year/campaign marks: 1995 X · Nov/Dec 1994 X X · 1995 X X · 1994 X X · 1995 X X X · 1994 · LA Times X X X · GIRT X · GIRT-4 X · AMARYLLIS X

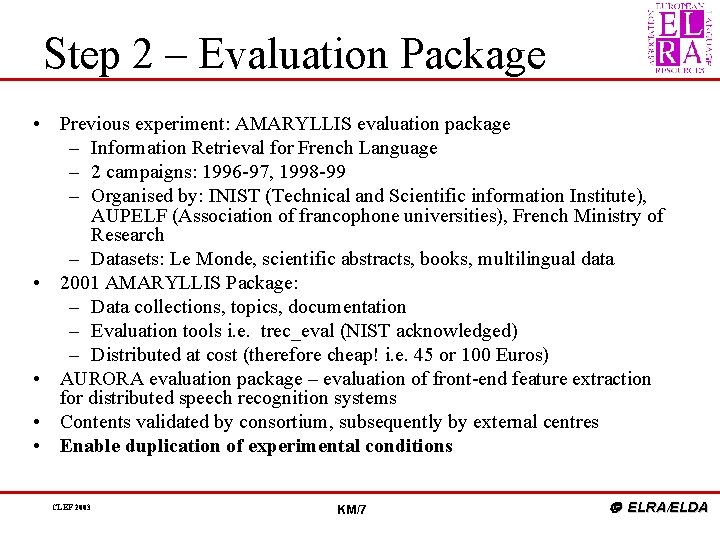

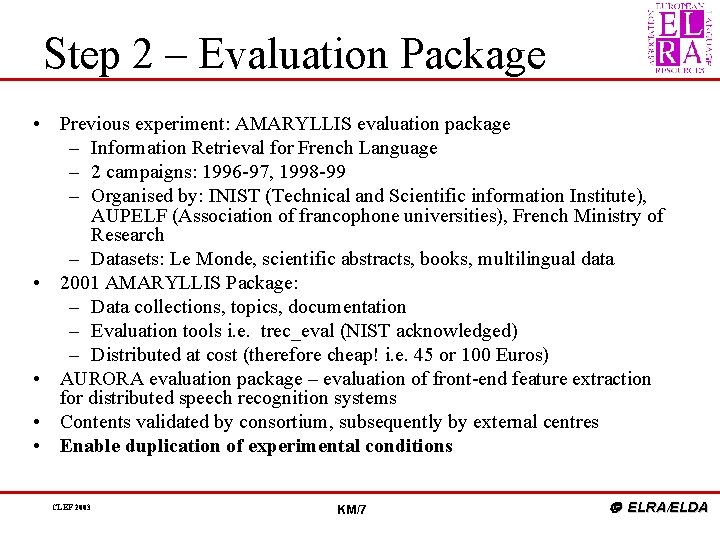

Step 2 – Evaluation Package
• Previous experiment: the AMARYLLIS evaluation package
  – Information retrieval for the French language
  – 2 campaigns: 1996-97, 1998-99
  – Organised by: INIST (Institute for Scientific and Technical Information), AUPELF (Association of francophone universities), French Ministry of Research
  – Datasets: Le Monde, scientific abstracts, books, multilingual data
• 2001 AMARYLLIS package:
  – Data collections, topics, documentation
  – Evaluation tools, i.e. trec_eval (NIST acknowledged)
  – Distributed at cost, hence inexpensive (45 or 100 Euros)
• AURORA evaluation package
  – Evaluation of front-end feature extraction for distributed speech recognition systems
• Contents validated by the consortium, then by external centres
• Enables duplication of experimental conditions
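The AMARYLLIS package shipped trec_eval as its scoring tool. As a rough illustration of the kind of measure such a tool reports, here is a minimal Python sketch of non-interpolated mean average precision computed from TREC-format relevance judgements (qrels) and a run file. It is not trec_eval's code: the function names, the toy document identifiers, the tie-breaking rule and the choice to average over every topic with at least one relevant document are all assumptions made for this example, and trec_eval's own handling of edge cases may differ.

```python
from collections import defaultdict


def parse_qrels(lines):
    """Parse TREC-style qrels lines: 'topic iteration docno relevance'.

    Returns a dict mapping each topic to the set of documents judged relevant
    (relevance > 0). Topics whose judgements are all non-relevant get no entry.
    """
    relevant = defaultdict(set)
    for line in lines:
        topic, _iteration, docno, rel = line.split()
        if int(rel) > 0:
            relevant[topic].add(docno)
    return dict(relevant)


def parse_run(lines):
    """Parse TREC-style run lines: 'topic Q0 docno rank score tag'.

    Returns a dict mapping each topic to its documents ordered by descending
    score. The rank column is ignored; ties are broken by descending docno,
    an arbitrary choice made for this sketch.
    """
    scored = defaultdict(list)
    for line in lines:
        topic, _q0, docno, _rank, score, _tag = line.split()
        scored[topic].append((float(score), docno))
    return {topic: [docno for _, docno in sorted(pairs, reverse=True)]
            for topic, pairs in scored.items()}


def average_precision(ranking, relevant):
    """Non-interpolated average precision for one topic: the mean, over all
    relevant documents, of the precision at the rank where each one is
    retrieved (relevant documents never retrieved contribute 0)."""
    if not relevant:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for rank, docno in enumerate(ranking, start=1):
        if docno in relevant:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / len(relevant)


def mean_average_precision(run, qrels):
    """Average AP over every topic with at least one relevant document
    (an assumption of this sketch; topics missing from the run score 0)."""
    topics = [topic for topic, rel in qrels.items() if rel]
    if not topics:
        return 0.0
    return sum(average_precision(run.get(topic, []), qrels[topic])
               for topic in topics) / len(topics)


# Hypothetical toy data: document IDs and scores are invented for illustration.
qrels = parse_qrels(["1 0 LM-001 1", "1 0 LM-007 1", "1 0 LM-002 0", "2 0 LM-003 1"])
run = parse_run([
    "1 Q0 LM-001 1 9.5 demo",
    "1 Q0 LM-002 2 8.0 demo",
    "1 Q0 LM-007 3 7.1 demo",
    "2 Q0 LM-004 1 5.0 demo",
    "2 Q0 LM-003 2 4.0 demo",
])
print(round(mean_average_precision(run, qrels), 4))  # 0.6667
```

In the toy data, topic 1 retrieves its relevant documents at ranks 1 and 3 (AP = (1/1 + 2/3)/2 = 5/6) and topic 2 at rank 2 (AP = 1/2), giving a mean of 2/3. Shipping the same data, topics and scoring code in one package is what lets other groups reproduce such figures under identical conditions.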

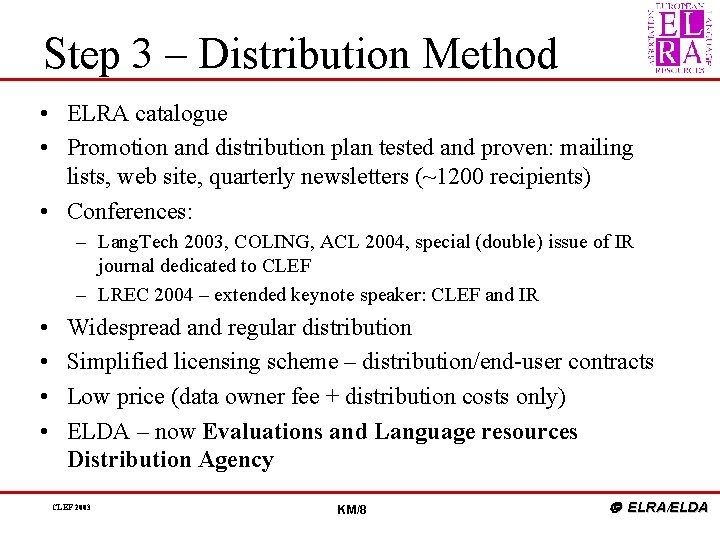

Step 3 – Distribution Method
• ELRA catalogue
• Promotion and distribution plan already tested and proven: mailing lists, website, quarterly newsletters (~1200 recipients)
• Conferences:
  – Lang. Tech 2003, COLING, ACL 2004, a special (double) issue of an IR journal dedicated to CLEF
  – LREC 2004: extended keynote speaker on CLEF and IR
• Widespread and regular distribution
• Simplified licensing scheme: distribution/end-user contracts
• Low price (data owner fee + distribution costs only)

ELDA is now the Evaluations and Language resources Distribution Agency.

Why ELRA/ELDA?
• Clearing house for LRs (speech, text corpora, lexica, multimodal)
  – Commissioning, production, validation and distribution of LRs in a legally sound framework
• Experience in the production, validation, packaging and distribution of Language Resources (including legal issues)
• Evaluation and evaluation resources are a related activity (HLT developers/evaluators are users of LRs)
• Evaluation infrastructure/network of (R&D) centres providing evaluation resources, software, methodologies and protocols
• Carries out independent (ethical) evaluation

ELRA/ELDA (evaluation department) has set up a European clearing house for HLT evaluation, in the same way that ELDA has become a major clearing house for Language Resources.

Evaluation Experience
• AURORA
• AMARYLLIS
• ARCADE/ROMANSEVAL – word sense disambiguation
• TC-STAR(_P) – speech-to-speech translation
• Technolangue/EVALDA – bilingual alignment, terminology extraction, machine translation, Q/A systems, parsing technology, BN transcription, speech synthesis, man-machine dialogue systems

Evaluation Projects: AMARYLLIS (multilingual/parallel corpora)
Promoting the creation of corpora and evaluation procedures for the French language:
(i) Evaluation of information retrieval systems on French text corpora
(ii) Evaluation methodology for similar search tools

Evaluation Projects: ARCADE/ROMANSEVAL
Promoting research in the field of multilingual alignment
• Evaluation of parallel text alignment systems
In collaboration with the SENSEVAL/ROMANSEVAL exercise on word-sense disambiguation for Romance languages

Evaluation Projects: TC-STAR(_P)
Preparatory Action for Speech-to-Speech Translation
• WP: Language Resources and Evaluation Infrastructure
EU-funded preparatory project (6th Framework Programme) for the TC-STAR project (Technology and Corpora for Speech to Speech Translation).

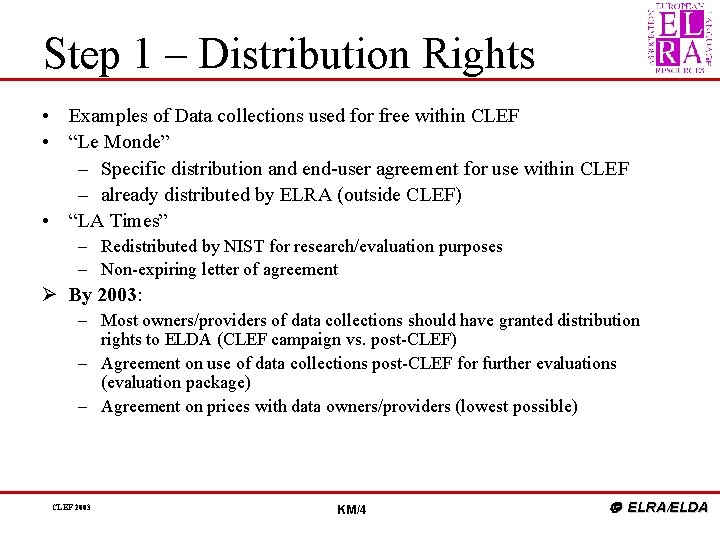

Evaluation Projects: Technolangue/EVALDA
Permanent evaluation infrastructure for French
• Evaluation for French HLT
French government-funded project for the evaluation of 8 human language technologies.

Technolangue
• Technolangue and the EVALDA project
  – Corpus alignment
  – Terminology extraction
  – Machine translation
  – Syntactic parsers
  – Q/A systems
  – Broadcast news transcription systems
  – (Text-to-)speech synthesis
  – Dialogue systems
• (1.2 M€ budget)

Technolangue/EVALDA
A permanent infrastructure that would focus on:
• R&D on (all) evaluation issues
• Elaboration of evaluation protocols and assessment tools
• Production and validation of Language Resources
• A coordination team for the management and supervision of all projects
• Logistics and support
• Capitalisation of the outcome of each and every project (evaluation resources, tools, methodologies, protocols, best practices)
• ELDA evaluation department operational: expanding team of engineers