DUNE 35 ton Prototype Offline News and Announcements

- Slides: 14

DUNE 35-ton Prototype Offline News and Announcements

Tom Junk, Tingjun Yang, Michelle Stancari, Mark Convery (Fermilab, SLAC)

- DUNE Collaboration Meeting, September 2-5, 2015. The current plan is to have four parallel sessions: two for the 35t and two for Software & Computing. https://indico.fnal.gov/conferenceDisplay.py?confId=10100
- LArSoft Coordination Meeting, August 11: https://indico.fnal.gov/conferenceDisplay.py?confId=10257
- art/LArSoft Course, August 3-7. Looking over the slides is highly recommended! https://indico.fnal.gov/conferenceDisplay.py?confId=9928
- Fermilab Computing Sector Liaisons' Meeting, August 12: https://fermipoint.fnal.gov/organization/cs/scd/CS%20Liaison%20Meetings%20Library/Forms/Modified%20this%20week.aspx

8/13/15, TRJ, DUNE 35-ton Offline News and Announcements


35-ton Computing TSW (Technical Scope of Work)

- Delineates the computing services and support the 35-ton needs in order to commission and operate the detector and to analyze the collected data.
- Already reviewed twice by the 35-ton group.
- TSW link on SharePoint.
- Sent to the Computing Sector for review through the end of August; to be sent out for signatures on September 1.
- Computing Sector reviewers: Ray Pasetes, Mitch Renfer, Rob Harris, Adam Lyon, Stu Fuess, Margaret Votava

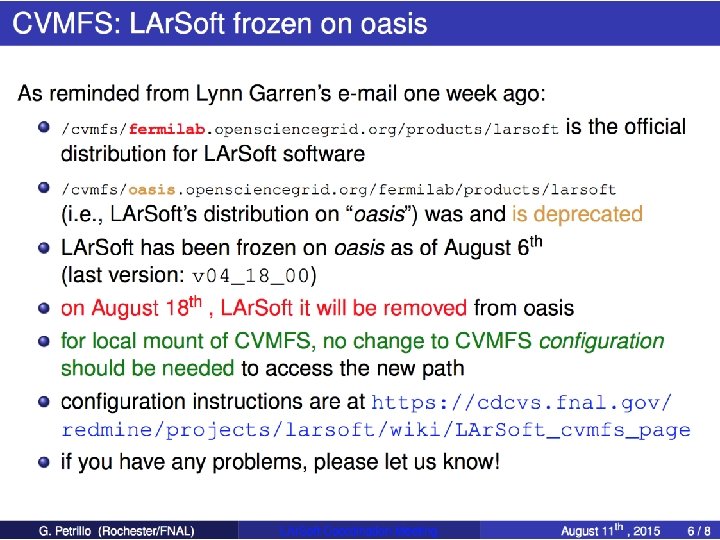

DUNE Computing Resources

- New DUNE service desk pull-down menu category: E-1062. Getting more functional! It now has sub-categories.
- The DUNE VO has been created. Steve Timm and Tom Junk are admins; Steve Timm is the security contact.
- There is still some work to do to get the VO accepted by grid worker sites.
- LBNE VO users should be grandfathered in (though many are suspended due to expired Acceptable Use Policy forms).
- New DUNE VO membership is to be granted along with DUNE interactive accounts.

Other DUNE resources still to do:

- dunegpvm01.fnal.gov through dunegpvm10.fnal.gov: Service desk ticket submitted. "Tail end of a long process". Meeting to kick off general DUNE renaming/new instance creation: July 29, organized by Q. Li.
- DUNE BlueArc areas: to do.

DUNE Computing Resources

- /grid/fermiapp/products/dune: area requested in a Service Desk ticket. M. Kirby had helped us set up the /grid/fermiapp/products/lbne area.
- /pnfs/dune/scratch: not there yet.
- /pnfs/dune/persistent: this exists and shares space with /pnfs/lbne/persistent.
- DUNE redmine area: already there!
- dunetpc redmine/repo: working on it! See Tingjun's talk on Aug 11. We are renaming all files and changing lbne in the contents of files, all the while retaining git history (using git mv). daqinput35t and rootfiles are kept with lbne in their names in order not to disrupt ongoing DAQ work.
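
The renaming step can be sketched as a small planning script. This is only an illustration under stated assumptions, not the procedure used for dunetpc: the exclusion rule (skip anything under a `daqinput35t` path or ending in `.root`) and the example file names are hypothetical, and only the final path component is renamed. The script builds `git mv` commands without running them.

```python
from pathlib import PurePosixPath

# Assumption: paths to leave untouched are recognised by these simple rules.
KEEP_SUBSTRING = "daqinput35t"
KEEP_SUFFIX = ".root"


def plan_git_mv(paths):
    """Return (old, new) pairs for paths whose file name contains 'lbne'."""
    moves = []
    for p in paths:
        if KEEP_SUBSTRING in p or p.endswith(KEEP_SUFFIX):
            continue  # kept with lbne in the name so DAQ work is not disrupted
        path = PurePosixPath(p)
        new_name = path.name.replace("lbne", "dune")
        if new_name != path.name:
            moves.append((p, str(path.with_name(new_name))))
    return moves


def as_commands(moves):
    """Turn (old, new) pairs into argument lists suitable for subprocess.run."""
    return [["git", "mv", old, new] for old, new in moves]


# Hypothetical file names, for illustration only.
example_paths = [
    "fcl/services_lbne.fcl",
    "daqinput35t/lbne_reader.cc",
    "data/lbne_run.root",
    "README.md",
]
moves = plan_git_mv(example_paths)
commands = as_commands(moves)
```

Using `git mv` rather than delete-and-add records each change as a rename in a single step. Replacing lbne inside file contents would be a separate edit.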

DAQ Data Transfer Status

- All complete VST rootfiles through the end of July 2015 have been transferred from /data/lbnedaq/data on lbne35t-gateway01 to offline storage (SAM). https://cdcvs.fnal.gov/redmine/projects/35ton/wiki/LBNE35tVerticalSliceTestData
- Files from May 2015 onwards are still on the gateway01 node and can be deleted.
- The data disk on the gateway node is 93% full, with 17 GB left.
- A script for copying data from the gateway node to lbnegpvm*, with a target directory on dCache, has been written and tested.
- A new lbnedaq shared account has been created on the lbnegpvms. So far I cannot log on to it, however, and I have submitted a Service Desk ticket. The account is there so we don't have to use Mike Wallbank's Kerberos ticket to transfer DQM plots.
- The account would also be useful for data, but Ed Simmonds (SCD) does not recommend using the gpvms as part of the DAQ chain: they are not 24x7 resilient.
- To minimize "hops", we'd like to mount BlueArc /lbne/data and /lbne/data2 and dCache /pnfs/lbne/scratch on lbnegateway02.fnal.gov.
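
The disk figures quoted here (93% full, 17 GB left) imply a capacity of roughly 243 GB. A minimal sketch of that arithmetic is below, along with a generic fullness check. The 90% warning threshold and the function names are assumptions for illustration, not part of the DAQ setup.

```python
import shutil


def percent_full(total_bytes, free_bytes):
    """Percentage of the disk that is not free."""
    return 100.0 * (total_bytes - free_bytes) / total_bytes


def implied_capacity_gb(free_gb, percent_used):
    """Total capacity implied by the free space and the used percentage."""
    return free_gb / (1.0 - percent_used / 100.0)


def disk_is_nearly_full(path, threshold_percent=90.0):
    """True if the filesystem holding `path` is at or above the threshold.

    The threshold is a hypothetical choice for this sketch.
    """
    usage = shutil.disk_usage(path)
    return percent_full(usage.total, usage.free) >= threshold_percent


# 17 GB free at 93% full implies 17 / 0.07, roughly 243 GB in total.
capacity_gb = implied_capacity_gb(17.0, 93.0)
```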

dCache News, Advice

- Scratch space: /pnfs/lbne/scratch. Very large, but there is a Least Recently Used eviction algorithm. File lifetime is of order 1 month. NOvA writes to scratch very frequently.
- Persistent space: /pnfs/lbne/persistent and /pnfs/dune/persistent. Size: 150 TB (shared between the lbne and dune areas). Hardware failure may result in file loss; this area is not backed up. See Qizhong's description of how to store files on tape. No quotas (yet).
- dCache is meant to be used for data that may also be on tape. It is most suited to write-once, read-many access patterns.

Several consequences of the dCache architecture (from R. Illingworth):

- Files are immutable (cannot be modified) once written.
- Latency is usually low for files on disk, but very high for files retrieved from tape.
- Under heavy load, accesses are queued by file.
- Uncoordinated or random access of files that are not in cache can perform very badly, creating large backlogs.
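
To make the Least Recently Used idea concrete, here is a toy model of a scratch pool. It is a sketch of the general LRU technique only and does not describe how dCache implements eviction. The class name, the sizes, and the file names are all made up for illustration.

```python
from collections import OrderedDict


class LRUPool:
    """Toy scratch pool: evicts the least recently used files when full."""

    def __init__(self, capacity_bytes):
        self.capacity_bytes = capacity_bytes
        self.used_bytes = 0
        self._files = OrderedDict()  # name -> size, least recently used first

    def write(self, name, size):
        """Store a new file and return the names evicted to make room."""
        if name in self._files:
            raise ValueError("files are immutable once written")
        if size > self.capacity_bytes:
            raise ValueError("file is larger than the whole pool")
        evicted = []
        while self.used_bytes + size > self.capacity_bytes:
            old_name, old_size = self._files.popitem(last=False)
            self.used_bytes -= old_size
            evicted.append(old_name)
        self._files[name] = size
        self.used_bytes += size
        return evicted

    def read(self, name):
        """Mark a file as recently used; return False if it is gone."""
        if name not in self._files:
            return False
        self._files.move_to_end(name)
        return True


pool = LRUPool(capacity_bytes=100)
pool.write("a.root", 40)
pool.write("b.root", 40)
pool.read("a.root")                 # a.root is now the most recently used
evicted = pool.write("c.root", 40)  # b.root is the least recently used
```

In this model a file that nobody reads is the first to disappear once other users keep writing, which is why scratch files should not be treated as long-lived.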

dCache News, Advice

- The lbne dCache areas are not tape-backed, though we have a File Transfer Service dropbox set up on /pnfs/lbne/scratch/lbnepro which is used for storing data on tape.
- Tape-backed dCache areas can be very slow to access.
- ls is a slow operation, especially in directories with many files.
- ls -l is a very slow operation. If ls produces color output for terminals, it needs to query the file type (directory, symlink, ordinary file) and protection bits (executable) to color the name properly. This can be really slow.
- I haven't tried using du on dCache yet; it is probably prohibitively inefficient. We need a tool to track usage.
- We may request quotas to be placed on our persistent dCache area. Over time, we may run out of space anyhow, even with quotas, as there is high turnover of collaborators.
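
The slowdown described for ls comes from querying metadata for every entry. A minimal sketch of listing names without asking for per-file metadata is shown below. `os.listdir` returns names only, and iterating `os.scandir` does not call stat unless entry attributes are requested. How much this helps on a particular dCache mount is not something the slides state, so treat it as an assumption to test.

```python
import os
import tempfile


def list_names(directory):
    """Return entry names only, without a stat() call per entry."""
    return sorted(os.listdir(directory))


def count_entries(directory):
    """Count entries without requesting their metadata."""
    with os.scandir(directory) as entries:
        return sum(1 for _ in entries)


# Demonstration on a temporary local directory (not on dCache).
with tempfile.TemporaryDirectory() as tmp:
    for name in ("b.log", "a.root"):
        open(os.path.join(tmp, name), "w").close()
    names = list_names(tmp)
    n_entries = count_entries(tmp)
```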

dCache News, Advice

From Robert Illingworth:

- A mv between areas with different retention policies changes only the metadata; the files keep their original retention policy. To change the retention policy, a file must be copied to a space with the desired retention policy.
- Some commands won't work at all. Some may work, but could act erratically. Some will work in some contexts, but not in others. Stick to simple stuff: write a file and open it up in root, for example.
- For copying multiple files in and out of dCache locations, use "ifdh cp" rather than plain cp.
- There is no plan to NFS mount dCache on FermiGrid nodes. Grid jobs must use some other access method.
- xrootd can be used to stream data to a job where that would be more efficient than copying the whole thing over.
- BlueArc is not going away! Mounts of the data areas on grid workers are going away, though (/lbne/app mounts on grid workers are not going away).
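
As a sketch of the "ifdh cp" advice, the helper below builds one `ifdh cp <source> <destination>` command per file and leaves execution to a separate function. The user directory and file names are hypothetical, and only the simplest form of the command is used; any batching options ifdh may offer are deliberately left out.

```python
import posixpath
import subprocess


def ifdh_cp_commands(sources, dest_dir):
    """Build one 'ifdh cp <source> <destination>' command per file."""
    commands = []
    for src in sources:
        dest = posixpath.join(dest_dir, posixpath.basename(src))
        commands.append(["ifdh", "cp", src, dest])
    return commands


def run_all(commands):
    """Run the commands in order, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)


# Hypothetical paths, for illustration only; nothing is executed here.
commands = ifdh_cp_commands(
    [
        "/pnfs/lbne/scratch/users/someuser/hist_01.root",
        "/pnfs/lbne/scratch/users/someuser/hist_02.root",
    ],
    "/lbne/data/users/someuser",
)
```

Calling `run_all(commands)` on a node with ifdh set up would perform the copies. Because each copy is a separate command, a failure identifies the file that did not transfer.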

Empty Files on dCache

- Sometimes file transfers to dCache fail and an empty file is left behind. Zero-length files by themselves are not problems on most filesystems, but dCache has additional layers of metadata which are missing in the case of failed transfers.
- Periodically I get an e-mail from CS listing empty files with missing layers, and I am asked to track down the users to delete them so that they do not clutter the check.
- It's a nice quality-control step if CS tells us about failed transfers, but frequently the notice comes late. If a user's jobs finish and output is lost, the user frequently finds out about it right away.
- Examples of such files have so far been log files that users don't always care about, and thus may not notice for a while.
- A concern is the rate at which file transfer errors occur (and not just zero-length files made by users).
- Currently evaluating dCache vs. BlueArc as the target for transfer of DAQ files.
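
Users can look for their own zero-length files before CS has to ask. The sketch below walks a directory tree and reports empty files. It checks file size only and cannot tell whether the dCache metadata layers are missing. The function name and the demonstration files are assumptions, and a walk like this may itself be slow on a large dCache area.

```python
import os
import tempfile


def find_empty_files(top):
    """Return sorted paths of zero-length regular files under `top`."""
    empty = []
    for dirpath, _dirnames, filenames in os.walk(top):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                if os.path.getsize(path) == 0:
                    empty.append(path)
            except OSError:
                continue  # unreadable entry or broken link: skip it
    return sorted(empty)


# Demonstration on a temporary local directory (not on dCache).
with tempfile.TemporaryDirectory() as tmp:
    with open(os.path.join(tmp, "job.log"), "w"):
        pass  # simulates the empty file left by a failed transfer
    with open(os.path.join(tmp, "hist.root"), "wb") as f:
        f.write(b"data")
    found = [os.path.basename(p) for p in find_empty_files(tmp)]
```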

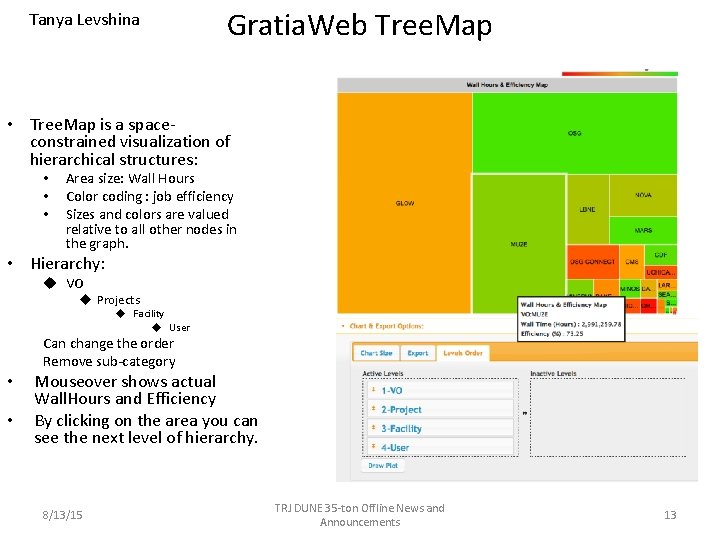

Grid Accounting (Tanya Levshina)

- Gratia collects records of finished jobs.
- The data include CPU hours (user and system), wall hours, exit code, etc.
- We calculate efficiency as (CPUUser + CPUSystem)/WallHours.
- GratiaWeb is the GUI to Gratia.
- The TreeMap efficiency plot was recently released in production: http://gratiaweb.grid.iu.edu/gratia/xml/osg_hours_efficiency_tree_map_by_vo_project_facility
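
The efficiency definition, (CPUUser + CPUSystem)/WallHours, is simple enough to write down directly. The function and argument names below are illustrative and are not Gratia's field names.

```python
def job_efficiency(cpu_user_hours, cpu_system_hours, wall_hours):
    """Efficiency = (CPU user + CPU system) / wall hours."""
    if wall_hours <= 0:
        raise ValueError("wall_hours must be positive")
    return (cpu_user_hours + cpu_system_hours) / wall_hours


# A job with 3.0 h user CPU and 0.5 h system CPU over 5.0 h of wall time.
efficiency = job_efficiency(3.0, 0.5, 5.0)
```

A single-core job that spends most of its wall time waiting (for example on file transfers) ends up with an efficiency well below 1.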

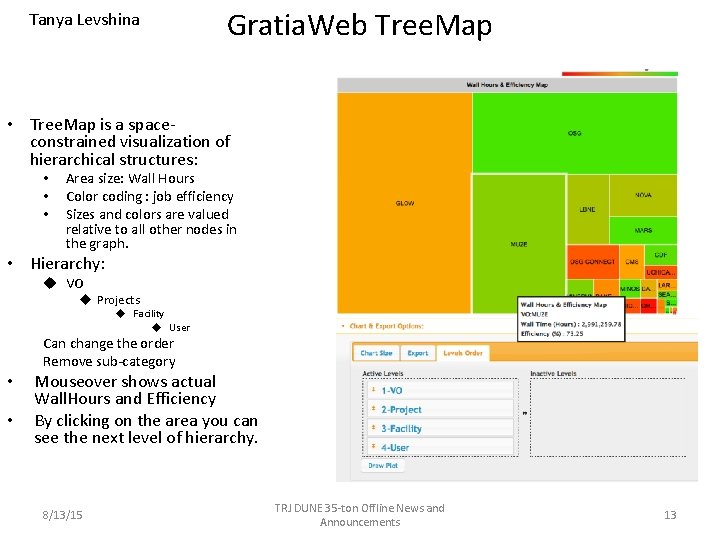

GratiaWeb TreeMap (Tanya Levshina)

- A TreeMap is a space-constrained visualization of hierarchical structures:
  - Area size: wall hours
  - Color coding: job efficiency
  - Sizes and colors are valued relative to all other nodes in the graph.
- Hierarchy: VO, Projects, Facility, User
  - Can change the order
  - Can remove a sub-category
- Mouseover shows the actual WallHours and Efficiency.
- By clicking on an area you can see the next level of the hierarchy.
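
The two quantities a TreeMap node displays (wall hours for area, efficiency for color) can be obtained by rolling job records up along the chosen hierarchy. The sketch below is an assumption about how such a roll-up could work, not GratiaWeb's code: a group's efficiency is taken as total CPU hours over total wall hours, and the records are made-up illustration values.

```python
from collections import defaultdict


def roll_up(records, keys):
    """Sum CPU and wall hours per group; keys set the hierarchy order."""
    totals = defaultdict(lambda: [0.0, 0.0])  # group -> [cpu_hours, wall_hours]
    for rec in records:
        group = tuple(rec[k] for k in keys)
        totals[group][0] += rec["cpu_hours"]
        totals[group][1] += rec["wall_hours"]
    return {
        group: {
            "wall_hours": wall,
            "efficiency": cpu / wall if wall > 0 else 0.0,
        }
        for group, (cpu, wall) in totals.items()
    }


# Made-up records, for illustration only.
records = [
    {"vo": "lbne", "project": "beamsim", "facility": "siteA", "user": "u1",
     "cpu_hours": 2.0, "wall_hours": 8.0},
    {"vo": "lbne", "project": "beamsim", "facility": "siteB", "user": "u2",
     "cpu_hours": 6.0, "wall_hours": 8.0},
    {"vo": "lbne", "project": "fastmc", "facility": "siteA", "user": "u3",
     "cpu_hours": 4.0, "wall_hours": 4.0},
]
by_project = roll_up(records, ["vo", "project"])
by_user = roll_up(records, ["vo", "project", "facility", "user"])
```

Changing the order of `keys`, or dropping one, corresponds to reordering the hierarchy or removing a sub-category in the plot.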

LBNE VO Efficiency

- Job efficiency has been low on LBNE for some users. Currently, beam simulations and FastMC have had some problems with low efficiency (CPU hours/wall hours).
- The usual cause is waiting for CPN locks when using ifdh cp to/from BlueArc disks.
- We should get weekly reports, and some experiments have asked for triggered warnings if inefficiency spikes.
