Development of New Kaon Selectors


Kalanand Mishra (University of Cincinnati), BaBar Collaboration Meeting, February 2007

Overview of Neural Net Training

- The input variables for the neural net are the likelihoods from the SVT, DCH and DRC (both global and track-based), and the momentum and polar angle (θ) of the tracks.
- Separate neural-net training for ‘Good Quality’ and ‘Poor Quality’\* tracks gives two families of selectors: “KNNGoodQual” and “KNNNoQual”.

\* Poor Quality tracks are defined as belonging to one of the following categories:

- outside the DIRC acceptance
- passing through the cracks between DIRC bars
- no DCH hits in layers > 35
- EMC energy < 0.15 GeV
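The poor-quality definition can be sketched as a predicate that routes each track to one of the two selector families. This is a minimal sketch: the `Track` fields (`in_dirc_acceptance`, `in_dirc_bar_crack`, `max_dch_layer`, `emc_energy_gev`) and the function names are hypothetical, not the BaBar software interface; only the cut values come from the slide.

```python
from dataclasses import dataclass

# Cut values from the slide's poor-quality definition.
MAX_DCH_LAYER_CUT = 35      # poor if the track has no DCH hits beyond this layer
EMC_ENERGY_CUT_GEV = 0.15   # poor if the EMC energy is below this value


@dataclass
class Track:
    # Hypothetical track summary; field names are illustrative only.
    in_dirc_acceptance: bool
    in_dirc_bar_crack: bool
    max_dch_layer: int        # outermost DCH layer with a hit (0 if none)
    emc_energy_gev: float


def is_poor_quality(track: Track) -> bool:
    """True if the track falls in any of the listed poor-quality categories."""
    return (
        not track.in_dirc_acceptance
        or track.in_dirc_bar_crack
        or track.max_dch_layer <= MAX_DCH_LAYER_CUT
        or track.emc_energy_gev < EMC_ENERGY_CUT_GEV
    )


def selector_family(track: Track) -> str:
    """Pick the selector family trained on this track's quality class."""
    return "KNNNoQual" if is_poor_quality(track) else "KNNGoodQual"
```

For example, `selector_family(Track(True, False, 40, 0.30))` returns `"KNNGoodQual"`, while the same track flagged as crossing a DIRC bar crack goes to `"KNNNoQual"`.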

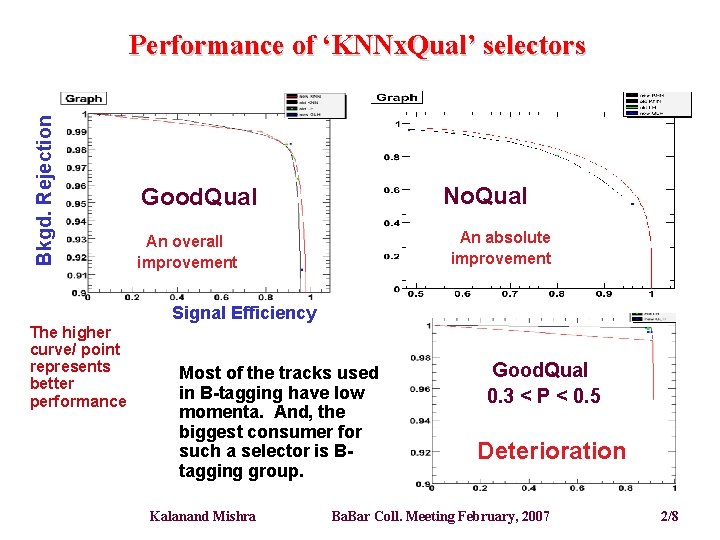

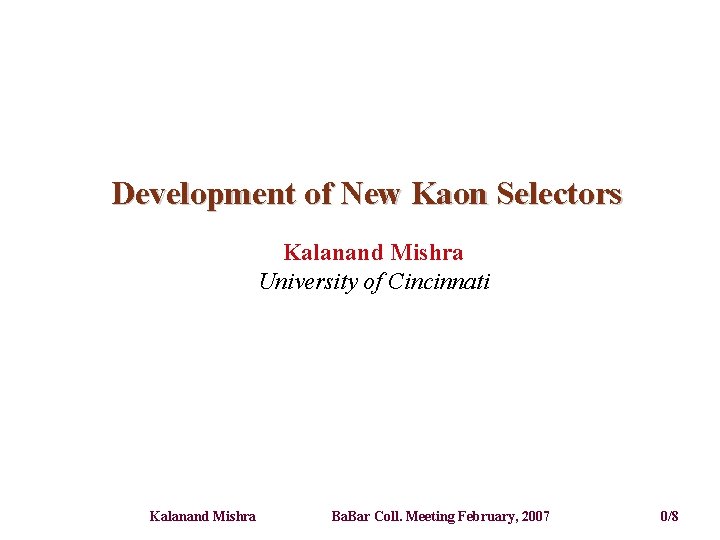

Background Rejection Performance of the ‘KNNxQual’ Selectors

[Figure: background rejection vs. signal efficiency for the NoQual and GoodQual selectors, annotated “an absolute improvement” and “an overall improvement”, plus a GoodQual panel for 0.3 < P < 0.5 GeV/c annotated “deterioration”. A higher curve or point represents better performance.]

The low-momentum deterioration matters: most of the tracks used in B-tagging have low momenta, and the B-tagging group is the biggest consumer of such a selector.
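Curves like these can be produced by scanning a cut on the selector output. A minimal sketch, assuming a track passes when its score is at or above the cut and that background rejection means 1 minus the background efficiency; the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Curve = List[Tuple[float, float]]  # (signal efficiency, background rejection)


def rejection_curve(sig_scores: Sequence[float], bkg_scores: Sequence[float]) -> Curve:
    """Signal efficiency and background rejection for every distinct cut value.

    Assumption: rejection = 1 - (fraction of background passing the cut).
    """
    cuts = sorted(set(sig_scores) | set(bkg_scores), reverse=True)
    curve = []
    for cut in cuts:
        eff_s = sum(s >= cut for s in sig_scores) / len(sig_scores)
        eff_b = sum(b >= cut for b in bkg_scores) / len(bkg_scores)
        curve.append((eff_s, 1.0 - eff_b))
    return curve


def rejection_at_efficiency(curve: Curve, target_eff: float) -> float:
    """Best background rejection among cuts that keep at least target_eff of signal."""
    # The loosest cut always gives efficiency 1.0, so the candidate set is never empty.
    return max(rej for eff, rej in curve if eff >= target_eff)
```

Comparing two selectors with `rejection_at_efficiency` at the same signal efficiency is the numerical form of “the higher curve is better”.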

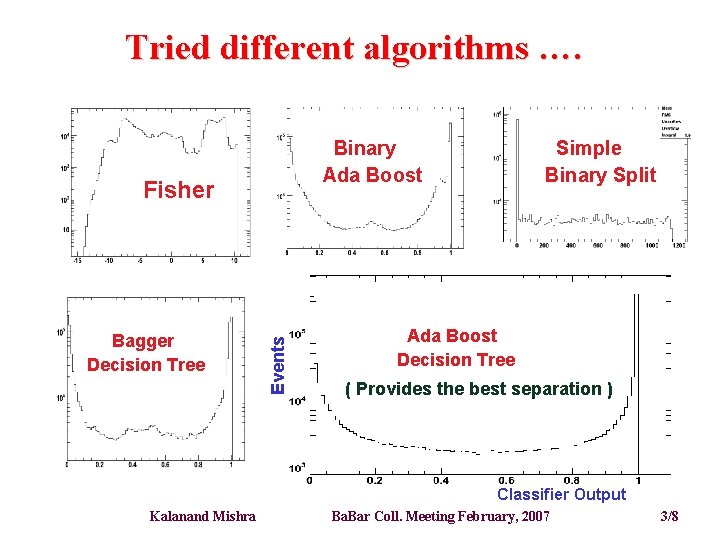

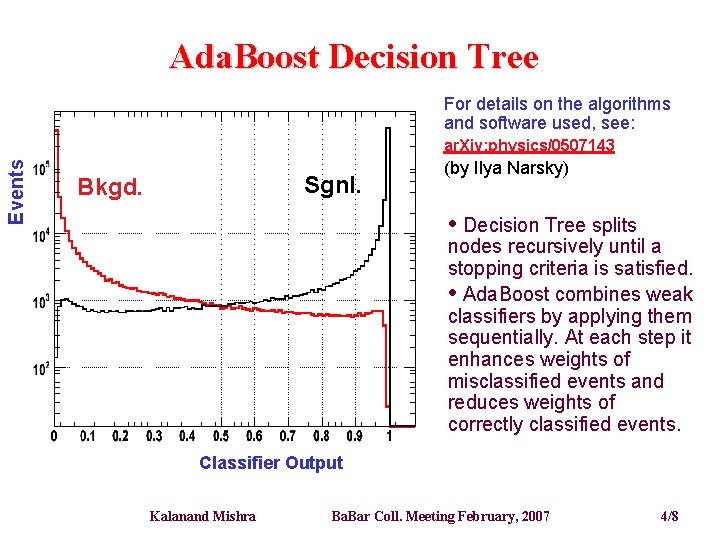

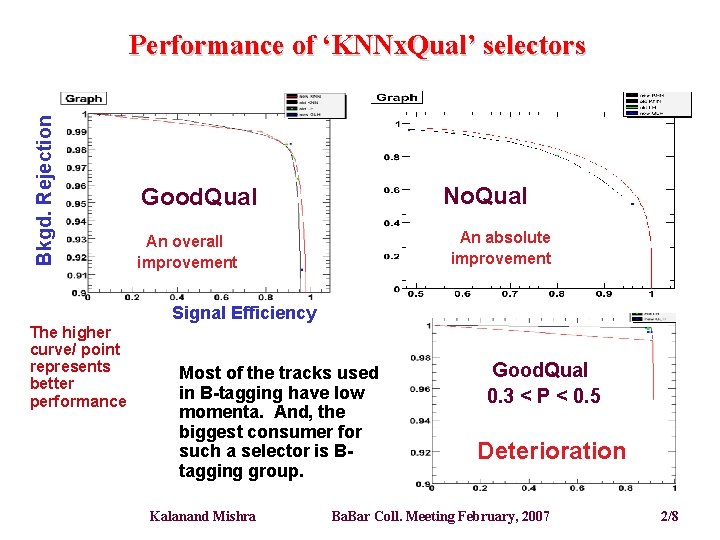

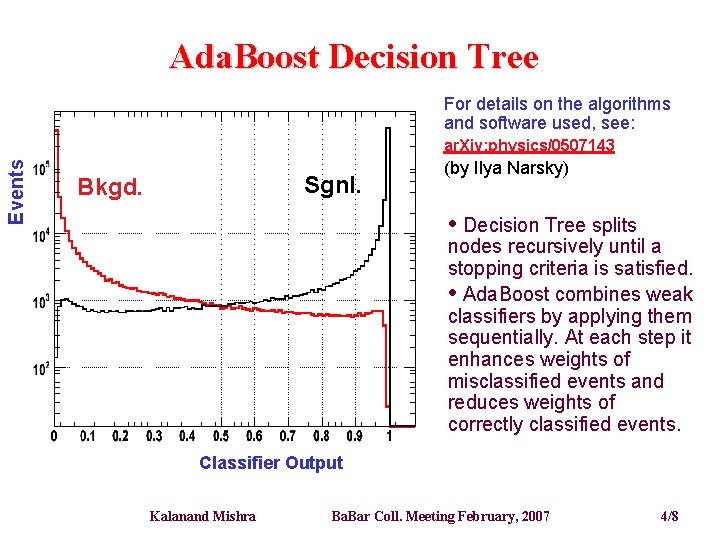

Tried different algorithms…

[Figure: classifier-output distributions (events vs. classifier output) for Binary AdaBoost, Bagger Decision Tree, Fisher, Simple Binary Split and AdaBoost Decision Tree. The AdaBoost Decision Tree provides the best separation.]
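The comparison above is visual. One common way to put a number on it is the separation ⟨S²⟩ = ½ Σᵢ (sᵢ − bᵢ)² / (sᵢ + bᵢ) over normalized histograms of the classifier output (the quantity ROOT's TMVA reports as “separation”). Using it here is an assumption for illustration, not necessarily the figure of merit used for these plots; the function names and default binning are hypothetical.

```python
from typing import List, Sequence


def histogram(values: Sequence[float], lo: float, hi: float, nbins: int) -> List[float]:
    """Normalized histogram (fraction of entries per bin) over [lo, hi]."""
    counts = [0] * nbins
    width = (hi - lo) / nbins
    for v in values:
        idx = int((v - lo) / width)
        idx = min(max(idx, 0), nbins - 1)  # clamp edge and out-of-range values
        counts[idx] += 1
    return [c / len(values) for c in counts]


def separation(sig: Sequence[float], bkg: Sequence[float],
               lo: float = 0.0, hi: float = 1.0, nbins: int = 20) -> float:
    """<S^2> = 1/2 * sum_i (s_i - b_i)^2 / (s_i + b_i); 0 = identical, 1 = no overlap."""
    s = histogram(sig, lo, hi, nbins)
    b = histogram(bkg, lo, hi, nbins)
    return 0.5 * sum((si - bi) ** 2 / (si + bi) for si, bi in zip(s, b) if si + bi > 0)
```

Computing `separation` for each classifier's signal and background outputs gives a single number per panel to rank the algorithms.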

AdaBoost Decision Tree

For details on the algorithms and software used, see arXiv:physics/0507143 (by Ilya Narsky).

- A decision tree splits nodes recursively until a stopping criterion is satisfied.
- AdaBoost combines weak classifiers by applying them sequentially. At each step it increases the weights of misclassified events and reduces the weights of correctly classified events.

[Figure: classifier-output distributions (events vs. classifier output) for signal and background.]
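The reweighting loop described above can be sketched as textbook discrete AdaBoost. This is a generic plain-Python illustration, not the software from arXiv:physics/0507143: one-level trees (stumps) stand in for the full decision trees used on the slide, and all names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Stump = Tuple[int, float, int]  # (feature index, threshold, polarity)


def stump_predict(stump: Stump, x: Sequence[float]) -> int:
    feature, threshold, polarity = stump
    return polarity if x[feature] > threshold else -polarity


def best_stump(X, y, w) -> Tuple[Stump, float]:
    """Exhaustively pick the stump with the lowest weighted error."""
    best, best_err = None, float("inf")
    for j in range(len(X[0])):
        for t in sorted({x[j] for x in X}):
            for p in (1, -1):
                err = sum(wi for xi, yi, wi in zip(X, y, w)
                          if stump_predict((j, t, p), xi) != yi)
                if err < best_err:
                    best, best_err = (j, t, p), err
    return best, best_err


def adaboost(X, y, n_rounds: int = 10) -> List[Tuple[float, Stump]]:
    """Discrete AdaBoost; labels must be +1 (signal) or -1 (background)."""
    w = [1.0 / len(X)] * len(X)
    ensemble = []
    for _ in range(n_rounds):
        stump, err = best_stump(X, y, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # keep the log finite
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, stump))
        # Misclassified events (y*h = -1) gain weight, correct ones lose weight.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, xi))
             for xi, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble


def classifier_output(ensemble, x: Sequence[float]) -> float:
    """Weighted vote of the weak classifiers; larger means more signal-like."""
    return sum(alpha * stump_predict(stump, x) for alpha, stump in ensemble)
```

The continuous `classifier_output` is what would be histogrammed for signal and background, as in the figure.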

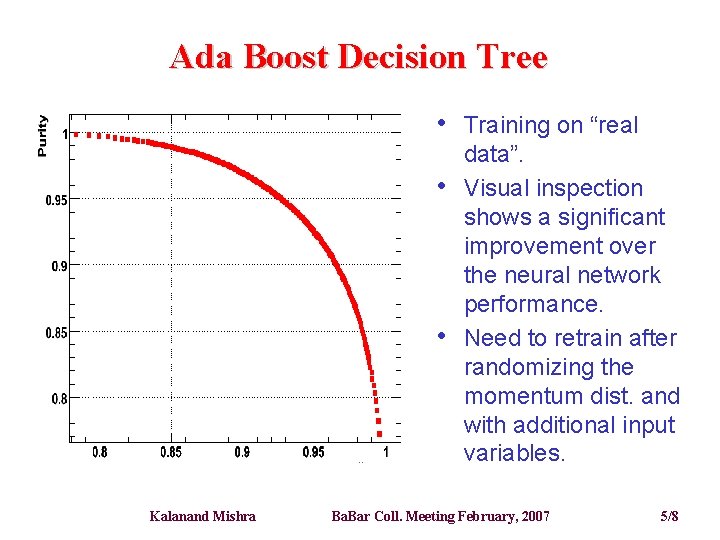

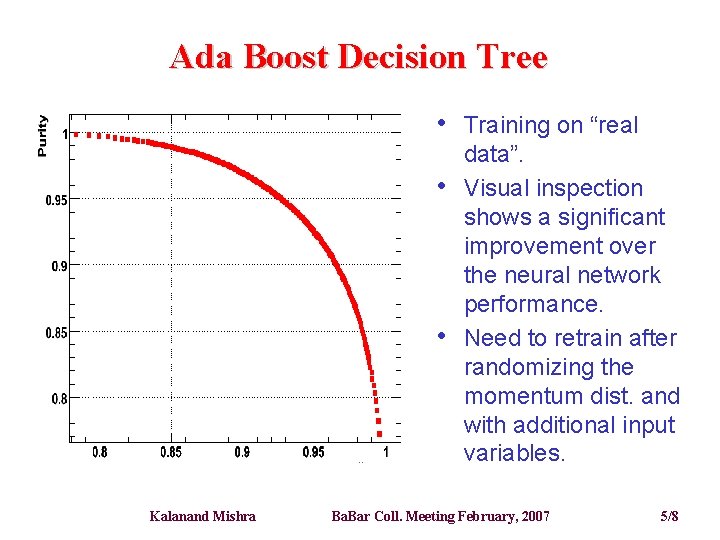

AdaBoost Decision Tree

- Training on “real data”.
- Visual inspection shows a significant improvement over the neural-net performance.
- Need to retrain after randomizing the momentum distribution and with additional input variables.

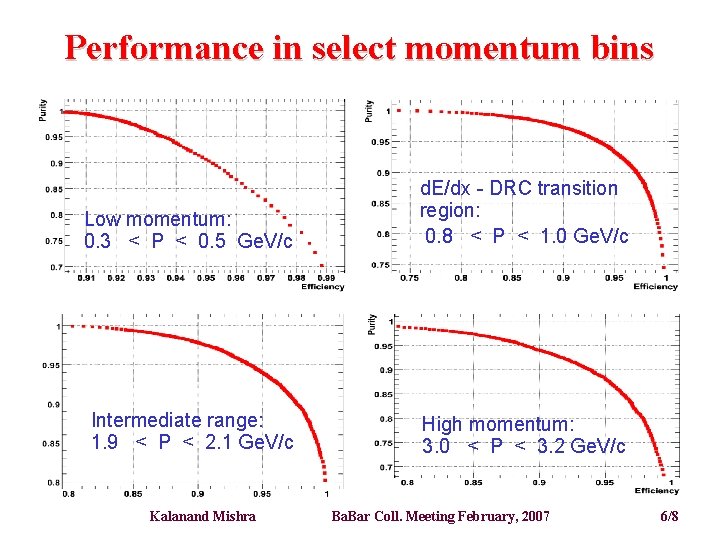

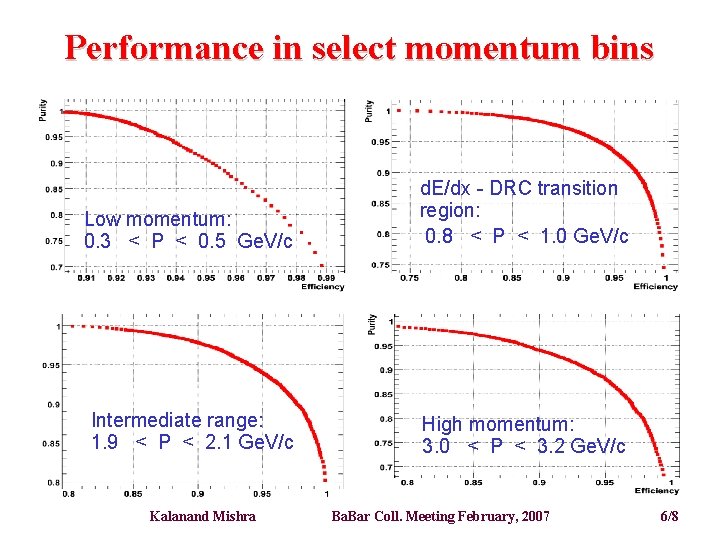

Performance in selected momentum bins

- Low momentum: 0.3 < P < 0.5 GeV/c
- dE/dx-DRC transition region: 0.8 < P < 1.0 GeV/c
- Intermediate range: 1.9 < P < 2.1 GeV/c
- High momentum: 3.0 < P < 3.2 GeV/c
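To study performance bin by bin, tracks can be grouped using the momentum windows listed above. A minimal sketch; the bin labels, the `(momentum, score)` input format and the function names are hypothetical, while the bin edges (GeV/c, strict inequalities) come from the slide.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# Momentum windows from the slide, in GeV/c: lo < P < hi.
MOMENTUM_BINS = {
    "low momentum": (0.3, 0.5),
    "dE/dx-DRC transition": (0.8, 1.0),
    "intermediate": (1.9, 2.1),
    "high momentum": (3.0, 3.2),
}


def momentum_bin(p_gev: float) -> Optional[str]:
    """Name of the window containing p_gev, or None if it is in none of them."""
    for name, (lo, hi) in MOMENTUM_BINS.items():
        if lo < p_gev < hi:
            return name
    return None


def split_by_bin(tracks: Sequence[Tuple[float, float]]) -> Dict[str, List[float]]:
    """Group the classifier scores of (momentum, score) pairs by momentum window."""
    groups: Dict[str, List[float]] = {name: [] for name in MOMENTUM_BINS}
    for p, score in tracks:
        name = momentum_bin(p)
        if name is not None:
            groups[name].append(score)
    return groups
```

Each group's signal and background scores can then be evaluated separately, which is how a low-momentum deterioration shows up even when the inclusive curve improves.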

Things to do…

- Randomize the momentum distributions of signal and background events before training.
- Add more discriminating input variables:
  - number of signal and background Cherenkov photons in the ring
  - total number of drift chamber hits, and the number of hits in the last 5 layers
  - number of hits in the silicon detector
  - … other suggestions!
- Add other background categories: proton, …
- Finalize the cuts and implement the selectors:
  - it will be a single family of selectors, with no separate selectors for “good” and “poor” quality tracks
  - should we still call it a KNN selector?
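The slide does not say how the momentum distributions will be randomized. One common way to stop a classifier from learning the signal and background momentum spectra is to reweight each sample to a flat momentum distribution; the sketch below assumes that interpretation, and the function name, range and binning are hypothetical.

```python
from collections import Counter
from typing import List, Sequence


def flat_momentum_weights(momenta: Sequence[float], p_min: float,
                          p_max: float, nbins: int) -> List[float]:
    """Per-track weights that make the weighted momentum spectrum flat.

    Every occupied bin gets the same total weight (1 / number of occupied bins),
    so the weights sum to 1. Tracks outside [p_min, p_max) get weight 0.
    """
    width = (p_max - p_min) / nbins

    def bin_of(p: float) -> int:
        if not p_min <= p < p_max:
            return -1  # outside the range: excluded from training
        return min(int((p - p_min) / width), nbins - 1)

    bins = [bin_of(p) for p in momenta]
    counts = Counter(b for b in bins if b >= 0)
    n_occupied = len(counts)
    return [0.0 if b < 0 else 1.0 / (n_occupied * counts[b]) for b in bins]
```

Applying this separately to the signal and background samples gives both the same (flat) weighted momentum spectrum, so any remaining separation must come from the PID variables rather than from momentum alone.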

Summary

- Significant efforts are underway to develop a “new version” of the KNN selectors.
- The goal is to develop a powerful non-likelihood (non-LH) kaon selector using the best-performing classifier (or a combination of classifiers).
- Such a selector is expected to replace the current KNN selectors for B-tagging purposes, and should be a meaningful alternative to the LH selectors for physics analyses.