
DEISA
www.deisa.org

Achim Streit, Forschungszentrum Jülich in der Helmholtz-Gesellschaft

GRID@Large, Lisbon, August 29-30, 2005

Agenda

- Introduction
- SA3: Resource Management
- DEISA Extreme Computing Initiative
- Conclusion

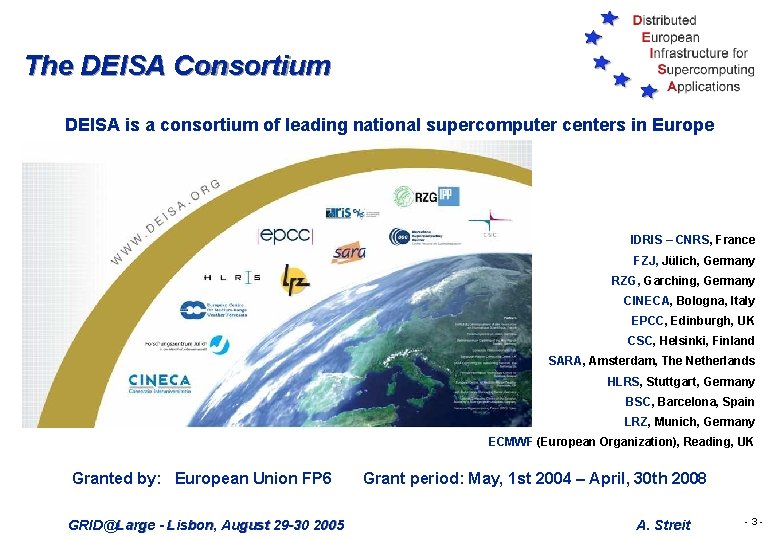

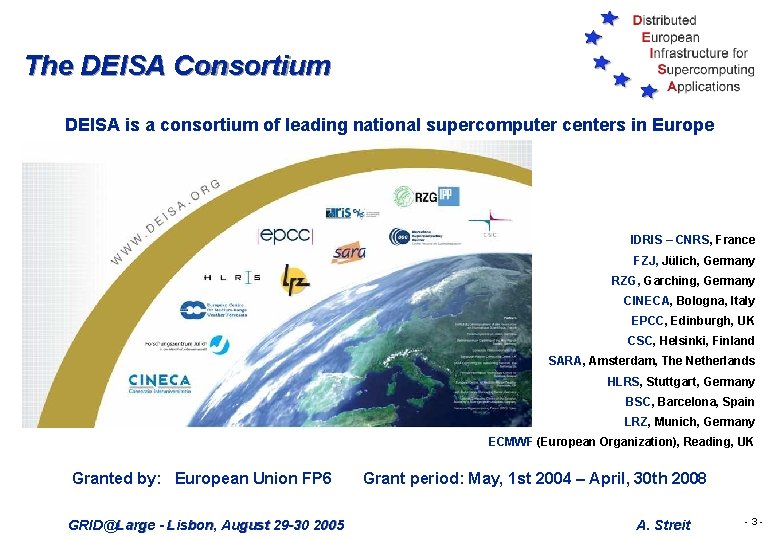

The DEISA Consortium

DEISA is a consortium of leading national supercomputer centers in Europe:

- IDRIS (CNRS), France
- FZJ, Jülich, Germany
- RZG, Garching, Germany
- CINECA, Bologna, Italy
- EPCC, Edinburgh, UK
- CSC, Helsinki, Finland
- SARA, Amsterdam, The Netherlands
- HLRS, Stuttgart, Germany
- BSC, Barcelona, Spain
- LRZ, Munich, Germany
- ECMWF (European Organization), Reading, UK

Granted by: European Union, FP6
Grant period: May 1, 2004 to April 30, 2008

DEISA objectives

- To enable Europe's terascale science by integrating Europe's most powerful supercomputing systems.
- Enabling scientific discovery across a broad spectrum of science and technology is the only criterion for success.
- DEISA is a European Supercomputing Service built on top of existing national services.
- DEISA deploys and operates a persistent, production-quality, distributed, heterogeneous supercomputing environment with continental scope.

Basic requirements and strategies for the DEISA research infrastructure

- Fast deployment of a persistent, production-quality, grid-empowered supercomputing infrastructure with continental scope.
- A European supercomputing service built on top of existing national services requires reliability and non-disruptive behavior.
- User and application transparency.
- Top-down approach: technology choices result from the business and operational models of our virtual organization. DEISA technology choices are fully open.

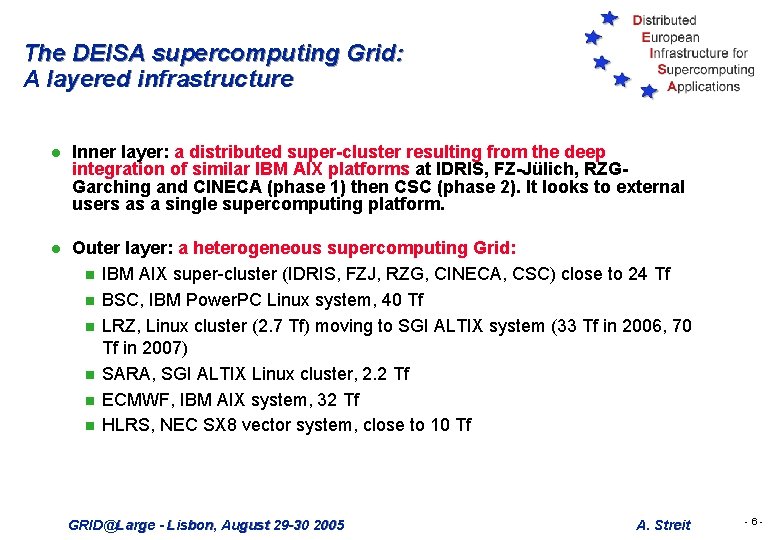

The DEISA supercomputing Grid: A layered infrastructure

- Inner layer: a distributed super-cluster resulting from the deep integration of similar IBM AIX platforms at IDRIS, FZ-Jülich, RZG-Garching and CINECA (phase 1), then CSC (phase 2). To external users it looks like a single supercomputing platform.
- Outer layer: a heterogeneous supercomputing Grid:
  - IBM AIX super-cluster (IDRIS, FZJ, RZG, CINECA, CSC), close to 24 Tf
  - BSC, IBM PowerPC Linux system, 40 Tf
  - LRZ, Linux cluster (2.7 Tf) moving to SGI ALTIX system (33 Tf in 2006, 70 Tf in 2007)
  - SARA, SGI ALTIX Linux cluster, 2.2 Tf
  - ECMWF, IBM AIX system, 32 Tf
  - HLRS, NEC SX-8 vector system, close to 10 Tf

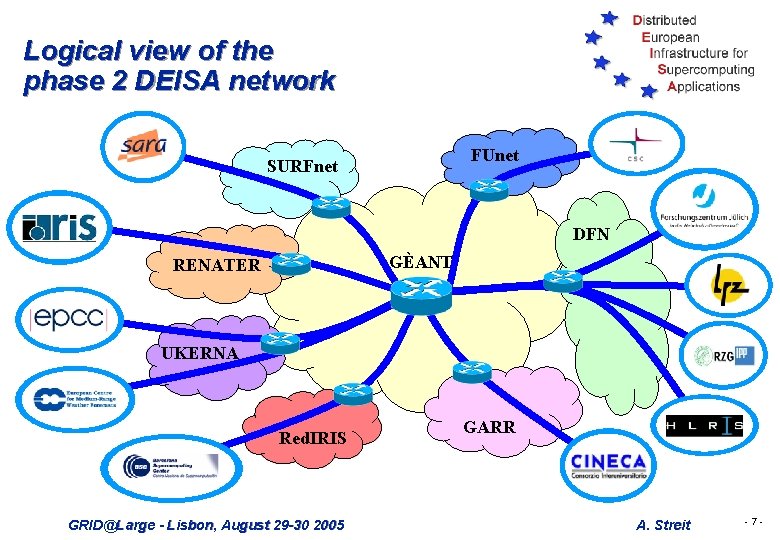

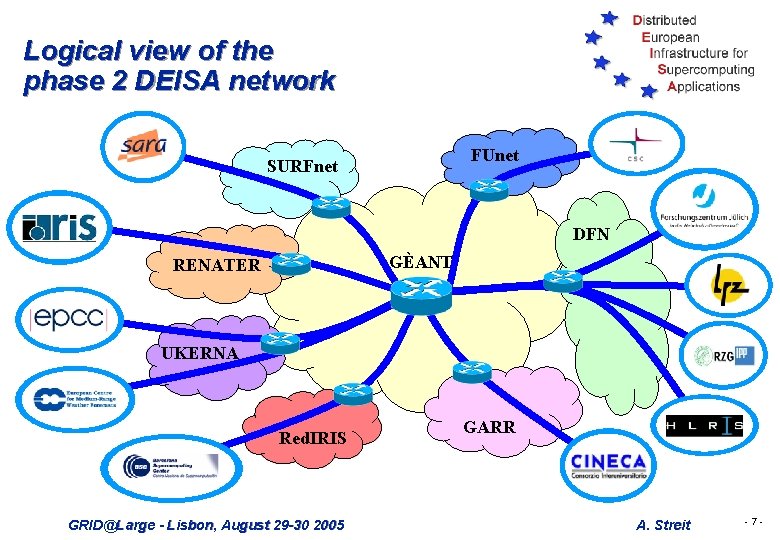

Logical view of the phase 2 DEISA network

Diagram labels: FUnet, SURFnet, DFN, GÉANT, RENATER, UKERNA, RedIRIS, GARR

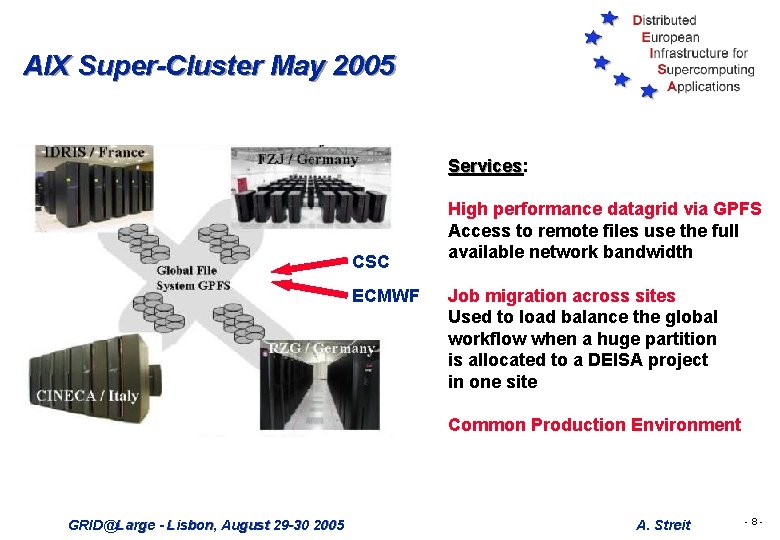

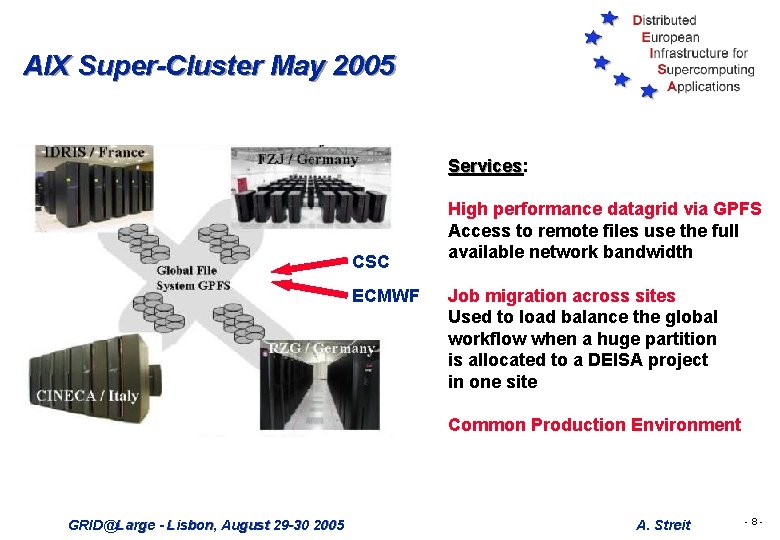

AIX Super-Cluster, May 2005

Services:

- High-performance data grid via GPFS: access to remote files uses the full available network bandwidth
- Job migration across sites: used to load-balance the global workflow when a huge partition is allocated to a DEISA project at one site
- Common Production Environment

Diagram labels: CSC, ECMWF
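The job-migration service can be illustrated with a small sketch. This is not DEISA's scheduler logic: the `Site` class, the `migrate_waiting_jobs` function, the site names and all CPU counts are hypothetical, and the policy (send a job that does not fit locally to the site with the most free CPUs) is only one plausible reading of "load balancing the global workflow".

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    """Hypothetical model of one site in the super-cluster."""
    name: str
    total_cpus: int
    busy_cpus: int = 0
    queue: list = field(default_factory=list)  # CPU counts of waiting jobs

    @property
    def free_cpus(self):
        return self.total_cpus - self.busy_cpus


def migrate_waiting_jobs(home, others):
    """Start jobs locally when they fit; otherwise try the remote site
    with the most free CPUs. Returns a list of (cpus, target_site_name)."""
    migrated = []
    still_waiting = []
    for cpus in home.queue:
        if cpus <= home.free_cpus:
            home.busy_cpus += cpus          # job runs at its home site
            continue
        target = max(others, key=lambda s: s.free_cpus)
        if cpus <= target.free_cpus:
            target.busy_cpus += cpus        # job is migrated
            migrated.append((cpus, target.name))
        else:
            still_waiting.append(cpus)      # no site can take it right now
    home.queue = still_waiting
    return migrated


# Made-up numbers: site_a is almost full because of one huge partition.
site_a = Site("site_a", total_cpus=100, busy_cpus=90, queue=[8, 20, 200])
site_b = Site("site_b", total_cpus=100, busy_cpus=50)
site_c = Site("site_c", total_cpus=100, busy_cpus=10)
moved = migrate_waiting_jobs(site_a, [site_b, site_c])
print(moved)  # [(20, 'site_c')]
```

In this toy run the 8-CPU job still fits at its home site, the 20-CPU job moves to the emptiest remote site, and the 200-CPU job keeps waiting because no site has room for it.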

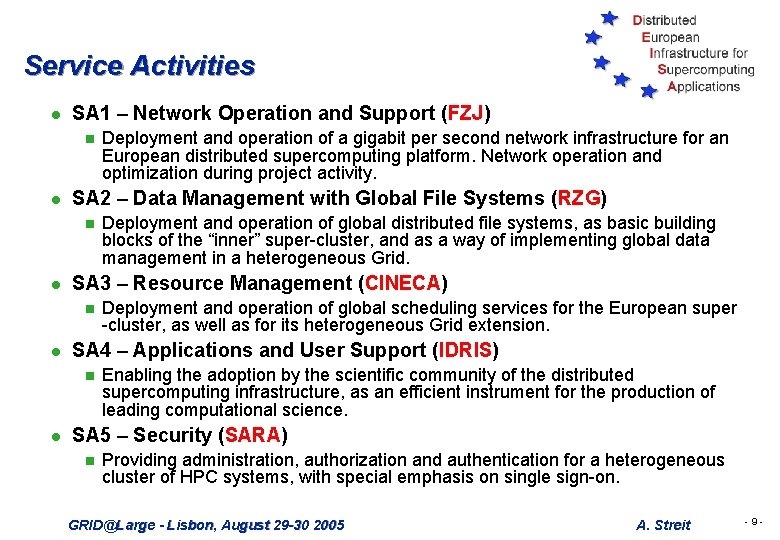

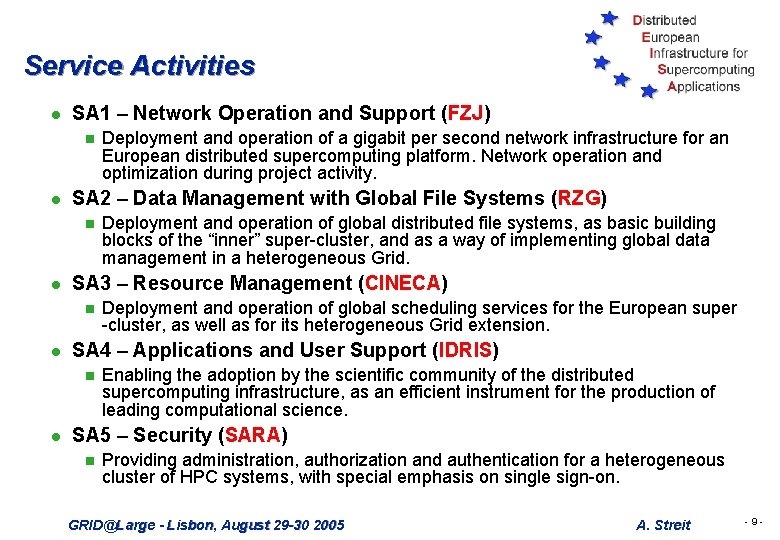

Service Activities

- SA1: Network Operation and Support (FZJ)
  - Deployment and operation of a gigabit-per-second network infrastructure for a European distributed supercomputing platform. Network operation and optimization during project activity.
- SA2: Data Management with Global File Systems (RZG)
  - Deployment and operation of global distributed file systems, as basic building blocks of the “inner” super-cluster, and as a way of implementing global data management in a heterogeneous Grid.
- SA3: Resource Management (CINECA)
  - Deployment and operation of global scheduling services for the European super-cluster, as well as for its heterogeneous Grid extension.
- SA4: Applications and User Support (IDRIS)
  - Enabling the adoption by the scientific community of the distributed supercomputing infrastructure, as an efficient instrument for the production of leading computational science.
- SA5: Security (SARA)
  - Providing administration, authorization and authentication for a heterogeneous cluster of HPC systems, with special emphasis on single sign-on.

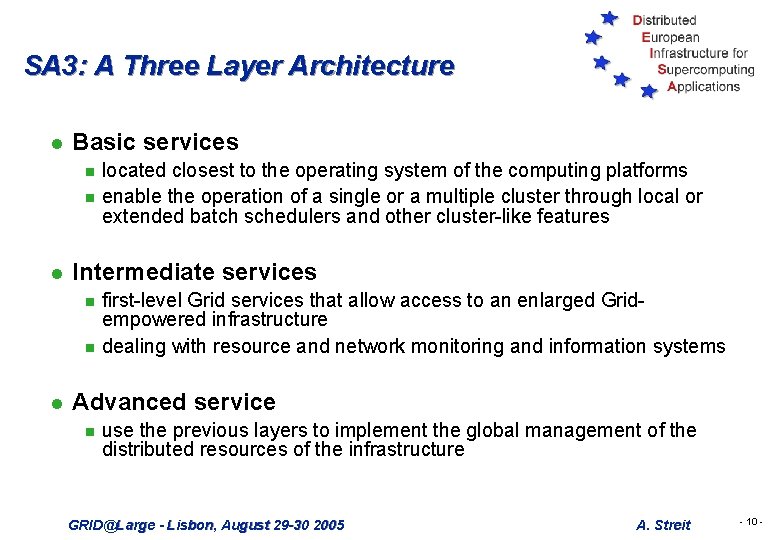

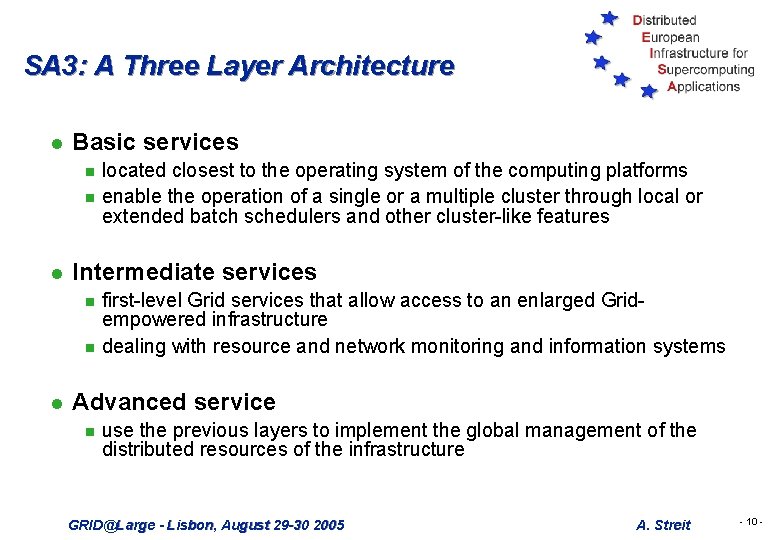

SA3: A Three-Layer Architecture

- Basic services
  - located closest to the operating system of the computing platforms
  - enable the operation of a single cluster or multiple clusters through local or extended batch schedulers and other cluster-like features
- Intermediate services
  - first-level Grid services that allow access to an enlarged Grid-empowered infrastructure
  - deal with resource and network monitoring and information systems
- Advanced services
  - use the previous layers to implement the global management of the distributed resources of the infrastructure

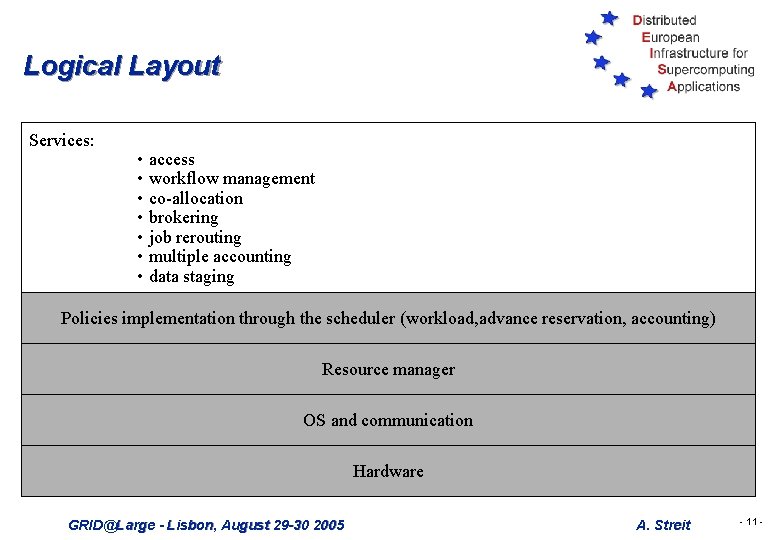

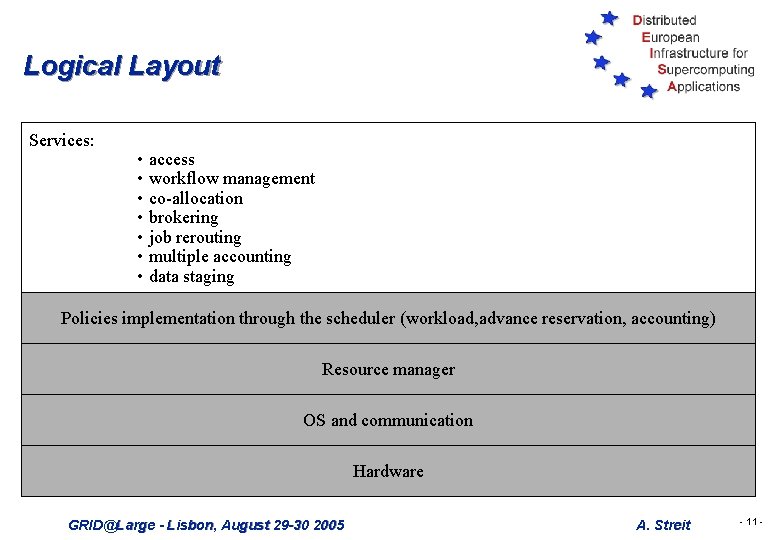

Logical Layout

Layers, from top to bottom:

- Services: access, workflow management, co-allocation, brokering, job rerouting, multiple accounting, data staging
- Policies: implementation through the scheduler (workload, advance reservation, accounting)
- Resource manager
- OS and communication
- Hardware

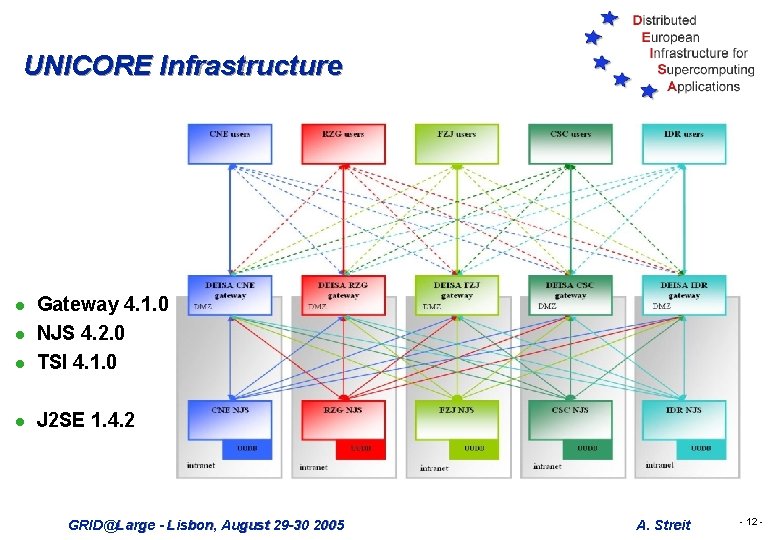

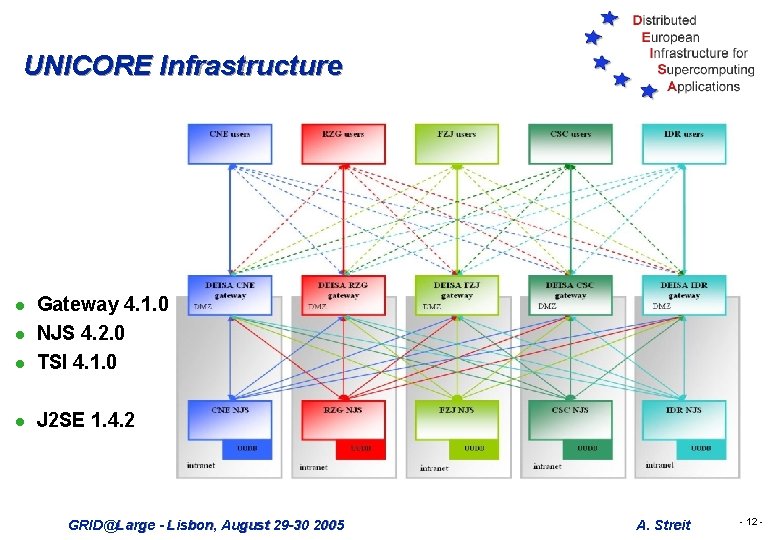

UNICORE Infrastructure

- Gateway 4.1.0
- NJS 4.2.0
- TSI 4.1.0
- J2SE 1.4.2

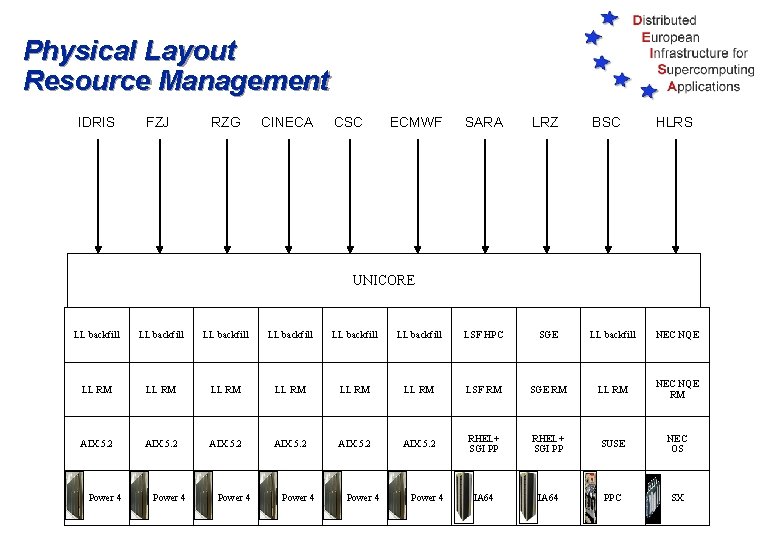

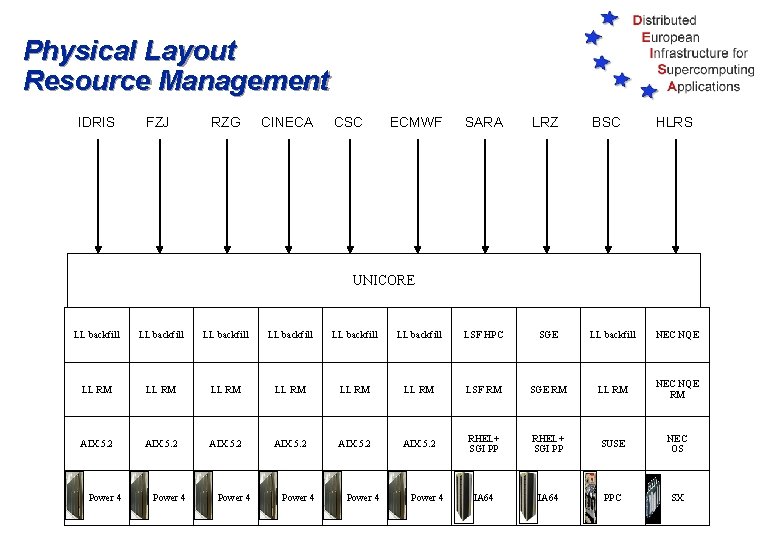

Physical Layout: Resource Management

Sites: IDRIS, FZJ, RZG, CINECA, CSC, ECMWF, SARA, LRZ, BSC, HLRS

Diagram layers, from top to bottom:

- UNICORE (across all sites)
- Schedulers: LL backfill, LSF HPC, SGE, NEC NQE
- Resource managers: LL RM, LSF RM, SGE RM, NEC NQE RM
- Operating systems: AIX 5.2, RHEL + SGI PP, SUSE, NEC OS
- Architectures: Power4, IA64, PPC, SX

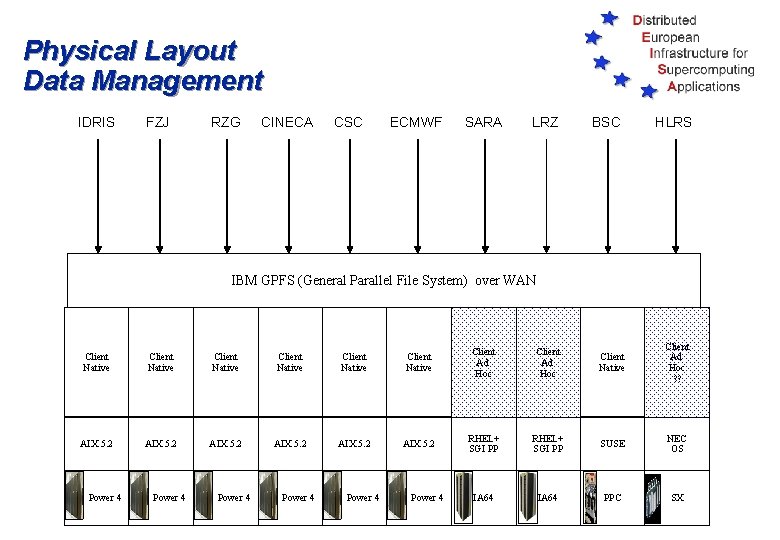

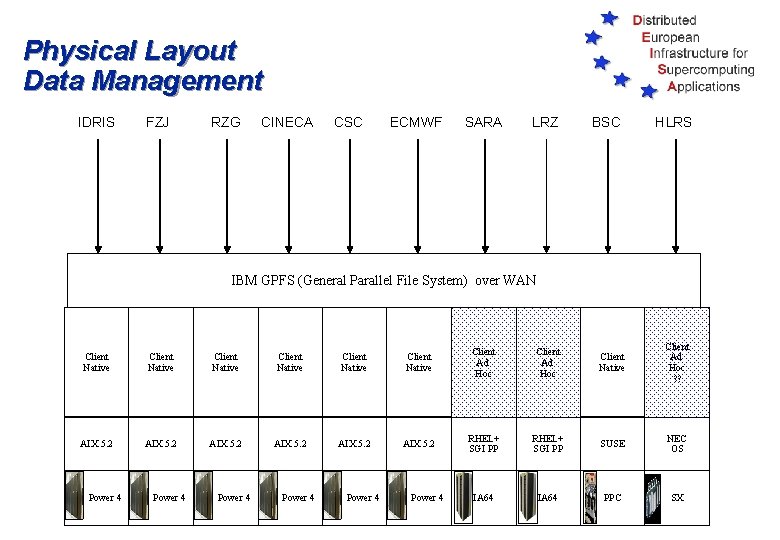

Physical Layout: Data Management

Sites: IDRIS, FZJ, RZG, CINECA, CSC, ECMWF, SARA, LRZ, BSC, HLRS

Diagram layers, from top to bottom:

- IBM GPFS (General Parallel File System) over WAN
- GPFS clients: native client, ad hoc client, or still open (?)
- Operating systems: AIX 5.2, RHEL + SGI PP, SUSE, NEC OS
- Architectures: Power4, IA64, PPC, SX

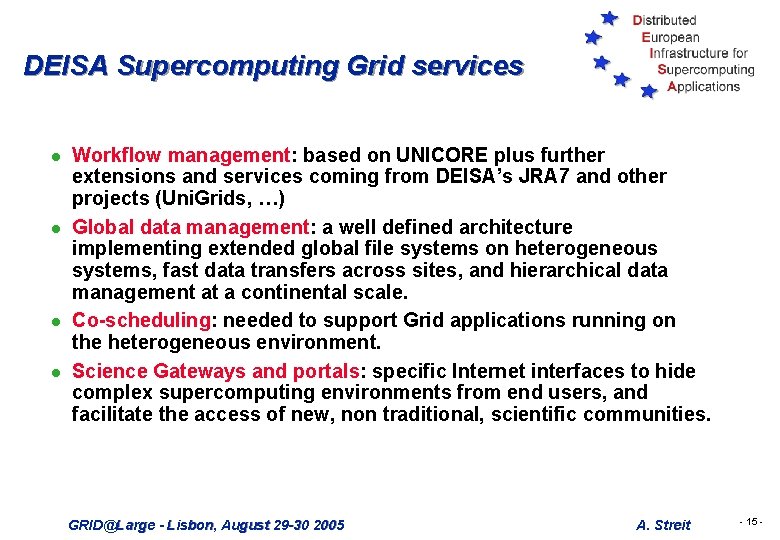

DEISA Supercomputing Grid services

- Workflow management: based on UNICORE plus further extensions and services coming from DEISA's JRA7 and other projects (UniGrids, …)
- Global data management: a well-defined architecture implementing extended global file systems on heterogeneous systems, fast data transfers across sites, and hierarchical data management at a continental scale.
- Co-scheduling: needed to support Grid applications running on the heterogeneous environment.
- Science Gateways and portals: specific Internet interfaces that hide complex supercomputing environments from end users and facilitate access for new, non-traditional scientific communities.

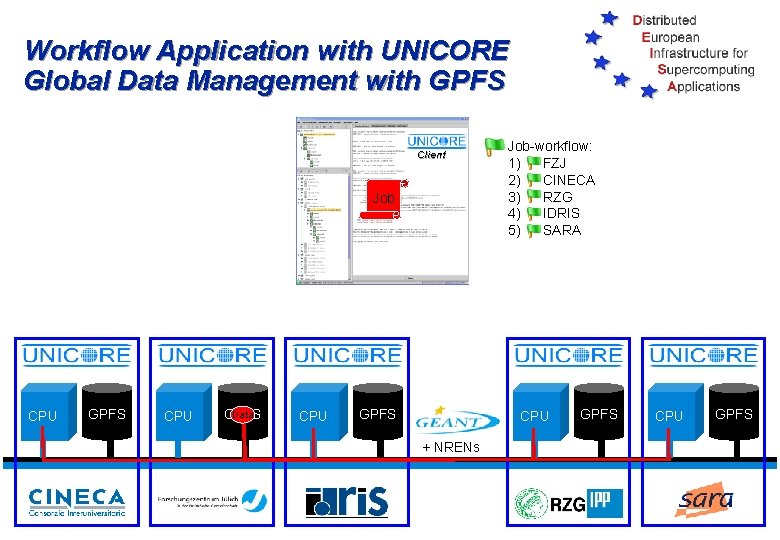

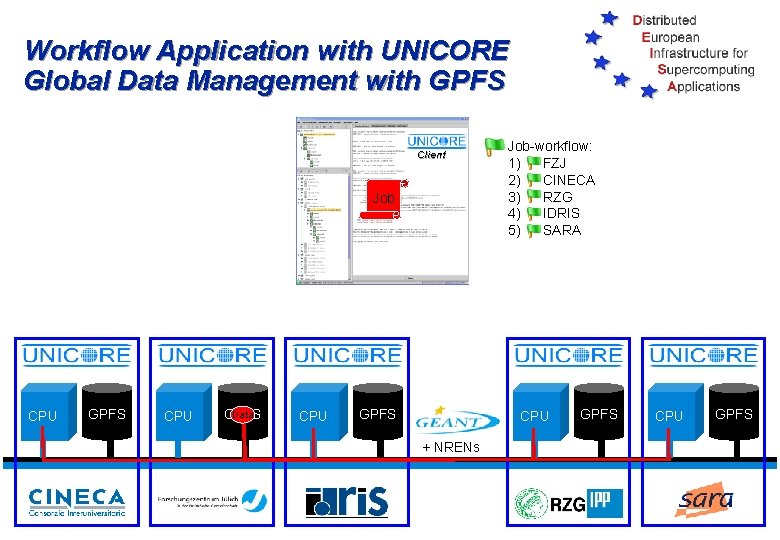

Workflow Application with UNICORE, Global Data Management with GPFS

Job workflow:

1. FZJ
2. CINECA
3. RZG
4. IDRIS
5. SARA

Diagram labels: Client, Job, Data, CPU and GPFS at each site, GPFS + NRENs
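The idea behind this slide, a job that hops from site to site while its data stays in one global file system, can be sketched as follows. This is a toy model under stated assumptions: the dictionary `shared_fs` stands in for the GPFS namespace, `run_workflow` and the `job/...` paths are hypothetical, and no UNICORE or GPFS API is called. Only the site order is taken from the slide.

```python
SITES = ["FZJ", "CINECA", "RZG", "IDRIS", "SARA"]  # order from the slide


def run_workflow(sites, shared_fs, input_path="job/input"):
    """Run one step per site. Each step reads the previous step's output
    from the shared namespace, so no data is copied between sites."""
    current = input_path
    trace = []
    for step, site in enumerate(sites, start=1):
        data = shared_fs[current]              # read via the global file system
        out_path = f"job/step{step}"
        shared_fs[out_path] = data + [site]    # stand-in for the real computation
        trace.append((step, site, current, out_path))
        current = out_path
    return current, trace


shared_fs = {"job/input": []}                  # hypothetical shared namespace
final_path, trace = run_workflow(SITES, shared_fs)
print(final_path)             # job/step5
print(shared_fs[final_path])  # ['FZJ', 'CINECA', 'RZG', 'IDRIS', 'SARA']
```

Each step only needs the path of its predecessor's output, which is the property a global file system gives the workflow.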

Resource Management Information System (RMIS)

- Deliver up-to-date and complete resource management information about the grid
- Provide relevant information to system administrators at remote sites and to end users
- Our approach:
  - performed an implementation-independent system analysis
  - attempted to model the DEISA distributed supercomputer platform designed to operate the grid
  - identified the resource management part as a sub-system that needs to interface with other sub-systems to get relevant information
  - other sub-systems use external tools (monitoring tools, databases and batch systems) with which we need to interface

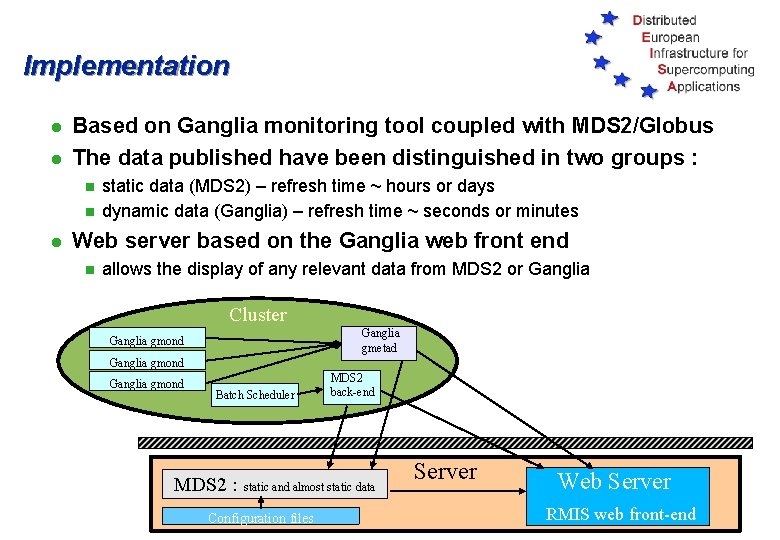

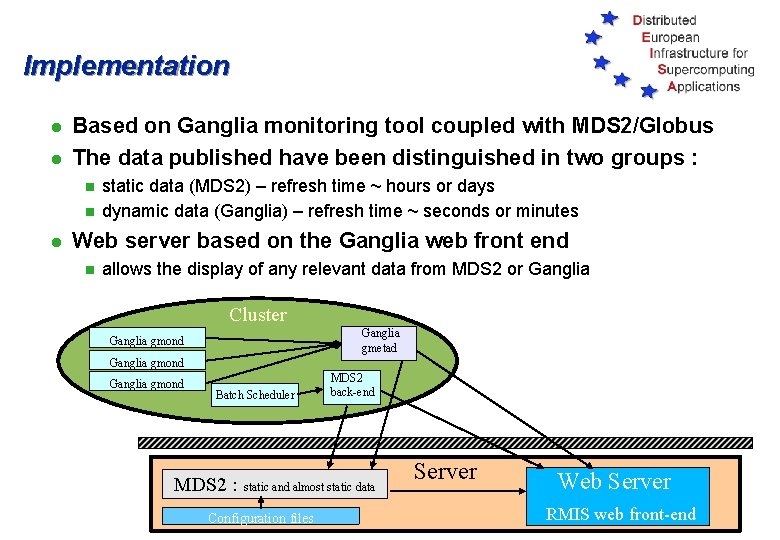

Implementation

- Based on the Ganglia monitoring tool coupled with MDS2/Globus
- The published data are divided into two groups:
  - static data (MDS2): refresh time of hours or days
  - dynamic data (Ganglia): refresh time of seconds or minutes
- Web server based on the Ganglia web front end
  - allows the display of any relevant data from MDS2 or Ganglia

Diagram labels: Cluster, Ganglia gmond, Ganglia gmetad, Batch Scheduler, MDS2 back-end, Configuration files, Firewall, Server, Web Server, RMIS web front-end. MDS2 holds static and almost static data.
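The split between slowly and quickly refreshed data can be illustrated with a small cache that re-fetches a value only once it is older than a configured age. This is a sketch, not the RMIS code: `RefreshCache` is a hypothetical class, the fetch functions return made-up values, and neither Ganglia nor MDS2 is actually queried.

```python
class RefreshCache:
    """Cache one value and re-fetch it only when it is older than max_age_s."""

    def __init__(self, fetch, max_age_s):
        self.fetch = fetch            # callable that produces a fresh value
        self.max_age_s = max_age_s
        self._value = None
        self._fetched_at = None
        self.fetch_count = 0

    def get(self, now_s):
        stale = (self._fetched_at is None
                 or now_s - self._fetched_at >= self.max_age_s)
        if stale:
            self._value = self.fetch()
            self._fetched_at = now_s
            self.fetch_count += 1
        return self._value


# Made-up example values: static data refreshed hourly, dynamic every 30 s.
static_info = RefreshCache(lambda: {"cpus": 512}, max_age_s=3600)
dynamic_info = RefreshCache(lambda: {"load": 0.75}, max_age_s=30)

for now_s in range(0, 120, 10):       # a front end polling every 10 s
    static_info.get(now_s)
    dynamic_info.get(now_s)

print(static_info.fetch_count, dynamic_info.fetch_count)  # 1 4
```

Over two minutes of polling, the static source is read once and the dynamic source four times, which is the kind of difference in load that motivates keeping the two groups apart.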

Portals (Science Gateways)

- Same concept as TeraGrid's Science Gateways
- Needed to enhance the outreach of supercomputing infrastructures
- Hiding complex supercomputing environments from end users, providing discipline-specific tools and support, and in some cases moving towards community allocations
- DEISA has already done work on Genomics and Material Sciences portals
- Intense brainstorming on the design of a global strategy, if possible interoperable with TeraGrid's Science Gateways

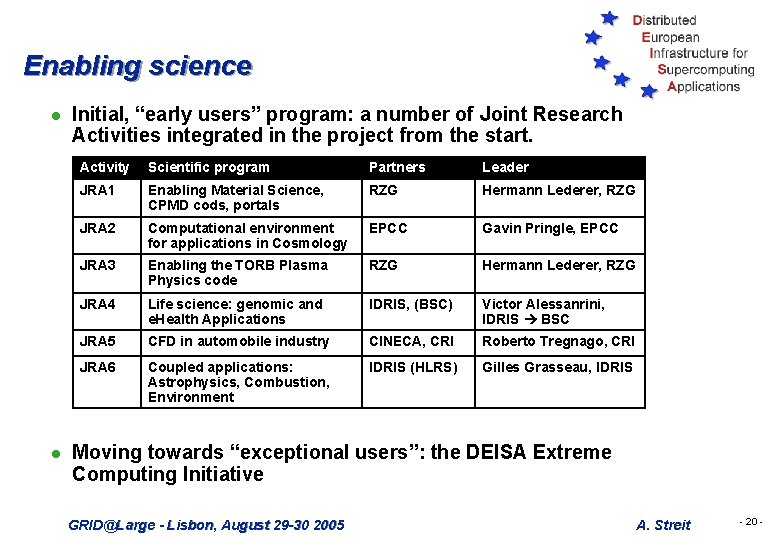

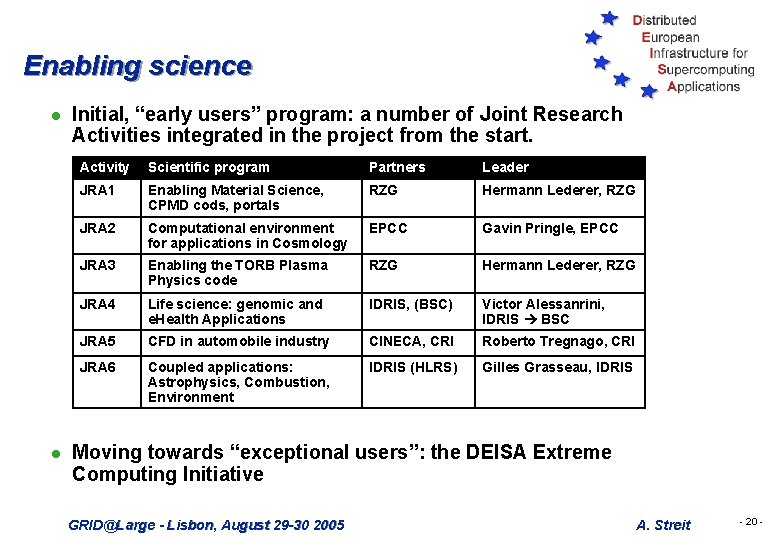

Enabling science

Initial “early users” program: a number of Joint Research Activities integrated in the project from the start.

| Activity | Scientific program | Partners | Leader |
| --- | --- | --- | --- |
| JRA1 | Enabling Material Science, CPMD code, portals | RZG | Hermann Lederer, RZG |
| JRA2 | Computational environment for applications in Cosmology | EPCC | Gavin Pringle, EPCC |
| JRA3 | Enabling the TORB Plasma Physics code | RZG | Hermann Lederer, RZG |
| JRA4 | Life science: genomic and eHealth applications | IDRIS, (BSC) | Victor Alessandrini, IDRIS, BSC |
| JRA5 | CFD in automobile industry | CINECA, CRI | Roberto Tregnago, CRI |
| JRA6 | Coupled applications: Astrophysics, Combustion, Environment | IDRIS (HLRS) | Gilles Grasseau, IDRIS |

Moving towards “exceptional users”: the DEISA Extreme Computing Initiative

The Extreme Computing Initiative

- Identification, deployment and operation of a number of “flagship” applications in selected areas of science and technology
- Applications must rely on the DEISA Supercomputing Grid services (application profiles have been clearly defined). They will benefit from exceptional resources from the DEISA pool.
- Applications are selected on the basis of scientific excellence, innovation potential, and relevance criteria.
- European call for proposals: April 1 to May 30, 2005

Evaluation and allocation of DEISA resources

- National evaluation committees evaluate the proposals and determine priorities.
- On the basis of this information, the DEISA consortium examines how the applications map to the resources available in the DEISA pool, and negotiates internally how the resources will be allocated and the final priorities for projects.
- Exceptional DEISA resources will be allocated, as with large scientific instruments, in well-defined time windows (to be negotiated with the users).

DEISA Extreme Computing Initiative (DECI)

- Call for Expressions of Interest / Proposals in April and May 2005
- 50 proposals submitted
- Requested CPU time: 32 million CPU-hr
- European countries involved:
  - Finland, France, Germany, Greece, Hungary, Italy, Netherlands, Russia, Spain, Sweden, Switzerland, UK
- Proposals by area:
  - Materials Science, Quantum Chemistry, Quantum Computing: 16
  - Astrophysics (Cosmology, Stars, Solar Sys.): 13
  - Life Sciences, Biophysics, Bioinformatics: 8
  - CFD, Fluid Mechanics, Combustion: 5
  - Earth Sciences, Climate Research: 4
  - Plasma Physics: 2
  - QCD, Particle Physics, Nuclear Physics: 2
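As a quick consistency check on these figures, the per-area counts can be summed and turned into shares. The numbers are the ones on the slide; the variable names are illustrative, and the average per proposal is simple arithmetic on the slide's totals, not a figure DEISA reported.

```python
proposals_by_area = {
    "Materials Science, Quantum Chemistry, Quantum Computing": 16,
    "Astrophysics": 13,
    "Life Sciences, Biophysics, Bioinformatics": 8,
    "CFD, Fluid Mechanics, Combustion": 5,
    "Earth Sciences, Climate Research": 4,
    "Plasma Physics": 2,
    "QCD, Particle Physics, Nuclear Physics": 2,
}

total = sum(proposals_by_area.values())
print(total)  # 50, matching the 50 proposals submitted

# Share of each area in percent of all proposals.
shares = {area: round(100 * n / total) for area, n in proposals_by_area.items()}
for area, pct in shares.items():
    print(f"{pct:3d}%  {area}")

# Average request per proposal, derived from the 32 million CPU-hr total.
requested_cpu_hours = 32_000_000
average_request = requested_cpu_hours / total
print(average_request)  # 640000.0
```

The seven areas add up to exactly the 50 submitted proposals, and the two largest areas together account for 58% of them.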

Conclusions

- DEISA adopts Grid technologies to integrate national supercomputing infrastructures and to provide a European Supercomputing Service.
- Service activities are supported by the coordinated action of the national centers' staff. DEISA operates as a virtual European supercomputing centre.
- The big challenge we are facing is enabling new, first-class computational science.
- Integrating leading supercomputing platforms with Grid technologies creates a new research dimension in Europe.

October 11-12, 2005, ETSI Headquarters, Sophia Antipolis, France

http://summit.unicore.org/2005

In conjunction with Grids@work: Middleware, Components, Users, Contest and Plugtests

http://www.etsi.org/plugtests/GRID.htm

Achim streit

Achim streit Cern theory

Cern theory James moody sociology

James moody sociology Achim rettinger

Achim rettinger Achim stahl

Achim stahl Achim schweikard

Achim schweikard Dr stanislaw landau

Dr stanislaw landau Jlich

Jlich Jlich

Jlich Conoid sturm

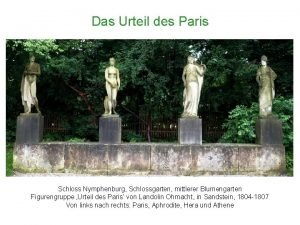

Conoid sturm Schiedsrichter im streit um den zankapfel

Schiedsrichter im streit um den zankapfel Streit der körperteile autor

Streit der körperteile autor Ies suel fuengirola

Ies suel fuengirola Organizational chart logistics

Organizational chart logistics Seminole breechcloth

Seminole breechcloth [email protected]

[email protected] Healthy eating | esol nexus (britishcouncil.org)

Healthy eating | esol nexus (britishcouncil.org) Adb org chart

Adb org chart Http //www.python.org/

Http //www.python.org/ Www.nutrition.org.uk

Www.nutrition.org.uk Cvv

Cvv Webdhis kzn

Webdhis kzn Alcreadyness

Alcreadyness Agendaweb.org numbers

Agendaweb.org numbers My inprs

My inprs Iso.org

Iso.org