
- Slides: 26

Decision Making

Outline

- Maximum Expected Utility (MEU)
- Decision network
- Making decisions

Russell & Norvig, chapter 16

Acting Under Uncertainty

- With no uncertainty, the rational decision is to pick the action with the “best” outcome
  - Two actions: #1 leads to a great outcome, #2 leads to a good outcome
  - It is only rational to pick #1
  - This assumes the outcome is 100% certain
- With uncertainty, it is a little harder
  - Two actions: #1 has a 1% probability of leading to a great outcome, #2 has a 90% probability of leading to a good outcome
  - What is the rational decision?

Acting Under Uncertainty

- Maximum Expected Utility (MEU): pick the action that leads to the best outcome, averaged over all possible outcomes of the action
- How do we compute the MEU? It is easy once we know the probability of each outcome and its utility

Utility

- Value of a state or outcome
- Computed by a utility function
  - $U(S)$ = utility of state $S$
  - $U(S) \in [0, 1]$ if normalized

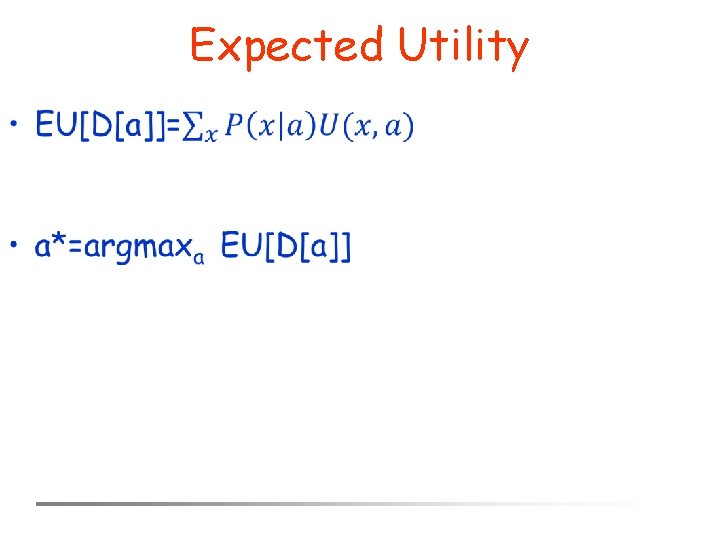

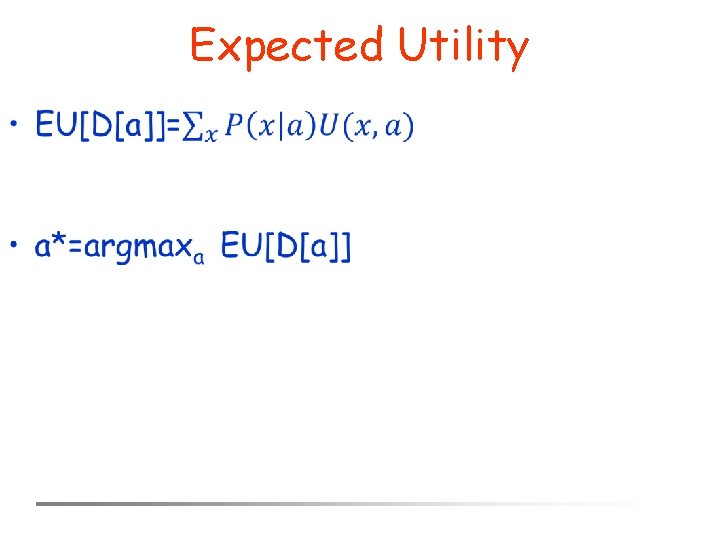

Expected Utility

- Sum of the utility of each possible outcome times the probability of that outcome
  - Known evidence $E$ about the world
  - Action $A$ has possible outcomes indexed by $i$, each with probability $P(\mathrm{Result}_i(A) \mid \mathrm{Do}(A), E)$
  - Utility of each outcome is $U(\mathrm{Result}_i(A))$
- $U$ is an evaluation function of the state of the world given $\mathrm{Result}_i(A)$
- $EU(A \mid E) = \sum_i P(\mathrm{Result}_i(A) \mid \mathrm{Do}(A), E) \, U(\mathrm{Result}_i(A))$
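The sum on this slide can be sketched in a few lines of Python. This is a minimal illustration, not part of the original deck: the function name `expected_utility` and the three (probability, utility) pairs are hypothetical, chosen only to show the arithmetic.

```python
def expected_utility(outcomes):
    """Return EU(A | E) for a single action A.

    `outcomes` is a list of (probability, utility) pairs, one pair per
    possible result of the action. The probabilities must sum to 1.
    """
    total_p = sum(p for p, _ in outcomes)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError(f"probabilities sum to {total_p}, expected 1")
    # Weight each outcome's utility by its probability and add them up.
    return sum(p * u for p, u in outcomes)


# Hypothetical action with three possible results (illustrative numbers).
eu = expected_utility([(0.2, 1.0), (0.5, 0.6), (0.3, 0.0)])
print(round(eu, 3))
```

The probability check is a design choice of this sketch: a distribution that does not sum to 1 usually signals a missing outcome, which would silently bias the result.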

Maximum Expected Utility

- List all possible actions $A_j$
- For each action, list all possible outcomes $\mathrm{Result}_i(A_j)$
- Compute $EU(A_j \mid E)$
- Pick the action that maximises EU
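The four steps can be sketched as follows, using the two actions from the Acting Under Uncertainty slide. The slides give only the probabilities (1% and 90%); the utilities assigned here to "great" (1.0), "good" (0.6) and any other outcome (0.0) are assumptions made for illustration, as are the names `best_action`, `action_1` and `action_2`.

```python
def expected_utility(outcomes):
    """EU of one action from its (probability, utility) pairs."""
    return sum(p * u for p, u in outcomes)


def best_action(actions):
    """Return (name, EU) of the action with maximum expected utility.

    `actions` maps an action name to its list of (probability, utility) pairs.
    """
    scored = {name: expected_utility(outs) for name, outs in actions.items()}
    name = max(scored, key=scored.get)
    return name, scored[name]


# Assumed utilities: great = 1.0, good = 0.6, anything else = 0.0.
actions = {
    "action_1": [(0.01, 1.0), (0.99, 0.0)],  # 1% chance of a great outcome
    "action_2": [(0.90, 0.6), (0.10, 0.0)],  # 90% chance of a good outcome
}

name, eu = best_action(actions)
print(name, round(eu, 2))
```

Under these assumed utilities the second action wins, but the answer depends on how much better "great" is than "good": if "great" were worth more than 54 times "good", the first action would be preferred.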

Utility of Money

- Use money as a measure of utility?
- Example
  - A1 = 100% chance of $1M
  - A2 = 50% chance of $3M, otherwise nothing
  - EU(A2) = $1.5M > $1M = EU(A1)
- Is that rational?

Utility of Money

- The utility/money relationship is logarithmic, not linear
- Example
  - EU(A2) = 0.45 < 0.46 = EU(A1)
- Insurance
  - EU(paying) = −U(value of premium)
  - EU(not paying) = U(value of premium) − U(value of house) × P(losing house)
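A short sketch of why a logarithmic utility reverses the preference between the two lotteries. The slides do not state the utility function behind the values 0.45 and 0.46, so this example does not reproduce them; it assumes a natural-log utility of total wealth and a hypothetical starting wealth of $100,000 (`START_WEALTH`).

```python
import math

START_WEALTH = 100_000  # hypothetical current wealth in dollars (assumption)


def log_utility(wealth):
    """Assumed utility of total wealth: natural logarithm."""
    return math.log(wealth)


def expected_money(lottery):
    """Expected monetary gain of a list of (probability, gain) pairs."""
    return sum(p * gain for p, gain in lottery)


def expected_utility(lottery):
    """Expected log-utility of final wealth after the lottery pays out."""
    return sum(p * log_utility(START_WEALTH + gain) for p, gain in lottery)


A1 = [(1.0, 1_000_000)]               # $1M for sure
A2 = [(0.5, 3_000_000), (0.5, 0)]     # 50% chance of $3M, otherwise nothing

print(expected_money(A1), expected_money(A2))
print(round(expected_utility(A1), 3), round(expected_utility(A2), 3))
```

In money terms A2 has the higher expectation, while in log-utility terms A1 does: the concave curve values the first million more than the second and third combined.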

Decision Network

- Our agent makes decisions given evidence
  - Observed variables and conditional probability tables of hidden variables
- Similar to conditional probability
  - Probability of variables given other variables
  - Relationships represented graphically in a Bayesian network
- Could we make a similar graph here?

Decision Network

- Sometimes called an influence diagram
- Like a Bayesian network for decision making
  - Start with the variables of the problem
  - Add decision variables that the agent controls
  - Add a utility variable that specifies how good each state is

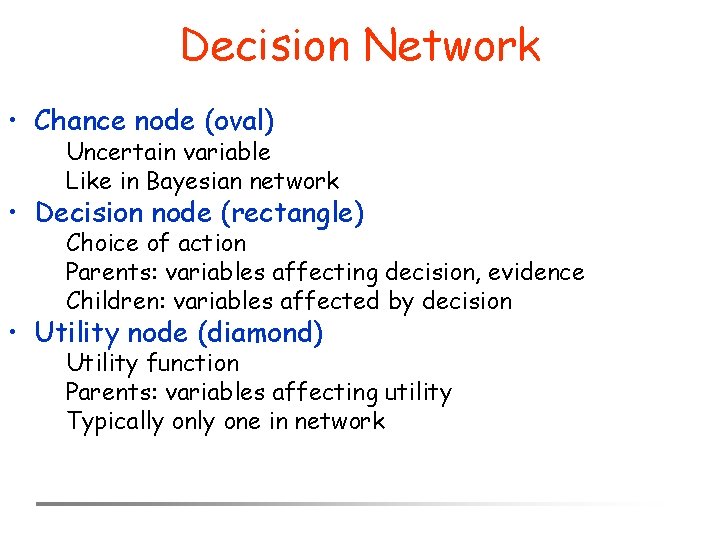

Decision Network

- Chance node (oval)
  - Uncertain variable
  - Like in a Bayesian network
- Decision node (rectangle)
  - Choice of action
  - Parents: variables affecting the decision, evidence
  - Children: variables affected by the decision
- Utility node (diamond)
  - Utility function
  - Parents: variables affecting utility
  - Typically one per network

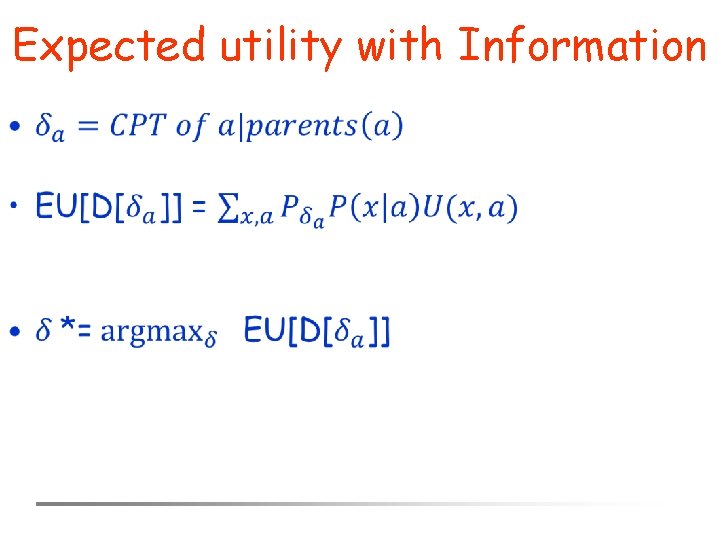

Expected Utility

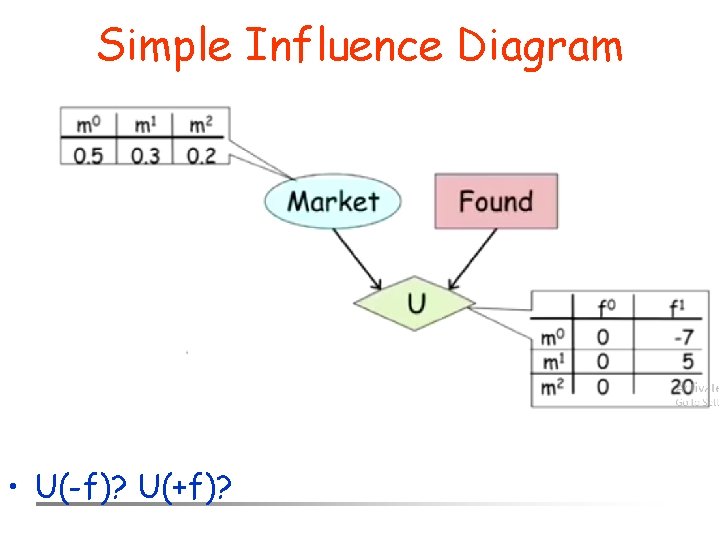

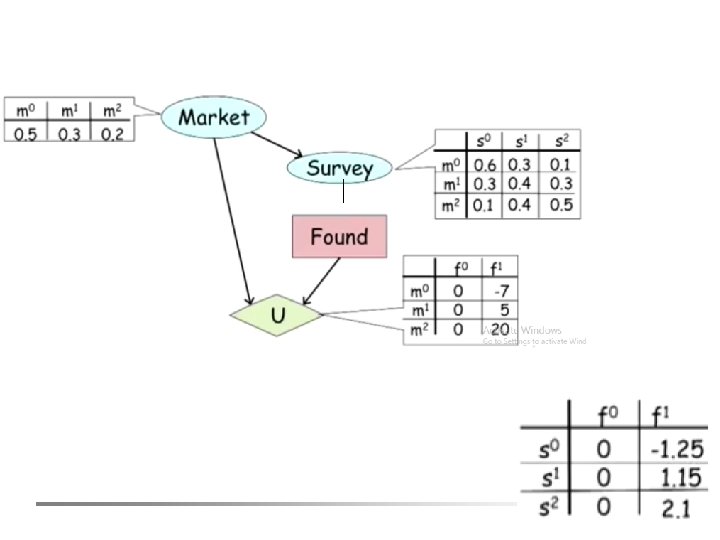

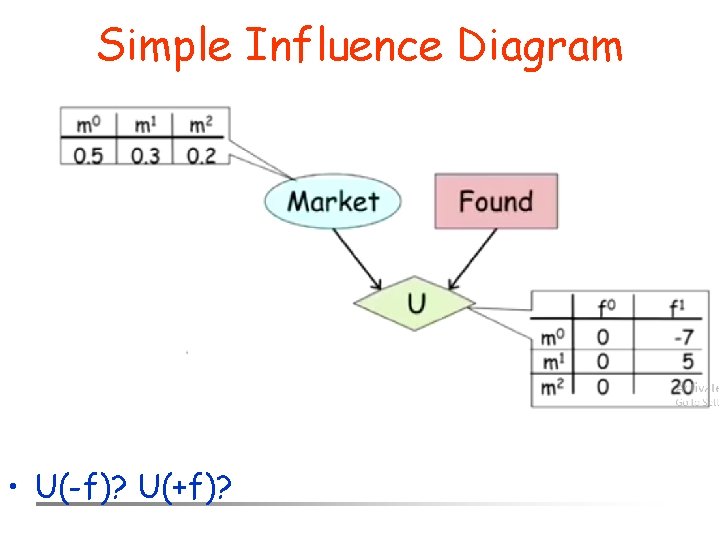

Simple Influence Diagram

- U(−f)? U(+f)?

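Decision Network Example

Nodes: Study (S), Lucky (L), PassExam (E), Win (W), Happiness (H)

P(+l) = 0.75

| L | P(+w \| L) |
|---|------------|
| F | 0.01       |
| T | 0.4        |

| L | S | P(+e \| L, S) |
|---|---|---------------|
| F | F | 0.01          |
| T | F | 0.5           |
| F | T | 0.9           |
| T | T | 0.99          |

| W | E | H    |
|---|---|------|
| F | F | 0.2  |
| T | F | 0.6  |
| F | T | 0.8  |
| T | T | 0.99 |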

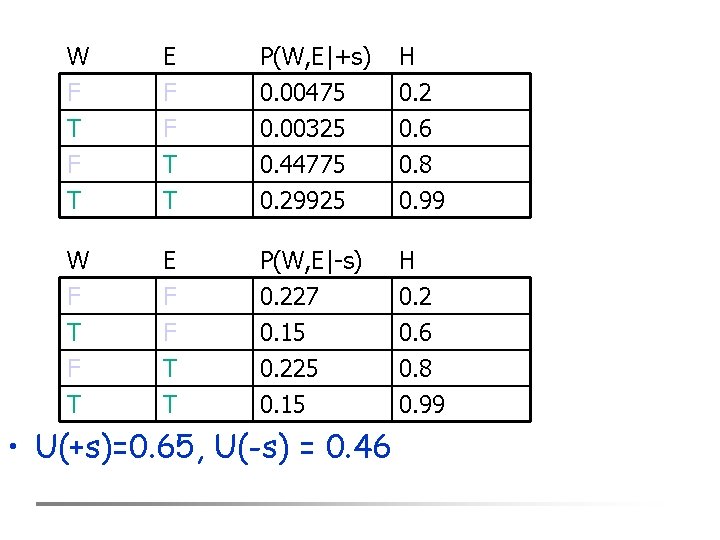

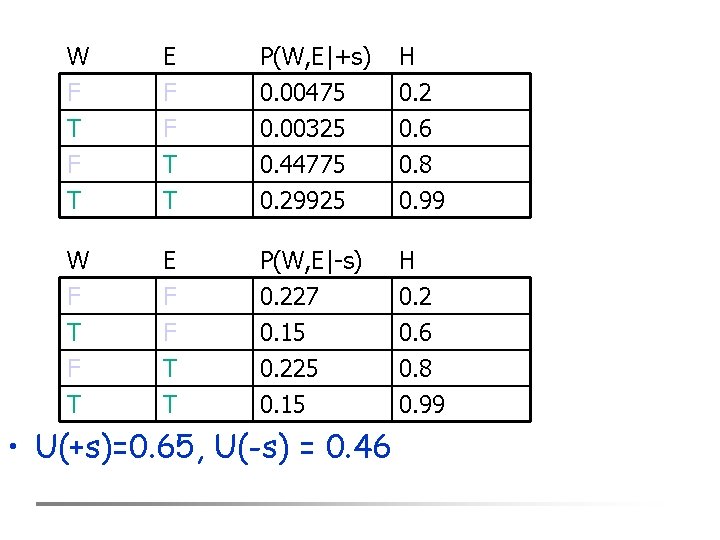

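Summing out L gives the joint distribution of W and E under each decision; H is the utility of each outcome.

| W | E | P(W, E \| +s) | P(W, E \| −s) | H    |
|---|---|---------------|---------------|------|
| F | F | 0.02925       | 0.470025      | 0.2  |
| T | F | 0.00325       | 0.152475      | 0.6  |
| F | T | 0.66825       | 0.227475      | 0.8  |
| T | T | 0.29925       | 0.150025      | 0.99 |

- EU(+s) ≈ 0.84, EU(−s) ≈ 0.52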
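The example can be checked by recomputing the joint P(W, E | S) from the conditional probability tables and then weighting each outcome by its Happiness value. This is a sketch under one stated assumption: the row ordering used to read the flattened slide tables (L/S and W/E rows in the order FF, TF, FT, TT). The constant and function names are hypothetical.

```python
from itertools import product

P_LUCKY = 0.75                       # P(+l)
P_WIN = {True: 0.4, False: 0.01}     # P(+w | L), keyed by lucky
# P(+e | L, S), keyed by (lucky, study)
P_PASS = {(False, False): 0.01, (True, False): 0.5,
          (False, True): 0.9, (True, True): 0.99}
# Utility H(W, E), keyed by (win, pass_exam)
HAPPINESS = {(False, False): 0.2, (True, False): 0.6,
             (False, True): 0.8, (True, True): 0.99}


def prob(p_true, value):
    """Probability that a Boolean variable equals `value`, given P(true)."""
    return p_true if value else 1.0 - p_true


def joint_win_pass(study):
    """P(W, E | S=study), obtained by summing out Lucky."""
    joint = {}
    for win, passed in product([False, True], repeat=2):
        joint[(win, passed)] = sum(
            prob(P_LUCKY, lucky)
            * prob(P_WIN[lucky], win)
            * prob(P_PASS[(lucky, study)], passed)
            for lucky in [False, True]
        )
    return joint


def expected_utility(study):
    """EU(S=study) = sum over (W, E) of P(W, E | S) * H(W, E)."""
    joint = joint_win_pass(study)
    return sum(p * HAPPINESS[outcome] for outcome, p in joint.items())


best = max([True, False], key=expected_utility)
print(f"EU(+s) = {expected_utility(True):.4f}")
print(f"EU(-s) = {expected_utility(False):.4f}")
print("study" if best else "do not study")
```

A useful sanity check when doing this by hand is that each joint table sums to 1; under this reading of the tables, studying has the higher expected utility.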

More Complex Influence Diagram

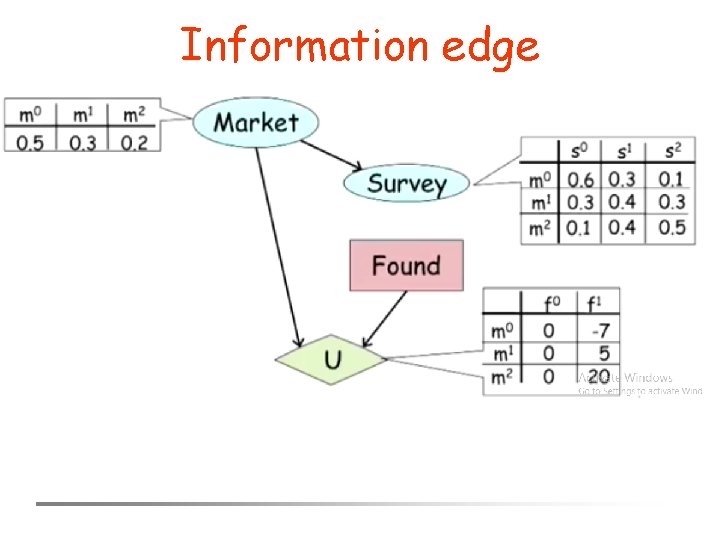

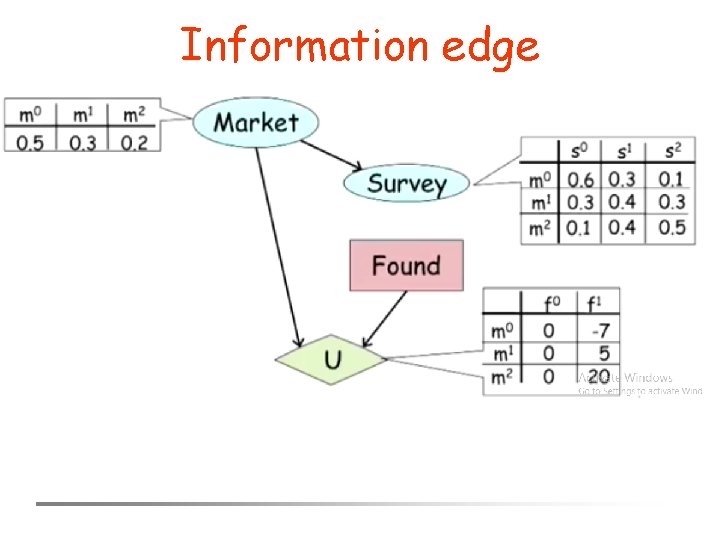

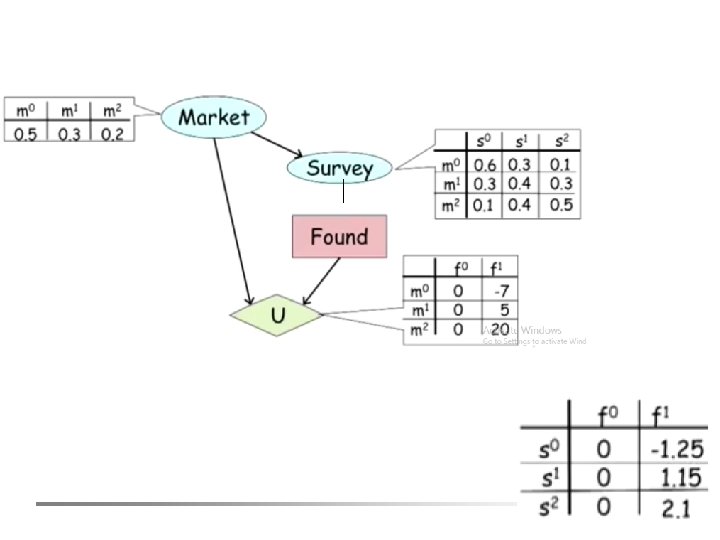

Information edge

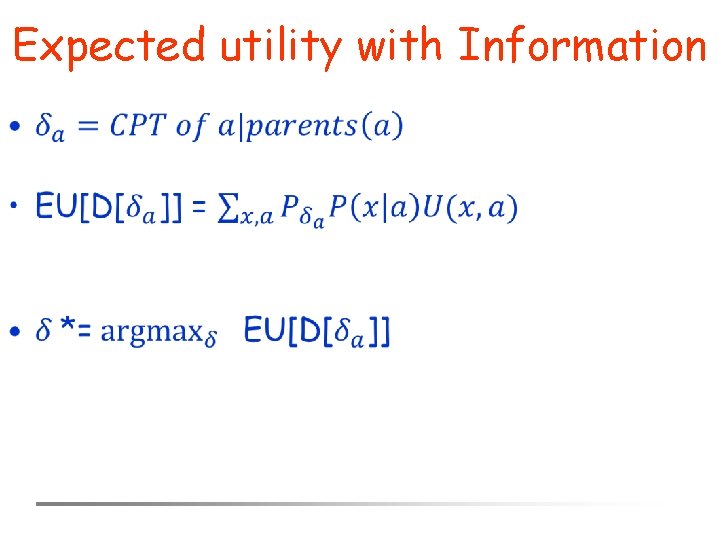

Expected utility with Information

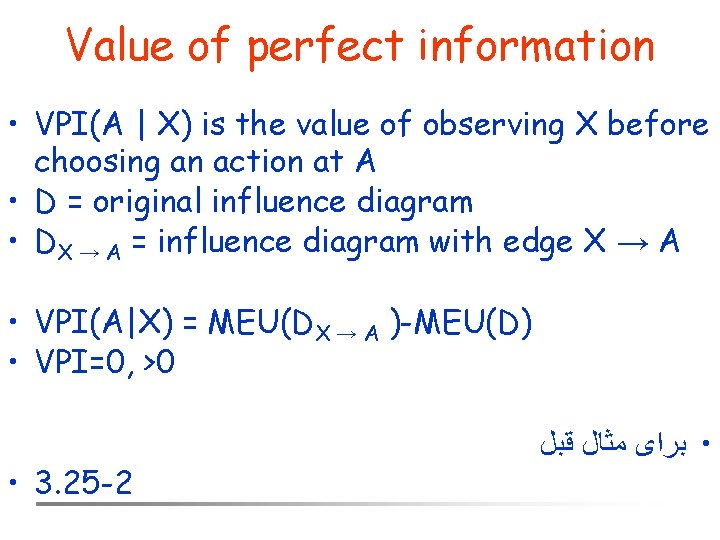

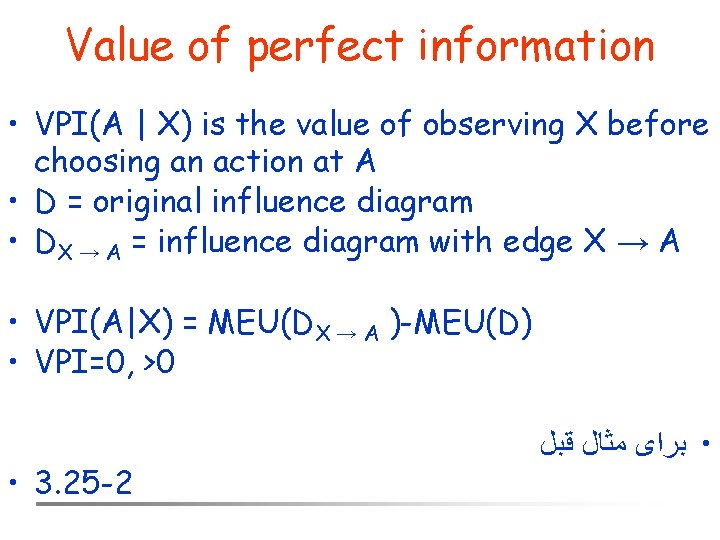

Value of perfect information

- $VPI(A \mid X)$ is the value of observing $X$ before choosing an action at $A$
- $D$ = original influence diagram
- $D_{X \to A}$ = influence diagram with an added edge $X \to A$
- $VPI(A \mid X) = MEU(D_{X \to A}) - MEU(D)$
- $VPI \ge 0$: it is either zero or positive
- For the previous example: $VPI = 3.25 - 2 = 1.25$
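The difference MEU(D with edge X → A) − MEU(D) can be sketched for the simplest case: one chance variable X that the action does not influence, and a utility that depends only on the action and on X. Both restrictions are assumptions of this sketch, and the weather/umbrella numbers are hypothetical; they are not the example from the slides.

```python
def meu_without_observation(p_x, utility, actions):
    """max over a of sum_x P(x) U(a, x): the agent acts before seeing X."""
    return max(
        sum(p * utility[(a, x)] for x, p in p_x.items())
        for a in actions
    )


def meu_with_observation(p_x, utility, actions):
    """sum_x P(x) max_a U(a, x): the agent sees X, then picks the best action."""
    return sum(
        p * max(utility[(a, x)] for a in actions)
        for x, p in p_x.items()
    )


def vpi(p_x, utility, actions):
    """Value of perfect information about X for this decision."""
    return (meu_with_observation(p_x, utility, actions)
            - meu_without_observation(p_x, utility, actions))


# Hypothetical example: decide whether to take an umbrella.
p_weather = {"sun": 0.7, "rain": 0.3}
actions = ["leave", "take"]
utility = {("leave", "sun"): 100, ("leave", "rain"): 0,
           ("take", "sun"): 20, ("take", "rain"): 70}

print(round(vpi(p_weather, utility, actions), 6))
```

Because the maximum of an average never exceeds the average of the maxima, this quantity cannot be negative, which matches the non-negativity property stated on the slide.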

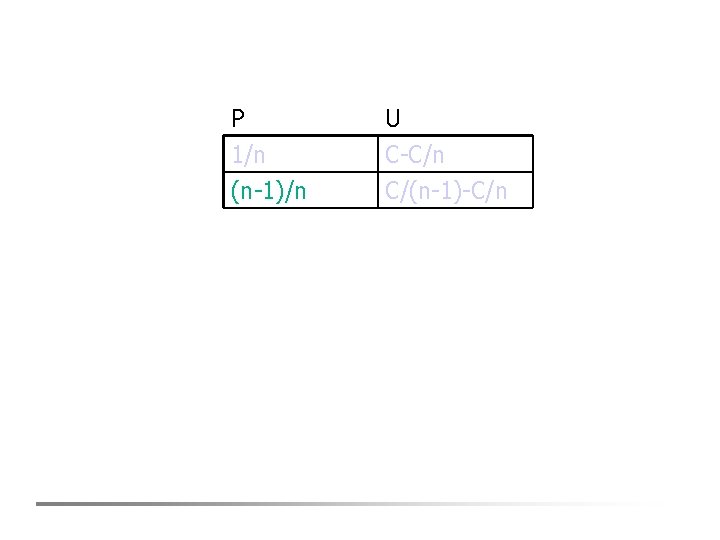

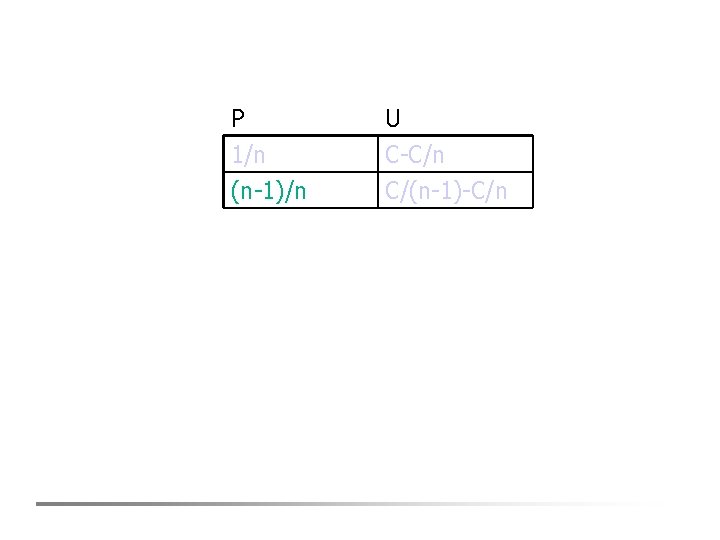

- An oil company is hoping to buy one of n indistinguishable blocks of ocean-drilling rights.
- Assume further that exactly one of the blocks contains oil worth C dollars, while the others are worthless.
- The asking price of each block is C/n dollars.
- Now suppose that a seismologist offers the company the results of a survey of block number 3. How much should the company be willing to pay for the information?

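| Survey result for block 3 | P       | U               |
|---------------------------|---------|-----------------|
| Oil                       | 1/n     | C − C/n         |
| No oil                    | (n−1)/n | C/(n−1) − C/n   |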
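The two cases can be combined into a single expected value. The sketch below uses exact fractions and assumes a risk-neutral company (utility equals expected profit), that the company buys block 3 when the survey finds oil and one of the other blocks otherwise, and that n is at least 2. The function name `oil_survey_vpi` is hypothetical.

```python
from fractions import Fraction


def oil_survey_vpi(n, c):
    """Value of a perfect survey of one block out of n, oil worth c dollars."""
    if n < 2:
        raise ValueError("need at least two blocks")
    c = Fraction(c)
    price = c / n                       # asking price of each block

    # Without the survey, every block has expected worth c/n, so buying
    # yields expected profit 0, the same as not buying.
    meu_without = max(Fraction(0), c / n - price)

    p_oil = Fraction(1, n)              # survey finds oil in block 3
    profit_if_oil = c - price           # buy block 3
    profit_if_no_oil = c / (n - 1) - price   # buy one of the other blocks

    meu_with = p_oil * profit_if_oil + (1 - p_oil) * profit_if_no_oil
    return meu_with - meu_without


print(oil_survey_vpi(10, 1000))
```

Expanding the sum symbolically gives (1/n)(C − C/n) + ((n−1)/n)(C/(n−1) − C/n) = C/n, so under these assumptions the information is worth as much as a block itself.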

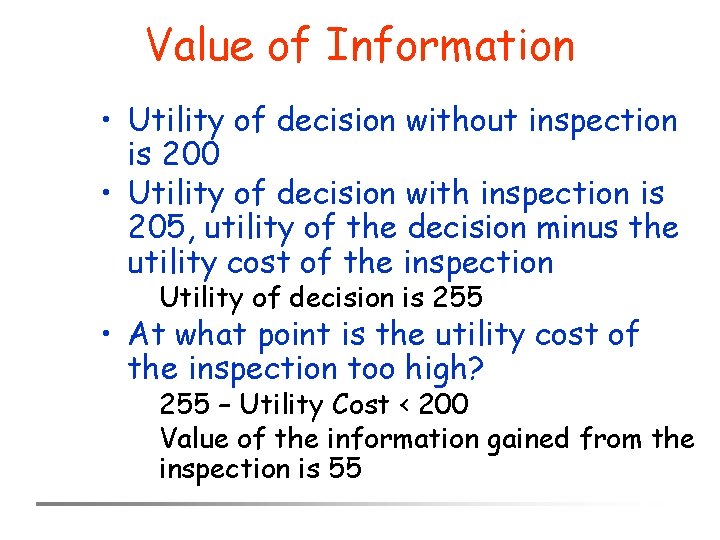

Value of Information

- Utility of the decision without inspection is 200
- Utility of the decision with inspection is 205: the utility of the decision itself (255) minus the utility cost of the inspection (50)
- At what point is the utility cost of the inspection too high?
  - When 255 − utility cost < 200, that is, when the cost exceeds 55
- Value of the information gained from the inspection is 55
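The threshold argument can be written out as a small check. The three numbers come from this slide; the constant and function names are hypothetical.

```python
U_WITHOUT_INSPECTION = 200   # best decision made without inspecting
U_WITH_INSPECTION = 255      # best decision after inspecting, before cost


def value_of_information():
    """Gain in utility from deciding with the inspection result in hand."""
    return U_WITH_INSPECTION - U_WITHOUT_INSPECTION


def net_utility(cost):
    """Utility of inspecting once the inspection cost is subtracted."""
    return U_WITH_INSPECTION - cost


def worth_inspecting(cost):
    """Inspect only if the net utility beats deciding without inspection."""
    return net_utility(cost) > U_WITHOUT_INSPECTION


print(value_of_information(), net_utility(50), worth_inspecting(60))
```

The inspection pays for itself exactly when its cost is below the value of the information it provides.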

Value of Information

- Information has value if
  - It causes a change in the decision
  - The new decision has higher utility than the old one
- The value is
  - Non-negative
  - Zero for irrelevant facts
  - Zero for information already known