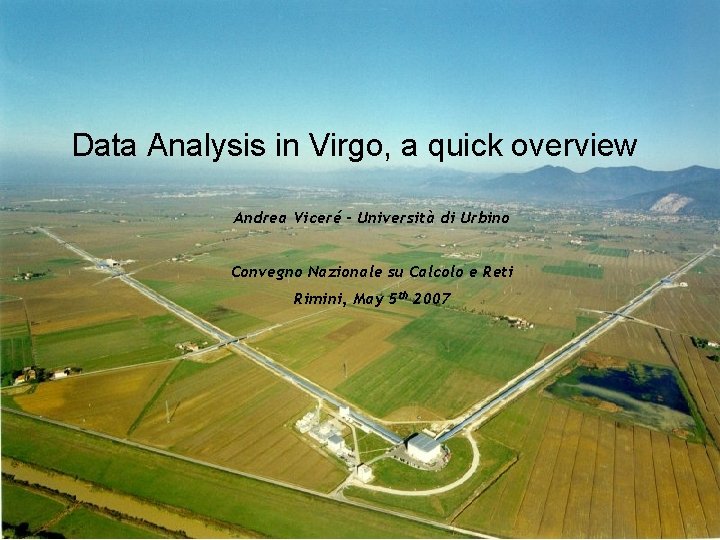


Data Analysis in Virgo, a quick overview

Andrea Viceré – Università di Urbino

Convegno Nazionale su Calcolo e Reti, Rimini, May 5th, 2007

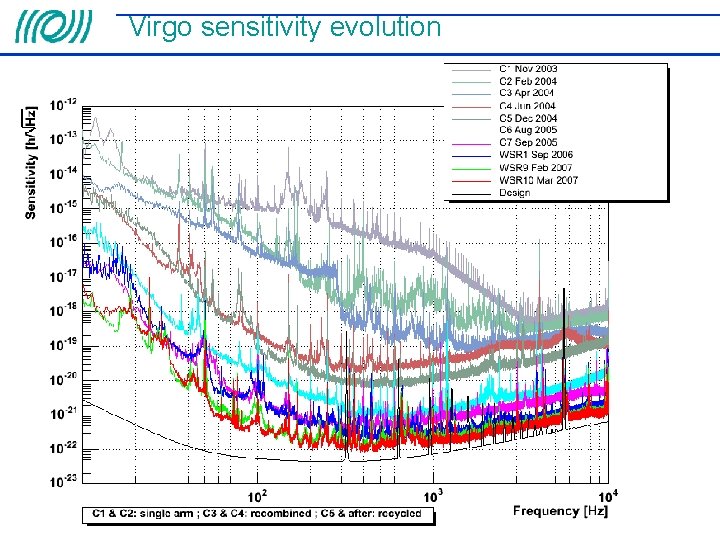

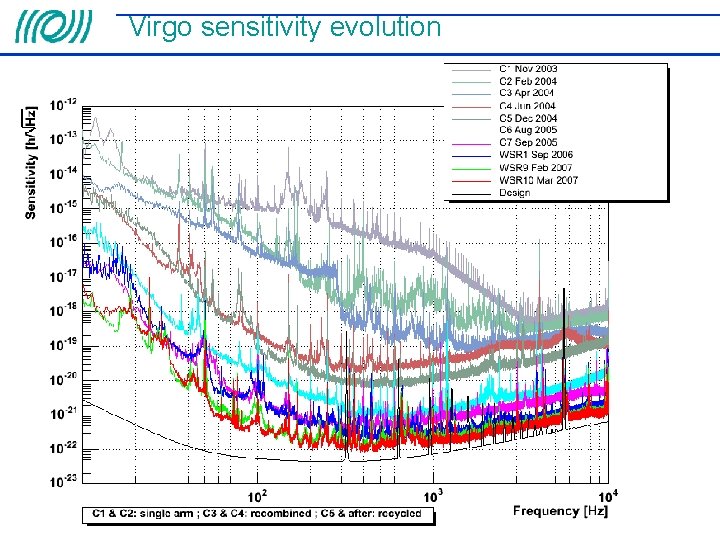

Virgo sensitivity evolution

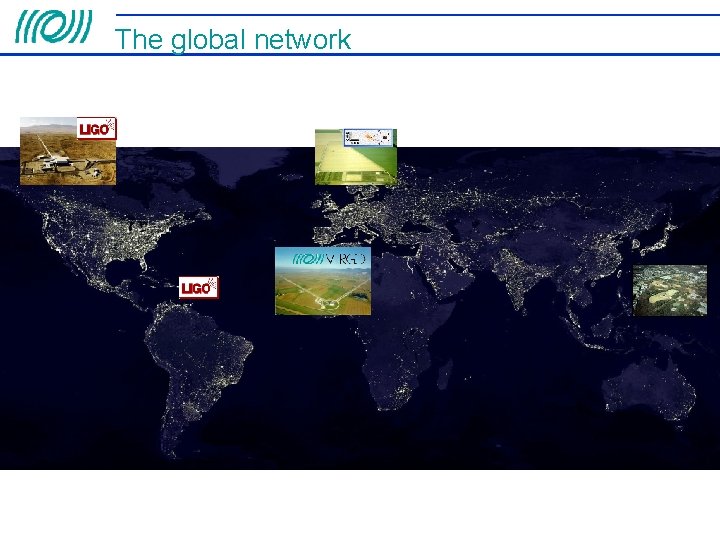

The global network

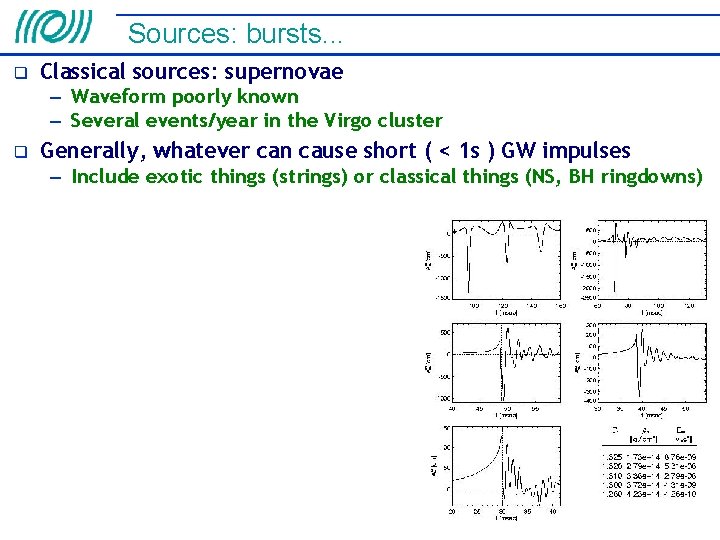

Sources: bursts...

- Classical sources: supernovae
  - Waveform poorly known
  - Several events/year in the Virgo cluster
- Generally, whatever can cause short (< 1 s) GW impulses
  - Includes exotic things (strings) or classical things (NS, BH ringdowns)

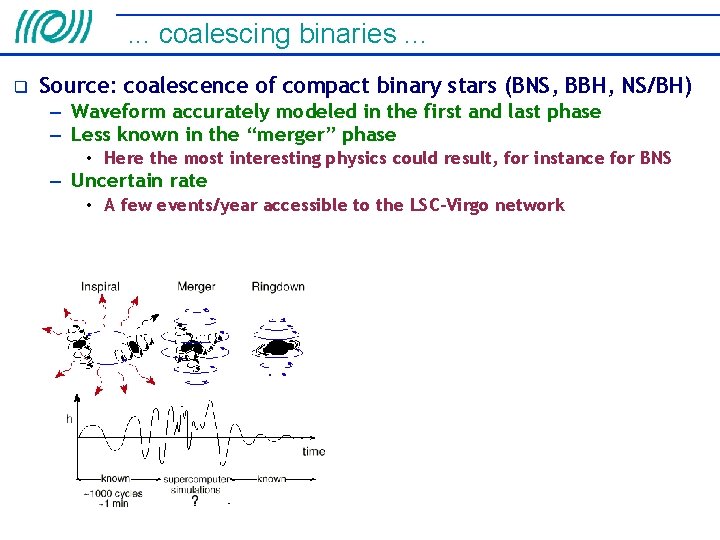

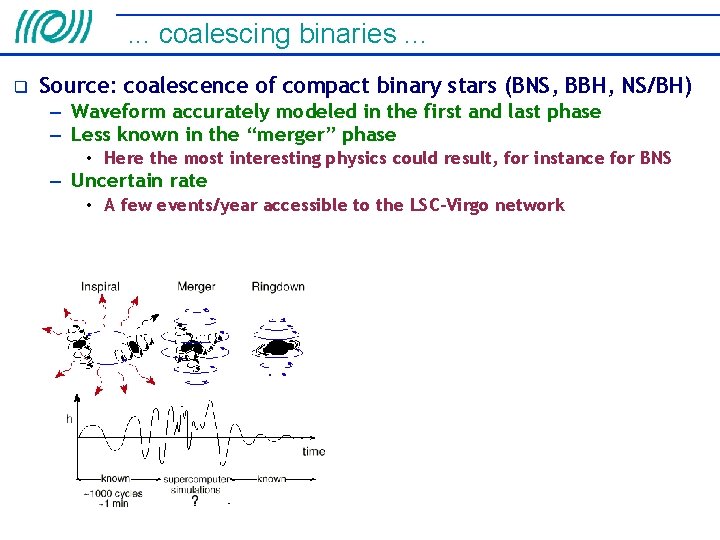

... coalescing binaries...

- Source: coalescence of compact binary stars (BNS, BBH, NS/BH)
  - Waveform accurately modeled in the first and last phases
  - Less well known in the “merger” phase
    - Here the most interesting physics could emerge, for instance for BNS
  - Uncertain rate
    - A few events/year accessible to the LSC-Virgo network

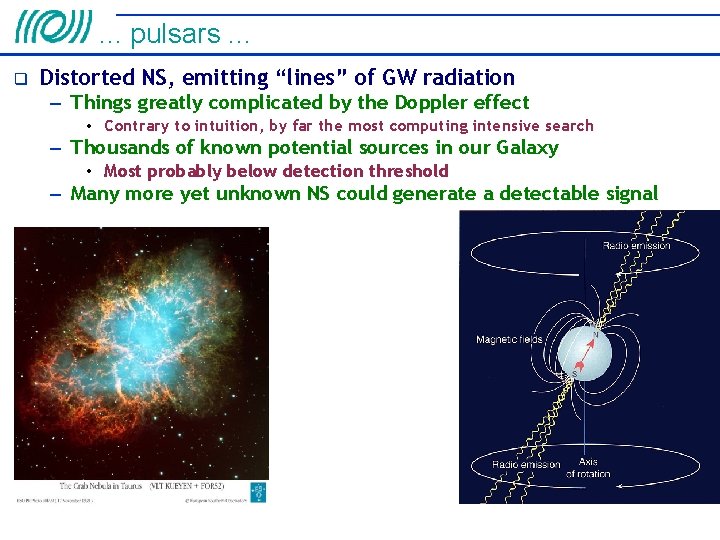

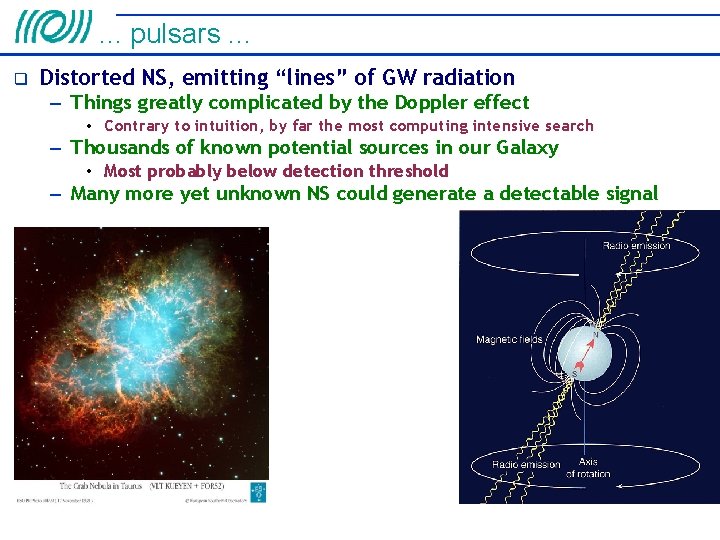

... pulsars...

- Distorted NS, emitting “lines” of GW radiation
  - Things greatly complicated by the Doppler effect
    - Contrary to intuition, by far the most computing-intensive search
  - Thousands of known potential sources in our Galaxy
    - Most probably below detection threshold
  - Many more yet unknown NS could generate a detectable signal
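
To give a feeling for why the Doppler effect complicates the search, here is a minimal sketch that compares the first-order Doppler shift due to the Earth's orbital motion with the frequency resolution of a long observation. The 1 kHz signal frequency, the 120-day observation time and the function names are assumptions chosen for illustration; they are not taken from the slides.

```python
# Rough size of the Doppler shift for a continuous-wave ("pulsar") signal.
# Assumptions (not from the slides): a 1 kHz signal and a 120-day
# observation, both used only as an example.

C_M_PER_S = 299_792_458.0   # speed of light
V_ORBIT_M_PER_S = 29.78e3   # Earth's mean orbital speed


def max_doppler_shift_hz(f_hz: float, v_m_per_s: float = V_ORBIT_M_PER_S) -> float:
    """Largest first-order Doppler shift, f * v / c, for a detector moving at speed v."""
    return f_hz * v_m_per_s / C_M_PER_S


def shift_in_resolution_bins(f_hz: float, t_obs_s: float) -> float:
    """Maximum Doppler shift expressed in units of the frequency resolution 1 / t_obs."""
    return max_doppler_shift_hz(f_hz) * t_obs_s


t_obs = 120 * 86_400.0                         # example observation time, in seconds
shift = max_doppler_shift_hz(1000.0)           # roughly 0.1 Hz
bins = shift_in_resolution_bins(1000.0, t_obs)  # of order a million bins
```

Under these assumptions the shift is about a million times larger than the frequency resolution. Since the shift depends on the source position in the sky, a blind search has to apply a different correction for each sky location it tests, which is one way to understand the computing cost.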

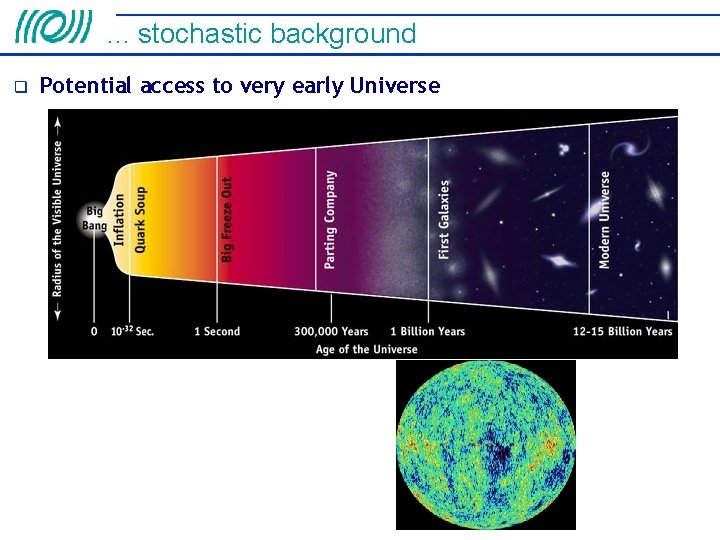

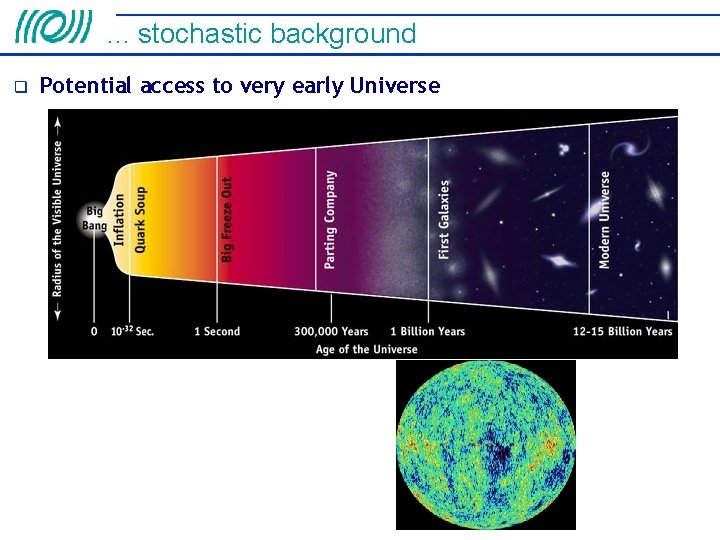

... stochastic background

- Potential access to the very early Universe

What is Data Analysis in Virgo?

- To characterize the detector noise
  - Starts from the raw data channels
  - Produces figures of merit, and more detailed data quality info
- To translate interferometer output into equivalent GW strain
  - This is h-reconstruction, including partial cancellation of noises
    - Starts from a subset of the raw data channels
    - Produces time series in the audio band + data quality information
- To search for impulsive, that is finite-duration, events
  - Namely Burst (Supernova) and Coalescing Binaries searches
    - Kept distinct more by event duration than by physical source
    - Start from reduced data (h-reconstructed)
    - Produce “events” + veto information
- To search for “always present” signals
  - The Pulsar searches look for quasi-monochromatic signals
    - Start from reduced data (h-reconstructed)
    - Produce “candidates”: potentially emitting locations in the sky
  - The Stochastic background search looks for GW noise
    - Also needs data from other detectors
    - Produces estimates of the background strength
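
The reason the stochastic search needs a second detector can be illustrated with a toy cross-correlation: a weak common signal survives when the two outputs are multiplied and averaged, while the independent detector noises average out. This is only a sketch under strong assumptions (white Gaussian signal and noise, identical detector response, no time delay); the function name and parameters are hypothetical and do not describe the actual Virgo pipeline.

```python
import random


def cross_correlation_estimate(n_samples: int, signal_sigma: float,
                               noise_sigma: float, seed: int = 0) -> float:
    """Average product of two toy detector outputs sharing a common signal.

    Each detector sees the same random signal h plus its own independent
    noise. The mean of s1 * s2 converges to the signal variance
    (signal_sigma ** 2), because the independent noise terms average to zero.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        h = rng.gauss(0.0, signal_sigma)        # common stochastic signal
        s1 = h + rng.gauss(0.0, noise_sigma)    # detector 1 output
        s2 = h + rng.gauss(0.0, noise_sigma)    # detector 2 output
        total += s1 * s2
    return total / n_samples


# Signal well below the noise in each single detector (assumed values).
estimate = cross_correlation_estimate(100_000, signal_sigma=0.5, noise_sigma=1.0)
```

With these assumed values the estimate should come out close to 0.25, the variance of the common signal, even though the signal is buried in each detector's own noise.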

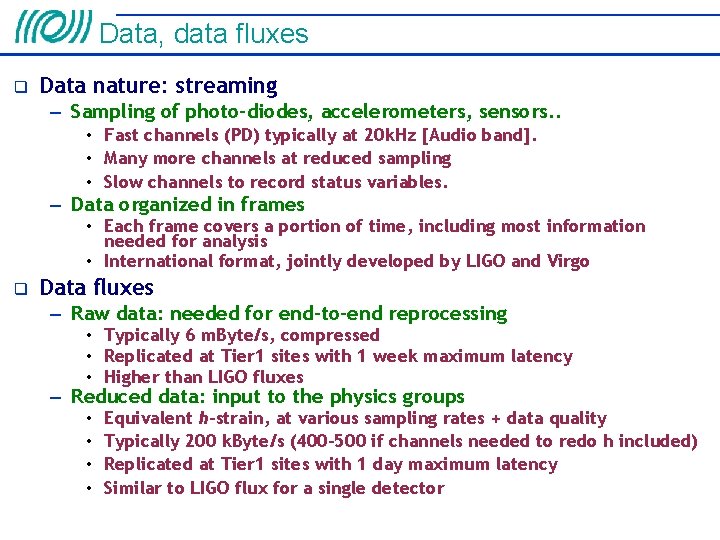

Data, data fluxes

- Data nature: streaming
  - Sampling of photodiodes, accelerometers, sensors...
    - Fast channels (PD) typically at 20 kHz [audio band]
    - Many more channels at reduced sampling
    - Slow channels to record status variables
  - Data organized in frames
    - Each frame covers a portion of time, including most information needed for analysis
    - International format, jointly developed by LIGO and Virgo
- Data fluxes
  - Raw data: needed for end-to-end reprocessing
    - Typically 6 MByte/s, compressed
    - Replicated at Tier 1 sites with 1 week maximum latency
    - Higher than LIGO fluxes
  - Reduced data: input to the physics groups
    - Equivalent h-strain, at various sampling rates + data quality
    - Typically 200 kByte/s (400-500 if the channels needed to redo h are included)
    - Replicated at Tier 1 sites with 1 day maximum latency
    - Similar to LIGO flux for a single detector
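
The quoted rates translate into storage volumes with simple arithmetic. The sketch below assumes decimal units (1 MByte = 10^6 bytes, 1 TByte = 10^12 bytes) and a constant rate with no dead time; the helper name is an illustration, not part of any Virgo tool.

```python
# Back-of-the-envelope data volumes from the rates quoted on the slide.
# Assumptions: decimal units and a constant rate with no interruptions.

SECONDS_PER_DAY = 24 * 60 * 60


def volume_tbyte(rate_byte_per_s: float, days: float) -> float:
    """Data volume in TByte accumulated over `days` at a constant rate."""
    return rate_byte_per_s * SECONDS_PER_DAY * days / 1e12


RAW_RATE = 6e6        # 6 MByte/s, compressed raw data (from the slide)
REDUCED_RATE = 200e3  # 200 kByte/s, reduced data (from the slide)

raw_per_day = volume_tbyte(RAW_RATE, 1)              # about 0.52 TByte
raw_per_year = volume_tbyte(RAW_RATE, 365)           # about 189 TByte
reduced_per_year = volume_tbyte(REDUCED_RATE, 365)   # about 6.3 TByte
```

Under these assumptions the raw stream amounts to roughly half a TByte per day, while a full year of reduced data is a few TByte.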

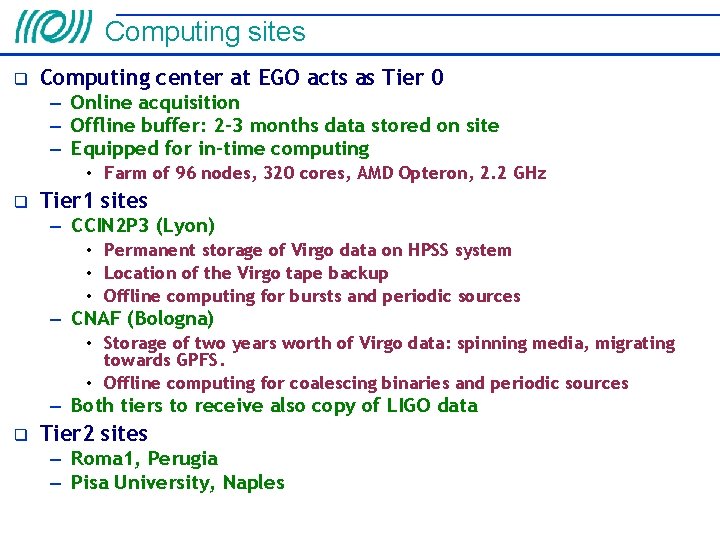

Computing sites

- Computing center at EGO acts as Tier 0
  - Online acquisition
  - Offline buffer: 2-3 months of data stored on site
  - Equipped for in-time computing
    - Farm of 96 nodes, 320 cores, AMD Opteron, 2.2 GHz
- Tier 1 sites
  - CCIN2P3 (Lyon)
    - Permanent storage of Virgo data on HPSS system
    - Location of the Virgo tape backup
    - Offline computing for bursts and periodic sources
  - CNAF (Bologna)
    - Storage of two years' worth of Virgo data: spinning media, migrating towards GPFS
    - Offline computing for coalescing binaries and periodic sources
  - Both Tier 1 sites are also to receive a copy of LIGO data
- Tier 2 sites
  - Roma 1, Perugia
  - Pisa University, Naples

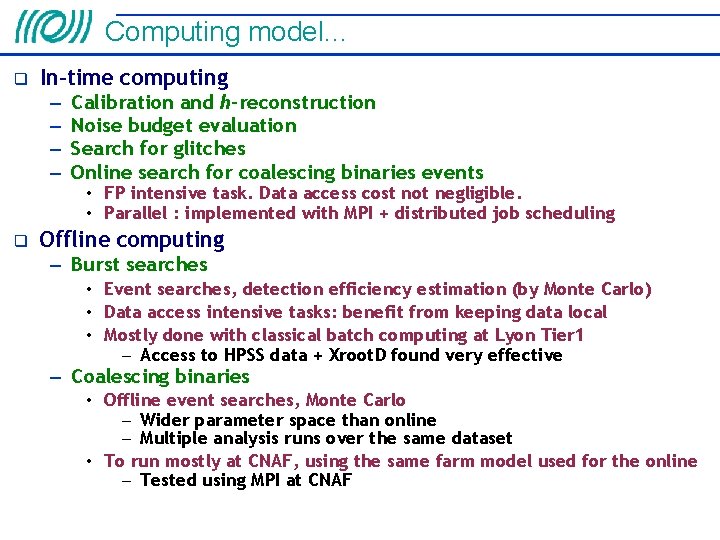

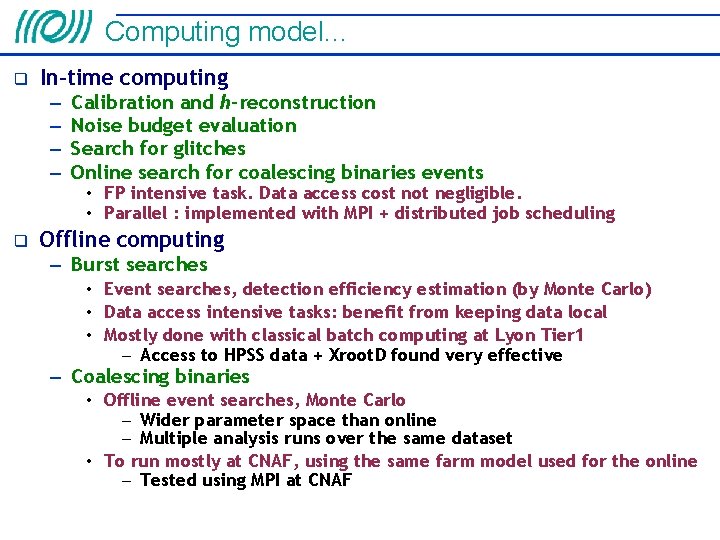

Computing model...

- In-time computing
  - Calibration and h-reconstruction
  - Noise budget evaluation
  - Search for glitches
  - Online search for coalescing binary events
    - FP-intensive task. Data access cost not negligible.
    - Parallel: implemented with MPI + distributed job scheduling
- Offline computing
  - Burst searches
    - Event searches, detection efficiency estimation (by Monte Carlo)
    - Data access intensive tasks: benefit from keeping data local
    - Mostly done with classical batch computing at Lyon Tier 1
      - Access to HPSS data + Xrootd found very effective
  - Coalescing binaries
    - Offline event searches, Monte Carlo
      - Wider parameter space than online
      - Multiple analysis runs over the same dataset
    - To run mostly at CNAF, using the same farm model used for the online search
      - Tested using MPI at CNAF
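
Detection efficiency estimation by Monte Carlo can be pictured with a toy model: simulated signals of known strength are added to noise many times, and the efficiency is the fraction of trials in which the search statistic crosses the threshold. The sketch below assumes a statistic equal to the injected signal-to-noise ratio plus unit Gaussian noise; this model, the threshold value and the function name are illustrative assumptions, not the actual burst pipeline.

```python
import random


def detection_efficiency(snr: float, threshold: float,
                         n_trials: int = 10_000, seed: int = 0) -> float:
    """Fraction of simulated injections whose statistic exceeds the threshold.

    Toy model (an assumption): the search statistic is the injected SNR
    plus Gaussian noise with zero mean and unit variance.
    """
    rng = random.Random(seed)
    detected = 0
    for _ in range(n_trials):
        statistic = snr + rng.gauss(0.0, 1.0)
        if statistic > threshold:
            detected += 1
    return detected / n_trials


# Efficiency for weak, marginal and strong injections at threshold 5.
efficiencies = {snr: detection_efficiency(snr, threshold=5.0) for snr in (2.0, 5.0, 8.0)}
```

In this toy model the efficiency is close to zero for weak injections, close to one half when the injected strength equals the threshold, and close to one for strong injections. A real study repeats this over waveform families and amplitudes, which is why it benefits from keeping the data local.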

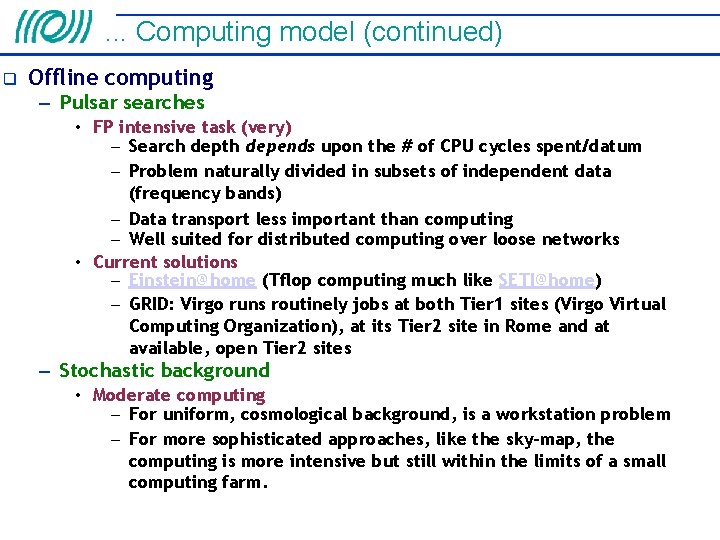

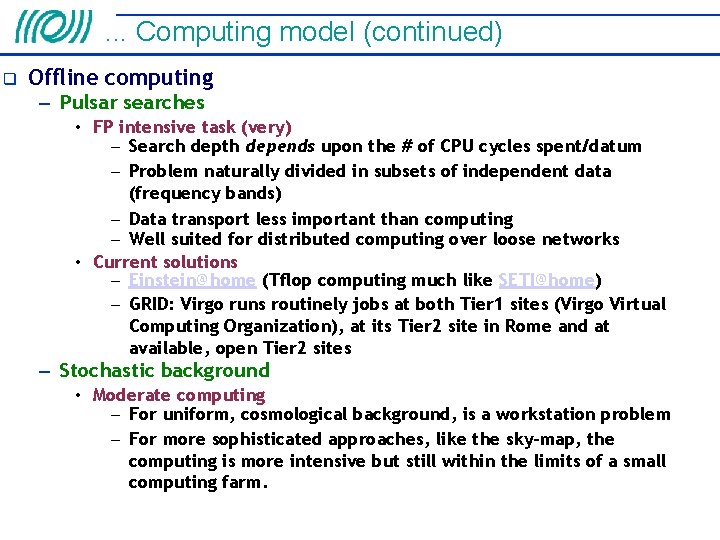

... Computing model (continued)

- Offline computing
  - Pulsar searches
    - FP-intensive task (very)
      - Search depth depends upon the number of CPU cycles spent per datum
      - Problem naturally divided into subsets of independent data (frequency bands)
      - Data transport less important than computing
      - Well suited for distributed computing over loose networks
    - Current solutions
      - Einstein@home (Tflop computing much like SETI@home)
      - GRID: Virgo routinely runs jobs at both Tier 1 sites (Virgo Virtual Computing Organization), at its Tier 2 site in Rome and at available, open Tier 2 sites
  - Stochastic background
    - Moderate computing
      - For a uniform, cosmological background, it is a workstation problem
      - For more sophisticated approaches, like the sky map, the computing is more intensive but still within the limits of a small computing farm
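
The division of the pulsar search into independent frequency bands can be sketched as follows: the band list is built once, and each band becomes a job that needs no communication with the others. Here local threads stand in for remote grid nodes, and `analyze_band` is a hypothetical placeholder that only counts frequency bins (band width times observation time); the band limits and the one-day observation time are assumed example values.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_bands(f_min_hz: float, f_max_hz: float, band_width_hz: float):
    """Cut [f_min_hz, f_max_hz) into consecutive bands of at most band_width_hz."""
    if band_width_hz <= 0 or f_max_hz <= f_min_hz:
        raise ValueError("need band_width_hz > 0 and f_max_hz > f_min_hz")
    bands = []
    index = 0
    while f_min_hz + index * band_width_hz < f_max_hz:
        low = f_min_hz + index * band_width_hz
        high = min(low + band_width_hz, f_max_hz)
        bands.append((low, high))
        index += 1
    return bands


def analyze_band(band, t_obs_s: float = 86_400.0):
    """Hypothetical stand-in for a search job: count frequency bins in the band."""
    low, high = band
    return {"band": band, "n_bins": round((high - low) * t_obs_s)}


bands = split_into_bands(50.0, 54.0, 1.0)

# Each band is processed independently; threads stand in for grid nodes.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(analyze_band, bands))
```

Because no job depends on another, the same pattern works whether the workers are cores on one machine, nodes on a farm, or volunteer computers, which is what makes the problem suitable for loosely coupled networks.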

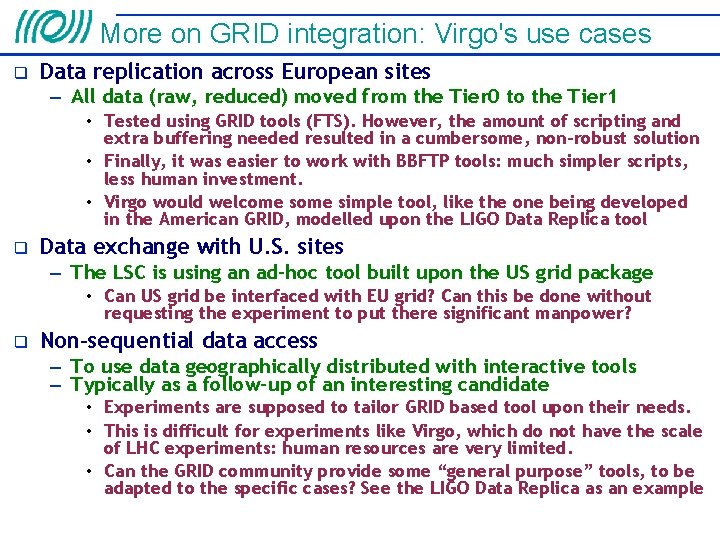

More on GRID integration: Virgo's use cases

- Data replication across European sites
  - All data (raw, reduced) moved from the Tier 0 to the Tier 1 sites
    - Tested using GRID tools (FTS). However, the amount of scripting and extra buffering needed resulted in a cumbersome, non-robust solution
    - In the end, it was easier to work with BBFTP tools: much simpler scripts, less human investment
    - Virgo would welcome a simple tool, like the one being developed in the American GRID, modelled upon the LIGO Data Replica tool
- Data exchange with U.S. sites
  - The LSC is using an ad-hoc tool built upon the US grid package
    - Can the US grid be interfaced with the EU grid? Can this be done without requiring the experiment to invest significant manpower?
- Non-sequential data access
  - To use geographically distributed data with interactive tools
  - Typically as a follow-up of an interesting candidate
    - Experiments are supposed to tailor GRID-based tools to their needs
    - This is difficult for experiments like Virgo, which do not have the scale of LHC experiments: human resources are very limited
    - Can the GRID community provide some “general purpose” tools, to be adapted to the specific cases? See the LIGO Data Replica as an example

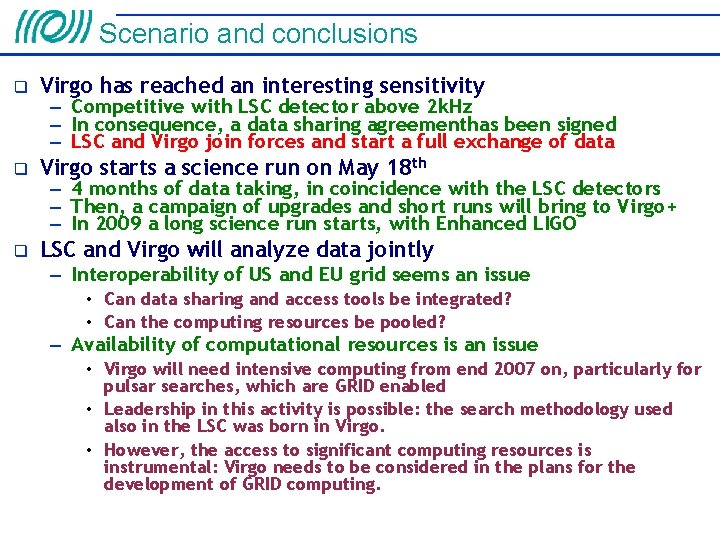

Scenario and conclusions

- Virgo has reached an interesting sensitivity
  - Competitive with LSC detectors above 2 kHz
  - In consequence, a data sharing agreement has been signed
  - LSC and Virgo join forces and start a full exchange of data
- Virgo starts a science run on May 18th
  - 4 months of data taking, in coincidence with the LSC detectors
  - Then, a campaign of upgrades and short runs will lead to Virgo+
  - In 2009 a long science run starts, with Enhanced LIGO
- LSC and Virgo will analyze data jointly
  - Interoperability of US and EU grid seems an issue
    - Can data sharing and access tools be integrated?
    - Can the computing resources be pooled?
  - Availability of computational resources is an issue
    - Virgo will need intensive computing from the end of 2007 on, particularly for pulsar searches, which are GRID enabled
    - Leadership in this activity is possible: the search methodology also used in the LSC was born in Virgo
    - However, access to significant computing resources is instrumental: Virgo needs to be considered in the plans for the development of GRID computing